Service Meshes

microservices networking at layer 5

April 2018

clouds, containers, functions, applications, and their management

Show of Hands

Microservices

The more, the merrier?

Benefits

The first few services are relatively easy

Democratization of language and technology choice

Faster delivery, service teams running independently, rolling updates

Challenges

The next 10 or so may introduce pain

Language and framework-specific libraries

Distributed environments, ephemeral infrastructure, outmoded tooling

Which is why...

I have a container orchestrator.

Core Capabilities

- Cluster Management

- Host Discovery

- Host Health Monitoring

- Scheduling

- Orchestrator Updates and Host Maintenance

- Service Discovery

- Networking and Load Balancing

- Stateful Services

- Multi-Tenant, Multi-Region

Additional Key Capabilities

- Application Health and Performance Monitoring

- Application Deployments

- Application Secrets

What do we need?

• Observability

• Logging

• Metrics

• Tracing

• Traffic Control

• Resiliency

• Efficiency

• Security

• Policy

a Service Mesh

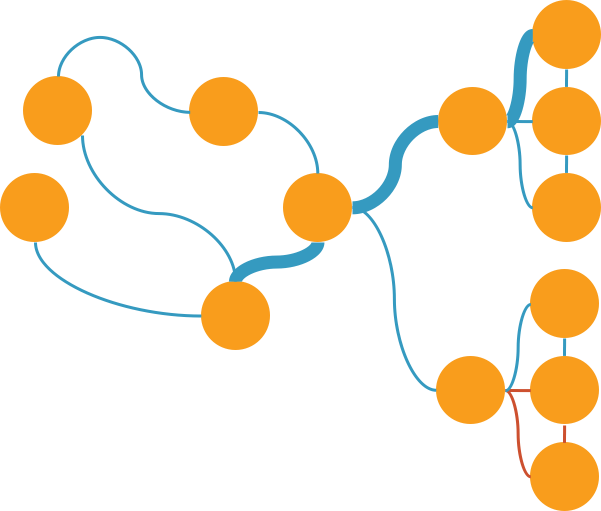

What is a Service Mesh?

a dedicated layer for managing service-to-service communication

so, a microservices platform?

obviously.

Orchestrators don't bring all that you need

and neither do service meshes,

but they do get you closer.

Missing: application lifecycle management, but not by much

partially.

a services-first network

Missing: distributed debugging; service meshes provide only nascent visibility (topology)

DEV

OPS

Layer 5

where Dev and Ops meet

Problem: too much infrastructure code in services

Why use a Service Mesh?

to avoid...

- Bloated service code

- Duplicating work to make services production-ready

  - load balancing, auto scaling, rate limiting, traffic routing, ...

- Inconsistency across services

  - retry, TLS, failover, deadlines, cancellation, etc., for each language and framework

  - siloed implementations lead to fragmented, non-uniform policy application and difficult debugging

- Diffusing responsibility of service management

Help with Modernization

- Can modernize your IT inventory without:

  - Rewriting your applications

  - Adopting microservices (regular services are fine)

  - Adopting new frameworks

  - Moving to the cloud

- Address the long tail of IT services

Get there for free

What is Istio?

An open platform to connect, manage, and secure microservices

- Observability

- Resiliency

- Traffic Control

- Security

- Policy Enforcement

@IstioMesh

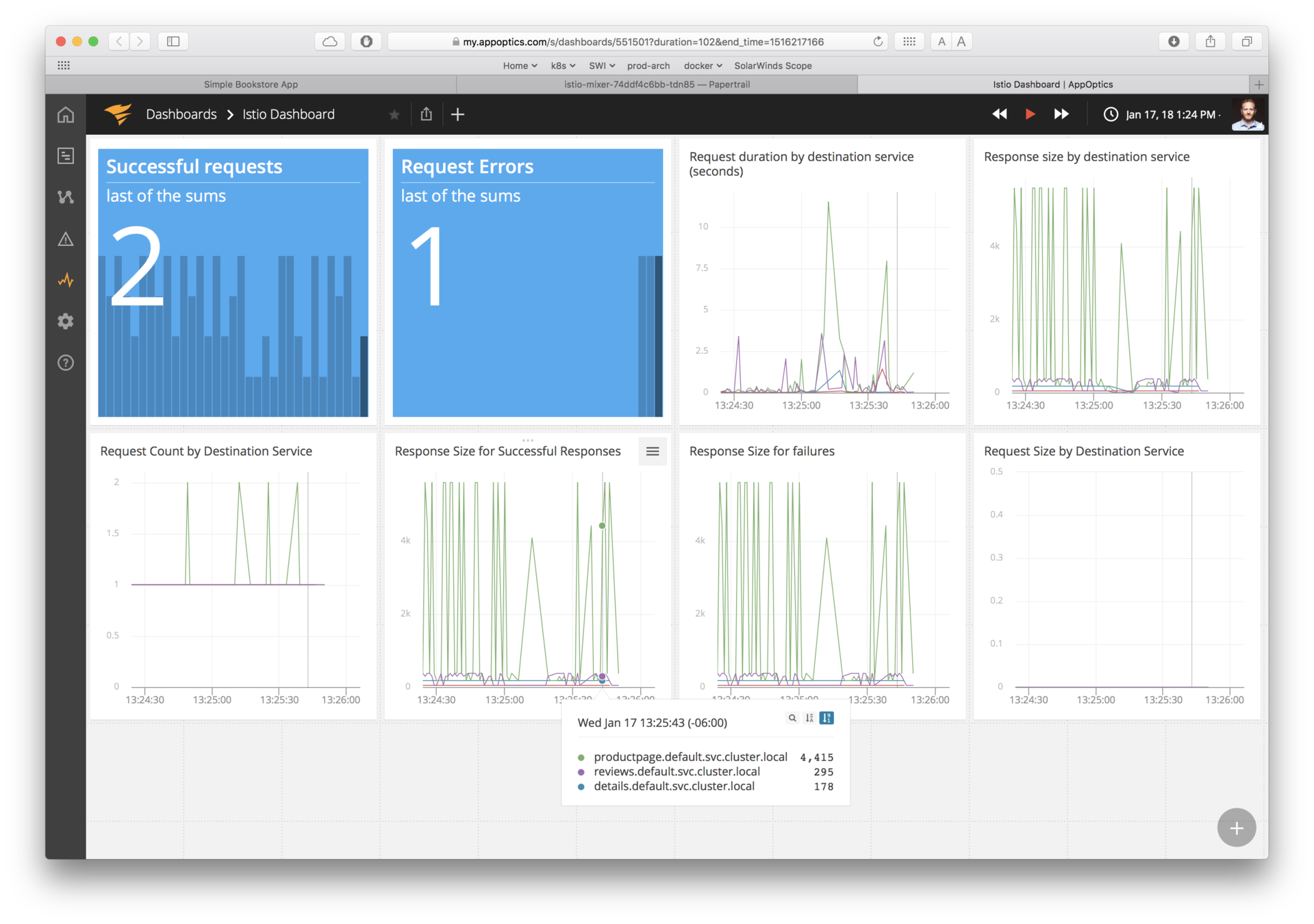

Observability

is what gets people hooked on service metrics

Goals

- Metrics without instrumenting apps

- Consistent metrics across the fleet

- Trace flow of requests across services

- Portable across metric backend providers

You get a metric! You get a metric! Everyone gets a metric!
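To make "metrics without instrumenting apps" concrete, here is a minimal Python sketch of the idea. It assumes a hypothetical `MetricsSidecar` wrapper standing in for the proxy: the application handler only returns a status code, and the wrapper records request counts and latencies under the same (source, destination, status) labels for every service, which is what keeps metrics consistent across the fleet. A real sidecar does this on the network path, outside the application process.

```python
import time
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Tuple

Key = Tuple[str, str, int]  # (source, destination, status code)


class MetricsSidecar:
    """Hypothetical stand-in for a proxy sidecar that records request metrics.

    The wrapped handler is plain application code with no metrics calls in it.
    """

    def __init__(self, source: str, destination: str,
                 handler: Callable[[str], int],
                 clock: Callable[[], float] = time.perf_counter):
        self.source = source
        self.destination = destination
        self.handler = handler
        self.clock = clock
        self.request_count: Counter = Counter()
        self.latency_s: Dict[Key, List[float]] = defaultdict(list)

    def __call__(self, request: str) -> int:
        start = self.clock()
        status = 500  # assume a server error unless the handler returns normally
        try:
            status = self.handler(request)
            return status
        finally:
            # Identical label set for every service keeps metrics consistent fleet-wide.
            key = (self.source, self.destination, status)
            self.request_count[key] += 1
            self.latency_s[key].append(self.clock() - start)


def reviews_app(request: str) -> int:
    # Plain application logic: no metrics code at all.
    return 503 if request == "overload" else 200


sidecar = MetricsSidecar("productpage", "reviews", reviews_app)
for req in ["a", "b", "overload"]:
    sidecar(req)
print(sidecar.request_count)
```

Because the labels come from the sidecar rather than from each team's code, swapping the metrics backend only touches the proxy side.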

© 2018 SolarWinds Worldwide, LLC. All rights reserved.

Traffic Control

control over chaos

- Traffic splitting

  - L7 tag-based routing

- Traffic steering (content-based)

  - Look at the contents of a request and route it to a specific set of instances

- Ingress and egress routing
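Traffic splitting and content-based steering can be combined in one routing decision. The Python sketch below assumes a hypothetical, simplified `RouteRule` (not Istio's actual schema): rules are tried in order, the first one whose header match succeeds wins (steering), and its version weights drive a weighted random choice (splitting). The `end-user` header name and the 90/10 weights are illustrative only.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Mapping


@dataclass
class RouteRule:
    """Hypothetical, simplified route rule (not Istio's actual schema)."""
    match_headers: Dict[str, str] = field(default_factory=dict)  # empty = match all
    weights: Dict[str, int] = field(default_factory=dict)        # version -> percent


def pick_version(rules: List[RouteRule], headers: Mapping[str, str],
                 rng: random.Random) -> str:
    """Return a version from the first rule whose header match succeeds."""
    for rule in rules:
        # Traffic steering: inspect request contents (headers) to select a rule.
        if all(headers.get(k) == v for k, v in rule.match_headers.items()):
            versions = list(rule.weights)
            # Traffic splitting: weighted random choice among versions.
            return rng.choices(versions, weights=[rule.weights[v] for v in versions])[0]
    raise LookupError("no route rule matched the request")


rules = [
    RouteRule(match_headers={"end-user": "lee"}, weights={"v2": 100}),  # A/B test user
    RouteRule(weights={"v1": 90, "v3": 10}),                            # everyone else
]
rng = random.Random(42)
print(pick_version(rules, {"end-user": "lee"}, rng))
sample = [pick_version(rules, {}, rng) for _ in range(10_000)]
print(sample.count("v1") / len(sample))
```

Ordering the specific rule before the catch-all is what lets a single user be pinned to v2 while everyone else gets a canary split.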

Resiliency

- Systematic fault injection

- Timeouts and retries with timeout budget

- Circuit breakers and health checks

- Control connection pool size and request load
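The circuit-breaker idea from the list above, as a minimal Python sketch of the classic consecutive-failure pattern: after `max_failures` consecutive failures the circuit opens and rejects calls; once a sleep window passes it goes half-open and lets trial requests through. The class and parameter names are hypothetical, and this illustrates the pattern rather than Envoy's implementation (Envoy combines connection/request limits with outlier detection).

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Consecutive-failure circuit breaker: closed -> open -> half-open."""

    def __init__(self, max_failures: int = 5, sleep_window_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.sleep_window_s = sleep_window_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.sleep_window_s:
            return "half-open"  # sleep window over: allow trial requests
        return "open"

    def allow_request(self) -> bool:
        return self.state != "open"

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None  # close the circuit again

    def record_failure(self) -> None:
        if self.state == "half-open":
            self.opened_at = self.clock()  # trial request failed: reopen
            return
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()  # trip the breaker


now = [0.0]  # fake clock so the example is deterministic
cb = CircuitBreaker(max_failures=2, sleep_window_s=10.0, clock=lambda: now[0])
cb.record_failure()
cb.record_failure()
print(cb.state, cb.allow_request())  # open False
now[0] = 10.0
print(cb.state)                      # half-open
cb.record_success()
print(cb.state)                      # closed
```

Injecting the clock keeps the state machine testable; in a proxy the same decision happens per upstream host.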

Istio Architecture

Control Plane

Provides policy and configuration for services in the mesh. Takes a set of isolated stateless sidecar proxies and turns them into a service mesh. Does not touch any packets/requests in the system.

Data Plane

Touches every packet/request in the system. Responsible for service discovery, health checking, routing, load balancing, authentication, authorization and observability.

Diagram: the control plane (Pilot, Citadel, Mixer) runs in the istio-system namespace; the data plane is the Foo and Bar pods in the application namespace, each pairing its app container with a proxy sidecar. Pilot supplies discovery & config and Citadel supplies TLS certs to the sidecars; the sidecars make policy checks against Mixer during request processing and send it telemetry reports out of band.

What's Pilot for?

the head of the ship

- provides service discovery to sidecars

- manages sidecar configuration

- system of record for service mesh

- provides abstraction from underlying platforms

What's Mixer for?

- Point of integration with infrastructure backends

- Intermediates between Istio and backends, under operator control

- Enables platform & environment mobility

- Responsible for policy evaluation and telemetry reporting

- Provides granular control over operational policies and telemetry

- Has a rich configuration model

- Intent-based config abstracts most infrastructure concerns

Mixer

an attribute-processing and routing machine

operator-focused

- Precondition checking

- Quota management

- Telemetry reporting

Diagram: Mixer, an attribute processing engine, sits in the control plane (istio-system namespace). The Foo pod's proxy sidecar consults it during request processing and sends it telemetry reports out of band, while application traffic flows through the sidecar in the application namespace.
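Mixer's three jobs (precondition checking, quota management, telemetry reporting) all operate on request attributes. The Python sketch below is a hypothetical `MiniMixer`, not Mixer's API: attribute names are modeled loosely on Istio's attribute vocabulary, deny rules stand in for preconditions, and a per-destination counter stands in for quota.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Attributes = Dict[str, str]  # e.g. {"source.service": ..., "destination.service": ...}


@dataclass
class Rule:
    """Hypothetical precondition rule: deny when all listed attributes match."""
    match: Attributes


@dataclass
class MiniMixer:
    deny_rules: List[Rule]
    quota: Dict[str, int]  # destination.service -> remaining calls in this window
    reports: List[Attributes] = field(default_factory=list)

    def check(self, attrs: Attributes) -> bool:
        # Precondition checking: any matching deny rule rejects the request.
        for rule in self.deny_rules:
            if all(attrs.get(k) == v for k, v in rule.match.items()):
                return False
        # Quota management: spend one unit of the destination's quota, if it has one.
        dest = attrs.get("destination.service", "")
        if dest in self.quota:
            if self.quota[dest] <= 0:
                return False
            self.quota[dest] -= 1
        return True

    def report(self, attrs: Attributes) -> None:
        # Telemetry reporting: a real Mixer would hand these to adapters.
        self.reports.append(dict(attrs))


mixer = MiniMixer(deny_rules=[Rule({"source.service": "ratings"})], quota={"reviews": 2})
call = {"source.service": "productpage", "destination.service": "reviews"}
print([mixer.check(call) for _ in range(3)])  # [True, True, False]
print(mixer.check({"source.service": "ratings", "destination.service": "details"}))  # False
mixer.report({**call, "response.code": "200"})
```

The sidecar only ever sends attributes; which attributes matter, and what happens to them, is operator configuration on the Mixer side.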

What's Auth for?

security at scale

security by default

- Verifiable identity

- Issues certs

- Certs distributed to service proxies

- Mounted as a Kubernetes secret

- Secure naming / addressing

- Traffic encryption

Orchestrate Key & Certificate:

- Generation

- Deployment

- Rotation

- Revocation
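Rotation is the step that benefits most from automation. A minimal Python sketch of a rotation policy, assuming a hypothetical rule of renewing once half of a certificate's lifetime has passed; the SPIFFE-style identities follow the form Istio uses for service accounts, but the TTLs and the 50% threshold are illustrative, not Istio's defaults.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class WorkloadCert:
    identity: str       # SPIFFE-style service identity
    issued_at: datetime
    ttl: timedelta


def rotation_due(cert: WorkloadCert, now: datetime, fraction: float = 0.5) -> bool:
    """True once `fraction` of the cert's lifetime has elapsed (hypothetical policy)."""
    return now >= cert.issued_at + cert.ttl * fraction


def certs_to_rotate(certs: List[WorkloadCert], now: datetime) -> List[str]:
    """Identities whose certs should be regenerated and redeployed now."""
    return [c.identity for c in certs if rotation_due(c, now)]


t0 = datetime(2018, 4, 1, tzinfo=timezone.utc)
certs = [
    WorkloadCert("spiffe://cluster.local/ns/default/sa/reviews", t0, timedelta(hours=24)),
    WorkloadCert("spiffe://cluster.local/ns/default/sa/ratings",
                 t0 + timedelta(hours=6), timedelta(hours=24)),
]
print(certs_to_rotate(certs, t0 + timedelta(hours=13)))
# ['spiffe://cluster.local/ns/default/sa/reviews']
```

Renewing well before expiry leaves room to redeploy the new secret to every proxy before the old certificate lapses.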

Service Proxy Sidecar

the included battery

Envoy: a C++-based L4/L7 proxy

Low memory footprint

In production at Lyft

Capabilities:

- API-driven config updates → no reloads

- Zone-aware load balancing w/ failover

- Traffic routing and splitting

- Health checks, circuit breakers, timeouts, retry budgets, fault injection…

- HTTP/2 & gRPC

- Transparent proxying

- Designed for observability

Diagram: in the data plane, each pod runs a proxy sidecar next to its app container.
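Zone-aware load balancing with failover, from the capability list above, can be sketched as "prefer healthy endpoints in my zone, widen to other zones only when none are left." This Python sketch is a simplification: Envoy's zone-aware routing also accounts for zone sizes and spills traffic proportionally. Addresses, zone names, and the `ZoneAwareBalancer` class are hypothetical.

```python
import itertools
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class Endpoint:
    address: str
    zone: str
    healthy: bool = True


class ZoneAwareBalancer:
    """Round-robin over healthy local-zone endpoints; fail over to other zones
    only when no healthy local endpoint remains."""

    def __init__(self, endpoints: List[Endpoint], local_zone: str):
        self.endpoints = endpoints
        self.local_zone = local_zone
        self._counter: Iterator[int] = itertools.count()

    def pick(self) -> Endpoint:
        healthy = [e for e in self.endpoints if e.healthy]
        local = [e for e in healthy if e.zone == self.local_zone]
        pool = local or healthy  # failover: widen to every healthy endpoint
        if not pool:
            raise RuntimeError("no healthy endpoints in any zone")
        return pool[next(self._counter) % len(pool)]


eps = [Endpoint("10.0.0.1", "us-east-1a"), Endpoint("10.0.0.2", "us-east-1a"),
       Endpoint("10.0.1.1", "us-east-1b")]
lb = ZoneAwareBalancer(eps, local_zone="us-east-1a")
print([lb.pick().address for _ in range(3)])  # ['10.0.0.1', '10.0.0.2', '10.0.0.1']
eps[0].healthy = eps[1].healthy = False       # local zone goes down
print(lb.pick().address)                      # 10.0.1.1
```

Health checks feed the `healthy` flags; the balancer itself only decides where the next request goes.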

Extensibility of Istio

Envoy, Linkerd, Nginx, Conduit

Swapping Proxies

Why use another service proxy?

Based on your operational expertise and need for a battle-tested proxy. You may be looking for caching, WAF, or other functionality available in NGINX Plus.

If you're already running Linkerd and want to start adopting Istio control APIs like CheckRequest.

Conduit is not currently designed as a general-purpose proxy, but it is lightweight and focused, with extensibility via gRPC plugins.

nginMesh

Currently

- Support for rules, policies, mTLS encryption, monitoring & tracing

- Compatible with Mixer adapters

- Transparent sidecar injection

- Compatible with Istio 0.3.0

Roadmap

- Support for gRPC traffic

- Support for ingress proxy

- Support for Quota Checks

- Expanding the mesh beyond Kubernetes

See sidecar-related limitations as well as supported traffic management rules --> here.

Considered beta quality

Soliciting feedback and participation from community

Istio & nginMesh Architecture

- Translator agent: Istio to Nginx (in Go)

- Loadable module: Nginx to Mixer (in Rust)

Diagram: the "istio-proxy" container in the data plane runs the agent, which turns route rules from Pilot into an Nginx config file, alongside Nginx itself (tcp and http servers, listeners, destinations) with the Mixer module, which sends check and report calls to Mixer in the control plane (istio-system namespace). Application traffic flows through Nginx; telemetry propagates to Mixer out of band.
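The translator agent's job, turning Istio route rules into Nginx configuration, can be pictured with a small Python sketch. It assumes a hypothetical input (a service name plus version weights) and emits an Nginx `split_clients` block, a real Nginx directive that maps a hashed key to a variable value by percentage. nginMesh's actual Go agent and the configuration it generates may look quite different.

```python
from typing import Dict


def to_split_clients(service: str, weights: Dict[str, int],
                     key: str = "$request_id") -> str:
    """Render version weights as an Nginx split_clients block (hypothetical translator)."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100")
    lines = [f'split_clients "{key}" ${service}_upstream {{']
    for version, percent in weights.items():
        # Each line maps a share of hashed keys to an upstream name such as reviews_v1.
        lines.append(f"    {percent}% {service}_{version};")
    lines.append("}")
    return "\n".join(lines)


print(to_split_clients("reviews", {"v1": 50, "v3": 50}))
```

Hashing `$request_id` splits individual requests; hashing a client attribute instead would keep each client on one version.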

Recording - O'Reilly: Istio & nginMesh

Timeouts & Retries

Web → Service Foo → Service Bar → Database

Timeout and retry settings shown across the hops: Timeout = 600ms, 300ms and 900ms (Retries = 3 each), then Timeout = 500ms, 300ms and 900ms (Retries = 3 each).
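Why uncoordinated per-hop timeouts hurt can be worked through in a few lines. This sketch assumes the 600ms, 300ms and 900ms values belong to the Web → Foo, Foo → Bar and Bar → Database hops in that order, that "Retries = 3" means three retries after the first attempt, and that every attempt runs to its full timeout (the worst case). `Hop` and `outlives_caller` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Hop:
    caller: str
    callee: str
    timeout_ms: int  # per-attempt timeout
    retries: int     # retries after the first attempt

    def worst_case_ms(self) -> int:
        # Every attempt (1 initial + `retries`) runs to its full timeout.
        return self.timeout_ms * (1 + self.retries)


def outlives_caller(chain: List[Hop]) -> List[str]:
    """Hops whose worst case exceeds the upstream caller's per-attempt timeout,
    i.e. work that can keep running after the caller has already given up."""
    return [f"{inner.caller}->{inner.callee}"
            for outer, inner in zip(chain, chain[1:])
            if inner.worst_case_ms() > outer.timeout_ms]


chain = [Hop("Web", "Foo", 600, 3), Hop("Foo", "Bar", 300, 3), Hop("Bar", "Database", 900, 3)]
print([h.worst_case_ms() for h in chain])       # [2400, 1200, 3600]
print(outlives_caller(chain))                   # ['Foo->Bar', 'Bar->Database']
print(math.prod(1 + h.retries for h in chain))  # 64: worst-case Database attempts per Web request
```

Retries multiply across hops, so three "reasonable" local settings can turn one user request into dozens of database calls that nobody is still waiting for.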

Deadlines

Web → Service Foo → Service Bar → Database

Deadline = 600ms

Elapsed = 104ms → Deadline = 496ms

Elapsed = 68ms → Deadline = 428ms

Elapsed = 248ms → Deadline = 180ms
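The numbers on the Deadlines slide are plain subtraction: each hop forwards whatever is left of the original 600ms budget (600 − 104 = 496, 496 − 68 = 428, 428 − 248 = 180). A minimal sketch, assuming a hypothetical `propagate_deadline` helper that works on elapsed times in milliseconds; in practice the remaining budget travels with the request (gRPC, for example, carries a deadline with each call).

```python
from typing import List


def propagate_deadline(initial_ms: int, elapsed_ms: List[int]) -> List[int]:
    """Remaining budget handed to each successive hop.

    Each service subtracts the time already spent before calling the next one,
    so downstream work never outlives the original caller's deadline.
    """
    remaining = [initial_ms]
    for spent in elapsed_ms:
        left = remaining[-1] - spent
        if left <= 0:
            # Budget exhausted: cancel instead of calling further downstream.
            raise TimeoutError(f"deadline exceeded after {initial_ms - left} ms")
        remaining.append(left)
    return remaining


print(propagate_deadline(600, [104, 68, 248]))  # [600, 496, 428, 180]
```

Unlike fixed per-hop timeouts, a propagated deadline shrinks as the request travels, which is what lets the mesh cancel work that can no longer matter.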

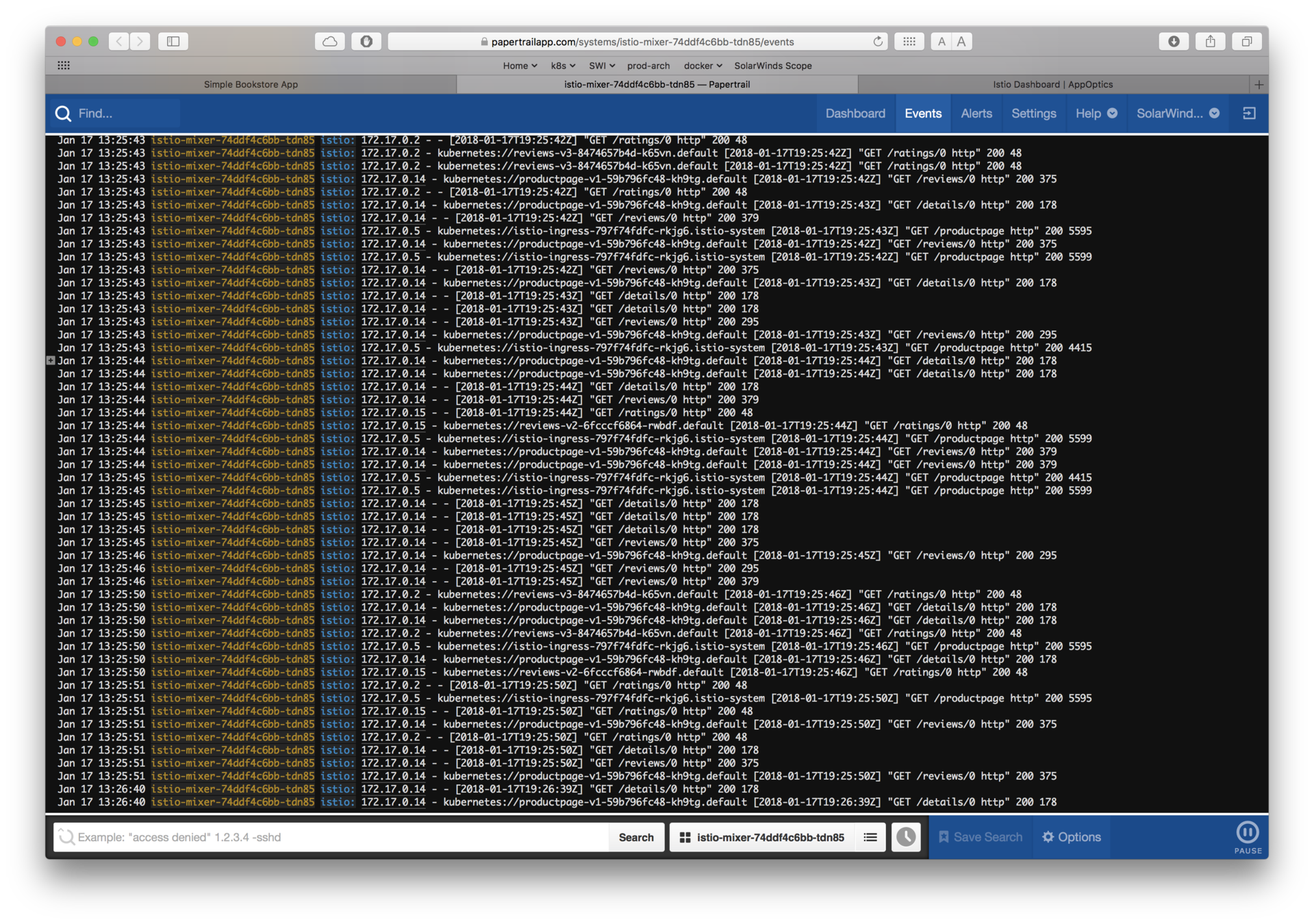

AppOptics

Mixer Adapters

- Mixer uses pluggable adapters to extend its functionality

- Adapters run within the Mixer process

- Adapters are modules that interface to infrastructure backends (logging, metrics, quotas, etc.)

- Multi-interface adapters are possible (e.g., the SolarWinds adapter sends logs & metrics)

types: logs, metrics, access control, quota

- Papertrail

- Prometheus

- Grafana

- Fluentd

- StatsD

- Stackdriver

- Open Policy Agent
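Real Mixer adapters are Go modules that run inside the Mixer process and implement a handler interface for each kind of data they accept. This Python sketch only mirrors that shape: one abstract interface per data type, a hypothetical multi-interface adapter that takes both logs and metrics (in the spirit of the SolarWinds adapter), and a dispatcher that routes each kind only to adapters implementing it. All class and function names here are hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Tuple


class LogHandler(ABC):
    @abstractmethod
    def handle_log(self, entry: Dict[str, str]) -> None: ...


class MetricHandler(ABC):
    @abstractmethod
    def handle_metric(self, name: str, value: float, labels: Dict[str, str]) -> None: ...


class InMemoryAdapter(LogHandler, MetricHandler):
    """Hypothetical multi-interface adapter: accepts both logs and metrics."""

    def __init__(self) -> None:
        self.logs: List[Dict[str, str]] = []
        self.metrics: List[Tuple[str, float, Dict[str, str]]] = []

    def handle_log(self, entry: Dict[str, str]) -> None:
        self.logs.append(entry)

    def handle_metric(self, name: str, value: float, labels: Dict[str, str]) -> None:
        self.metrics.append((name, value, labels))


class PrintLogAdapter(LogHandler):
    """Hypothetical single-interface adapter: logs only."""

    def handle_log(self, entry: Dict[str, str]) -> None:
        print("log:", entry)


def dispatch_log(adapters: list, entry: Dict[str, str]) -> None:
    # Only adapters implementing the log interface receive log entries.
    for adapter in adapters:
        if isinstance(adapter, LogHandler):
            adapter.handle_log(entry)


def dispatch_metric(adapters: list, name: str, value: float, labels: Dict[str, str]) -> None:
    # Only adapters implementing the metric interface receive metrics.
    for adapter in adapters:
        if isinstance(adapter, MetricHandler):
            adapter.handle_metric(name, value, labels)


mem, printer = InMemoryAdapter(), PrintLogAdapter()
adapters = [mem, printer]
dispatch_log(adapters, {"destination": "reviews", "code": "200"})
dispatch_metric(adapters, "request_count", 1.0, {"destination": "reviews"})
print(len(mem.logs), len(mem.metrics))  # 1 1
```

Adding a backend means adding an adapter; the proxies and the services behind them are untouched.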

Demo

Let's look at Istio's canonical sample app.

BookInfo Sample App

Diagram: Product, Details and Ratings pods (one container each) and a Reviews pod running Reviews v1, v2 and v3 behind the Reviews Service.

BookInfo Sample App with Nginx sidecars

Diagram: the same app with an Nginx sidecar in each pod and an Envoy ingress at the edge.

# Check environment; deploy Istio

kubectl version

kubectl get ns

kubectl apply -f ../istio-appoptics-0.5.1-solarwinds-v01.yaml

kubectl apply -f ./install/kubernetes/istio-sidecar-injector.yaml

# Confirm Istio deployment; deploy sample app

kubectl get ns

watch kubectl get po,svc -n istio-system

kubectl apply -f <(istioctl kube-inject -f samples/bookinfo/kube/bookinfo.yaml)

# Confirm sample app

watch kubectl get po,svc

kubectl describe po/ | more

echo "http://$(kubectl get nodes -o template --template='{{range.items}}{{range.status.addresses}}{{if eq .type "InternalIP"}}{{.address}}{{end}}{{end}}{{end}}'):$(kubectl get svc istio-ingress -n istio-system -o jsonpath='{.spec.ports[0].nodePort}')/productpage"

Demo

running Istio


# See "reviews" v1, v2 and v3

echo "http://$(kubectl get nodes -o template --template='{{range.items}}{{range.status.addresses}}{{if eq .type "InternalIP"}}{{.address}}{{end}}{{end}}{{end}}'):$(kubectl get svc istio-ingress -n istio-system -o jsonpath='{.spec.ports[0].nodePort}')/productpage"

# Verify mesh deployment

# From Docker's perspective

docker ps | grep istio-proxy

# From Kubernetes' perspective

kubectl get po

kubectl describe <a pod>

# Connect to proxy sidecar: exec into 'istio-proxy'

kubectl exec -it <a pod> -c istio-proxy -- /bin/bash

# Verify mesh configuration: generate load for Mixer telemetry adapter

docker run --rm istio/fortio load -c 1 -t 10m \
  "http://$(kubectl get nodes -o template --template='{{range.items}}{{range.status.addresses}}{{if eq .type "InternalIP"}}{{.address}}{{end}}{{end}}{{end}}'):$(kubectl get svc istio-ingress -n istio-system -o jsonpath='{.spec.ports[0].nodePort}')/productpage"


# Apply traffic routing policy

# Deploy new configuration to Nginx

istioctl create -f ./samples/bookinfo/kube/route-rule-all-v1.yaml

istioctl delete -f ./samples/bookinfo/kube/route-rule-all-v1.yaml

# A/B testing for user "lee"

kubectl apply -f ./samples/bookinfo/kube/route-rule-reviews-test-v2.yaml

See Mixer telemetry

SolarWinds Istio Adapter

Try it out - github.com/solarwinds/istio-adapter

Lee Calcote

Thank you. Questions?
