work in progress on

diffeo

PCSL internal group meeting

October 7, 2021

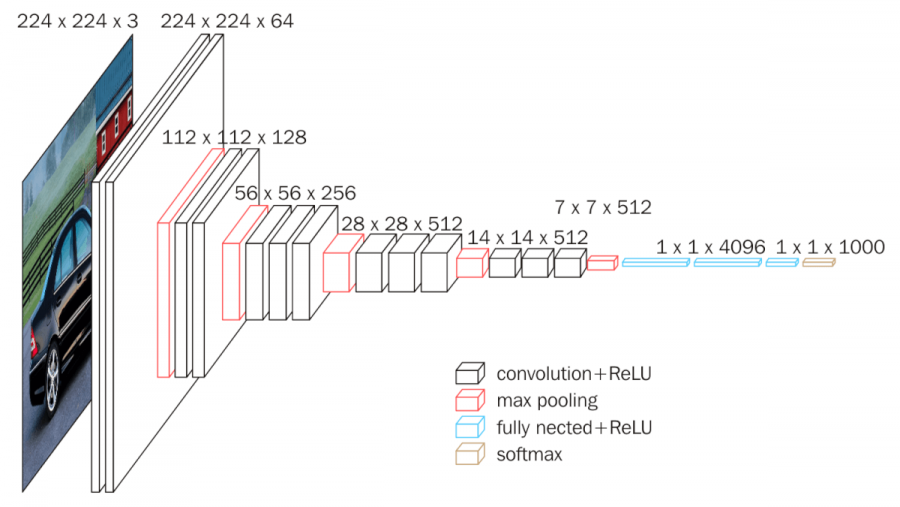

Motivation: the success of deep learning

- Deep learning is incredibly successful in a wide variety of tasks

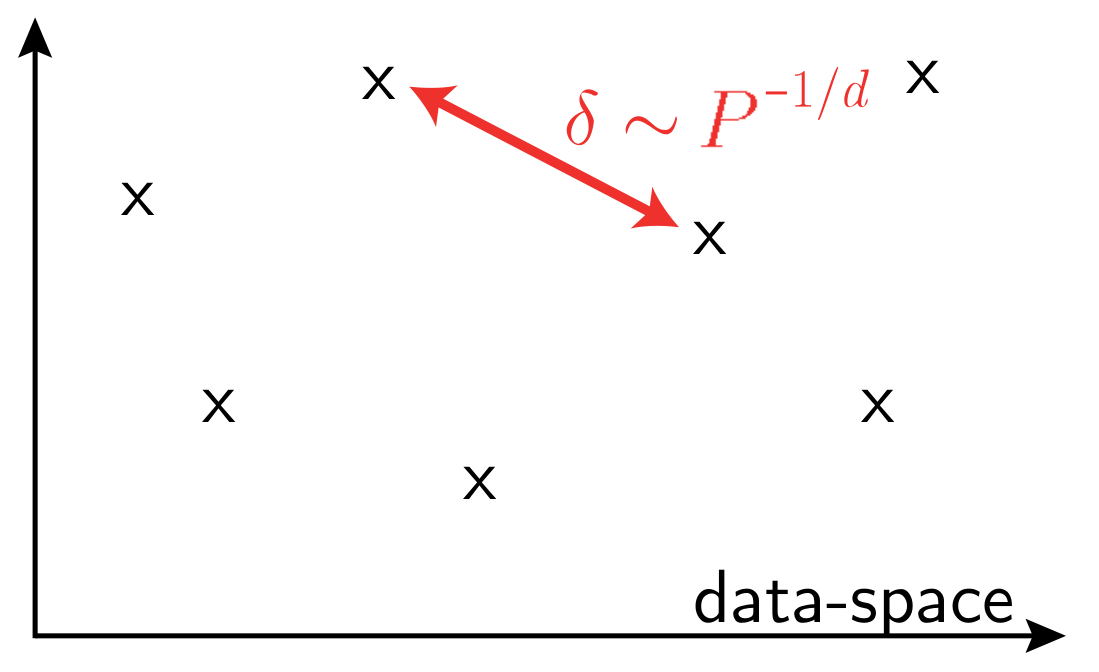

Curse of dimensionality when learning in high dimension, in a generic setting

vs.

- Learning in high dimension implies that the data is highly structured

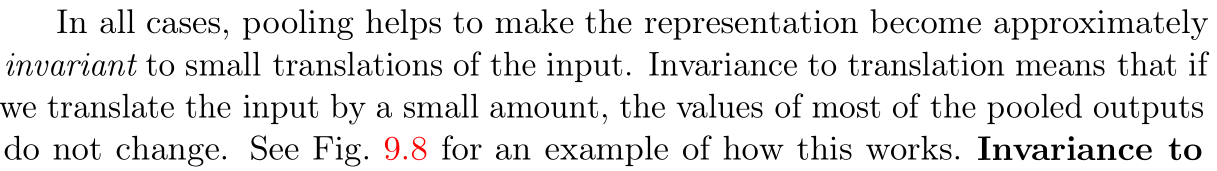

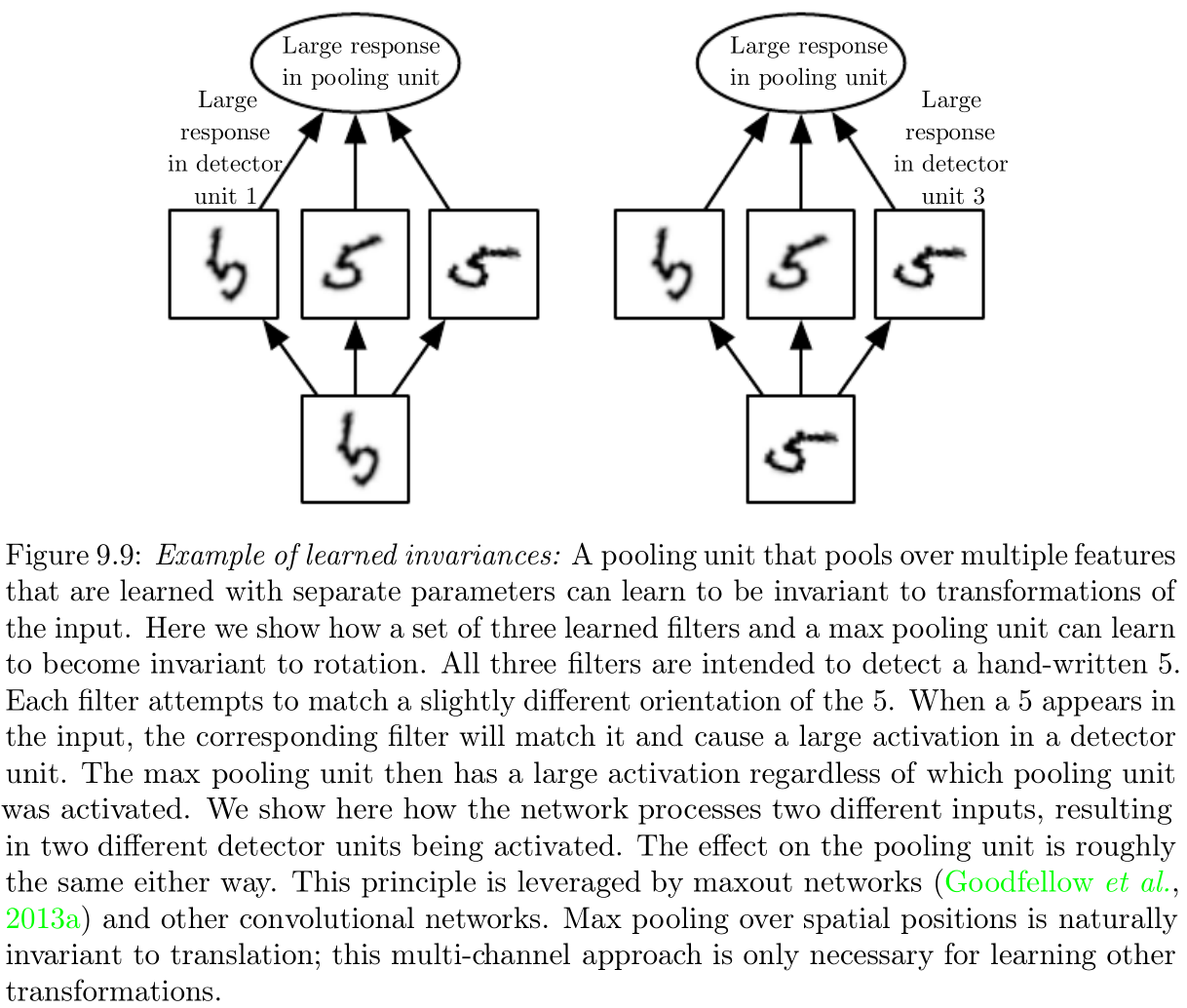

- A common idea is that neural nets can exploit such structure by becoming invariant to some aspects of the data.

\(P\): training set size

\(d\): data-space dimension

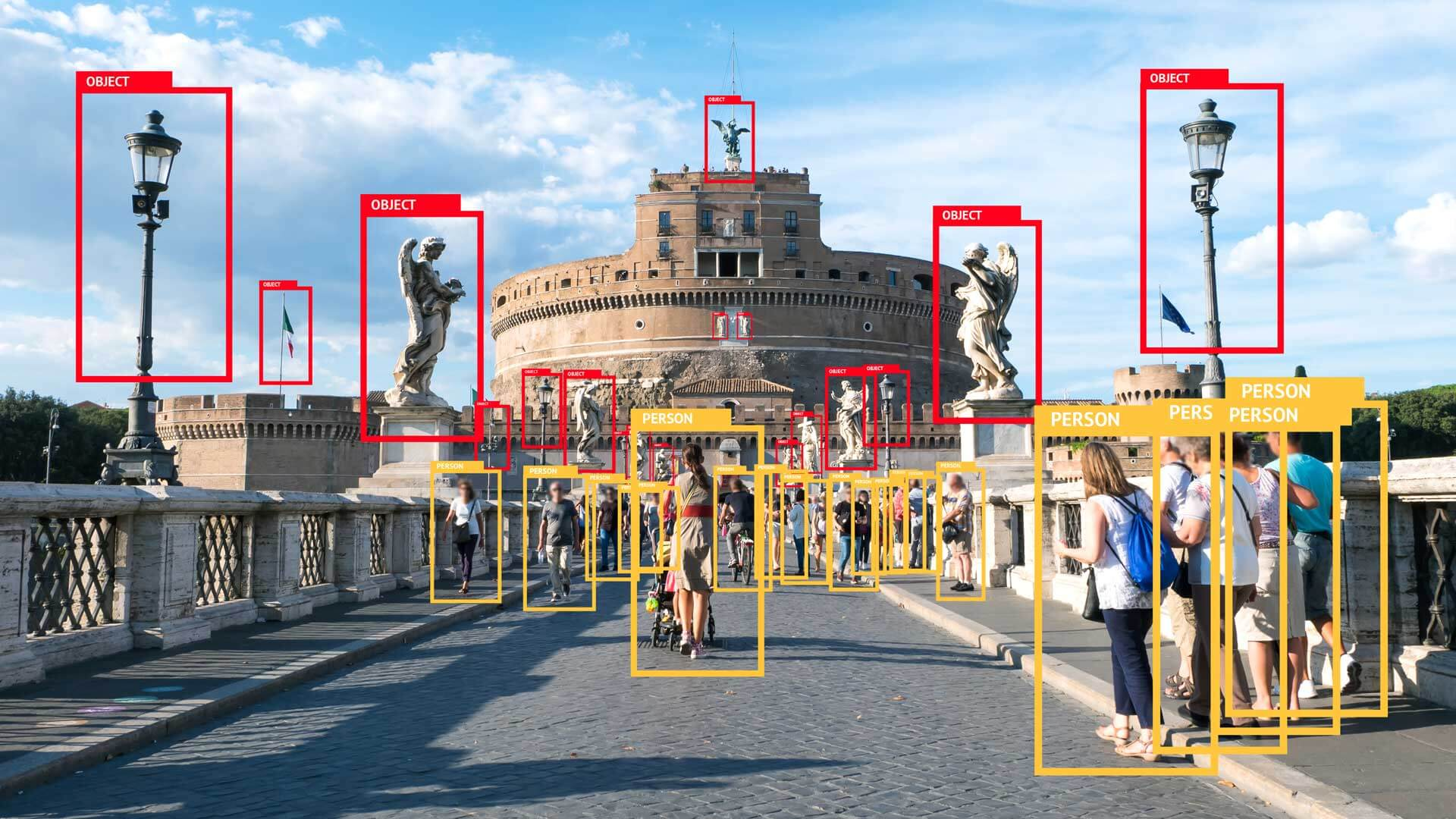

Hypothesis: images can be classified because the task is invariant to smooth deformations of small magnitude and CNNs exploit such invariance with training.

Invariance toward diffeomorphisms

Bruna and Mallat (2013); Mallat (2016)

...

Is it true or not?

Can we test it?

"Hypothesis" means, informally, that

\(\|f(x) - f(\tau x)\|^2\) is small if the deformation is small.

\(x\) : data-point

\(\tau\) : smooth deformation

\(f\) : network function

Yes! We can

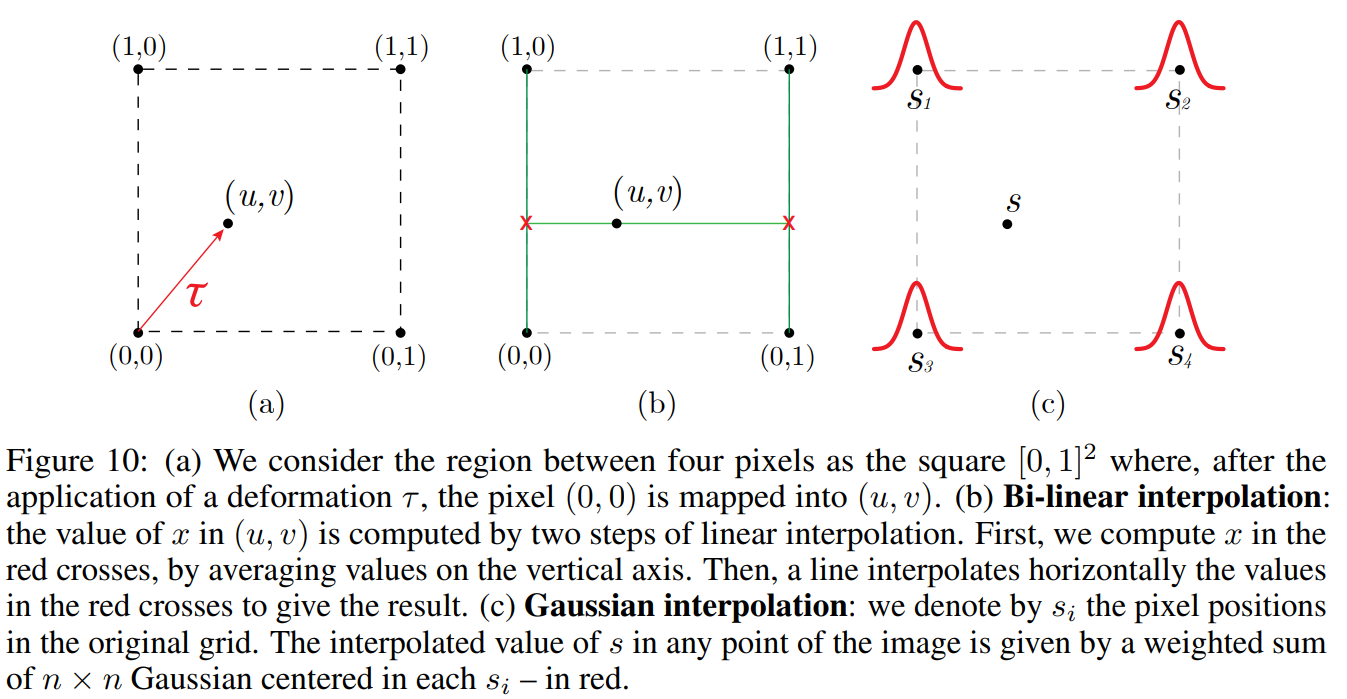

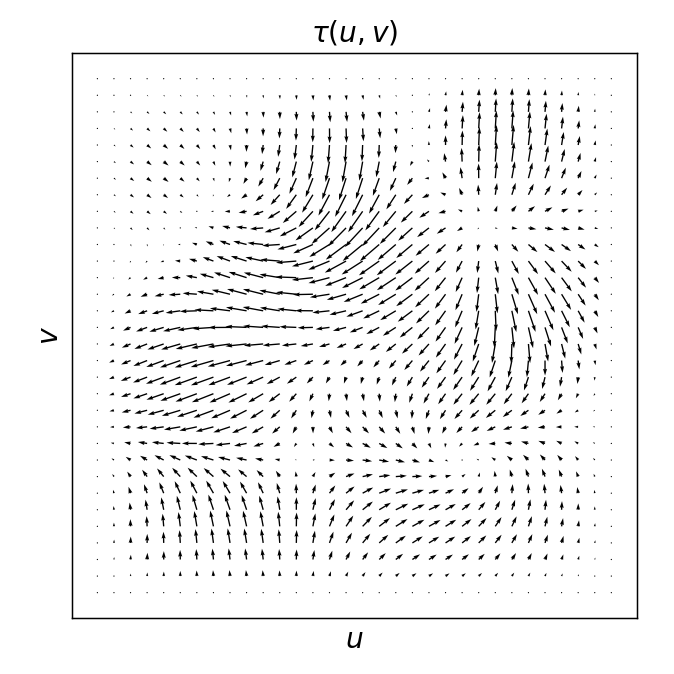

- Define an ensemble of smooth deformations to go from \(x\) to \(\tau x\) (won't talk about it here)

- Define an observable to characterize the invariance (next slide)

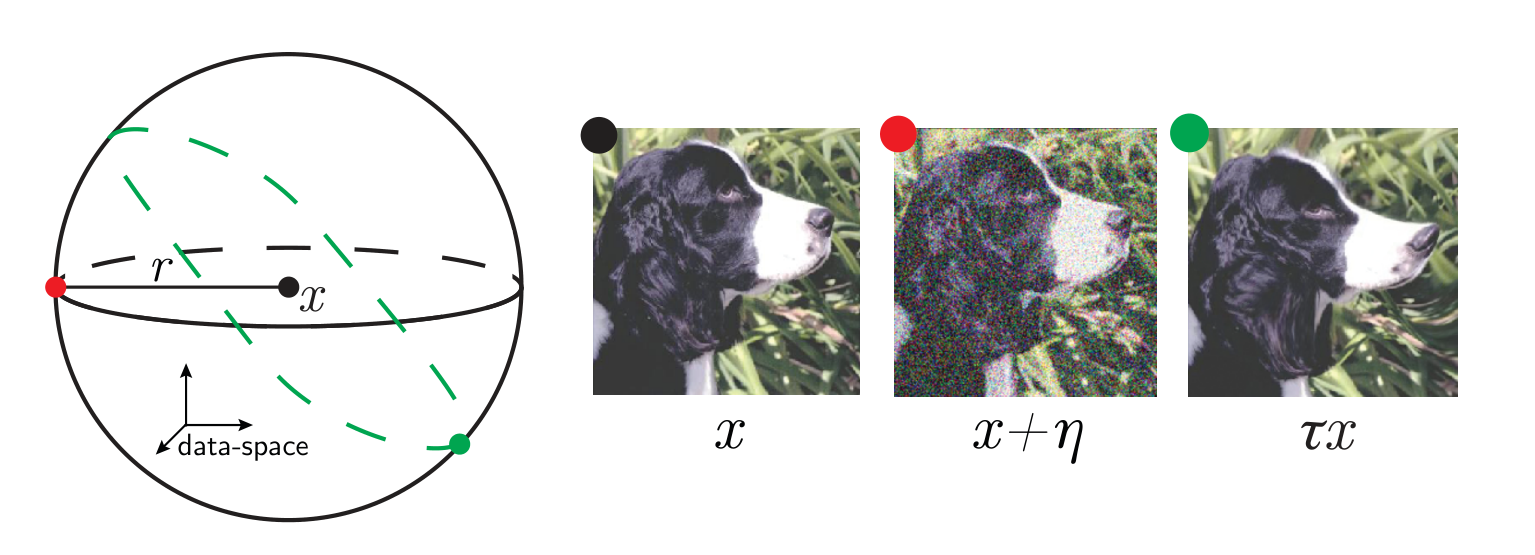

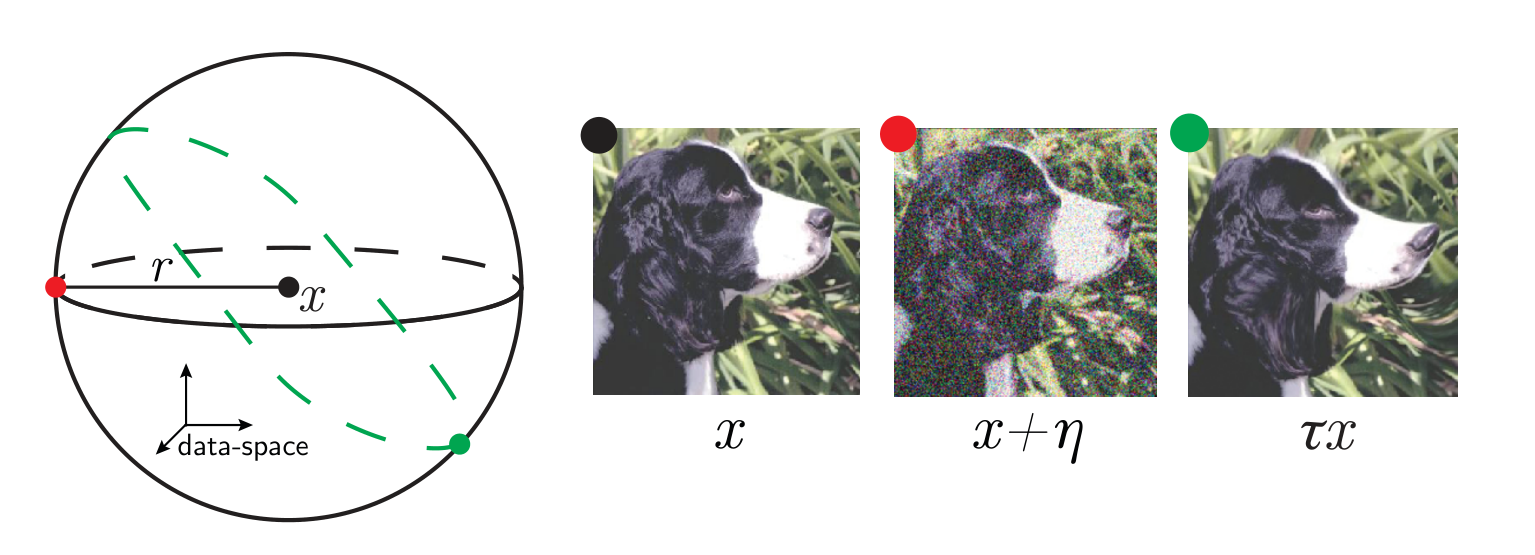

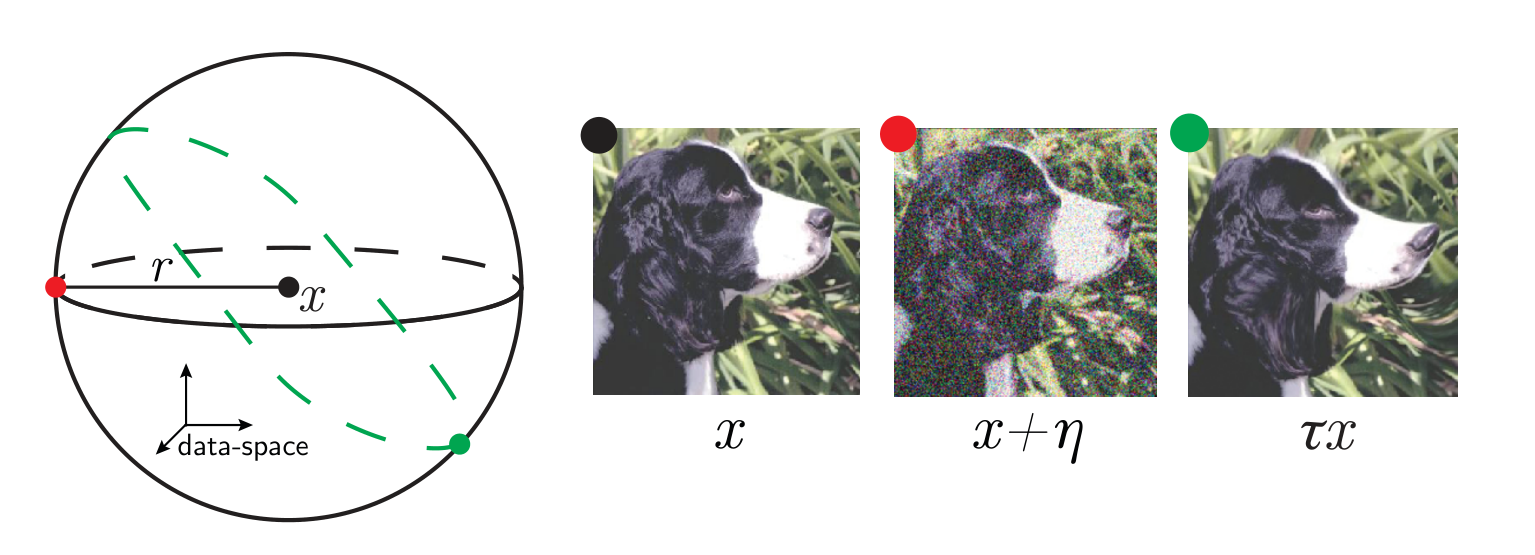

Relative stability to diffeomorphisms

\(x\) input image

\(\tau\) smooth deformation

\(\eta\) isotropic noise with \(\|\eta\| = \langle\|\tau x - x\|\rangle\)

\(f\) network function

Goal: quantify how a deep net learns to become less sensitive to diffeomorphisms than to generic data transformations.

Relative stability:

$$R_f = \frac{\langle \|f(\tau x) - f(x)\|^2\rangle_{x, \tau}}{\langle \|f(x + \eta) - f(x)\|^2\rangle_{x, \eta}}$$
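A minimal Monte-Carlo sketch of the \(R_f\) estimator, assuming `f` is any callable mapping an image to an output vector and `deform` is a hypothetical sampler returning one random deformation \(\tau x\) (the deformation ensemble itself is not specified here). The noise norm is matched to the average deformation size \(\langle\|\tau x - x\|\rangle\), as in the definition of \(\eta\).

```python
import numpy as np


def relative_stability(f, xs, deform, rng, n_tau=8):
    """Monte-Carlo estimate of R_f = D_f / G_f (sketch).

    f      : callable, image -> output vector (stand-in for the network)
    xs     : list of input images (NumPy arrays)
    deform : callable (x, rng) -> tau x, one random deformation (hypothetical sampler)
    """
    # n_tau random deformations per data-point
    pairs = [(x, deform(x, rng)) for x in xs for _ in range(n_tau)]
    # isotropic noise norm fixed to the average deformation size <||tau x - x||>
    r = np.mean([np.linalg.norm(tx - x) for x, tx in pairs])

    # numerator D_f: mean squared output change under deformations
    d_f = np.mean([np.sum((f(tx) - f(x)) ** 2) for x, tx in pairs])

    # denominator G_f: mean squared output change under noise of norm r
    g_terms = []
    for x, _ in pairs:
        eta = rng.standard_normal(x.shape)
        eta *= r / np.linalg.norm(eta)
        g_terms.append(np.sum((f(x + eta) - f(x)) ** 2))
    return d_f / np.mean(g_terms)


rng = np.random.default_rng(0)
xs = [rng.standard_normal((8, 8)) for _ in range(4)]
shift = lambda x, rng: np.roll(x, 1, axis=1)   # stand-in deformation: 1-pixel translation
print(relative_stability(lambda x: np.array([x.sum()]), xs, shift, rng))  # ~0
print(relative_stability(lambda x: x.ravel(), xs, shift, rng))            # >= 1
```

The one-pixel `np.roll` is only a placeholder for a smooth deformation: a translation-invariant `f` (the pixel sum) gives \(R_f \approx 0\), while the identity map gives \(R_f \geq 1\) by Jensen's inequality.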

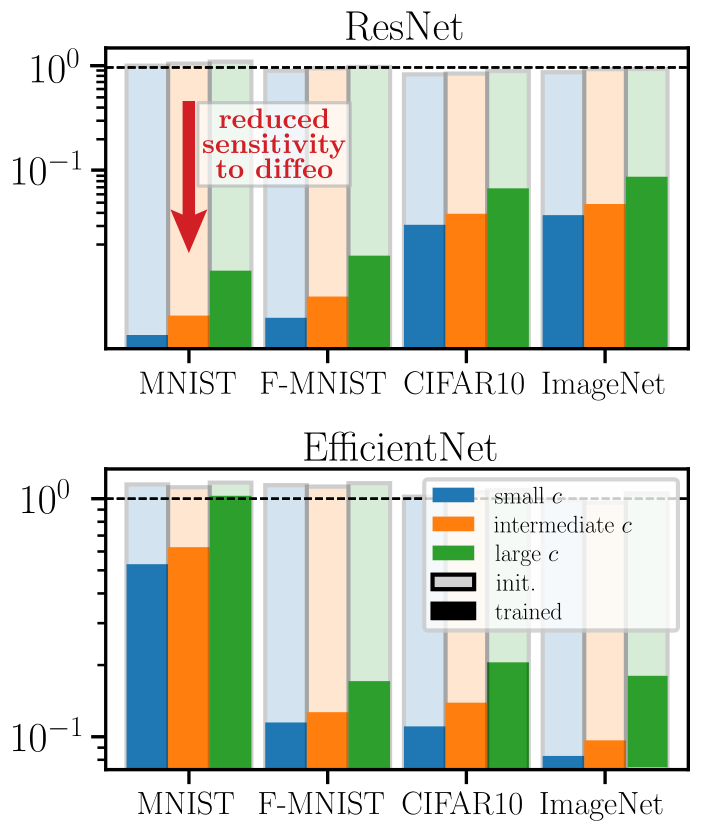

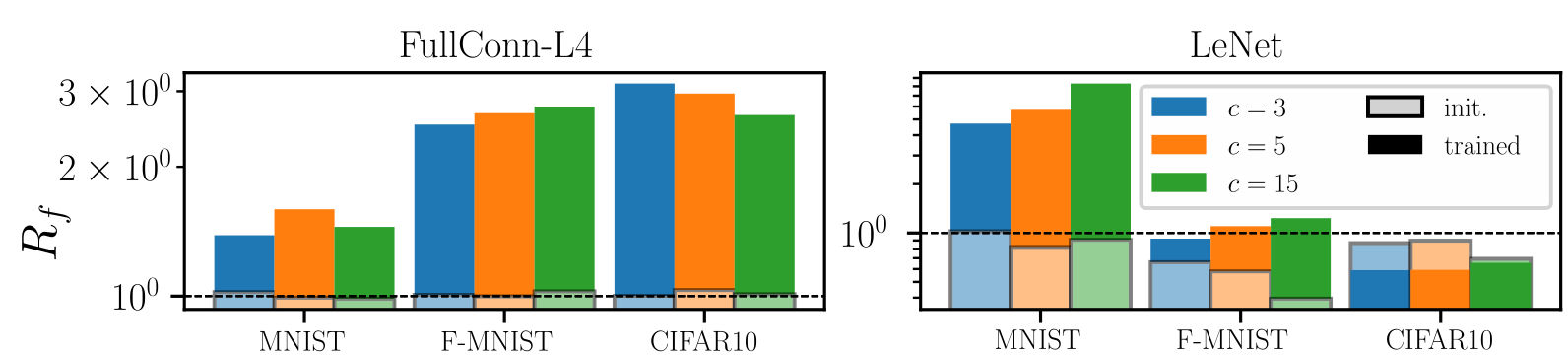

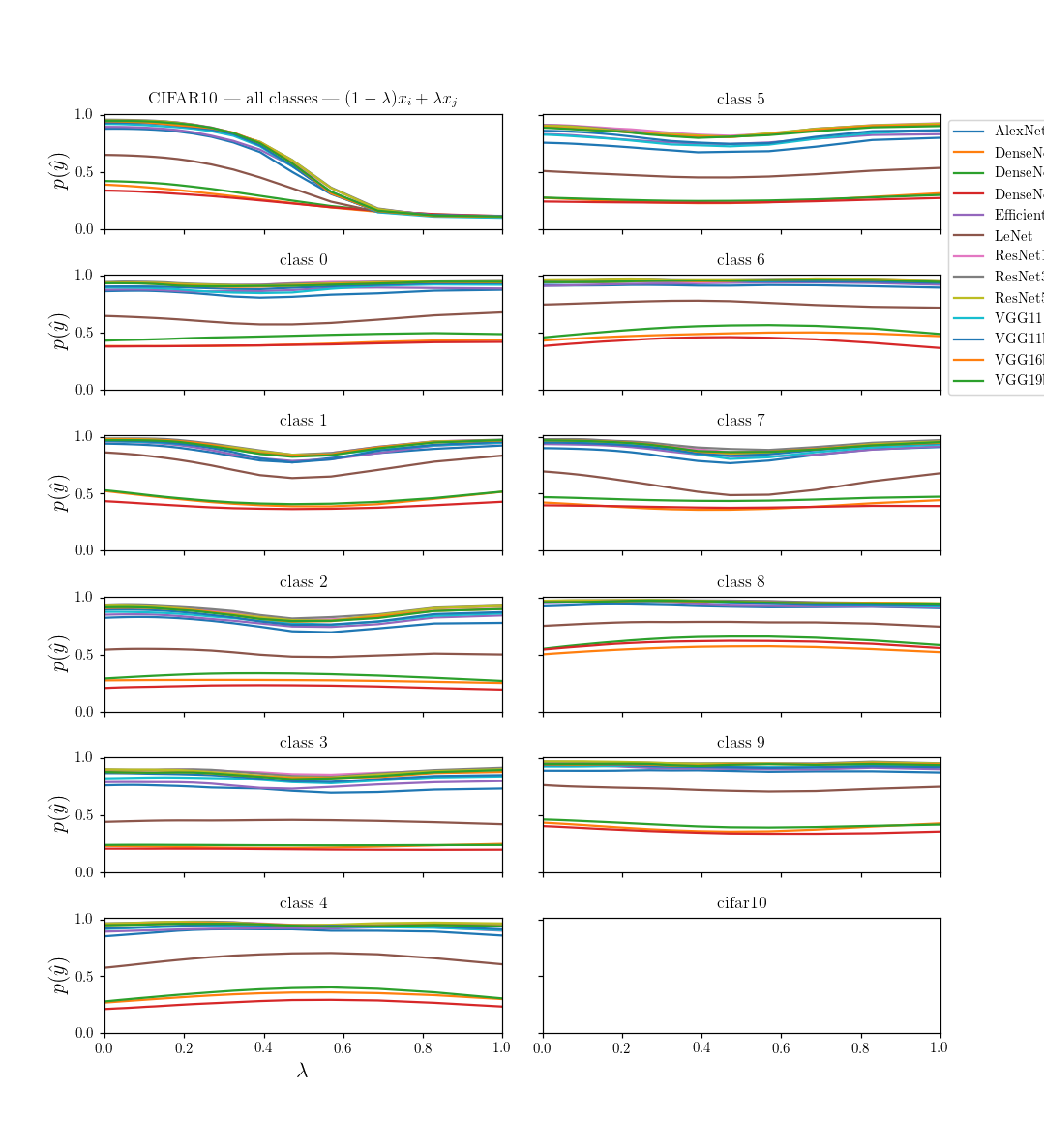

Probing neural nets with diffeo

$$R_f = \frac{\langle \|f(\tau x) - f(x)\|^2\rangle_{x, \tau}}{\langle \|f(x + \eta) - f(x)\|^2\rangle_{x, \eta}}$$

Results:

1. At initialization (shaded bars), \(R_f \approx 1\): SOTA nets are not stable to diffeos at initialization.

2. After training (full bars), \(R_f\) is reduced by one to two orders of magnitude, consistently across datasets and architectures.

3. By contrast, (2.) does not hold for fully connected nets and simple CNNs.

Deep nets learn to become stable to diffeomorphisms!

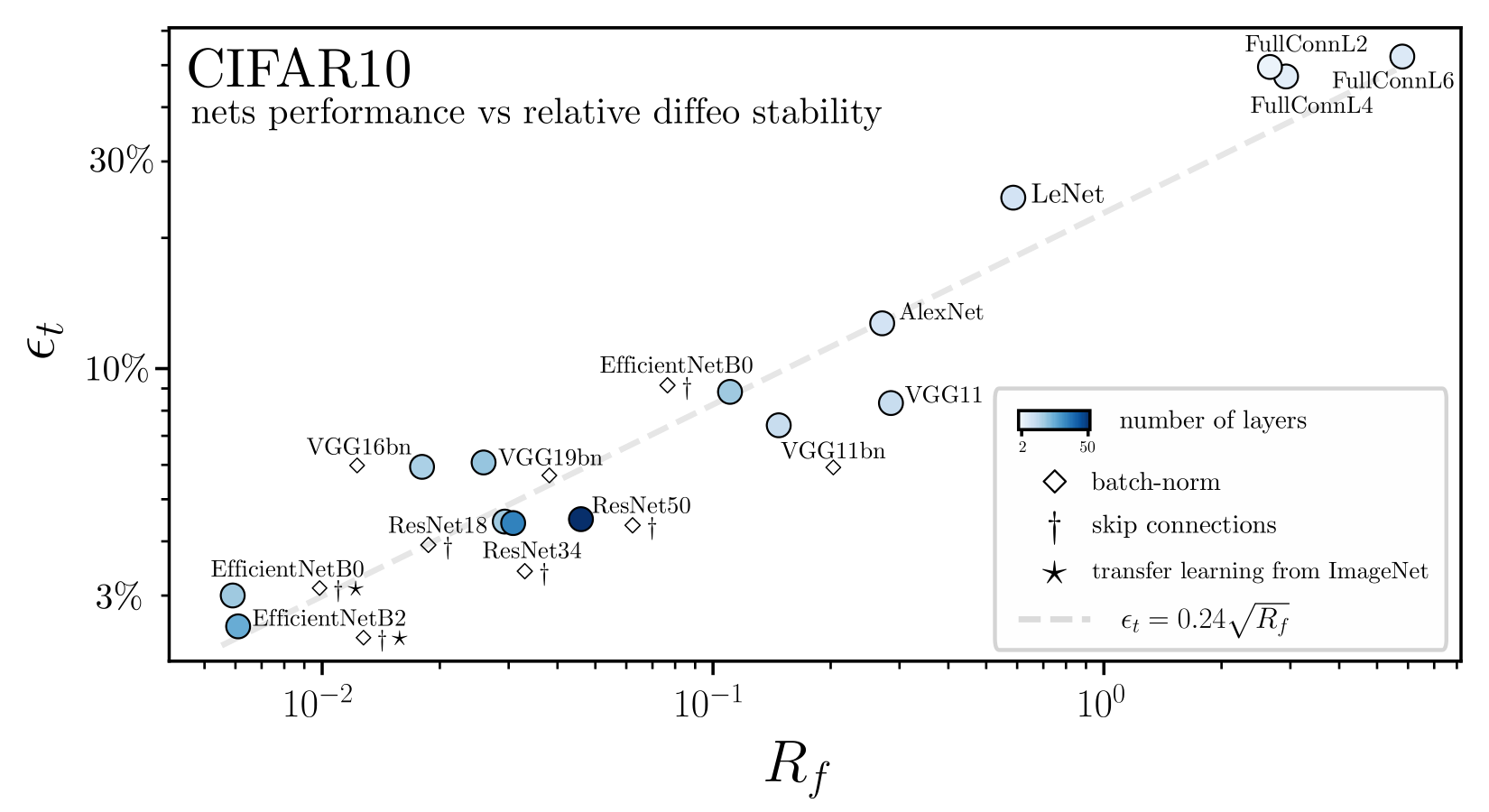

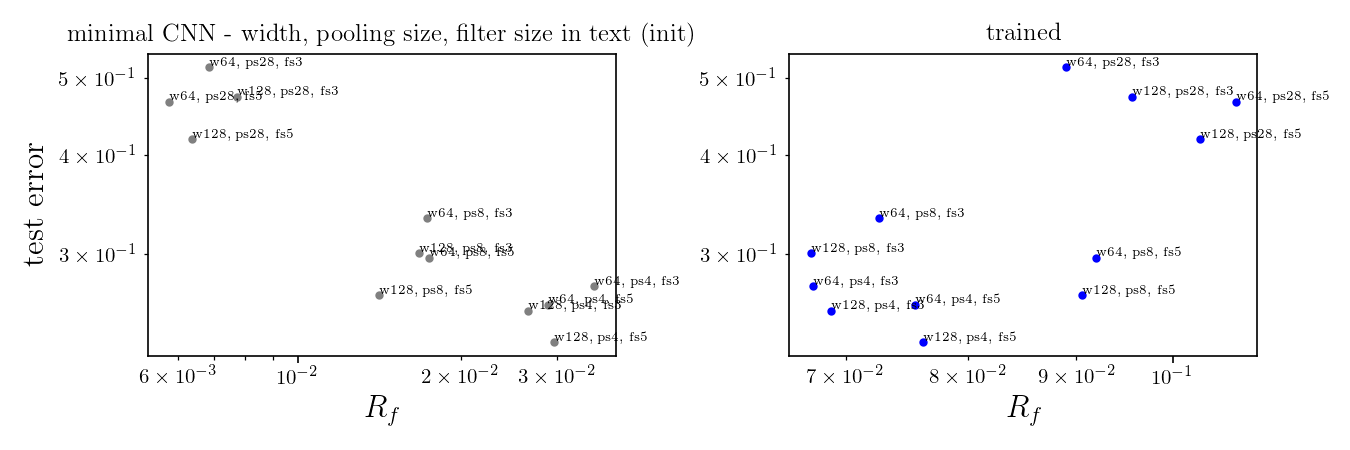

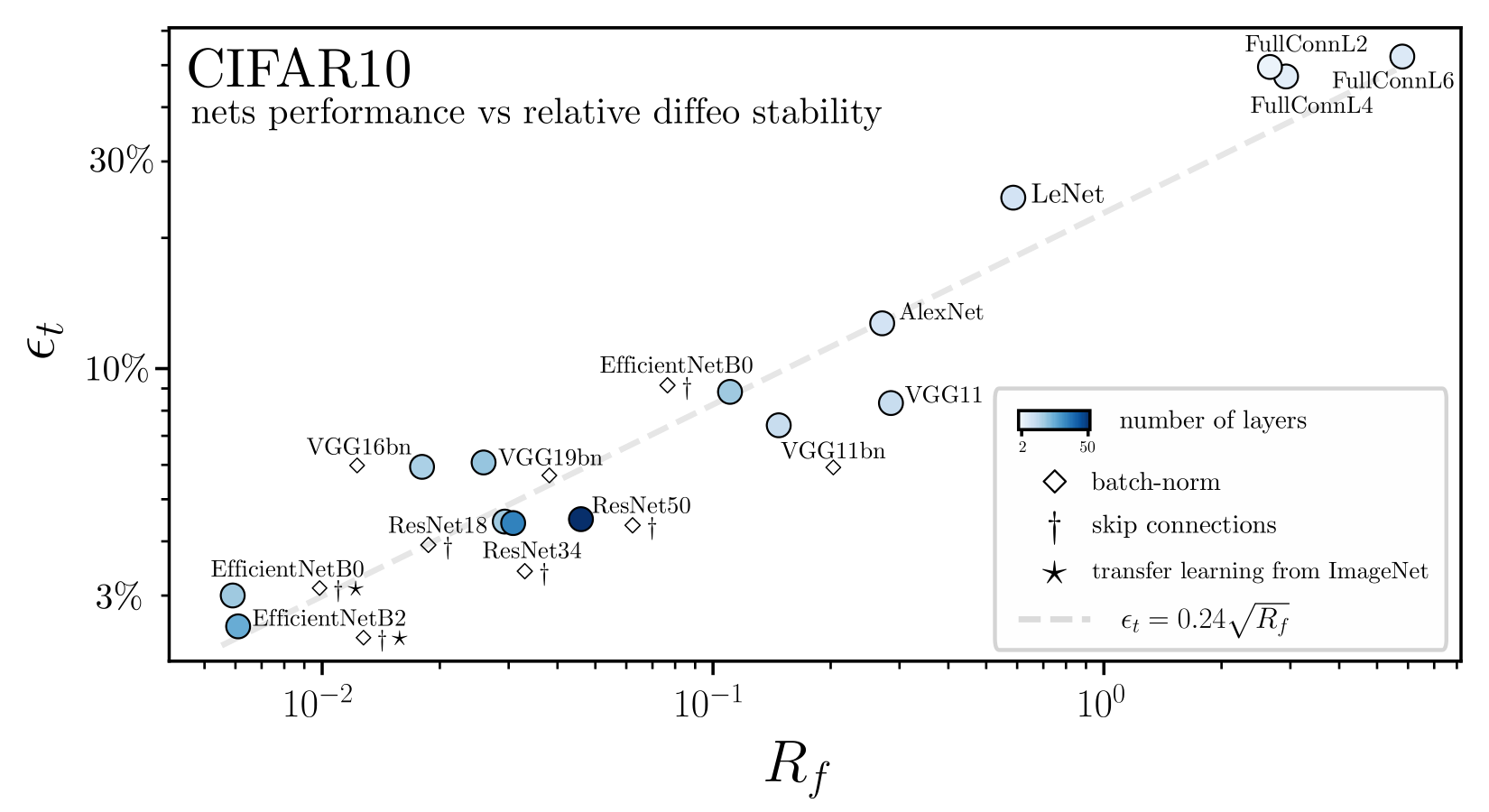

Is there a relation with performance?

Relative stability to diffeomorphisms correlates remarkably well with performance!

Many questions arise:

- Up to now, we fixed \(r = \|\eta\|\). Can we better understand the properties of the predictor around data-points as a function of \(r\)?

- Can we understand by which mechanism the network learns the invariance?

- Can we build a simple data model with diffeo invariance that reproduces the correlation between test error \(\epsilon_t\) and \(R_f\), and better understand that curve?

- ...

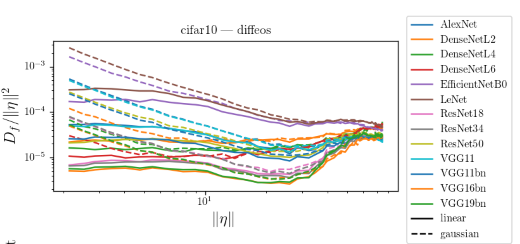

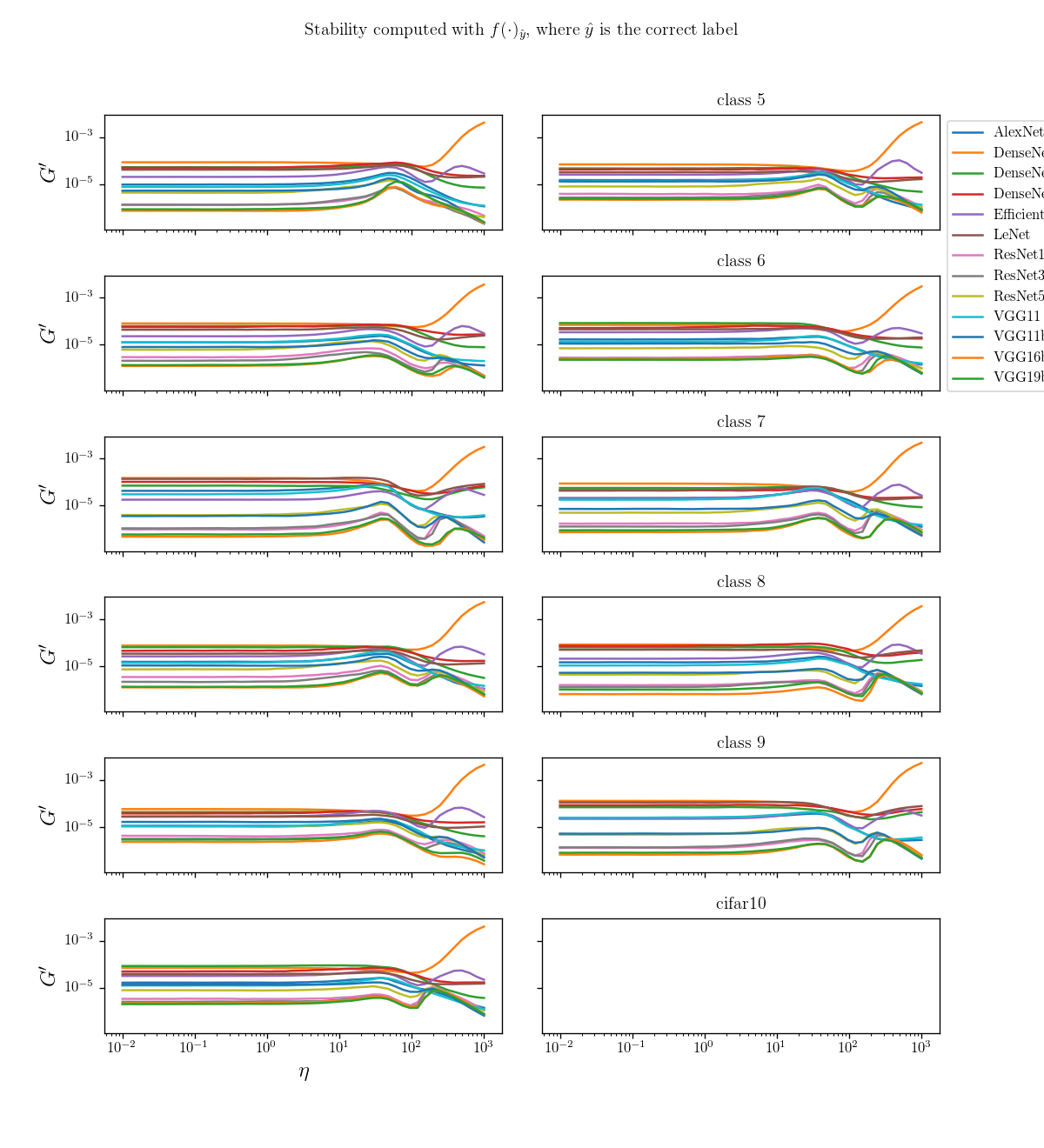

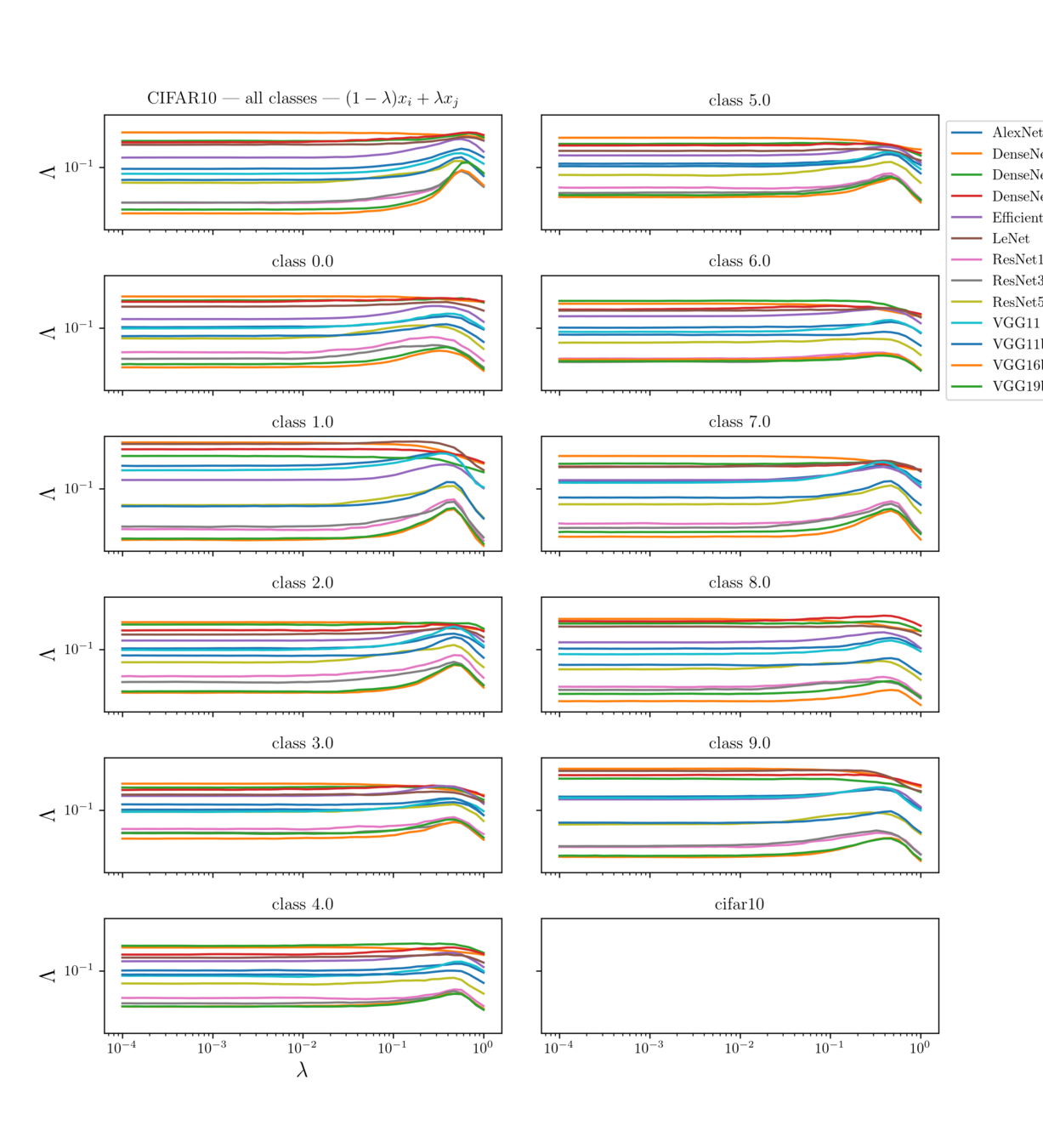

1. Stability as a function of the distance from data-points

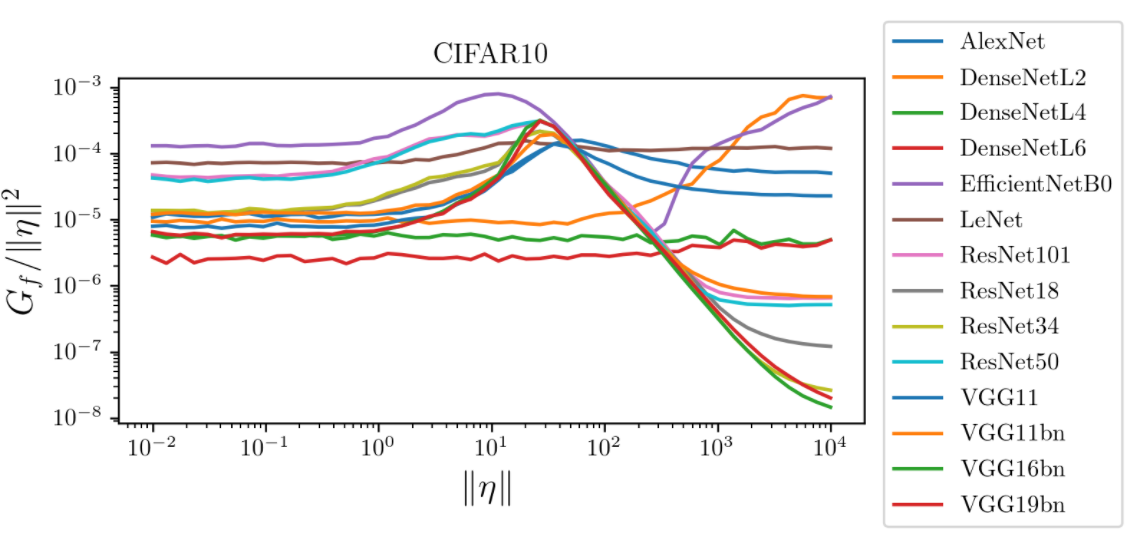

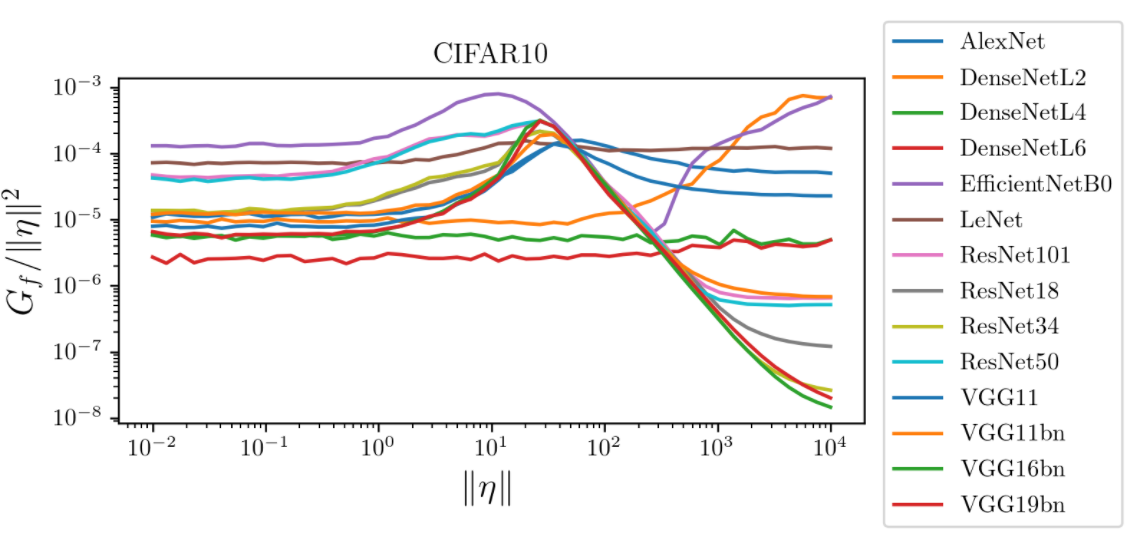

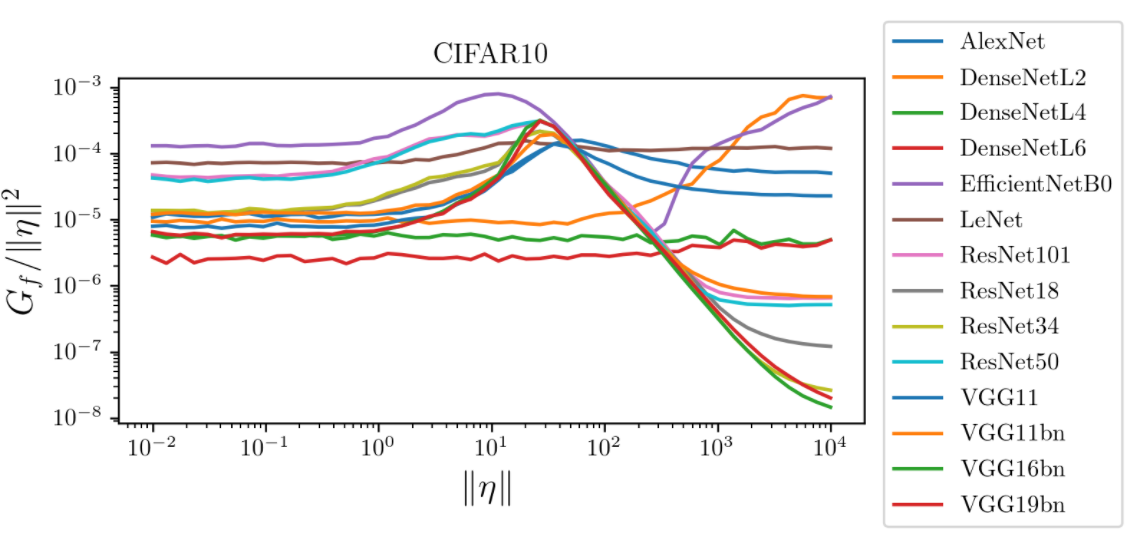

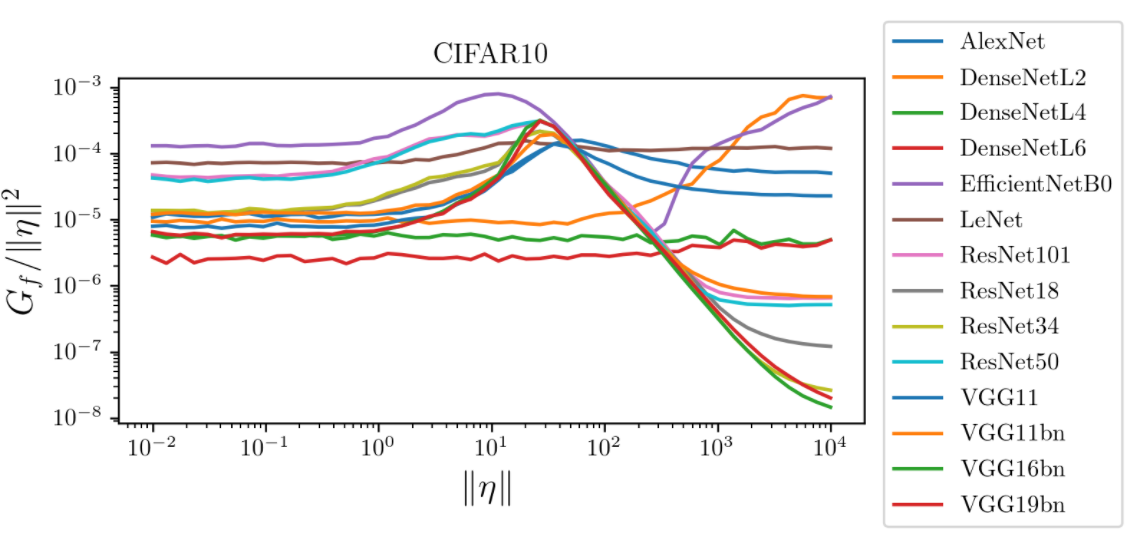

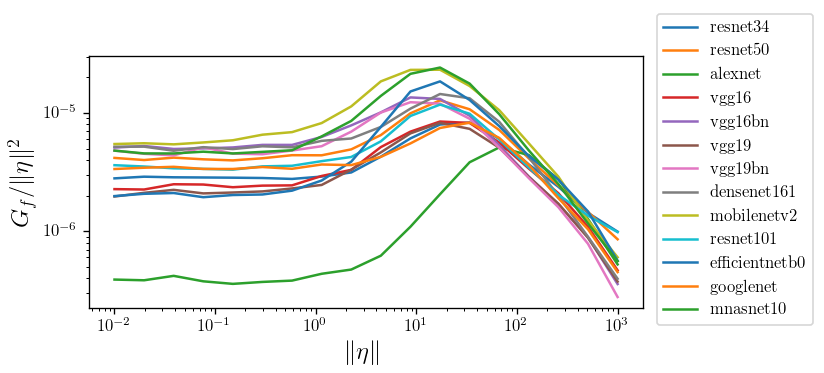

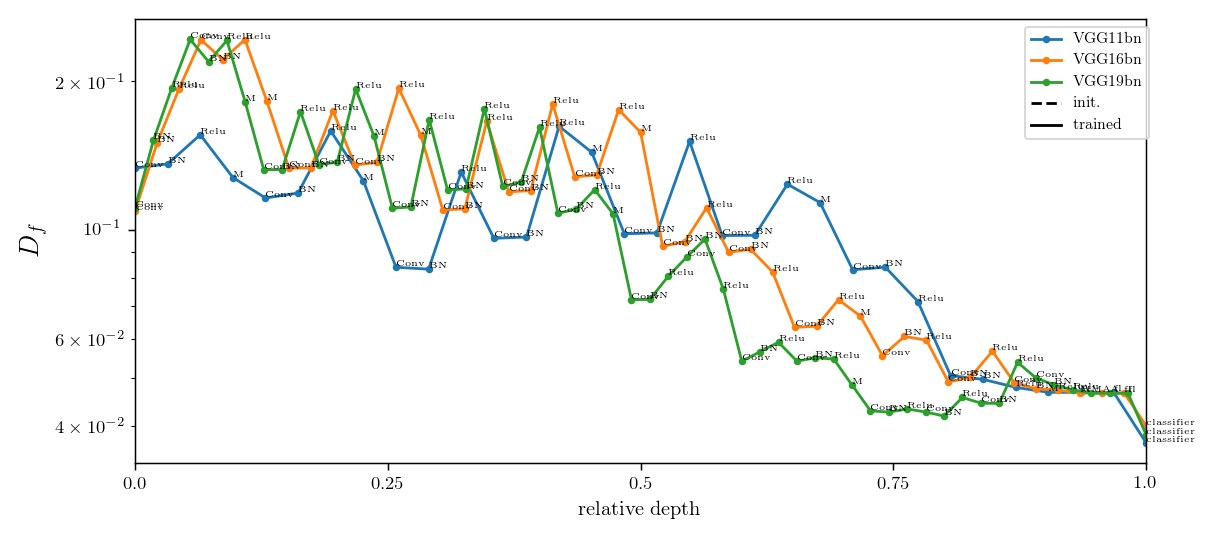

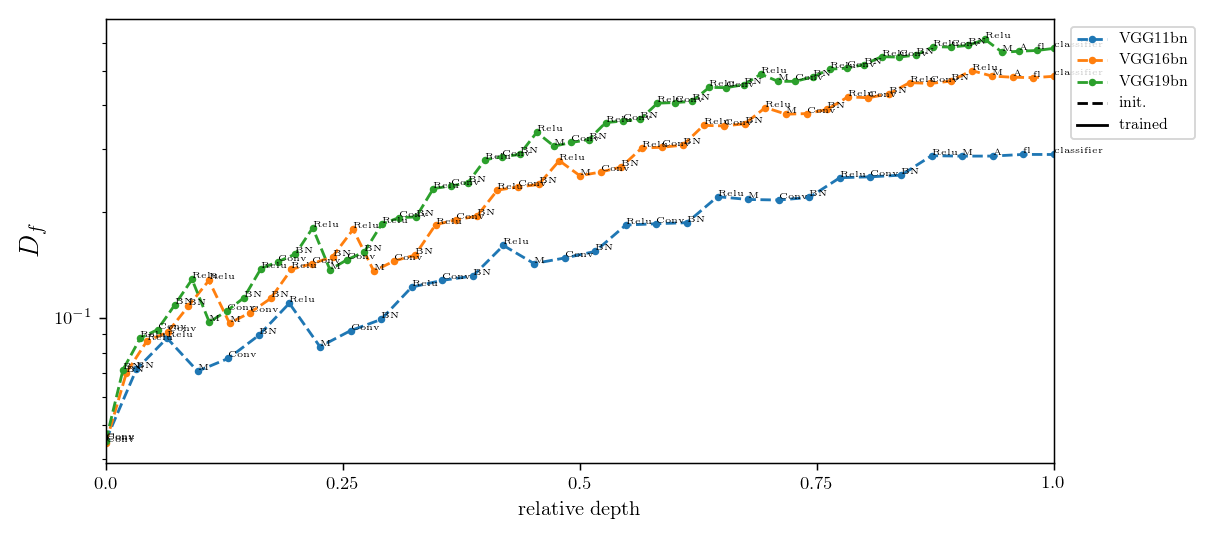

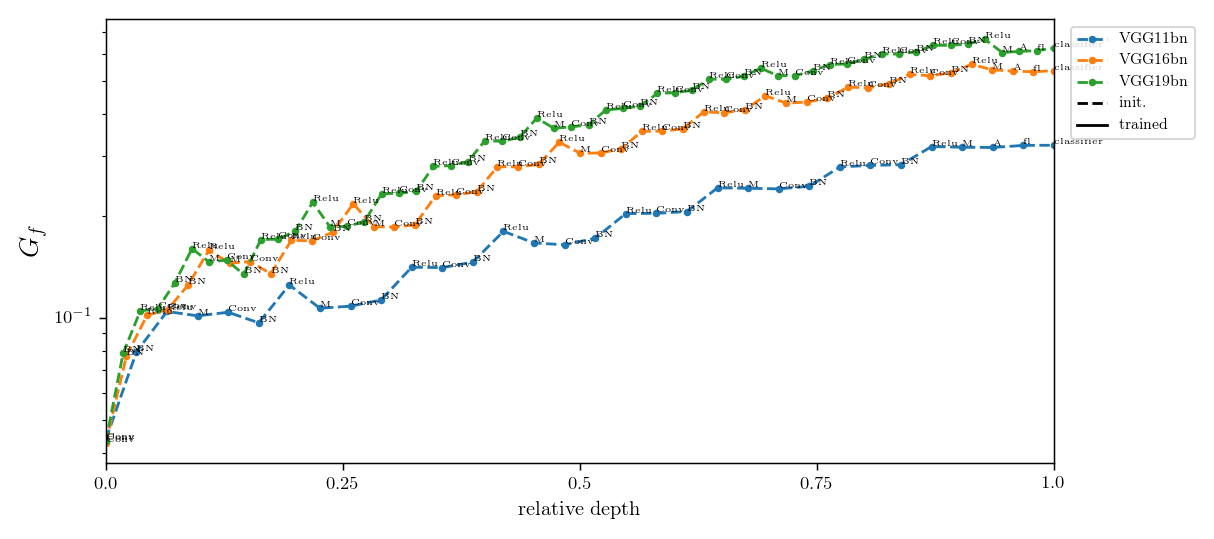

What's the shape of \(D_f\) and \(G_f\) as a function of \(r = \|\eta\|\)?

i.e. what does the predictor look like as we move away from data-points?

- In our work, we would have liked to measure gradients, i.e. \(r \to 0\).

- In practice, this is not possible because of the interpolation needed to compute \(\tau x\).

- We have chosen the smallest \(r\) for which interpolation doesn't matter.

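Recall: diffeo stability \(D_f = \langle \|f(\tau x) - f(x)\|^2\rangle_{x, \tau}\) and additive noise stability \(G_f = \langle \|f(x + \eta) - f(x)\|^2\rangle_{x, \eta}\), so that \(R_f = D_f / G_f\).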

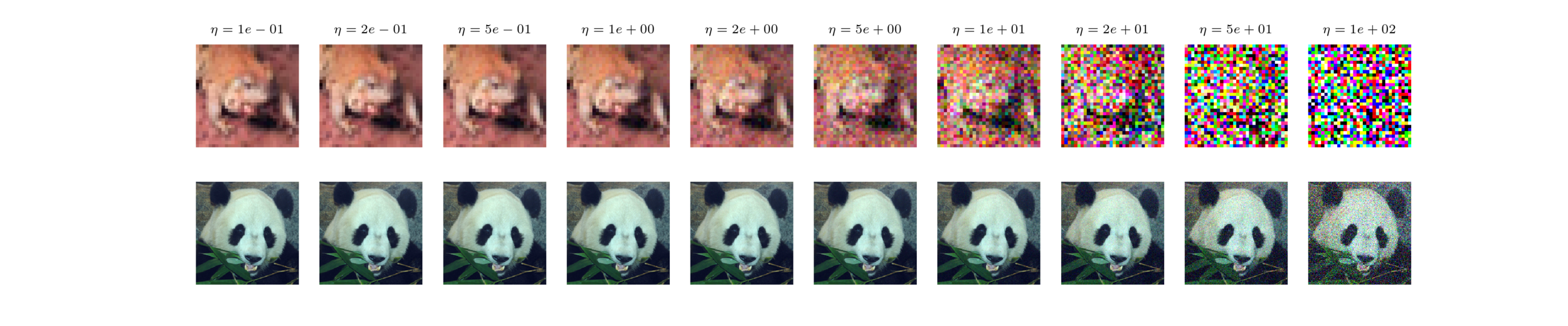

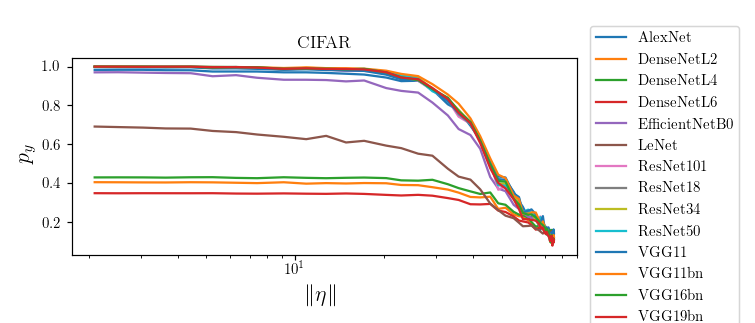

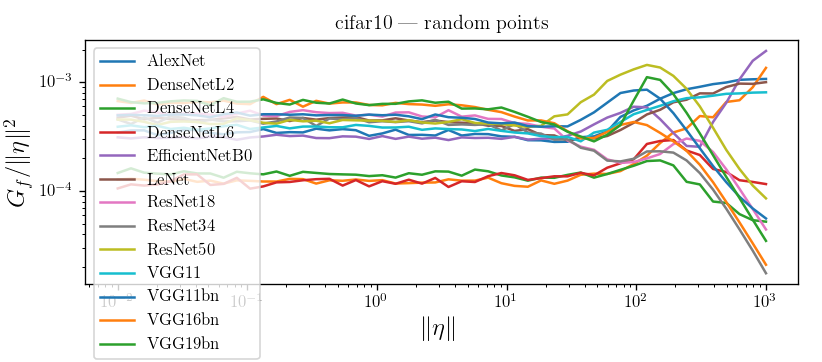

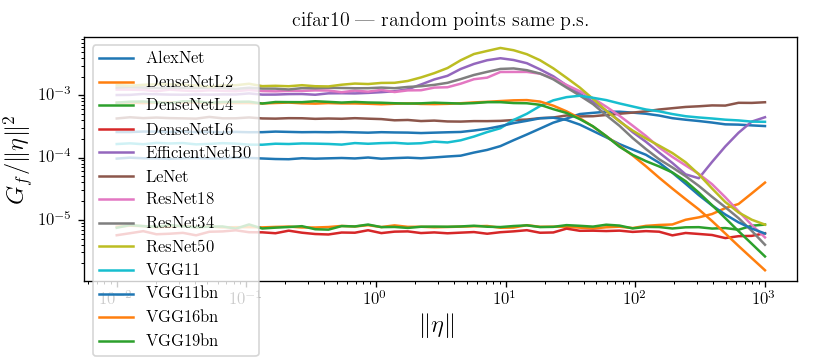

Gaussian noise stability around data-points

- For \(\eta\) small enough, \(f(x + \eta)\) behaves as its linearization around data-points, i.e. \(\|f(x + \eta) - f(x)\| \propto \|\eta\|\), hence \(G_f \propto \|\eta\|^2\).

- We plot \(G_f / \|\eta\|^2\) so that deviations from the linear regime become visible.

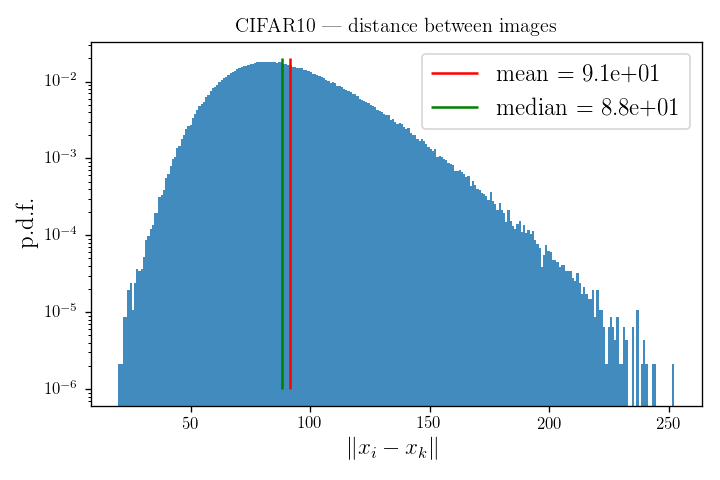

- Most architectures are in the linear regime up to \(\eta^*\sim 10\), after which there is a bump.

- Hypothesis: the bump corresponds to the average decision boundary distance from data-points.
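The linear-regime argument can be checked with a small sketch that computes \(G_f(r) / r^2\) for a list of noise norms \(r\). Here `f` is any callable; a toy linear map and a saturating `tanh` map stand in for a network (an assumption for illustration only). For a linear `f` the curve is flat, so any departure from flatness signals leaving the linear regime.

```python
import numpy as np


def noise_stability_curve(f, xs, radii, rng, n_dirs=16):
    """G_f(r) / r^2 for each r in radii (sketch).

    The same random unit directions are reused for every radius, so the curve is
    exactly flat when f is linear around the data-points.
    """
    shape = xs[0].shape
    dirs = rng.standard_normal((n_dirs,) + shape)
    norms = np.linalg.norm(dirs.reshape(n_dirs, -1), axis=1)
    dirs /= norms.reshape((n_dirs,) + (1,) * len(shape))   # unit-norm directions
    curve = []
    for r in radii:
        g = np.mean([np.sum((f(x + r * u) - f(x)) ** 2) for x in xs for u in dirs])
        curve.append(g / r ** 2)
    return np.array(curve)


rng = np.random.default_rng(1)
A = rng.standard_normal((3, 16))
xs = [0.1 * rng.standard_normal(16) for _ in range(5)]
radii = [0.1, 1.0, 10.0]
print(noise_stability_curve(lambda x: A @ x, xs, radii, rng))           # flat
print(noise_stability_curve(lambda x: np.tanh(A @ x), xs, radii, rng))  # drops at large r
```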


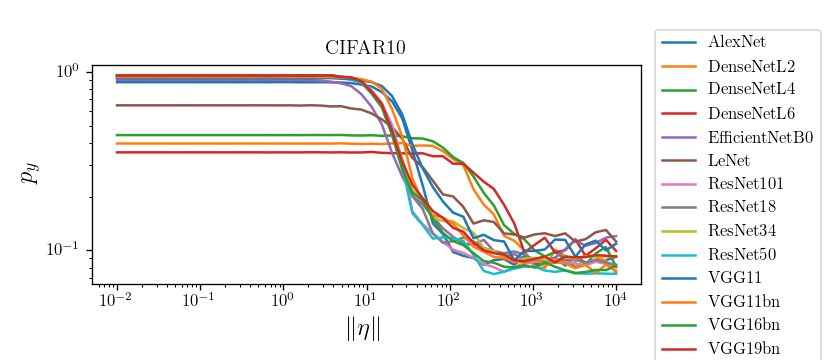

Recall: to train a net with cross-entropy loss, we apply a softmax to the output,

$$\hat p_i = \frac{e^{f_i(x)}}{\sum_j e^{f_j(x)}},$$

where \(\hat p_i\) can be interpreted as the probability that the network assigns to \(i\) being the correct class.

Bump corresponds to the regime in which nets start misclassifying!
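The softmax step and the "nets start misclassifying" criterion can be sketched as follows. `flips_prediction` is a hypothetical helper that counts a misclassification whenever the predicted class \(\operatorname{argmax}_i \hat p_i\) changes under a perturbation; since the softmax is monotonic, this equals the argmax of the raw outputs.

```python
import numpy as np


def softmax(logits):
    """p_hat_i = exp(f_i) / sum_j exp(f_j), stabilized by subtracting the max."""
    z = logits - np.max(logits, axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def cross_entropy(logits, label):
    """Loss -log p_hat_label for a single example."""
    return -np.log(softmax(logits)[label])


def flips_prediction(f, x, eta):
    """True if adding the perturbation eta changes the predicted class."""
    return np.argmax(f(x + eta)) != np.argmax(f(x))


print(softmax(np.array([1.0, 2.0, 3.0])))
print(cross_entropy(np.array([0.0, 0.0]), 0))   # log 2 for two equal logits
```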

Puzzle

- Similar results are obtained for ImageNet, with the bump occurring around the same position

- ImageNet samples, though, are 224x224 pixels (CIFAR10 is 32x32), so the bump occurs here at a much smaller noise per pixel

Visually, the position of the bump makes sense for CIFAR10, but not much for ImageNet

CIFAR10:

ImageNet:
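The per-pixel puzzle is simple arithmetic: an isotropic perturbation of total norm \(r\) spread over \(D\) pixel values has a root-mean-square amplitude of \(r/\sqrt{D}\) per value. The sketch below assumes 3 color channels, comparable pixel normalization for both datasets, and \(r \sim 10\) for the bump position.

```python
import math


def noise_per_pixel(r, height, width, channels=3):
    """RMS amplitude per pixel value of an isotropic perturbation of total norm r."""
    return r / math.sqrt(channels * height * width)


r = 10.0                                   # bump position read off the plots (~10)
cifar = noise_per_pixel(r, 32, 32)         # ~0.18 per pixel value
imagenet = noise_per_pixel(r, 224, 224)    # ~0.026 per pixel value
print(cifar, imagenet, cifar / imagenet)   # ratio is 224 / 32 = 7
```

At the same \(r\), each ImageNet pixel is therefore perturbed about 7 times less than a CIFAR10 pixel.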

What's the case for diffeo stability?

- No bump if we stay on the diffeo manifold

- The two interpolation methods (full vs dashed) disagree in \(G_f\) linear region

- \(R_f\) is computed in the bump region

where \(\eta_z\) is pointing in the direction of another datapoint \(z\)

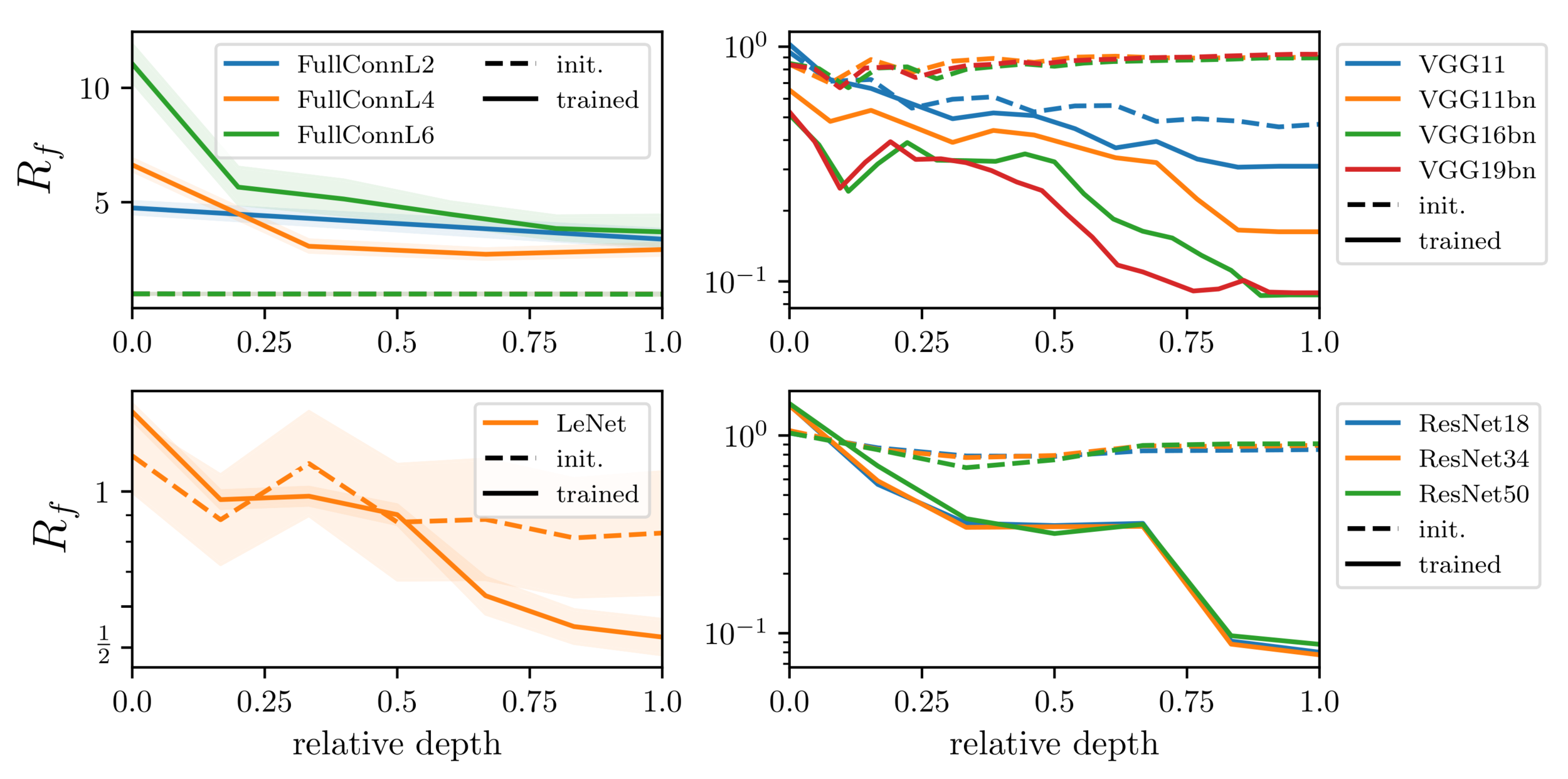

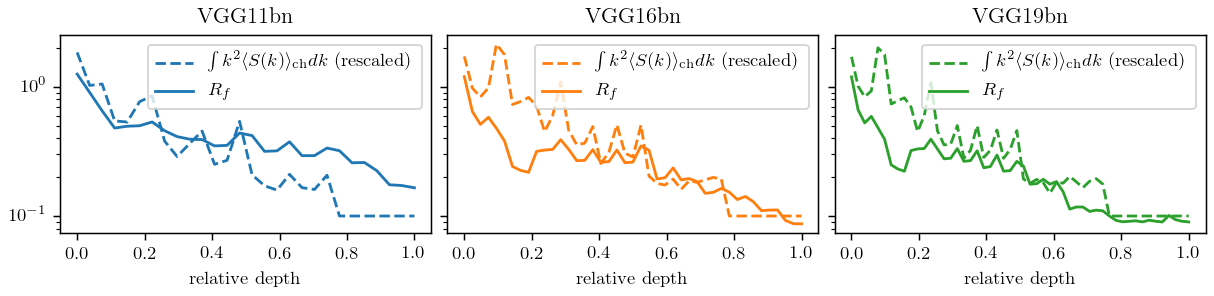

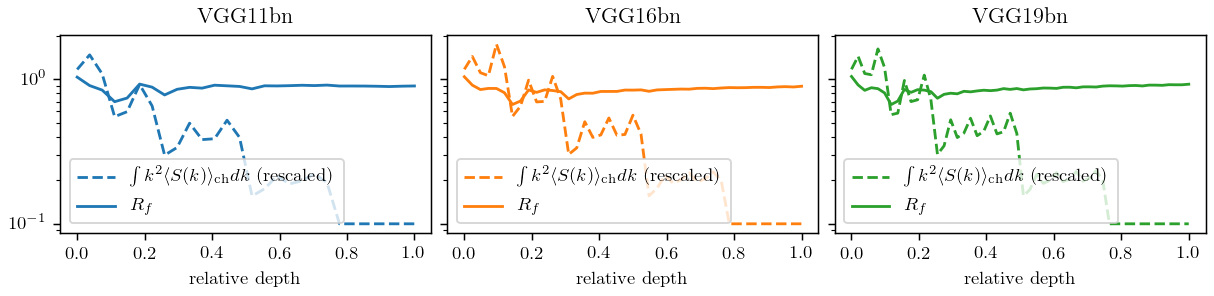

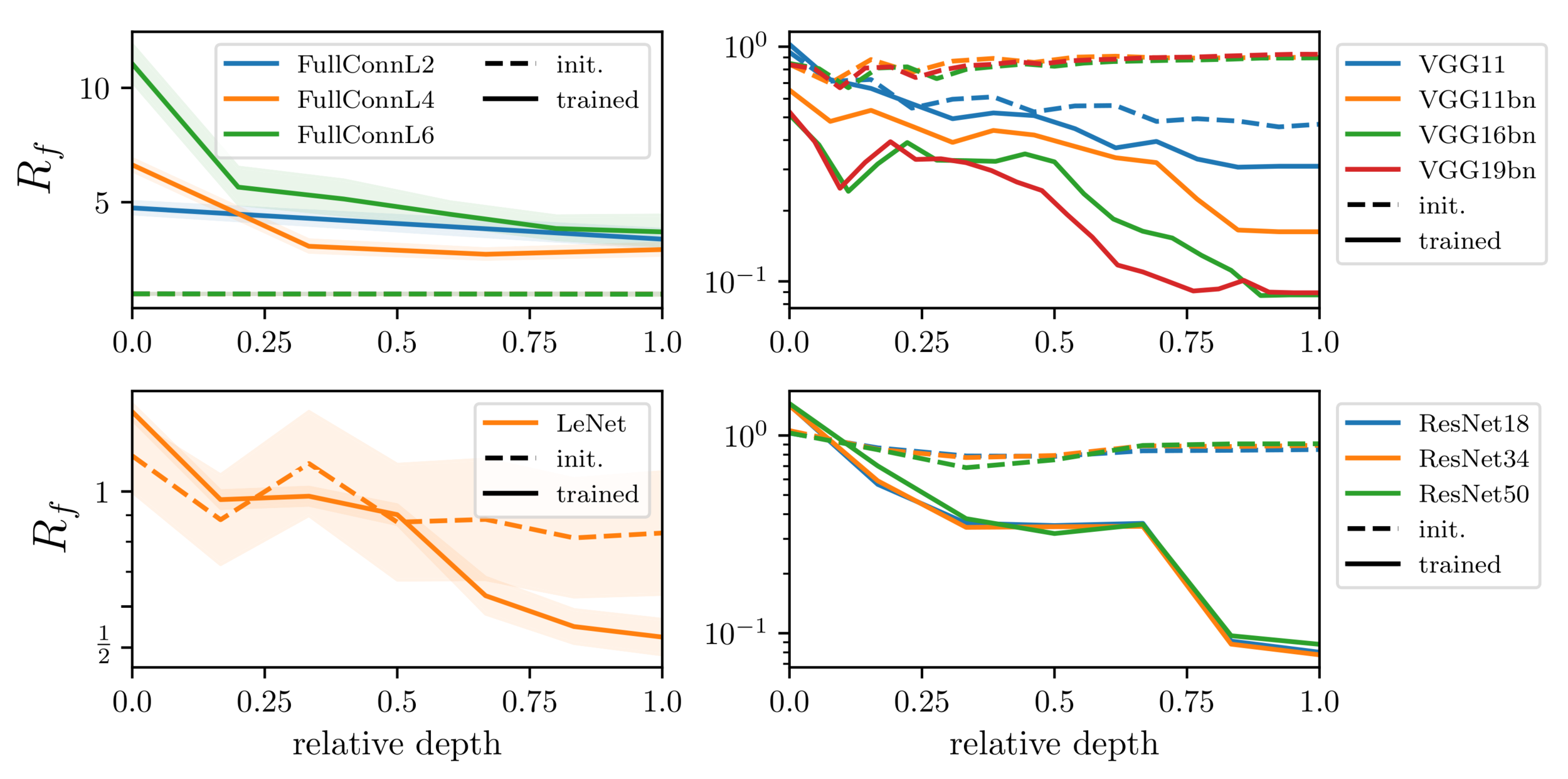

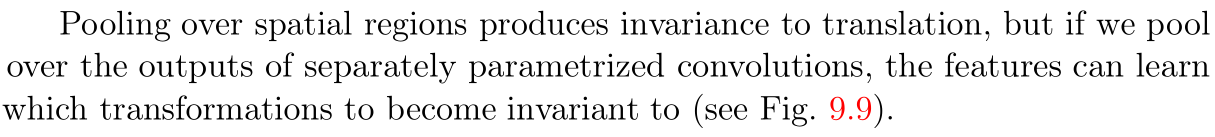

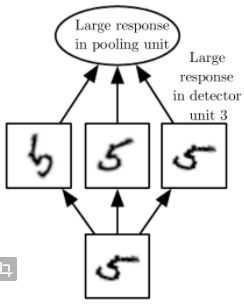

2. What's the mechanism by which relative stability is achieved?

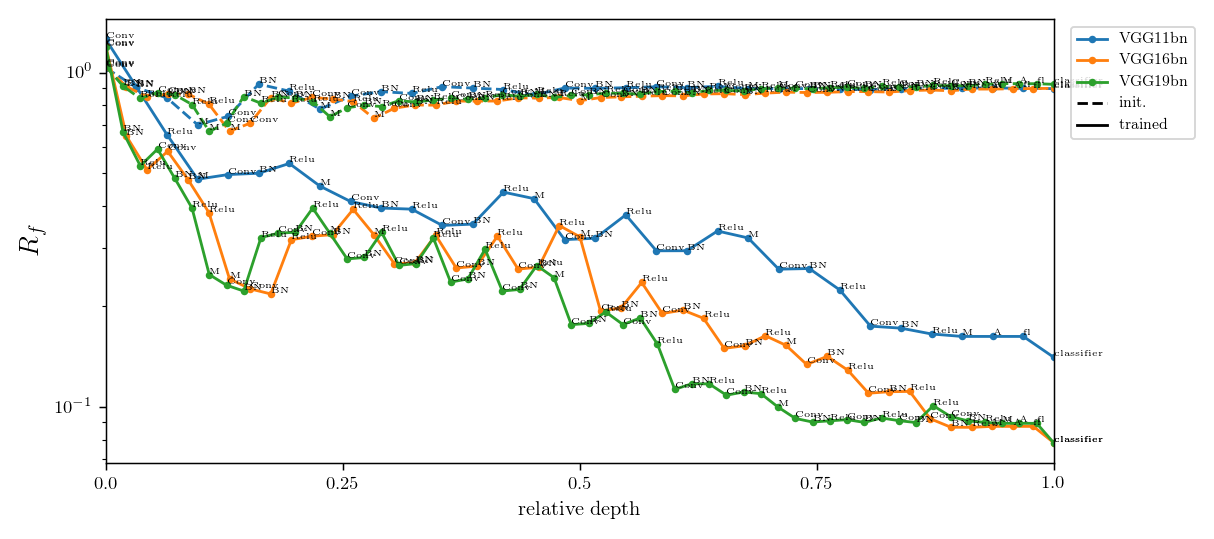

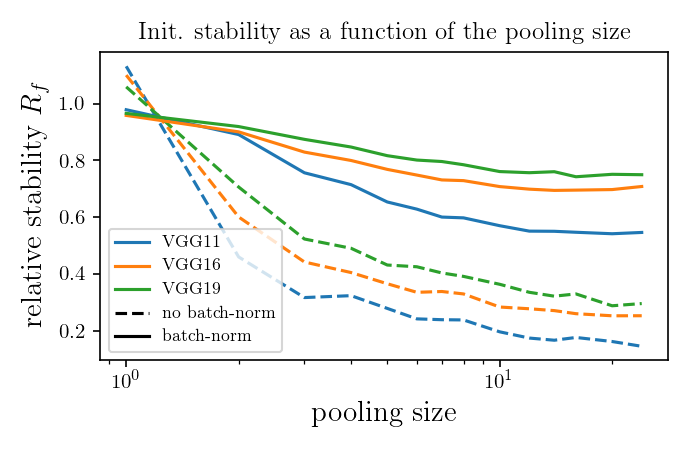

- \(R_f\) monotonically decreases with depth

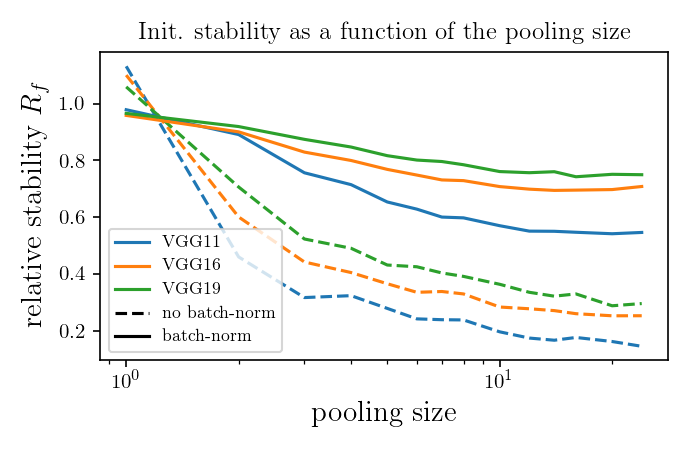

- Pooling gives relative stability

Observations:

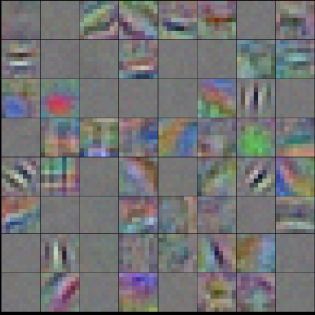

Hypothesis: nets become relatively stable by making filters low-pass with training.

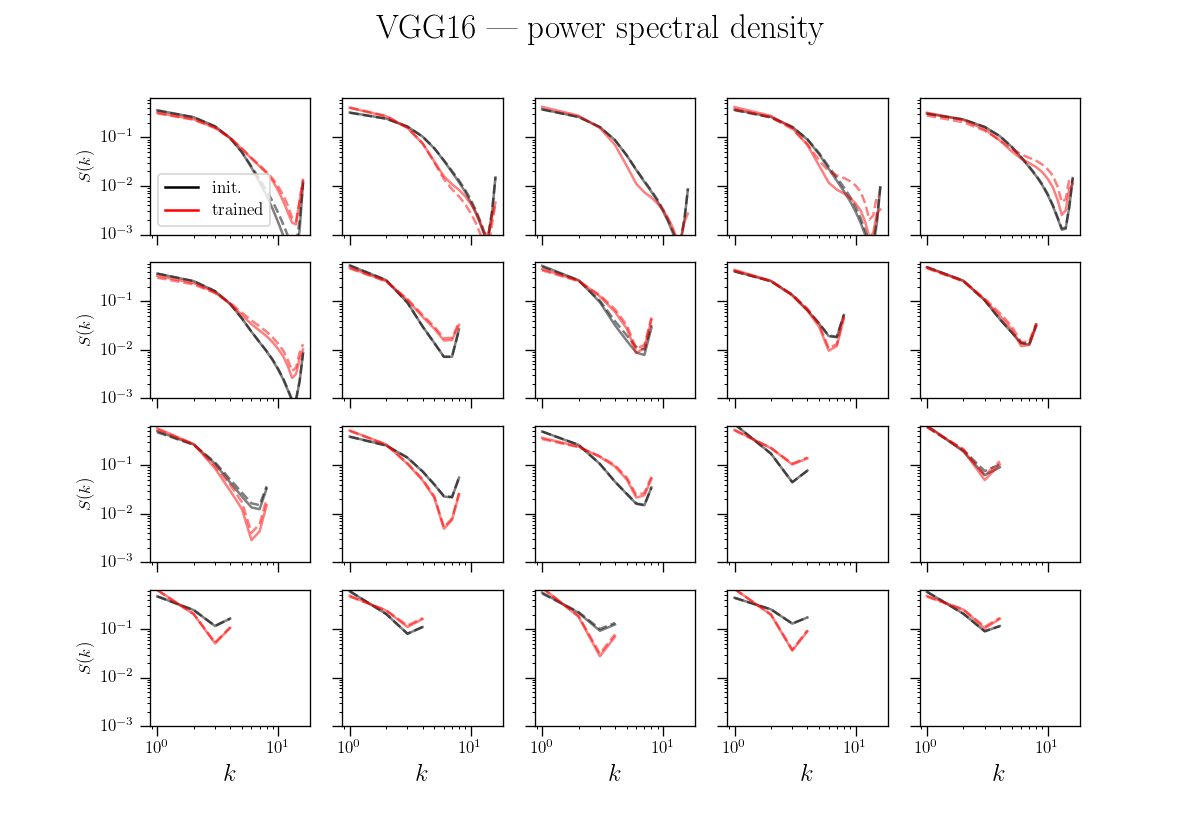

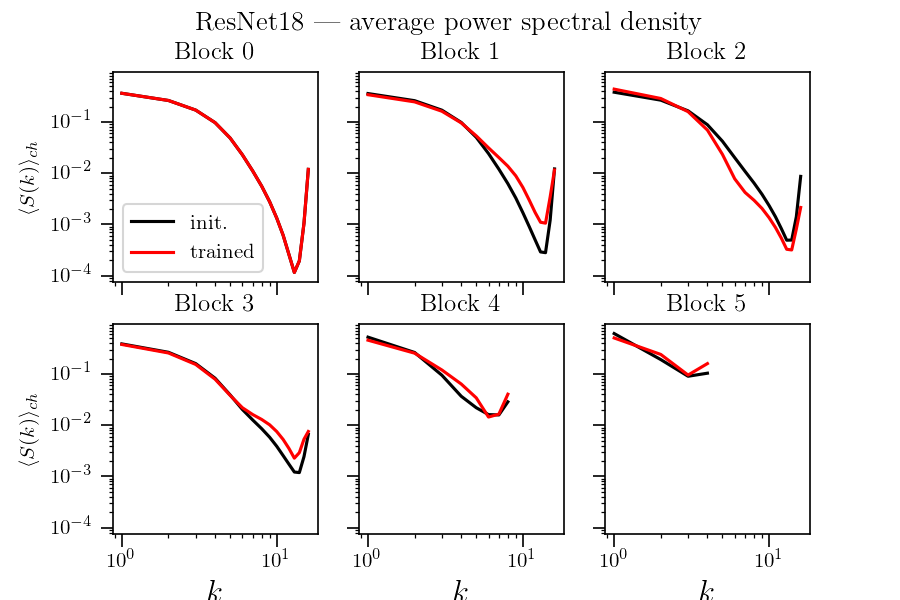

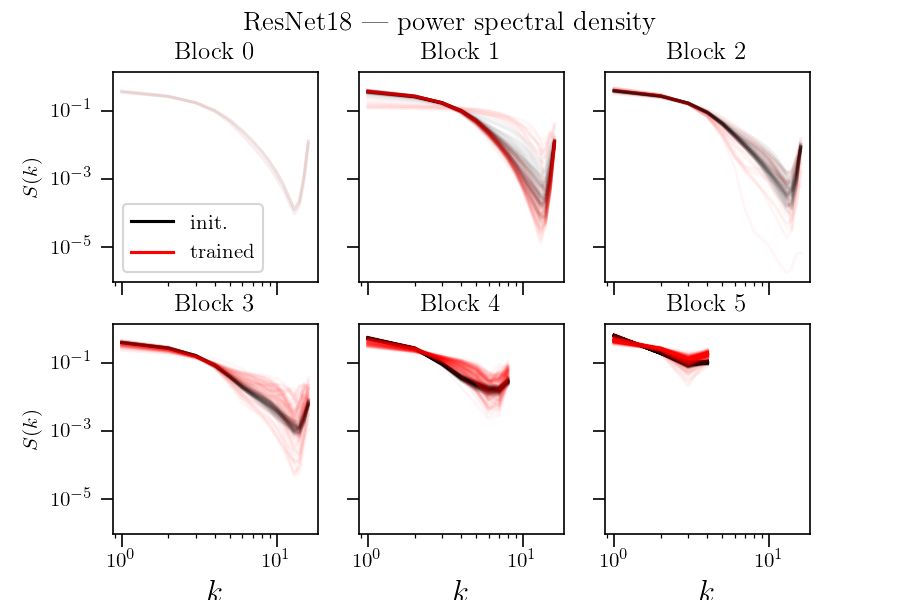

If the hypothesis is true, the activations' power spectrum should shift toward low frequencies after training.

We test it...

Simple models show a different phenomenology than SOTA nets:

Here, a net trained with 9x9 filters seems to learn Gabor filters.

Usually, though, 3x3 filters are used.

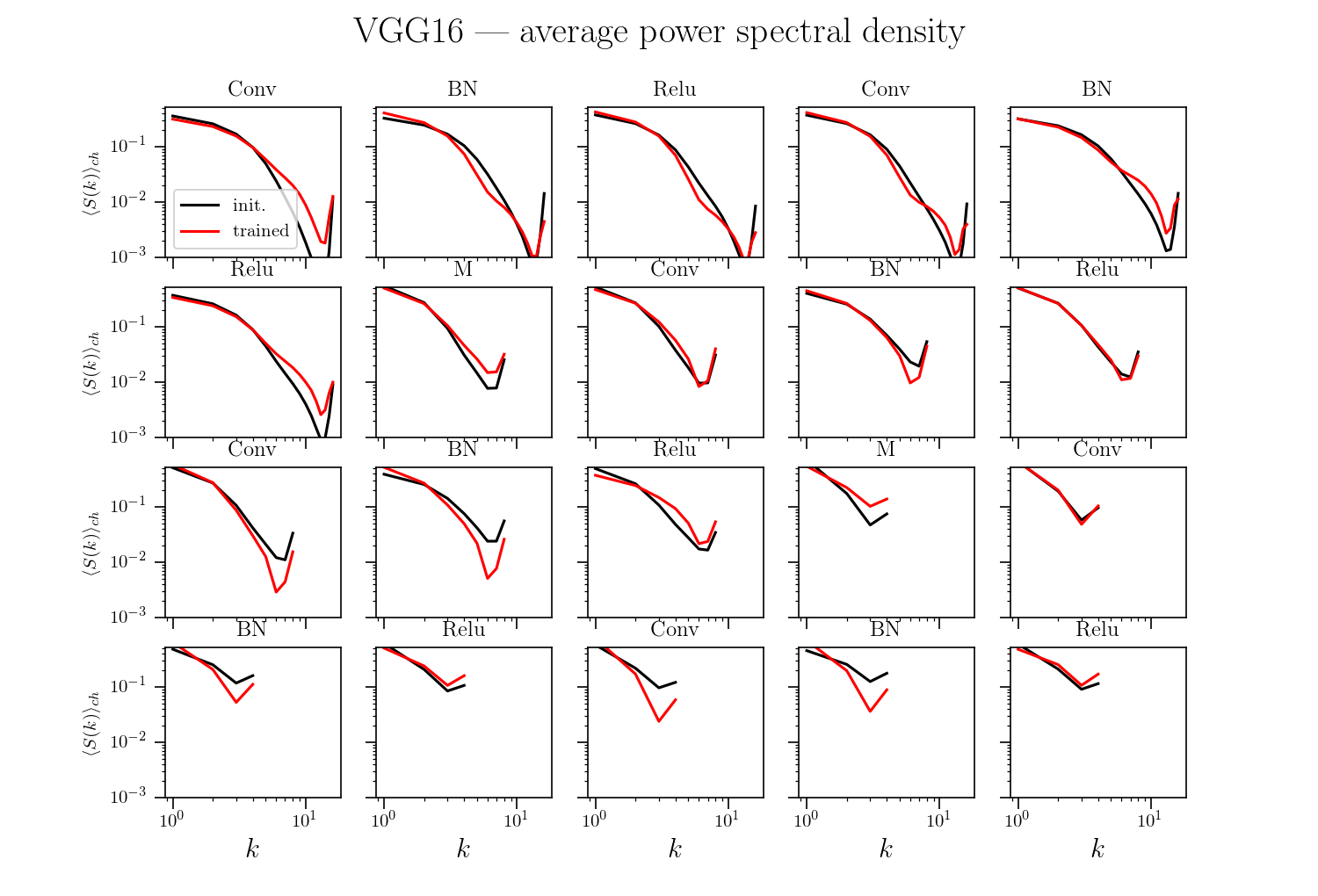

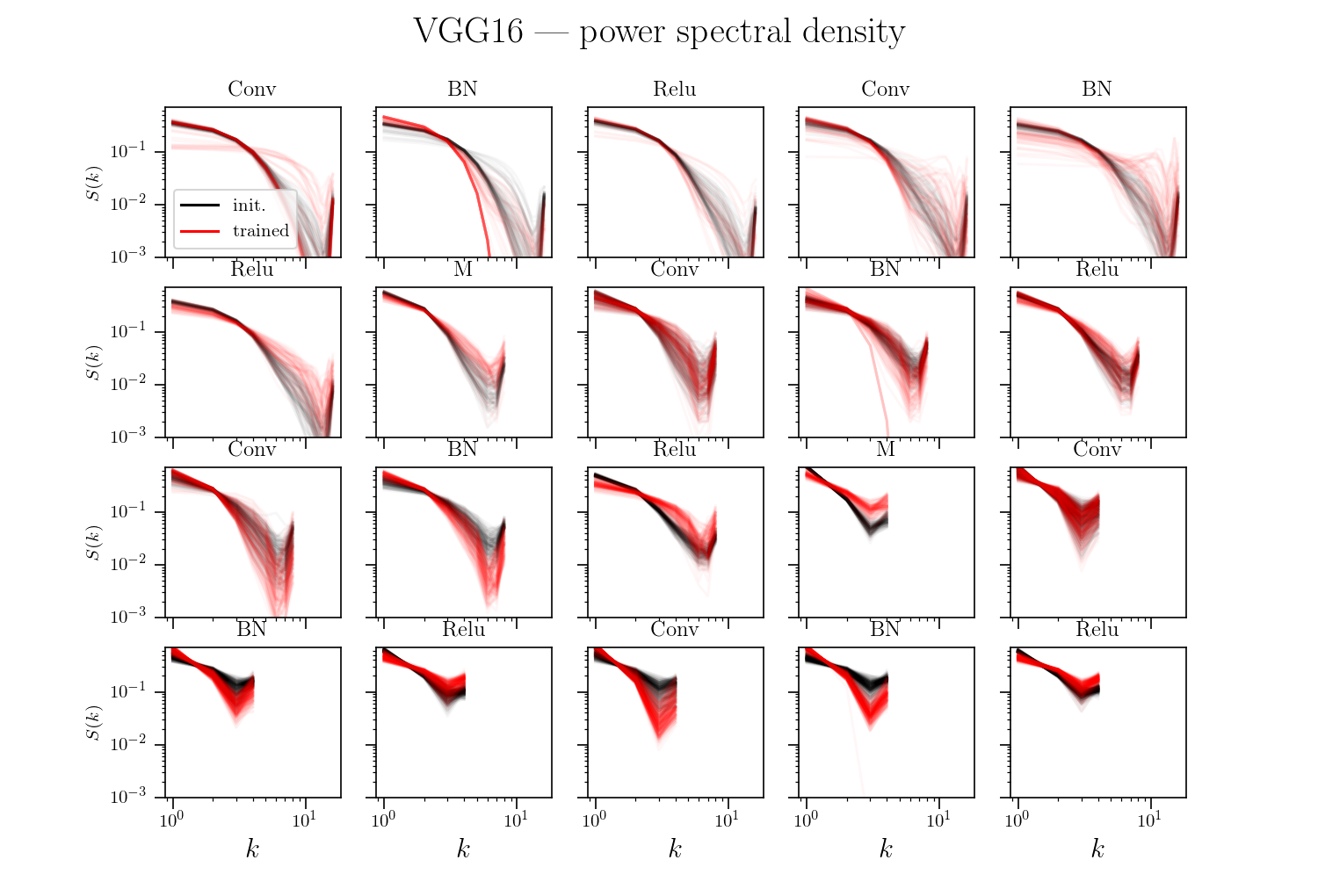

Here:

- the power spectrum is computed for each channel

- spectra are averaged over channels

- the average is normalized to 1

Same as the previous slide, plus (dashed) the power spectral density obtained when normalizing each channel's power spectrum before averaging over channels
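The two averaging procedures (normalize after averaging vs. normalize each channel first) can be sketched in NumPy, assuming the activations of one layer for one input are given as an array of shape `(channels, H, W)`. Summing the 2D spectrum over rings of integer wave number \(|k|\) is an assumption about how the spectra are plotted.

```python
import numpy as np


def channel_power_spectrum(acts, normalize_first=False):
    """Channel-averaged 2D power spectrum, normalized to sum to 1.

    acts            : array (channels, H, W)
    normalize_first : if True, each channel's spectrum is normalized before
                      averaging (dashed curves); else the average is normalized once.
    """
    ps = np.abs(np.fft.fft2(acts)) ** 2              # fft2 acts on the last two axes
    if normalize_first:
        ps = ps / ps.sum(axis=(1, 2), keepdims=True)  # assumes no all-zero channel
    avg = ps.mean(axis=0)
    return avg / avg.sum()


def radial_profile(ps):
    """Sum the 2D spectrum over rings of integer radius |k|, low to high frequency."""
    H, W = ps.shape
    ky = np.fft.fftfreq(H) * H                        # integer wave numbers along y
    kx = np.fft.fftfreq(W) * W                        # integer wave numbers along x
    k = np.rint(np.hypot(*np.meshgrid(ky, kx, indexing="ij"))).astype(int)
    return np.bincount(k.ravel(), weights=ps.ravel())


rng = np.random.default_rng(0)
acts = rng.standard_normal((4, 8, 8))
print(radial_profile(channel_power_spectrum(acts)))
print(radial_profile(channel_power_spectrum(acts, normalize_first=True)))
```

The two curves differ only when channels carry very different total power: averaging first lets the strongest channels dominate, normalizing first gives every channel the same weight.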

Hypothesis: nets become relatively stable by making filters low-pass with training.

What's the mechanism by which relative stability is achieved?

back to our hypothesis...

- The activations' power spectrum seems to contradict the hypothesis.

- Can we more directly track the evolution of the filters during training to see if they become more low pass?

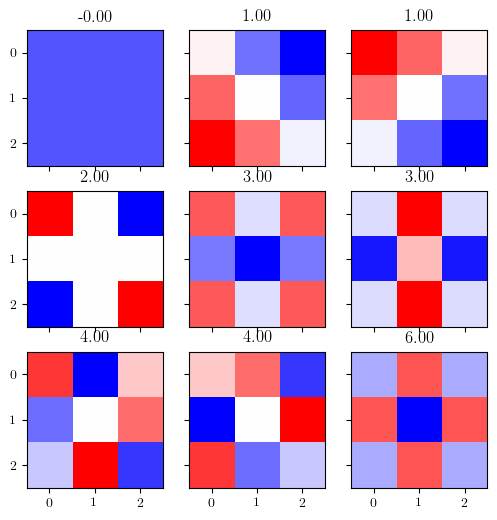

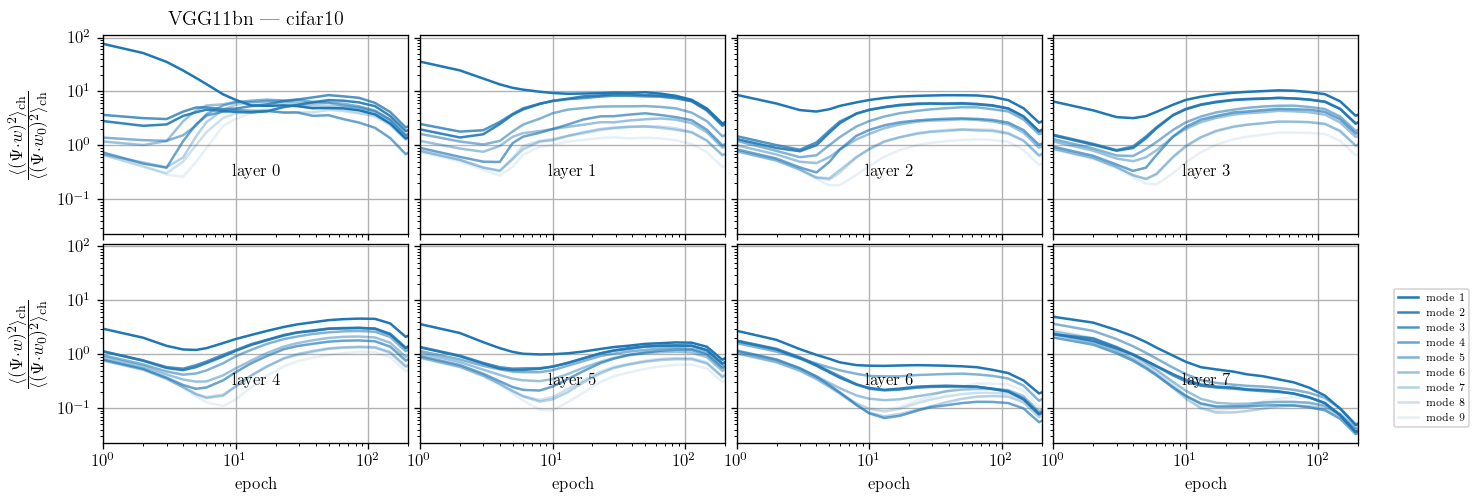

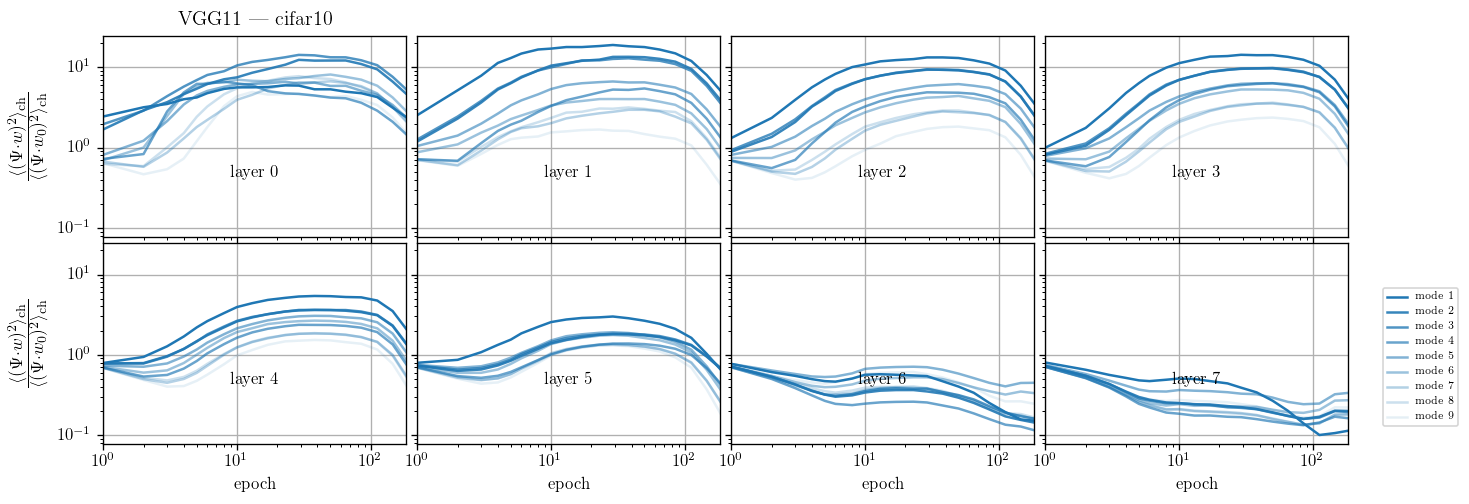

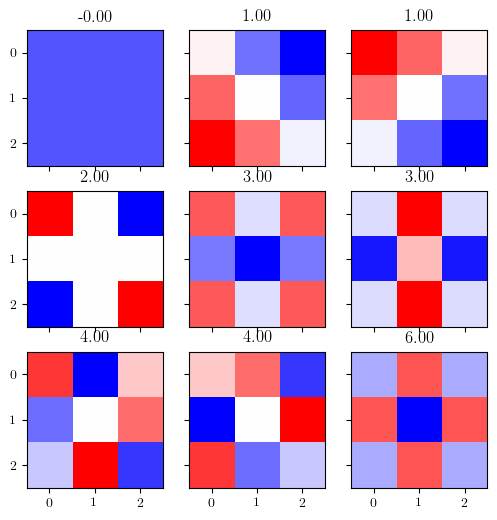

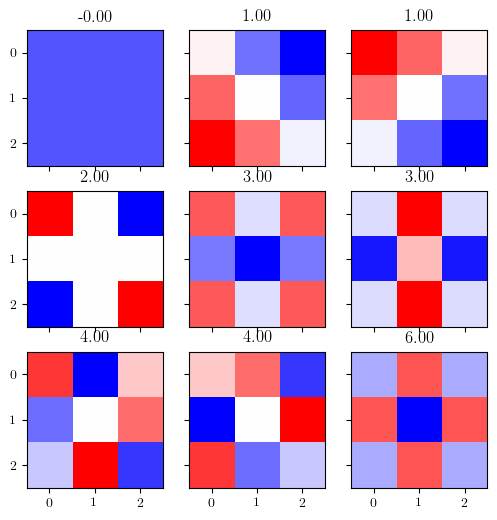

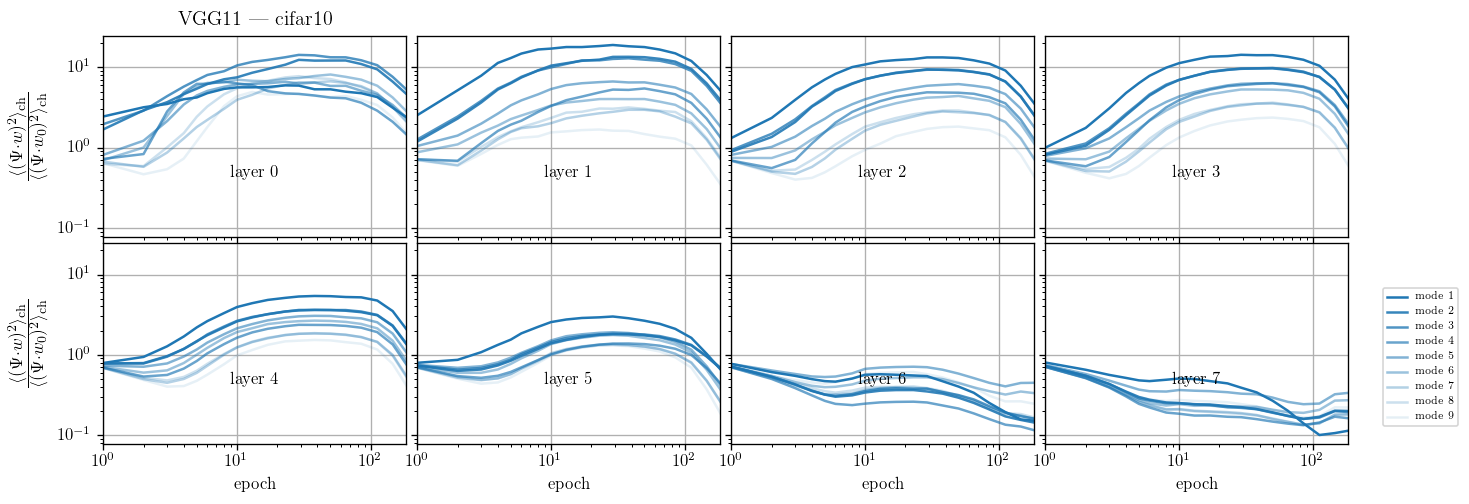

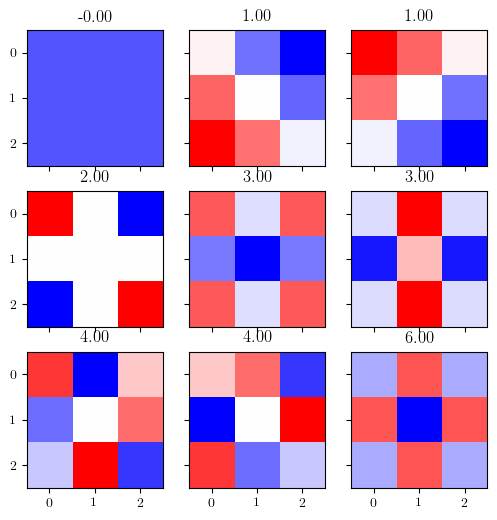

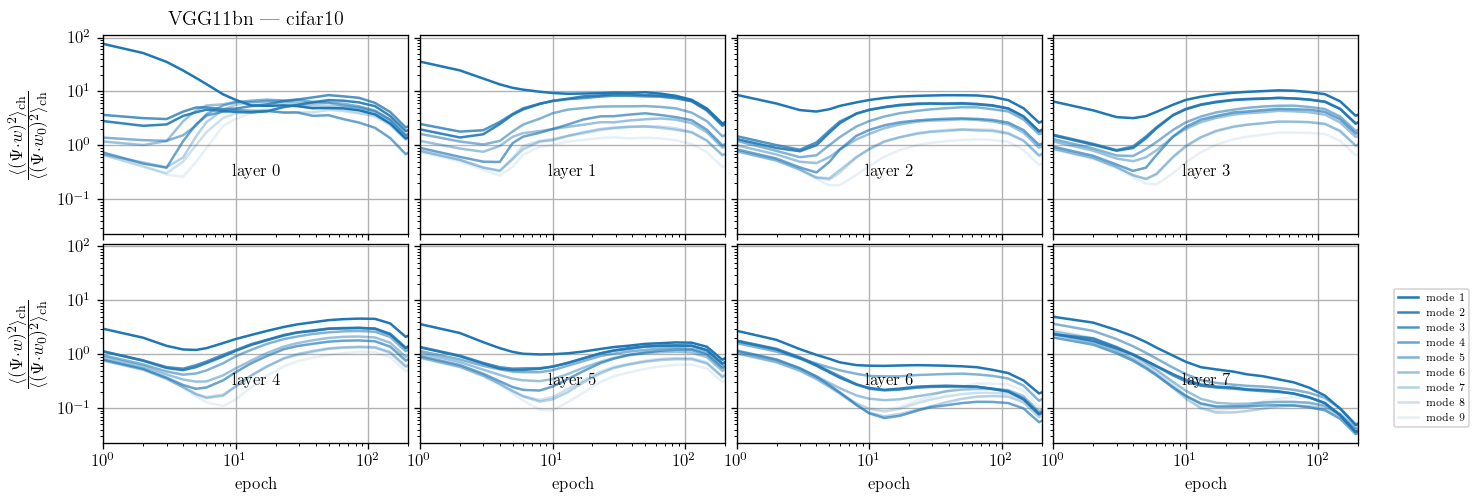

We take as basis the eigenvectors \(\Psi_\lambda\) of the Laplacian on the 3x3 grid, follow the evolution of the weights along each component, and average over channels:

$$c_{t, \lambda} = \langle (w_{\rm{ch}, t} \cdot \Psi_\lambda )^2\rangle_{\rm{ch}}$$

I plot $$\frac{c_{t, \lambda}}{c_{t=0, \lambda}}$$
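The projection onto the Laplacian eigenbasis can be sketched in NumPy. The 4-neighbour grid-graph Laplacian \(L = D - A\) is an assumption (the slide does not say which discrete Laplacian is used), and `w` is assumed to hold conv weights of shape `(out, in, 3, 3)`, with each 3x3 kernel counted as one channel. Several eigenvalues are degenerate (e.g. the two first-order modes share \(\lambda = 1\)), so only sums of \(c_{t,\lambda}\) over equal \(\lambda\) are basis-independent.

```python
import numpy as np


def grid_laplacian(n=3):
    """Graph Laplacian L = D - A of an n x n pixel grid with 4-neighbour links."""
    A = np.zeros((n * n, n * n))
    for i in range(n):
        for j in range(n):
            k = i * n + j
            if i + 1 < n:                        # vertical neighbour
                A[k, k + n] = A[k + n, k] = 1
            if j + 1 < n:                        # horizontal neighbour
                A[k, k + 1] = A[k + 1, k] = 1
    return np.diag(A.sum(axis=1)) - A


# Columns of psi are orthonormal eigenvectors Psi_lambda, sorted by increasing lambda:
# from the constant (pure pooling) mode to the highest-frequency mode.
lam, psi = np.linalg.eigh(grid_laplacian(3))


def spectral_energy(w):
    """c_lambda = < (w_ch . Psi_lambda)^2 >_ch, one 'channel' per 3x3 kernel."""
    flat = w.reshape(-1, 9)                      # one row per (out, in) kernel
    return ((flat @ psi) ** 2).mean(axis=0)


def spectral_ratio(w_t, w_0):
    """c_{t,lambda} / c_{t=0,lambda}; values > 1 mean the component grew."""
    return spectral_energy(w_t) / spectral_energy(w_0)


rng = np.random.default_rng(0)
w_0 = rng.standard_normal((16, 8, 3, 3))
w_t = w_0 + 0.5 * np.ones((16, 8, 3, 3))         # toy "training": add a pooling component
print(np.round(lam, 3))
print(np.round(spectral_ratio(w_t, w_0), 2))
```

In this toy example only the \(\lambda = 0\) (constant) component grows, which is what "filters learn to pool" would look like in the plot.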

Filters do learn to pool, but do other operations (e.g. ReLU) still make the spectrum more high-frequency?

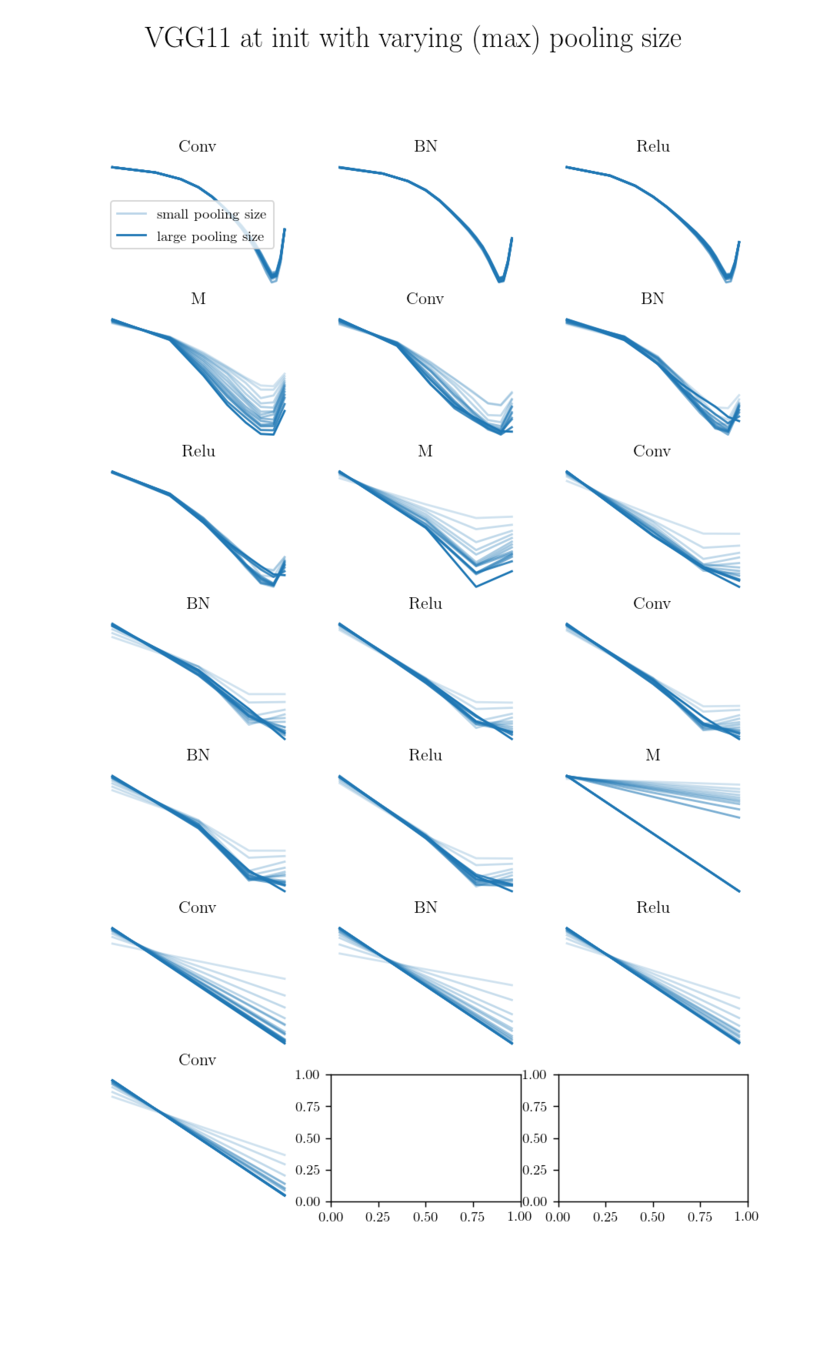

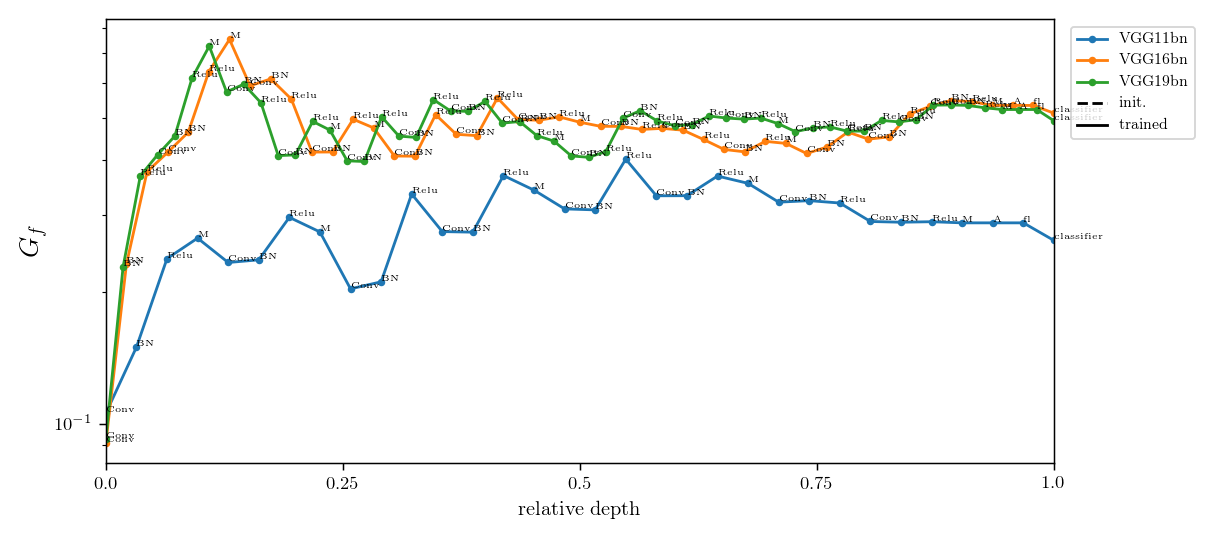

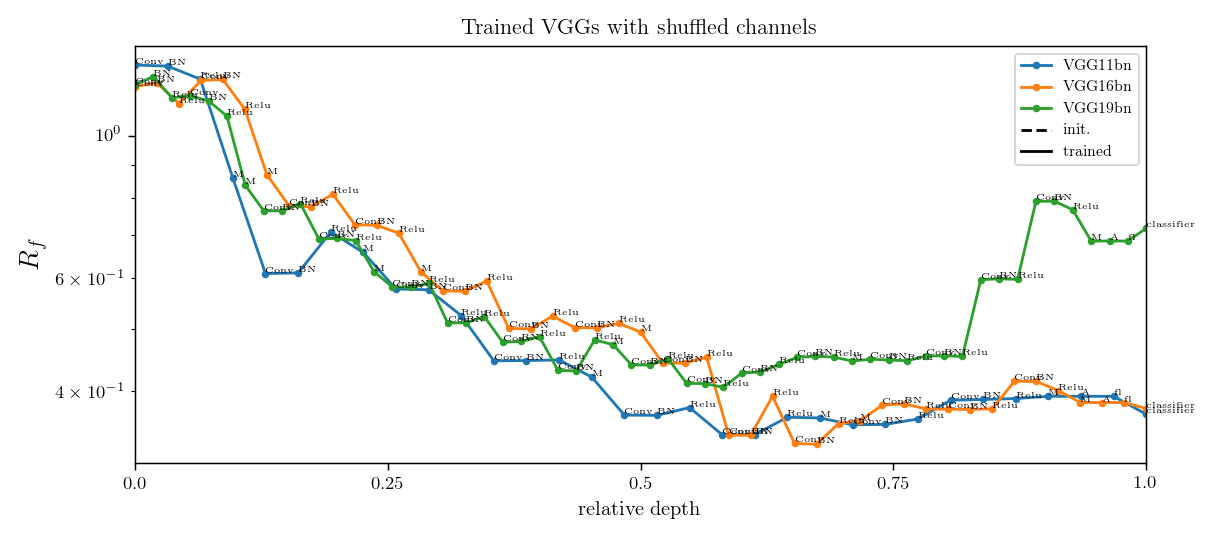

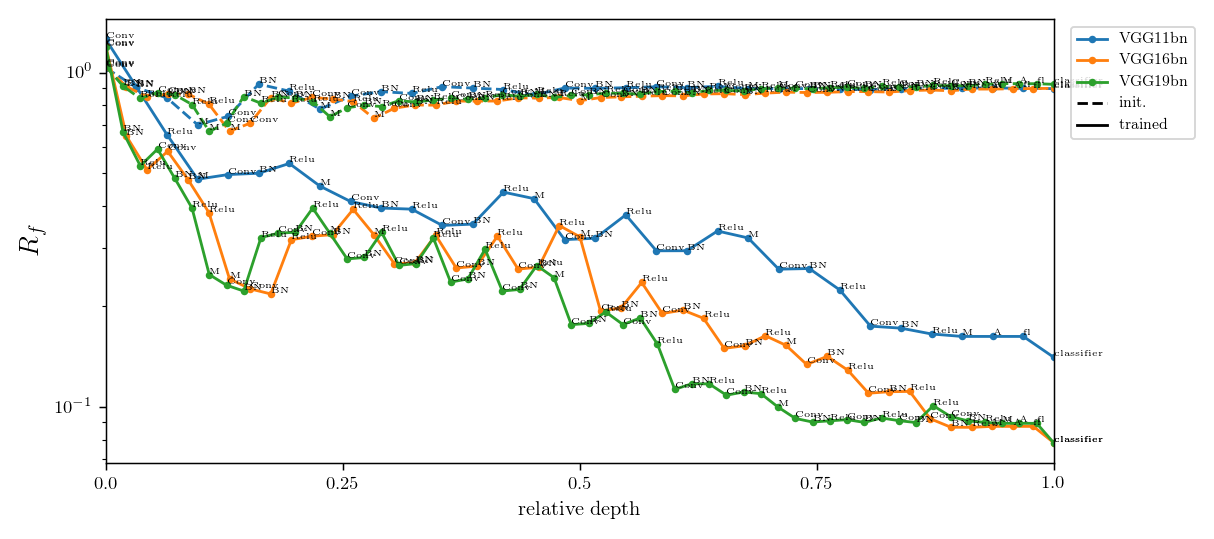

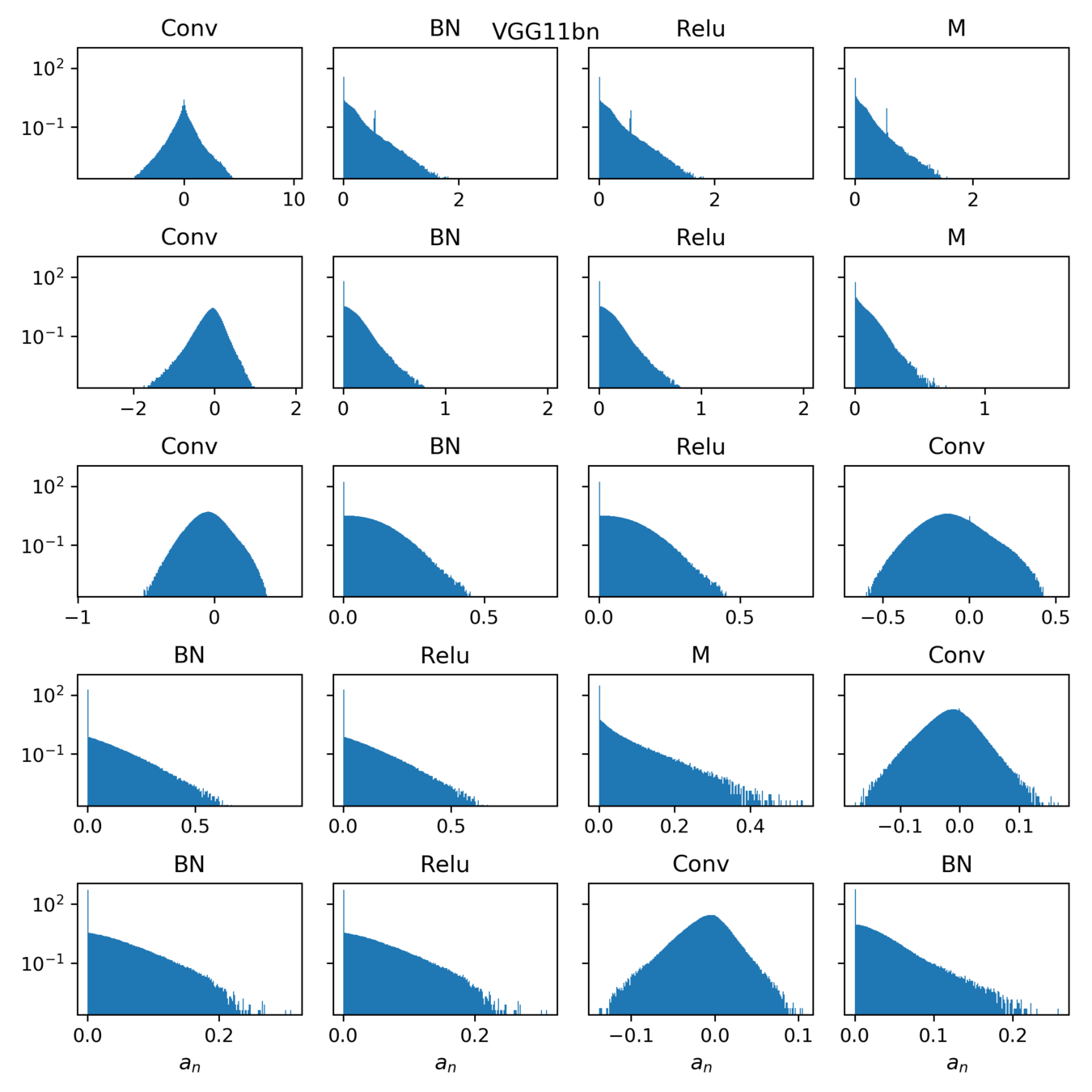

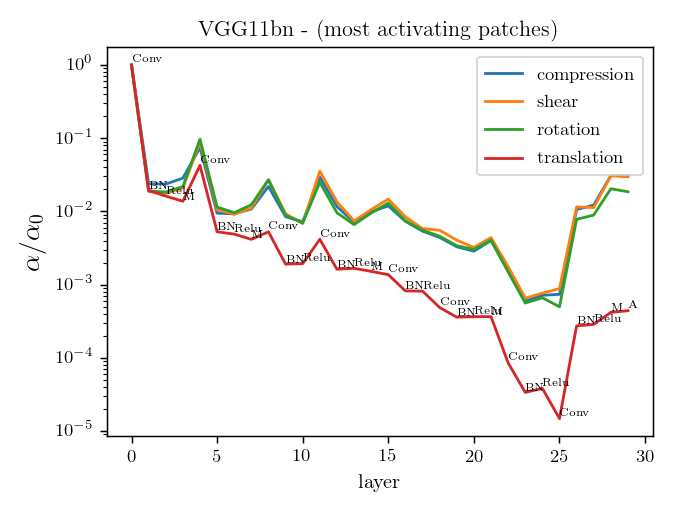

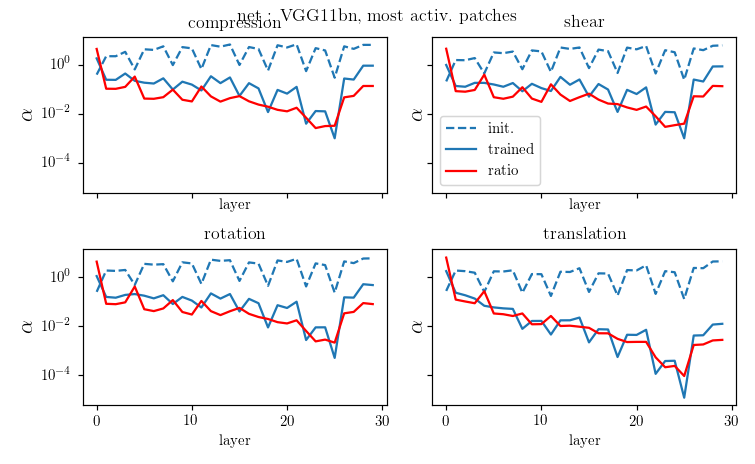

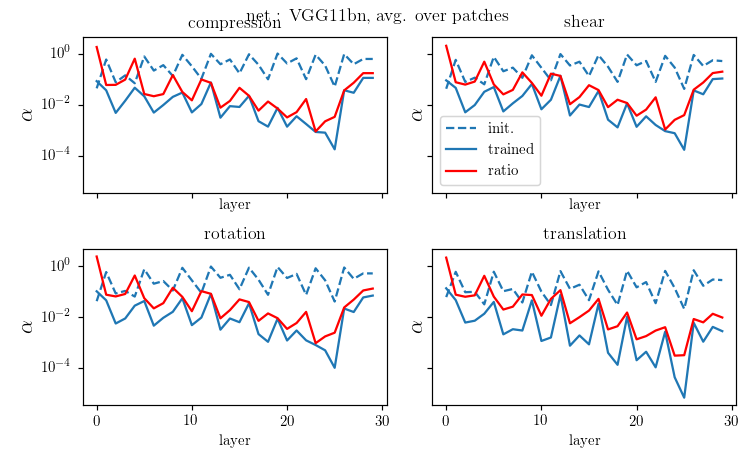

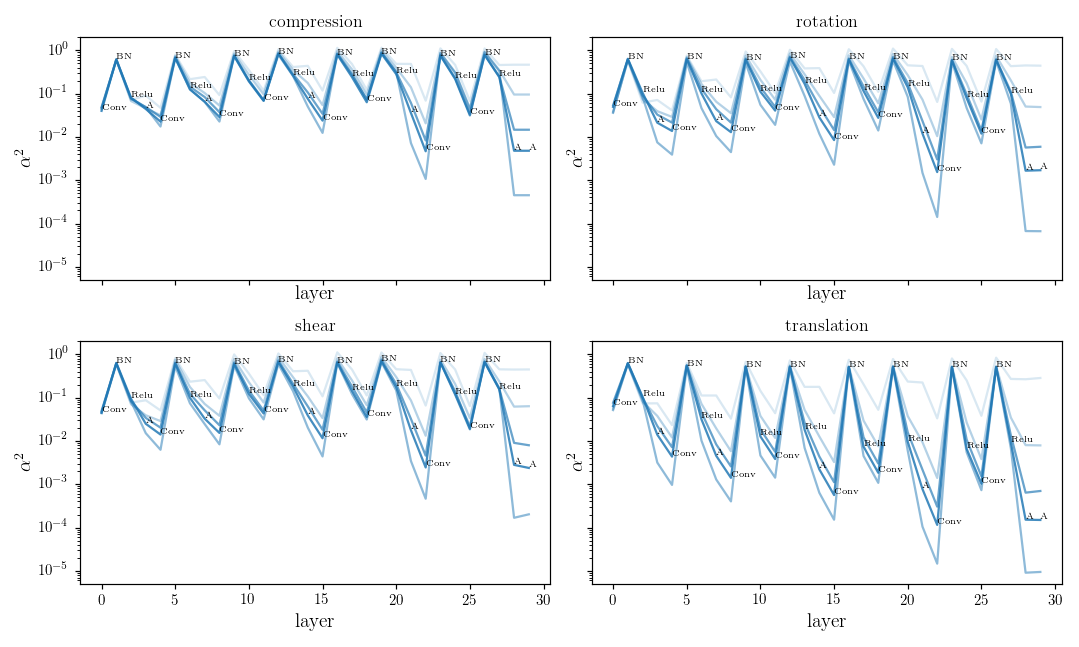

Relative stability after each operation (conv., batch-norm, ReLU, max-pool) inside VGGs

Panels: diffeo stability and noise stability, at initialization and after training.

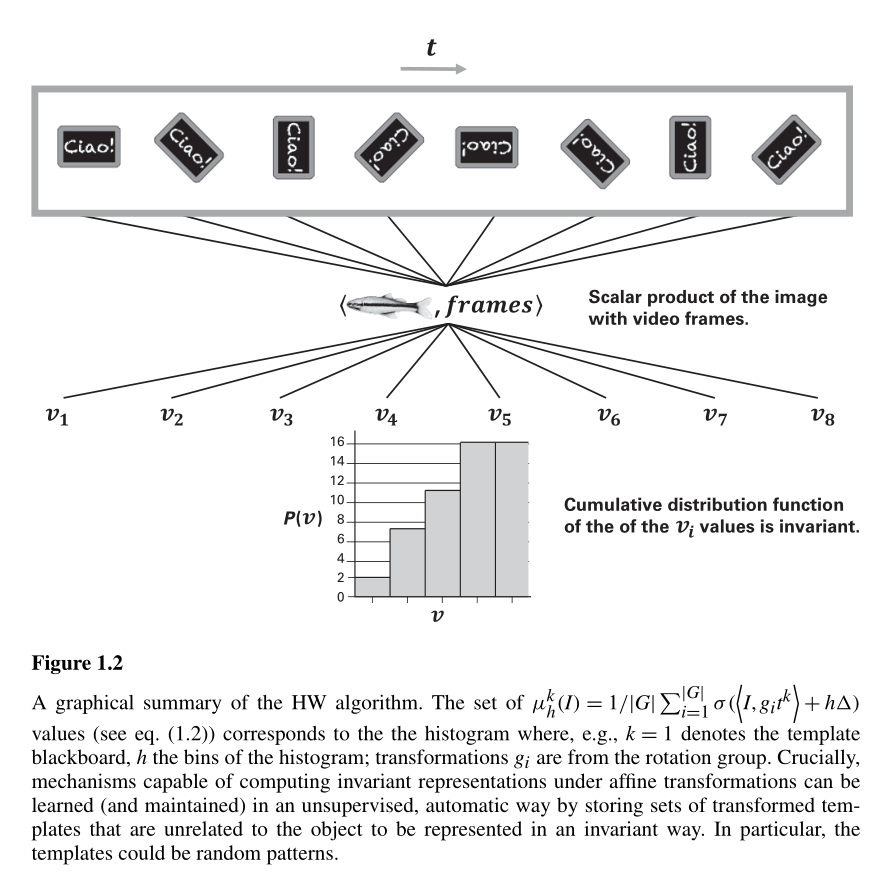

from Visual Cortex and Deep Networks by Poggio and Anselmi

What's the mechanism by which relative stability is achieved?

Hypothesis 2:

Hyp. 1: Filters learn to pool

Hyp. 2: Say we want to implement rotational invariance with two layers of weights. One can do that by:

- taking a finite group of rotations \(G = \{g_1, g_2, \dots, g_{|G|}\}\);

- taking an arbitrary weight \(w\);

- defining the first-layer weights as \(W = \{ g_1w, g_2w, \dots, g_{|G|}w\} \);

- letting the second layer of weights pool over the first layer.
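The construction can be checked numerically with a sketch that uses the group of 90-degree rotations (chosen here because it acts exactly on a pixel grid; the slide's \(G\) is generic), a ReLU, and sum-pooling in the second layer; these choices are assumptions. Rotating the input only permutes the responses \(\langle g_k w, x\rangle\) within the orbit, so the pooled output is invariant.

```python
import numpy as np


def orbit_filters(w):
    """First-layer weights W = {g_1 w, ..., g_|G| w} for the 90-degree rotation group."""
    return [np.rot90(w, k) for k in range(4)]


def invariant_unit(x, w, sigma=lambda z: np.maximum(z, 0)):
    """Layer 1: rotated copies of w plus nonlinearity; layer 2: sum over the orbit."""
    responses = [sigma(np.sum(g_w * x)) for g_w in orbit_filters(w)]
    return np.sum(responses)


rng = np.random.default_rng(0)
w, x = rng.standard_normal((5, 5)), rng.standard_normal((5, 5))
print([round(invariant_unit(np.rot90(x, k), w), 6) for k in range(4)])
```

A single filter \(\langle w, x\rangle\) is not invariant; invariance comes entirely from the orbit structure of the first layer plus pooling in the second.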

This idea can also be found in neuroscience literature to explain how invariances are implemented in the visual cortex.

Can we test this idea by looking at subsequent layers of weights (say L1 and L2) and checking whether neurons in L2 couple neurons in L1 that do the same thing up to a rotation?


work in progress on

diffeo

PCSL internal group meeting

November 11, 2021

part II

Recap figure: diffeo stability vs. additive noise stability.

What's the mechanism by which relative stability is achieved?

Observations:

- \(R_f\) monotonically decreases with depth

- Pooling gives relative stability

Hypothesis: nets become relatively stable by making filters low-pass with training.

We take as basis the eigenvectors \(\Psi_\lambda\) of the Laplacian on the 3x3 grid, follow the evolution of the weights along each component, and average over channels:

$$c_{t, \lambda} = \langle (w_{\rm{ch}, t} \cdot \Psi_\lambda )^2\rangle_{\rm{ch}}$$

I plot $$\frac{c_{t, \lambda}}{c_{t=0, \lambda}}$$

Weights become low-pass with training


Filters actually learn to pool!

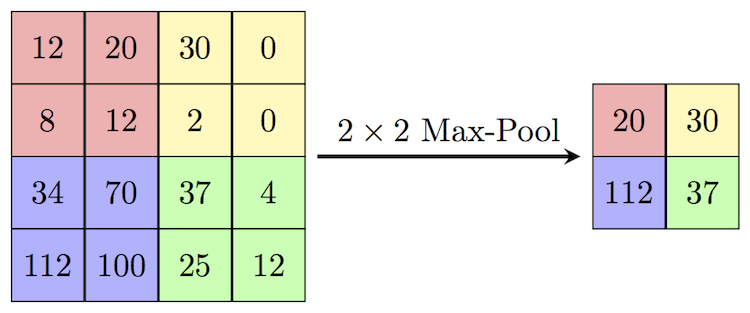

Pooling only gives stability to small translations...

Stability to other transformations requires pooling over channels

...still, our diffeos are not just local translations.

Diffeo stability can be achieved by: (1) spatial pooling, (2) channel pooling.

Can we decouple the contributions of the two?

What if we take a trained net and randomly permute the channels? If it were all about (1), nothing should change.

Figure panels: standard vs. shuffled channels.
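The slides do not say exactly which permutation is used. A consistent permutation (the same one applied to a layer's output channels and to the next layer's input channels) leaves the network function unchanged, so it would test nothing. One reading that keeps spatial pooling (1) intact while breaking channel pooling (2) is sketched below as an assumption: the input-channel axis of a conv weight tensor is permuted independently for each output channel.

```python
import numpy as np


def shuffle_input_channels(w, rng):
    """Permute the input-channel axis of conv weights w, shape (out, in, k, k),
    with an independent random permutation for each output channel.

    Every k x k spatial kernel is kept intact, so spatial pooling is unchanged,
    but the pairing between kernels and input channels is scrambled.
    """
    shuffled = np.empty_like(w)
    for o in range(w.shape[0]):
        shuffled[o] = w[o, rng.permutation(w.shape[1])]
    return shuffled


rng = np.random.default_rng(0)
w = rng.standard_normal((3, 4, 3, 3))
w_shuffled = shuffle_input_channels(w, rng)
```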

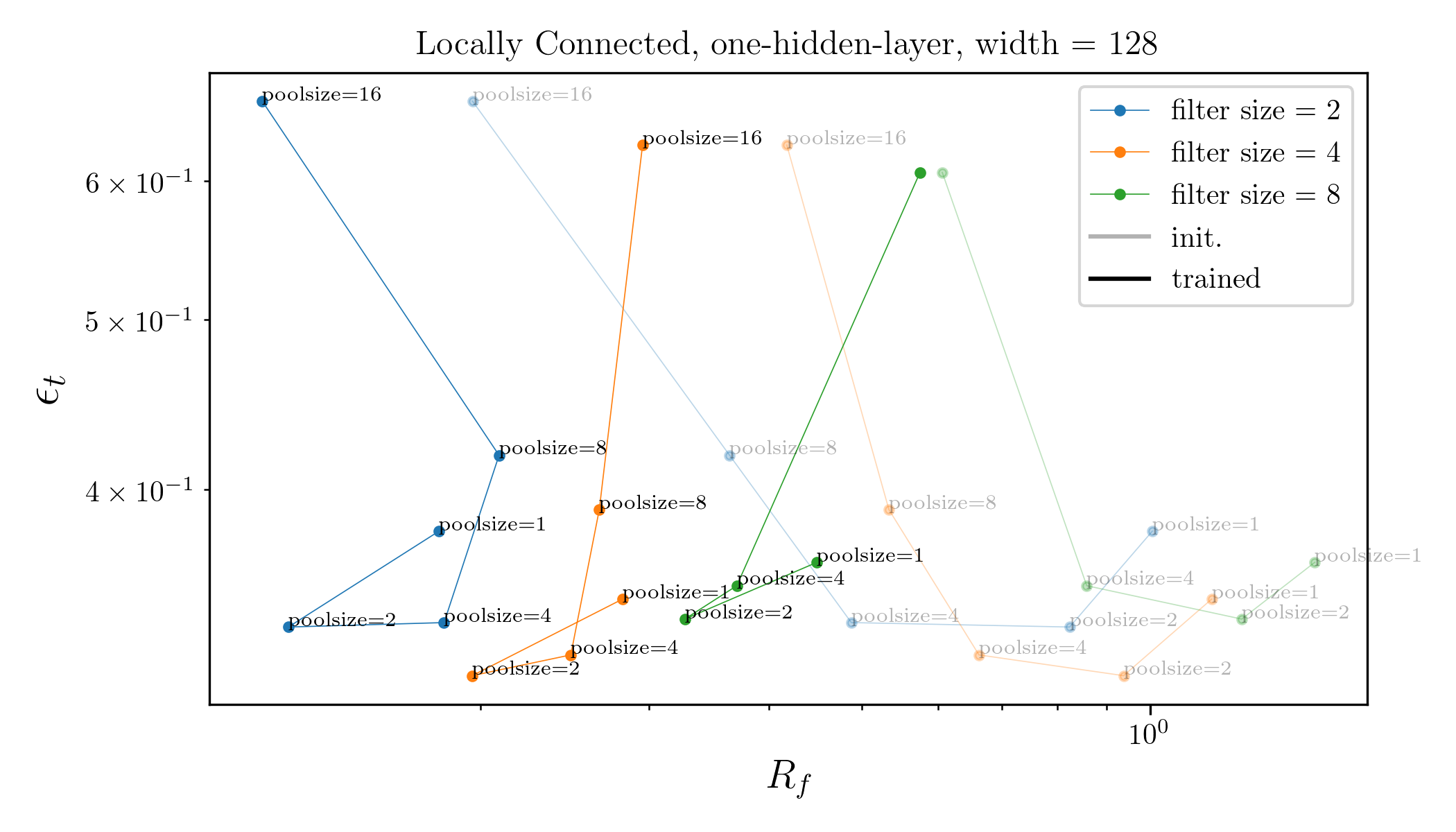

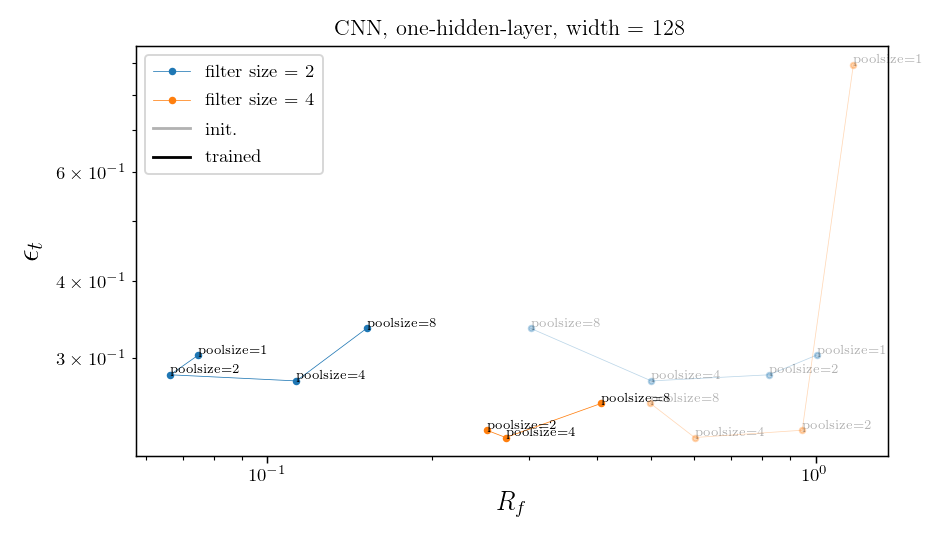

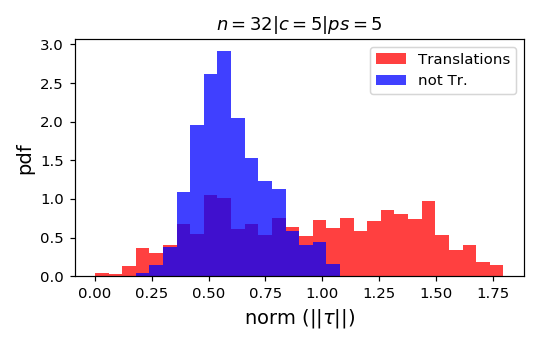

in progress: Can we understand how the invariance is learned in simple local models?

Take a one-hidden-layer CNN or LCN (locally connected network), i.e.:

- conv. / locally conn. layer with \(h\) channels and filter size \(f_s\)

- pooling with pooling size \(p_s\)

- fully-connected layer (FC)

We can train either the whole net or only the FC layer.

Would this allow us to say something about how the invariance is learned in these simple nets?

e.g. if only the FC layer is trained, the net can only learn channel pooling and not spatial pooling.

Still, the net can change its effective spatial pooling by selecting the channels that are more low-frequency...

Figure panels: only the FC layer is trained vs. the whole net is trained.
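A forward-pass sketch of this model in NumPy, assuming a single-channel input, a ReLU, and average pooling (the slide only says "pooling"). Passing shared filters of shape `(h, f_s, f_s)` gives the CNN; passing one filter per position gives the LCN. Training only the FC layer corresponds to keeping `filters` fixed and updating `fc` alone.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def one_hidden_layer(x, filters, fc, p_s):
    """Conv (or locally connected) layer -> ReLU -> average pooling -> FC.

    x       : (H, W) single-channel input
    filters : (h, f_s, f_s) shared filters (CNN), or
              (h, H-f_s+1, W-f_s+1, f_s, f_s) one filter per position (LCN)
    fc      : (n_out, h * (H'//p_s) * (W'//p_s)) fully-connected weights
    p_s     : pooling size
    """
    f_s = filters.shape[-1]
    patches = sliding_window_view(x, (f_s, f_s))               # (H', W', f_s, f_s)
    if filters.ndim == 3:                                      # CNN: same filter everywhere
        pre = np.einsum("ijab,cab->cij", patches, filters)
    else:                                                      # LCN: position-dependent filters
        pre = np.einsum("ijab,cijab->cij", patches, filters)
    act = np.maximum(pre, 0)                                   # ReLU
    h, Hc, Wc = act.shape
    Hp, Wp = Hc // p_s, Wc // p_s
    blocks = act[:, :Hp * p_s, :Wp * p_s].reshape(h, Hp, p_s, Wp, p_s)
    pooled = blocks.mean(axis=(2, 4))                          # non-overlapping average pooling
    return fc @ pooled.ravel()


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8))
filters = rng.standard_normal((4, 3, 3))                       # h = 4, f_s = 3
fc = rng.standard_normal((10, 4 * 3 * 3))                      # 6x6 map pooled with p_s = 2 -> 3x3
y_cnn = one_hidden_layer(x, filters, fc, p_s=2)
# An LCN whose filters happen to be identical at every position reproduces the CNN.
lcn_filters = np.broadcast_to(filters[:, None, None], (4, 6, 6, 3, 3))
y_lcn = one_hidden_layer(x, lcn_filters, fc, p_s=2)
```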

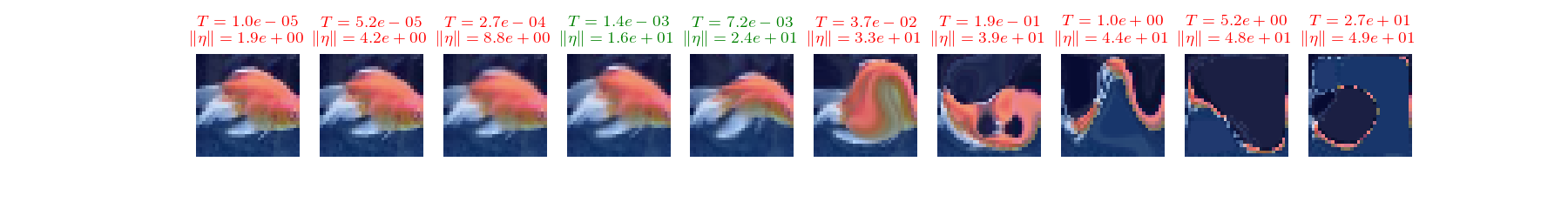

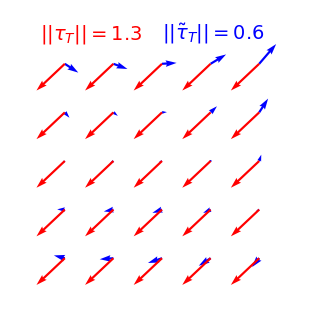

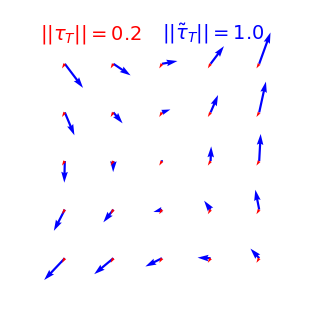

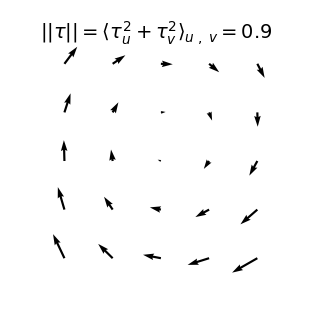

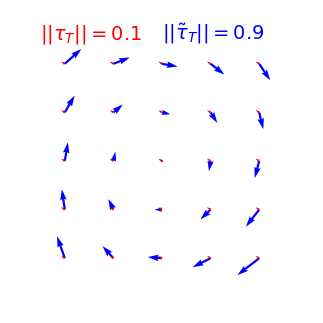

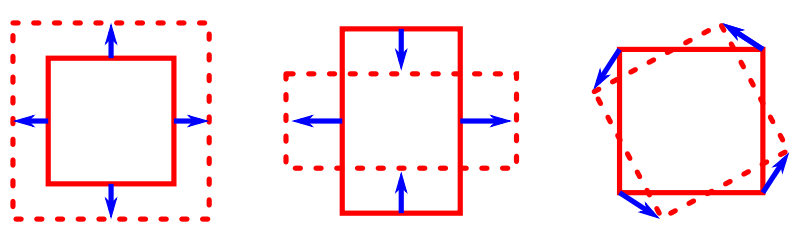

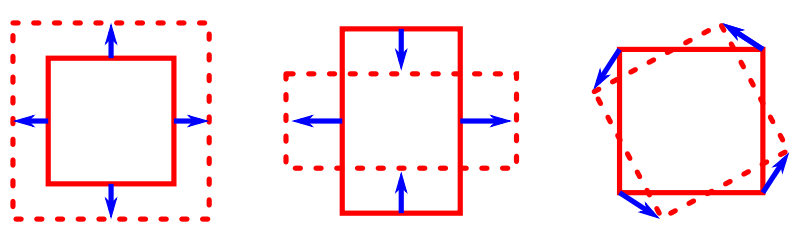

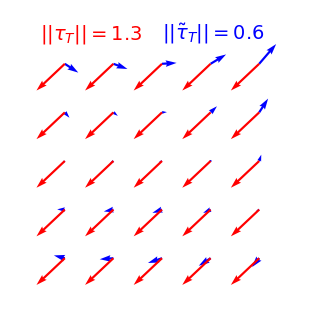

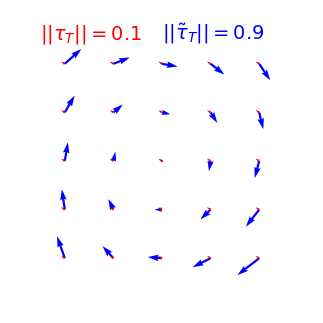

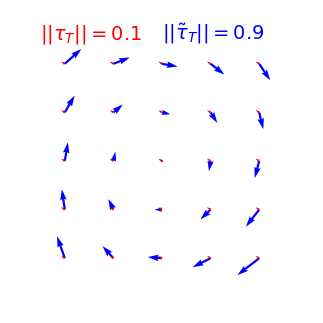

Diffeos are not just local translations

Figure: a local translation vs. a displacement that is NOT a local translation.

Which transformations make up the blue displacement field?

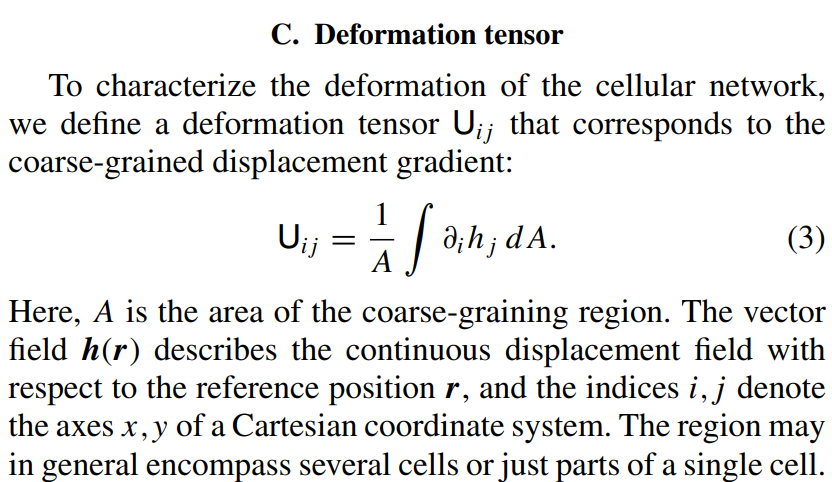

Linear transformations: compression / expansion, pure shear, rotation

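Locally, we can decompose our displacement field \(\tau(u, v)\) into a translation, i.e. \(\langle\tau\rangle_\text{patch}\), and a linear transformation defined by its first-order derivatives:

$$\tau(u, v) \approx \langle\tau\rangle_\text{patch} + U \begin{pmatrix} u - u_0 \\ v - v_0 \end{pmatrix}, \qquad U = \begin{pmatrix} \partial_u \tau_u & \partial_v \tau_u \\ \partial_u \tau_v & \partial_v \tau_v \end{pmatrix},$$

where \((u_0, v_0)\) is the patch center, and

- compression corresponds to the trace of \(U\)

- pure shear to its symmetric traceless part

- rotation to its antisymmetric part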
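The three parts listed above can be computed directly from a \(2\times 2\) gradient matrix \(U\). A minimal sketch, where the isotropic part \((\operatorname{tr} U/2)\, I\) carries the trace:

```python
import numpy as np


def decompose_gradient(U):
    """Split the 2x2 displacement gradient U into three linear transformations.

    compression / expansion : isotropic part (tr U / 2) * I, fixed by the trace
    pure shear              : symmetric traceless part
    rotation                : antisymmetric part
    """
    U = np.asarray(U, dtype=float)
    compression = 0.5 * np.trace(U) * np.eye(2)
    shear = 0.5 * (U + U.T) - compression
    rotation = 0.5 * (U - U.T)
    return compression, shear, rotation


U = np.array([[0.3, -0.1], [0.5, 0.1]])
C, S, R = decompose_gradient(U)
print(C, S, R, sep="\n")
```

The three parts always add back up to \(U\), so they fully account for the local linear transformation.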

Example: compression / expansion

Figure panels: compression / expansion (trace), pure shear (symmetric traceless part), rotation (antisymmetric part).

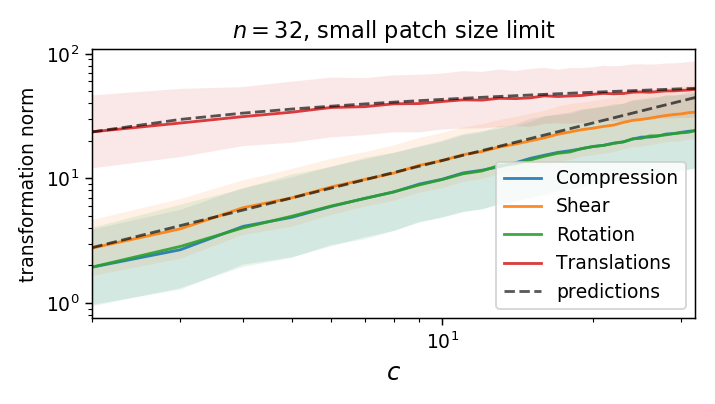

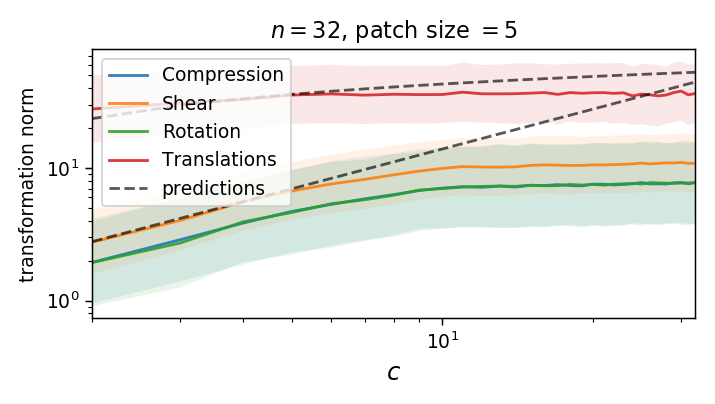

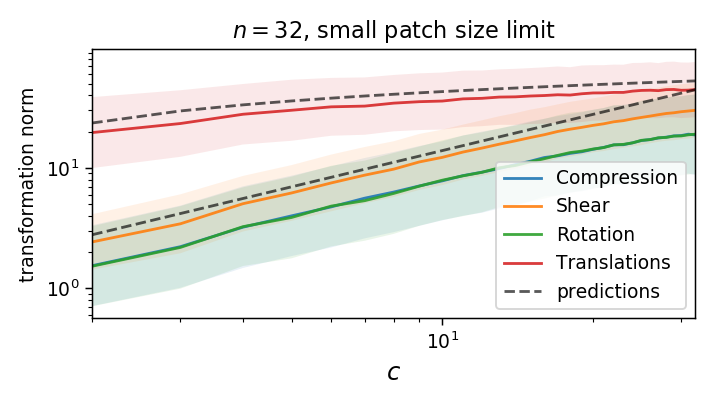

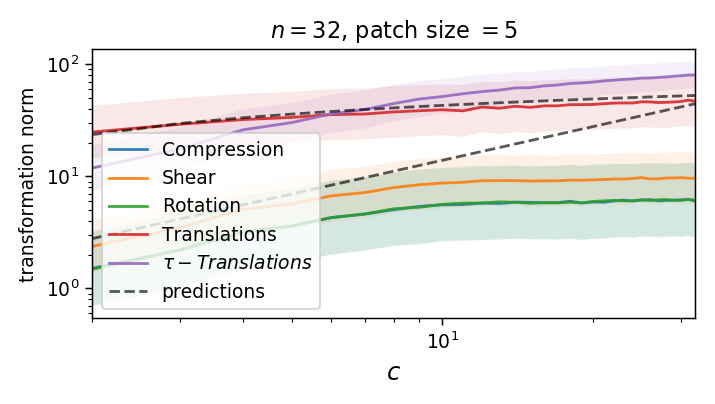

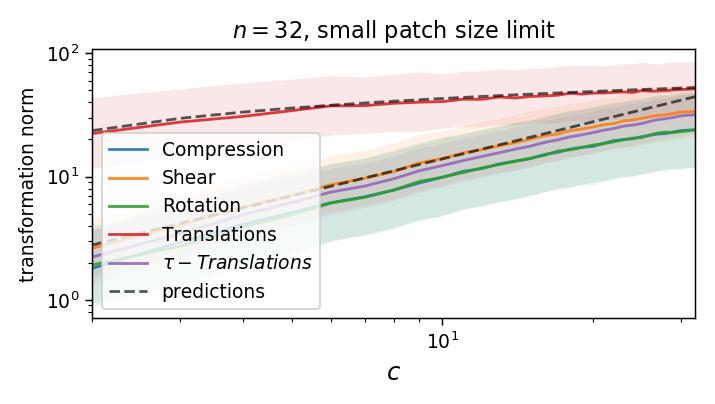

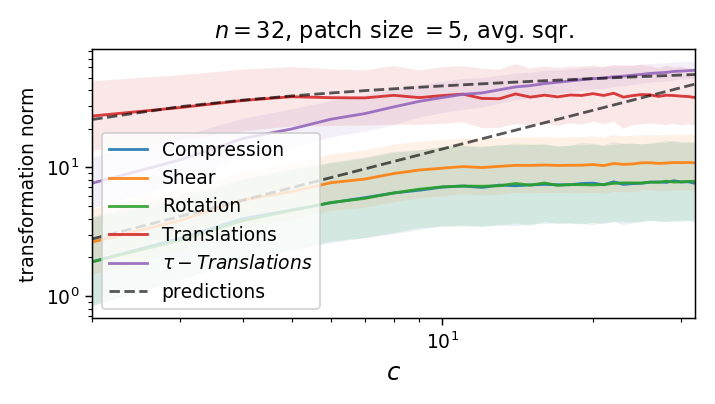

How much of each of these transformations is there in a diffeo?

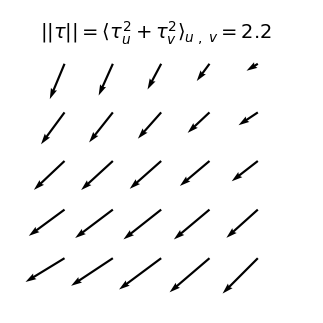

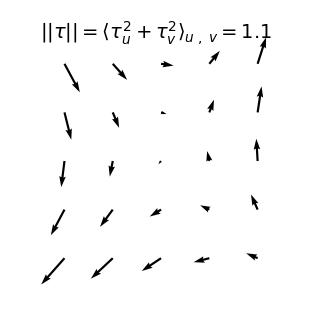

Our diffeo ensemble is defined as \(\tau = (\tau_u, \tau_v)\) with components

Where the norm of local translations can be computed as the average pixel displacement:

While the norm of each component of \(U\) reads

Compute the average of the norm (without the square)

Compute the empirical average over finite patches
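The slide's formula for the ensemble is not reproduced in this text, so the sketch below assumes a truncated sine-series displacement field with hypothetical parameters `T` (amplitude, in pixels squared) and `c` (frequency cutoff). It computes the two averages described above on the sampled field: the average pixel displacement and the average absolute value (without the square) of each component of \(U\), estimated by finite differences.

```python
import numpy as np


def sample_displacement(n, T, c, rng):
    """Sample tau = (tau_u, tau_v) on an n x n grid over [0, 1]^2 (hypothetical ensemble).

    Each component is sum_{i,j} C_ij sin(i pi u) sin(j pi v) with
    C_ij ~ N(0, T / (i^2 + j^2)) for i^2 + j^2 <= c^2, in pixel units.
    """
    u = np.linspace(0, 1, n)
    i = np.arange(1, int(c) + 1)
    k2 = i[:, None] ** 2 + i[None, :] ** 2
    std = np.sqrt(T / k2) * (k2 <= c ** 2)          # zero beyond the cutoff
    S = np.sin(np.pi * np.outer(u, i))              # S[x, i] = sin(i pi u_x)

    def component():
        C = rng.standard_normal(std.shape) * std
        return S @ C @ S.T                          # tau[x, y] = sum_ij S[x,i] C[i,j] S[y,j]

    return component(), component()


def displacement_norms(tau_u, tau_v):
    """Average pixel displacement <|tau|> and average |d tau_a / d x_b| per pixel step."""
    translation = np.mean(np.hypot(tau_u, tau_v))
    du_du, du_dv = np.gradient(tau_u)               # derivatives along rows (u), columns (v)
    dv_du, dv_dv = np.gradient(tau_v)
    grads = {"dtau_u/du": du_du, "dtau_u/dv": du_dv, "dtau_v/du": dv_du, "dtau_v/dv": dv_dv}
    return translation, {k: np.mean(np.abs(g)) for k, g in grads.items()}


rng = np.random.default_rng(0)
tu, tv = sample_displacement(32, T=1.0, c=3, rng=rng)
print(displacement_norms(tu, tv))
```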

Is it OK to compare the norm of translations with the norm of gradients?

We can compute the norm of the part of \(\tau\) that is not a translation (on a patch):

Since we take a finite patch size, it is not OK anymore!
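A sketch of the split on a finite patch, assuming the displacement field is given in pixel units: the local translation is \(\langle\tau\rangle_\text{patch}\), and the non-translational part is the residual \(\tau - \langle\tau\rangle_\text{patch}\). `split_patch` is a hypothetical helper; the usage lines show that for a linear field the residual depends on the patch size, which is why it is not directly comparable to a per-pixel gradient norm.

```python
import numpy as np


def split_patch(tau_u, tau_v, top, left, size):
    """Return (|<tau>_patch|, average |tau - <tau>_patch|) on a size x size patch."""
    pu = tau_u[top:top + size, left:left + size]
    pv = tau_v[top:top + size, left:left + size]
    mu, mv = pu.mean(), pv.mean()                   # local translation
    residual = np.hypot(pu - mu, pv - mv)           # non-translational part
    return np.hypot(mu, mv), residual.mean()


n = 16
y, x = np.mgrid[0:n, 0:n].astype(float)
tu, tv = 0.01 * (y - 8), 0.01 * (x - 8)             # a small compression around (8, 8)
print(split_patch(tu, tv, 6, 6, 4))                 # small patch
print(split_patch(tu, tv, 2, 2, 12))                # larger patch: larger residual
```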

https://journals.aps.org/pre/pdf/10.1103/PhysRevE.95.032401

To recap:

- Filters learn to pool, but not in all layers

- Diffeos are not made up only of local translations

Is relative stability to diffeos also achieved by becoming locally invariant to the other linear transformations (i.e. rotation, compression, pure shear)?

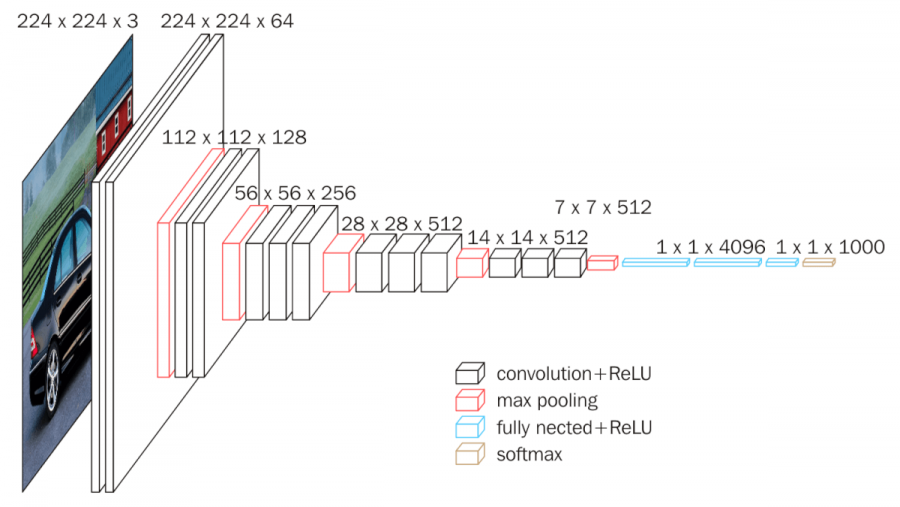

Neurons' receptive field


- As mentioned, a diffeo can be approximated by a linear transformation but only locally.

- If we want stability to (say) rotations, we cannot apply them to the whole image.

- Still, each neuron is not looking at the whole image but only at a small patch (its receptive field)

Figure: VGG11, receptive field in the input of a neuron from an 8x8x256 activation map.

We can measure neurons' stability to linear transformations by locally deforming what is in their receptive field!

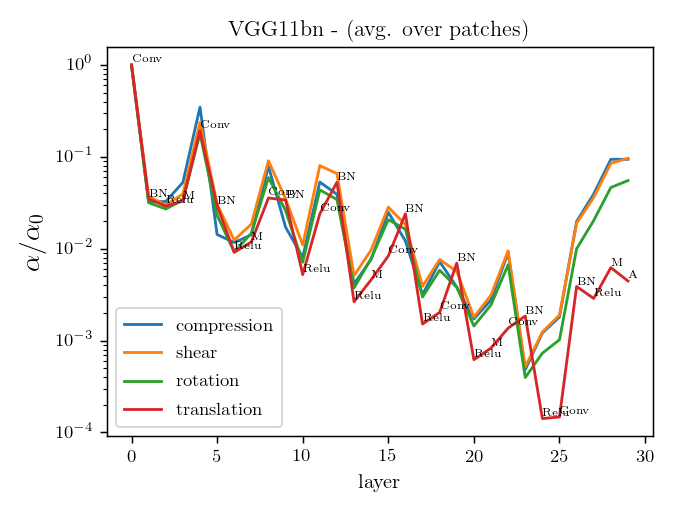

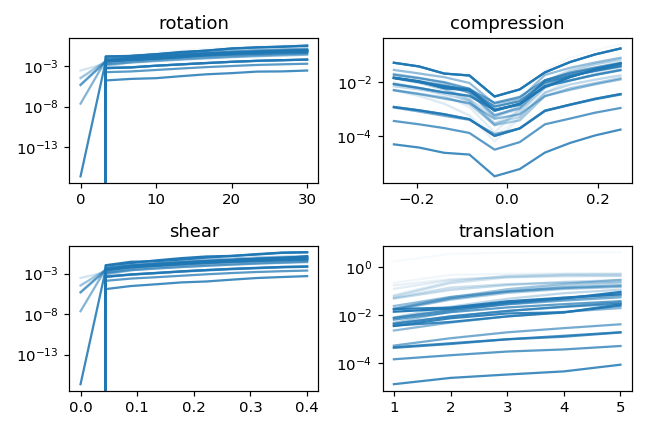

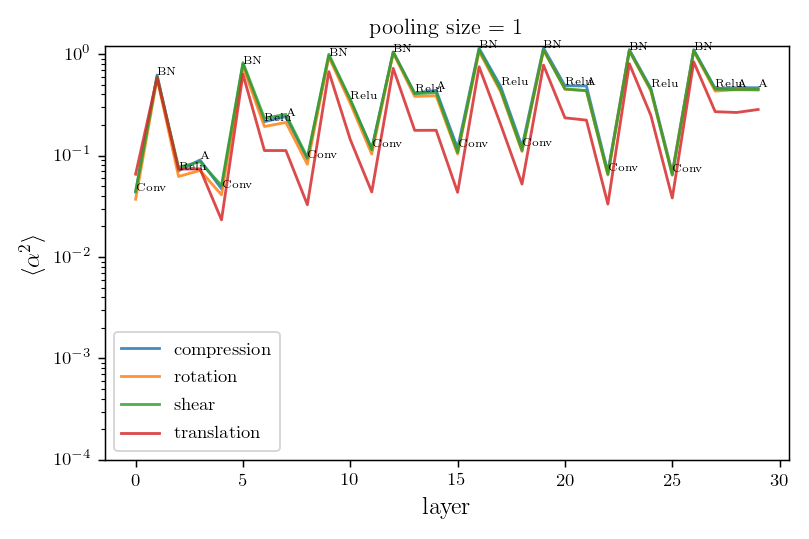

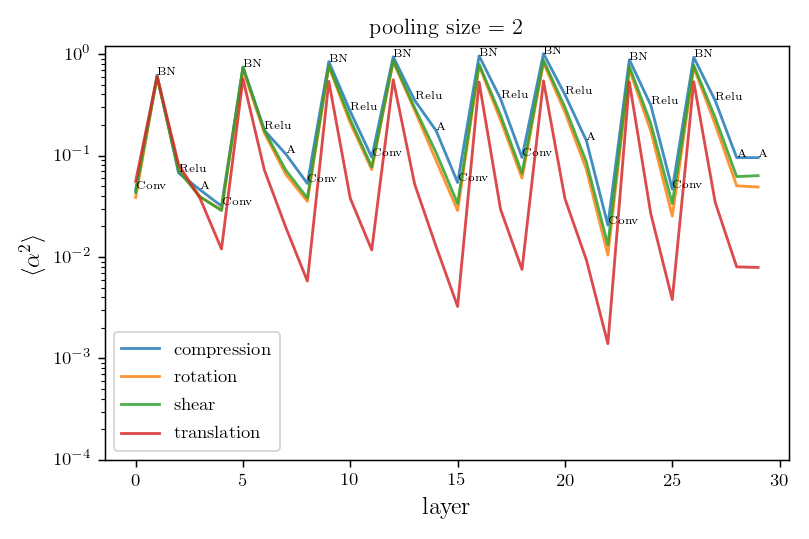

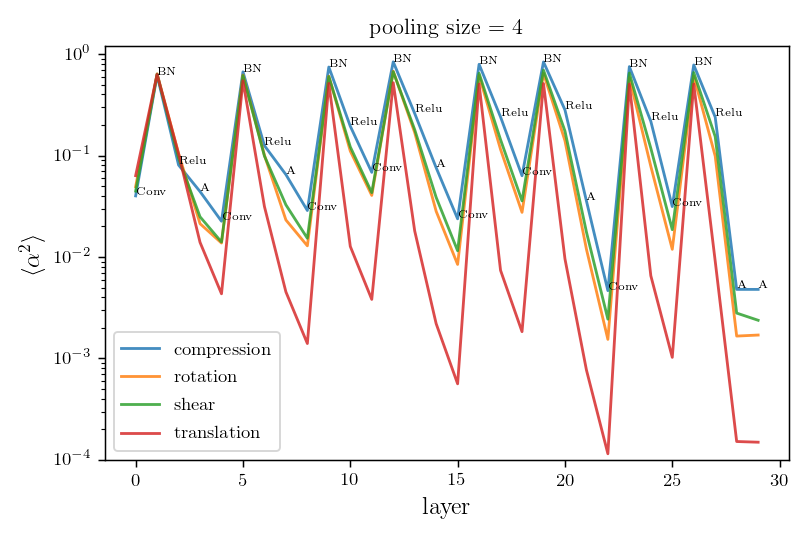

Stability to linear transformations

We compare local translations, rotations, compressions, pure shears, by fixing their norm to 1, i.e. $$\delta = \|R\| =\|C\| =\|S\| = 1.$$

We compute the average neuron stability as

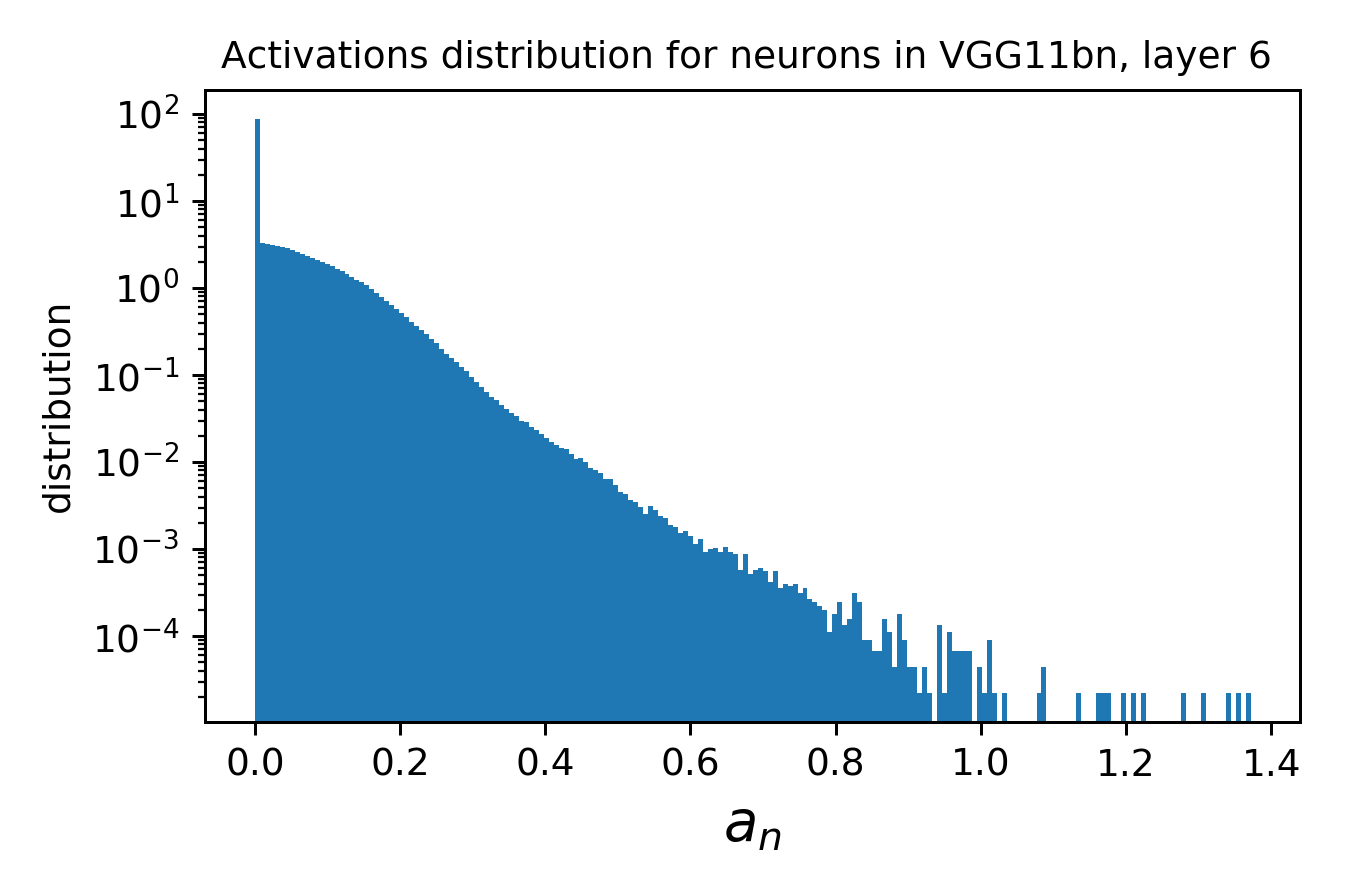

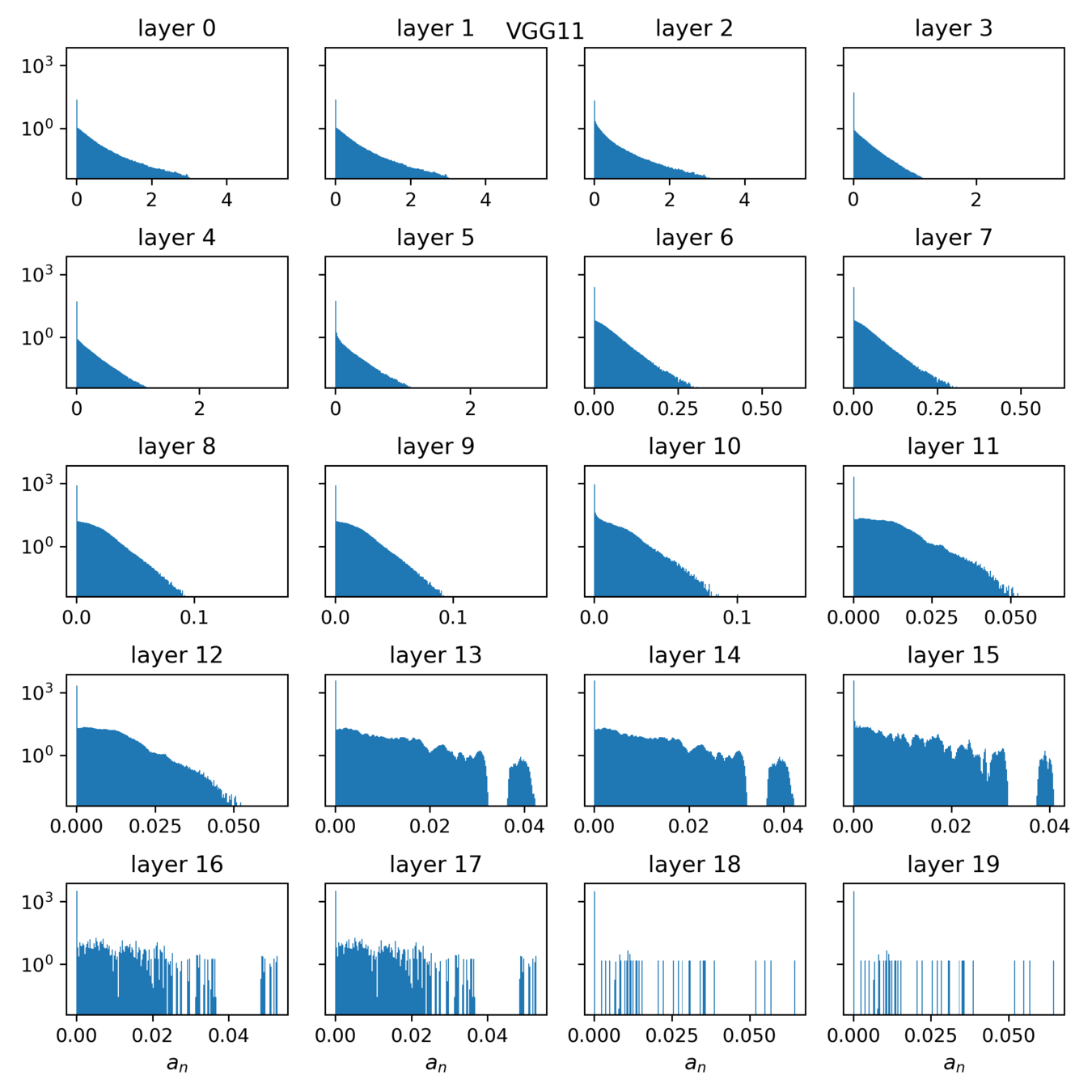

where \(a_n\) is the activation of neuron \(n\) on patch \(x\). The neurons' stability \(\alpha\) can be measured for each layer.

Note:

- expensive to get statistics (need one forward pass per deformation per sample per neuron)

- in many layers, most activations are zero

Is it a fair comparison?

Side note: each deformation, being applied locally to a single receptive field, depends on the neuron we are measuring. As a consequence, measuring \(\alpha\) requires one forward pass per image per neuron, and getting statistics over inputs becomes very expensive.

This could be an issue if neurons are only active on a few patches, i.e. when they detect something.

Solutions?

- Make sure the neuron is active by computing \(\alpha\) on \(x_n = \operatorname{argmax}_i a_n(x_i)\), i.e. the patch that makes it most active.

- Technical (TODO): implement forward passes over batches, as when training NNs (using torch dataloaders and GPUs).

Results

What quantity should we look at?

- Average neuron response over:

  - a few random patches (issues: \(a_n = 0\) for most patches; having a lot of statistics is expensive)

  - the max-response patch

  - the top-n response patches?

- Should we divide \(\alpha\) by the average neuron response (similarly to what we do for noise and diffeo stability)?
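Selecting the patches to average over can be sketched as follows, assuming the activations \(a_n(x_i)\) of one layer have already been collected in an array of shape `(n_neurons, n_patches)`. `top_patches` and `active_fraction` are hypothetical helpers; \(\alpha\) itself is not computed here.

```python
import numpy as np


def top_patches(acts, n_top=1):
    """Indices of the n_top patches with the largest activation, for every neuron.

    acts : (n_neurons, n_patches) activations a_n(x_i). The first column of the
           result is x_n = argmax_i a_n(x_i).
    """
    order = np.argsort(-acts, axis=1)               # descending activation
    return order[:, :n_top]


def active_fraction(acts):
    """Fraction of patches on which each neuron is active (a_n > 0)."""
    return (acts > 0).mean(axis=1)


a = np.array([[0.0, 3.0, 1.0, 0.0], [2.0, 0.0, 0.0, 5.0]])
print(top_patches(a, 2), active_fraction(a))
```

Checking `active_fraction` per layer gives a quick way to see how many random patches would be wasted on \(a_n = 0\) before committing to the expensive per-neuron forward passes.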

Issue (?): a small rotation in a large receptive field looks like a translation

Figure: rotation vs. translation of an object inside a receptive field (r.f. center marked).

For neurons deep in the net, receptive fields are large and the effect of different transformations can start to mix up.

Does pooling have an effect also on transformations other than translations?

Issue (?): the average norm of the displacement increases with patch size for linear transformations.
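A small sketch of why this happens: a linear transformation displaces pixel \(p\) by \(U(p - p_0)\), so its average magnitude grows linearly with the distance from the patch center, whereas a translation moves every pixel by the same amount. The generator matrices below and the use of the Frobenius norm to fix \(\|U\| = 1\) are assumptions.

```python
import numpy as np

# Generators of the three linear transformations (assumed forms), before normalization.
GENERATORS = {
    "compression": np.array([[1.0, 0.0], [0.0, 1.0]]),
    "pure shear": np.array([[1.0, 0.0], [0.0, -1.0]]),
    "rotation": np.array([[0.0, -1.0], [1.0, 0.0]]),
}


def mean_displacement(U, size):
    """Average |U (p - p_0)| over the pixels p of a size x size patch centred at p_0."""
    offsets = np.mgrid[0:size, 0:size] - (size - 1) / 2   # shape (2, size, size)
    disp = np.einsum("ab,bij->aij", U, offsets)           # displacement field
    return np.mean(np.hypot(disp[0], disp[1]))


for name, G in GENERATORS.items():
    U = G / np.linalg.norm(G)                              # Frobenius norm fixed to 1
    print(name, [round(mean_displacement(U, s), 2) for s in (3, 9, 27)])
```

With this normalization all three transformations give the same average displacement, \(\langle|p - p_0|\rangle/\sqrt{2}\), which grows roughly linearly with patch size.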

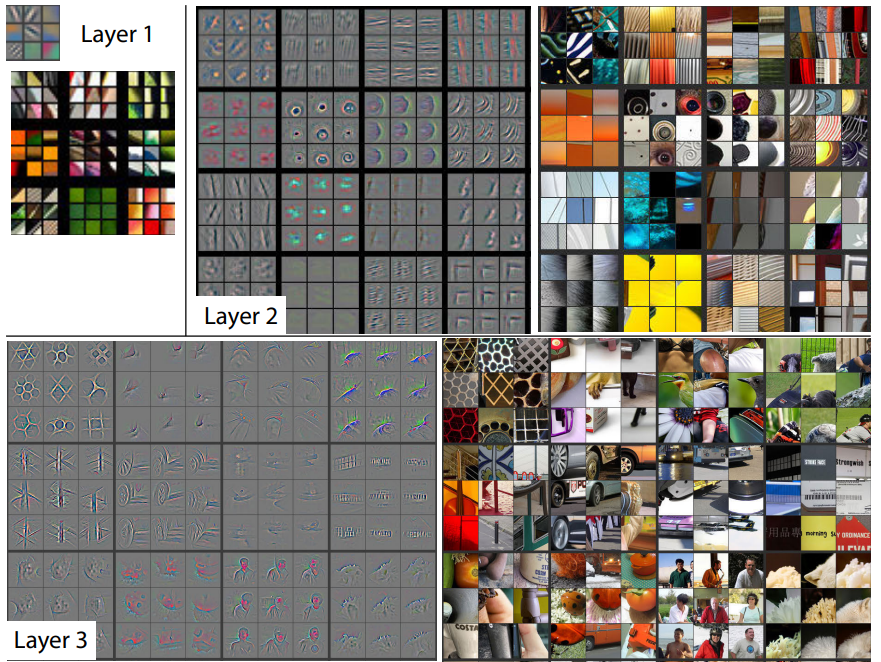

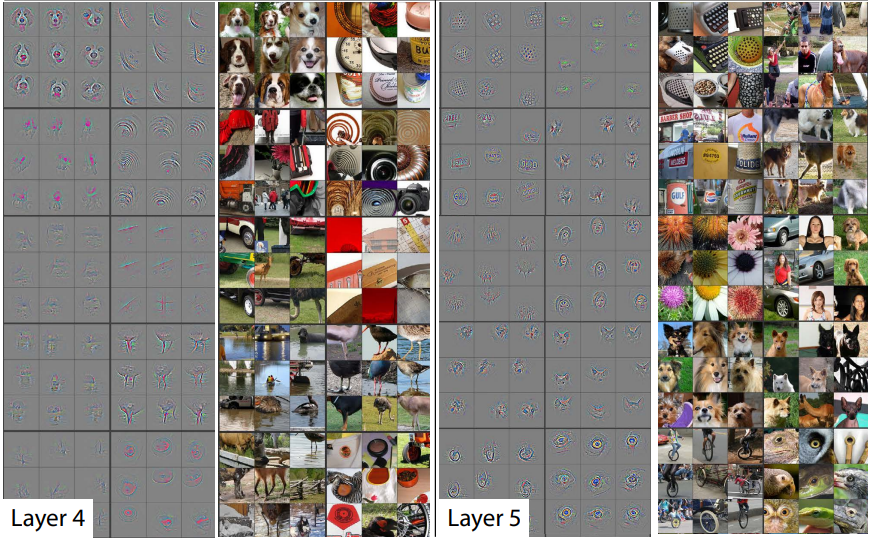

- Goal: understand which parts of the images make neurons activate in different layers

- How: deconvolutional layers

Pixel displacement field along \(x\):

where Fourier coefficients are sampled from

work in progress on

diffeo

part III

Journal club

PCSL retreat

December 2021