Review on "A simple introduction to Markov Chain Monte-Carlo sampling"

Lucas Oliveira David

ld492@drexel.edu

Introduction

Markov Chain Monte-Carlo

(MCMC)

"A computer-driven sampling method." [1]

Characterizes a distribution even without knowing all its mathematical properties.

From "MCMC". Available at: wikiwand.

Monte-Carlo

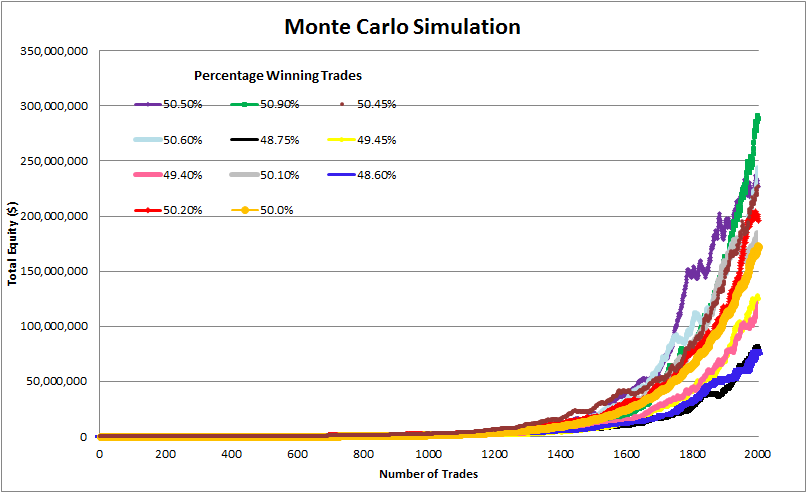

Family of methods called Monte-Carlo simulations that estimate properties of a distribution by repeatedly random sampling from it.

Simulated equity curve for a trading system, from "Monte-Carlo Simulation". Available at: knispo-guido-to-stock-trading.

Monte-Carlo

Mean computation

Example: estimating the mean of a distribution based on a randomly small subset of it.

Example within the example: finding the average score of each currently valid move in a Othelo game, so the autonomous agent can select the one with greater value (see PlayerLikeAMonteCarlo).

Markov Chain

Let r be a random variable which describes the weather (whether it's 0-sunny or 1-raining), s.t.

A possible sequence of day weathers described by this model could be:

1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0

Rain happens 40% of the time, but the sequence doesn't describe our world very well, as, in practice, sunny periods tend to stay that way and raining days tend to be followed by more rain. What about:

0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0

That seems more realistic. To accomplish that, let's use the idea of states.

Markov Chain

What if we want to find out about day #42?

sunny

raining

.1

.5

.9

.5

(the probability of raining given all previous states)

That looks very complicated to know. Let's assume something to make our problem easier...

Markov Chain

(Markov property: a state can be fully determined by its direct previous state)

Now, let's say today is day #0 and it IS raining. Therefore:

Markov Chain

Is the change in the state vector decreasing, as n increases? Is it converging to a stationary state? I.e.,

Markov Chain

Def: Markov chains are instances of random processes that share the Markov property. That is, mathematical models for random phenomena evolving over time [2].

Def: A Markov chain is said to be stationary if and only if the marginal distribution X_n doesn't depend on n [3] (i.e., it has a stationary state).

MCMC

Completely random sampling from highly dimensional distributions is very inefficient.

How to improve it?

MCMC

So what is MCMC really about?

Dermatology data set, preprocessed with PCA for visualization purposes

Given a likelihood function p, an initial guess x_0 and a set of hyper-parameters, MCMC efficiently samples from a distribution by creating a Markov chain with stationary distribution equivalent to the desired real distribution of the problem at hand.

Samples can now be drawn from the created model, simulating drawing samples from the distribution of interest.

Expectation of the created model's distribution can be easily estimated, hence finding an estimation for the expectation of the real distribution.

MCMC

So what is MCMC really about?

MCMC

import numpy.random as r

def metropolis(n_cycles, p, initial_guess, std):

samples = [initial_guess]

for i in range(n_cycles):

x_i = samples[-1]

x_c = x_i + std * r.randn()

if r.rand() < min(1, p(x_c) / p(x_i)):

samples.append(x_c)

else:

samples.append(x_i)

return samplesMCMC

Execution example

Markov chain created

Real (unknown) distribution and the generated Markov chain

How to find out the mean of test scores in a student population when only one student's score is known to be 100?

A experienced lecture knows that the scores are normally distributed with a std of 15.

MCMC

Example: in-set class

MCMC

Example: in-set class

Chains for three different initial guesses.

Dashed line: true distribution.

Continuous line: synthetic distribution.

MCMC

Example: Equation of State Calculations by Fast Computing Machines [4]

A 2-dimensional configuration of particles.

MCMC

import numpy.random as r

def metropolis(n_cycles, E, initial_guess, box_width, k, T):

samples = [initial_guess]

for i in range(n_cycles):

x_i = samples[-1]

x_c = x_i[:]

i = r.randint(x_c.shape)

x_c[i] = (x_c[i][0] + box_width * r.randn(),

x_c[i][1] + box_width * r.randn())

if E(x_c) < E(x_i) or r.rand() < (E(x_c) - E(x_i)) / (k*T):

sample.append(x_c)

else:

samples.append(x_i)

return samplesExample: Equation of State Calculations by Fast Computing Machines [4]

Limitations

Limitations

Likelihood function

The likelihood function p used must be equal or at least proportional to the true density function in the target distribution.

Markov chain created

Limitations

Proposal distribution

- High std's throw us out of the target distribution.

- Low std's might take too long to converge, or result in sub-optimal convergence (local maxima).

Highly variate chain

Lowly variable chain

Limitations

Proposal distribution

# ...

def metropolis_hastings(n_cycles, p, initial_guess, std):

# for ...

x_c ~ g(x_c, x_i)

if r.rand() < min(1, p(x_c) * g(x_i, x_c) / (p(x_i) * g(x_c, x_i))):

# return ...The proposal distribution g must be symmetric, s.a.:

x_c = x_i + std * r.randn()If not, an adjust must be made (known as Metropolis-Hastings).

Limitations

Distant initial guesses (burn-in)

Initial values are likely offset from the true distribution and therefore must be discarded.

Limitations

Multivariate distribution with correlated parameters

In practice, correlated model parameters lead to extremely slow convergence of sampling chains or even non-covergence.

Extensions

Extensions

For each parameter of the model, and accept/reject the update based on the all other parameters. I.e., use a specific conditional distribution for each parameter.

A cycle of Metropolis is finished when all parameters are updated.

Metropolis within Gibbs

Chains produced, the real distribution and

the density of the sampled values.

Extensions

Differential Evolution

Metropolis-Hastings explores within a circumference.

Keeps chains.

At every iteration, for every chain, randomly select two chains m and n and compute a new candidate as follows:

Metropolis-Hastings exploration

Differential evolution exploration

Final Thoughts

Final Thoughts

- MCMC is a very efficient way to solve very complicated problems, and can be applied to pretty much every area.

- Metropolis-Hastings was named one of the top 10 algorithm of the 20th century [5].

- It has some problems such as burn-in and convergence dependency on tuning/initial state, but extensions to handle these were developed as well.

References

[1] Ravenzwaaij, Don, Pete Cassey, and Scott D. Brown. "A simple introduction to Markov Chain Monte–Carlo sampling." Psychonomic bulletin & review (2016): 1-12.

[2] Norris, J.R. "Markov Chains", Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, 1998.

[3] Geyer, Charles. "Introduction to markov chain monte carlo." Handbook of Markov Chain Monte Carlo (2011): 3-48.

[4] Metropolis, Nicholas, et al. "Equation of state calculations by fast computing machines." The journal of chemical physics 21.6 (1953): 1087-1092.

[5] Cipra, Barry A. "The best of the 20th century: editors name top 10 algorithms." SIAM news 33.4 (2000): 1-2.