User testing

Agenda

- UX testing: why it matters (story time)

- Methods

- Usability testing

- Analysis

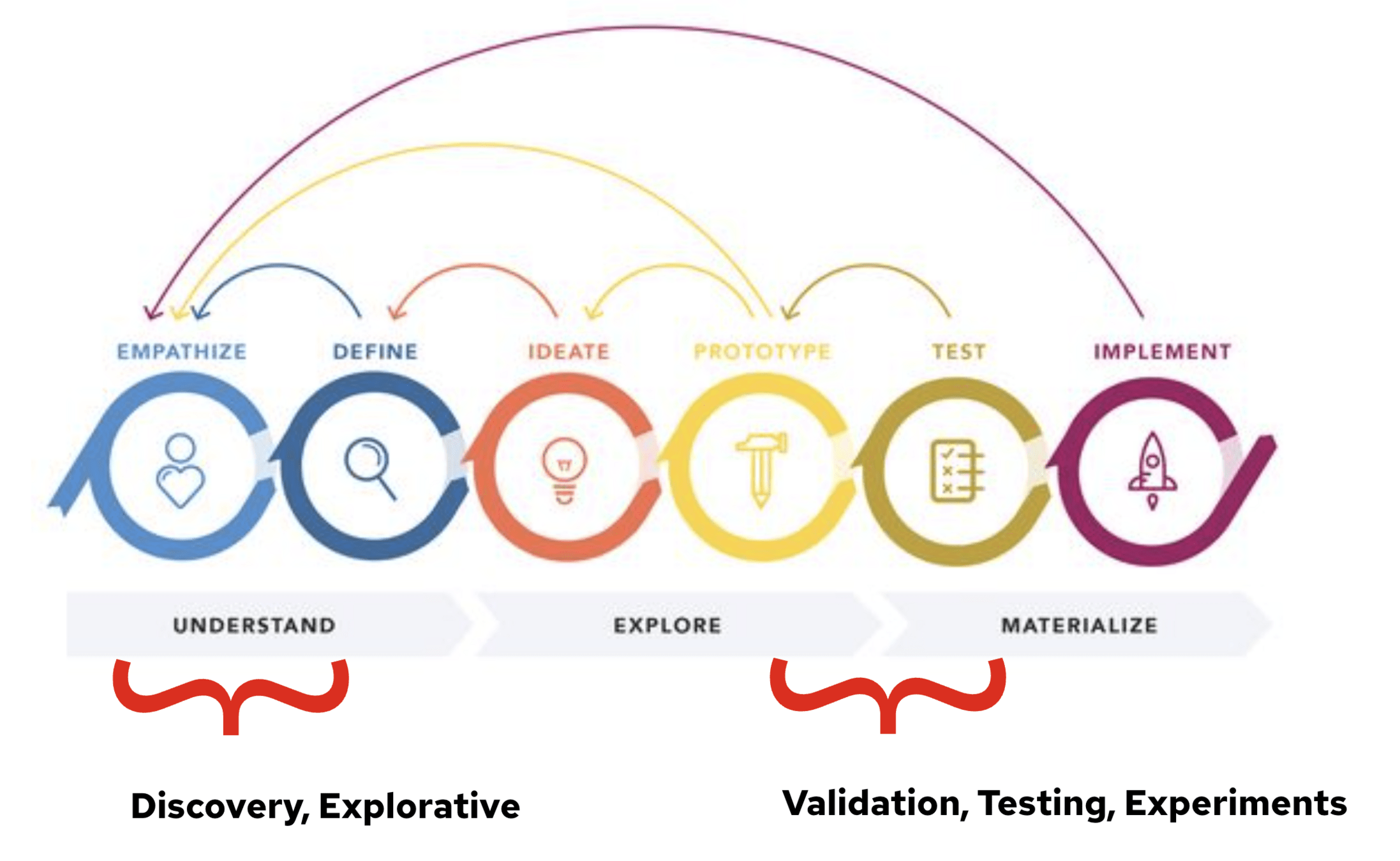

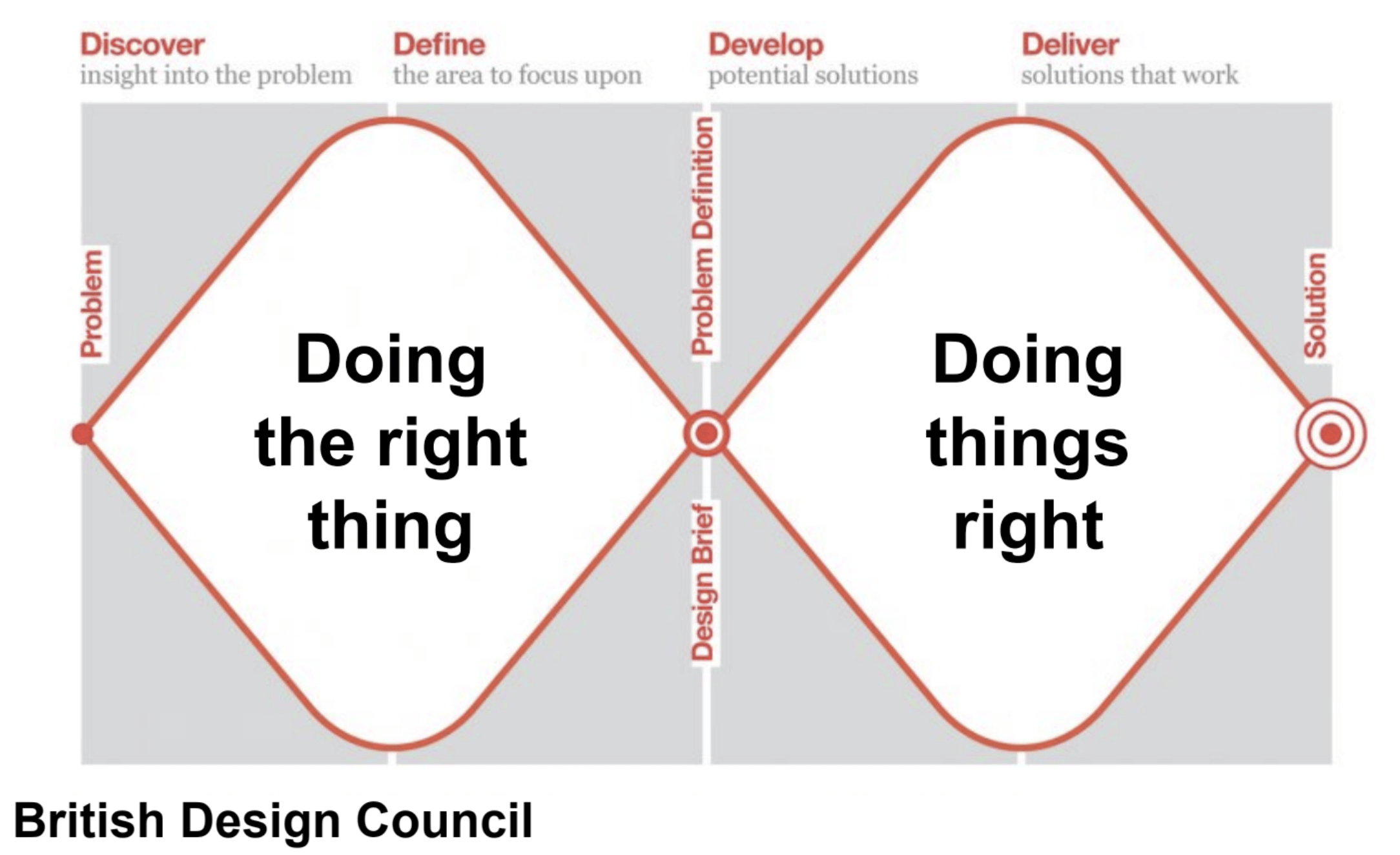

Where are we in the process?

Doing things right...

How did the Lucky Iron Fish help alleviate iron deficiency?

https://luckyironlife.com/products/lucky-iron-fish

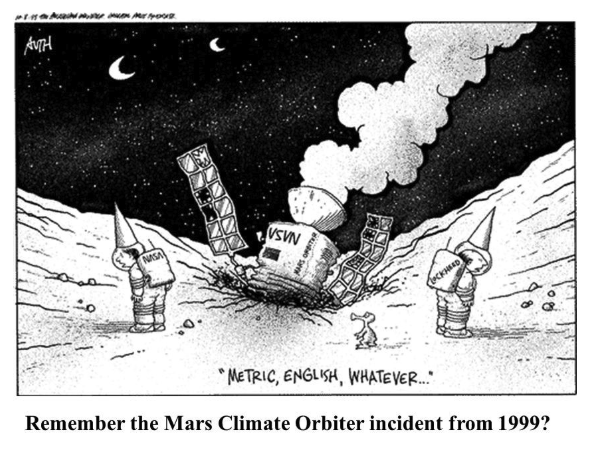

Test early, test often

https://www.simscale.com/blog/nasa-mars-climate-orbiter-metric/

Fail fast, fail quickly

...do it before testing to save some time

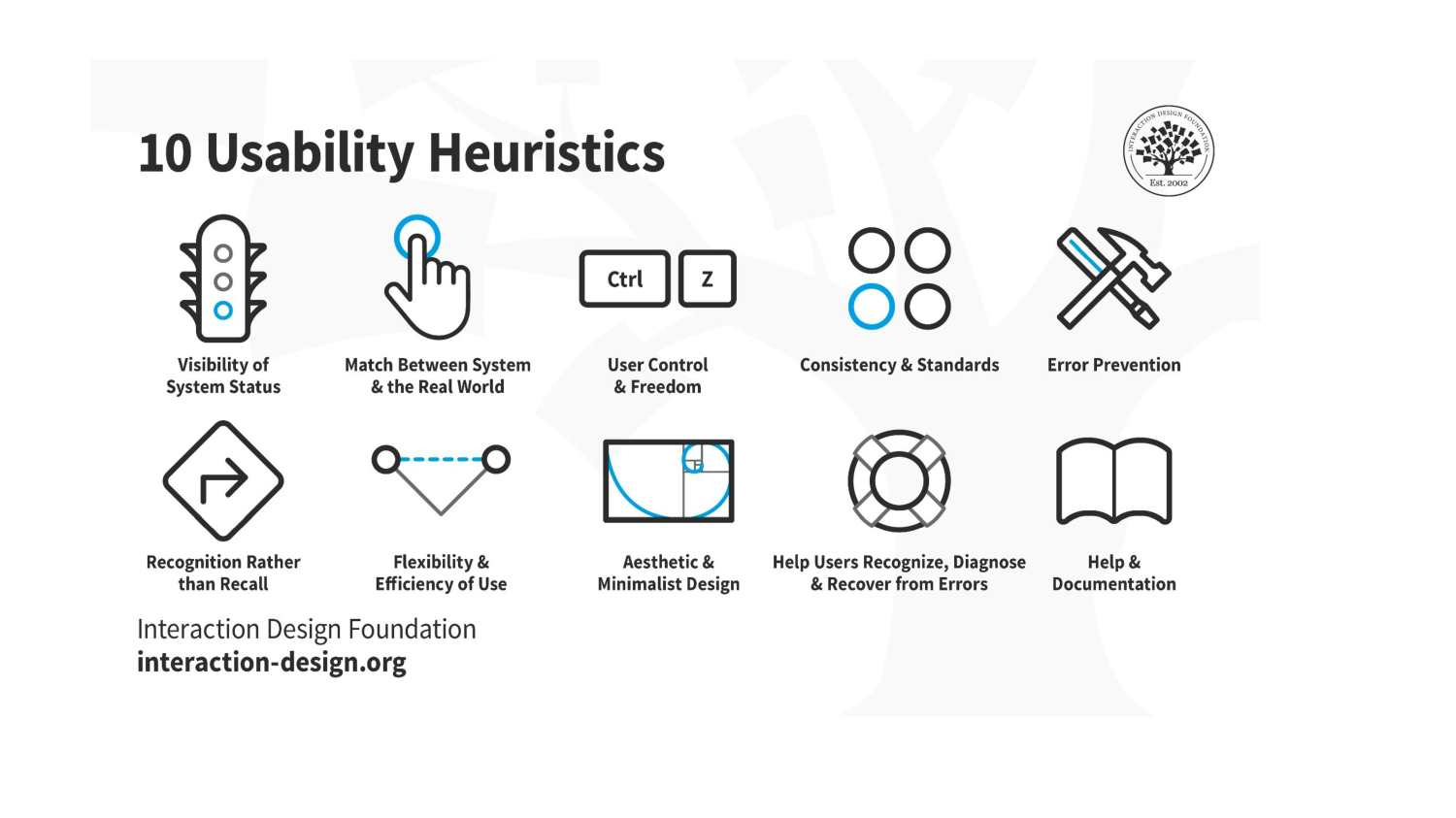

5 usability principles

Methods

| Type | Example methods | Questions answered | Characteristics |
|---|---|---|---|
| Qualitative | Usability testing, Wizard of Oz | Why? How? | Time-consuming, expensive, deep dive; about 7 people |
| Quantitative | Surveys, benchmarking, A/B testing, UMUX | How many? How often? | Quicker, more superficial; data from analytics |

Some testing methods

- 5-second test

- Usability testing

- Wizard of Oz

- Fake door test

- Tree testing

- UMUX

- Web analytics

- A/B testing

moderated/non-moderated

self-reporting / behavioural

5-second test: https://www.youtube.com/watch?v=X0FG0jCqLYQ

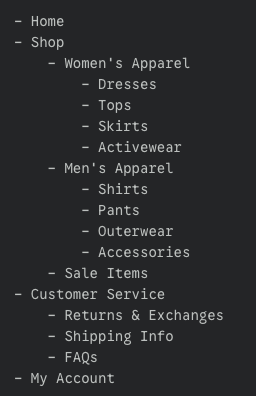

Tree testing

- Evaluates how easily users can find topics in a website's or app's navigation, using a hierarchical, text-only version of the site.
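Tree-testing tools commonly summarise each task with a success rate (did the participant end on the correct node?) and a directness measure (did they get there without backtracking?). The sketch below computes both from recorded click paths. The function name, the path format (a list of node labels from the root), and the rule "direct means no node was visited twice" are simplifying assumptions, not the behaviour of any particular tool.

```python
def tree_test_results(paths: list[list[str]], correct_node: str) -> dict[str, float]:
    """Summarise one tree-testing task (hypothetical helper).

    Each path is the sequence of node labels one participant clicked through,
    starting at the root. A path is a success if it ends on the correct node,
    and a direct success if it also never revisits a node (a simple stand-in
    for "no backtracking").
    """
    if not paths:
        raise ValueError("need at least one participant path")
    successes = [p for p in paths if p and p[-1] == correct_node]
    # Revisiting a node (e.g. going back up to a parent) breaks directness.
    direct = [p for p in successes if len(p) == len(set(p))]
    return {
        "success_rate": len(successes) / len(paths),
        "direct_success_rate": len(direct) / len(paths),
    }


# Hypothetical task: "Where would you go to update your payment details?"
paths = [
    ["Home", "Account", "Billing"],                  # direct success
    ["Home", "Help", "Home", "Account", "Billing"],   # success after backtracking
    ["Home", "Help", "Contact"],                     # failure
]
print(tree_test_results(paths, "Billing"))
```

Reporting both numbers separately helps show whether a label is merely findable or actually obvious.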

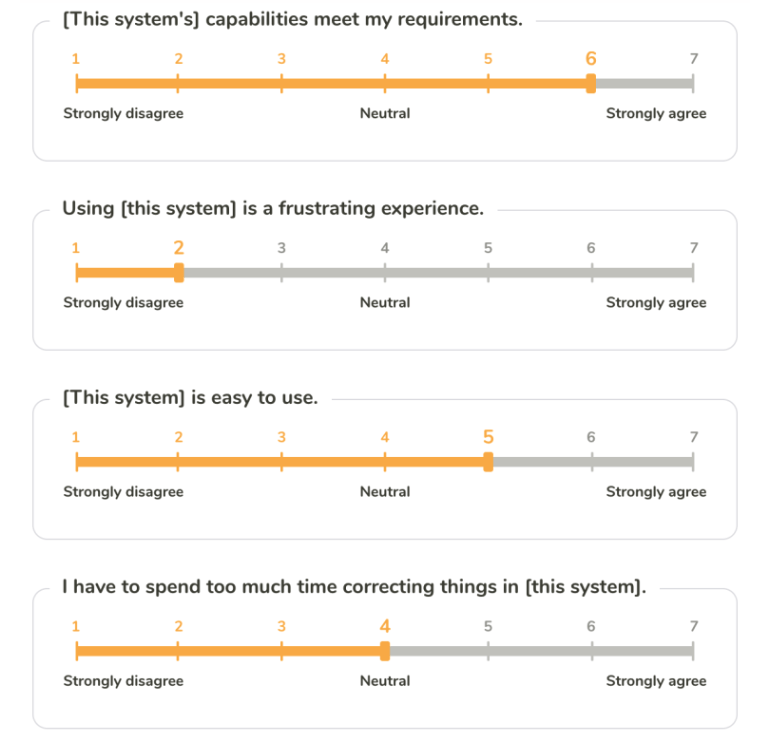

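UMUX

Usability Metric for User Experience

- A 4-question survey designed to measure usability

- Quick and cheap

- Benchmarking tool: supports comparison between versions or products

- Designed as a shorter alternative to the 10-question SUS (System Usability Scale); an even shorter variant, UMUX-Lite, also exists

The four items, each rated on a 7-point scale from "strongly disagree" to "strongly agree":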

- [This system’s] capabilities meet my requirements.

- Using [this system] is a frustrating experience.

- [This system] is easy to use.

- I have to spend too much time correcting things with [this system].
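UMUX is usually scored by putting every item on a 0 to 6 scale and rescaling the total to 0 to 100. The positively worded items (1 and 3) score as the answer minus 1. The negatively worded items (2 and 4) are reversed, scoring as 7 minus the answer. The sketch below assumes the items are given in the order listed above and answered on a 7-point scale. The function name is hypothetical.

```python
def umux_score(responses: list[int]) -> float:
    """Score one participant's UMUX answers on a 0-100 scale (hypothetical helper).

    Assumes four answers in the order listed above, each from 1 (strongly
    disagree) to 7 (strongly agree).
    """
    if len(responses) != 4:
        raise ValueError("UMUX has exactly four items")
    if any(answer < 1 or answer > 7 for answer in responses):
        raise ValueError("answers must be between 1 and 7")
    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            # Items 1 and 3 are positively worded: agreement is good.
            total += answer - 1
        else:
            # Items 2 and 4 are negatively worded: reverse them.
            total += 7 - answer
    # Each item contributes 0-6, so the maximum raw total is 24.
    return total / 24 * 100


# One hypothetical participant: fairly positive overall.
print(umux_score([6, 2, 6, 3]))
```

To benchmark, average the per-participant scores for each version of the product and compare the averages.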

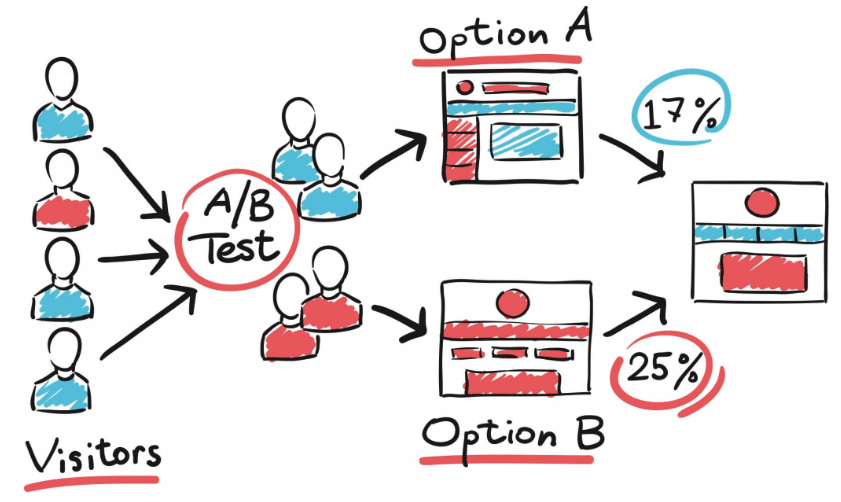

A/B testing

- Comparing two versions of something to see which performs better
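"Performs better" needs a check that the difference is larger than random noise. One common approach, used here as an illustration, is a two-sided two-proportion z-test on conversion rates. The sketch assumes each visitor either converts or does not, and that the samples are large enough for the normal approximation to hold. The function name and the numbers are hypothetical.

```python
import math


def two_proportion_z_test(conversions_a: int, visitors_a: int,
                          conversions_b: int, visitors_b: int) -> tuple[float, float]:
    """Compare the conversion rates of variants A and B (hypothetical helper).

    Returns (z, p_value) for a two-sided test of "A and B convert equally".
    A positive z means B converted better than A.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("no variation in the data (all or no visitors converted)")
    z = (rate_b - rate_a) / std_err
    # erfc(|z| / sqrt(2)) is the two-sided tail probability of a standard normal.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: 1,000 visitors saw each version of a sign-up page.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (e.g. below 0.05) suggests the difference is unlikely to be chance alone. Deciding the sample size before the test starts avoids stopping early on a lucky streak.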

https://www.uxtweak.com/website-survey-tool

Fake door test

Did you know about McSpaghetti?

https://mcdonalds.fandom.com/wiki/McSpaghetti

https://www.youtube.com/watch?v=hKrWSXVx1bE

Wizard of Oz

What are you trying to find out?

Any open questions? A hypothesis? What do you most want to verify? Where is the uncertainty?

Even small tests matter.

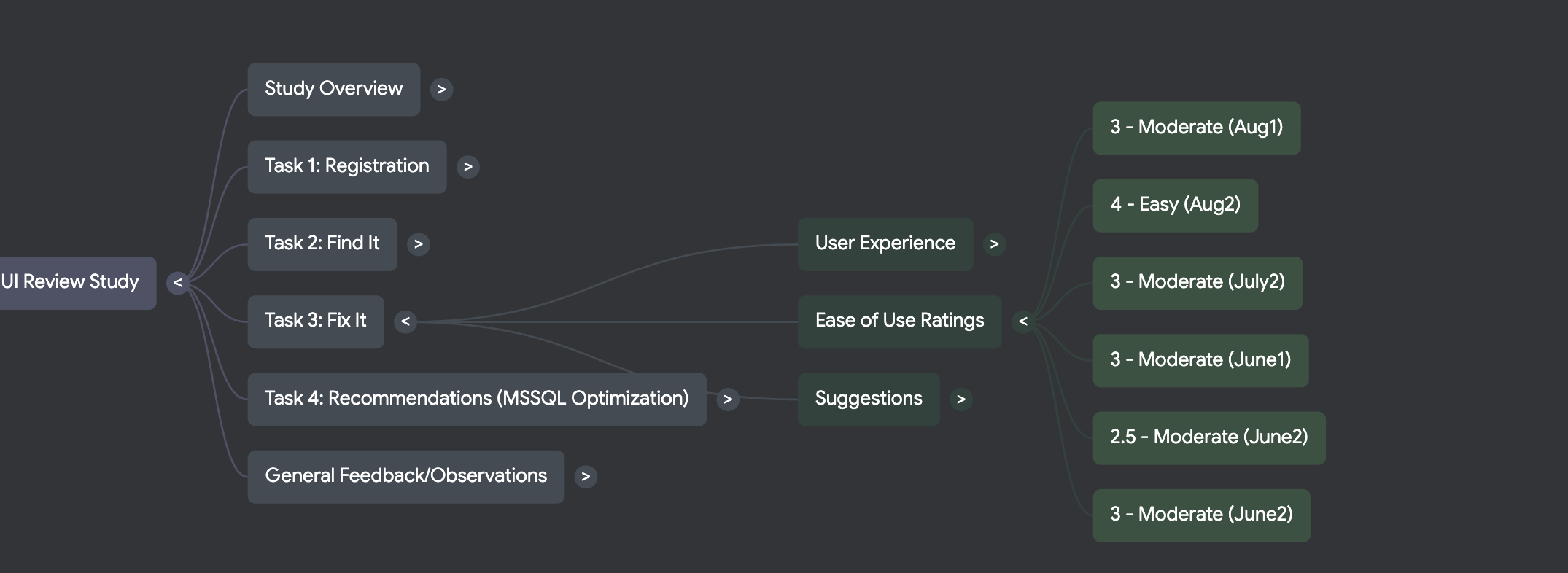

Usability testing

1. Plan

- What do we want to find out? What counts as success?

- Create a script with tasks

2. Prototype

- Must work for the parts being tested

- Make sure to have a landing page with the name and description

3. Testing

- Set the stage: story, context, introduction

- Introduce the first task

- Probing questions

- Documentation

4. Analysis

- Affinity mapping

- How bad?

- How often?

- INSIGHTS

Structure

Intro

- Introduction, small talk, establishing trust

Core

- Task 1

- Task 2

- Task 3

- Open-ended questions

Wrap up

- Do you want to add something?

- Thank you

- Reward (?)

Tips and tricks

- Establish a relationship of trust and respect

- Use open-ended questions

- Nodding and affirming

- Active listening

- Inquiring and probing

- Ask "why", but use different wording

- Always return to the topic

- Replace "typically" with "the last time you..."

- Don't judge or comment

- Don't explain

- Don't lead (no leading questions)

- Don't interrupt

- Don't use closed-ended questions

- Don't use double-barreled questions

- Don't use hypothetical questions

Compare what they DO with what they SAY

Data analysis

- How bad?

  - High: an issue that prevents the user from completing the task at all

  - Moderate: an issue that causes some difficulty but doesn't prevent the user from completing the task

  - Low: a minor problem that doesn't affect the user's ability to complete the task

- How often?

  - High: 30% or more of participants experience the problem

  - Moderate: 11–29% of participants experience the problem

  - Low: 10% or fewer of participants experience the problem
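The two scales above can be applied mechanically once each issue is logged with its severity and the number of participants who hit it. The sketch below maps a count to the "How often?" level and then orders issues worst first. Its assumptions are labelled here. The `Issue` record and both function names are hypothetical. Any share above 10% and below 30% counts as moderate, which also covers values such as 10.5% that fall between the listed bands. Sorting by severity first and frequency second is one reasonable choice, not a rule from the method itself.

```python
from dataclasses import dataclass

# Shared ordering for both scales: higher number = worse.
LEVELS = {"Low": 0, "Moderate": 1, "High": 2}


def frequency_level(affected: int, participants: int) -> str:
    """Map how many participants hit an issue to the "How often?" scale."""
    if participants <= 0:
        raise ValueError("participants must be positive")
    # Integer comparisons avoid floating-point rounding at the 10% and 30% bounds.
    if affected * 100 >= 30 * participants:
        return "High"
    if affected * 100 > 10 * participants:
        return "Moderate"
    return "Low"


@dataclass
class Issue:
    description: str
    severity: str   # "High", "Moderate", or "Low" on the "How bad?" scale
    affected: int   # number of participants who experienced the issue


def rank_issues(issues: list[Issue], participants: int) -> list[Issue]:
    """Order issues worst first: by severity, then by frequency (assumed priority)."""
    return sorted(
        issues,
        key=lambda issue: (
            LEVELS[issue.severity],
            LEVELS[frequency_level(issue.affected, participants)],
        ),
        reverse=True,
    )


# Hypothetical findings from a 7-person test.
findings = [
    Issue("Unclear icon on the settings page", "Low", 5),
    Issue("Checkout button hidden below the fold", "High", 1),
    Issue("Error message does not explain the fix", "High", 4),
]
for issue in rank_issues(findings, participants=7):
    print(issue.severity, frequency_level(issue.affected, 7), issue.description)
```

The ranked list is only a starting point for the insights. An issue that is rare but blocks the task still deserves attention.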