TDD session #1

generic overview

purpose of this session

common ground

terminology

workflow

good / bad practices

initiate a conversation

where to start

how to adapt

recommend

dev process

resources

goals

front-end devs

understand principles

enjoy writing tests

our codebase

maintainable

performant

easy to move forward

three types of questions

WHATs

definitions

terminology

WHYs

concepts

good practices

HOWs

challenges

patterns

the WHATs

WHAT is a unit?

a module

a component

a function

WHAT is test code?

requirements / AC turned into code

also documentation of your code

But WHAT is production code then?

manifestation of requirements

your creation helping people out there

so make sure it works properly === tested :)

(some TDD-obsessed devs just call it a "side effect")

WHAT is the cycle of TDD?

RED: write a new test case, see it fail

GREEN: fulfil it by implementing functionality

REFACTOR: make it cleaner / more efficient

1. Red

understand your requirements

write your first test case / assertion

run your tests and see the new one fail

2. Green

write the minimum production code that makes the test pass

don't worry about design, just make it work

3. Refactor

make your changes, clean up the mess :)

look for code smells (duplication, etc), fix them

after each tiny bit of refactoring, run your tests
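A minimal sketch of one pass through the cycle, assuming a made-up `slugify` requirement ("turn a title into a URL slug"). The function names are hypothetical and only illustrate the three steps; Node's built-in `node:assert` stands in for a test framework.

```javascript
const { strictEqual } = require("node:assert");

// RED: these assertions are written first. Before any slugify() exists
// they fail, which proves the test can actually catch a problem.
function runSlugifyTests(slugify) {
  strictEqual(slugify("Hello World"), "hello-world");
  strictEqual(slugify("  TDD   session  "), "tdd-session");
}

// GREEN: the least code that makes the tests pass, design ignored.
function slugifyGreen(title) {
  let result = "";
  for (const word of title.split(" ")) {
    if (word !== "") {
      result += (result === "" ? "" : "-") + word.toLowerCase();
    }
  }
  return result;
}

// REFACTOR: same behaviour, cleaner code. The tests are not touched.
function slugify(title) {
  return title.trim().toLowerCase().split(/\s+/).join("-");
}

// Run the same tests after every tiny change.
runSlugifyTests(slugifyGreen);
runSlugifyTests(slugify);
```

Both versions satisfy the same test cases, which is what makes the refactoring step safe.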

Make it work. Make it right. Make it fast.

Kent Beck (father of TDD)

Refactoring is not something you do at the end of the project

it's something you do on a minute-by-minute basis

Refactoring is a continuous in-process activity

not something that is done late (and therefore optionally)

Uncle Bob Martin (cleancoder.com)

WHAT are stubs, mocks, fakes, spies?

helpful patterns that make devs' lives easier

whilst we are writing tests

we call them test doubles

test double

any kind of pretend object is a test double

in place of a real object for testing purposes

term origin: stunt double

stubs

you can stub out e.g. a method of an object

to return a certain value

your test cases can rely on
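A stub can be sketched like this, assuming a hypothetical `greeting` function that asks a clock object for the current hour. The `clock` object and its `currentHour` method are invented for illustration.

```javascript
const { strictEqual } = require("node:assert");

// Hypothetical unit under test: greets according to the hour a clock reports.
function greeting(clock) {
  return clock.currentHour() < 12 ? "Good morning" : "Good afternoon";
}

// Stubs: currentHour() is replaced to return a fixed value,
// so the test no longer depends on the real time of day.
const morningClock = { currentHour: () => 9 };
const afternoonClock = { currentHour: () => 15 };

strictEqual(greeting(morningClock), "Good morning");
strictEqual(greeting(afternoonClock), "Good afternoon");
```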

spies

keep track of method calls

what arguments they were called with

help when testing contract between components

can easily be overused or misused though (!)
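A hand-rolled spy, as a rough sketch. `createSpy` and `placeOrder` are hypothetical names; test libraries ship their own spy helpers, which are deliberately not shown here.

```javascript
const { strictEqual, deepStrictEqual } = require("node:assert");

// Hand-rolled spy: wraps a function and records the arguments of every call.
function createSpy(implementation = () => undefined) {
  const spy = (...args) => {
    spy.calls.push(args);
    return implementation(...args);
  };
  spy.calls = [];
  return spy;
}

// Hypothetical unit under test: notifies a collaborator when an order is placed.
function placeOrder(order, notify) {
  notify("order-placed", order.id);
  return { ...order, status: "placed" };
}

const notify = createSpy();
const placed = placeOrder({ id: 42 }, notify);

strictEqual(placed.status, "placed");
// The contract between the components: one notification, with these arguments.
strictEqual(notify.calls.length, 1);
deepStrictEqual(notify.calls[0], ["order-placed", 42]);
```

The spy checks the contract with a collaborator (the `notify` callback), not the internals of `placeOrder`.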

fakes

fully working test doubles

faking behaviours of a collaborator object

resolving their own dependencies (e.g. in-memory db)

to help you test dependent objects more easily
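An in-memory fake might look like the sketch below. `InMemoryUserRepository` and `UserService` are hypothetical; the assumption is that a real repository would expose the same `save` / `findById` contract on top of a database.

```javascript
const { deepStrictEqual, throws } = require("node:assert");

// Fake: a fully working, in-memory stand-in for a database-backed repository.
class InMemoryUserRepository {
  constructor() {
    this.users = new Map();
  }
  save(user) {
    this.users.set(user.id, { ...user });
  }
  findById(id) {
    return this.users.get(id) ?? null;
  }
}

// Hypothetical unit under test: depends only on the repository's contract.
class UserService {
  constructor(repository) {
    this.repository = repository;
  }
  rename(id, name) {
    const user = this.repository.findById(id);
    if (user === null) throw new Error(`Unknown user: ${id}`);
    this.repository.save({ ...user, name });
  }
}

const repository = new InMemoryUserRepository();
repository.save({ id: 1, name: "Ann" });
const service = new UserService(repository);

service.rename(1, "Anna");
deepStrictEqual(repository.findById(1), { id: 1, name: "Anna" });
throws(() => service.rename(2, "Bob"), /Unknown user/);
```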

mocks

pre-set doubles of collaborator objects

containing certain expectations / assertions

so your tests won't be bloated

and you can keep them DRY
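A hand-rolled mock, sketched under the assumption of a hypothetical `mailer` collaborator with a `send(to, subject)` method. The expectations live inside the double, so each test case only has to call `verify()`.

```javascript
const { strictEqual, deepStrictEqual, throws } = require("node:assert");

// Hand-rolled mock: pre-set with expectations and able to verify them itself.
function createMailerMock(expectedCalls) {
  const actualCalls = [];
  return {
    send(to, subject) {
      actualCalls.push([to, subject]);
    },
    verify() {
      deepStrictEqual(actualCalls, expectedCalls);
    },
  };
}

// Hypothetical unit under test.
function registerUser(email, mailer) {
  mailer.send(email, "Welcome!");
  return { email, active: true };
}

const mailer = createMailerMock([["ann@example.com", "Welcome!"]]);
const user = registerUser("ann@example.com", mailer);

strictEqual(user.active, true);
mailer.verify(); // throws if the calls differ from the expectations

// A mock with unmet expectations fails the test.
const strictMailer = createMailerMock([]);
registerUser("bob@example.com", strictMailer);
throws(() => strictMailer.verify());
```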

The WHYs

WHY would you write tests?

must test our code somehow

(automated, of course)

spotting mistakes immediately

finding bugs quickly

knowledge transfer + documentation

WHY not just go "Cowboy"?

writing code that works by pure luck

test if it works manually, or just forget

ashamed when demoing, it "should" work!

unintentionally introduce regressions

MYTH: "TDD slows you down"

yes, it has a learning curve + needs practicing

yes, needs a completely different mindset

but gives you confidence + peace of mind

less pain, more gain! :)

it's all about TIME

fixing OR preventing a defect?

might take more time up-front (for a Dev)

reduces time later (for more people involved)

debugging time converges to zero

more time to create and experiment

WHY unit tests?

peace of mind: test and "forget"

unit tests document components

help devs understand how to use them

other kinds of tests have their own place (e2e, integration, smoke etc)

fast!

unit testing drives design

modular as opposed to monolithic

testable pieces of code

WHY test driven, test first?

helps you

understand what needs to be done

immediate feedback when not clear

write only the necessary code

requirements >>> automated tests

better code

easier to change

shortens turnaround time / release cycle

TDD improves design

remember

automated testing !== TDD

TDD === writing tests first

WHY break your test?

getting used to writing and relying on tests

is potentially dangerous

breaking tests helps to spot mistakes

made in your tests

WHY run tests in automation?

on each file save tests should run

we tend to forget running tests (manually)

so you can experiment quickly

and spot mistakes immediately (!)

it's all about TIME

remember

unit test suite must run in seconds

at most

WHY refactor your tests?

keep them tidy and fast

you will improve how you write tests over time

tests are code, keep them DRY

refactoring vs rewriting

rewriting is changing code

refactoring is keeping behaviour + improving code

remember

tests are your requirements

change them when requirements change

WHY test code in isolation?

components are LEGO(tm) blocks

all have a contract with the outside world

treat them as black boxes / 3rd party libs

test their behaviours

WHY integration tests?

we create functionality by composing components

components must play together nicely

are dependencies used as expected?

still "unit level"

WHY end-to-end tests?

user journeys defined in AC / stories

make sure they're complete

manual testing is tedious and error-prone

WHY team effort?

figuring out how to test something properly is sometimes difficult

discuss test cases with your tester!

before you start ;)

WHY avoid spying?

do not write tests against the implementation

writing tests AFTER might require it

spying tends to drive design in the wrong direction

it prevents refactoring
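To illustrate why testing behaviour keeps refactoring possible, here is a small sketch with a hypothetical `uniqueTags` requirement. The test only knows inputs and outputs, so it survives a change of implementation; a test that spied on internal `indexOf` calls would not.

```javascript
const { deepStrictEqual } = require("node:assert");

// Behaviour-focused test: it only knows the contract (input -> output).
function checkUniqueTags(uniqueTags) {
  deepStrictEqual(uniqueTags(["js", "css", "js"]), ["js", "css"]);
  deepStrictEqual(uniqueTags([]), []);
}

// First implementation.
function uniqueTagsWithFilter(tags) {
  return tags.filter((tag, index) => tags.indexOf(tag) === index);
}

// Refactored implementation. A test asserting that indexOf() was called
// would now go red, although the behaviour has not changed at all.
function uniqueTagsWithSet(tags) {
  return [...new Set(tags)];
}

checkUniqueTags(uniqueTagsWithFilter);
checkUniqueTags(uniqueTagsWithSet);
```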

WHY is it red sometimes?

because it is non-deterministic

unreliable dependencies

ok, but how to prevent it?

set up unique test context for each test case

run tests in isolation
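One way to give every test case its own context, sketched with a hypothetical `TodoList` unit and a `createContext` helper. The assumption is that shared state between test cases is the source of the flakiness.

```javascript
const { deepStrictEqual } = require("node:assert");

// Hypothetical unit under test.
class TodoList {
  constructor() {
    this.items = [];
  }
  add(title) {
    this.items.push(title);
    return this;
  }
  titles() {
    return [...this.items];
  }
}

// A fresh context per test case: no state leaks from one case into another.
function createContext() {
  return { todos: new TodoList() };
}

function testAddsAnItem() {
  const { todos } = createContext();
  deepStrictEqual(todos.add("write test").titles(), ["write test"]);
}

function testStartsEmpty() {
  const { todos } = createContext();
  deepStrictEqual(todos.titles(), []);
}

// The outcome is the same in any order, because nothing is shared.
testAddsAnItem();
testStartsEmpty();
testStartsEmpty();
testAddsAnItem();
```

Most test frameworks offer a "before each" hook for the same purpose; the helper function above only keeps the sketch framework-free.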

The HOWs

HOW to deal with bugs?

hunting down bugs is the most time-consuming part

when a bug is discovered

write test(s) to reproduce the bug, see them fail

fix production code, make it green
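The two steps can be sketched with an invented bug report: a hypothetical `averageRating` function returned `NaN` for an empty list. The function and the bug are assumptions made up for this example.

```javascript
const { strictEqual } = require("node:assert");

// Hypothetical bug report: averageRating([]) returned NaN instead of 0.
// Fixed production code. Before the fix it divided by ratings.length
// without checking for an empty list.
function averageRating(ratings) {
  if (ratings.length === 0) return 0;
  const sum = ratings.reduce((total, rating) => total + rating, 0);
  return sum / ratings.length;
}

// Step 1: this test reproduced the bug and was red before the fix.
strictEqual(averageRating([]), 0);

// Step 2: it is green now, and existing behaviour is still covered,
// so the fix cannot silently introduce a regression.
strictEqual(averageRating([4, 5, 3]), 4);
```

The reproducing test stays in the suite, so the same bug cannot come back unnoticed.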

HOW to do efficient unit testing?

automation and speed are key

least amount of tests + make them fast

run them as often as possible

HOW to deal with duplicates?

the bigger the team, the greater the chance of duplicates

why test something twice? code smell?

tidying up / refactoring is always encouraged!

but comprehensiveness has priority

HOW to write human readable tests?

good test cases

are easy to understand, even for non-devs

describe behaviours

give good examples of how to use a component

Bad example

Good example
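The original examples are not part of this text, so the following is only an illustrative sketch of the contrast, using a hypothetical `basketTotal` function and a tiny hand-written runner instead of a real test framework.

```javascript
const { strictEqual } = require("node:assert");

// Hypothetical unit under test: sums price * quantity over a basket.
function basketTotal(items) {
  return items.reduce((sum, item) => sum + item.price * item.quantity, 0);
}

// Bad: the name says nothing about behaviour and one case checks several things.
const badTests = {
  "test1": () => {
    strictEqual(basketTotal([]), 0);
    strictEqual(basketTotal([{ price: 2, quantity: 3 }]), 6);
  },
};

// Good: each name reads like a requirement and each case checks one behaviour.
const goodTests = {
  "an empty basket costs nothing": () => {
    strictEqual(basketTotal([]), 0);
  },
  "an item's price is multiplied by its quantity": () => {
    strictEqual(basketTotal([{ price: 2, quantity: 3 }]), 6);
  },
  "the total is the sum of all items": () => {
    const items = [{ price: 2, quantity: 3 }, { price: 5, quantity: 1 }];
    strictEqual(basketTotal(items), 11);
  },
};

// Minimal runner so the sketch needs no test framework.
function runAll(tests) {
  const passed = [];
  for (const [name, testCase] of Object.entries(tests)) {
    testCase();
    passed.push(name);
  }
  return passed;
}

runAll(badTests);
runAll(goodTests);
```

Read on their own, the names in `goodTests` already document how the component is meant to behave.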

HOW to deal with dependencies?

other code / component, database, remote service etc.

avoid coupling, inject dependencies

it's all about the contract between components

fake / stub / mock dependencies of the unit-under-test

3rd party libs

enclose 3rd parties into adapters

good test coverage

allows you to consider replacing / updating them

poor coverage

base jumping naked! :P

it's all about TIME

remember

don't let dependencies slow you (and your tests) down!
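Injecting a dependency through an adapter could look like the sketch below. `thirdPartyDateLib.format` is a made-up stand-in, not the API of any real library, and `invoiceHeader` is a hypothetical unit under test.

```javascript
const { strictEqual } = require("node:assert");

// Adapter: the only place that knows the 3rd party API.
// `thirdPartyDateLib.format` is a made-up stand-in, not a real library call.
function createDateAdapter(thirdPartyDateLib) {
  return {
    toIsoDate: (date) => thirdPartyDateLib.format(date, "YYYY-MM-DD"),
  };
}

// Unit under test: depends on the adapter's contract, injected from outside.
function invoiceHeader(invoice, dates) {
  return `Invoice ${invoice.number} (${dates.toIsoDate(invoice.issuedAt)})`;
}

// In the unit test the adapter is replaced with a stub: no library, no slowdown.
const stubDates = { toIsoDate: () => "2020-01-31" };

strictEqual(
  invoiceHeader({ number: 7, issuedAt: new Date(0) }, stubDates),
  "Invoice 7 (2020-01-31)"
);
```

Replacing or updating the library then only touches `createDateAdapter`, and the adapter's own tests show whether the new version still honours the contract.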

HOW to write just enough tests?

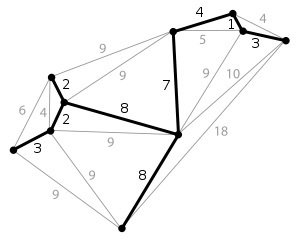

imagine the test suite as a Minimum Spanning Tree

nodes === requirements

edges === tests

translated to unit testing

find the minimal amount of tests

to cover a component and fulfil all requirements

possible multiplicity

there might be several spanning trees in a graph

there might be several ways to cover all requirements

there's no such thing as the "only" right solution

HOW to add coverage afterwards?

probably the most difficult thing in TDD

code written without tests is already LEGACY code

"code written without tests is BAD code, doesn't matter how well-written it is"

Michael Feathers

adding tests afterwards is

difficult because

you have to set aside the current implementation

to focus on real requirements

understand and write tests against them

whilst refactoring existing code

you will most likely

have to break dependencies

run into several difficulties

rewrite components instead of improving them

HOW can I still find the joy of crafting code?

we always start writing tests first in TDD

which is a challenge in itself :)

but implementing functionality also remains a challenge

it's about HOW you approach problems

try to find joy in

writing proper test cases

which all your peers can understand easily

writing just enough tests and still covering everything

refactoring your code with confidence you've never felt

focusing on cool stuff instead of hunting bugs :)

TDD is an ART

the more you practice it

the more you enjoy it

it's pretty much like learning how to ride a bicycle

first you need to learn balancing

and might need a helping hand

then you will try it out yourself

over time it will become an instinct

Recommended resources

Next session?

Creating HTML5 apps / pages via TDD

automated tests for HTML / CSS / JS