Content generation in TensorFlow

Martyn Garcia

Mandatory disclaimer

- not imagination

- deep networks

- unique content generation

Statistical models

HyperGAN

focused on scaling

https://github.com/255BITS/HyperGAN

Unsupervised learning

Supervised data for a dog dataset:

* each image labelled by breed

* hard, error-prone

Unsupervised data for a dog dataset:

* scrape reddit/r/dogpics

Sequence

- char-rnn

- sketch

- WaveNet

Real-valued

- autoencoder

- GAN

- VAE

- hybrid

TF Quiz

What type of layer does this function create?

tf.nn.xw_plus_b

Linear layer
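
To make the quiz answer concrete: `tf.nn.xw_plus_b(x, weights, biases)` computes `matmul(x, weights) + biases`. Below is a minimal pure-Python sketch of that arithmetic, not TensorFlow's implementation. The helper name mirrors the TensorFlow function only for readability, and the sample values are illustrative assumptions.

```python
def xw_plus_b(x, weights, biases):
    """Return x @ weights + biases for a batch of row vectors.

    x:       list of rows, shape [batch, n_in]
    weights: list of rows, shape [n_in, n_out]
    biases:  list of length n_out
    """
    n_out = len(biases)
    out = []
    for row in x:
        out.append([
            sum(row[i] * weights[i][j] for i in range(len(row))) + biases[j]
            for j in range(n_out)
        ])
    return out


# Illustrative values (assumptions): one input row, two inputs, two outputs.
x = [[1.0, 2.0]]
weights = [[1.0, 0.0],
           [0.0, 1.0]]
biases = [0.5, -0.5]
result = xw_plus_b(x, weights, biases)
print(result)
```

There is no nonlinearity here, which is why the layer is called linear.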

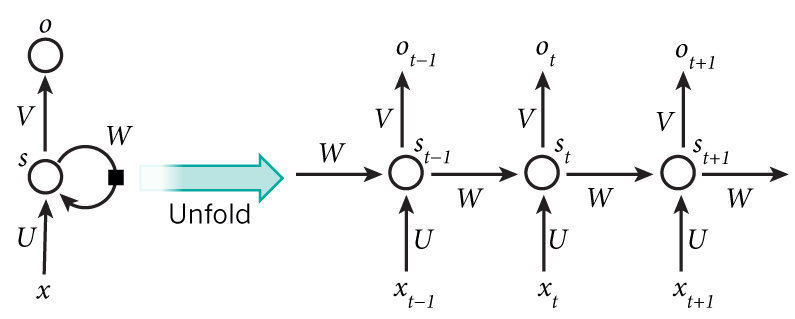

char-rnn

Character vector -> RNN -> Softmax

char-rnn

Character vectors example

| Character | Vector |
| --- | --- |
| a | (1 0 0) |
| b | (0 1 0) |
| c | (0 0 1) |

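x = np.eye(vocab)[char]  # vocab: vocabulary size, char: integer character index; picks one one-hot row

tf_x = tf.constant(x)  # wrap the NumPy array as a TensorFlow constant

sess.run(tf_x)  # evaluate it in an existing tf.Session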
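
The same one-hot encoding can be sketched without NumPy or TensorFlow. This is a minimal illustration, assuming a three-character vocabulary like the one above; `one_hot` and `char_to_index` are hypothetical helper names, not part of any library.

```python
def one_hot(index, vocab_size):
    """Return a list with 1.0 at position `index` and 0.0 elsewhere."""
    return [1.0 if i == index else 0.0 for i in range(vocab_size)]


# Assumed toy vocabulary matching the a/b/c example.
vocab = ["a", "b", "c"]
char_to_index = {ch: i for i, ch in enumerate(vocab)}

# Encode a short string as a sequence of character vectors.
encoded = [one_hot(char_to_index[ch], len(vocab)) for ch in "cab"]
print(encoded)
```

Each row is what `np.eye(vocab)[char]` would return for the matching index.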

char-rnn

RNN

tf.nn.rnn_cell.LSTMCell

tf.nn.rnn_cell.GRUCell
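
To show roughly what a recurrent cell does at each step, here is a scalar GRU update in plain Python. It follows one common formulation of the GRU; gate conventions differ between papers and libraries, so this is not a description of `GRUCell` internals. The parameter names in `params` are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gru_step(x, h, p):
    """One GRU update for a scalar input x and scalar state h.

    p is a dict of scalar parameters (hypothetical names).
    """
    z = sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])  # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h + p["br"])  # reset gate
    candidate = math.tanh(p["wh"] * x + p["uh"] * (r * h) + p["bh"])
    # Blend the old state with the candidate state.
    return (1.0 - z) * h + z * candidate


# All-zero parameters (an assumption for illustration): both gates sit at 0.5
# and the candidate is 0, so the state halves on every step.
params = {name: 0.0 for name in
          ("wz", "uz", "bz", "wr", "ur", "br", "wh", "uh", "bh")}
h = 1.0
for x in [0.3, -0.2, 0.8]:
    h = gru_step(x, h, params)
print(h)
```

The state `h` is the only thing carried between characters, which is what lets the network condition on earlier input.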

char-rnn

Softmax loss

net = rnn(input)

net = linear(net, vocab_size)

loss = tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=net)
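
For reference, the quantity that the softmax loss computes for a single example can be sketched in plain Python. This is a simplified illustration with assumed toy logits, not TensorFlow's implementation.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(logits, one_hot_labels):
    """Cross entropy between a one-hot label and softmax(logits)."""
    probs = softmax(logits)
    return -sum(y * math.log(p) for y, p in zip(one_hot_labels, probs))


# Assumed toy example: vocabulary of 3 characters, true character is index 0.
labels = [1.0, 0.0, 0.0]
loss = softmax_cross_entropy([2.0, 0.0, 0.0], labels)
uniform_loss = softmax_cross_entropy([0.0, 0.0, 0.0], labels)
print(loss, uniform_loss)
```

A confident correct prediction gives a lower loss than a uniform guess, whose loss is `log(vocab_size)`.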

char-rnn

Example output

char-rnn

Drawbacks:

- Sampling

- Invalid character combinations

- No higher-level meaning
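
Generating text means drawing one character at a time from the softmax output. A minimal sketch of that step follows, using temperature scaling, a common technique that the slides do not name and that is swapped in here as an assumption. `sample_index` is a hypothetical helper.

```python
import math
import random


def sample_index(logits, temperature=1.0, rng=random):
    """Draw an index from softmax(logits / temperature)."""
    scaled = [v / temperature for v in logits]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Inverse-CDF sampling over the categorical distribution.
    r = rng.random()
    cumulative = 0.0
    for i, p in enumerate(probs):
        cumulative += p
        if r < cumulative:
            return i
    return len(probs) - 1  # guard against floating-point round-off


rng = random.Random(0)          # fixed seed so the run is repeatable
vocab = ["a", "b", "c"]         # assumed toy vocabulary
logits = [5.0, 0.0, 0.0]        # assumed network output
samples = [vocab[sample_index(logits, temperature=0.1, rng=rng)]
           for _ in range(20)]
print("".join(samples))
```

Low temperatures concentrate on the most likely character; higher temperatures give more variety and more of the invalid combinations listed above.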

TF QUIZ

What is this called?

net = tf.maximum(0, net)

TF QUIZ

tf.nn.relu

sketch

https://github.com/hardmaru/sketch-rnn

https://arxiv.org/pdf/1308.0850v5.pdf

Stroke vector -> RNN -> Mixture Density Network
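
A mixture density network outputs the parameters of a mixture distribution instead of a single prediction, and is trained on the negative log-likelihood of the target. The sketch below uses a one-dimensional Gaussian mixture for brevity; this is a simplifying assumption, since stroke offsets are two-dimensional. The function names are hypothetical.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a 1-D normal distribution at x."""
    return (math.exp(-0.5 * ((x - mean) / std) ** 2)
            / (std * math.sqrt(2.0 * math.pi)))


def mixture_nll(x, weights, means, stds):
    """Negative log-likelihood of x under a Gaussian mixture."""
    likelihood = sum(w * gaussian_pdf(x, m, s)
                     for w, m, s in zip(weights, means, stds))
    return -math.log(likelihood)


# Assumed mixture parameters, as if produced by the network's last layer.
weights, means, stds = [0.5, 0.5], [-1.0, 1.0], [0.5, 0.5]
nll_near = mixture_nll(1.0, weights, means, stds)   # target on a mode
nll_far = mixture_nll(4.0, weights, means, stds)    # target far from both modes
print(nll_near, nll_far)
```

Targets near a mixture component get a low loss, so the network can represent several plausible next strokes at once.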

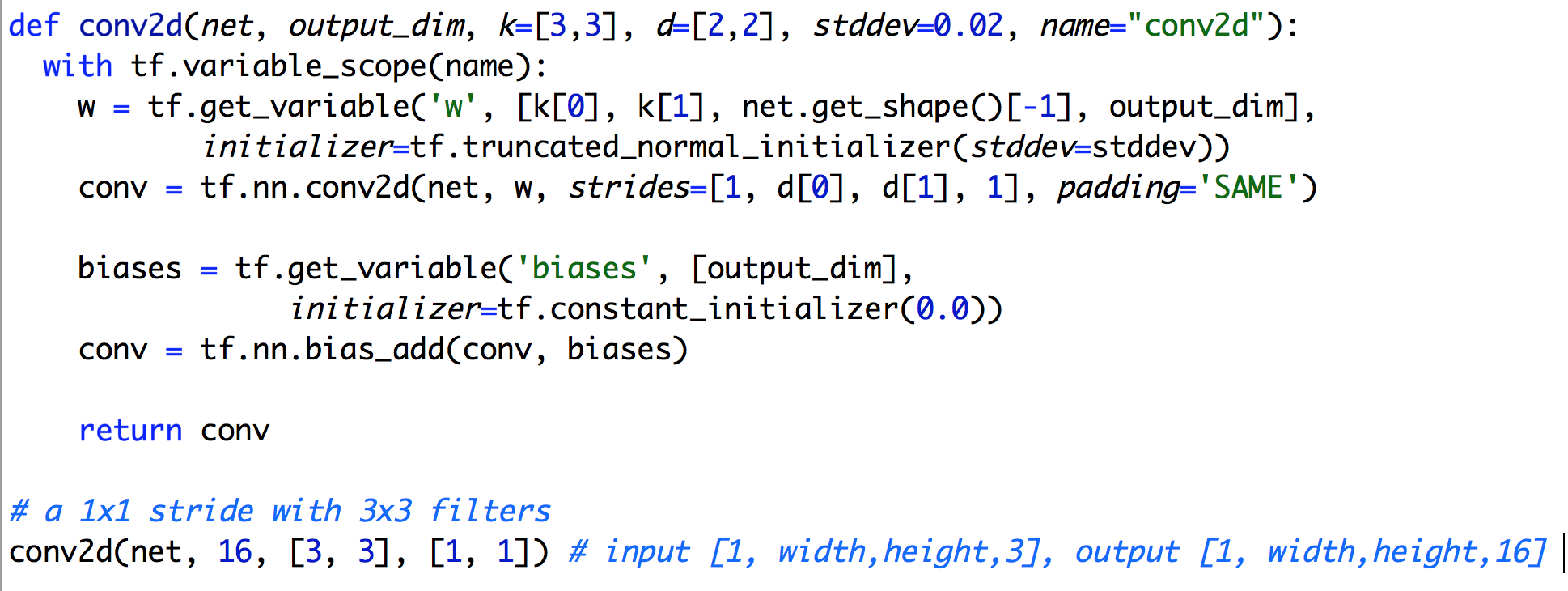

Conv

Locally connected layers with shared weights

https://github.com/vdumoulin/conv_arithmetic

tf.nn.conv2d
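
"Shared weights" means the same small kernel is applied at every position of the input. Here is a minimal single-channel sketch with no padding and stride 1, written in plain Python. It is not how `tf.nn.conv2d` is implemented, and the image and kernel values are assumptions.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1, no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Each output value only sees a local kh x kw patch,
            # and every patch reuses the same kernel weights.
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

A 3x3 input with a 2x2 kernel yields a 2x2 feature map using only four weights.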

TF QUIZ

What is this called?

net = tf.maximum(net, 0.2*net)

TF QUIZ

Leaky ReLU!

def lrelu(x, leak=0.2, name="lrelu"):
    return tf.maximum(x, leak*x)
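
The two quiz activations can be compared on plain numbers. This is a scalar sketch of the same formulas without TensorFlow; the input values are arbitrary.

```python
def relu(x):
    """Standard ReLU: negative inputs become 0."""
    return max(0.0, x)


def lrelu(x, leak=0.2):
    """Leaky ReLU: negative inputs are scaled by `leak` instead of zeroed."""
    return max(x, leak * x)


values = [-2.0, -0.5, 0.0, 1.5]
relu_out = [relu(v) for v in values]
lrelu_out = [lrelu(v) for v in values]
print(relu_out)
print(lrelu_out)
```

The two agree for non-negative inputs; only the leaky version passes a scaled signal through for negative ones.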

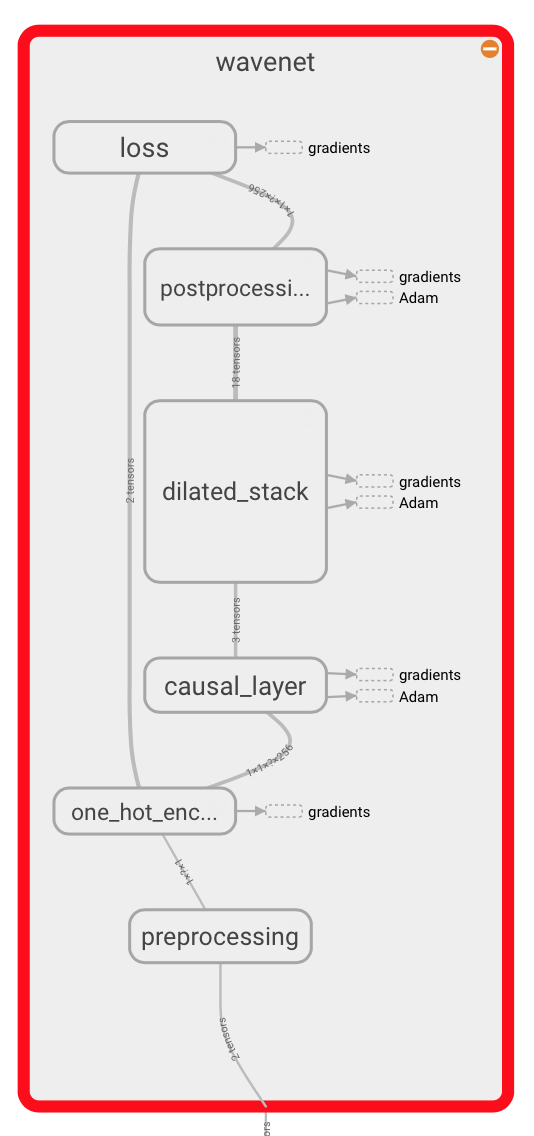

Wavenet

https://github.com/ibab/tensorflow-wavenet

Generates audio using a PixelCNN-inspired architecture.

Incredible results from Google DeepMind

https://deepmind.com/blog/wavenet-generative-model-raw-audio/
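
WaveNet is built from dilated causal convolutions: each output sample depends only on current and past samples, spaced `dilation` steps apart. Below is a minimal one-layer sketch in plain Python, with an assumed toy signal and kernel; it is not taken from the linked implementation.

```python
def causal_dilated_conv1d(signal, kernel, dilation):
    """out[t] = sum_k kernel[k] * signal[t - k * dilation], ignoring t < 0."""
    out = []
    for t in range(len(signal)):
        total = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:          # causal: never look at future samples
                total += w * signal[idx]
        out.append(total)
    return out


signal = [1.0, 2.0, 3.0, 4.0, 5.0]
y = causal_dilated_conv1d(signal, kernel=[1.0, 1.0], dilation=2)
print(y)
```

Stacking such layers with growing dilation widens the span of past audio each output can see, without adding weights per layer.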

Auto Encoder

x (64x64x3) -> Encoder -> z (128) -> Generator -> G(z) (64x64x3)

Loss: MSE(G(z), x)

MSE in TensorFlow: tf.reduce_mean(tf.square(G(z) - x))
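
The reconstruction loss is the mean of the squared per-element differences between the generator output and the input. A minimal sketch on flat lists, where the four-element "images" are an assumption made for brevity:

```python
def mse(reconstruction, target):
    """Mean squared error between two equally sized flat lists."""
    n = len(target)
    return sum((r - t) ** 2 for r, t in zip(reconstruction, target)) / n


x = [0.0, 0.5, 1.0, 0.25]      # assumed input pixels
g_z = [0.0, 0.5, 0.0, 0.25]    # assumed reconstruction G(z)
loss = mse(g_z, x)
print(loss)
```

A perfect reconstruction gives a loss of 0.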

Auto Encoder

Problem: This doesn't work well

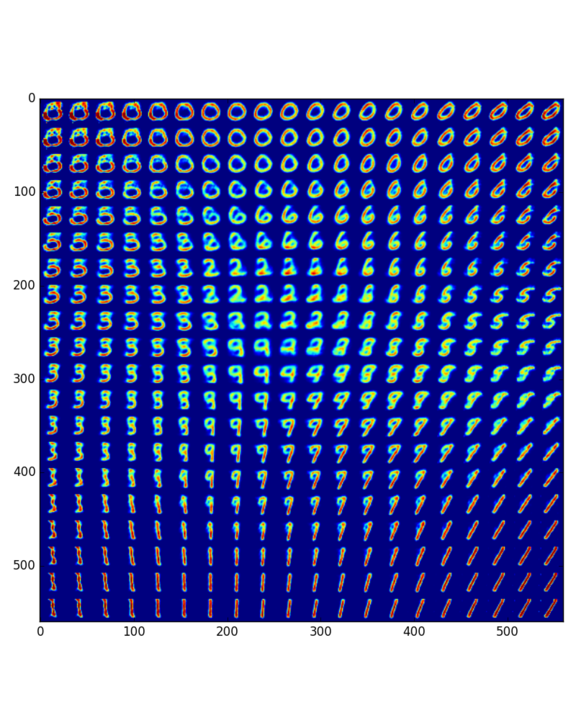

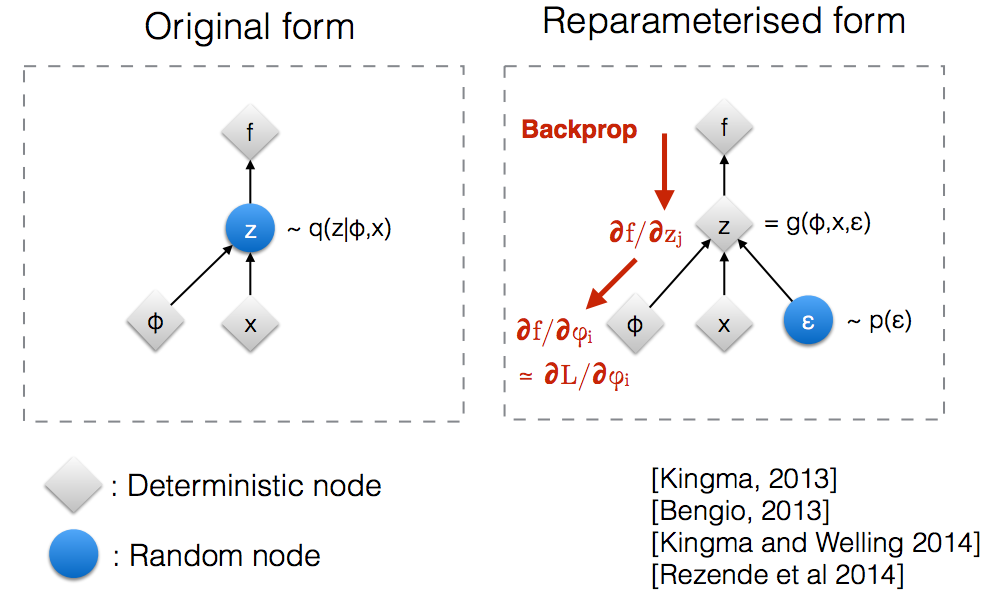

VAE

z = 2 (a two-dimensional latent vector)

http://github.com/255bits/hyperchamber

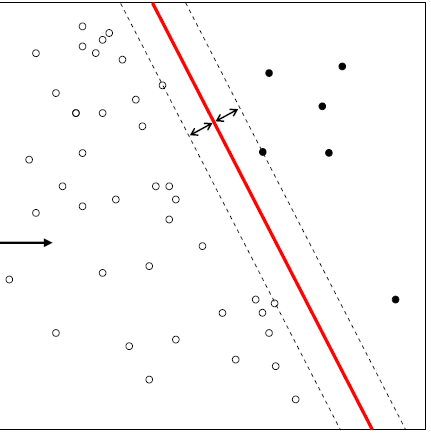
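
A VAE encoder outputs a mean and log-variance for z rather than z itself. The sketch below shows the two standard pieces, reparameterised sampling and the KL term against a standard normal, assuming a two-dimensional z. The function names are hypothetical and this is not taken from hyperchamber.

```python
import math
import random


def sample_z(mean, log_var, rng):
    """Reparameterisation: z = mean + std * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]


def kl_to_standard_normal(mean, log_var):
    """KL( N(mean, exp(log_var)) || N(0, 1) ), summed over dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mean, log_var))


rng = random.Random(0)
mean, log_var = [1.0, 0.0], [0.0, 0.0]   # assumed encoder outputs, 2-D z
z = sample_z(mean, log_var, rng)
kl = kl_to_standard_normal(mean, log_var)
print(z, kl)
```

The KL term is added to the reconstruction loss and pulls the encoded distribution toward the standard normal.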

GAN

z -> Generator -> G(z) -> Discriminator <- x

gloss: Xent(D(G(z)), 1)

dloss: Xent(D(x), 1) + Xent(D(G(z)), 0)
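
The two losses can be computed by hand for a single real/fake pair. This is a minimal sketch that treats the discriminator outputs as probabilities; the values 0.9 and 0.1 are assumptions, and `xent` here is a plain binary cross entropy.

```python
import math


def xent(prob, label, eps=1e-12):
    """Binary cross entropy between a predicted probability and a 0/1 label."""
    prob = min(max(prob, eps), 1.0 - eps)   # avoid log(0)
    return -(label * math.log(prob) + (1.0 - label) * math.log(1.0 - prob))


def gan_losses(d_real, d_fake):
    """d_real = D(x), d_fake = D(G(z)), both in (0, 1)."""
    g_loss = xent(d_fake, 1.0)                      # generator wants D(G(z)) -> 1
    d_loss = xent(d_real, 1.0) + xent(d_fake, 0.0)  # discriminator wants D(x) -> 1, D(G(z)) -> 0
    return g_loss, d_loss


# Assumed discriminator outputs: confident on the real image, not fooled by the fake.
g_loss, d_loss = gan_losses(d_real=0.9, d_fake=0.1)
print(g_loss, d_loss)
```

When the discriminator is winning, as here, its loss is small and the generator's loss is large; training alternates updates to push each one down.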

GAN

"This, and the variations that are now being proposed is the most interesting idea in the last 10 years in ML, in my opinion."

Yann LeCun, https://www.quora.com/What-are-some-recent-and-potentially-upcoming-breakthroughs-in-deep-learning

Other techniques

- Real NVP (invertible generator)

- Pixel RNN / CNN

- Image generation from caption

- Super Resolution

- Machine Translation

- Auto-captioning images

- Exciting papers constantly being released

- Not a comprehensive list

HyperGAN