Airflow

Agenda

- Get to know what Airflow is

- Get a better understanding of when to use it

- Case study: how we did it at R**

- It started at Airbnb in October 2014.

- It became a Top-Level Apache Software Foundation project in January 2019.

Start your first airflow pipeline

    export AIRFLOW_HOME=~/airflow
    pip install apache-airflow
    # You may need to `pip install werkzeug==0.16.0` first
    airflow initdb    # on Airflow 2.x the command is `airflow db init`
    airflow webserver -p 8080

- Web server

- Scheduler

- Worker

Concept

- DAG

- Task

- Operator

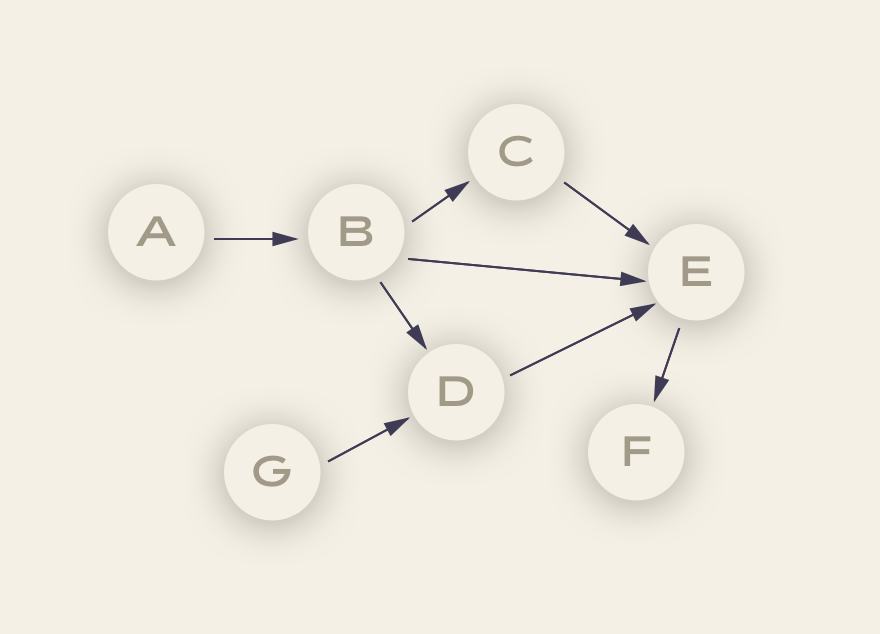

DAG

- Directed Acyclic Graph

- A collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies.

- Defined in a Python script

A DAG run is a physical instance of a DAG, containing task instances that run for a specific execution_date.

DAG

Directed - If multiple tasks exist, each must have at least one defined upstream (previous) or downstream (subsequent) task, although it could easily have both.

Acyclic - No task can create data that goes on to reference itself. This could cause an infinite loop.

Graph - All tasks are laid out in a clear structure with discrete processes occurring at set points and clear relationships made to other tasks.
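The "acyclic" rule can be tried out without Airflow. The sketch below uses Python's standard `graphlib` module (Python 3.9+), not Airflow's own scheduler code, and the task names are made up for illustration.

```python
from graphlib import CycleError, TopologicalSorter

# Map each task to the set of tasks it depends on (its upstream tasks).
# Task names are hypothetical.
valid_dag = {
    "transform": {"extract"},
    "load": {"transform"},
}

# A valid DAG always has at least one order that respects every dependency.
order = list(TopologicalSorter(valid_dag).static_order())
print(order)

# Making "extract" depend on "load" closes a loop, so no valid order exists.
cyclic = {**valid_dag, "extract": {"load"}}


def has_cycle(graph):
    """Return True if the dependency graph contains a cycle."""
    try:
        list(TopologicalSorter(graph).static_order())
    except CycleError:
        return True
    return False


print(has_cycle(valid_dag), has_cycle(cyclic))
```

A graph with a cycle has no starting point that satisfies all dependencies, which is why a scheduler cannot run it.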

Task

- Defines a unit of work within a DAG.

- Represented as a node in the DAG graph.

- Implements an operator by defining specific values for that operator.

Operator

- Determines what actually gets done by a task.

- BashOperator

- EmailOperator

- SimpleHttpOperator

- Sensor - an Operator that waits (polls) for a certain time, file, database row, S3 key, etc…
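The idea behind a sensor is a polling loop. The sketch below is plain Python, not Airflow's sensor API: `wait_for` and `file_has_arrived` are hypothetical names, and the parameters only echo the `poke_interval` and `timeout` settings that Airflow sensors expose.

```python
import time


def wait_for(condition, poke_interval=1.0, timeout=10.0):
    """Poll `condition` until it returns True or `timeout` seconds pass.

    Hypothetical helper that mimics the idea of a sensor.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poke_interval)


# Example condition: becomes true on the third check.
calls = {"count": 0}


def file_has_arrived():
    calls["count"] += 1
    return calls["count"] >= 3


result = wait_for(file_has_arrived, poke_interval=0.01, timeout=5.0)
print(result, calls["count"])
```

Downstream tasks only start once the condition is met, so a sensor works as a gate in front of them.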

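    # Airflow 2.x import paths
    from datetime import datetime
    from airflow import DAG
    from airflow.operators.bash import BashOperator
    from airflow.operators.python import PythonOperator

    with DAG('my_dag', start_date=datetime(2016, 1, 1)) as dag:
        task_1 = PythonOperator(task_id='task_1', python_callable=lambda: print('hello'))
        task_2 = BashOperator(task_id='task_2', bash_command='echo done')
        task_1 >> task_2  # Define dependencies: task_1 runs before task_2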
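The `>>` syntax is ordinary Python operator overloading. The toy class below is a sketch of that idea only; `Task` here is a made-up stand-in, not Airflow's operator class.

```python
class Task:
    """Toy stand-in for an Airflow task; not the real operator class."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []

    def __rshift__(self, other):
        # `a >> b` records b as a downstream task of a.
        self.downstream.append(other)
        # Returning `other` lets chains like a >> b >> c work left to right.
        return other


extract = Task("extract")
transform = Task("transform")
load = Task("load")

extract >> transform >> load

print([t.task_id for t in extract.downstream])
print([t.task_id for t in transform.downstream])
```

Because each `>>` returns its right-hand side, a chain reads in execution order.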

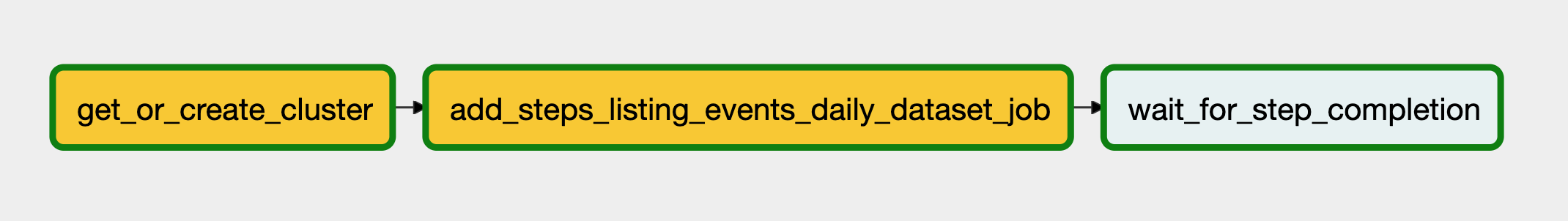

TaskGroups (replacing SubDAGs)

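    # Airflow 2.x import paths; run this inside a `with DAG(...)` block
    from airflow.operators.dummy import DummyOperator
    from airflow.utils.task_group import TaskGroup

    with TaskGroup("group1") as group1:
        task1 = DummyOperator(task_id="task1")
        task2 = DummyOperator(task_id="task2")

    task3 = DummyOperator(task_id="task3")

    group1 >> task3  # task3 runs after every task in group1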

Now let's talk about something unique here

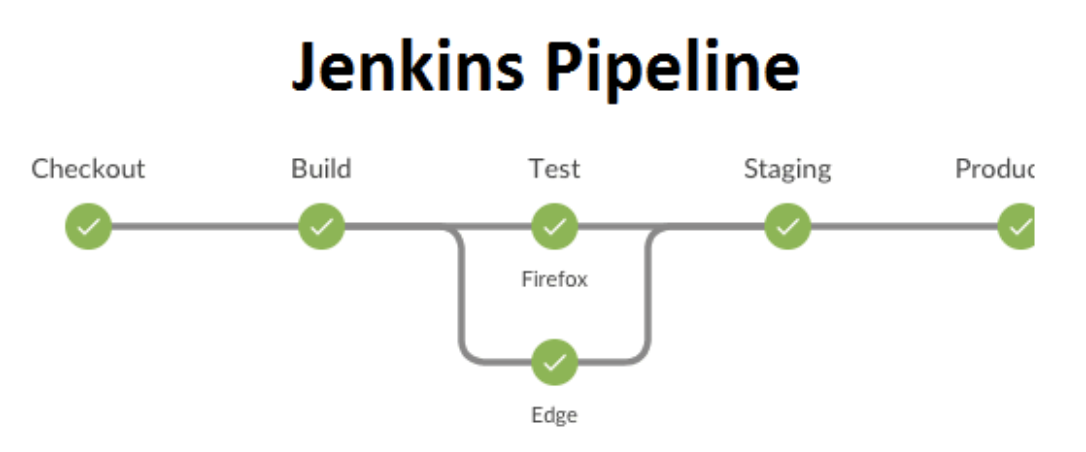

Is that a CI tool?

| Airflow | CI tool |
| --- | --- |
| Scheduler | Master |
| Worker | Slave/Client |
| Web Server | Web Server |

| Airflow | CI tool |
| --- | --- |
| plugin | plugin |
| logging | logging |
| monitoring | monitoring |
| visualizing | visualizing |

Airflow vs. CI tool: what's the difference?

| Airflow | CI tool |
| --- | --- |
| Focus on DATA. | Focus on CODE. |
| For a scheduled pipeline, executing on different days will have different results. | For a scheduled pipeline, its output will be the same no matter when we run it. |
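"Focus on DATA" can be shown with a tiny sketch: the code stays the same, but the date each run is stamped with selects different input. The bucket name and path layout are hypothetical.

```python
from datetime import date


def input_path(execution_date):
    """Build the (hypothetical) storage path holding one day's data."""
    return f"s3://example-bucket/events/dt={execution_date.isoformat()}/"


# The code is identical for every run; only the date, and so the data, changes.
first_run = input_path(date(2021, 3, 1))
second_run = input_path(date(2021, 3, 2))
print(first_run)
print(second_run)
```

A CI build of the same commit should produce the same artifact on either day; a data pipeline run like this one is expected to differ.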

| Airflow | CI tool |
| --- | --- |
| Manages varied groups of tasks. | Manages a list of shell commands. |

What Airflow adds:

- Abstraction

- Modularity

- Reusability

- Extensibility

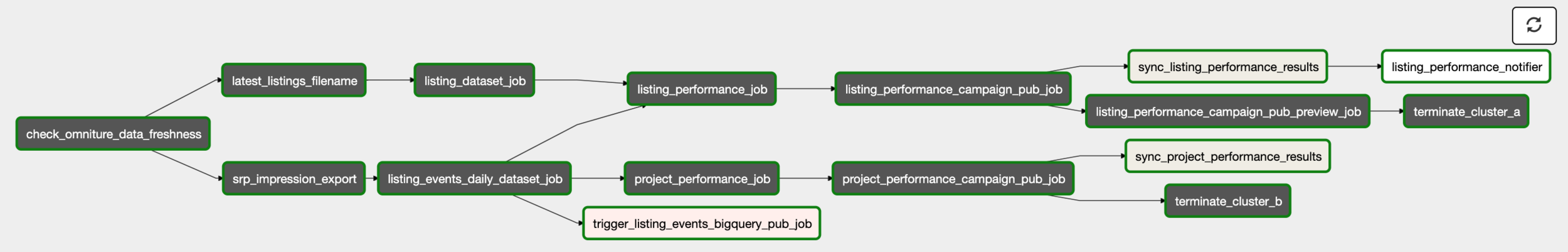

Airflow in R**(AU)

How to use Airflow more efficiently (using R as an example)

/data-platform/airflow

/data-platform/airflow-rea-common

- Shared Python library for Airflow DAGs

As fragile as global CSS

- Python dev?

- Focus on data makes it hard to test locally

- You may break the whole Airflow deployment

/data-platform/bg-ingestor

/data-management/airflow-audmax

/data-platform/breeze

Improvement Areas

Friendlier concepts

- execution_date - can we stop the confusion?
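Where the confusion comes from, as a sketch: a scheduled run is stamped with the start of the interval it covers and is only triggered once that interval has ended. The helper name `earliest_start` is hypothetical, and the arithmetic assumes a fixed `timedelta` schedule.

```python
from datetime import datetime, timedelta


def earliest_start(execution_date, schedule_interval):
    """Return the earliest moment a scheduled run can start.

    The run covers the interval that begins at `execution_date` and is
    triggered only after that interval has ended.
    """
    return execution_date + schedule_interval


execution_date = datetime(2021, 3, 1)
started_after = earliest_start(execution_date, timedelta(days=1))
print(execution_date.date(), "->", started_after.date())
```

So a daily run labelled 1 March does not start until 2 March, which is what surprises most newcomers.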

Real event-based triggering

- Time-based trigger based on a cron schedule

- On-demand/ad-hoc trigger using APIs.

- Using TriggerDagRunOperator

- ?
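For the on-demand option, a minimal sketch of an API trigger, assuming the Airflow 2.x stable REST API (`POST /api/v1/dags/{dag_id}/dagRuns`). The host, DAG id, and `conf` payload are hypothetical, and the request is only built, not sent.

```python
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf):
    """Build (but do not send) a request that asks Airflow to start a DAG run."""
    url = f"{base_url}/api/v1/dags/{dag_id}/dagRuns"
    body = json.dumps({"conf": conf}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical host, DAG id, and payload.
request = build_trigger_request(
    "http://localhost:8080", "my_dag", {"source_file": "events.csv"}
)
print(request.get_method(), request.full_url)
# Sending it would be urllib.request.urlopen(request), plus authentication.
```

An external system still has to make this call itself, which is why it counts as on-demand rather than truly event-based triggering.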

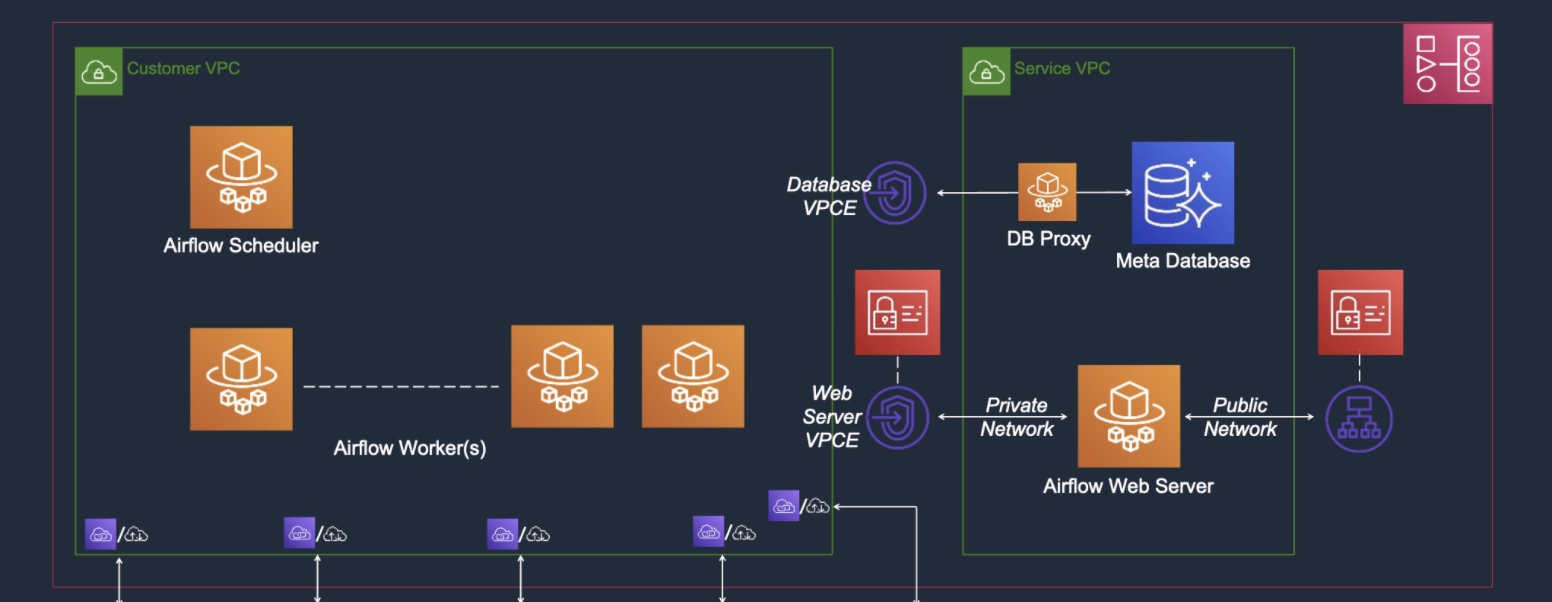

Airflow in the Cloud

MWAA on AWS

Amazon Managed Workflows for Apache Airflow (MWAA), available since November 2020