RESILIENCE PATTERNS

USING RESILIENCE4J

What is resilience?

AWS: “The capability to recover when stressed by load (more requests for service), attacks (either accidental through a bug, or deliberate through intention), and failure of any component in the workload’s components.”

Ability to handle unexpected errors and failures, recover from those failures, and maintain a consistent level of performance despite external challenges.

The main goal is to build robust components that can tolerate faults within their scope.

The more components a system has, the more likely it is that something will fail.
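A quick calculation shows why. Assume a request needs all n components to be up, that components fail independently, and that each one is available with the same probability a. The whole chain is then available with probability a^n. The sketch below uses an assumed per-component availability of 99.9%, which is an illustrative number and not a measurement.

```java
// Sketch: availability of a serial chain of independent components.
// Assumes every component must be up for a request to succeed (no redundancy).
final class SerialAvailability {

    // Probability that all n components are up at the same time: a^n
    static double chainAvailability(double componentAvailability, int components) {
        if (componentAvailability < 0.0 || componentAvailability > 1.0) {
            throw new IllegalArgumentException("availability must be in [0, 1]");
        }
        if (components < 0) {
            throw new IllegalArgumentException("components must be >= 0");
        }
        return Math.pow(componentAvailability, components);
    }

    public static void main(String[] args) {
        // Illustrative (assumed) per-component availability of 99.9%
        for (int n : new int[] {1, 10, 100}) {
            System.out.printf("%3d components -> %.4f%n", n, chainAvailability(0.999, n));
        }
    }
}
```

Under these assumptions, 10 components give roughly 99.0% and 100 components roughly 90.5%, even though each component on its own looks highly available.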

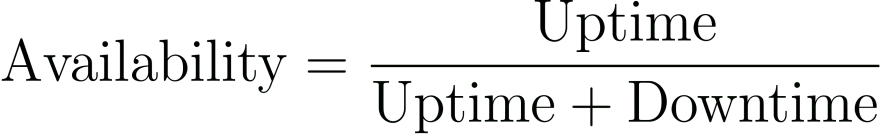

# AVAILABILITY


Availability expresses the amount of time a component is actually available, compared to the amount of time it is supposed to be available.

High availability is a characteristic of a system that aims to ensure that the system is continuously operational and able to provide its services to users without interruption.

Traditional approaches aim to increase uptime, while modern approaches aim to reduce recovery time (downtime).
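A common way to make this trade-off concrete is the steady-state formula A = MTTF / (MTTF + MTTR), where MTTF is the mean time to failure and MTTR the mean time to recovery. The sketch below plugs in made-up hour values: making failures ten times rarer and recovering ten times faster yield the same availability, which is why shortening recovery is an equally valid lever.

```java
// Sketch: steady-state availability A = MTTF / (MTTF + MTTR).
// The hour values in main are illustrative assumptions, not measurements.
final class AvailabilityMath {

    static double availability(double mttfHours, double mttrHours) {
        if (mttfHours <= 0 || mttrHours < 0) {
            throw new IllegalArgumentException("MTTF must be > 0 and MTTR >= 0");
        }
        return mttfHours / (mttfHours + mttrHours);
    }

    public static void main(String[] args) {
        // Baseline: one failure every 1000 h on average, 10 h to recover
        System.out.printf("baseline:         %.5f%n", availability(1000, 10));
        // Traditional approach: make failures 10x rarer (increase uptime)
        System.out.printf("10x longer MTTF:  %.5f%n", availability(10000, 10));
        // Modern approach: recover 10x faster (reduce downtime)
        System.out.printf("10x shorter MTTR: %.5f%n", availability(1000, 1));
    }
}
```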

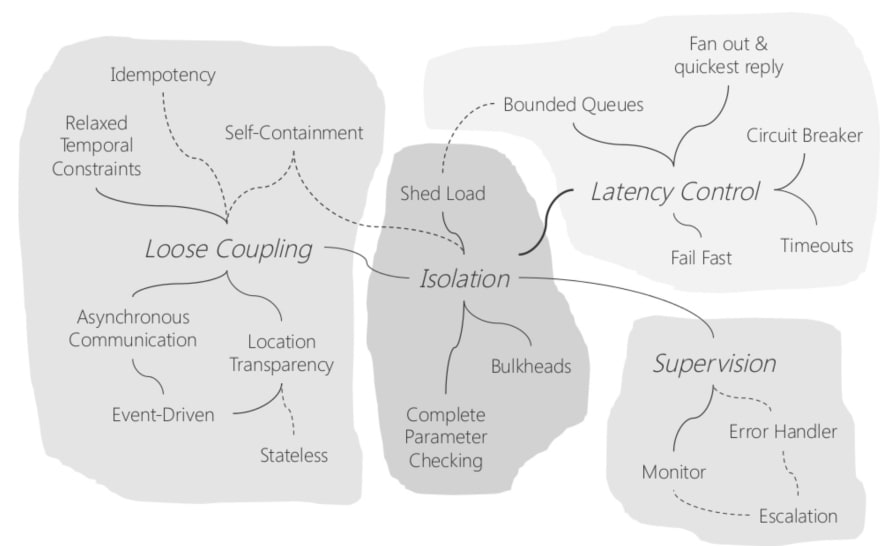

Categorization of resilience design patterns by Uwe Friedrichsen

# RESILIENCE4J

Resilience4j is a lightweight fault tolerance library designed for functional programming.

This library was inspired by Netflix Hystrix.

1. Circuit Breaker

2. Rate Limiter

3. Retry

4. Bulkhead

5. Time Limiter

# PRESENTING CODE

| Hystrix | Resilience4j |
|---|---|
| uses a thread-per-request model | uses a more lightweight and modular approach |
| integrates with several other libraries and frameworks, such as Spring and Netflix OSS | designed to be more standalone |
| includes a dashboard for monitoring the health and performance of your system | does not have a built-in dashboard but can be used with other monitoring tools |

Key differences between Resilience4j and Hystrix:

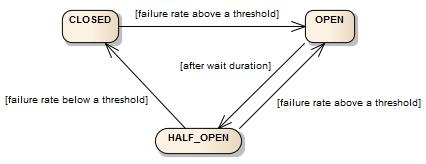

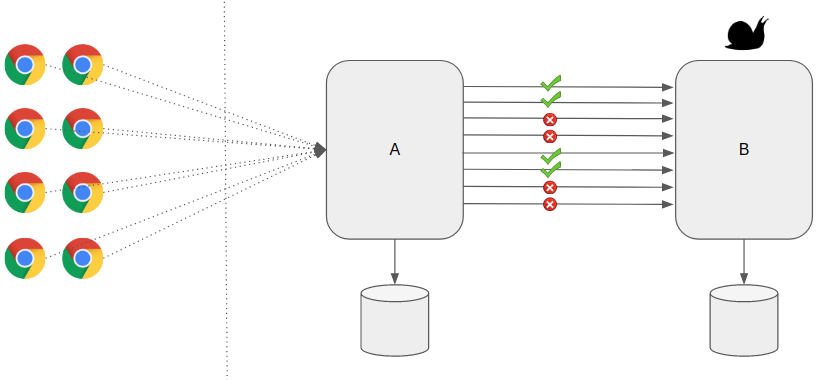

Circuit Breaker

The idea of a circuit breaker is to prevent calls to a remote service when we know the call is likely to fail or time out. This way, we don’t waste critical resources in either our service or the remote service.

When a service in the microservice ecosystem keeps failing, callers should fail fast by opening the circuit: no further calls are made to the failing service, and an exception is returned immediately instead.

Two types of circuit breaker, depending on the sliding window used to compute the failure rate:

1. count-based (failure rate over the last N calls)

2. time-based (failure rate over the calls made in the last N seconds)

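A circuit breaker has 3 states:

- Closed: calls pass through to the remote service and their outcomes are recorded

- Open: calls are rejected immediately (fail fast) until a configured wait duration has elapsed

- Half-Open: a limited number of trial calls are let through; if their failure rate stays below the threshold the breaker closes again, otherwise it opens again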
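The transitions between the three states can be sketched as a small state machine. The class below is a simplified, single-threaded model of a count-based breaker, written only to illustrate the idea: the class name, the injected clock and the constructor parameters (window size, failure-rate threshold in percent, wait duration in the open state, number of trial calls in half-open) are hypothetical and do not reproduce Resilience4j's code or API.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Simplified, single-threaded sketch of a count-based circuit breaker.
// Hypothetical class for illustration; this is not how Resilience4j implements it.
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int windowSize;              // CLOSED: evaluate the last N calls
    private final double failureRateThreshold; // percent; at or above it the breaker opens
    private final long openWaitMillis;         // time spent OPEN before trial calls
    private final int halfOpenCalls;           // trial calls evaluated in HALF_OPEN
    private final LongSupplier clock;          // injected so time can be simulated

    private final Deque<Boolean> outcomes = new ArrayDeque<>(); // true = failed call
    private State state = State.CLOSED;
    private long openedAt;

    SimpleCircuitBreaker(int windowSize, double failureRateThreshold,
                         long openWaitMillis, int halfOpenCalls, LongSupplier clock) {
        this.windowSize = windowSize;
        this.failureRateThreshold = failureRateThreshold;
        this.openWaitMillis = openWaitMillis;
        this.halfOpenCalls = halfOpenCalls;
        this.clock = clock;
    }

    State state() {
        // OPEN -> HALF_OPEN once the wait duration has elapsed
        if (state == State.OPEN && clock.getAsLong() - openedAt >= openWaitMillis) {
            transitionTo(State.HALF_OPEN);
        }
        return state;
    }

    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (state() == State.OPEN) {
            return fallback.get(); // fail fast: the remote call is not attempted
        }
        try {
            T result = action.get();
            record(false);
            return result;
        } catch (RuntimeException e) {
            record(true);
            return fallback.get();
        }
    }

    private void record(boolean failed) {
        outcomes.addLast(failed);
        if (state == State.CLOSED && outcomes.size() > windowSize) {
            outcomes.removeFirst(); // sliding window: keep only the last N outcomes
        }
        int needed = (state == State.HALF_OPEN) ? halfOpenCalls : windowSize;
        if (outcomes.size() < needed) {
            return; // not enough calls recorded yet to judge
        }
        long failures = outcomes.stream().filter(f -> f).count();
        double failureRate = 100.0 * failures / outcomes.size();
        if (failureRate >= failureRateThreshold) {
            transitionTo(State.OPEN);   // CLOSED or HALF_OPEN -> OPEN
        } else if (state == State.HALF_OPEN) {
            transitionTo(State.CLOSED); // trial calls healthy -> CLOSED
        }
    }

    private void transitionTo(State next) {
        state = next;
        outcomes.clear();
        if (next == State.OPEN) {
            openedAt = clock.getAsLong();
        }
    }

    public static void main(String[] args) {
        long[] now = {0}; // simulated clock in milliseconds
        SimpleCircuitBreaker cb = new SimpleCircuitBreaker(4, 50.0, 30_000, 2, () -> now[0]);
        Supplier<String> failing = () -> { throw new IllegalStateException("remote error"); };
        for (int i = 0; i < 4; i++) {
            cb.call(failing, () -> "fallback");
        }
        System.out.println(cb.state()); // OPEN
        now[0] += 30_000;
        System.out.println(cb.state()); // HALF_OPEN
        cb.call(() -> "ok", () -> "fallback");
        cb.call(() -> "ok", () -> "fallback");
        System.out.println(cb.state()); // CLOSED
    }
}
```

Injecting the clock lets the open-state wait be simulated instead of slept. A production breaker would also need thread safety and, typically, a minimum number of calls before the failure rate is evaluated.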


# CIRCUIT BREAKER

When not to use a circuit breaker?

- when the system has low traffic

- when the system is experiencing non-intermittent failures

- when the system is designed to handle failures

- when the system can't afford downtime

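# SERVICE

// @CircuitBreaker: io.github.resilience4j.circuitbreaker.annotation.CircuitBreaker
@Override
@CircuitBreaker(name = "circuitBreakerApi", fallbackMethod = "fallback")
public String getCircuitBreaker() throws Exception {
    System.out.println("Hello World");
    // Always throw, so the call is recorded as a failure and the fallback method is triggered
    throw new Exception("Error");
}

// Fallback: invoked when the call fails or when the open circuit rejects it.
// It must have the same return type, with the exception as its last parameter.
public String fallback(Throwable t) {
    System.out.println("Fallback");
    return "Sorry ... Service not available!!!";
}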

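# CONFIGURATION

Circuit Breaker configuration

resilience4j.circuitbreaker.instances.circuitBreakerApi.registerHealthIndicator=true
resilience4j.circuitbreaker.instances.circuitBreakerApi.slidingWindowSize=44
resilience4j.circuitbreaker.instances.circuitBreakerApi.permittedNumberOfCallsInHalfOpenState=2
resilience4j.circuitbreaker.instances.circuitBreakerApi.waitDurationInOpenState=30s
resilience4j.circuitbreaker.instances.circuitBreakerApi.failureRateThreshold=60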

Rate Limiter

Rate limiting is a technique used to control the rate at which a service or system processes requests in order to prevent it from being overwhelmed or degraded.

It can be used to maintain performance and stability, and ensure that resources are used efficiently.

Incoming requests are analyzed and compared to a set of rules, and only a certain number of requests are allowed to pass through per unit of time.
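One simple way to allow only a certain number of requests per unit of time is a fixed window: time is cut into refresh periods, and each period starts with a fresh budget of permits. Resilience4j's default rate limiter also works in refresh cycles, but the class below is a hypothetical, single-threaded illustration rather than its code. A caller that finds no permit left is rejected immediately instead of waiting, which corresponds to a timeout of zero; the values in `main` (5 permits per 60 s) mirror the rate limiter configuration in this section.

```java
import java.util.function.LongSupplier;

// Simplified fixed-window rate limiter (single-threaded sketch).
// Hypothetical class for illustration, not the Resilience4j implementation.
final class FixedWindowRateLimiter {
    private final int limitForPeriod;       // permits available per refresh period
    private final long refreshPeriodMillis; // length of one period
    private final LongSupplier clock;       // injected so time can be simulated

    private long currentCycle = -1;
    private int permitsLeft;

    FixedWindowRateLimiter(int limitForPeriod, long refreshPeriodMillis, LongSupplier clock) {
        if (limitForPeriod <= 0 || refreshPeriodMillis <= 0) {
            throw new IllegalArgumentException("limit and period must be positive");
        }
        this.limitForPeriod = limitForPeriod;
        this.refreshPeriodMillis = refreshPeriodMillis;
        this.clock = clock;
    }

    // Returns true if the request may proceed, false if it must be rejected now.
    boolean tryAcquire() {
        long cycle = clock.getAsLong() / refreshPeriodMillis;
        if (cycle != currentCycle) {
            currentCycle = cycle;         // a new period has started:
            permitsLeft = limitForPeriod; // refill the budget
        }
        if (permitsLeft > 0) {
            permitsLeft--;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        long[] now = {0}; // simulated clock in milliseconds
        FixedWindowRateLimiter limiter = new FixedWindowRateLimiter(5, 60_000, () -> now[0]);
        int allowed = 0;
        for (int i = 0; i < 8; i++) {
            if (limiter.tryAcquire()) allowed++;
        }
        System.out.println("allowed in first period: " + allowed); // 5
        now[0] += 60_000;
        System.out.println("after refresh: " + limiter.tryAcquire()); // true
    }
}
```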

# RATE LIMITER

When to use a rate limiter?

- when the system is experiencing high traffic

- when the system has limited resources

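# CONFIGURATION

Rate Limiter configuration

resilience4j.ratelimiter.metrics.enabled=true
resilience4j.ratelimiter.instances.rateLimiterApi.register-health-indicator=true
resilience4j.ratelimiter.instances.rateLimiterApi.limit-for-period=5
resilience4j.ratelimiter.instances.rateLimiterApi.limit-refresh-period=60s
resilience4j.ratelimiter.instances.rateLimiterApi.timeout-duration=0s
resilience4j.ratelimiter.instances.rateLimiterApi.allow-health-indicator-to-fail=true
resilience4j.ratelimiter.instances.rateLimiterApi.subscribe-for-events=true
resilience4j.ratelimiter.instances.rateLimiterApi.event-consumer-buffer-size=50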

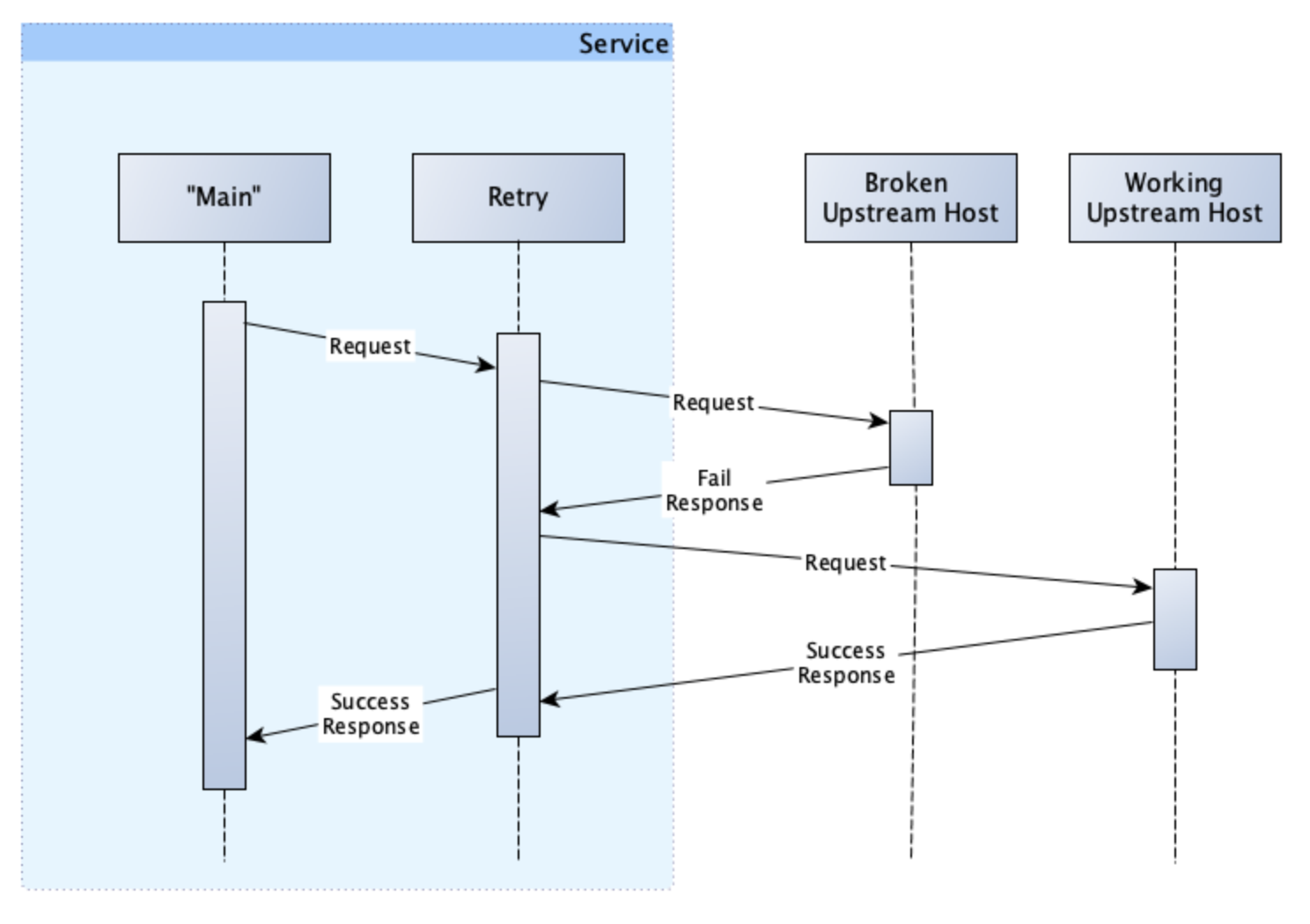

Retry

Retry enables an application to handle transient failures when it tries to connect to a service, by transparently retrying the failed operation.

This minimizes the effects faults can have on the business tasks the application is performing.

Strategies:

- Cancel

- Retry

- Retry after delay
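The "retry after delay" strategy is often combined with exponential backoff, where each wait is longer than the previous one. The helper below is a hypothetical sketch using only the standard library, not Resilience4j's API: it retries up to a maximum number of attempts (the first call included), multiplies the wait by a fixed factor after each failure, and gives up at once on faults that a caller-supplied predicate does not classify as transient. With 3 attempts, a 2 s initial wait and a multiplier of 2, the values in this section's retry configuration, the waits would be 2 s and then 4 s. The sleep function is injected so the delays can be recorded instead of actually slept.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.LongConsumer;
import java.util.function.Predicate;
import java.util.function.Supplier;

// Sketch of retry with exponential backoff (hypothetical helper, not Resilience4j's API).
final class BackoffRetry {

    static <T> T execute(Supplier<T> operation,
                         int maxAttempts,                         // total attempts, first call included
                         long initialWaitMillis,                  // wait before the 2nd attempt
                         double multiplier,                       // growth factor for each further wait
                         Predicate<RuntimeException> isTransient, // only these failures are retried
                         LongConsumer sleeper) {                  // injected so tests need not really sleep
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        double wait = initialWaitMillis;
        for (int attempt = 1; ; attempt++) {
            try {
                return operation.get();
            } catch (RuntimeException e) {
                // Give up on non-transient faults or when the attempts are exhausted
                if (!isTransient.test(e) || attempt >= maxAttempts) {
                    throw e;
                }
                sleeper.accept(Math.round(wait));
                wait *= multiplier;
            }
        }
    }

    public static void main(String[] args) {
        List<Long> waits = new ArrayList<>();
        int[] calls = {0};
        String result = execute(() -> {
            if (++calls[0] < 3) throw new IllegalStateException("transient");
            return "ok";
        }, 3, 2_000, 2.0, e -> e instanceof IllegalStateException, ms -> waits.add(ms));
        System.out.println(result + " after " + calls[0] + " calls, waits " + waits);
    }
}
```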

# RETRY

When not to use retry?

- when a fault is likely to be long-lasting

- for handling failures that aren't due to transient faults (internal exceptions, errors in business logic)


# CONFIGURATION

Retry configuration

#path=resilience4j.retry.instances.retryApi

path.max-attempts=3

path.wait-duration=2s

path.enable-exponential-backoff=true

path.exponentialBackoffMultiplier=2

resilience4j.retry.metrics.legacy.enabled=true

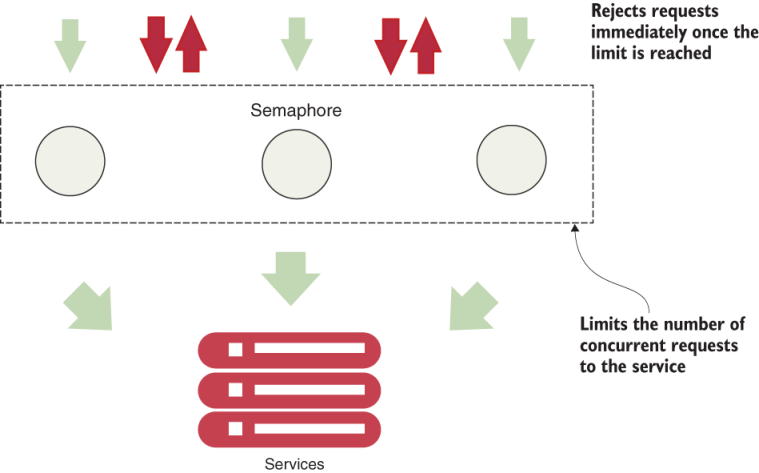

resilience4j.retry.metrics.enabled=trueBulkhead

In a bulkhead architecture, elements of an application are isolated into pools so that if one fails, the others will continue to function.

For a bulkhead to work, the services must be able to operate independently of each other.

Strategies:

- semaphore

- fixed thread pool bulkhead
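The semaphore strategy can be sketched with `java.util.concurrent.Semaphore`: a fixed number of permits caps how many calls may run concurrently against one dependency, and a caller waits at most a bounded time for a permit before being rejected. This mirrors the idea behind the maximum-concurrent-calls and maximum-wait settings (3 calls and 1 ms in `main`), but the class and its use of `RejectedExecutionException` for rejections are hypothetical choices for illustration, not Resilience4j's SemaphoreBulkhead.

```java
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Sketch of a semaphore bulkhead: at most maxConcurrentCalls run at the same time.
// Hypothetical class for illustration, not Resilience4j's SemaphoreBulkhead.
final class SemaphoreBulkheadSketch {
    private final Semaphore permits;
    private final long maxWaitMillis;

    SemaphoreBulkheadSketch(int maxConcurrentCalls, long maxWaitMillis) {
        this.permits = new Semaphore(maxConcurrentCalls, true); // fair: waiting callers served in FIFO order
        this.maxWaitMillis = maxWaitMillis;
    }

    <T> T call(Supplier<T> action) {
        boolean acquired;
        try {
            // Wait at most maxWaitMillis for a free slot
            acquired = permits.tryAcquire(maxWaitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            throw new RejectedExecutionException("interrupted while waiting for a permit", e);
        }
        if (!acquired) {
            throw new RejectedExecutionException("bulkhead full: call rejected");
        }
        try {
            return action.get();
        } finally {
            permits.release(); // always free the slot, even if the call fails
        }
    }

    int availablePermits() {
        return permits.availablePermits();
    }

    public static void main(String[] args) {
        SemaphoreBulkheadSketch bulkhead = new SemaphoreBulkheadSketch(3, 1);
        System.out.println(bulkhead.call(() -> "called inside the bulkhead"));
    }
}
```

Because each dependency gets its own semaphore, a slow dependency can exhaust only its own permits, while calls to other dependencies keep their capacity.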

# BULKHEAD

When not to use a bulkhead?

- when the system is not experiencing high concurrent request load

- when the system does not depend on external resources

# BULKHEAD

Fixed thread pool bulkhead

# CONFIGURATION

Bulkhead configuration

resilience4j.bulkhead.metrics.enabled=true
resilience4j.bulkhead.instances.bulkheadApi.max-concurrent-calls=3
resilience4j.bulkhead.instances.bulkheadApi.max-wait-duration=1ms

Time Limiter

Time limiting means bounding the amount of time we are willing to wait for an operation to complete. If the operation does not complete within that time, an exception is thrown.

This pattern ensures that users don't wait indefinitely and that the operation doesn't hold on to server resources indefinitely.

The main goal is to avoid holding up resources for too long.
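A time limiter can be sketched with the standard `Future.get(timeout, unit)` call: the operation runs on another thread, the caller waits at most the given duration, and on timeout the future is cancelled with interruption so the worker stops instead of running on. The helper below is hypothetical and only mirrors the idea behind the timeout-duration and cancel-running-future settings; it is not Resilience4j's TimeLimiter.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Sketch of a time limiter (hypothetical helper, not Resilience4j's TimeLimiter).
final class TimeLimiterSketch {

    static <T> T callWithTimeout(ExecutorService executor, Callable<T> task,
                                 long timeoutMillis, boolean cancelRunningFuture)
            throws TimeoutException, ExecutionException, InterruptedException {
        Future<T> future = executor.submit(task);
        try {
            // Wait no longer than the configured timeout
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            if (cancelRunningFuture) {
                future.cancel(true); // interrupt the worker so it stops holding resources
            }
            throw e;
        }
    }

    public static void main(String[] args) throws Exception {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            System.out.println(callWithTimeout(executor, () -> "fast", 2_000, true));
            callWithTimeout(executor, () -> { Thread.sleep(5_000); return "slow"; }, 100, true);
        } catch (TimeoutException e) {
            System.out.println("timed out, task cancelled");
        } finally {
            executor.shutdownNow();
        }
    }
}
```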

# TIME LIMITER

When to use a time limiter?

- when the system is experiencing slow response times

- when the system depends on external resources

# CONFIGURATION

Time Limiter configuration

resilience4j.timelimiter.metrics.enabled=true
resilience4j.timelimiter.instances.timeLimiterApi.timeout-duration=2s
resilience4j.timelimiter.instances.timeLimiterApi.cancel-running-future=true

Sources:

- https://resilience4j.readme.io/docs

- https://firatkomurcu.com/microservices-bulkhead-pattern

- https://blog.codecentric.de/resilience-design-patterns-retry-fallback-timeout-circuit-breaker

- https://www.datacore.com/blog/availability-durability-reliability-resilience-fault-tolerance/

- https://www.baeldung.com/spring-boot-resilience4j