CS6015: Linear Algebra and Random Processes

Lecture 42: Information Theory, Entropy, Cross Entropy, KL Divergence

Learning Objectives

Slides to be made

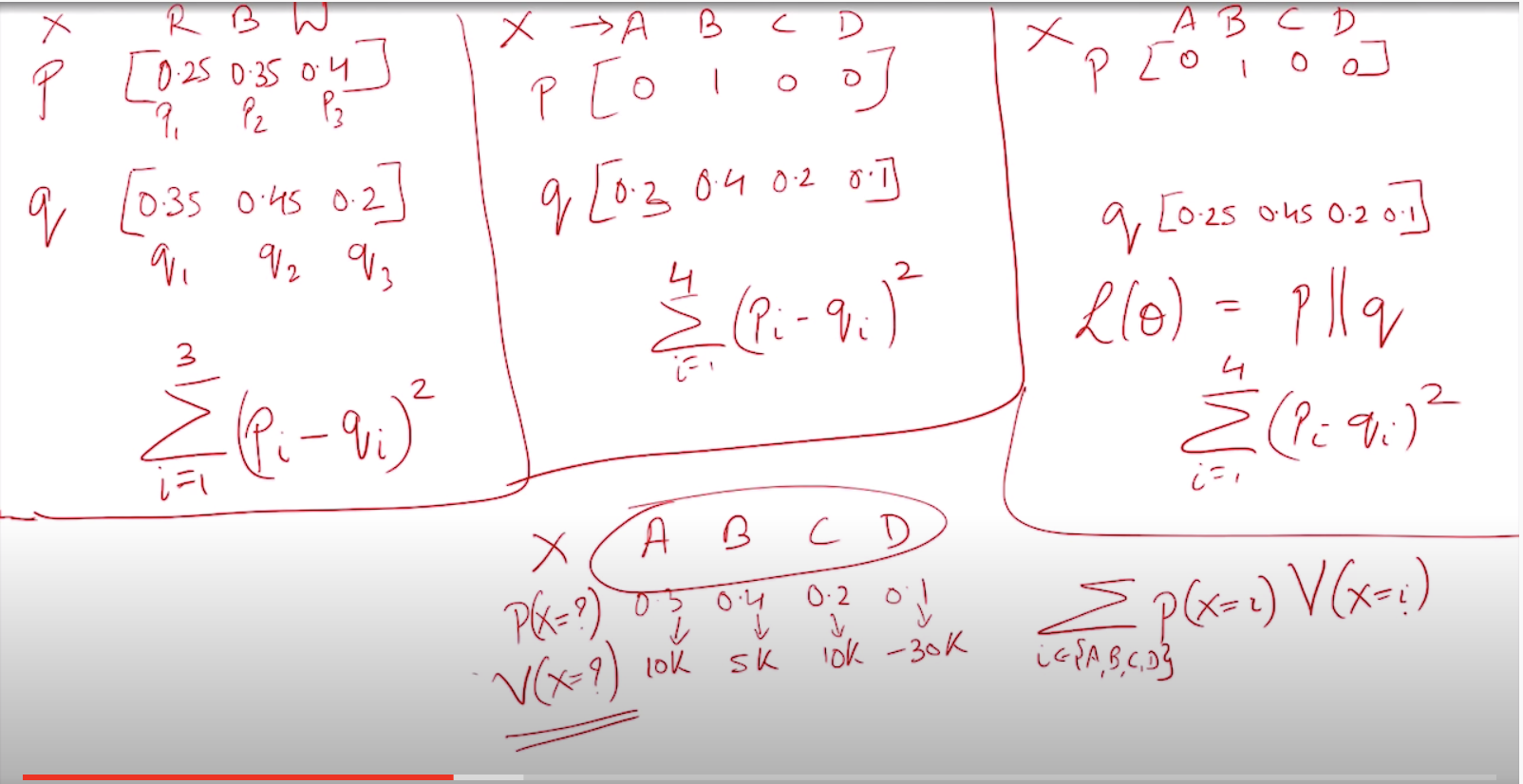

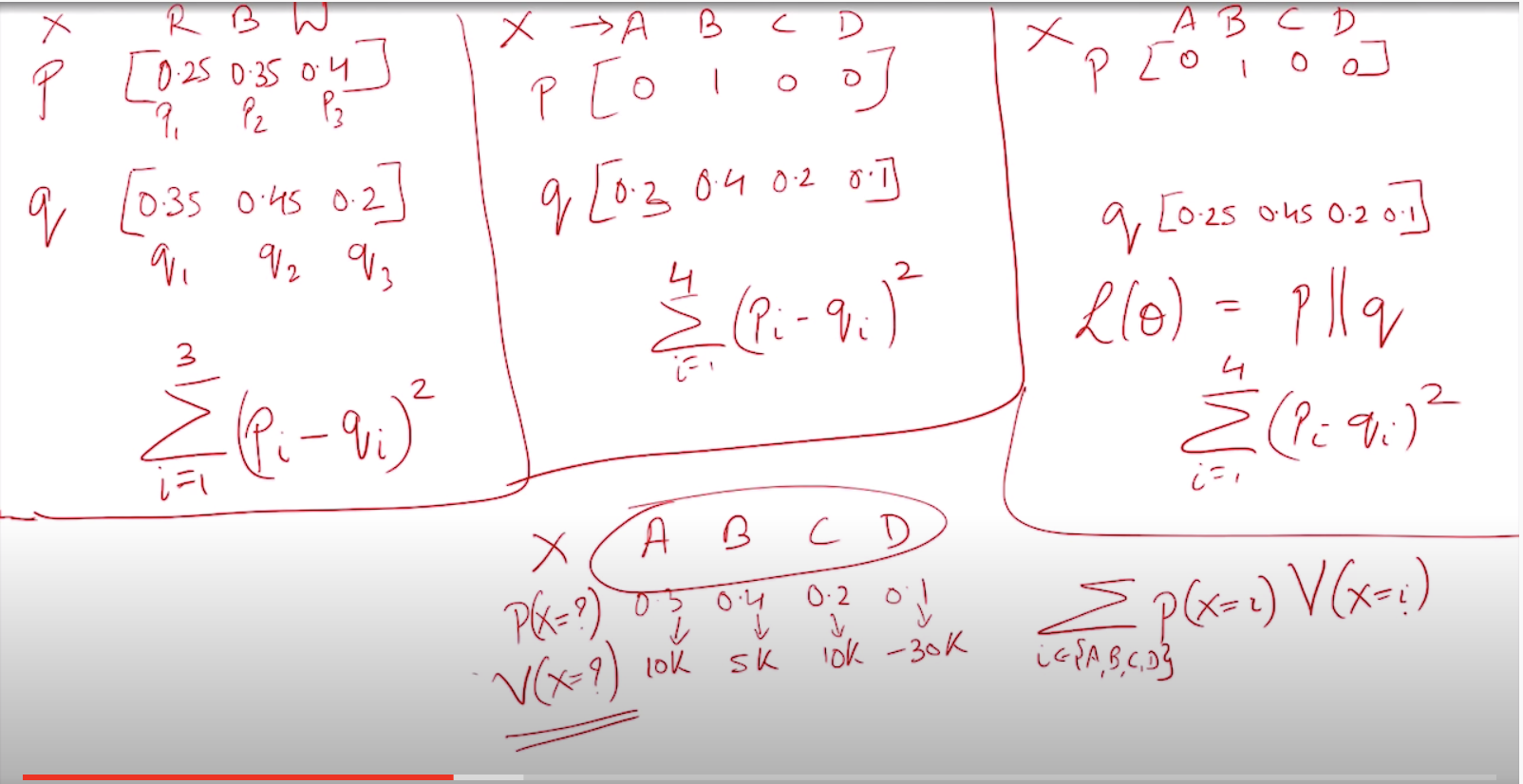

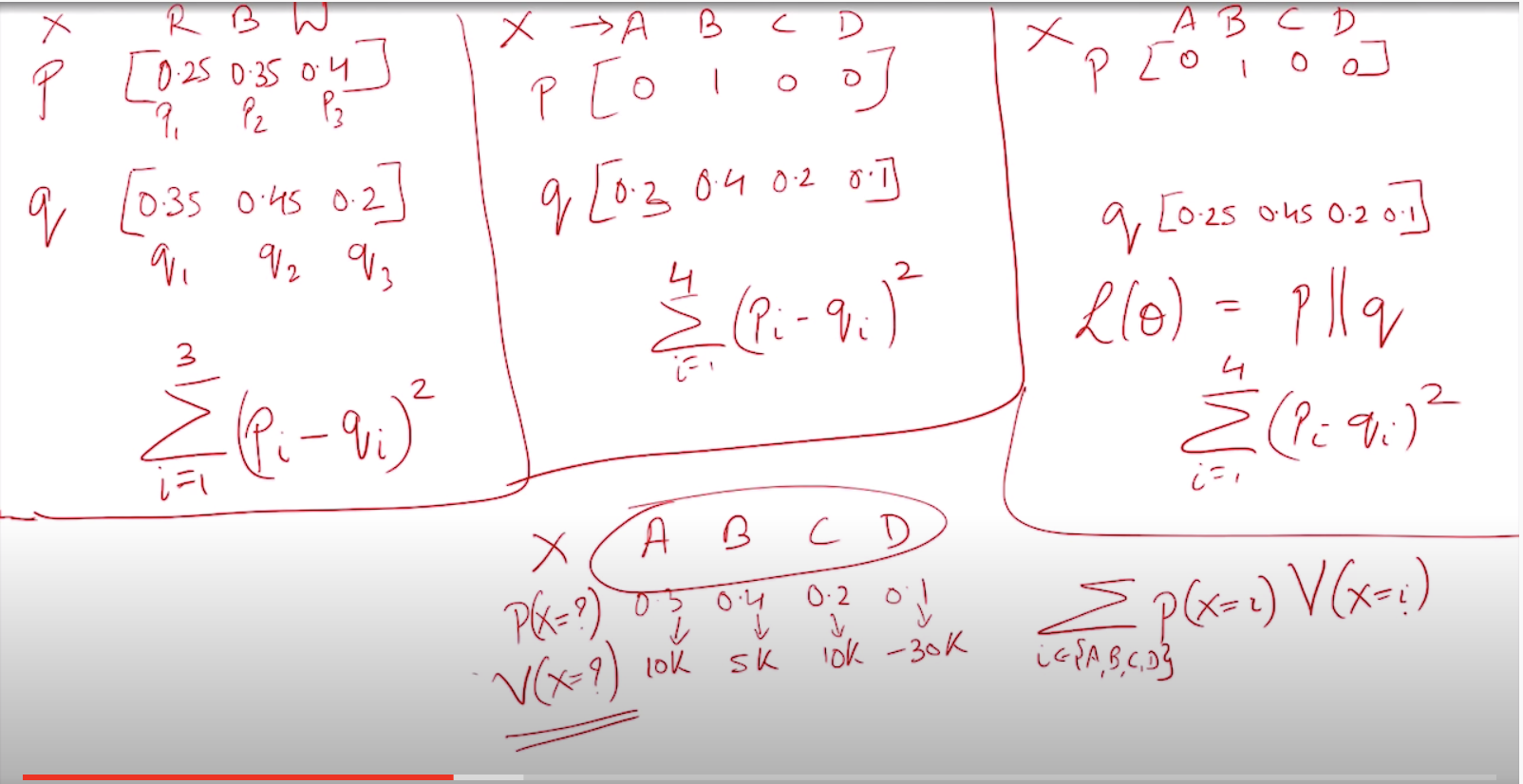

A prediction game

A prediction game (with certainty)

The ML perspective

Goal: compute the difference between the true distribution and the predicted distribution.

We will take a detour and then return to this goal.


Information content

Is there any information gain?

The sun rises in the east

The moon is in the sky

A lunar eclipse

Can you relate this to probabilities?

More surprise = more information

Lower probability = more surprise = more information
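One standard way to make "lower probability = more information" precise is the information content (self-information) of an outcome $x$ with probability $p(x)$. With a base-2 logarithm it is measured in bits. Its defining properties, stated briefly:

```latex
I(x) = \log_2 \frac{1}{p(x)} = -\log_2 p(x)

\begin{aligned}
p(x) = 1 &\;\Rightarrow\; I(x) = 0 && \text{(a certain event, e.g. the sun rising in the east, tells us nothing new)} \\
p(x_1) < p(x_2) &\;\Rightarrow\; I(x_1) > I(x_2) && \text{(rarer events are more surprising)} \\
x, y \text{ independent} &\;\Rightarrow\; I(x, y) = -\log_2\big(p(x)\,p(y)\big) = I(x) + I(y) && \text{(information from independent events adds)}
\end{aligned}
```

For example, an outcome with $p = 1/2$ carries 1 bit, and one with $p = 1/1024$ carries 10 bits.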

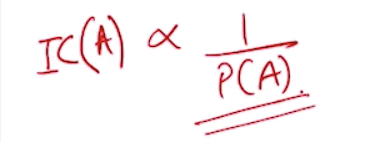

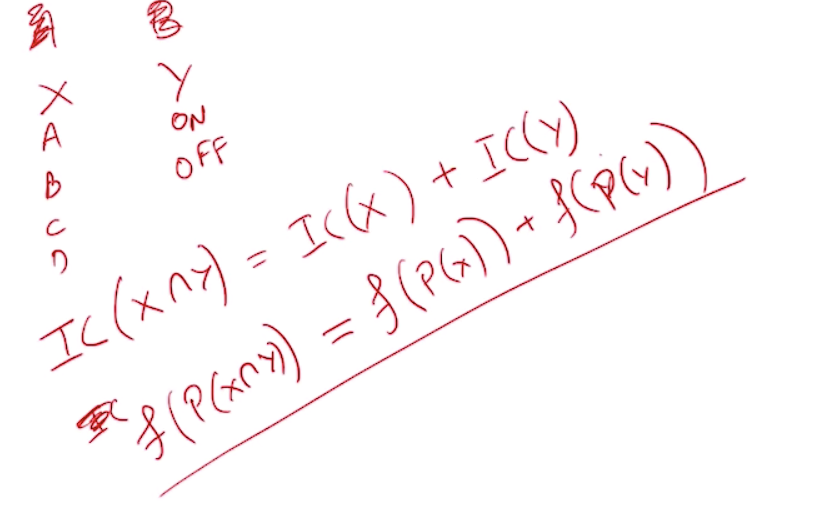

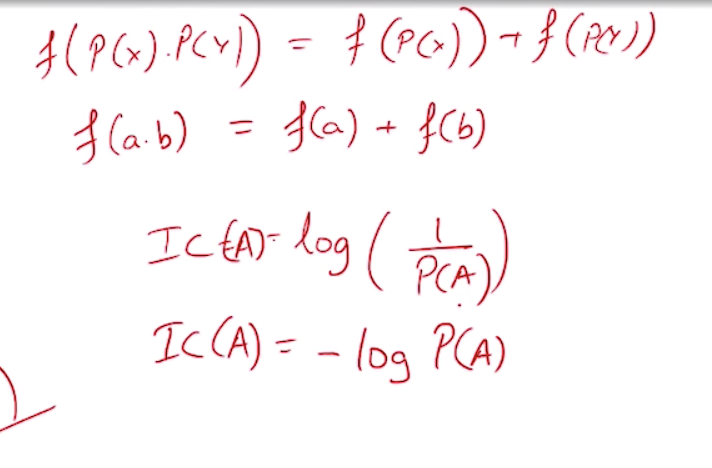

Information content

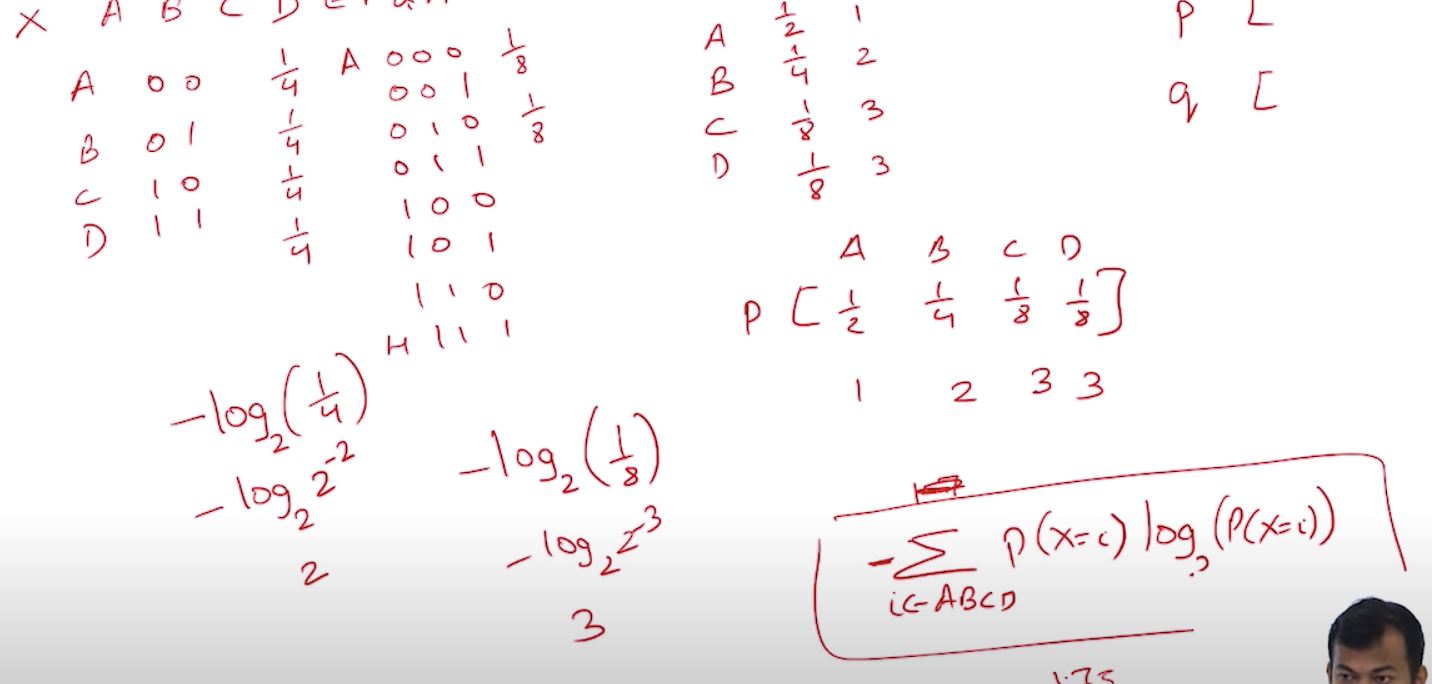
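Below is a minimal Python sketch of information content. It assumes base-2 logarithms, so the unit is bits. The function name `information_content` and the example probabilities are illustrative and do not come from the slides.

```python
import math


def information_content(p: float) -> float:
    """Information content (surprise) of an outcome with probability p, in bits.

    Computed as log2(1/p), which equals -log2(p).
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return math.log2(1.0 / p)


# Illustrative probabilities (assumed for the example, not measured).
examples = {
    "certain event": 1.0,
    "fair coin shows heads": 0.5,
    "one-in-1024 event": 1 / 1024,
}
for label, p in examples.items():
    # prints 0.0, 1.0 and 10.0 bits respectively
    print(f"{label}: {information_content(p):.1f} bits")
```

Halving the probability adds exactly one bit. For independent events the bits add: an outcome with probability $0.5 \times 0.25 = 0.125$ carries $3 = 1 + 2$ bits.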

Entropy

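Given the pmf $p(x)$ of a discrete random variable $X$,

and given the information content $I(x) = -\log_2 p(x)$ of each value $x$,

entropy is the expected information content:

$$H(X) = \mathbb{E}[I(X)] = \sum_x p(x)\, I(x) = -\sum_x p(x) \log_2 p(x)$$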
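The entropy formula translates directly into a short Python sketch. The function name `entropy` and the example pmfs are illustrative assumptions. Zero-probability outcomes are skipped, following the usual convention $0 \log 0 = 0$.

```python
import math
from typing import Sequence


def entropy(pmf: Sequence[float]) -> float:
    """Entropy H(X) = sum_x p(x) * log2(1/p(x)), in bits.

    Outcomes with p(x) = 0 are skipped, following the convention 0 * log 0 = 0.
    """
    if any(p < 0 for p in pmf) or abs(sum(pmf) - 1.0) > 1e-9:
        raise ValueError("pmf must be non-negative and sum to 1")
    return sum(p * math.log2(1.0 / p) for p in pmf if p > 0)


print(entropy([0.5, 0.5]))   # fair coin: 1.0 bit
print(entropy([0.9, 0.1]))   # biased coin: about 0.469 bits
print(entropy([1.0, 0.0]))   # no uncertainty: 0.0 bits
print(entropy([0.25] * 4))   # uniform over 4 values: 2.0 bits
```

A more predictable variable (the biased coin) has lower entropy. For a fixed number of outcomes, the uniform pmf gives the largest value.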

Entropy and number of bits

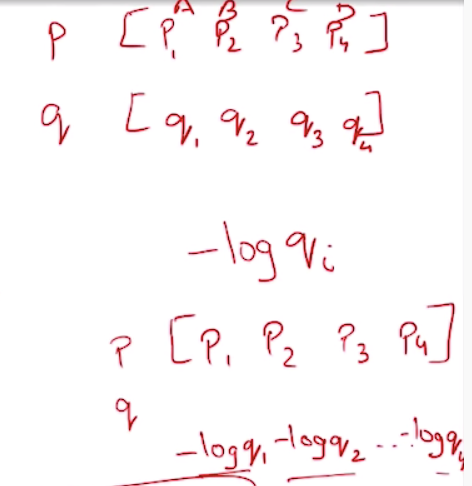

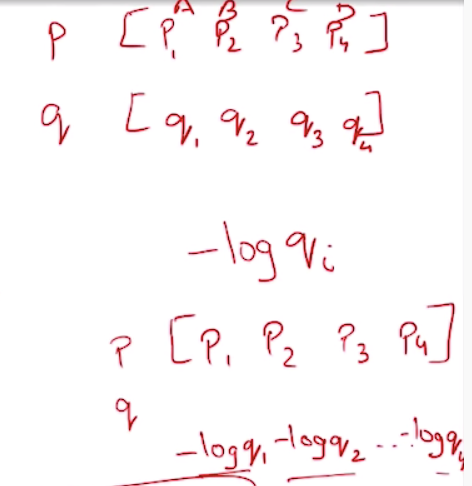
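The link between entropy and bits can be checked numerically. Entropy is a lower bound on the average number of bits per symbol for any lossless prefix code, and that bound is met exactly when every probability is a power of 1/2. In the sketch below, the pmf over symbols A to D and the code table are illustrative assumptions. A fixed-length code for the same four symbols would spend 2 bits per symbol.

```python
import math


def entropy(pmf):
    """Entropy in bits of an iterable of probabilities (zero terms skipped)."""
    return sum(p * math.log2(1.0 / p) for p in pmf if p > 0)


# Uniform over 8 outcomes: a fixed-length code needs log2(8) = 3 bits per symbol.
uniform8 = [1 / 8] * 8
print(entropy(uniform8))  # 3.0

# Non-uniform pmf (illustrative) with a prefix-free code that gives
# shorter codewords to more likely symbols.
pmf = {"A": 0.5, "B": 0.25, "C": 0.125, "D": 0.125}
code = {"A": "0", "B": "10", "C": "110", "D": "111"}

# Average codeword length, weighted by how often each symbol occurs.
expected_length = sum(p * len(code[s]) for s, p in pmf.items())
print(entropy(pmf.values()), expected_length)  # 1.75 1.75
```

Here each codeword length equals $\log_2 \frac{1}{p(x)}$, which is the information content of that symbol. That is why the average code length matches the entropy exactly.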

Cross Entropy
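Cross-entropy measures the average number of bits needed when outcomes come from the true distribution $p$ but we encode or predict them using a distribution $q$: $H(p, q) = -\sum_x p(x) \log_2 q(x)$, with $H(p, p) = H(p)$. The Python sketch below assumes $p$ and $q$ are lists over the same outcomes in the same order. The function name and the example predictions are illustrative.

```python
import math
from typing import Sequence


def cross_entropy(p: Sequence[float], q: Sequence[float]) -> float:
    """Cross-entropy H(p, q) = -sum_x p(x) * log2 q(x), in bits.

    p is the true distribution and q the predicted one, listed over the same
    outcomes in the same order. If q gives probability 0 to an outcome that p
    considers possible, the cross-entropy is infinite.
    """
    if len(p) != len(q):
        raise ValueError("p and q must cover the same outcomes")
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue  # outcomes that never occur contribute nothing
        if qx == 0:
            return math.inf
        total += px * math.log2(1.0 / qx)
    return total


# ML-style example (illustrative numbers): the true class is the second one.
true_dist = [0.0, 1.0, 0.0]
confident = [0.05, 0.9, 0.05]
unsure = [0.25, 0.5, 0.25]
print(cross_entropy(true_dist, confident))  # about 0.152 bits
print(cross_entropy(true_dist, unsure))     # 1.0 bit
print(cross_entropy(true_dist, true_dist))  # 0.0: perfect prediction
```

When the true distribution is one-hot, the sum collapses to $-\log_2 q(\text{true class})$, which is the per-example cross-entropy loss used to train classifiers. Many ML libraries use the natural logarithm instead. This changes the unit from bits to nats but does not change which prediction minimises the loss.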

KL divergence
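The KL divergence $D_{KL}(p \,\|\, q) = \sum_x p(x) \log_2 \frac{p(x)}{q(x)} = H(p, q) - H(p)$ counts the extra bits paid for using $q$ in place of the true $p$. This gives the quantity the ML perspective asked for: a measure of how far the predicted distribution is from the true one. It is never negative, equals zero only when $p = q$, and is not symmetric. The Python sketch below checks these facts. It is self-contained, so it repeats small `entropy` and `cross_entropy` helpers, and the two distributions are illustrative.

```python
import math
from typing import Sequence


def entropy(p: Sequence[float]) -> float:
    """H(p) in bits (zero-probability terms skipped)."""
    return sum(px * math.log2(1.0 / px) for px in p if px > 0)


def cross_entropy(p: Sequence[float], q: Sequence[float]) -> float:
    """H(p, q) in bits; infinite if q(x) = 0 where p(x) > 0."""
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue
        if qx == 0:
            return math.inf
        total += px * math.log2(1.0 / qx)
    return total


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """D_KL(p || q) = sum_x p(x) * log2(p(x) / q(x)), in bits."""
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue
        if qx == 0:
            return math.inf
        total += px * math.log2(px / qx)
    return total


p = [0.5, 0.5]    # true distribution (illustrative)
q = [0.25, 0.75]  # predicted distribution (illustrative)
print(kl_divergence(p, q))               # about 0.208 bits
print(cross_entropy(p, q) - entropy(p))  # same value: H(p, q) - H(p)
print(kl_divergence(q, p))               # about 0.189 bits: not symmetric
print(kl_divergence(p, p))               # 0.0: identical distributions
```

Because $H(p)$ does not depend on $q$, minimising the cross-entropy $H(p, q)$ over predictions $q$ is equivalent to minimising $D_{KL}(p \,\|\, q)$.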