CS6910: Fundamentals of Deep Learning

Lecture 1: A (brief/partial) History of Deep Learning

Nobel Prize

Golgi (reticular theory) and Cajal (neuron doctrine) were jointly awarded the 1906 Nobel Prize in Physiology or Medicine, which led to lasting conflicting ideas and controversies between the two scientists.

Reticular Theory

Neuron Doctrine

1871-1873

1888-1891

1906

Nobel Prize

1950

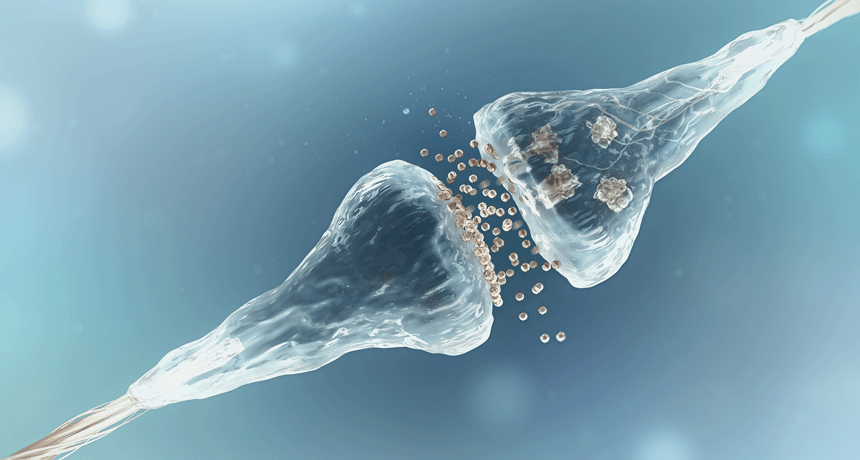

Synapse

Image source: https://www.sciencenewsforstudents.org/article/scientists-say-synapse

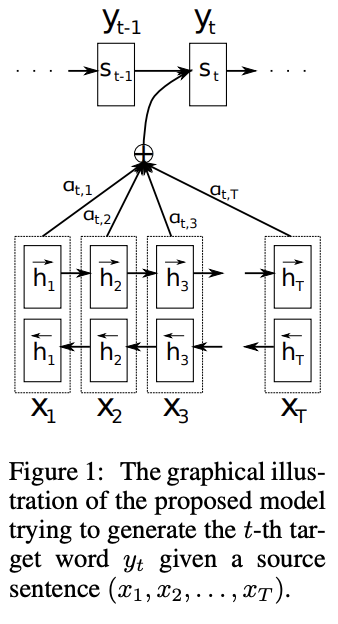

Neural MT

The introduction of seq2seq models and attention (perhaps the idea of the decade!) led to a paradigm shift in NLP, ushering in the era of bigger, hungrier (more data), better models!

Source: Dzmitry Bahdanau, Kyunghyun Cho, Yoshua Bengio: Neural Machine Translation by Jointly Learning to Align and Translate. ICLR 2015

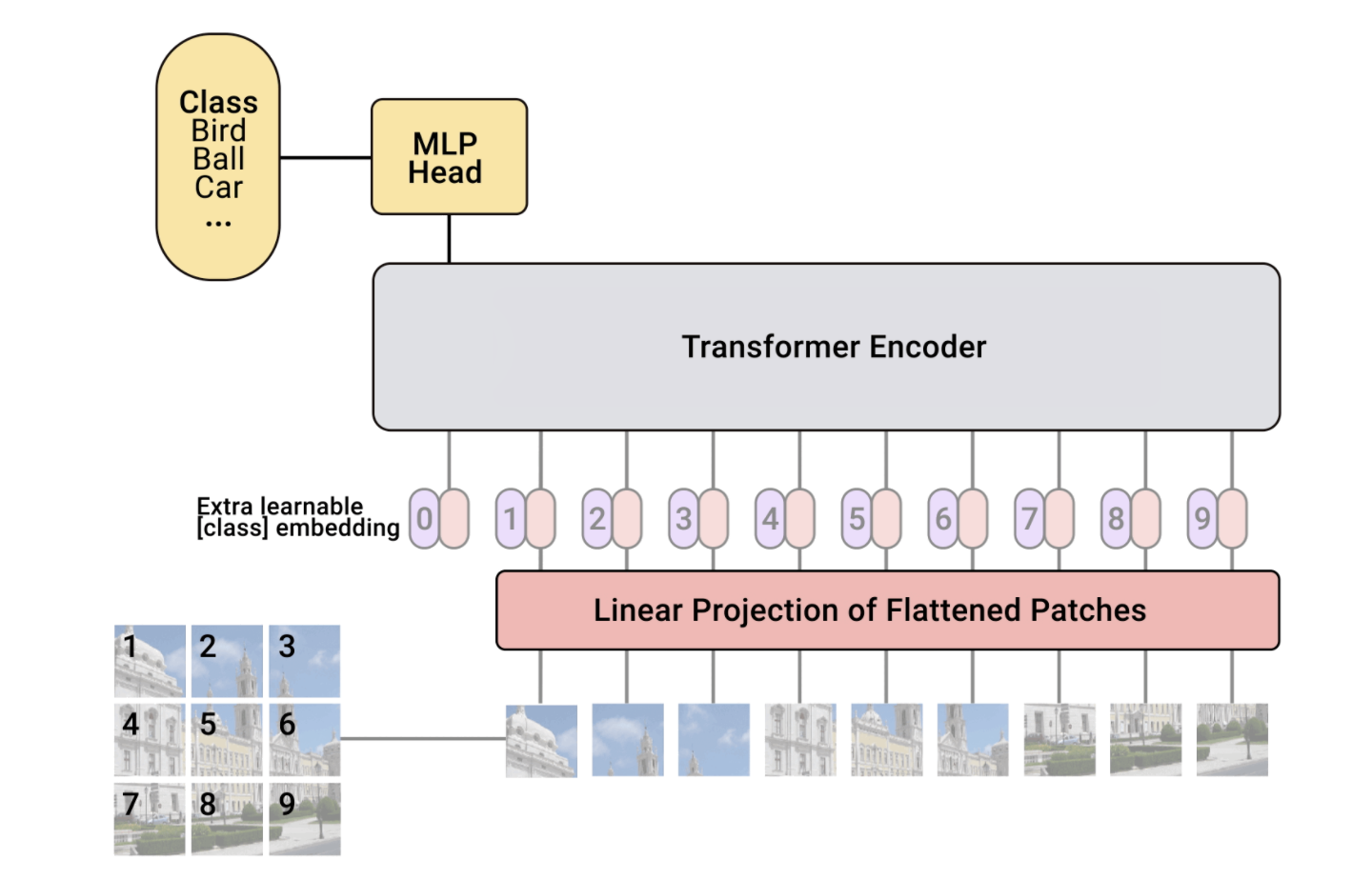

From language to Vision

A vision model based as closely as possible on the Transformer architecture originally designed for text-based tasks (another paradigm shift, this time away from CNNs, which have been around since the 1980s!)

Source: https://ai.googleblog.com/2020/12/transformers-for-image-recognition-at.html