Technical Training Programme

NodeJS, ExpressJS, MongoDB

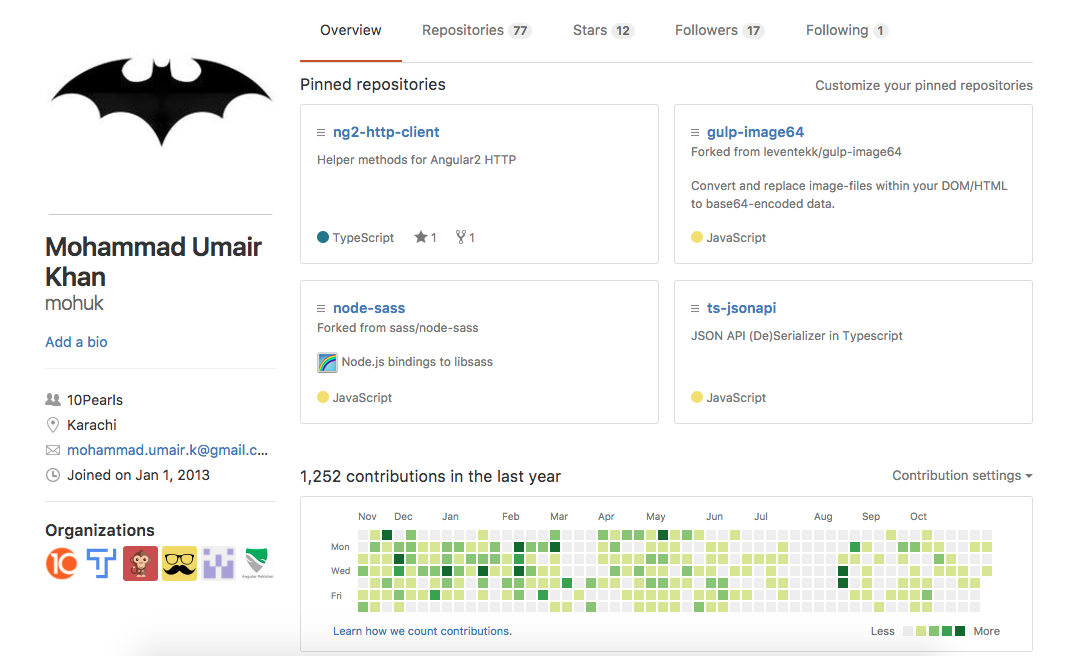

$ whoami

$ whoami

Agenda

- Refresh our JS

- Learn NodeJS fundamentals

- Learn ExpressJS for RESTful APIs

- Learn MongoDB

- Build an app on ExpressJS backed by MongoDB

- Deploying NodeJS + MongoDB apps to production

Today's Agenda

- Refresh our JS

- Learn NodeJS fundamentals

Methodology

- Follow through/guided tutorial

- Exercises, exercises, exercises

Ground rules

Don't copy paste code

Prep

- NodeJS installation

- Git installation

- VSCode installation

- Slack account

- Gitlab account

History

Mocha

LiveScript

10 Days

Platforms

- Desktop Browsers

- Mobile Browsers

- Mobile Web Views

- Mobile Native on Javascript

- Progressive Web Apps

Today

- Dynamic and fast

- Concurrency of requests

- Very Popular

- Reduces the gap between front/backend engineers

Day 2

Technical Training Programme

NodeJS, ExpressJS, MongoDB

Recap Day 1

Event Emitter

Event Emitter

- Core

- Has many implementations in NodeJS

- Observer pattern

Methods

- .on

- .emit

- .once

Event Emitter

Example

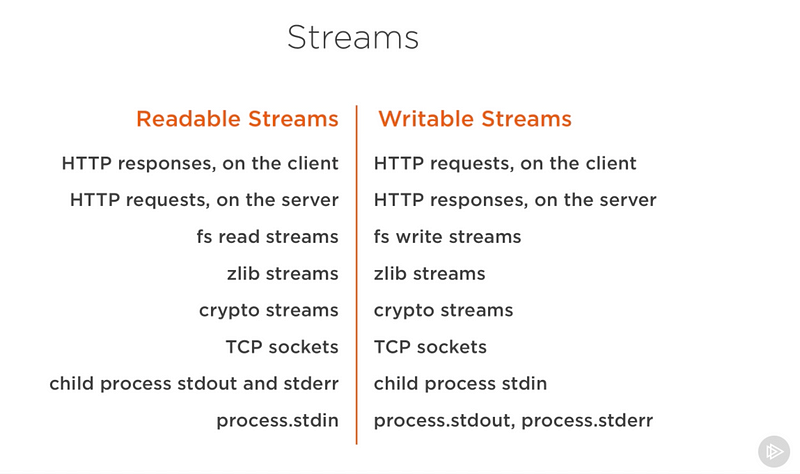

Streams

Probably the hardest concept in NodeJS

Streams are simple collections of data, the only difference is, they are not available at once

Streams are composable via its pipe method.

Let's try it out

Streams can be ...

- Readable

- Writeable

- Duplex (Both Readable & Writeable)

- Transform

Readable

- Its the source

- Flowing data like water from faucet

- Is able to sink into any other container (writeable/duplex/transform)

Writeable

- Its the sink/drain

- Receives data

- Is able to sink data from any other container (readable/duplex/transform)

Duplex

- Imagine a server which receives as well as sends data

- Can do both read and write

Transform

- Transforms a readable stream and makes it available to write

Streams implement the EventEmitter interface

Readable

- data - stream will keep on receiving this event as more data is available to read

- end - stream will receive this event once no more data is available to read

Writeable

- drain - stream will keep on receiving this event indicating it can receive more data

- finish - stream will receive this event once all data has been written/flushed

const fs = require('fs');

const zlib = require('zlib');

const readStream = fs.createReadStream('./big.file');

readStream

.pipe(zlib.createGzip())

.pipe(fs.createWriteStream('./compressed.gz'));

CSV to JSON

Exercise

Core: http

Part 1

Core: http

Part 2

Refresher Quiz

Event Loop

Recap

NodeJS Async functions are based on

- Callbacks

- Promises

- Async/Await

Core Module: fs

- fs stands for file system

- Async/Sync

- Subset of APIs are based on Event Emitter and Streams

Core Module: path

- file system path builder solves OS path conflicts

- makes the code portable (usable across OS-es)

- __dirname global represents the current directory

Core Module: Event

- Allows writing Event driven code (observer pattern)

- fs, streams implement the Event Emitter interface

- on, once allows event subscription

- emit dispatches events

- removeListener removes the event

Core Module: Streams

- Collection of data received or sent in chunks

- Composeable via pipe

- Writeable, Readable, Duplex, Transform

- Readable events are data, end

- Writeable events are drain, finish

Event loop

- Backbone of async operations

- All async operations consist of the following process

Callstack > APIs > Callback Queue > Event loop > Callstack

Core: http

- Module that enables HTTP

- Can become an HTTP Server

- Can become an HTTP Client

- HTTP response is a Stream

Core: http

- Module that enables HTTP

- Can become an HTTP Server

- Can become an HTTP Client

- HTTP response is a Stream

Feedback Day 2

https://goo.gl/XyXJxU

Day 3

Technical Training Programme

NodeJS, ExpressJS, MongoDB

Web scrapper solution

ExpressJS

Based off of http core module

Provides a simple API for creating web servers

- Deliver static content

- Modularize business logic

- Construct an API

- Connect to various data sources (with additional plugins)

- Write less code (see Express vs. http)

- Validate data (with additional plugins)

Hello World Express

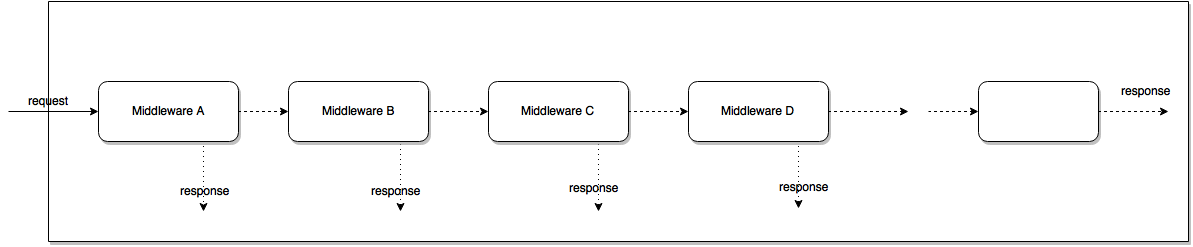

Express Middlewares

Middleware Pattern

Middlewares

- Parsing inputs

- Rendering

- Authentication

- Authorization

- Response Formatting

- Error Handling

Hello World Express in HTML

Middlewares Challenge

RESTful API

Addressable Resources. Every “thing” on your network should have an ID. With REST over HTTP, every object will have its own specific URI.

- /users/:id

- /users/:userId/courses/:courseId

RESTful Principles

A Uniform, Constrained Interface. When applying REST over HTTP, stick to the methods provided by the protocol. This means following the meaning of GET, POST, PUT, and DELETE religiously.

- GET /users/:userId

- PUT /users/:userId

RESTful Principles

Representation oriented. You interact with services using representations of that service. An object referenced by one URI can have different formats available. Different platforms need different formats. AJAX may need JSON. A Java application may need XML.

RESTful Principles

Communicate statelessly. Stateless applications are easier to scale.

RESTful Principles

Let's develop some RESTful APIs

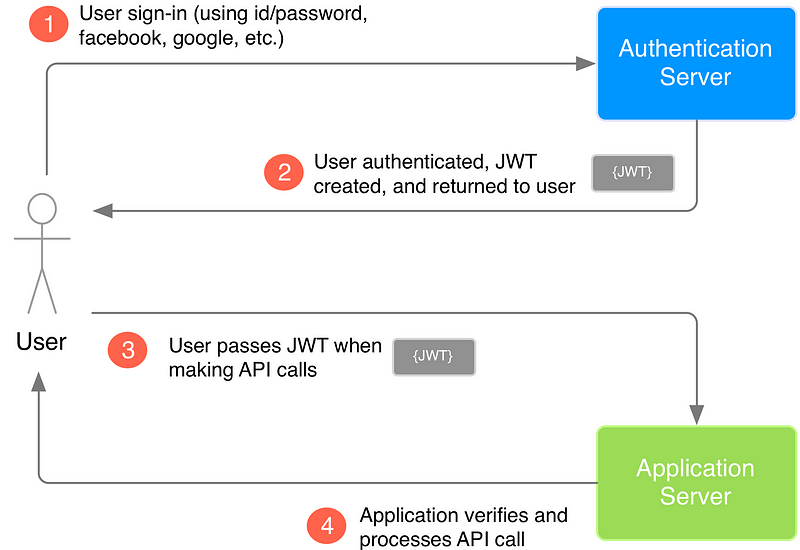

Json Web Token

header.payload.signature

Header

{

"typ": "JWT",

"alg": "HS256"

}Payload

{

"userId": "090078601",

"roles": ["Admin", "King"]

}Signature

// signature algorithm

data = `${base64urlEncode(header)}.${base64urlEncode(payload)}`

signature = Hash(data, secret);All together

header.payload.signature

eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJ1c2VySWQiOiJiMDhmODZhZi0zNWRhLTQ4ZjItOGZhYi1jZWYzOTA0NjYwYmQifQ.-xN_h82PHVTCMA9vdoHrcZxH-x5mb11y1537t3rGzcM

Authentication Middleware

Blog API Challenge

Feedback Day 3

Day 4

Technical Training Programme

NodeJS, ExpressJS, MongoDB

MongoDB

Introduction to NoSQL

NoSQL

Documents as records

- Create

- Read

- Update

- Delete

{

"_id": ObjectID("1298798e198291"),

"username": "bruce@wayne.com",

"password": "kasjdl123k1092sad123wdadakjhdka12o31",

"classes": [{

"name": "Physics",

"classId": "PHY-101"

},

{

"name": "Chemistry",

"classId": "CHM-101"

}

]

}

Documents as BSON (Binary JSON)

[

{

"_id": ObjectID("1298798e198291"),

"username": "bruce@wayne.com",

"password": "kasjdl123k1092sad123wdadakjhdka12o31"

},

{

"_id": ObjectID("1298asd1238sad"),

"username": "robin@wayne.com",

"password": "aslkdjpo2eposdopo122weopqsdioqwe1231"

}

]

Documents as collections

[

{

"_id": ObjectID("1298798e198291"),

"username": "bruce@wayne.com",

"password": "kasjdl123k1092sad123wdadakjhdka12o31",

"accounts": [

{ "type": "openid"},

{ "type": "oauth2" }

]

},

{

"_id": ObjectID("1298asd1238sad"),

"username": "robin@wayne.com",

"password": "aslkdjpo2eposdopo122weopqsdioqwe1231"

}

]

Documents are schema-less

Data types

- String

- Double

- Object

- Array

- ObjectId

- Boolean

- Date

- Null

- RegEx

- Timestamp

src: BSON Types

Shell Basics

Schema Design in MongoDB

Make sure your use case is fit for MongoDB

Organize data how you want to access it

- One-to-Few

- One-to-Many

- One-to-Squillions

MongoDB Rules

One-to-Few

// person

{

name: "Keon Kim",

hometown: "Seoul",

addresses: [

{ city: 'Manhattan', state: 'NY', cc: 'USA' },

{ city: 'Jersey City', state: 'NJ', cc: 'USA' }

]

}All information in one query

It is impossible to search the contained entity (addresses) independently

One-to-Many

{

_id: ObjectID('AAAA'),

partno: '123-aff-456',

name: 'Awesometel 100Ghz CPU',

qty: 102,

cost: 1.21,

price: 3.99

}

// products

{

name: 'Weird Computer WC-3020',

manufacturer: 'Haruair Eng.',

catalog_number: 1234,

parts: [

ObjectID('AAAA'),

ObjectID('DEFO'),

ObjectID('EJFW')

]

}It is easy to handle insert, delete on each documents independently

It has flexibility for implementing N-to-N relationship because it is an application level join

Performance drops as you call documents multiple times.

> product = db.products

.findOne({catalog_number: 1234});

db.parts.find({

_id: { $in : product.parts }}

).toArray() ;One-to-Squillions

// host

{

_id : ObjectID('AAAB'),

name : 'goofy.example.com',

ipaddr : '127.66.66.66'

}

// logmsg

{

time : ISODate("2015-09-02T09:10:09.032Z"),

message : 'cpu is on fire!',

host: ObjectID('AAAB')

}Scales up with the 16MB per document limit

> host = db.hosts

.findOne({ipaddr : '127.66.66.66'});

> db.logmsg.find({host: host._id})

.sort({time : -1})

.limit(5000)

.toArray()Two-Way Referencing

// person

{

_id: ObjectID("AAF1"),

name: "Koala",

// reference task document

tasks [

ObjectID("ADF9"),

ObjectID("AE02"),

ObjectID("AE73")

]

}

// tasks

{

_id: ObjectID("ADF9"),

description: "Practice Jiu-jitsu",

due_date: ISODate("2015-10-01"),

// reference person document

owner: ObjectID("AAF1")

}It is easy to search on both Person and Task documents

It requires two separate queries to update an item. The update is not atomic.

Many-to-One Denormalization

// products - before

{

name: 'Weird Computer WC-3020',

manufacturer: 'Haruair Eng.',

catalog_number: 1234,

parts: [

ObjectID('AAAA'),

ObjectID('DEFO'),

ObjectID('EJFW')

]

}

// products - after

{

name: 'Weird Computer WC-3020',

manufacturer: 'Haruair Eng.',

catalog_number: 1234,

parts: [ // denormalization

{ id: ObjectID('AAAA'), name: 'Awesometel 100Ghz CPU' },

{ id: ObjectID('DEFO'), name: 'AwesomeSize 100TB SSD' },

{ id: ObjectID('EJFW'), name: 'Magical Mouse' }

]

}It is not a good choice when updates are frequent

When you want to update the part name, you have to update all names contained inside product document

Denormalization reduces the cost of calling the data.

One-to-Many Denormalization

// parts - before

{

_id: ObjectID('AAAA'),

partno: '123-aff-456',

name: 'Awesometel 100Ghz CPU',

qty: 102,

cost: 1.21,

price: 3.99

}

// parts - after

{

_id: ObjectID('AAAA'),

partno: '123-aff-456',

name: 'Awesometel 100Ghz CPU',

// denormalization

product_name: 'Weird Computer WC-3020',

// denormalization

product_catalog_number: 1234,

qty: 102,

cost: 1.21,

price: 3.99

}It is not a good choice when updates are frequent

When you want to update the part name, you have to update all names contained inside product document

Denormalization reduces the cost of calling the data.

One-to-Squillions Denormalization

// logmsg - before

{

time : ISODate("2015-09-02T09:10:09.032Z"),

message : 'cpu is on fire!',

host: ObjectID('AAAB')

}

// logmsg - after

{

time : ISODate("2015-09-02T09:10:09.032Z"),

message : 'cpu is on fire!',

host: ObjectID('AAAB'),

ipaddr : '127.66.66.66'

}

> db.logmsg.find({ipaddr : '127.66.66.66'})

.sort({time : -1})

.limit(5000)

.toArray(){

time : ISODate("2015-09-02T09:10:09.032Z"),

message : 'cpu is on fire!',

ipaddr : '127.66.66.66',

hostname : 'goofy.example.com'

}Rule 1 of 6

Favour embedding unless there is a compelling reason not to

Rule 2 of 6

Needing to access an object on its own is a compelling reason not to embed it

Rule 3 of 6

Arrays should not grow without bound.

array[200+] == Don't embed

array[2000+] == Don't use ObjectID references

Rule 4 of 6

Don’t be afraid of application-level joins: if you index correctly and use the projection specifier then application-level joins are barely more expensive than server-side joins in a relational database.

Rule 5 of 6

Consider the write/read ratio when denormalizing.

Rule 6 of 6

As always with MongoDB, how you model your data depends – entirely – on your particular application’s data access patterns.

Aggregation

Problem

In the restaurants collection, I want to know the count of 'A' grades received by their name

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

"address" : {

"building" : "2911",

"coord" : [

-73.982241,

40.576366

],

"street" : "West 15 Street",

"zipcode" : "11224"

},

"borough" : "Brooklyn",

"cuisine" : "Italian",

"grades" : [

{

"date" : ISODate("2014-12-18T00:00:00Z"),

"grade" : "A",

"score" : 13

},

{

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 12

},

{

"date" : ISODate("2013-06-12T00:00:00Z"),

"grade" : "A",

"score" : 9

}

],

"name" : "Gargiulo's Restaurant",

"restaurant_id" : "40365784"

}

db.collection.aggregation([

{_stage1_},

{_stage2_}

]);$unwind

Untangles the array by creating multiple records for each item in the array

db.restaurants.aggregate([

{$unwind: "$grades"}

])$unwind

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

"address" : {

"building" : "2911",

"coord" : [-73.982241, 40.576366],

"street" : "West 15 Street",

"zipcode" : "11224"

},

"borough" : "Brooklyn",

"cuisine" : "Italian",

"grades" : [{

"date" : ISODate("2014-12-18T00:00:00Z"),

"grade" : "A",

"score" : 13

},

{

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 12

},

{

"date" : ISODate("2013-06-12T00:00:00Z"),

"grade" : "A",

"score" : 9

}],

"name" : "Gargiulo's Restaurant",

"restaurant_id" : "40365784"

}

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

...

"grades" : {

"date" : ISODate("2014-12-18T00:00:00Z"),

"grade" : "A",

"score" : 13

},

...

},

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

...

"grades" : {

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 12

},

...

}

$match

Returns the records with the matching condition

db.restaurants.aggregate([

{$unwind: "$grades"},

{$match: {"grades.grade": "A"}

])$match

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

...

"grades" : {

"date" : ISODate("2014-12-18T00:00:00Z"),

"grade" : "B",

"score" : 13

},

...

},

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

...

"grades" : {

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 12

},

...

}

...

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

...

"grades" : {

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 12

},

...

}

...$addFields

Adds a custom field in each record

db.restaurants.aggregate([

{$unwind: "$grades"},

{$match: {"grades.grade": "A"}

{$addFields: {grade: "$grades.grade"}}

])$addFields

...

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

...

"grades" : {

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 12

},

...

}

......

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

...

"grades" : {

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 12

},

"grade": "A"

...

}

...$project

Create a projection

db.restaurants.aggregate([

{$unwind: "$grades"},

{$match: {"grades.grade": "A"}

{$addFields: {grade: "$grades.grade"}},

{$project: {_id:1, grade: 1, name: 1}}

])$project

...

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

...

"grades" : {

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 12

},

"grade": "A"

...

},

{

"_id" : ObjectId("59074c7c057a12312a12da64"),

...

"grades" : {

"date" : ISODate("2014-05-15T00:00:00Z"),

"grade" : "A",

"score" : 33

},

"grade": "A"

...

}

......

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

"grade": "A",

"name": "Ponche Taqueria"

},

{

"_id" : ObjectId("59074c7c057a12312a12da64"),

"grade": "A",

"name": "Fordham Pizza & Pasta"

}

...$group

Create a projection

db.restaurants.aggregate([

{$unwind: "$grades"},

{$match: {"grades.grade": "A"}

{$addFields: {grade: "$grades.grade"}},

{$project: {_id:1, grade: 1, name: 1}},

{$group: {_id: "$name", count: {$sum: 1}}}

])$group

...

{

"_id" : ObjectId("59074c7c057aaffaafb0da64"),

"grade": "A"

},

{

"_id" : ObjectId("59074c7c057a12312a12da64"),

"grade": "A"

}

...{ "_id" : "Ponche Taqueria", "count" : 3 }

{ "_id" : "Place To Beach Cantina", "count" : 1 }

{ "_id" : "Homecoming", "count" : 3 }

{ "_id" : "Fordham Pizza & Pasta", "count" : 3 }Final Query

db.restaurants.aggregate([

{$unwind: "$grades"},

{$match: {"grades.grade": "A"}

{$addFields: {grade: "$grades.grade"}},

{$project: {_id:1, grade: 1, name: 1}},

{$group: {_id: "$name", count: {$sum: 1}}}

])Indexes

Types

- default (_id)

- Single Value

- Compound

- Multikey

- Geospatial

Indexing

Builds an in memory tree so that specific searches do not require an entire collection scan

Default

- An _id index is created on each document insert

- _id is unique and can traverse to both ascending and descending searches

- _id cannot be removed

Single Field

- Additional single field index can be created

- Does not guarantee uniqueness

- can be removed

- can traverse in both directions

Compound Field

- Additional single field index can be created

- Does not guarantee uniqueness

- can be removed

- cannot traverse in both directions

Multikey Index

- Created on array fields

- Sort order applies similar to single field

Securing MongoDB

Authentication

- Default

- Certificate

- LDAP

- Kerberos

Default

use reporting

db.createUser(

{

user: "reportsUser",

pwd: "12345678",

roles: [

{ role: "read", db: "reporting" },

{ role: "read", db: "products" },

{ role: "read", db: "sales" },

{ role: "readWrite", db: "accounts" }

]

}

)Replication

MongoDB ReplicaSet

- At least 3 nodes to be setup

- 1 Primary, 1 Secondary, 1 Arbiter

- WriteConcern allows to decide the number of nodes for a successful write