HOW TO TRICK SQL INTO DOING ALL THE WORK FOR YOU

PyGotham 2018

Molly Leen

Molly Leen

Senior Software Engineer @

At Kyruus we help health systems match patients with the right providers and enhance patient access enterprise-wide

Who am I?

Talk Overview

LETS ADD THIS LATER

The Problem

- Large amount of data

- 2x the number of rows currently in our system

"BIG" data

Current Infrastructure

raw data

API server

- Post each record 1 at a time

- Estimated time: ~48 hours

POST

{

"first_name": "Molly",

"last_name": "Leen",

"conferences": ["PyGotham"]

}

ingestion script

INSERT

Why is this so slow?

- Network request for each record

- Single threaded

- API commits to db on EACH request

PostgreSQL - COPY

Use COPY to load all the rows in one command, instead of using a series of INSERT commands. The COPY command is optimized for loading large numbers of rows; it is less flexible than INSERT, but incurs significantly less overhead for large data loads.

From the PostgreSQL docs:

The Plan

API server

ONE request

raw data

ingestion script

COPY

What is COPY?

https://www.postgresql.org/docs/9.4/static/sql-copy.html

COPY reads from a file or file-like object which is formatted to match the structure of the table

COPY needs a structured file...

...but this file would be very large....

...we don't want to have to download a large file to disk....

...lets define some requirements....

...and the structure of the file is very important....

Requirements

Requirements:

- Do not download file to disk

- Create records as JSON as if creating only one record

- Add API specific metadata

Requirement #1

Do not download file to disk

What is a file?

According to Google:

- a collection of data, programs, etc., stored in a computer's memory or on a storage device under a single identifying name.

What is a file-like object?

According to the python docs:

- An object exposing a file-oriented API (with methods such as read() or write()) to an underlying resource. Depending on the way it was created, a file object can mediate access to a real on-disk file or to another type of storage or communication device (for example standard input/output, in-memory buffers, sockets, pipes, etc.).

- https://docs.python.org/3/glossary.html#term-file-object

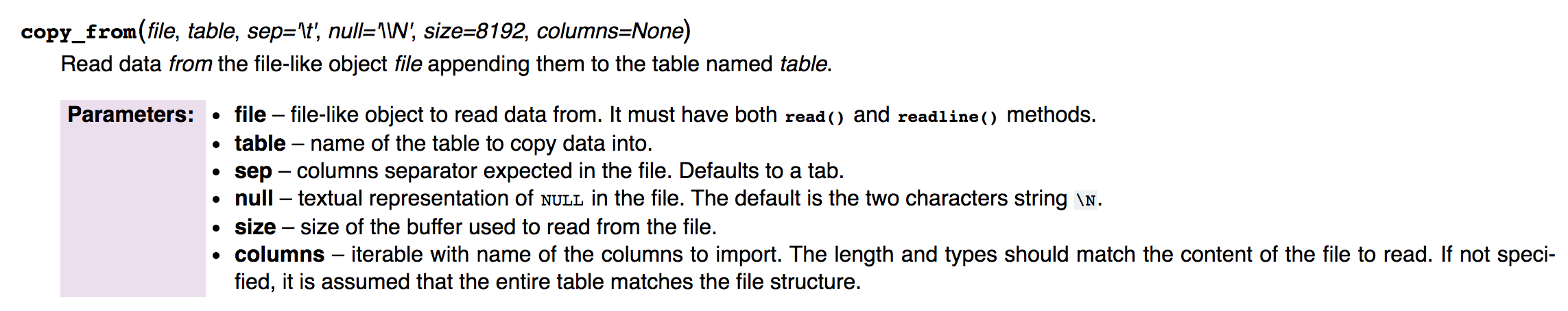

Psycopg2

- PostgreSQL adapter for use in Python apps/scripts/etc.

- reads data from a file-like object

- object must have read() and readline() methods

Extracting data from s3

With a Pre-Signed s3 URL and python requests, we can iterate over the data line by line using a generator

- Pre-Signed s3 URL: Authenticated url to access s3 file via HTTP requests

- python requests: Python library for HTTP requests

- Generator: A python class that behaves like an iterator

Definitions:

with requests.get(url, stream=True) as data:

# Data is now a generator we can access either

# in a loop or by calling data.next()Generator -> File

We have a generator...

...We need a file-like object with read() and readline() methods...

...Lets build one!