The role of A/B testing in a modern organisation

Narbeh Yousefian

Co Founder, Digdeep Digital

November 11, 2014

www.digdeepdigital.com.au

Be Predictive, Not Predictable

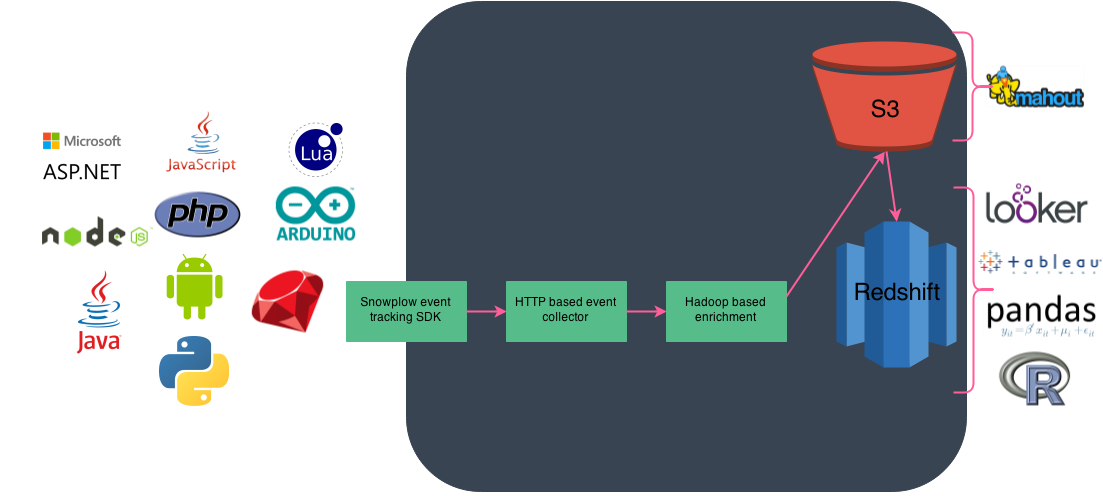

We are a global partner of Snowplow Analytics, an event analytics platform

We build on top of this data

We love Experiments

So much so, we have our own

User-level Iglu schema using data layer variables:

    {
      "$schema": "http://iglucentral.com/schemas/com.au.MYSITE.self-desc/schema/jsonschema/0-0-0#",
      "description": "Schema for user trackPageView",
      "self": {
        "vendor": "com.au.MYSITE",
        "name": "user",
        "format": "jsonschema",
        "version": "1-0-0"
      },
      "type": "object",
      "properties": {
        "event_id": { "type": "string" },
        "CookieID": { "type": "string" },
        "DeviceID": { "type": "string" },
        "expLab": {
          "type": "array",
          "items": { "type": "string" }
        }
      },
      "required": ["event_id", "CookieID", "DeviceID", "expLab"],
      "additionalProperties": false
    }

The matching custom context:

    {
      schema: "iglu:com.au.MYSITE/user/jsonschema/1-0-0",
      data: {
        event_id: 'xyz123abc',
        CookieID: 'ahwiwcobwcob',
        DeviceID: 'bmeoiheorubverovbev',
        expLab: ['A', 'B', 'C', 'D', 'E']
      }
    }

Event-level data + self-describing JSON schema pushing custom context data on every request
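To illustrate why the property names in the context must match the schema exactly, here is a minimal Python sketch. It is a simplified stand-in for a real JSON Schema validator, not how Snowplow or Iglu validate events: it only checks required keys, unexpected keys, and the two types used in the user schema. The `validate` helper is hypothetical.

```python
# Simplified stand-in for JSON Schema validation (an assumption for
# illustration): checks required keys, unexpected keys, and the string /
# array-of-strings types used by the user schema.
SCHEMA = {
    "properties": {
        "event_id": {"type": "string"},
        "CookieID": {"type": "string"},
        "DeviceID": {"type": "string"},
        "expLab": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["event_id", "CookieID", "DeviceID", "expLab"],
    "additionalProperties": False,
}


def validate(data, schema):
    """Return a list of human-readable validation errors (empty if valid)."""
    errors = []
    props = schema["properties"]
    for key in schema["required"]:
        if key not in data:
            errors.append(f"missing required property: {key}")
    if not schema.get("additionalProperties", True):
        for key in data:
            if key not in props:
                errors.append(f"unexpected property: {key}")
    for key, rule in props.items():
        if key not in data:
            continue
        value = data[key]
        if rule["type"] == "string" and not isinstance(value, str):
            errors.append(f"{key} must be a string")
        elif rule["type"] == "array":
            if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
                errors.append(f"{key} must be an array of strings")
    return errors


good = {
    "event_id": "xyz123abc",
    "CookieID": "ahwiwcobwcob",
    "DeviceID": "bmeoiheorubverovbev",
    "expLab": ["A", "B", "C", "D", "E"],
}
# Same payload, but with different casing on one key.
bad = {**{k: v for k, v in good.items() if k != "CookieID"}, "CookieId": "ahwiwcobwcob"}

print(validate(good, SCHEMA))
print(validate(bad, SCHEMA))
```

Because `additionalProperties` is false, a key whose casing differs from the schema fails twice: once as a missing required property and once as an unexpected one.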

We also push a custom key across all platforms so we can join 3rd-party systems

Redshift FTW!

Putting it together

A/B Testing

An A/B test involves testing two versions of a web page (the control and variation version) — with live traffic and measuring the effect each version has on your conversion rate.

https://blog.bigcommerce.com/10-ecommerce-ab-tests/

AKA: Split Testing, randomised control design, RBT, hypothesis testing, t (z) test, ANOVA, MANOVA, online control experiments, variant testing, parametric, non parametric, Spearman, Kendall Tau, Chi Square, Wilson Binomial, Wilcoxon, Mann-Whitney, McNemar's Test, and so on...

Talk Boundaries

Selling stuff online

Why Discrete Non Contractual?

Excellence in any undertaking can be found in details that most people barely notice, but those who know know. - President Abraham Lincoln

The Modern Organisation

This is not a modern organisation..

Why the Caveat?

"I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail."

It is important to distinguish between what A/B testing tools can do for you and where you need to draw a line in the sand.

Especially when you make strong claims about your impact on the bottom line.

An Example

Another example

My Favourite

So what's the beef?

When all you have is black-box 3rd-party JavaScript

    <script src="//cdn.optimizely.com/js/111111111.js"></script>

but under the hood, all it is doing is something like this

    chisq.test(matrix(c(A, B, C, D), ncol = 2, byrow = TRUE))
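As a rough sketch of what that one-liner computes, the following Python reproduces a chi-square test on a 2x2 table by hand, including the continuity correction that R applies by default to 2x2 tables. The function name and the counts are hypothetical, chosen only for illustration.

```python
from math import erfc, sqrt


def chi_square_2x2(a, b, c, d, yates=True):
    """Chi-square test of independence for the table [[a, b], [c, d]].

    Returns (statistic, p_value) with 1 degree of freedom.
    """
    observed = [[a, b], [c, d]]
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    total = a + b + c + d
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            diff = abs(observed[i][j] - expected)
            if yates:
                # Continuity correction, capped so it never overshoots zero.
                diff -= min(0.5, diff)
            statistic += diff ** 2 / expected
    # Survival function of the chi-square distribution with 1 df.
    p_value = erfc(sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: rows are variants, columns are (converted, not converted).
stat, p = chi_square_2x2(120, 880, 150, 850)
print(f"chi-square = {stat:.3f}, p = {p:.4f}")
```

Nothing in this calculation knows about price, seasonality or stock levels; it only compares two rows of counts.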

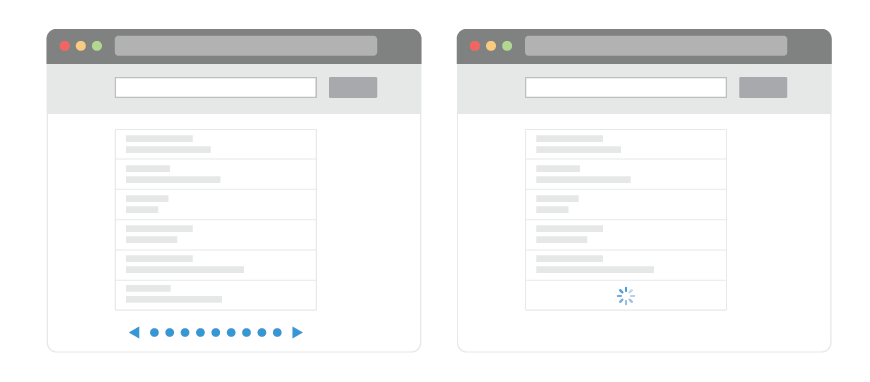

You will likely see a report resembling this

Yet, the examples shown all imply a direct relationship

A common scenario

Let us assume we are an Australia-wide online retailer, selling beds and accessories. As a business we have decided to be part of an online sale event; let's call it Click Frenzy.

100 SKUs of our inventory will be part of this sale and, as a business, we want to measure the impact of this sales initiative.

In support of this mega sale, we also decide to promote it within our own domain, emphasising the sale messaging across our website.

Lastly, to ensure we get the best click for our buck, we further decide to run an A/B test on the Buy Now messaging, introducing a variant during the sale period.

- It is a record month in sales

- Click Frenzy worked!

- Green button is clear winner

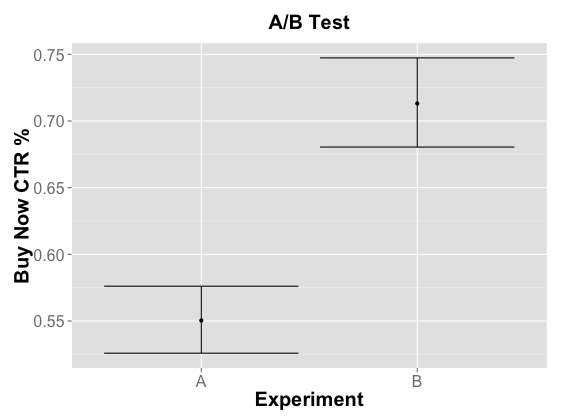

Comparing the difference between the buttons for a statistically significant sample we are 95% confident we are AWESOME!

(this happens more often than you think)

At best this is a large assumption that an interaction event can call the purchase event its own.

Put Simply,

Using some non parametric test as a measure of success is a leap of faith when your product page change is assumed to impact revenue

    library(Hmisc)    # binconf(): binomial confidence intervals
    library(ggplot2)  # qplot() and theme()

    # Wilson 95% intervals for each variant, expressed as percentages
    SE_Binom <- data.frame(binconf(c(1835, 1735), c(333389, 243302), method = "wilson") * 100)
    SE_Binom$Experiment <- c("A", "B")
    SE_Binom

    # Buy Now click-through rate: point estimate with error bars per variant
    qplot(ymin = Lower, ymax = Upper, x = Experiment, data = SE_Binom, geom = "errorbar") +
      labs(title = "A/B Test", y = "Buy Now CTR %") +
      geom_point(aes(y = PointEst)) +
      theme(
        axis.title.x = element_text(vjust = 0.5, face = "bold", colour = "#000000", size = 20),
        axis.text.x  = element_text(vjust = 0.5, size = 16),
        axis.title.y = element_text(vjust = 0.5, face = "bold", colour = "#000000", size = 20),
        axis.text.y  = element_text(vjust = 0.5, size = 16),
        plot.title   = element_text(vjust = 1.5, face = "bold", colour = "#000000", size = 20)
      )
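For readers without R to hand, the Wilson score interval behind `method="wilson"` can be sketched in a few lines of Python. This uses the textbook formula and is not guaranteed to match `binconf` to the last digit; treating the first vector as clicks and the second as impressions is an assumption based on the "Buy Now click-through rate" comment.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes, trials, confidence=0.95):
    """Return (point_estimate, lower, upper) for a binomial proportion."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = successes / trials
    denom = 1 + z ** 2 / trials
    centre = (p + z ** 2 / (2 * trials)) / denom
    half_width = z * sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return p, centre - half_width, centre + half_width


# Counts taken from the R snippet; the clicks/impressions reading is assumed.
for name, clicks, impressions in [("A", 1835, 333389), ("B", 1735, 243302)]:
    est, lower, upper = wilson_interval(clicks, impressions)
    print(f"{name}: {100 * est:.3f}% ({100 * lower:.3f}% to {100 * upper:.3f}%)")
```

The two intervals do not overlap, which is exactly the kind of result that gets read as a "clear winner", even though the interval only describes the click-through rate and says nothing about revenue.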

Useful test, wrong context

Might as well get this dude as your spokesman for insights

Let's open up the tool box

Stuff sales Org. cares about

- Demand: all potential customer sales, regardless of stock-outs

- Forecast Error (as opposed to forecast accuracy): RMSE

- Fill Rate: how many customer orders we are able to fill

- Gross Sales: total sales dollars prior to returns and markdowns

- Returns: $ amount of goods returned as a % of Gross Sales

- Discounts: $ amount of the discount a customer receives as a % of Gross Sales

- Net Sales: Gross Sales after returns and markdowns are subtracted

- Margin and Profit: (COGS, ...)

- Inventory: (sale/stock ratio, ...)
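A small sketch of how a few of these measures relate to one another, using made-up figures. The function names are hypothetical, and treating customer discounts as the "markdowns" subtracted from Gross Sales is an assumption for illustration.

```python
from math import sqrt


def sales_summary(gross_sales, returns, discounts, orders_received, orders_filled):
    """Derive the percentage measures and Net Sales from raw dollar totals."""
    return {
        "returns_pct": 100 * returns / gross_sales,
        "discounts_pct": 100 * discounts / gross_sales,
        # Assumption: discounts stand in for markdowns here.
        "net_sales": gross_sales - returns - discounts,
        "fill_rate_pct": 100 * orders_filled / orders_received,
    }


def rmse(actual, forecast):
    """Root mean squared error between actual and forecast values."""
    return sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


# Hypothetical monthly figures.
summary = sales_summary(
    gross_sales=200_000, returns=10_000, discounts=30_000,
    orders_received=1_000, orders_filled=950,
)
print(summary)
print(rmse(actual=[100, 120, 90], forecast=[110, 115, 95]))
```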

Stuff sales Org. already does

- SKU
- Price
- Units
- Competitor Price
- Weather
- Dummy Vars
- Seasonality
- Day of week
- Month
- Intervention
- ...

Forecast Model

- Product A == ARIMA(1,0,0)×(0,1,1)
- Product B == ARIMA(1,1,1)×(0,1,1)
- Product C == ARIMA(0,1,1)×(0,0,1)
- ...
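For readers unfamiliar with the notation, ARIMA(p,d,q)×(P,D,Q) describes a seasonal ARIMA model: non-seasonal autoregressive order, differencing and moving-average order, followed by their seasonal counterparts. As a sketch, and assuming a seasonal period $s$ that the slides do not state (for example $s = 7$ for daily data with a weekly pattern), Product A's ARIMA(1,0,0)×(0,1,1) model can be written as:

```latex
(1 - \phi_1 B)\,(1 - B^{s})\, y_t = (1 + \Theta_1 B^{s})\, \varepsilon_t
```

Here $B$ is the backshift operator ($B y_t = y_{t-1}$), $\phi_1$ is the non-seasonal autoregressive coefficient, $\Theta_1$ is the seasonal moving-average coefficient, and $\varepsilon_t$ is white noise. Each SKU gets its own orders and coefficients, which is why the forecast is a model per product rather than one model for the whole catalogue.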

Whatever the process, your variable selection will expose a model per SKU

Prioritise your operational Goals

We know that our forecasting model can predict with an overall X% error. That means, if your objective is to increase revenue, your goal is to reduce prediction error.

For any A/B test, you have a rolling start on experimental questions, because a set of efficient predictors has already been uncovered for each SKU.

Remember: "I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail."

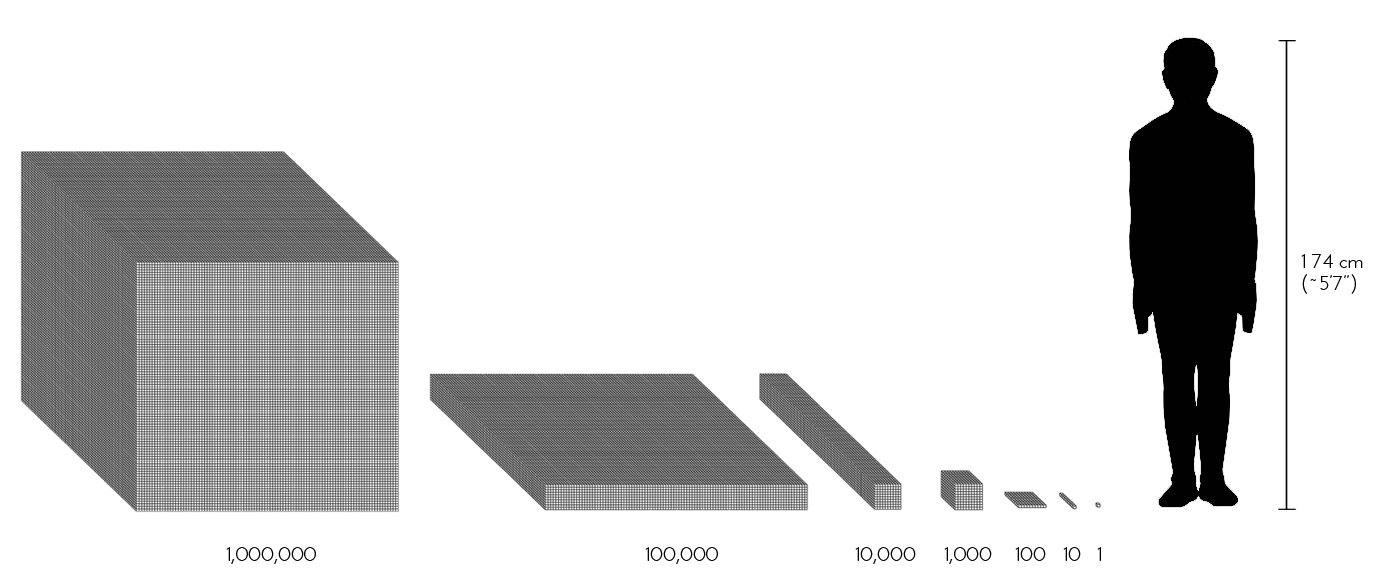

1M Users or 10K SKUs?

It is a bold claim that a button change has a direct relationship with revenue when so many of the sales drivers are already known from a better model.

This is a good thing though, because working together, you can test your way to better predictors.

Just don't forget to Block!

BLOCKING

for SKU, Price, and Dummy Vars such as day of week, seasonality, weather and Click Frenzy Sale / Not Sale; we also introduced the Buy Now variant, ...

Product A sample

Product B sample

Product A == ARIMA(1,0,0)×(0,1,1)

Product B == ARIMA(1,1,1)×(0,1,1)

Product C == ARIMA(0,1,1)×(0,0,1)

...

Blindly comparing Product A at $100 with other SKUs ranging from $20 to $2000 will fail if you only look at an overall group comparison.

If our forecasting model is by SKU, blocking means our experiment becomes a set of concurrent tests: every SKU is a mini experiment in itself.
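A minimal sketch of what blocking by SKU could look like in practice: the button test is evaluated separately inside each SKU rather than pooled across the catalogue. Everything here is hypothetical (the SKU names, the counts, the `min_visitors` threshold and the choice of a pooled two-proportion z-test); a full treatment would also block on price band, day of week and the other predictors listed above.

```python
from collections import defaultdict
from math import erfc, sqrt


def two_proportion_p_value(x_a, n_a, x_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (x_a + x_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (x_b / n_b - x_a / n_a) / se
    return erfc(abs(z) / sqrt(2))


def run_blocked_tests(rows, min_visitors=1000, alpha=0.05):
    """Run one A/B comparison per SKU (the block) and label each outcome."""
    blocks = defaultdict(dict)
    for sku, variant, conversions, visitors in rows:
        blocks[sku][variant] = (conversions, visitors)
    results = {}
    for sku, arms in blocks.items():
        (x_a, n_a), (x_b, n_b) = arms["A"], arms["B"]
        if min(n_a, n_b) < min_visitors:
            results[sku] = "insufficient sample"
            continue
        p = two_proportion_p_value(x_a, n_a, x_b, n_b)
        results[sku] = "significant" if p < alpha else "not significant"
    return results


# Hypothetical per-SKU counts: (sku, variant, conversions, visitors).
rows = [
    ("bed-frame", "A", 50, 5000), ("bed-frame", "B", 90, 5000),
    ("pillow", "A", 40, 4000), ("pillow", "B", 42, 4000),
    ("mattress", "A", 3, 200), ("mattress", "B", 5, 200),
]
results = run_blocked_tests(rows)
print(results)
```

Running many small tests like this means plenty of SKUs will simply not have enough traffic to say anything, which is an honest outcome rather than a failure.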

Concurrent Testing

Example Outcome

Of the 100 SKUs introduced to the Click Frenzy sale, only 20 performed significantly better as a result of Click Frenzy.

60 out of 100 SKUs did not have enough sample to test the button variation; of the 40 that were tested, only 1 SKU performed significantly better.

Product A sample

Product B sample

Take Away

In a setting heavily driven by sales, A/B Testing is a complementary tool in the toolbox

Rather than modelling users, we flip it and treat the test as a product input

Practicality is the order of the day, support what is already known

Final Thoughts

Be honest about your internal process: data and experimentation must be baked into the business operations. There is no point hacking A/B Testing onto a waterfall process; you will lose.

Incremental Testing, Long term objectives. The value lies in your contribution to the long term cause. What is your worth to a billion dollar organisation when you reduce error by 1% over the course of the year?

100 failed tests mean nothing when you are fast to act, and are sensible about your goals.

"My point is not that infinite scroll is stupid. It may be great on your website. But we should have done a better job of understanding the people using our website" – Dan McKinley, Principal Engineer at Etsy

Thank you