Productionizing ML Systems without fear or heroism

Nastasia Saby

@saby_nastasia

Examples of ML Systems I've worked on:

- Predicting breakdowns

- Anomaly detection

- Sales models

- etc

#SupervisedLearning #UnsupervisedLearning

Looking at best practices in software engineering

Data monitoring

Unit tests for data

Data versioning

Looking at best practices in software engineering

Predictive systems have a lot to learn from traditional programming

DataTalks.Club @saby_nastasia

- Test code

- Version code

- Monitor code

But predictive systems are different from traditional programming

Data

Function

Program

Results

Data

Function

Result

Program
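
The contrast between the two diagrams can be sketched in a few lines. In traditional programming a person writes the function; in a predictive system the function is recovered from data and known results. This is only an illustration: the temperature conversion and the `fit_line` helper are assumptions chosen for the example, not something from the talk.

```python
# Traditional programming: a human writes the function, data goes in, results come out.
def celsius_to_fahrenheit(celsius):
    return celsius * 9 / 5 + 32


# Predictive system: data and results go in, the function comes out.
def fit_line(xs, ys):
    """Ordinary least squares for a single feature; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


data = [0.0, 10.0, 20.0, 30.0]
results = [celsius_to_fahrenheit(c) for c in data]

# The "program" is now whatever the data and results imply.
slope, intercept = fit_line(data, results)


def learned_function(celsius):
    return slope * celsius + intercept
```

Change the data and the learned function changes with it, even though no line of code was edited.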

Data + models > Code

- Test code, data and models

- Version code, data and models

- Monitor code, data and models

Using best practices to keep adding value at a steady, sustainable pace

Agile software development principles

"Sustainable development, able to maintain a constant pace"

Software crafting manifesto

"Not only responding to change, but also steadily adding value"

Reproducibility

No fear or "heroism"

Our goals

- To add value at a sustainable pace

- To be able to reproduce a bug or a past prediction

#serenity #withoutFear #withoutHeroism

Our solution

Start from best practices in traditional programming, then go beyond them to account for the specifics of ML systems

Version data

Why should you version your data?

Data > Code

Doing it yourself

- year = 2019

- month = 11

- month = 12

Doing it yourself by saving state or events

- year = 2019

- month = 11

- month = 12
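
A do-it-yourself layout like the one above can be sketched with append-only partitions: each month is written once and never overwritten, so any past view of the data can be rebuilt. The directory naming, the JSON file format, and the helper names `write_partition` and `read_as_of` are assumptions made for this sketch.

```python
import json
import tempfile
from pathlib import Path


def write_partition(root, year, month, rows):
    """Write one month of data; refuse to overwrite an existing partition."""
    partition = root / f"year={year}" / f"month={month:02d}"
    if partition.exists():
        raise FileExistsError(f"{partition} already written; partitions are immutable")
    partition.mkdir(parents=True)
    path = partition / "data.json"
    path.write_text(json.dumps(rows))
    return path


def read_as_of(root, year, month):
    """Rebuild the dataset as it looked at the end of the given month."""
    rows = []
    for path in sorted(root.glob("year=*/month=*/data.json")):
        part_year = int(path.parent.parent.name.split("=")[1])
        part_month = int(path.parent.name.split("=")[1])
        if (part_year, part_month) <= (year, month):
            rows.extend(json.loads(path.read_text()))
    return rows


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    write_partition(root, 2019, 11, [{"sales": 10}])
    write_partition(root, 2019, 12, [{"sales": 12}])
    november_view = read_as_of(root, 2019, 11)
    december_view = read_as_of(root, 2019, 12)
```

Because nothing is overwritten, a model trained in November can be retrained later on exactly the rows it saw at the time.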

With a tool

#DeltaLake

DEMO

Version data

Version models

Version code

=

Reproducibility
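
One way to tie the three versions together is a small manifest stored next to every prediction run. The sketch below is an assumption about how this could look, not the approach shown in the demo: the `build_manifest` helper and its field names are hypothetical, and the byte strings stand in for a real dataset and a real serialized model.

```python
import hashlib


def fingerprint(payload):
    """Content hash: the same bytes always give the same identifier."""
    return hashlib.sha256(payload).hexdigest()


def build_manifest(code_version, data_bytes, model_bytes):
    """Record which code, data, and model produced a prediction."""
    return {
        "code_version": code_version,  # e.g. a git commit hash
        "data_sha256": fingerprint(data_bytes),
        "model_sha256": fingerprint(model_bytes),
    }


# Placeholder inputs for illustration only.
manifest = build_manifest("abc123", b"year=2019,month=11", b"model-weights-v1")
same_run = build_manifest("abc123", b"year=2019,month=11", b"model-weights-v1")
new_data = build_manifest("abc123", b"year=2019,month=12", b"model-weights-v1")
```

If any of the three fields differs between two runs, the runs are not expected to give the same prediction, which narrows down where to look when reproducing a bug.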

Test data

Data can and will change

#PyDeequ, #GreatExpectations
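
The idea behind unit tests for data can be sketched in plain Python. This is deliberately not the PyDeequ or Great Expectations API; those tools offer much richer versions of the same kinds of checks. The column names and the temperature bounds are assumptions for the example.

```python
def is_complete(rows, column):
    """Every row has a non-null value for the column."""
    return all(row.get(column) is not None for row in rows)


def is_unique(rows, column):
    """No value appears twice in the column."""
    values = [row.get(column) for row in rows]
    return len(values) == len(set(values))


def is_in_range(rows, column, low, high):
    """Non-null values fall inside [low, high]; nulls are left to is_complete."""
    values = [row[column] for row in rows if row.get(column) is not None]
    return all(low <= value <= high for value in values)


rows = [
    {"machine_id": "A", "temperature": 71.5},
    {"machine_id": "B", "temperature": 68.0},
    {"machine_id": "C", "temperature": None},
]

report = {
    "machine_id is complete": is_complete(rows, "machine_id"),
    "machine_id is unique": is_unique(rows, "machine_id"),
    "temperature is complete": is_complete(rows, "temperature"),
    "temperature in [0, 150]": is_in_range(rows, "temperature", 0, 150),
}
failed_checks = [name for name, passed in report.items() if not passed]
```

Running such checks on every new batch turns a silent change in the data into an explicit, named failure.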

DEMO

Different strategies to deal with "bad" data
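
The slide does not list the strategies, so the two sketched here are illustrative assumptions: quarantine the bad rows and continue with the good ones, or stop the run when too large a share of the batch is bad. The validity rule, the `amount` column, and the thresholds are hypothetical.

```python
def is_valid(row):
    """Hypothetical rule: amount must be present and non-negative."""
    amount = row.get("amount")
    return amount is not None and amount >= 0


def quarantine(rows, predicate):
    """Strategy 1: set bad rows aside and keep going with the good ones."""
    good = [row for row in rows if predicate(row)]
    bad = [row for row in rows if not predicate(row)]
    return good, bad


def fail_if_too_many_bad(rows, predicate, max_bad_ratio):
    """Strategy 2: stop the pipeline when the share of bad rows is too high."""
    _, bad = quarantine(rows, predicate)
    ratio = len(bad) / len(rows) if rows else 0.0
    if ratio > max_bad_ratio:
        raise ValueError(f"{ratio:.0%} of rows are invalid (limit {max_bad_ratio:.0%})")
    return ratio


batch = [{"amount": 10}, {"amount": None}, {"amount": -5}, {"amount": 7}]
good_rows, bad_rows = quarantine(batch, is_valid)
```

Which strategy fits depends on the cost of a missing prediction versus the cost of a wrong one.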

Monitor data

Why should you monitor your data?

#modelDrift

#dataDrift

Once upon a time, a virus was born in Wuhan

How can you protect yourself from model and data drift?

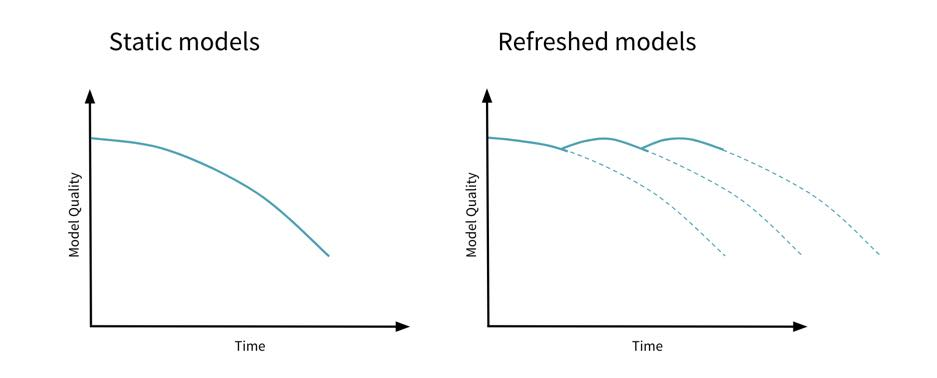

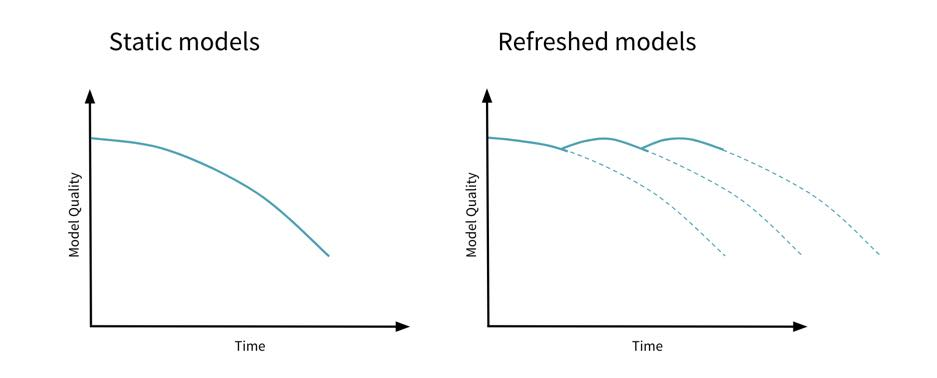

Retraining

Monitor retraining

With retrainings

Monitor real life

Monitor data

Monitor data

- Statistical distances

- Statistical tests

=> Still an open research area
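
As one concrete instance of a statistical distance, the sketch below computes the two-sample Kolmogorov-Smirnov statistic: the largest gap between the empirical distribution functions of a reference sample and a current sample. The choice of this particular distance and the sample values are assumptions for illustration; the talk does not single out one method.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the two empirical CDFs (0 = identical, 1 = disjoint)."""
    ref = sorted(reference)
    cur = sorted(current)
    largest_gap = 0.0
    for value in sorted(set(ref) | set(cur)):
        cdf_ref = bisect_right(ref, value) / len(ref)
        cdf_cur = bisect_right(cur, value) / len(cur)
        largest_gap = max(largest_gap, abs(cdf_ref - cdf_cur))
    return largest_gap


training_sample = [1, 2, 3, 4]
no_drift = ks_statistic(training_sample, [1, 2, 3, 4])
some_drift = ks_statistic(training_sample, [3, 4, 5, 6])
full_drift = ks_statistic(training_sample, [10, 11, 12, 13])
```

Turning the statistic into an alert still requires picking a threshold or a significance test, which is where much of the difficulty lies.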

NO DEMO

Custom

Azure Data Drift

Alibi-detect

EvidentlyAI

- Statistical tests => black boxes

- Data drift techniques will be popularized soon (I hope)

So what can you do?

Unit tests for data + Model drift detection

DEMO

Then:

- Offline model drift

- Monitoring real life (business impact)

- Unit tests for data

To monitor model drift
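
Offline model drift detection can be sketched as comparing the model's error on recent labeled data with the error measured at training time. The metric (mean absolute error), the 20% tolerance, and all the numbers below are assumptions for the example, not values from the talk.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute gap between what happened and what the model said."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def has_model_drift(baseline_error, recent_error, tolerance=0.2):
    """Flag drift when recent error exceeds the baseline by more than the tolerance."""
    return recent_error > baseline_error * (1 + tolerance)


# Error measured when the model was validated.
baseline_error = mean_absolute_error([10, 20, 30], [11, 19, 31])

# Error measured later, once the true outcomes for recent predictions are known.
recent_error = mean_absolute_error([10, 20, 30], [15, 14, 37])

drift_detected = has_model_drift(baseline_error, recent_error)
```

This only works once true outcomes are available, which is why it is paired with unit tests for data and business-level monitoring that can react sooner.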

MaltAcademy @saby_nastasia

Looking at best practices from software engineering

Model Drift monitoring

Unit tests for data

Data versioning