Methods, Tools, and Use Cases of Deploying Machine Learning Models to Production

Nawfal Tachfine

Data Scientist

About

Company

- hybrid business model

- we buy and sell cars online

- 28 dealerships nationwide

- a car sold every 5 minutes

- 400+ employees

Data Team

- 1 chief data officer

- 1 manager

- 3 data engineers

- 6 data scientists

- 1 architect

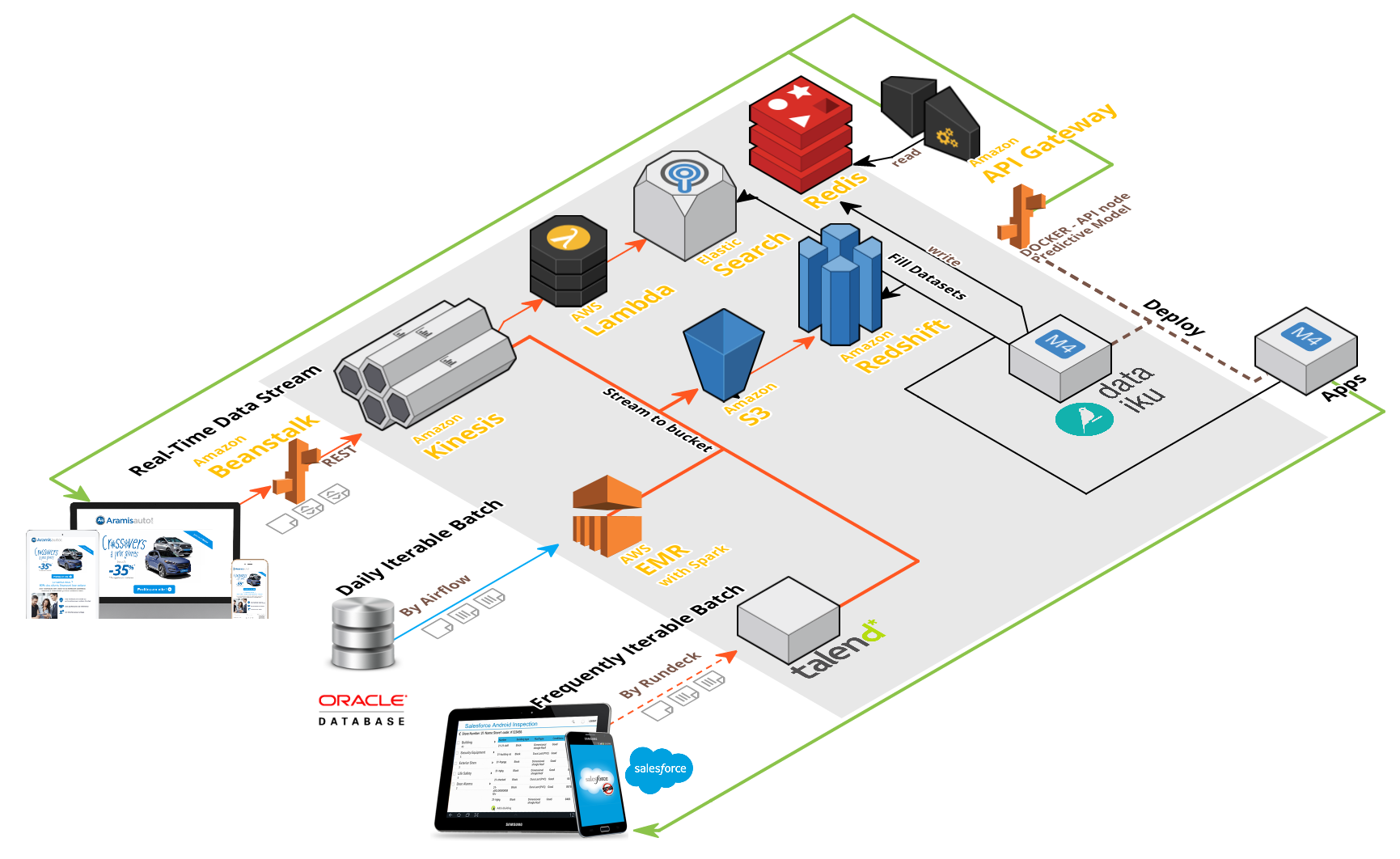

Data Science @AramisAuto

- Mission - leveraging data to create business value

- Approach

  - measurement based

  - business driven

  - iterative (business problem → production < 3 months)

- Perspectives

  - Products

    - Which cars to buy?

    - At which price to sell?

  - Customers

    - Which leads to call?

    - How to distribute acquisition resources?

  - Products

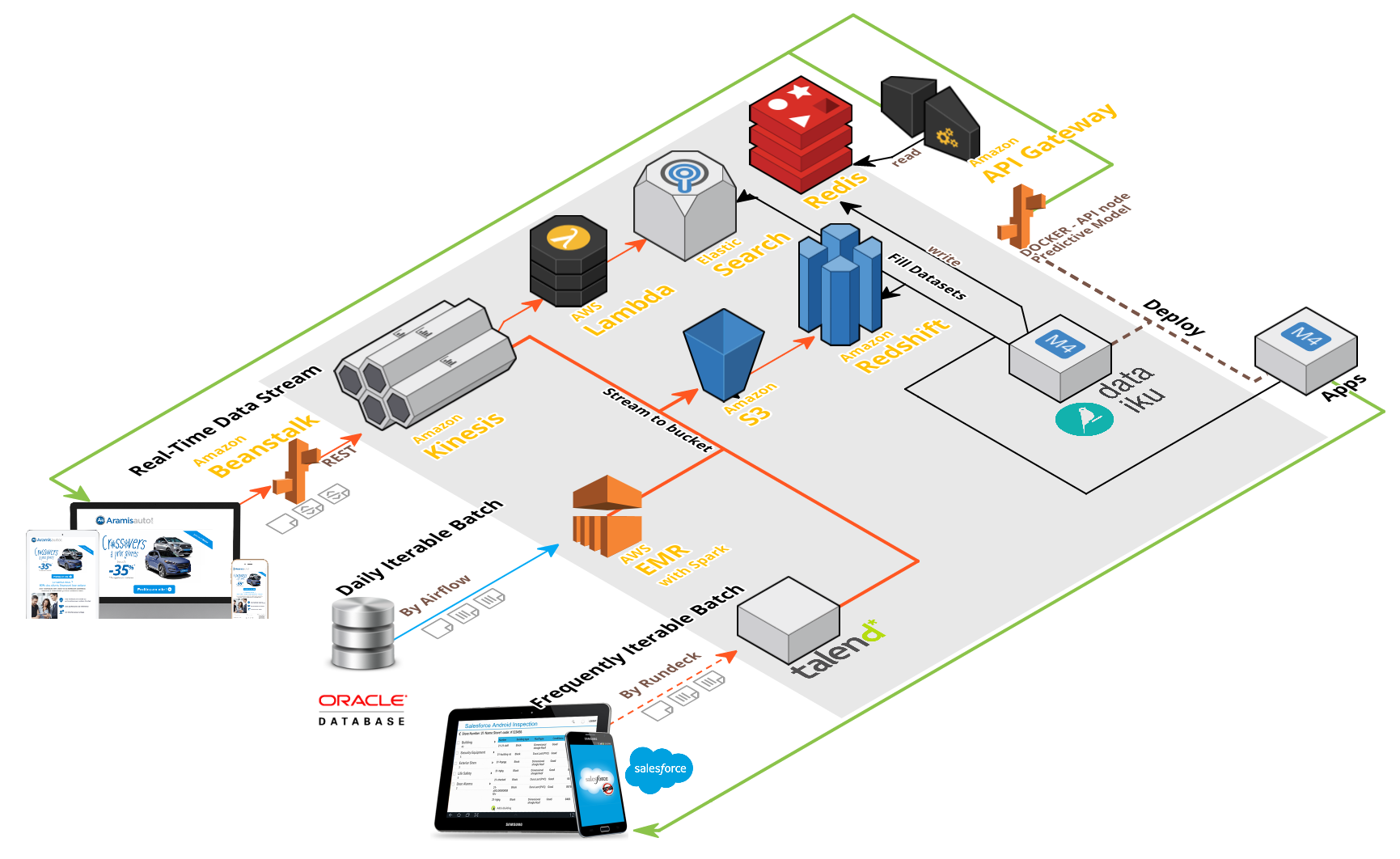

Infrastructure

METHODOLOGY

Typical Data Science Workflow

- formulate problem

- get data

- build and clean dataset

- study dataset

- train model

  - feature selection

  - algorithm selection

  - hyperparameter optimization

Then what?

Enter Industrialization...

Goal

automatically serve predictions to any given information system

Constraints

- Data - source refresh rate, enrichment

- Model - stability, maintainability

- Operations - scalability, resilience, availability

- Resources - no dedicated developers

Philosophy

start from final use case and work your way back to data preparation

Conception

When will predictions be computed?

- On demand - client/server architecture

  - Complete features → REST API

  - Partial features → enrichment necessary

    - Lookup sufficient → REST API + key-value data store

    - Complex enrichment from database → API usable but slow

- Trigger-based (time/event) → batch mode

  - Computationally efficient

  - Can serve predictions directly to destination
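The "lookup sufficient" branch can be illustrated with a minimal sketch. The feature names, the `car_id` key, and the `enrich` helper are hypothetical (they are not from the slides), and a plain dict stands in for the key-value data store so that the example runs without external services.

```python
from typing import Mapping

# Hypothetical feature names; the real model inputs are not given in the slides.
REQUEST_FEATURES = ("mileage", "age")
LOOKUP_FEATURES = ("segment", "avg_market_price")


def enrich(partial: Mapping[str, object],
           store: Mapping[str, Mapping[str, object]]) -> dict:
    """Complete a partial feature set with a single key-value lookup."""
    key = str(partial["car_id"])
    looked_up = store.get(key)
    if looked_up is None:
        raise KeyError(f"no enrichment data for key {key!r}")
    # Features sent by the caller, followed by features read from the store.
    full = {name: partial[name] for name in REQUEST_FEATURES}
    full.update({name: looked_up[name] for name in LOOKUP_FEATURES})
    return full


# A plain dict stands in for the key-value data store (an assumption).
store = {"42": {"segment": "suv", "avg_market_price": 18500.0}}
full_features = enrich({"car_id": 42, "mileage": 60000, "age": 4}, store)
print(full_features)
```

Because the enrichment is a single read by key, it adds little latency to the API call, which is what makes this branch suitable for on-demand serving.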

Design Patterns

- Think about your production use case as early as possible.

- Your data pre-processing pipelines must be deterministic and reproduce the same conditions as in training.

  - Make your transformations robust against abnormal values.

  - Variable order matters (cf. scikit-learn).

- Log as much as you can.

- Make sure your development and production environments are identical (esp. framework versions!).

- Write clean code: your models will evolve (re-training, new features, etc.).

- Keep things simple - stability trumps complexity.
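As an illustration of the pre-processing points above, here is a minimal sketch of a deterministic preparation step. The feature names, fallback values, and bounds are hypothetical; in practice they would be computed once on the training set and shipped with the model.

```python
import math

# Hypothetical feature list; the order is fixed once, at training time.
FEATURE_ORDER = ("age", "mileage", "engine_power")
# Hypothetical fallbacks and valid ranges (e.g. training-set medians and limits).
DEFAULTS = {"age": 5.0, "mileage": 80000.0, "engine_power": 110.0}
BOUNDS = {"age": (0.0, 40.0), "mileage": (0.0, 500000.0), "engine_power": (30.0, 600.0)}


def prepare(record: dict) -> list:
    """Turn a raw record into a feature row, always in FEATURE_ORDER."""
    row = []
    for name in FEATURE_ORDER:
        try:
            value = float(record.get(name))
        except (TypeError, ValueError):
            value = math.nan  # missing or unparseable input
        if math.isnan(value) or math.isinf(value):
            value = DEFAULTS[name]  # fall back to a fixed, known value
        low, high = BOUNDS[name]
        row.append(min(max(value, low), high))  # clip out-of-range values
    return row


row_a = prepare({"mileage": "120000", "age": 3, "engine_power": None})
row_b = prepare({"engine_power": 90, "age": -2, "mileage": "n/a"})
print(row_a, row_b)
```

The key order of the incoming record has no effect on the output, so the model always receives its columns in the order used during training.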

TOOLS

Dataiku

- DSS Scenarios

  - customizable (cf. global variables)

  - simple to integrate

  - API, triggers, and reporters → easy scheduling and monitoring

- API Scoring Node

  - easy to deploy and update models

  - lightweight

  - compatible with DSS visual models

- Automation Node

  - data-change triggers

  - built-in orchestration

  - advanced monitoring

Toolbox

Flask - a Python web application microframework

- simple

- lightweight

- powerful

Serialization - persisting models on disk

- pickle / joblib dump

- POJO objects for JVM-based environments

- PMML
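A minimal sketch of the pickle option, under a few assumptions: a plain dict of coefficients stands in for a fitted estimator, and the `save_model` / `load_model` helpers are hypothetical. Storing the interpreter version next to the model is one possible way to catch a mismatch between the training and production environments at load time.

```python
import pickle
import sys
import tempfile
from pathlib import Path


def save_model(model, path):
    """Persist the model together with the Python version used to create it."""
    payload = {"model": model, "python_version": tuple(sys.version_info[:2])}
    with open(path, "wb") as handle:
        pickle.dump(payload, handle)


def load_model(path):
    """Load a model, refusing to run if the Python version differs."""
    with open(path, "rb") as handle:
        payload = pickle.load(handle)  # only unpickle files you trust
    if payload["python_version"] != tuple(sys.version_info[:2]):
        raise RuntimeError("model was serialized under a different Python version")
    return payload["model"]


# Toy stand-in for a fitted estimator: coefficients of a linear model.
toy_model = {"coef": [2.0, -1.0], "intercept": 0.5}

with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.pkl"
    save_model(toy_model, model_path)
    restored = load_model(model_path)

print(restored)
```

The same idea could be extended to record the versions of the modeling libraries in use, since pickled estimators are generally not guaranteed to load correctly across library versions.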

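Flask scoring endpoint (example):

    from flask import Flask, jsonify, request

    app = Flask(__name__)

    # `model` (a fitted estimator) and `prepare` (the pre-processing function)
    # are assumed to be loaded or defined elsewhere in this module.

    @app.route('/api/v1.0/aballone', methods=['POST'])
    def index():
        query = request.get_json()['inputs']
        data = prepare(query)
        # predict() typically returns a NumPy array, which jsonify cannot
        # serialize directly, so convert it to a plain list first
        output = model.predict(data).tolist()
        return jsonify({'outputs': output})

    if __name__ == '__main__':
        app.run(host='0.0.0.0')

Toolbox (cont.)

- JSON - universal I/O format

- Docker - soft virtualization

  - no more dependency problems

  - runs (almost) anywhere

- AWS EC2 - flexible cloud computing resources

  - spot instances for cost optimization

- AWS Elastic Beanstalk - web app deployment and scaling

  - (very) easy to use

  - (relatively) cheap

  - provides auto-scaling and load-balancing

- Redis - powerful in-memory key-value data store

- Elasticsearch - NoSQL text search engine for JSON documents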

USE CASES

Use case 1 - Pricing

- Goal - providing price estimates for clients' used cars online

- Use - web service callable by website

- Features - 14 car descriptors (brand, model, age, energy, trim, mileage, transmission, engine)

- Model/Performance - XGBoost regression, test MAPE <5%

- Deployment Specs - response <100ms, JSON I/O

- Solution - dockerized API Scoring Node on AWS Elastic Beanstalk, manual model deployment

[Diagram: query → Dataiku API Node → prediction]
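For reference, the MAPE metric quoted above can be computed as in the following sketch. The prices are made-up illustration values, not results from the talk.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, expressed in percent."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


# Made-up prices for illustration only.
actual_prices = [10000.0, 20000.0, 8000.0]
predicted_prices = [10500.0, 19000.0, 8000.0]
error = mape(actual_prices, predicted_prices)
print(f"MAPE: {error:.2f}%")
```

Note that MAPE is undefined when an actual value is zero, which is not a concern for car prices but matters for other targets.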

Use case 2 - Inventory Turnover

- Goal - predicting how long a car would take to be sold

- Use - web service callable by internal quote generation tool

- Features - car characteristics, competition descriptors (25 total)

- Model/Performance - XGBoost classification, test accuracy 76%

- Deployment Specs - response <5s, JSON I/O

- Solution - dockerized Flask API for enrichment from AWS Redshift, dockerized API Scoring Node on AWS Elastic Beanstalk

[Diagram: Backoffice, Master API, Redshift, and API Node, exchanging query, partial features, features, full features, and predictions]
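The enrich-then-score flow of this use case can be sketched as follows. Everything here is an assumption made for illustration: the function names, the feature names, and the class labels are hypothetical, and the two stub callables stand in for the database query and the scoring service.

```python
import time


def score_with_enrichment(partial, fetch_extra, score, budget_seconds=5.0):
    """Enrich partial features, score them, and report the time spent."""
    started = time.monotonic()
    full = {**partial, **fetch_extra(partial)}  # partial + enrichment features
    prediction = score(full)
    elapsed = time.monotonic() - started
    return {
        "prediction": prediction,
        "elapsed_seconds": elapsed,
        "within_budget": elapsed < budget_seconds,
    }


def fake_fetch_extra(partial):
    """Stub for the database enrichment query (hypothetical feature)."""
    return {"similar_cars_in_stock": 3}


def fake_score(full):
    """Stub for the scoring service (hypothetical class labels)."""
    return "slow" if full["similar_cars_in_stock"] > 2 else "fast"


result = score_with_enrichment({"brand": "x", "mileage": 50000},
                               fake_fetch_extra, fake_score)
print(result)
```

Passing the enrichment and scoring steps in as callables keeps the orchestration testable without a database or a running scoring service.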

Use case 3 - Lead Scoring

- Goal - detecting leads most likely to convert into customers

- Use - sorting lead portfolios for sales representatives in Salesforce

- Features - 11 offline (location, email, phone calls, ...) + 47 online (visits, searches, viewed pages, quotes, vehicle preferences, ...)

- Model/Performance - scikit-learn RandomForest classification (unbalanced), 75% of test set targets in top 10% scores

- Deployment Specs - history-based enrichment, fresh data every 15min

- Solution - Flask app in batch mode, run in a loop; data preparation in Redshift; results synced to Salesforce; deployed on EC2 with Rundeck

[Diagram: Redshift and Scoring App, exchanging leads, features, and scores]
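The performance figure above ("share of test set targets in the top X% of scores") can be computed as in this sketch. The function name and the toy data are illustrative assumptions, not the author's evaluation code.

```python
import math


def capture_rate(scores, targets, top_fraction):
    """Share of all positive targets found among the top-scored fraction."""
    total_positives = sum(targets)
    if total_positives == 0:
        raise ValueError("no positive targets to capture")
    n_top = math.ceil(len(scores) * top_fraction)
    # Rank records from highest to lowest score.
    ranked = sorted(zip(scores, targets), key=lambda pair: pair[0], reverse=True)
    captured = sum(target for _, target in ranked[:n_top])
    return captured / total_positives


# Toy data: 10 leads, 3 of which converted (target = 1).
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2, 0.2, 0.1, 0.1, 0.05]
targets = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
rate = capture_rate(scores, targets, 0.2)
print(f"{rate:.0%} of converted leads are in the top 20% of scores")
```

For imbalanced classification this kind of ranking metric is often more informative than accuracy, because it reflects how the scores are used: sales representatives work through the top of the sorted list.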

Use case 4 - Visitor Scoring

- Goal - detecting visitors most likely to convert into leads

- Use - selecting visitors to target in SEM/Retargeting campaigns

- Features - online behavior descriptors + acquisition channels interactions

- Model/Performance - scikit-learn RandomForest classification (unbalanced), 79% of test set targets in top 3% scores

- Deployment Specs - history-based enrichment, fresh data every 15min

- Solution - [TEST] DSS daily Scenario, Redshift dataprep, sync to Redis

[Diagram: Redshift and DSS Scenario, with flows labeled lookup, score, features, and scores]
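A minimal sketch of the batch side of this design: scores are precomputed and written to a key-value store, so that consumers only need a lookup. The key format, the `page_views` feature, and the toy scoring function are hypothetical, and a plain dict stands in for the key-value store.

```python
import json


def run_batch(visitors, score, store):
    """Score every visitor and write one JSON value per visitor id."""
    written = 0
    for visitor in visitors:
        key = f"visitor_score:{visitor['visitor_id']}"  # hypothetical key format
        store[key] = json.dumps({"score": score(visitor["features"])})
        written += 1
    return written


def lookup(store, visitor_id, default=None):
    """Read a precomputed score; return `default` for unknown visitors."""
    raw = store.get(f"visitor_score:{visitor_id}")
    return default if raw is None else json.loads(raw)["score"]


def toy_score(features):
    """Stand-in for the real model (hypothetical feature)."""
    return min(1.0, 0.1 * features["page_views"])


store = {}  # a plain dict stands in for the key-value store
visitors = [
    {"visitor_id": "a1", "features": {"page_views": 3}},
    {"visitor_id": "b2", "features": {"page_views": 12}},
]
n_written = run_batch(visitors, toy_score, store)
print(n_written, lookup(store, "a1"), lookup(store, "unknown"))
```

Since all computation happens in the batch run, serving a score is a single read, at the cost of scores being only as fresh as the last run.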

Infrastructure (again)

@NawfalTachfine