EVOLVE

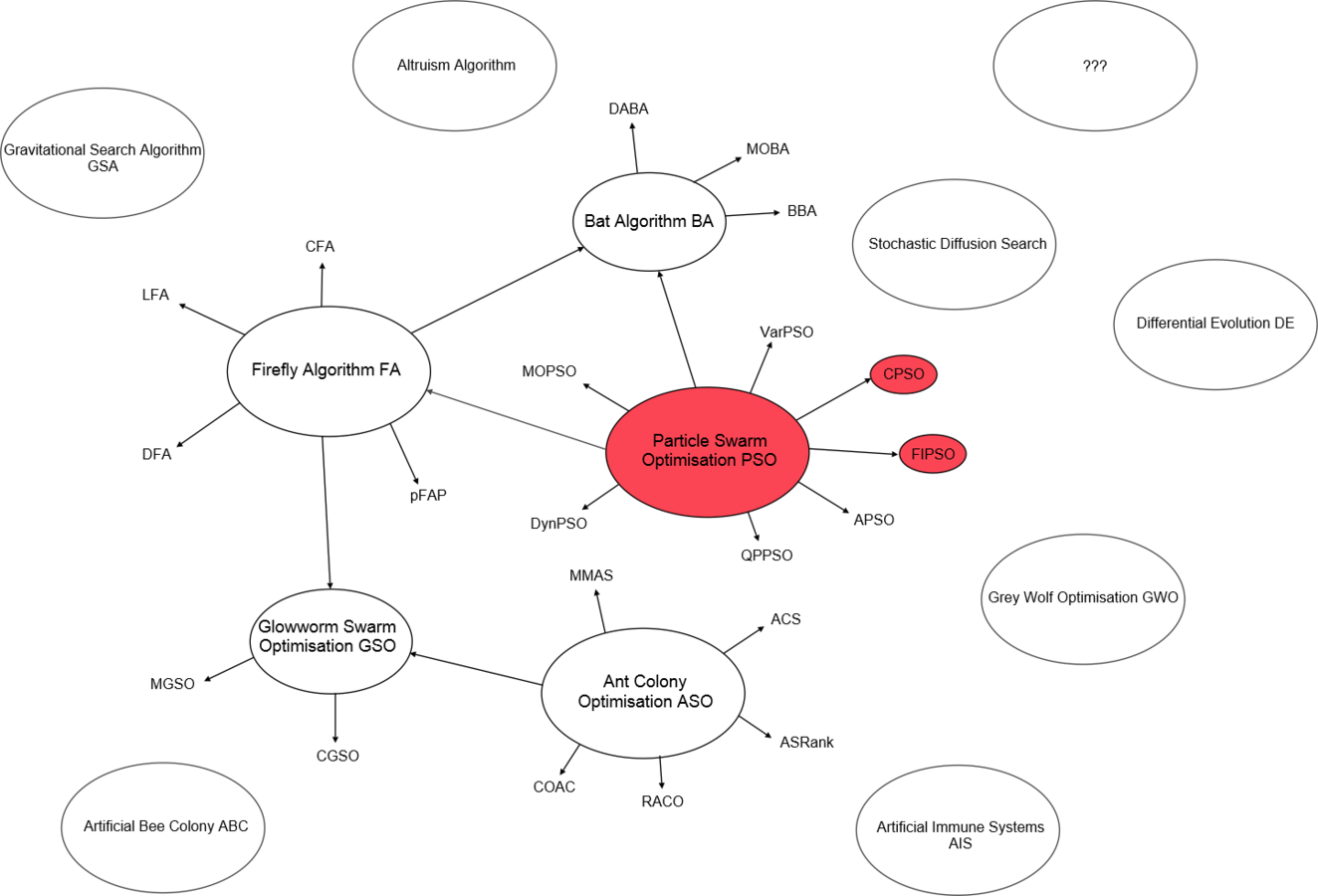

Particle Swarms for optimisation

Nicholas Browning

A Multi-objective Evolutionary Toolbox

Introduction

- Swarm Intelligence

- Applications

- Introduction to Particle Swarm Optimisation (PSO)

  - The Idea

  - The Implementation (Vanilla)

  - Topology

- PSO Variants

  - Constriction PSO (CPSO)

  - Fully Informed PSO (FIPSO)

- Benchmark Functions

- Benchmark Analysis

- Cool Videos

Swarm Intelligence

An artificial intelligence technique based on the study of collective behaviour in decentralised, self-organised systems.

A population of simple "agents" capable of interacting locally with one another and with their environment.

There is usually no centre of control: local interactions between agents lead to emergent global behaviour.

Sunderland A.F.C. PSO?

Five tenets of swarm intelligence, proposed by Millonas [1]:

- Proximity: the population can carry out simple space and time computations

- Quality: the population responds to quality factors in the environment

- Diverse Response: activities should not be confined to excessively narrow channels

- Stability: the mode of behaviour should not change every time the environment changes

- Adaptability: the mode of behaviour must be able to change when it is "worth" the computational price

[1] M. M. Millonas. Swarms, Phase Transitions, and Collective Intelligence. Artificial Life III, Addison-Wesley, Reading, MA, 1994.

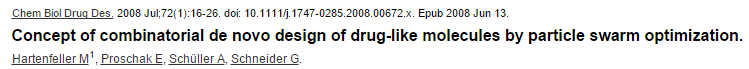

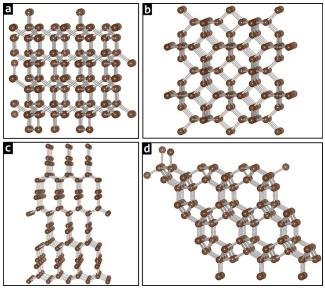

Applications

Also used for crowd and flock animation in films: Batman, LOTR, The Lion King, Happy Feet...

Particle Swarm Optimisation

First proposed by J. Kennedy and R. Eberhart [2], with the intent of imitating the social behaviour of animals that display herding or swarming characteristics.

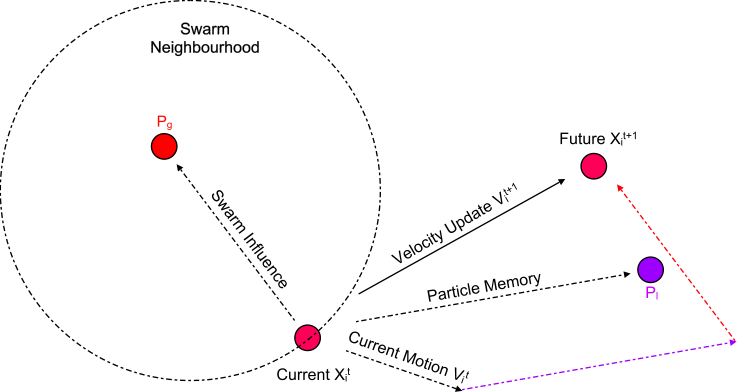

Each individual in the swarm learns both from its own past experience (cognitive behaviour) and from its surrounding neighbours (social behaviour).

Optimisation was not intentional!

[2] J. Kennedy, R. Eberhart. Particle Swarm Optimization. Proc. IEEE Int. Conf. on Neural Networks, 1995.

The Implementation (Vanilla)

Each particle is initialised at a position in the search space:

Each particle must move and hence has a velocity:
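The initialisation step can be sketched in Python as below. This is a minimal sketch under assumptions the slides do not state: positions are drawn uniformly within per-dimension bounds, and velocities uniformly within plus or minus the width of each dimension's range (zero initial velocity is another common choice). The function name `init_swarm` is hypothetical.

```python
import random


def init_swarm(n_particles, lower, upper, rng=None):
    """Hypothetical helper: random initial positions and velocities.

    Positions are uniform in [lower[d], upper[d]] for each dimension d.
    Velocities are uniform in [-(upper[d] - lower[d]), upper[d] - lower[d]]
    (an assumed convention, not taken from the slides).
    """
    if rng is None:
        rng = random.Random()
    dim = len(lower)
    spans = [upper[d] - lower[d] for d in range(dim)]
    positions = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
                 for _ in range(n_particles)]
    velocities = [[rng.uniform(-spans[d], spans[d]) for d in range(dim)]
                  for _ in range(n_particles)]
    return positions, velocities


# Example: 10 particles in the box [-5, 5] x [0, 1]
positions, velocities = init_swarm(10, [-5.0, 0.0], [5.0, 1.0], random.Random(42))
```

Passing a seeded `random.Random` makes runs reproducible, which is convenient when comparing variants on the benchmarks later in the deck.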

How are cognitive and social learning achieved?

COGNITIVE AND SOCIAL INFLUENCE

The velocity update is defined by:

The new particle position can then be calculated:
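The slide equations themselves are not reproduced in this text. For reference, a commonly cited form of the vanilla update from [2], without the inertia weight that later variants add, is given below. The notation is assumed: x_i and v_i are the position and velocity of particle i, p_i is its personal best position, g is the best position found in its neighbourhood, c_1 and c_2 are acceleration constants (both 2 in the original paper), r_1 and r_2 are vectors of uniform random numbers in [0, 1], and ⊗ denotes component-wise multiplication.

```latex
\begin{aligned}
\mathbf{v}_i &\leftarrow \mathbf{v}_i
  + \underbrace{c_1\,\mathbf{r}_1 \otimes (\mathbf{p}_i - \mathbf{x}_i)}_{\text{cognitive}}
  + \underbrace{c_2\,\mathbf{r}_2 \otimes (\mathbf{g} - \mathbf{x}_i)}_{\text{social}} \\
\mathbf{x}_i &\leftarrow \mathbf{x}_i + \mathbf{v}_i
\end{aligned}
```

The second term pulls the particle toward its own best experience and the third toward its neighbourhood's best, which is how cognitive and social learning enter the update.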

Cognitive Influence

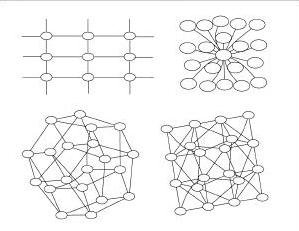

Swarm Influence

The particle will cycle unevenly about the point:

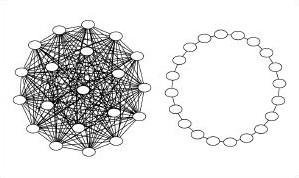
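Putting the pieces together, a minimal vanilla PSO loop might look like the sketch below. Assumptions beyond the slides: the "All" (global best) topology, asynchronous best updates, zero initial velocities, no position bounds after initialisation, and a per-dimension velocity clamp `vmax` (used in early PSO work to stop velocities from growing without limit). The names `vanilla_pso` and `sphere` are illustrative.

```python
import random


def sphere(x):
    """Test objective: sum of squares, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def vanilla_pso(f, lower, upper, n_particles=20, iters=200,
                c1=2.0, c2=2.0, vmax=None, seed=0):
    """Minimise f with vanilla PSO (no inertia weight) and a global-best topology.

    Returns (best_position, best_value, history), where history holds the
    best value after initialisation and after each iteration.
    """
    rng = random.Random(seed)
    dim = len(lower)
    if vmax is None:  # assumed default: clamp to the width of each range
        vmax = [upper[d] - lower[d] for d in range(dim)]
    xs = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
          for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]               # personal best positions
    pbest_f = [f(x) for x in xs]             # personal best values
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    history = [gbest_f]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (vs[i][d]
                     + c1 * r1 * (pbest[i][d] - xs[i][d])   # cognitive pull
                     + c2 * r2 * (gbest[d] - xs[i][d]))     # social pull
                vs[i][d] = max(-vmax[d], min(vmax[d], v))   # clamp to +/- vmax
                xs[i][d] += vs[i][d]
            fx = f(xs[i])
            if fx < pbest_f[i]:                  # improve personal best
                pbest[i], pbest_f[i] = xs[i][:], fx
                if fx < gbest_f:                 # improve global best immediately
                    gbest, gbest_f = xs[i][:], fx
        history.append(gbest_f)
    return gbest, gbest_f, history


best, best_f, history = vanilla_pso(sphere, [-5.0, -5.0], [5.0, 5.0])
```

The best value in `history` can only stay the same or improve. Without the clamp, the vanilla update lets velocities grow, which is one motivation for the constriction and fully informed variants that follow.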

SWARM NEIGHBOURHOOD: TOPOLOGY

- All

- Ring

- 2D Von Neumann

- 3D Von Neumann

- Star

- Pyramid

Many graph representations are possible!
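Neighbourhood topologies can be stored as adjacency lists. The sketch below builds three of them and picks the best informant for a particle. Assumptions: each particle is included in its own neighbourhood, the Von Neumann grid wraps around at the edges, and all function names are hypothetical.

```python
def all_topology(n):
    """'All' (global best): every particle is informed by every particle."""
    return [list(range(n)) for _ in range(n)]


def ring_topology(n, k=1):
    """Ring: each particle sees itself and k neighbours on either side."""
    return [sorted({(i + off) % n for off in range(-k, k + 1)}) for i in range(n)]


def von_neumann_2d(rows, cols):
    """2D Von Neumann on a wrap-around grid: self plus up, down, left, right."""
    neighbours = []
    for i in range(rows * cols):
        r, c = divmod(i, cols)
        cells = {(r, c), ((r - 1) % rows, c), ((r + 1) % rows, c),
                 (r, (c - 1) % cols), (r, (c + 1) % cols)}
        neighbours.append(sorted(rr * cols + cc for rr, cc in cells))
    return neighbours


def neighbourhood_best(i, neighbours, pbest_f):
    """Index of the neighbour of i with the lowest personal-best value."""
    return min(neighbours[i], key=lambda j: pbest_f[j])


print(ring_topology(5)[0])       # [0, 1, 4]
print(von_neumann_2d(3, 3)[0])   # [0, 1, 2, 3, 6]
```

Swapping the "All" lists for ring or Von Neumann lists changes only which personal bests each particle can see; the velocity update itself stays the same.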

Constriction PSO

Clerc [3] adapted the PSO algorithm to include a constriction coefficient. Convergence is guaranteed, but not necessarily to the global optimum.

Eberhart and Shi [4] later proposed a modified version with improved performance.

[3] M. Clerc. The Swarm and the Queen: Towards a Deterministic and Adaptive Particle Swarm Optimization. Proc. Congress on Evolutionary Computation, 1999.

[4] R. C. Eberhart, Y. Shi. Comparing Inertia Weights and Constriction Factors in Particle Swarm Optimization. Proc. Congress on Evolutionary Computation, 2000.

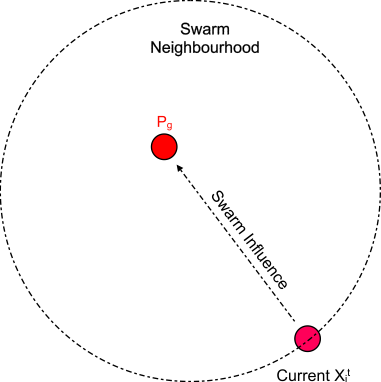
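Clerc's constriction coefficient is usually computed from φ = c_1 + c_2 (with φ > 4) as χ = 2 / |2 − φ − √(φ² − 4φ)|, and it multiplies the whole velocity update. The sketch below assumes the commonly used setting c_1 = c_2 = 2.05 (φ = 4.1); the function names are hypothetical.

```python
import math
import random


def constriction_coefficient(phi):
    """Clerc's constriction coefficient chi, valid for phi = c1 + c2 > 4."""
    if phi <= 4.0:
        raise ValueError("phi = c1 + c2 must be greater than 4")
    return 2.0 / abs(2.0 - phi - math.sqrt(phi * phi - 4.0 * phi))


def cpso_velocity(x, v, p, g, c1=2.05, c2=2.05, rng=None):
    """Constricted velocity update for one particle.

    x: position, v: velocity, p: personal best, g: neighbourhood best.
    """
    if rng is None:
        rng = random.Random()
    chi = constriction_coefficient(c1 + c2)
    return [chi * (vd
                   + c1 * rng.random() * (pd - xd)    # cognitive pull
                   + c2 * rng.random() * (gd - xd))   # social pull
            for xd, vd, pd, gd in zip(x, v, p, g)]


print(round(constriction_coefficient(4.1), 4))  # 0.7298
```

Because χ < 1 scales every update, the velocity is damped at each step, which is the mechanism behind the convergence property described above.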

Fully informed PSO

In standard PSO, a particle searches through its neighbours to identify the one with the best result, which biases the search in a promising direction.

But perhaps the chosen neighbour is not in fact in a better region, and is simply heading toward a local optimum?

Important information may be neglected through overemphasis on a single best neighbour.

Mendes et al. [5] proposed a PSO variant that captures the weighted average influence of all particles in the neighbourhood. Poli et al. [6] present the following simplified FIPSO update equation:

[5] R. Mendes, J. Kennedy, J. Neves. The Fully Informed Particle Swarm: Simpler, Maybe Better. IEEE Transactions on Evolutionary Computation, 2004.

[6] R. Poli, J. Kennedy, T. Blackwell. Particle Swarm Optimization: An Overview. Swarm Intelligence, 2007.

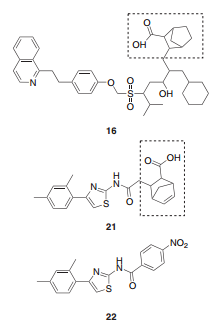
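A commonly quoted form of the simplified FIPSO update is v_i ← χ(v_i + (1/K_i) Σ_n U(0, φ) ⊗ (p_n − x_i)), where K_i is the number of neighbours, p_n are their personal bests and U(0, φ) is a vector of uniform random numbers in [0, φ]; the position update is unchanged. The sketch below illustrates that rule under the assumed defaults χ = 0.7298 and φ = 4.1; the function name is hypothetical.

```python
import random


def fipso_velocity(x, v, neighbour_bests, chi=0.7298, phi=4.1, rng=None):
    """Simplified fully informed velocity update for one particle.

    Each neighbour's personal best pulls the particle with its own
    U(0, phi) weight per dimension; the pulls are averaged over the K
    neighbours, and the result is scaled by the constriction factor chi.
    """
    if rng is None:
        rng = random.Random()
    k = len(neighbour_bests)
    new_v = []
    for d, (xd, vd) in enumerate(zip(x, v)):
        pull = sum(rng.uniform(0.0, phi) * (p[d] - xd) for p in neighbour_bests)
        new_v.append(chi * (vd + pull / k))
    return new_v


# Example: a particle at rest at the origin, informed by three neighbours
v_next = fipso_velocity([0.0, 0.0], [0.0, 0.0],
                        [[1.0, 1.0], [2.0, 0.5], [0.5, 2.0]], rng=random.Random(7))
```

Unlike the single-best update, no one neighbour dominates: every informant contributes, which addresses the overemphasis problem raised above.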

Benchmark functions

De Jong's Standard Sphere Function

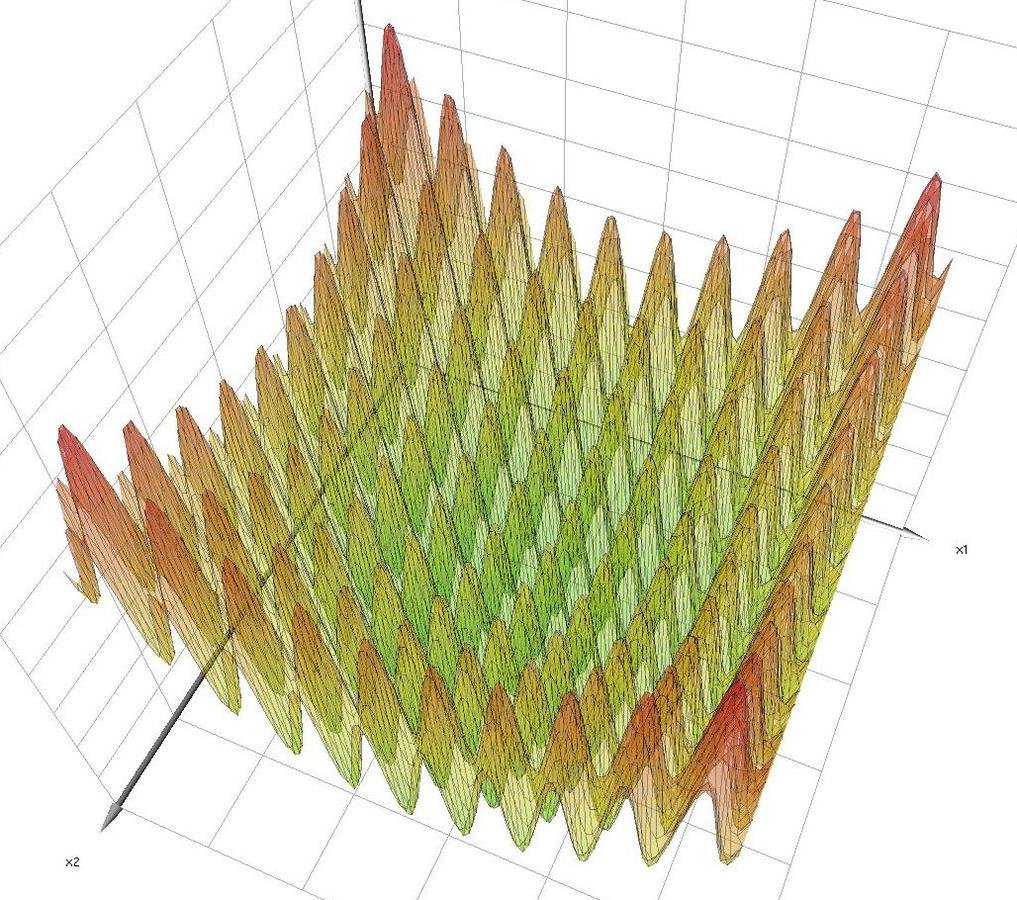

Eggcrate Function

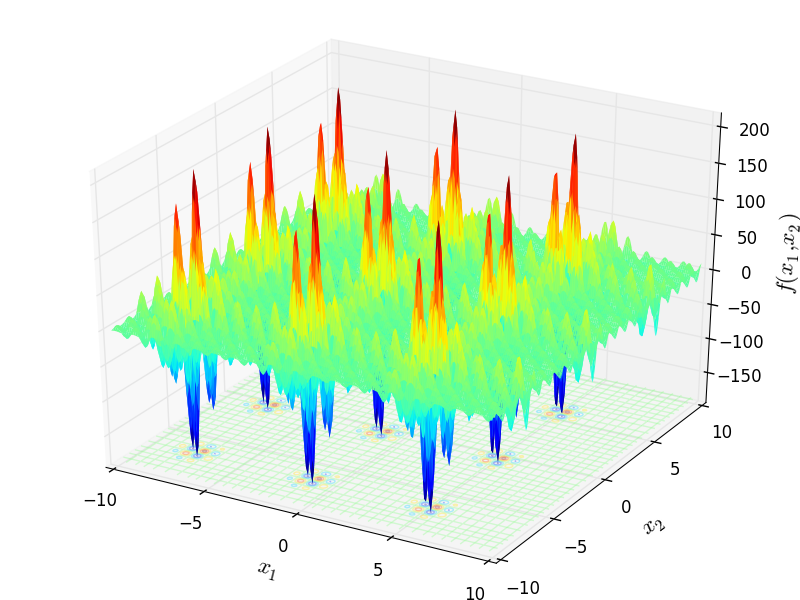
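For experimenting with the optimisers above, the first two benchmarks can be written as follows. The sphere function is f(x) = Σ x_i². The egg crate form used here, f(x, y) = x² + y² + 25(sin²x + sin²y), is assumed to match the slide (other scalings appear in the literature). Both have a global minimum of 0 at the origin.

```python
import math


def sphere(x):
    """De Jong's sphere: f(x) = sum of x_i^2, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def eggcrate(x, y):
    """Egg crate: x^2 + y^2 + 25(sin^2 x + sin^2 y), minimum 0 at (0, 0)."""
    return x * x + y * y + 25.0 * (math.sin(x) ** 2 + math.sin(y) ** 2)
```

The sphere is unimodal and serves as a sanity check; the sine terms turn the egg crate into a grid of local minima around the same global minimum.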

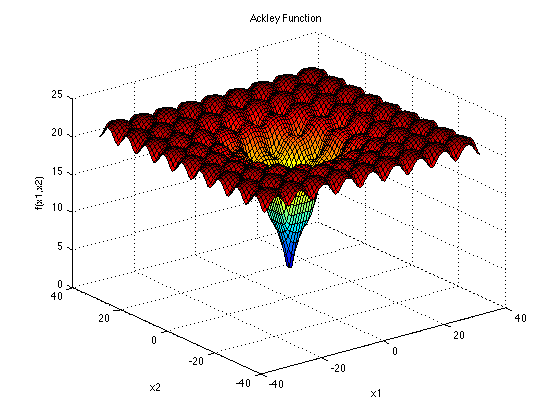

Ackley's Function
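Ackley's function in its common form (a = 20, b = 0.2, c = 2π, assumed here) is f(x) = −20 exp(−0.2 √((1/d) Σ x_i²)) − exp((1/d) Σ cos(2π x_i)) + 20 + e, with a global minimum of 0 at the origin surrounded by many shallow local minima.

```python
import math


def ackley(x, a=20.0, b=0.2, c=2.0 * math.pi):
    """Ackley's function; global minimum 0 at the origin."""
    d = len(x)
    mean_sq = sum(xi * xi for xi in x) / d
    mean_cos = sum(math.cos(c * xi) for xi in x) / d
    return -a * math.exp(-b * math.sqrt(mean_sq)) - math.exp(mean_cos) + a + math.e
```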

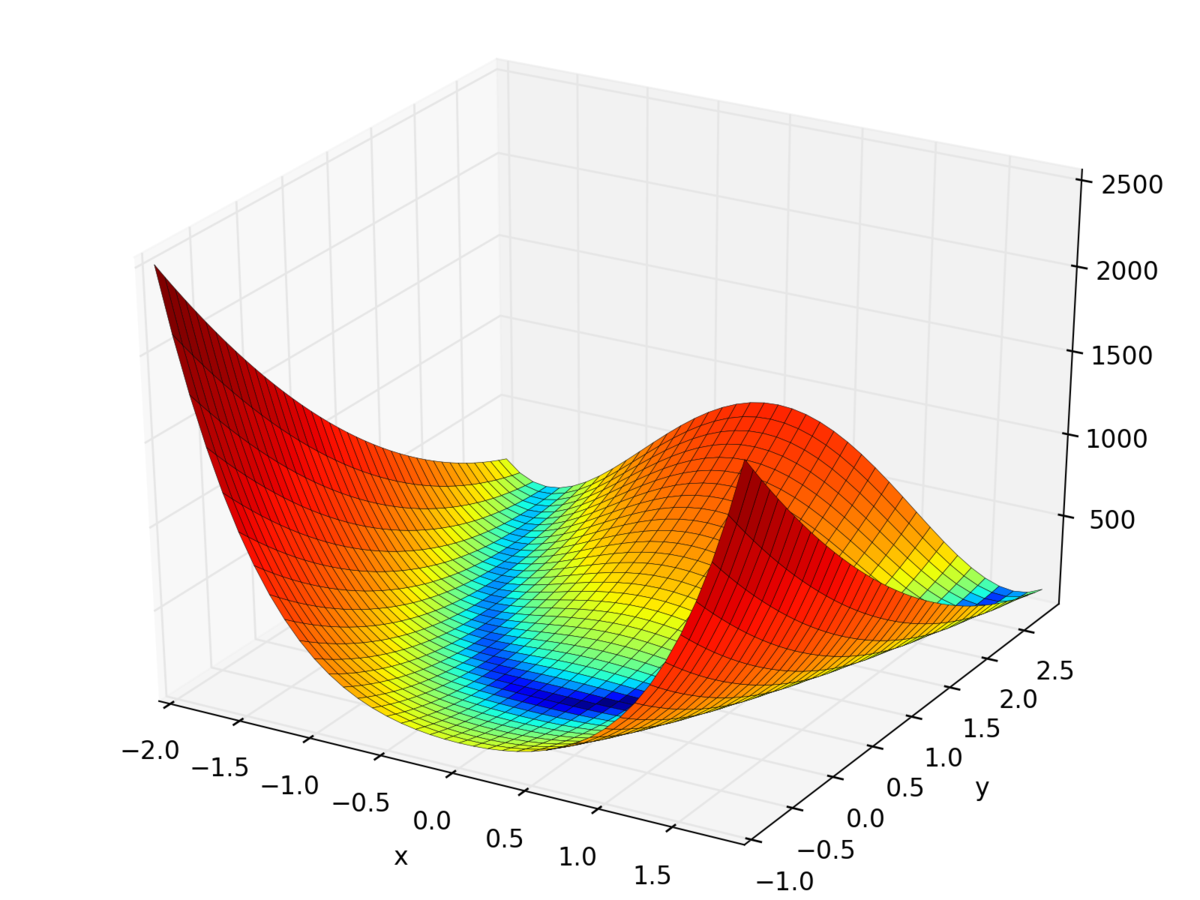

Rosenbrock's Function
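Rosenbrock's function, f(x) = Σ_{i=1}^{d−1} [100(x_{i+1} − x_i²)² + (1 − x_i)²], has its global minimum of 0 at (1, ..., 1). The minimum lies inside a long, narrow, curved valley that is easy to find but along which progress toward the minimum is slow.

```python
def rosenbrock(x):
    """Rosenbrock's valley; global minimum 0 at (1, ..., 1)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))
```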

Michalewicz's Function

d! local minima in the domain, where d is the number of dimensions and i = 1, 2, ..., d indexes the coordinates x_i
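A common form of Michalewicz's function, assumed here with steepness m = 10 on [0, π]^d, is f(x) = −Σ_{i=1}^{d} sin(x_i) sin^{2m}(i x_i² / π). For d = 2 the global minimum is approximately −1.8013, near (2.20, 1.57).

```python
import math


def michalewicz(x, m=10):
    """Michalewicz's function; larger m gives steeper, narrower valleys."""
    return -sum(math.sin(xi) * math.sin((i * xi * xi) / math.pi) ** (2 * m)
                for i, xi in enumerate(x, start=1))
```

The large exponent 2m makes the function almost flat except in narrow valleys, which is what makes it hard for swarm methods to locate the minimum.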

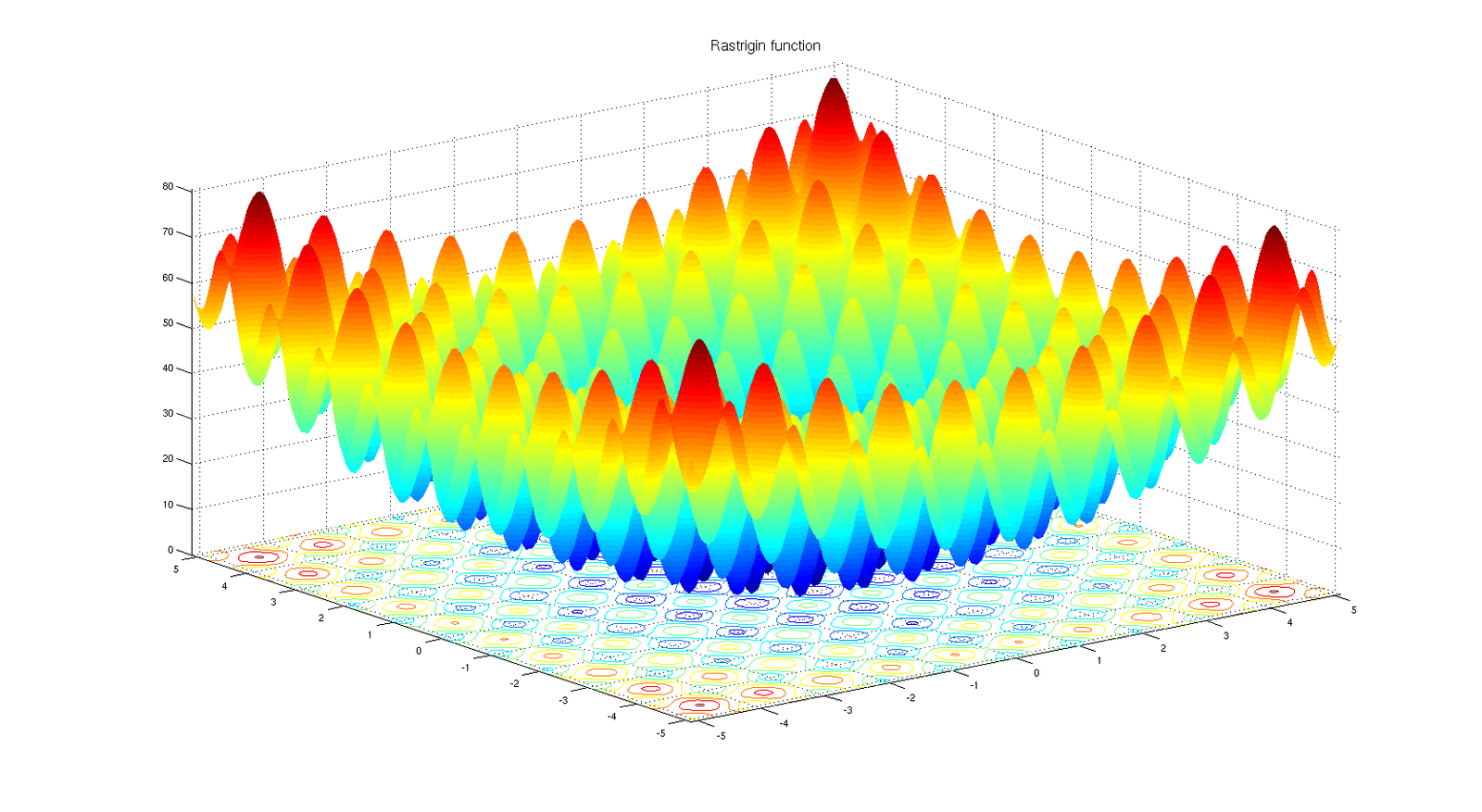

Rastrigin's Function

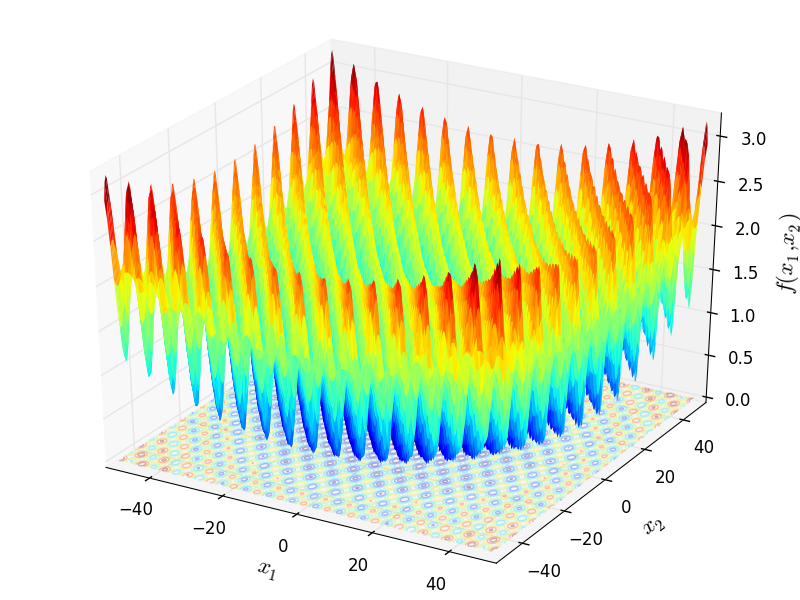

Griewank's Function
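Both functions below have a global minimum of 0 at the origin and many regularly spaced local minima. The forms assumed here are Rastrigin f(x) = 10d + Σ [x_i² − 10 cos(2π x_i)] and Griewank f(x) = 1 + (1/4000) Σ x_i² − Π cos(x_i / √i).

```python
import math


def rastrigin(x):
    """Rastrigin: 10d + sum(x_i^2 - 10 cos(2 pi x_i)); minimum 0 at the origin."""
    return 10.0 * len(x) + sum(xi * xi - 10.0 * math.cos(2.0 * math.pi * xi)
                               for xi in x)


def griewank(x):
    """Griewank: 1 + sum(x_i^2)/4000 - prod(cos(x_i / sqrt(i))); minimum 0 at the origin."""
    total = sum(xi * xi for xi in x) / 4000.0
    prod = 1.0
    for i, xi in enumerate(x, start=1):
        prod *= math.cos(xi / math.sqrt(i))
    return 1.0 + total - prod
```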

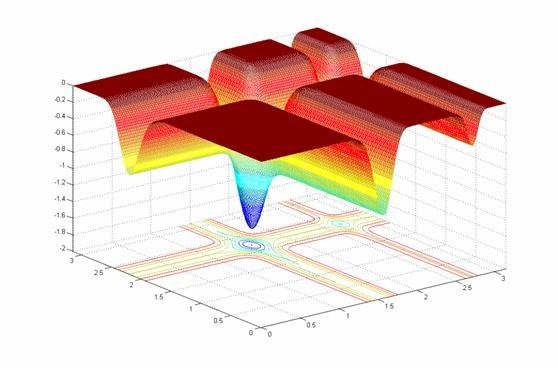

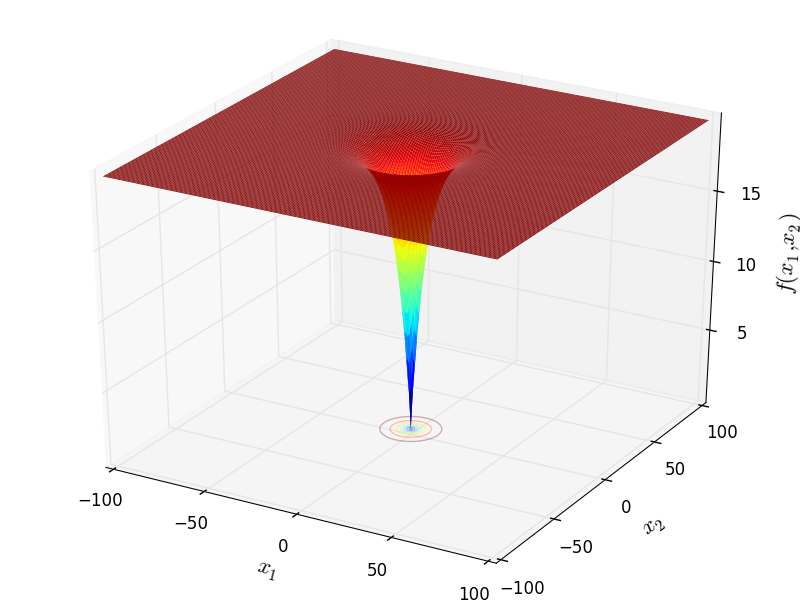

Easom's Function

Shubert's Function

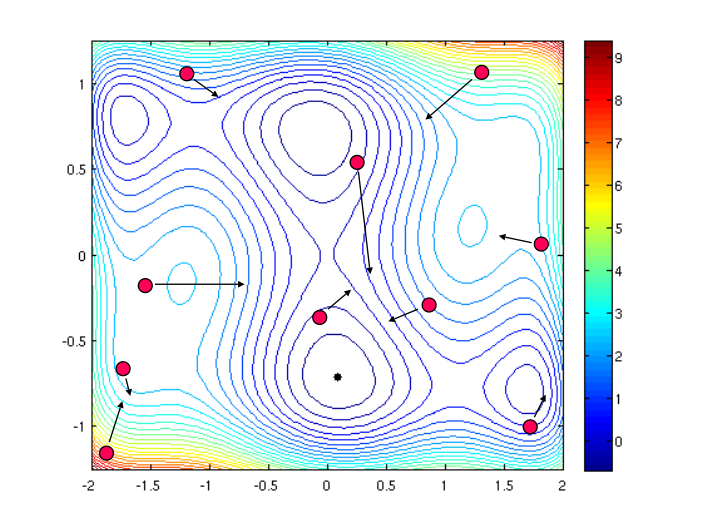

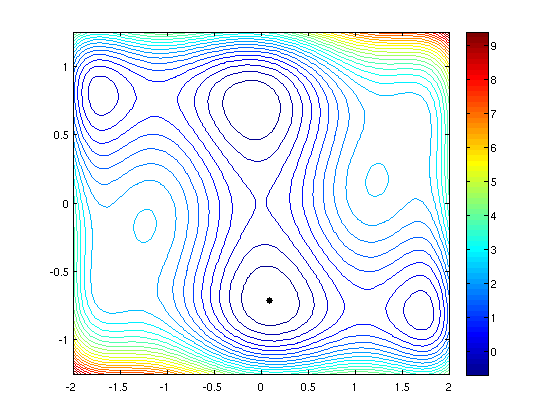
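The two-dimensional forms assumed here are Easom f(x, y) = −cos x cos y exp(−(x − π)² − (y − π)²), which is almost flat except for a single narrow well reaching −1 at (π, π), and Shubert f(x, y) = [Σ_{i=1}^{5} i cos((i + 1)x + i)] · [Σ_{i=1}^{5} i cos((i + 1)y + i)], which has many local minima and several global minima of about −186.73.

```python
import math


def easom(x, y):
    """Easom: -cos x cos y exp(-(x - pi)^2 - (y - pi)^2); minimum -1 at (pi, pi)."""
    return -math.cos(x) * math.cos(y) * math.exp(-(x - math.pi) ** 2 - (y - math.pi) ** 2)


def shubert(x, y):
    """Shubert (2D): product of two sums of i cos((i + 1) t + i) for i = 1..5."""
    sx = sum(i * math.cos((i + 1) * x + i) for i in range(1, 6))
    sy = sum(i * math.cos((i + 1) * y + i) for i in range(1, 6))
    return sx * sy
```

Easom punishes swarms that spread out too thinly, while Shubert rewards swarms that can hold several promising regions at once.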

Dynamics Visualisation

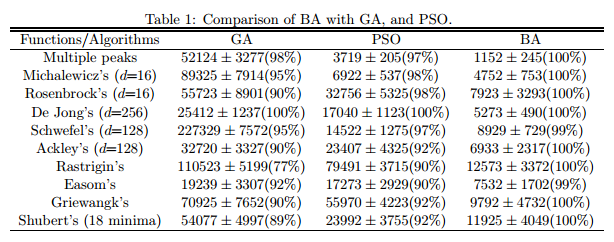

Benchmark Analysis

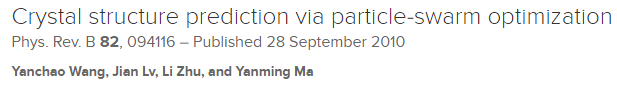

Comparison performed by Yang [7], in a study that introduces a new PSO variant: the Bat Algorithm.

[7] X. S. Yang. A New Metaheuristic Bat-Inspired Algorithm. Nature Inspired Cooperative Strategies for Optimization (NICSO 2010), 2010.

Dynamic Optima

PSO in Theatre

Glowworm Swarm Optimisation

Bat swarm algorithm

Special variant of PSO, based on the echolocation behaviour of microbats with varying pulse emission rates and loudness.

Other similar ideas exist, e.g. the Glowworm algorithm.
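The core moves of the bat algorithm, as commonly described following Yang [7], can be sketched as below: each bat draws a random frequency in [f_min, f_max] that scales its velocity change relative to the current best solution, and over time its loudness decays while its pulse emission rate rises toward an initial value r0. This is a partial sketch (the local random walk and acceptance test are omitted). The defaults f_min = 0, f_max = 2 and α = γ = 0.9 are assumptions, sign conventions for the velocity term differ between published descriptions (here bats are assumed to be pulled toward the best), and the function names are hypothetical.

```python
import math
import random


def bat_move(x, v, best, f_min=0.0, f_max=2.0, rng=None):
    """Frequency-tuned move for one bat (assumed to be pulled toward best)."""
    if rng is None:
        rng = random.Random()
    freq = f_min + (f_max - f_min) * rng.random()   # random pulse frequency
    new_v = [vd + (bd - xd) * freq for xd, vd, bd in zip(x, v, best)]
    new_x = [xd + vd for xd, vd in zip(x, new_v)]
    return new_x, new_v


def update_loudness_and_rate(loudness, r0, t, alpha=0.9, gamma=0.9):
    """Loudness decays geometrically; pulse rate rises toward r0 as t grows."""
    return alpha * loudness, r0 * (1.0 - math.exp(-gamma * t))
```

The random frequency plays a role similar to the random weights r_1 and r_2 in PSO, which is why the bat algorithm is often grouped with PSO variants.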
