The limits of shallow approaches on MCTest

Previous work on Question Answering

- SAT-style, Zweig and Burges 2012

- DeepRead, Hirschman et al. 1999

- DeepSelection, Yu et al. 2014

- QANTA, Iyyer et al. 2014

MCTest

- Multiple choice

- Two question types

- Open-domain

- Common sense

- Children's stories

- Fictional settings

Question types: Single (answerable from one sentence) vs. Multiple (requires several sentences)

Inviting Giraffes to parties (160test Q33)

The blue ball said hello (160dev Q7)

Owls having socks (160dev Q10)

MC160: TRAIN / DEV / TEST splits, quality checked by hand

MC500: TRAIN / DEV / TEST splits, quality checked by algorithm

Project Goal

- Find the limit of shallow approaches

- Explore a rule-based system

- Improve upon the original baseline

Results

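| | MC160 | MC500 |
|---|---|---|
| Baseline (SW+D +RTE) | 69.3% | 63.3% |
| Ours (RBS +RTE) | 73.5% | 64.2% |
| Gain | 4% | 1% |

First result above 70% on MC160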

SHALLOW METHODS

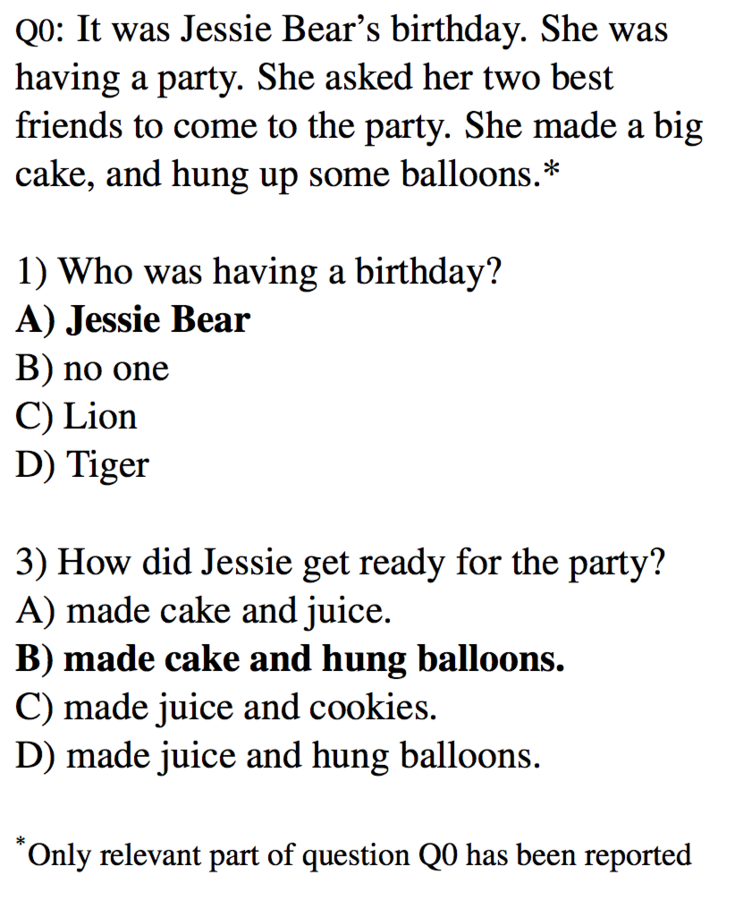

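It was Jessie Bear's birthday. She was having a party. She asked her two best friends to come to the party. She made a big cake, and hung up some balloons.

1) Who was having a birthday?

- A) Jessie Bear

- B) no one

- C) Lion

- D) Tiger

Preprocessing of the story:

- LEMMATISATION: was -> be, having -> have, asked -> ask, friends -> friend, made -> make, hung -> hang, balloons -> balloon

- STOPWORDS: removed (e.g. it, a, the, to)

- COREFERENCE: She, her -> Jessie

Processed story: Jessie birthday. Jessie party. Jessie ask Jessie two friend come party. Jessie make big cake hang balloon

QA combining: the question's remaining content word, birthday, is added to each answer (Jessie birthday, no one birthday, Lion birthday, Tiger birthday).

Matching and scoring: each combined answer scores one point per word found in the processed story: A) 2, B) 1, C) 1, D) 1, so A) Jessie is chosen.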
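A minimal Python sketch of the Word Matching pipeline above, assuming tiny hand-written tables (`LEMMAS`, `STOPWORDS`, `COREF`, all hypothetical) in place of a real lemmatiser, stopword list and coreference resolver. `wm_score` combines the question with each answer and counts the distinct resulting words that also appear in the processed story; it illustrates the idea and is not the original system.

```python
# Toy Word Matching (WM): normalise story, question and answer, then count overlaps.
# Hypothetical resources standing in for real NLP tools:
LEMMAS = {"was": "be", "having": "have", "asked": "ask", "friends": "friend",
          "made": "make", "hung": "hang", "balloons": "balloon"}
STOPWORDS = {"it", "be", "have", "a", "the", "to", "and", "up", "some", "who", "best"}
COREF = {"she": "jessie", "her": "jessie"}  # pretend output of a coreference resolver


def normalise(text):
    """Lower-case, resolve coreference, lemmatise, and drop stopwords."""
    for mark in ",.?":
        text = text.replace(mark, " ")
    tokens = []
    for raw in text.lower().split():
        word = COREF.get(raw, LEMMAS.get(raw, raw))
        if word not in STOPWORDS:
            tokens.append(word)
    return tokens


def wm_score(story, question, answer):
    """QA combining + matching: distinct question/answer tokens found in the story."""
    hypothesis = set(normalise(question)) | set(normalise(answer))
    return len(hypothesis & set(normalise(story)))


STORY = ("It was Jessie Bear's birthday. She was having a party. She asked her two "
         "best friends to come to the party. She made a big cake, and hung up some balloons.")
QUESTION = "Who was having a birthday?"
ANSWERS = ["Jessie Bear", "no one", "Lion", "Tiger"]

scores = [wm_score(STORY, QUESTION, a) for a in ANSWERS]
best = ANSWERS[scores.index(max(scores))]
```

On the Jessie Bear story, `scores` comes out as [2, 1, 1, 1] and `best` is "Jessie Bear", mirroring the scores on the slide.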

Word Matching (WM)

Accuracy on train+dev sets:

| Question type | MC160 | MC500 |
|---|---|---|
| Single | 67.88% | 62.76% |
| Multi | 50.31% | 46.72% |
| All | 58.43% | 53.97% |

+ co-reference (All): +3.26% on MC160, +1.49% on MC500

Example: What did John do at the beach?

John was at the beach. It was a very warm day. He decided to go for a swim.

- went for a swim

Sentence selection: window of up to 3 sentences

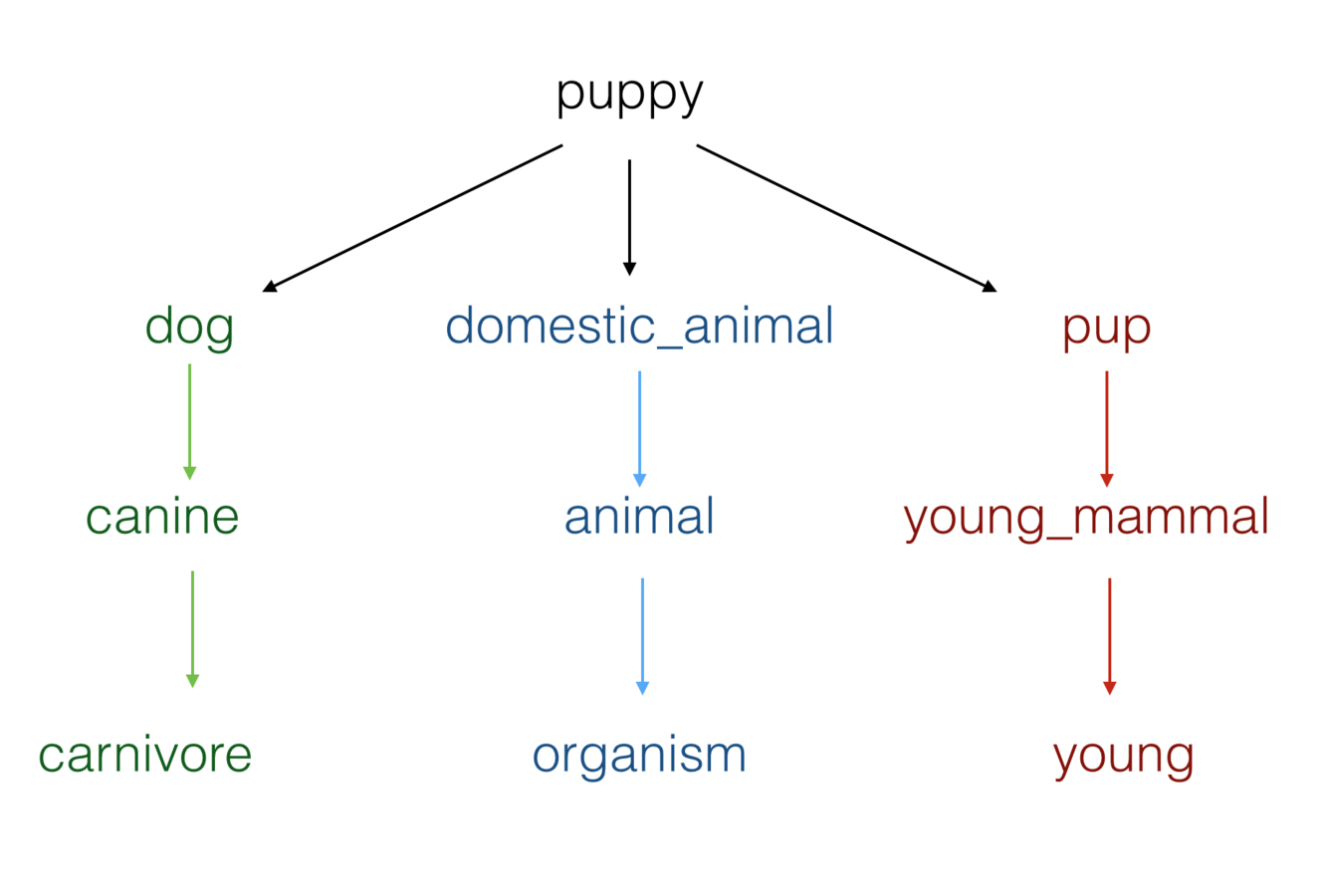
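The sketch below illustrates sentence selection with a window of up to 3 sentences, assuming plain word-overlap scoring and a hand-picked stopword list (both assumptions, not the original implementation). A window lets the sentence beginning "He decided..." be scored together with the sentence that names John, even without a coreference step.

```python
import re

STOPWORDS = {"what", "did", "do", "at", "the", "was", "it", "a", "very", "he", "to", "for"}


def tokens(text):
    """Lower-cased content words (no lemmatisation, to keep the sketch short)."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS}


def best_window_score(story, question, answer, max_window=3):
    """Best word overlap between question+answer and any window of 1..max_window sentences."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", story.strip()) if s]
    hypothesis = tokens(question) | tokens(answer)
    best = 0
    for size in range(1, max_window + 1):
        for start in range(len(sentences) - size + 1):
            window = set().union(*(tokens(s) for s in sentences[start:start + size]))
            best = max(best, len(hypothesis & window))
    return best


STORY = "John was at the beach. It was a very warm day. He decided to go for a swim."
QUESTION = "What did John do at the beach?"
```

Here `best_window_score(STORY, QUESTION, "went for a swim")` returns 3 (john, beach, swim), while a hypothetical distractor "built a sandcastle" scores 2; with `max_window=1` the two answers would tie at 2.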

Hypernymy

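Story: Peter the puppy.

Question: Who is the animal?

Hypernym chain: puppy (1.0) -> dog (0.5) -> animal (0.3)

Matching "animal": Word Matching would score 0; Hypernym would score 0.3.

Word Matching baseline (All): 58.43% on MC160, 53.97% on MC500

+ hypernym (All): +1.55% on MC160, +1.48% on MC500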
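A sketch of hypernym-weighted matching, assuming a hypothetical hand-written `HYPERNYM` table (a real system might query WordNet) and the per-level weights 1.0, 0.5 and 0.3 from the slide. A story word matches a target word at the weight of the level where the target appears in its hypernym chain.

```python
# Hypothetical hypernym table; a real system might look these up in WordNet.
HYPERNYM = {"puppy": "dog", "dog": "animal"}
LEVEL_WEIGHTS = [1.0, 0.5, 0.3]  # the word itself, its hypernym, the hypernym's hypernym


def hypernym_weight(story_word, target):
    """Weight at which story_word matches target by walking up its hypernym chain."""
    word = story_word
    for weight in LEVEL_WEIGHTS:
        if word == target:
            return weight
        word = HYPERNYM.get(word)
        if word is None:
            break
    return 0.0


def hypernym_score(story_words, hypothesis_words):
    """Sum over hypothesis words of the best weighted match anywhere in the story."""
    return sum(max((hypernym_weight(s, h) for s in story_words), default=0.0)
               for h in hypothesis_words)
```

`hypernym_score(["peter", "puppy"], ["animal"])` gives 0.3, where exact word matching alone would give 0.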

Word Matching

Rule-based systems

Implementation

def applyTransformations(story, question):
    # Each rule tests the question; when it matches, its transformation rewrites the story
    if matchesRuleA(question):
        story = applyTransformationA(story)
    if matchesRuleB(question):
        story = applyTransformationB(story)
    if matchesRuleC(question):
        story = applyTransformationC(story)
    # ... further rules
    return story

Applying a series of transformations to the story when a question matches patterns.
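The pseudocode above can be turned into a runnable sketch by passing the rules in as (pattern, transformation) pairs, so rules are applied in order and each sees the previous rule's output. The "why" rule here (`is_why_question`, `keep_explanations`, and its cue words) is hypothetical and only shows the shape of a rule.

```python
from typing import Callable, List, Tuple

Story = List[str]  # a story represented as a list of sentences
Rule = Tuple[Callable[[str], bool], Callable[[Story], Story]]


def apply_transformations(story: Story, question: str, rules: List[Rule]) -> Story:
    """Apply, in order, every transformation whose pattern matches the question."""
    for matches, transform in rules:
        if matches(question):
            story = transform(story)
    return story


# Hypothetical "why" rule: keep only sentences containing an explanation cue.
def is_why_question(question: str) -> bool:
    return question.lower().startswith("why")


def keep_explanations(story: Story) -> Story:
    cues = ("because", "so that", "wanted")
    kept = [s for s in story if any(cue in s.lower() for cue in cues)]
    return kept or story  # fall back to the whole story if no cue is found


RULES: List[Rule] = [(is_why_question, keep_explanations)]
```

For the story ["Jon went to the park.", "He went because he wanted to play.", "It was sunny."], the question "Why did Jon go to the park?" keeps only the middle sentence, while a non-why question leaves the story unchanged.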

Rules we explored

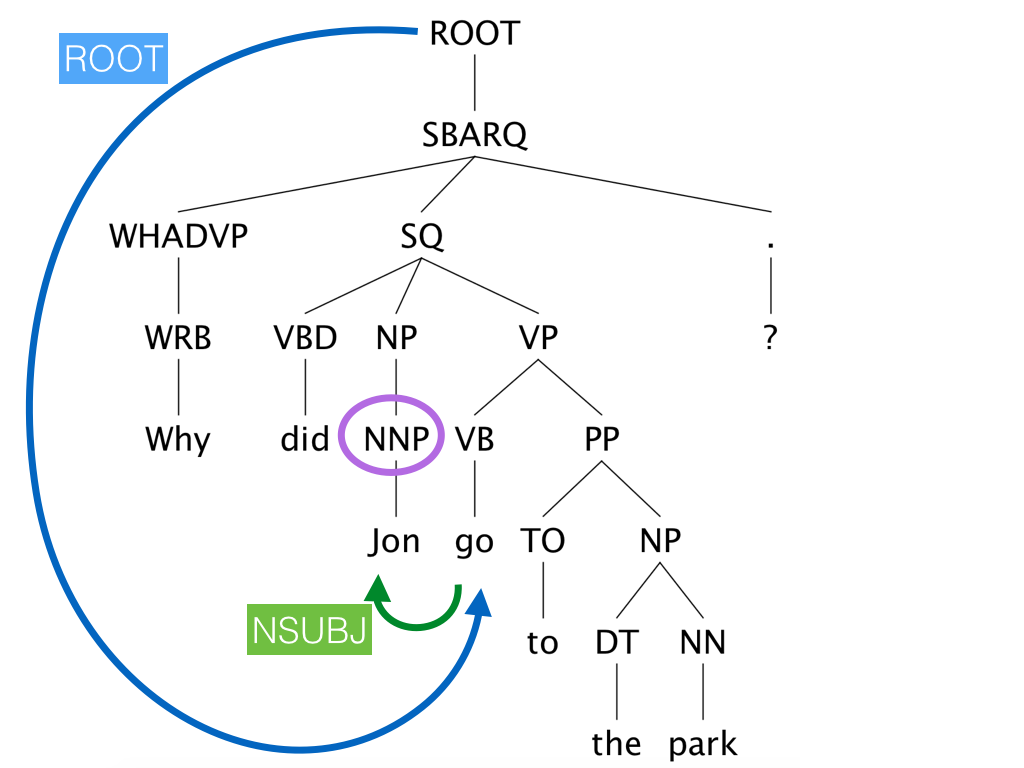

Syntactic pattern matching

- Negation

- Why questions

- Character-subject

- Narrative

- Temporal

- Implicative

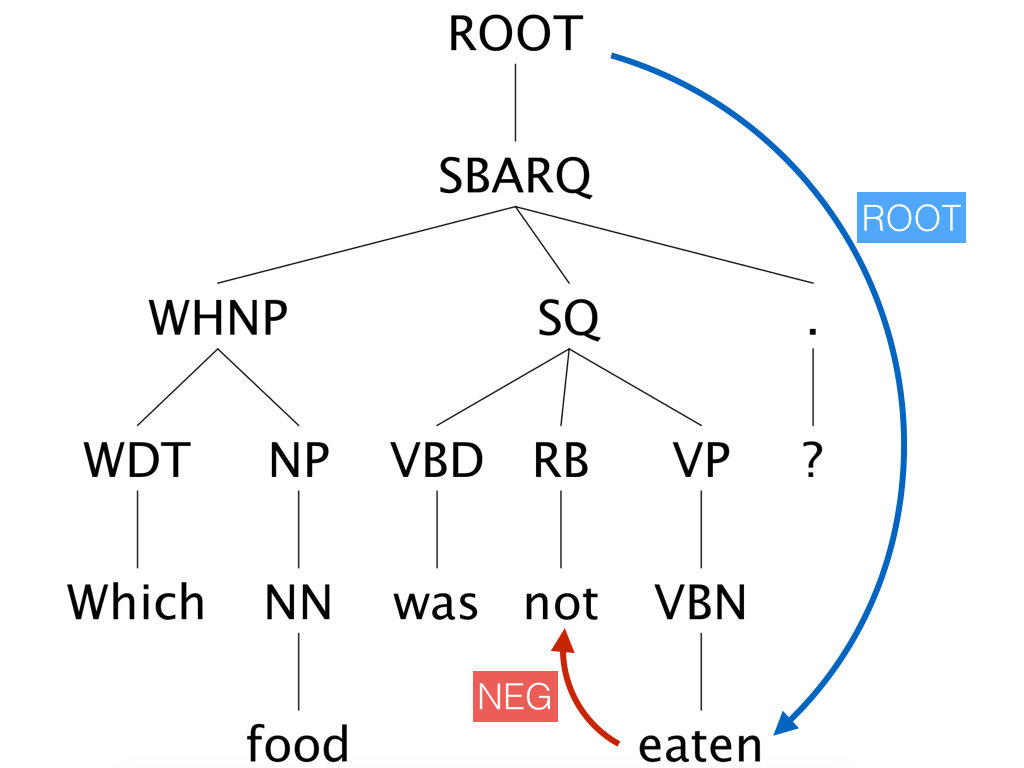

Negation rule

Example: Which food was not eaten?

Solution: negate the weights of word tokens (100% accurate)
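A minimal sketch of the negation rule, assuming scoring by best single-sentence word overlap and a tiny hypothetical lemma table, stopword list and negator list. When the question contains a negator, every answer's score is negated, so the answer with the least support in the story wins. The example story and answers are made up, and the 100% figure above refers to the original rule, not to this sketch.

```python
import re

LEMMAS = {"ate": "eat", "eaten": "eat"}  # hypothetical lemma table
STOPWORDS = {"which", "was", "the", "on"}
NEGATORS = {"not", "never", "no"}  # separate-word negators only ("wasn't" is not handled)


def content_words(text):
    """Lemmatised content words of a text."""
    words = re.findall(r"[a-z']+", text.lower())
    return {LEMMAS.get(w, w) for w in words if w not in STOPWORDS}


def sentence_score(story, question, answer):
    """Best single-sentence overlap between question+answer words (negators removed) and the story."""
    hypothesis = (content_words(question) | content_words(answer)) - NEGATORS
    sentences = re.split(r"(?<=[.!?])\s+", story.strip())
    return max(len(hypothesis & content_words(s)) for s in sentences)


def answer_with_negation_rule(story, question, answers):
    """Pick the best answer; if the question is negated, flip the sign of every score."""
    sign = -1 if content_words(question) & NEGATORS else 1
    scores = [sign * sentence_score(story, question, a) for a in answers]
    return answers[scores.index(max(scores))]


STORY = "Tom ate the apple. Tom ate the banana. The cake stayed on the table."
ANSWERS = ["apple", "banana", "cake"]
```

`answer_with_negation_rule(STORY, "Which food was not eaten?", ANSWERS)` returns "cake"; without "not", the same scorer returns "apple".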

Character-subject rule

Example: Why did Jon go to the park?

Solution: introduce coreference to accurately locate the character

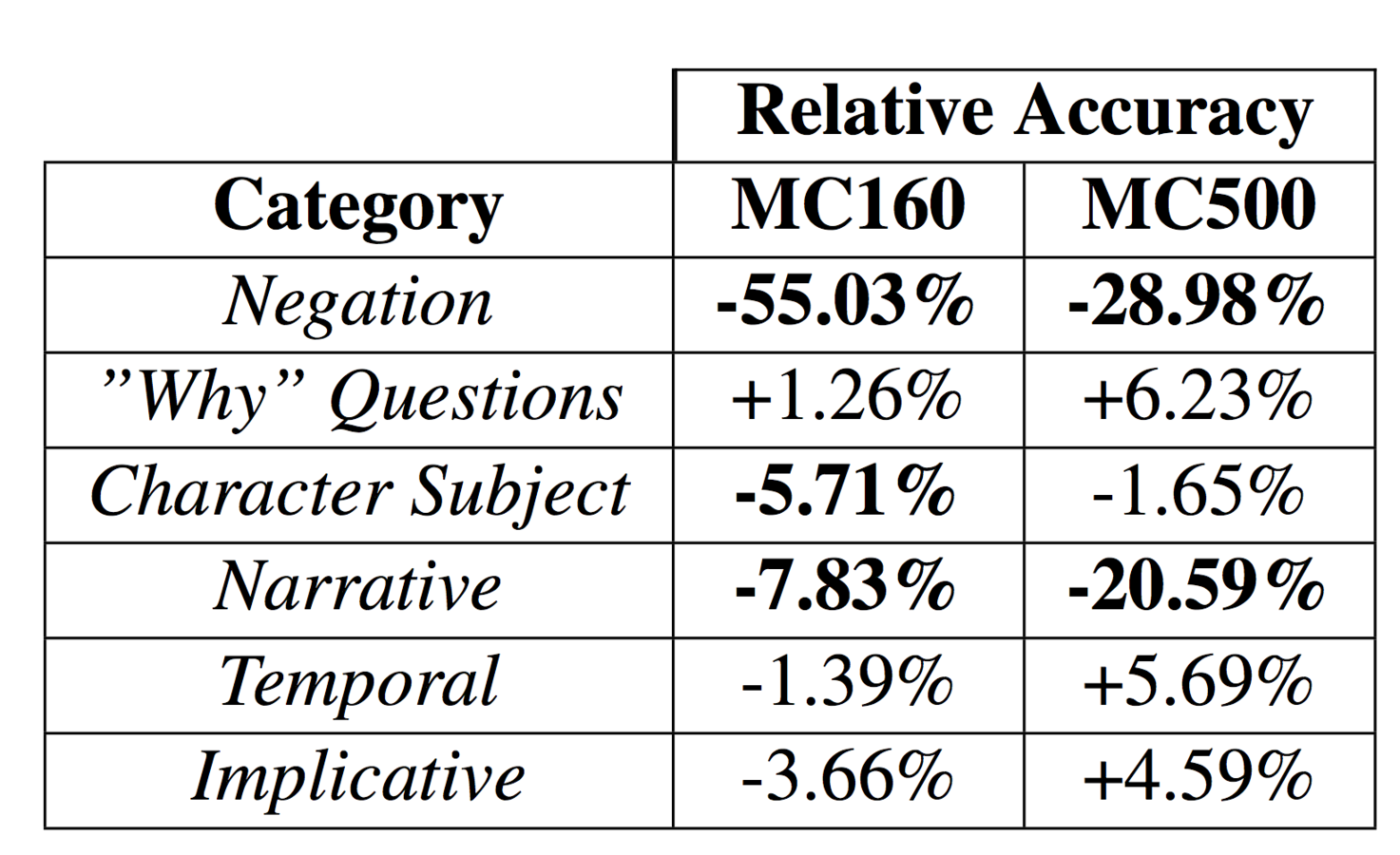
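A sketch of the character-subject idea, with a deliberately naive pronoun resolver standing in for real coreference: a sentence-initial pronoun is replaced by the most recently named character (gender is ignored), and a sentence counts as being about a character when its first word is that character's name. Both heuristics and the example sentences are assumptions for illustration.

```python
PRONOUNS = {"he", "she", "they"}


def resolve_pronouns(sentences, characters):
    """Naive coreference: a sentence-initial pronoun becomes the most recently named character."""
    resolved, last = [], None
    for sentence in sentences:
        words = sentence.split()
        if words and words[0].lower() in PRONOUNS and last is not None:
            words[0] = last
        for word in words:
            name = word.strip(".,!?")
            if name in characters:
                last = name
        resolved.append(" ".join(words))
    return resolved


def sentences_about(sentences, character):
    """Sentences whose subject, approximated by the first word, is the character."""
    return [s for s in sentences if s.split() and s.split()[0].strip(".,!?") == character]


SENTENCES = ["Jon woke up early.", "Mary called him.", "She wanted to play.",
             "Jon went to the park.", "He wanted to see the ducks."]
resolved = resolve_pronouns(SENTENCES, {"Jon", "Mary"})
jon_sentences = sentences_about(resolved, "Jon")
```

After resolution, `jon_sentences` contains the three sentences whose subject is Jon, including "Jon wanted to see the ducks.", which answers "Why did Jon go to the park?".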

Result (on training set)

- MC160: 70.3%

- MC500: 59.6%

Analysis

Using this system we can

- analyze the performance of a lexical system

- understand the limitations of a lexical system

Limitations

What two characters are in this book?

This is a story of a girl and what kind of animal?

What is the name of the boy in the story?

A lexical system has no understanding of narrative or characters.

Learning a scoring function

SVM over shallow features:

- WM+Coref

- WM+Hypernym

- WM+Coref, sentence selection on Q

- WM+Coref, sentence selection on QA

- WM+Hypernym, sentence selection on QA

SVM outputs calibrated with Platt Scaling
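Platt scaling maps a raw SVM decision value s to a probability P(y=1 | s) = 1 / (1 + exp(A*s + B)), with A and B fitted on held-out scores. The sketch below fits A and B by plain batch gradient descent on the log-loss, assuming the SVM decision values are already available; it omits the target smoothing of Platt's original procedure, and the scores and labels are made up.

```python
import math


def _sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def platt_prob(a, b, score):
    """Platt's calibrated probability P(y=1 | score) = 1 / (1 + exp(a*score + b))."""
    return _sigmoid(-(a * score + b))


def platt_fit(scores, labels, lr=0.1, epochs=2000):
    """Fit a, b by batch gradient descent on the log-loss (no target smoothing)."""
    a = b = 0.0
    n = len(scores)
    for _ in range(epochs):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            p = platt_prob(a, b, s)
            # derivative of the log-loss w.r.t. z = a*s + b is (y - p)
            grad_a += (y - p) * s
            grad_b += (y - p)
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


# Hypothetical SVM decision values for candidate answers (1 = correct answer)
SCORES = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
LABELS = [0, 0, 0, 1, 1, 1]
a, b = platt_fit(SCORES, LABELS)
```

On this toy data the fitted `a` is negative, so higher SVM scores map to higher probabilities, and a score of 0 maps to about 0.5.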

Shallow methods

| | MC160 | MC500 |
|---|---|---|
| SW+D | 68.0% | 59.9% |
| SVM (combined) | 71.4% | 60.2% |
| Gain | +3.4% | +0.3% |

Textual Entailment

Augmented our rule-based system with the BIUTEE RTE (Recognizing Textual Entailment) system

RTE Result

| | MC160 | MC500 |
|---|---|---|
| SW+D +RTE | 69.3% | 63.3% |
| RBS (rule-based system) +RTE | 73.5% | 64.2% |
| Gain | +4.2% | +0.9% |

Conclusions

- MC160 can be beaten by shallow methods

- MC500 requires deeper understanding of natural language

- Shallow methods have a limit (74%)

Future

- More sophisticated rule-based system

- Natural Logic (Angeli and Manning 2014)

- Deep Sentence Selection (Yu et al. 2014)

Questions?

:)