Recording audio in the browser

Ольга Маланова

A brief history of audio playback

`<embed>`

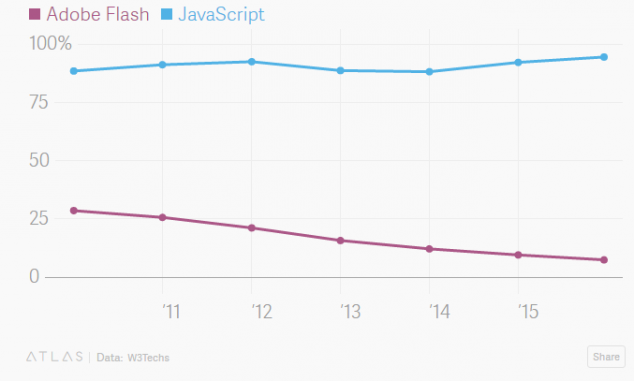

Plugins

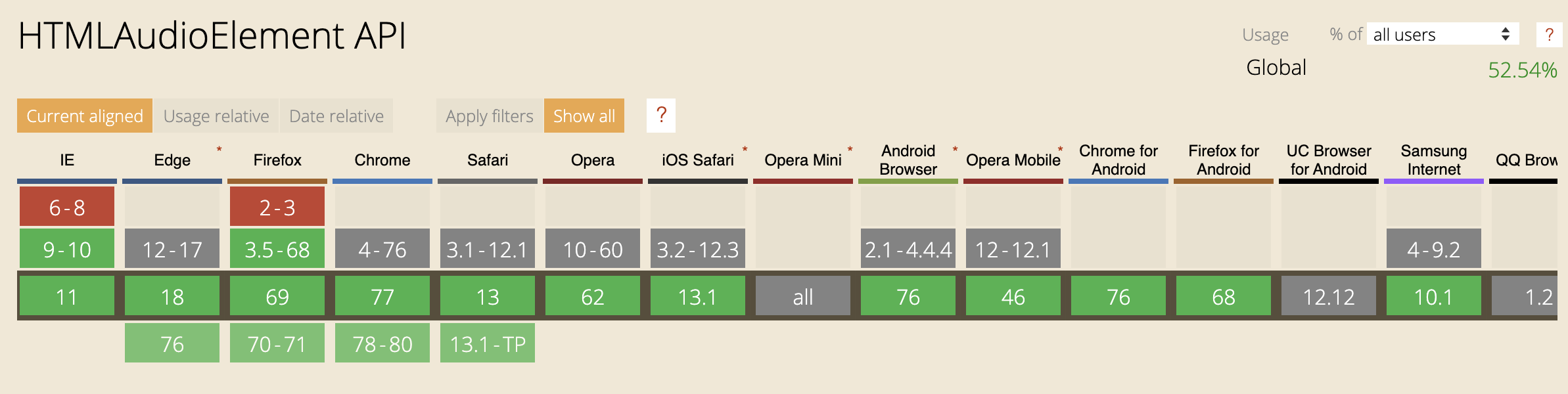

`<audio>`

Accessing the media stream

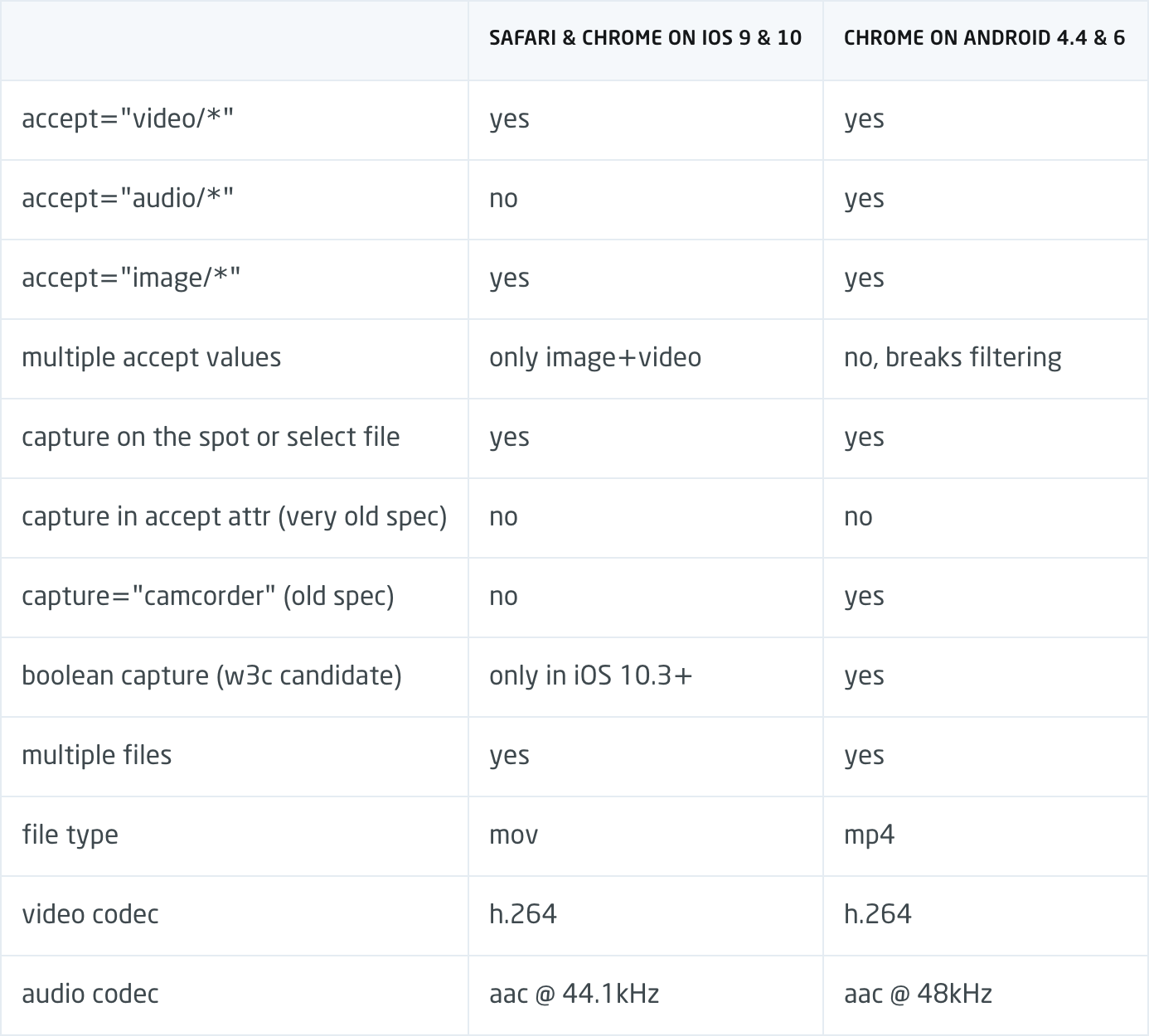

```html
<input type="file"/>
```

```html
<input type="file" accept="audio/*;capture=microphone">
```

```html
<device type="media" onchange="update(this.data)"></device>
<audio></audio>
<script>
  function update(stream) {
    document.querySelector('audio').src = stream.url;
  }
</script>
```

`<device>`

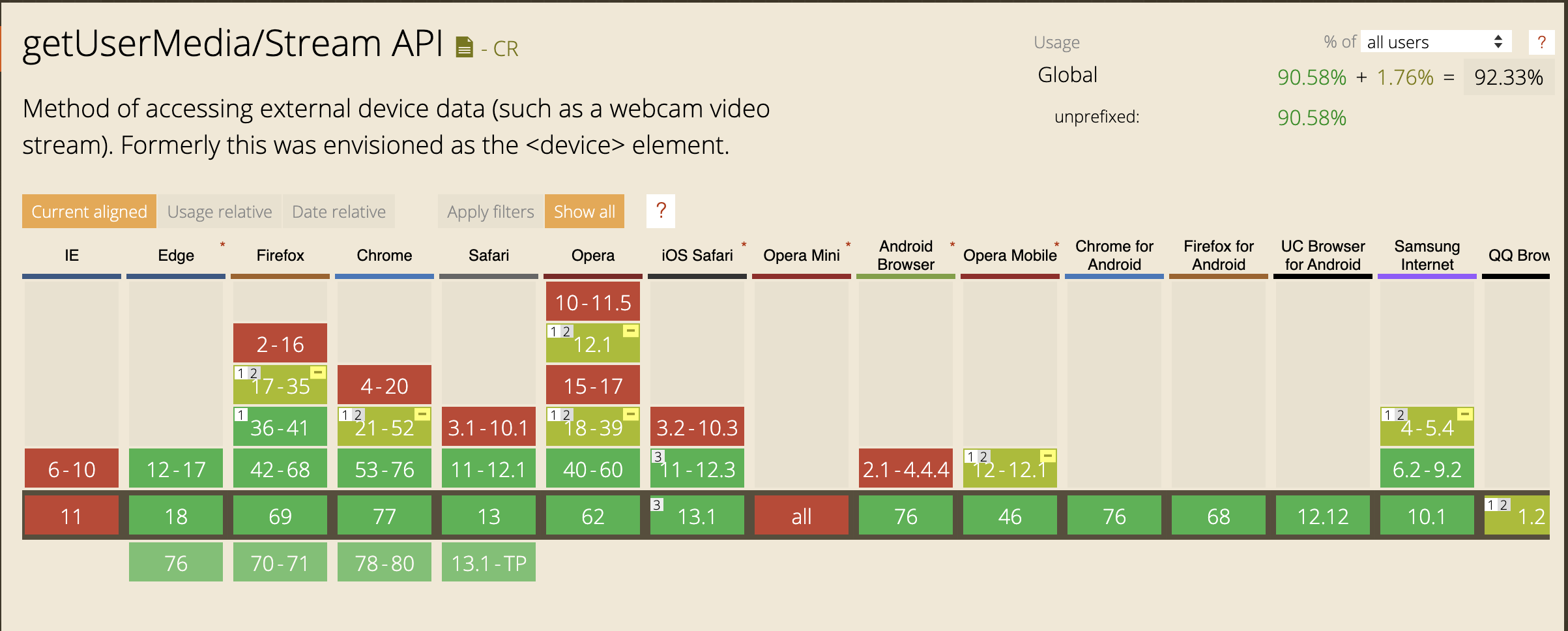

getUserMedia
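When access fails, getUserMedia rejects with a DOMException, and the exception's `name` says why. Below is a minimal sketch of a helper that could back the `errorCallback` in the example below. The helper name and the message texts are illustrative. Only the exception names come from the API.

```javascript
// Exception names that getUserMedia() can reject with, mapped to
// user-facing messages (the wording is illustrative)
const GET_USER_MEDIA_ERRORS = {
  NotAllowedError: 'Microphone access was denied by the user or the browser.',
  NotFoundError: 'No microphone was found.',
  NotReadableError: 'The microphone could not be opened (it may be in use by another app).',
  OverconstrainedError: 'No microphone satisfies the requested constraints.',
  SecurityError: 'Media access is disabled for this document.',
  AbortError: 'Access to the microphone was aborted.',
  TypeError: 'Invalid constraints, or the page is not served from a secure context.'
}

// Hypothetical helper: turn a rejection reason into a message for the UI
function describeGetUserMediaError (err) {
  const name = err && err.name
  if (name && Object.prototype.hasOwnProperty.call(GET_USER_MEDIA_ERRORS, name)) {
    return GET_USER_MEDIA_ERRORS[name]
  }
  return `Could not access the microphone: ${String(err)}`
}

// e.g. in a catch block: showMessage(describeGetUserMediaError(err))
// (showMessage stands for whatever the app uses to display errors)
```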


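```javascript
// successCallback and errorCallback are the handlers supplied by the app
const getMedia = async (successCallback, errorCallback) => {
  try {
    const stream = await navigator.mediaDevices.getUserMedia({ audio: true })
    /* handle the stream */
    successCallback(stream)
  } catch (err) {
    /* handle the error */
    errorCallback(err)
  }
}
```

Processing and saving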

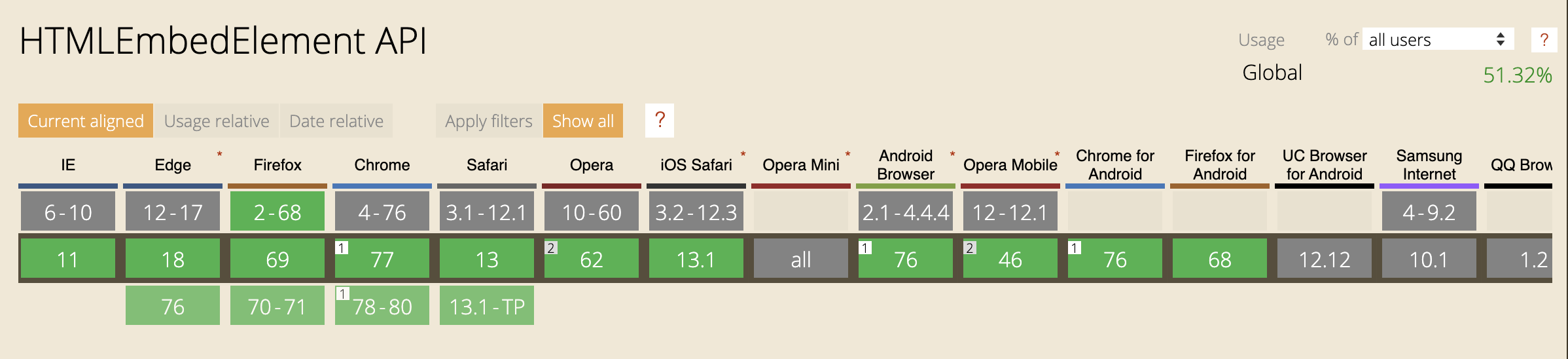

Web APIs

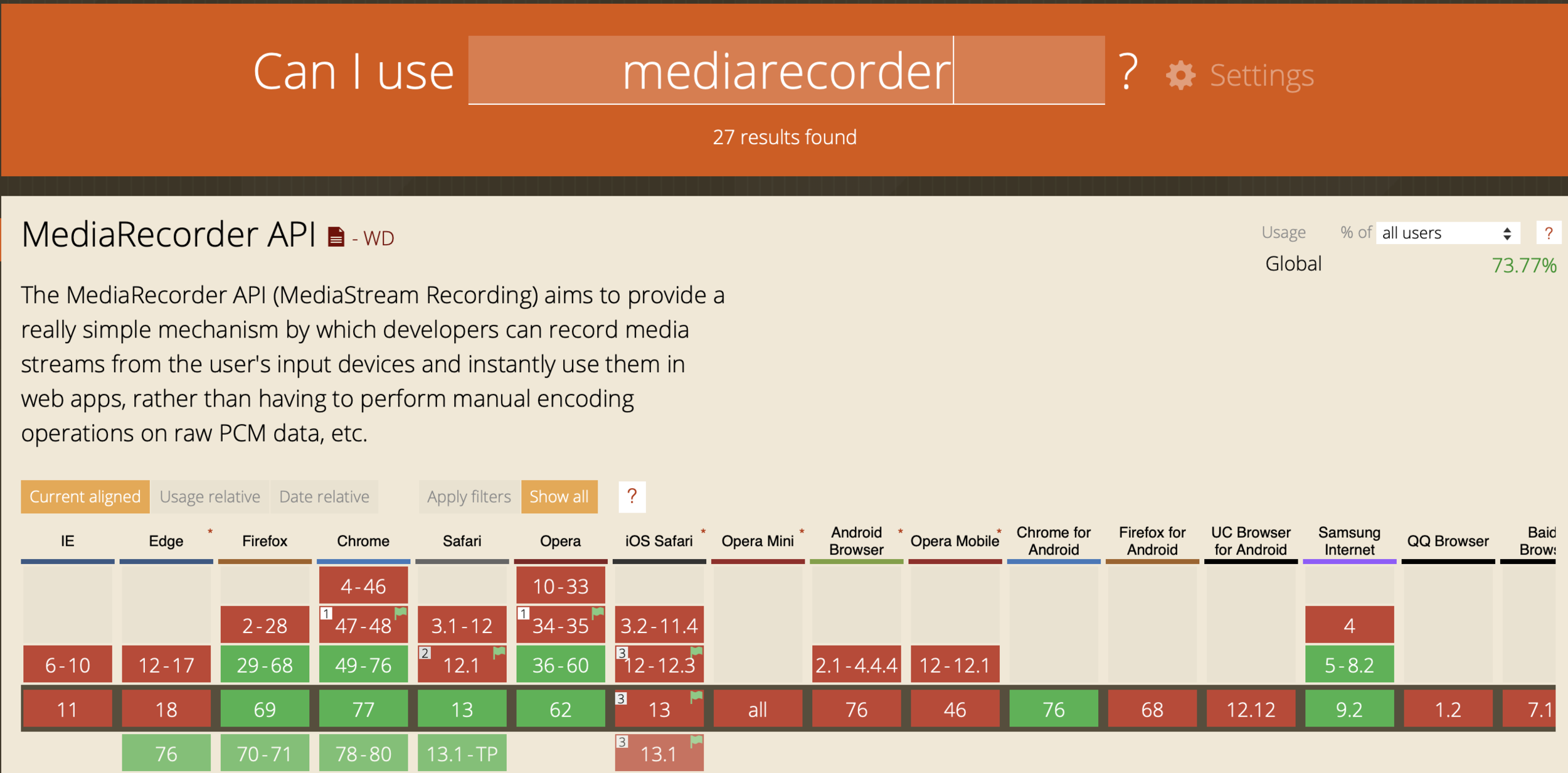

MediaRecorder API

Media Streams API

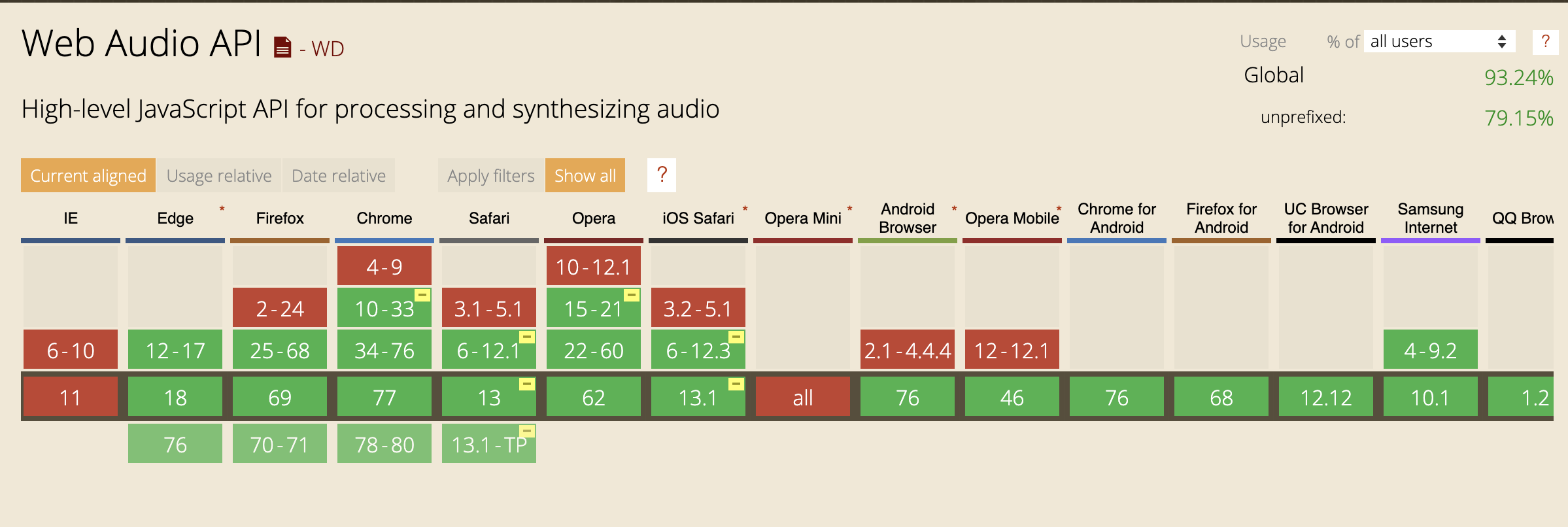

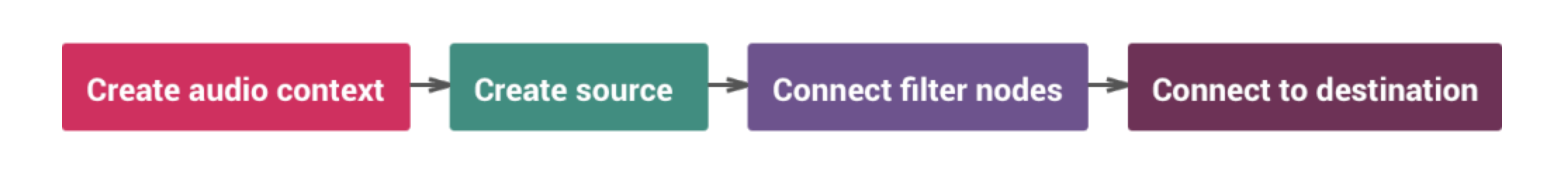

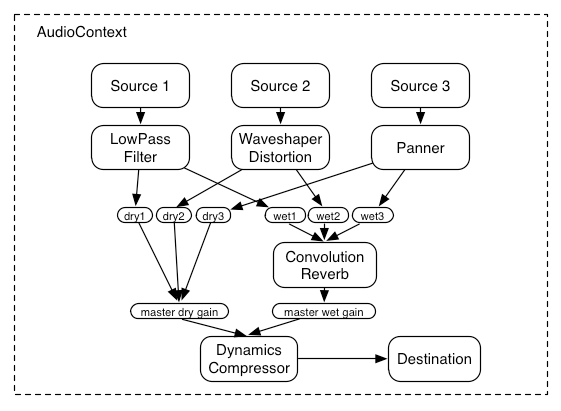
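In the Media Streams API, the MediaStream that getUserMedia resolves with is a set of MediaStreamTrack objects. Below is a small sketch of two hypothetical helpers. The first inspects the audio tracks. The second releases the microphone once recording is over: stopping every track is what typically turns off the browser's recording indicator. Which fields `getSettings()` reports depends on the browser.

```javascript
// Summarize the audio tracks of a MediaStream: device label, state, and the
// settings the browser actually applied (fields vary by browser)
function describeAudioTracks (stream) {
  return stream.getAudioTracks().map((track) => ({
    label: track.label,
    readyState: track.readyState,
    settings: track.getSettings()
  }))
}

// Stop every track so the browser releases the microphone.
// A stopped track cannot be restarted: request a new stream with
// getUserMedia instead. Returns how many tracks were stopped.
function releaseStream (stream) {
  const tracks = stream.getTracks()
  tracks.forEach((track) => track.stop())
  return tracks.length
}
```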

Web Audio API


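```javascript
// `stream` is the MediaStream returned by getUserMedia
const AudioContext = window.AudioContext || window.webkitAudioContext
const audioCtx = new AudioContext()
// Graph: microphone -> gain -> speakers
// (playing the microphone back can cause feedback without headphones)
const sourceNode = audioCtx.createMediaStreamSource(stream)
const gainNode = audioCtx.createGain()
const finishNode = audioCtx.destination
sourceNode.connect(gainNode)
gainNode.connect(finishNode)
```

AudioNode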
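Nodes can also be attached to the graph purely for analysis. The sketch below builds an input level meter and assumes the `audioCtx` and `sourceNode` from the example above. An AnalyserNode exposes the current block of samples through `getFloatTimeDomainData`. The root-mean-square value of those samples, converted to decibels relative to full scale (dBFS), gives a level reading. The function names are hypothetical, and only the loudness math runs outside a browser.

```javascript
// Root-mean-square level of samples in [-1, 1], in dB relative to full scale.
// Silence gives -Infinity, a constant full-scale signal gives 0.
function rmsToDbfs (samples) {
  let sumOfSquares = 0
  for (let i = 0; i < samples.length; i++) sumOfSquares += samples[i] * samples[i]
  const rms = Math.sqrt(sumOfSquares / samples.length)
  return rms > 0 ? 20 * Math.log10(rms) : -Infinity
}

// Browser-only: tap `sourceNode` with an AnalyserNode and report the level on
// every animation frame. Returns a function that stops the meter.
function startLevelMeter (audioCtx, sourceNode, onLevel) {
  const analyser = audioCtx.createAnalyser()
  analyser.fftSize = 2048
  sourceNode.connect(analyser)
  const samples = new Float32Array(analyser.fftSize)
  let frameId = 0
  const tick = () => {
    analyser.getFloatTimeDomainData(samples)
    onLevel(rmsToDbfs(samples))
    frameId = requestAnimationFrame(tick)
  }
  tick()
  return () => {
    cancelAnimationFrame(frameId)
    sourceNode.disconnect(analyser)
  }
}
```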

Example: where the 44.1 kHz sample rate comes from

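```javascript
/* NTSC: 60 fields/s x (490 usable lines / 2 fields per frame) x 3 samples per line */
const freq1 = 60 * (525 - 35) / 2 * 3 // 44100
/* PAL: 50 fields/s x (588 usable lines / 2 fields per frame) x 3 samples per line */
const freq2 = 50 * (625 - 37) / 2 * 3 // 44100
```

NTSC vs PAL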

MediaStream

ondataavailable

Blob
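The MediaStream → ondataavailable → Blob chain can be wrapped in a promise. The minimal sketch below uses a hypothetical `recordToBlob` name. It listens to MediaRecorder's `dataavailable` and `stop` events, collects the chunks, and resolves with one Blob. It accepts any EventTarget that emits those events, which is also what lets it run outside a browser.

```javascript
// Resolve with a single Blob once the recorder fires "stop".
// `recorder` can be a MediaRecorder or any EventTarget emitting the same events.
function recordToBlob (recorder, type) {
  return new Promise((resolve, reject) => {
    const chunks = []
    recorder.addEventListener('dataavailable', (event) => {
      // Skip empty chunks
      if (event.data && event.data.size > 0) chunks.push(event.data)
    })
    recorder.addEventListener('stop', () => {
      // Fall back to the type of the recorded chunks if none was given
      const blobType = type || (chunks.length > 0 ? chunks[0].type : '')
      resolve(new Blob(chunks, { type: blobType }))
    }, { once: true })
    recorder.addEventListener('error', (event) => reject(event.error || event), { once: true })
  })
}

// In the browser:
// const recorder = new MediaRecorder(stream)
// const recording = recordToBlob(recorder)
// recorder.start()
// ... later: recorder.stop(); const blob = await recording
```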

MediaRecorder API

MediaRecorder

```javascript
// Assumes the component's template contains <audio ref="audio" controls></audio>
export default {
  name: 'MediaRecorderComponent',
  data () {
    return {
      mediaRecorder: null,
      isAudioAccessReceived: false,
      isRecording: false,
      chunks: []
    }
  },
  methods: {
    async toggleRecord () {
      if (!this.isAudioAccessReceived) {
        try {
          const stream = await navigator.mediaDevices.getUserMedia({ audio: true })
          // No mimeType option: the browser picks a format it can record
          this.mediaRecorder = new window.MediaRecorder(stream)
          this.mediaRecorder.ondataavailable = (event) => this.chunks.push(event.data)
          this.mediaRecorder.onstop = () => {
            // Label the Blob with the format that was actually recorded
            const blob = new Blob(this.chunks, { type: this.mediaRecorder.mimeType })
            this.chunks = []
            this.$refs.audio.src = window.URL.createObjectURL(blob)
          }
          this.isAudioAccessReceived = true
        } catch (e) {
          /* handle error */
          return
        }
      }
      if (!this.isRecording) {
        this.mediaRecorder.start()
      } else {
        this.mediaRecorder.stop()
      }
      this.isRecording = !this.isRecording
    }
  }
}
```
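Passing MediaRecorder a `mimeType` it cannot produce makes the constructor throw, and supported formats differ between browsers (for example, Chrome records WebM/Opus while Safari records MP4). The sketch below picks the first supported type from a preference list. The list itself and the `pickMimeType` name are assumptions; adapt the list to whatever the server accepts.

```javascript
// Preference order is an assumption; put first what your backend handles best
const CANDIDATE_TYPES = [
  'audio/webm;codecs=opus',
  'audio/ogg;codecs=opus',
  'audio/mp4'
]

// Return the first candidate accepted by isTypeSupported, or '' to let the
// browser choose. The check is passed in so the function is easy to test.
function pickMimeType (isTypeSupported, candidates = CANDIDATE_TYPES) {
  return candidates.find((type) => isTypeSupported(type)) || ''
}

// In the browser:
// const mimeType = pickMimeType((type) => MediaRecorder.isTypeSupported(type))
// const recorder = new MediaRecorder(stream, mimeType ? { mimeType } : {})
```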

```html
<template>
  <div class="text-center" padding>
    <div class="q-ma-md text-center text-h4">MediaRecorder API</div>
    <div class="q-ma-md text-center">
      <q-btn @click="start" label="start" class="q-ma-sm" rounded color="blue"/>
      <q-btn @click="stop" label="stop" class="q-ma-sm" rounded color="blue"/>
    </div>
    <div v-show="!isMediaRecorderSupported">{{ error }}</div>
  </div>
</template>

<script>
export default {
  name: 'VueMediaRecorder',
  data () {
    return {
      // mediaRecorder is created after getUserMedia, as in the previous example
      mediaRecorder: null,
      isMediaRecorderSupported: typeof window.MediaRecorder !== 'undefined',
      error: 'MediaRecorder is not supported in this browser'
    }
  },
  methods: {
    start () {
      this.mediaRecorder.start()
    },
    stop () {
      this.mediaRecorder.stop()
    }
  }
}
</script>
```

VueJS with MediaRecorder


export default {

name: 'MediaRecorderComponent',

data () {

return {

chunks: []

}

},

async mounted () {

try {

this.mediaRecorder.onstop = this.handleStop

} catch (e) { /* handle error */ }

},

methods: {

handleStop () {

const blob = new Blob(this.chunks, { type: 'audio/ogg; codecs=opus' })

const audioURL = window.URL.createObjectURL(blob)

this.chunks = []

}

}

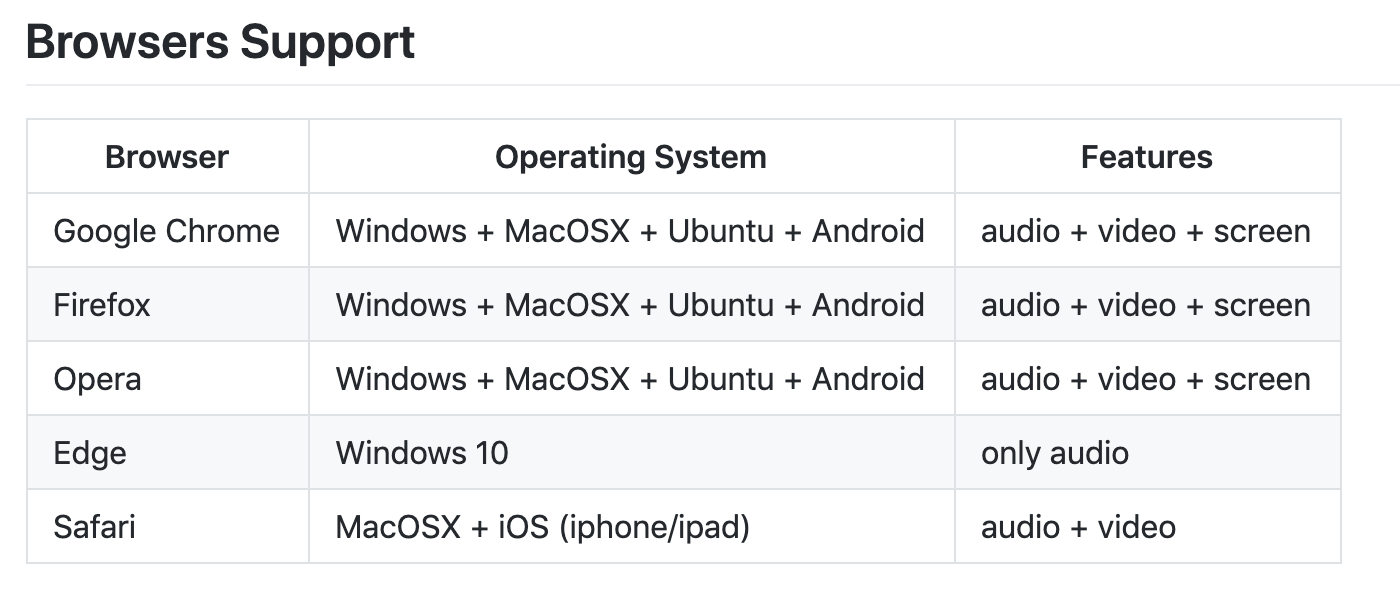

}MediaRecorder Support
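MediaRecorder is not available everywhere. One option is to detect up front which recording path the environment supports, and fall back to a Web Audio based library (such as RecorderJS or WebAudioRecorder, covered next) when MediaRecorder is missing. The sketch below uses a hypothetical function name and three hypothetical strategy labels. The environment object is a parameter only so the check can run outside a browser.

```javascript
// Returns 'native'   -> use MediaRecorder directly
//         'webaudio' -> microphone + Web Audio available: use a Web Audio based recorder
//         'none'     -> recording is not possible in this environment
function detectRecordingStrategy (env = globalThis) {
  const nav = env.navigator
  const hasGetUserMedia = Boolean(
    nav && nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function'
  )
  if (!hasGetUserMedia) return 'none'
  if (typeof env.MediaRecorder !== 'undefined') return 'native'
  if (typeof env.AudioContext !== 'undefined' || typeof env.webkitAudioContext !== 'undefined') {
    return 'webaudio'
  }
  return 'none'
}
```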

Alternatives

RecorderJS

```html
<template>
  <div padding>
    <div v-if="getIsMediaDevicesAvailable">
      <q-btn
        @click="toggleRecord()"
        :label="isRecording ? 'stop' : 'record'"
        :disable="recButtonDisabled"
      />
    </div>
  </div>
</template>

<script>
// AudioAnswerRecord, CommonTimerCounter and the Vuex helpers used below
// (getIsMediaDevicesAvailable, setIsMediaDevicesAvailable, writeRecordAnswer)
// come from the author's project and are not shown on the slide.
// window.Recorder is provided by Recorderjs.
export default {
  name: 'AudioRecorder',
  components: {
    AudioAnswerRecord,
    CommonTimerCounter
  },
  data () {
    return {
      notStoredAnswersRecords: [],
      isRecording: false,
      recordProcessCircle: 0,
      isDataDownloading: false,
      recButtonDisabled: false,
      isWaiting: false,
      recorder: null,
      stream: null,
      audioContext: null,
      recorderUnAvailable: null
    }
  },
  created () {
    const AudioContextClass = window.AudioContext || window.webkitAudioContext
    this.audioContext = new AudioContextClass()
  },
  beforeDestroy () {
    // Release the audio hardware, not just the reference
    if (this.audioContext) this.audioContext.close()
    this.audioContext = null
  },
  methods: {
    toggleRecord () {
      if (this.isRecording) {
        this.isRecording = false
        setTimeout(() => {
          this.recorder.stop()
          this.stream.getAudioTracks()[0].stop()
          this.recorder.exportWAV((finalUserData) => {
            const notStoredAnswerRecord = {
              name: `${new Date().getTime()}-${finalUserData.size}.wav`,
              blobObj: finalUserData
            }
            this.notStoredAnswersRecords.push(notStoredAnswerRecord)
            this.writeRecordAnswer(notStoredAnswerRecord)
              .then(() => {
                console.debug(
                  'AudioRecorder writeRecordAnswer this.notStoredAnswersRecords =',
                  this.notStoredAnswersRecords
                )
                this.notStoredAnswersRecords = this.notStoredAnswersRecords
                  .filter(record => record.name !== notStoredAnswerRecord.name)
              })
              .catch(() => this.$refs.notStoredAnswersRecords.forEach(notStoredComponentRecord => {
                console.debug('notStoredComponentRecord =', notStoredComponentRecord)
                notStoredComponentRecord.setErrorDownloading()
              }))
            this.recButtonDisabled = false
          })
        }, 700)
      } else {
        this.recButtonDisabled = true
        console.debug('Try record start this.recButtonDisabled =', this.recButtonDisabled)
        navigator.mediaDevices.getUserMedia({ audio: true })
          .then((stream) => {
            this.setIsMediaDevicesAvailable(true)
            if (this.audioContext.state === 'suspended') {
              /**
               * This is due to a Chrome peculiarity: the audio context has to be
               * started manually if the user has not performed any action yet.
               * https://developers.google.com/web/updates/2017/09/autoplay-policy-changes#webaudio
               */
              this.audioContext.resume()
            }
            this.stream = stream
            console.debug('audioRecorder this.audioContext =', this.audioContext)
            this.recorder = new window.Recorder(
              this.audioContext.createMediaStreamSource(stream),
              { numChannels: 1, type: 'audio/wav' }
            )
            this.recorder.record()
            this.isRecording = true
            this.recButtonDisabled = false
          })
          .catch(err => {
            console.error('The following getUserMedia error occurred: ' + err)
            this.setIsMediaDevicesAvailable(false)
            this.recButtonDisabled = false
          })
        console.debug('this.recorder = ', this.recorder)
      }
    }
  }
}
</script>
```
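RecorderJS collects raw samples through Web Audio, and `exportWAV` turns them into a WAV Blob. Below is a simplified sketch of that last step; it is not the library's own code. It writes a 44-byte RIFF header followed by 16-bit little-endian PCM, mono only, matching `numChannels: 1` above. The `encodeWav` name is hypothetical.

```javascript
// Encode mono Float32 samples (range [-1, 1]) as a 16-bit PCM WAV file.
// Returns an ArrayBuffer; in the browser wrap it as
// new Blob([buffer], { type: 'audio/wav' }).
function encodeWav (samples, sampleRate) {
  const bytesPerSample = 2
  const dataSize = samples.length * bytesPerSample
  const buffer = new ArrayBuffer(44 + dataSize)
  const view = new DataView(buffer)
  const writeString = (offset, text) => {
    for (let i = 0; i < text.length; i++) view.setUint8(offset + i, text.charCodeAt(i))
  }
  writeString(0, 'RIFF')
  view.setUint32(4, 36 + dataSize, true)                 // file size minus the first 8 bytes
  writeString(8, 'WAVE')
  writeString(12, 'fmt ')
  view.setUint32(16, 16, true)                           // size of the fmt chunk
  view.setUint16(20, 1, true)                            // audio format 1 = PCM
  view.setUint16(22, 1, true)                            // one channel
  view.setUint32(24, sampleRate, true)
  view.setUint32(28, sampleRate * bytesPerSample, true)  // byte rate
  view.setUint16(32, bytesPerSample, true)               // block align
  view.setUint16(34, 16, true)                           // bits per sample
  writeString(36, 'data')
  view.setUint32(40, dataSize, true)
  for (let i = 0; i < samples.length; i++) {
    // Clamp, then scale to the signed 16-bit range
    const s = Math.max(-1, Math.min(1, samples[i]))
    view.setInt16(44 + i * bytesPerSample, s < 0 ? s * 0x8000 : s * 0x7fff, true)
  }
  return buffer
}
```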

XSound

```javascript
import { X } from 'xsound'

export default {
  name: 'XSoundRecorder',
  data () {
    return {
      isStop: false
    }
  },
  mounted () {
    X('oscillator').setup(true)
    X('oscillator').module('recorder').setup(4)
    this.isStop = true
  },
  methods: {
    toggleRecording () {
      if (this.isStop) {
        X('oscillator').module('recorder').ready(0)
        X('oscillator').module('recorder').start()
        X('oscillator').start(440)
      } else {
        X('oscillator').module('recorder').stop()
        X('oscillator').stop()
        console.log(X('oscillator').module('recorder').getActiveTrack())
      }
      this.isStop = !this.isStop
    }
  }
}
```

WebAudioRecorder

```javascript
// `source` is an AudioNode (e.g. from createMediaStreamSource) and
// `audioElement` is an <audio> element on the page
let webAudioRecorder = new WebAudioRecorder(source, {
  workerDir: 'web_audio_recorder_js/',
  encoding: 'mp3',
  options: {
    encodeAfterRecord: true,
    mp3: { bitRate: '320' }
  }
});

webAudioRecorder.onComplete = (webAudioRecorder, blob) => {
  let audioElementSource = window.URL.createObjectURL(blob);
  audioElement.src = audioElementSource;
  audioElement.controls = true;
}

webAudioRecorder.onError = (webAudioRecorder, err) => {
  console.error(err);
}

webAudioRecorder.startRecording();
// In practice called later, e.g. from a Stop button
webAudioRecorder.finishRecording();
```

MediaStreamRecorder

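```html
<script>
  var mediaConstraints = {
    audio: true
  };
  var mediaRecorder;

  // navigator.getUserMedia is deprecated; the promise-based API does the same job
  navigator.mediaDevices.getUserMedia(mediaConstraints)
    .then(onMediaSuccess)
    .catch(onMediaError);

  function onMediaSuccess(stream) {
    mediaRecorder = new MediaStreamRecorder(stream);
    mediaRecorder.mimeType = 'audio/webm'; // the stream is audio-only
    mediaRecorder.ondataavailable = function (blob) {
      // POST/PUT "Blob" using FormData/XHR2
      var blobURL = URL.createObjectURL(blob);
      // document.write would wipe a loaded page, so append a link instead
      var link = document.createElement('a');
      link.href = blobURL;
      link.textContent = blobURL;
      document.body.appendChild(link);
    };
    // Deliver a Blob every 3000 ms
    mediaRecorder.start(3000);
  }

  function onMediaError(e) {
    console.error('media error', e);
  }

  // Other controls, once recording has started:
  // mediaRecorder.stop();
  // mediaRecorder.start();
  // mediaRecorder.save();
</script>
```

RecordRTC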

```javascript
// Top-level await: run as an ES module or inside an async function
let stream = await navigator.mediaDevices.getUserMedia({video: true, audio: true});
let recorder = new RecordRTCPromisesHandler(stream, {
  type: 'video'
});
recorder.startRecording();

const sleep = m => new Promise(r => setTimeout(r, m));
await sleep(3000);

await recorder.stopRecording();
let blob = await recorder.getBlob();
invokeSaveAsDialog(blob);
```

WebRTC adapter

Ready-made components

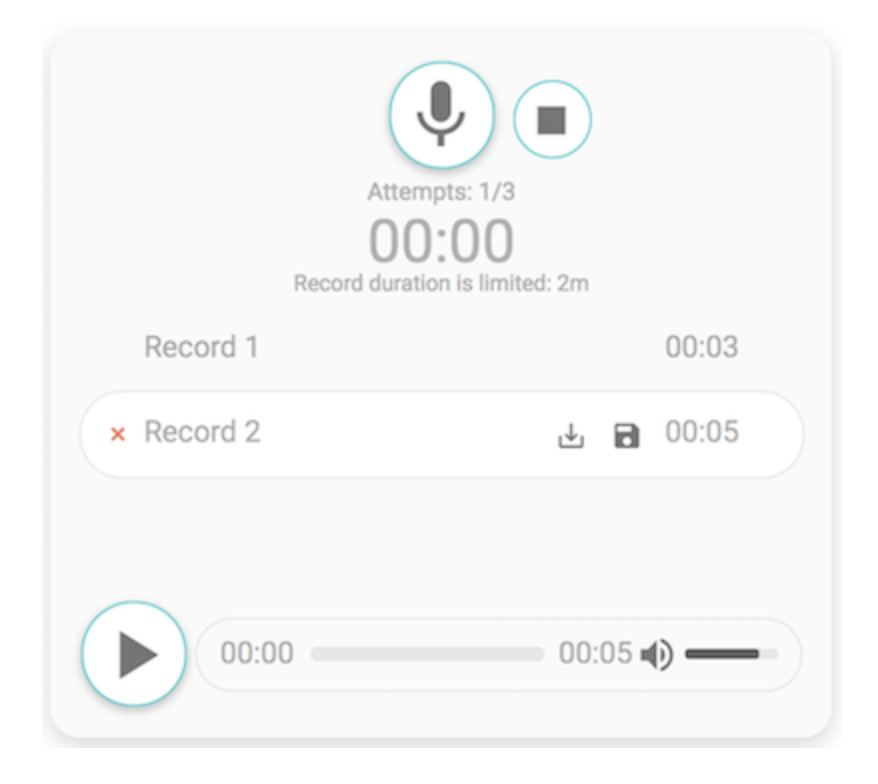

VueAudioRecorder

```javascript
/* boot/audioRecorder.js */
import AudioRecorder from 'vue-audio-recorder'

export default ({ Vue }) => {
  Vue.use(AudioRecorder)
}
```

VueAudioRecorder

```html
<!-- MyVueAudioRecorder.vue -->
<template>
  <audio-recorder
    upload-url="some url"
    :attempts="3"
    :time="2"
    :before-recording="callback"
    :after-recording="callback"
    :before-upload="callback"
    :successful-upload="callback"
    :failed-upload="callback"></audio-recorder>
</template>

<script>
export default {
  name: 'MyVueAudioRecorder',
  methods: {
    callback (msg) {
      console.debug('Event: ', msg)
    }
  }
}
</script>
```

A couple of snippets

Audio Blob

```javascript
// Convert a base64 data URI (e.g. "data:audio/ogg;base64,...") to raw bytes
const BASE64_MARKER = ';base64,';

const convertDataURIToBinary = (dataURI) => {
  const base64Index = dataURI.indexOf(BASE64_MARKER) + BASE64_MARKER.length;
  const base64 = dataURI.substring(base64Index);
  const raw = window.atob(base64);
  const rawLength = raw.length;
  const array = new Uint8Array(new ArrayBuffer(rawLength));
  for (let i = 0; i < rawLength; i++) {
    array[i] = raw.charCodeAt(i);
  }
  return array;
}

// `data` is the data URI string
const binary = convertDataURIToBinary(data);
const blob = new Blob([binary], { type: 'audio/ogg' });
const blobUrl = URL.createObjectURL(blob);
```

Creating an object for download
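A recording Blob can be offered to the user as a file through a temporary link with the `download` attribute. The sketch below assumes a regular browser page. `downloadBlob` is a hypothetical name, and the file name is up to the caller. The one-second delay before `revokeObjectURL` is an arbitrary safety margin.

```javascript
// Offer a Blob as a file download through a temporary <a download> link.
// `doc` defaults to the page's document; it is a parameter only for testing.
function downloadBlob (blob, filename, doc = globalThis.document) {
  const url = URL.createObjectURL(blob)
  const link = doc.createElement('a')
  link.href = url
  link.download = filename
  // Attach the link while clicking it; some older browsers ignore detached links
  doc.body.appendChild(link)
  link.click()
  doc.body.removeChild(link)
  // Free the URL later rather than immediately (the delay is arbitrary)
  setTimeout(() => URL.revokeObjectURL(url), 1000)
  return url
}

// e.g. in the recorder's "stop" handler:
// downloadBlob(audioBlob, 'recording.webm')
```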

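```javascript
// audioChunks holds event.data collected from the recorder's "dataavailable" events
mediaRecorder.addEventListener("stop", () => {
  const audioBlob = new Blob(audioChunks)
  const audioUrl = URL.createObjectURL(audioBlob)
})
```

Useful links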

- https://github.com/yandex/audio-js/blob/master/tutorial/sound.md

- https://developers.google.com/web/fundamentals/media/recording-audio

- https://gist.github.com/borismus/1032746

- https://github.com/mattdiamond/Recorderjs

- https://github.com/higuma/web-audio-recorder-js

- https://recordrtc.org/

- https://github.com/streamproc/MediaStreamRecorder