Deep Learning

Introduction

Nov 2019

SK C&C

Tech Training Group

Surk Park, Principal

Welcome

Surk Park

Software Engineer @SK C&C

Creative AI Researcher

Travel, Swimming, Hiking, Snowboarding

E-mail : parksurk@gmail.com

Blog : http://parksurk.github.io

Facebook : http://www.facebook.com/parksurk

LinkedIn : https://www.linkedin.com/in/parksurk

GitHub : https://github.com/parksurk

AI = The New Electricity?

Leading Big Transformation

Introduction to Deep Learning

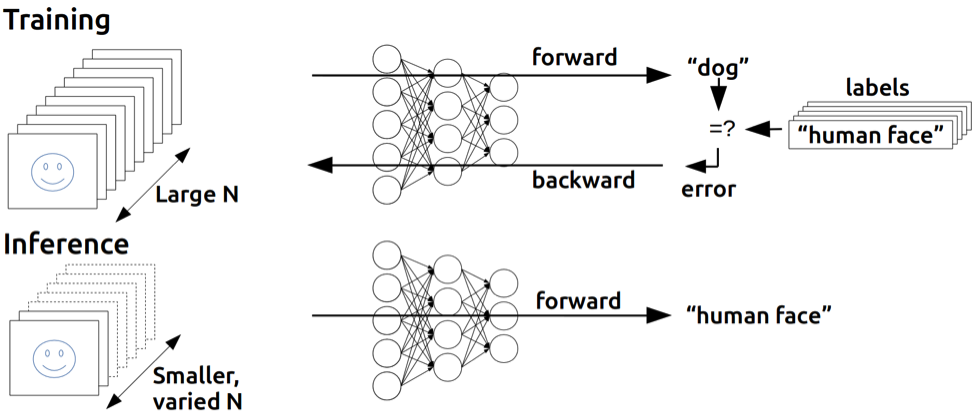

Be able to explain the major trends driving the rise of deep learning, and understand where and how it is applied today

Session 1

Brief History of DL

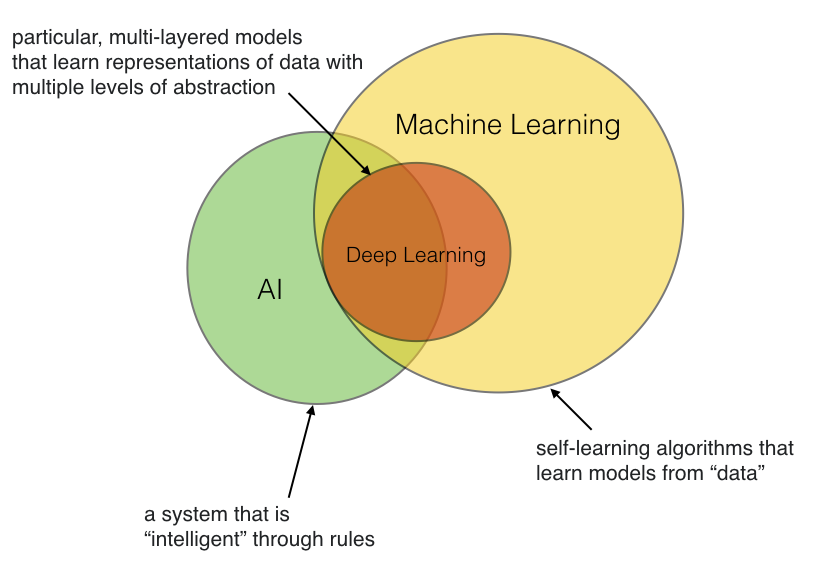

Position of Deep Learning

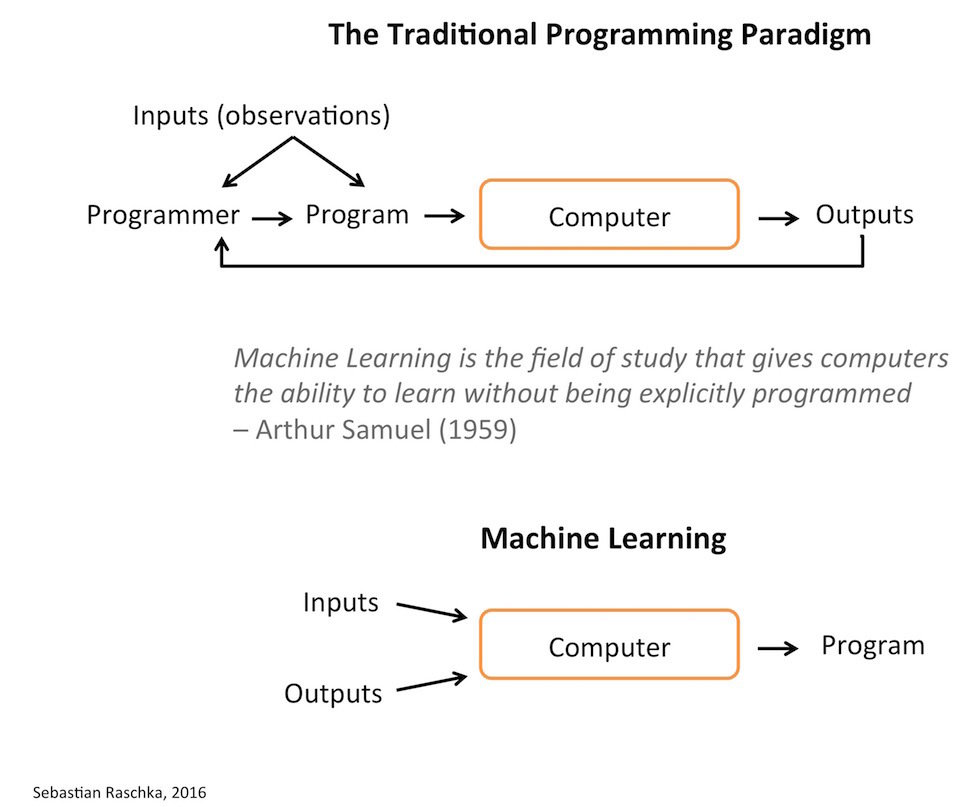

Machine Learning?

Human Dream : Thinking Machine

What this video promised is still not really here.

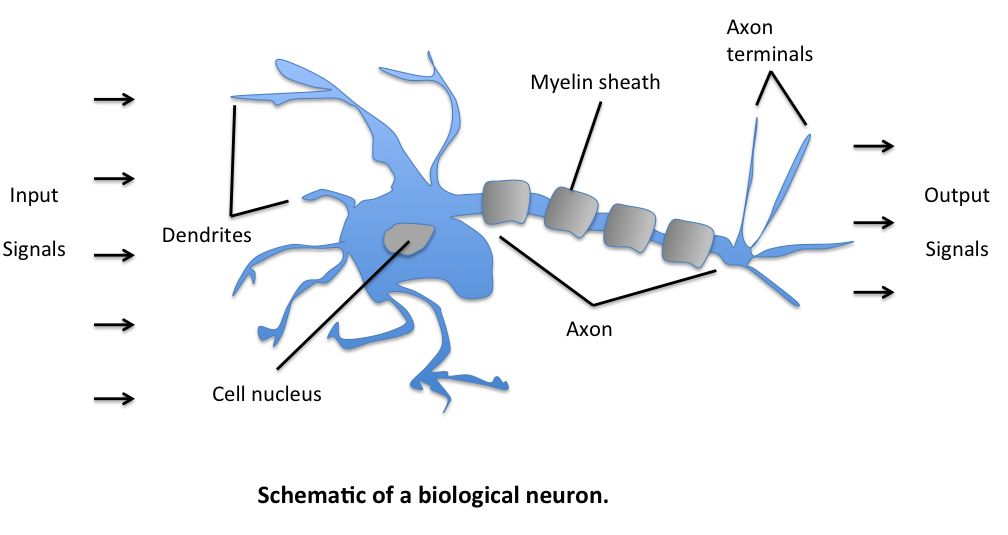

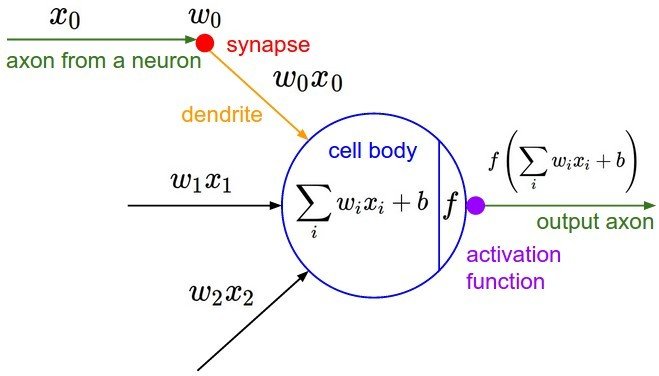

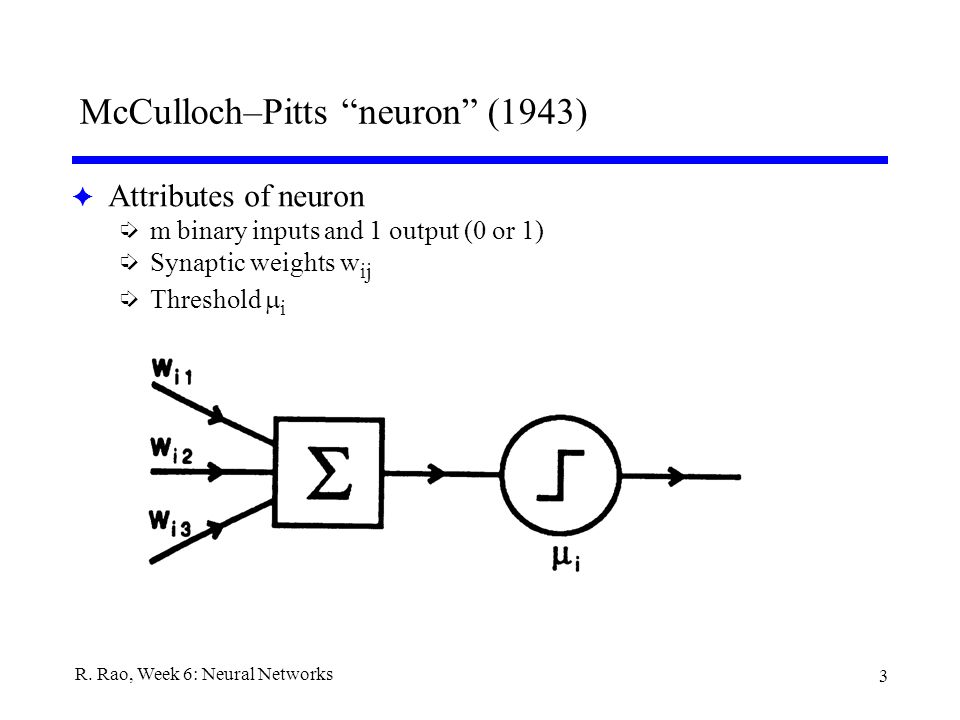

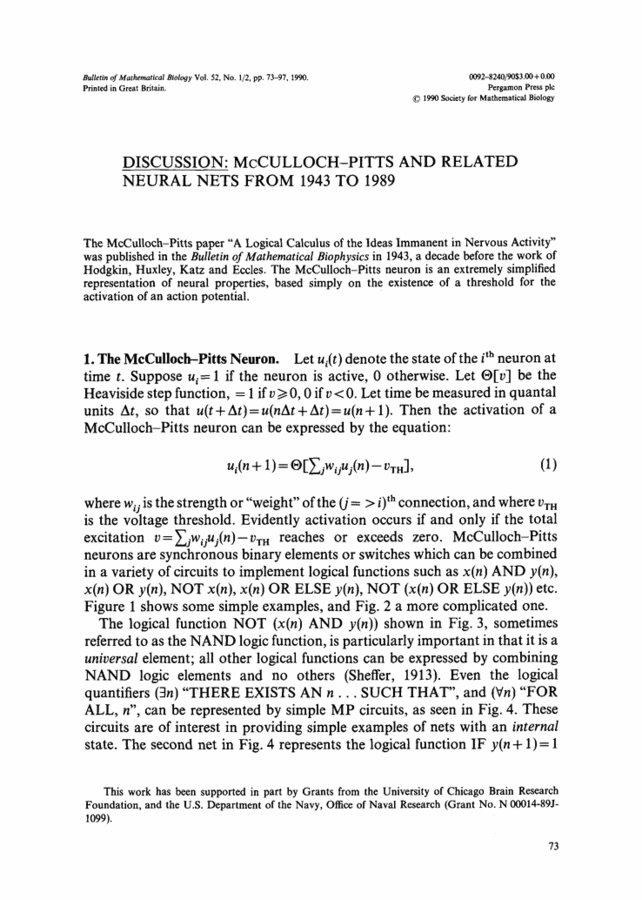

Hint from Neuron

Biological Inspiration

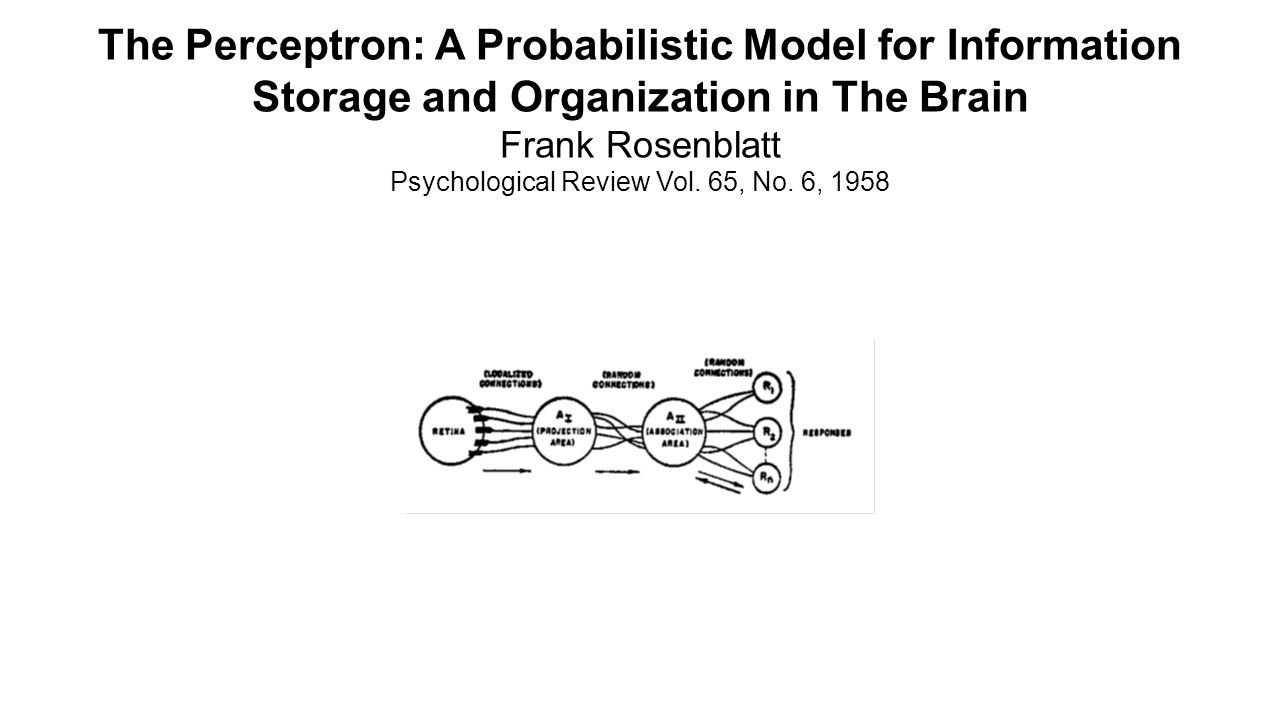

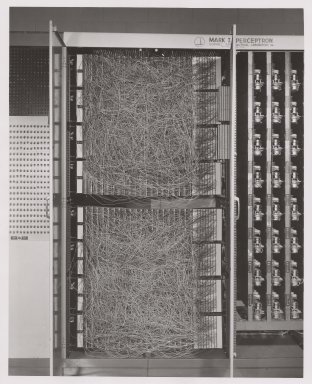

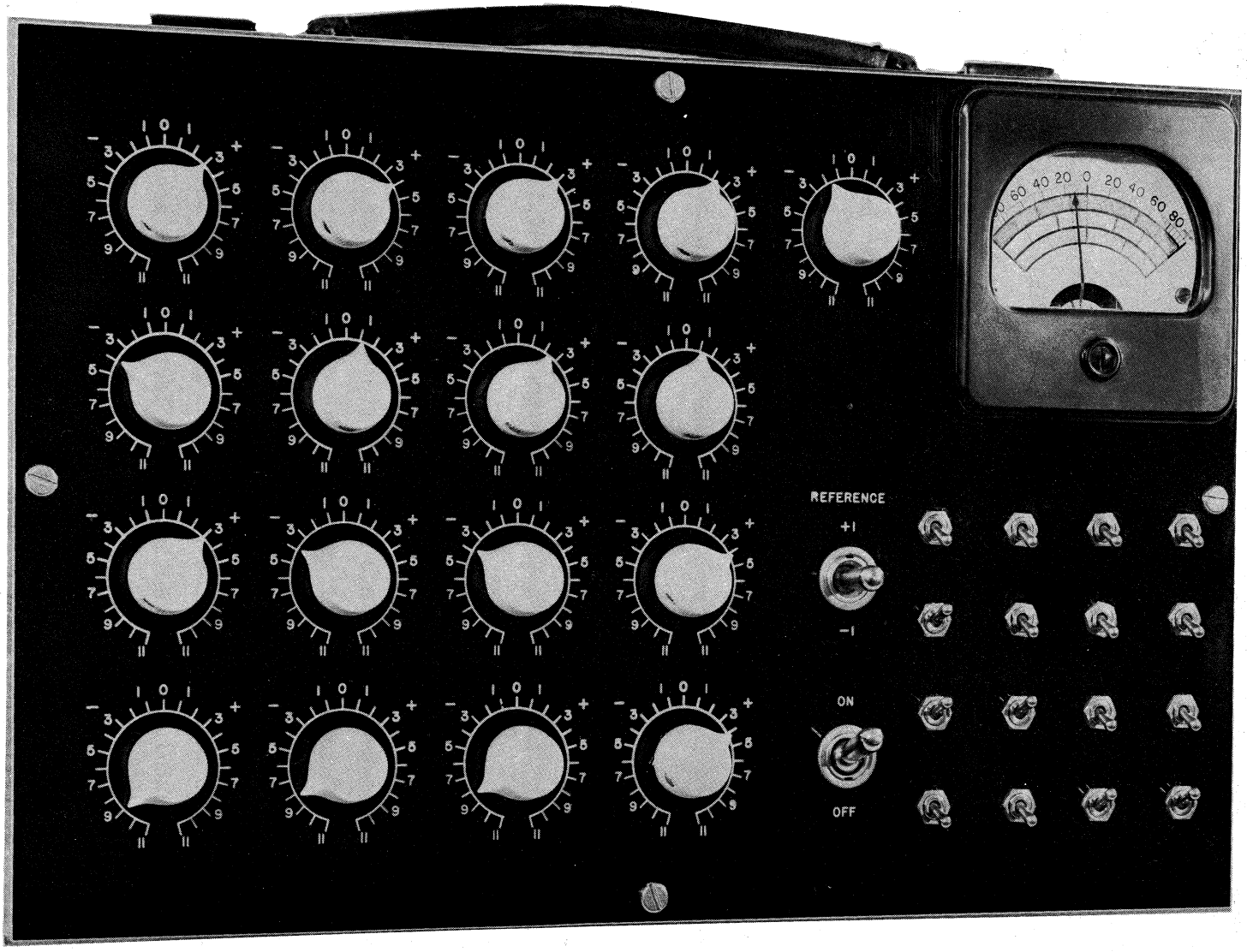

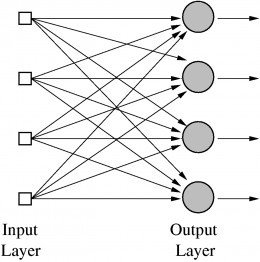

Perceptron (1958)

H/W of Perceptron

Perceptron

by Frank Rosenblatt

1957

Adaline

by Bernard Widrow and Ted Hoff

1960

People Believed False Promises

“The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence … Dr. Frank Rosenblatt, a research psychologist at the Cornell Aeronautical Laboratory, Buffalo, said Perceptrons might be fired to the planets as mechanical space explorers”

New York Times

July 08, 1958

XOR problem

Linearly separable?
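As a minimal sketch of what "linearly separable" means in practice, the NumPy code below applies the classic perceptron learning rule (step activation, weights nudged only on mistakes) to AND and to XOR. The function names, learning rate and epoch count are illustrative assumptions, not taken from the slides. AND is linearly separable, so the rule converges; no single line separates the XOR cases, so at least one prediction always stays wrong.

```python
import numpy as np

def train_perceptron(X, y, lr=1.0, epochs=50):
    """Perceptron learning rule: step activation, update weights only on mistakes."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            pred = 1 if xi @ w + b > 0 else 0
            # (target - pred) is 0 when correct and +1/-1 when wrong,
            # so the boundary only moves toward misclassified points.
            w += lr * (target - pred) * xi
            b += lr * (target - pred)
    return w, b

def predict(X, w, b):
    return (X @ w + b > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])
y_xor = np.array([0, 1, 1, 0])

for name, y in (("AND", y_and), ("XOR", y_xor)):
    w, b = train_perceptron(X, y)
    print(name, "target:", y, "learned:", predict(X, w, b))
```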

Perceptrons (1969)

Perceptrons

by Marvin Minsky (co-founder of the MIT AI Lab) and Seymour Papert

1969

-

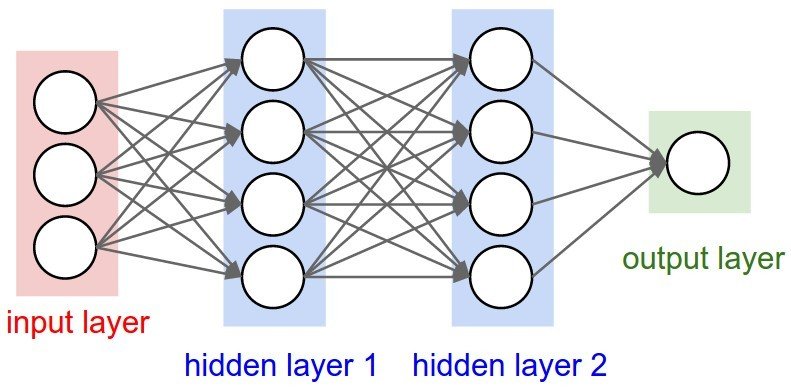

We need to use MLP, multilayer perceptrons

-

No one on earth had found a viable way to train MLPs good enough to learn such simple functions.

An MLP can solve the XOR problem
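To illustrate why an extra layer helps, here is a sketch of a 2-2-1 MLP that computes XOR with hand-picked weights (the weights and names are hypothetical, chosen for readability): one hidden unit acts as OR, the other as NAND, and the output unit ANDs them. It shows that a solution exists; it says nothing about how to learn such weights, which was exactly the open problem at the time.

```python
import numpy as np

def step(z):
    return (z > 0).astype(int)

# Hand-picked (not learned) weights: hidden unit 1 = OR, hidden unit 2 = NAND.
W1 = np.array([[1.0, -1.0],
               [1.0, -1.0]])
b1 = np.array([-0.5, 1.5])
# Output unit = AND of the two hidden units.
W2 = np.array([1.0, 1.0])
b2 = -1.5

def mlp_xor(X):
    h = step(X @ W1 + b1)     # layer 1: two linear boundaries, each followed by a step
    return step(h @ W2 + b2)  # layer 2: combine the two half-planes

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(mlp_xor(X))  # [0 1 1 0]
```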


1st Winter (1969)

"No one on earth had found a viable way to train..."

Marvin Minsky, 1969

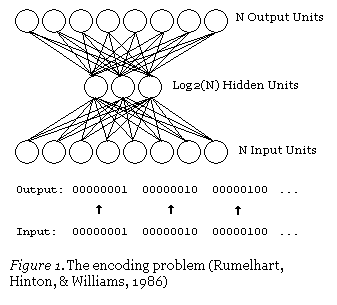

Backpropagation (1986)

(Paul Werbos 1974, 1982; Rumelhart, Hinton & Williams 1986)

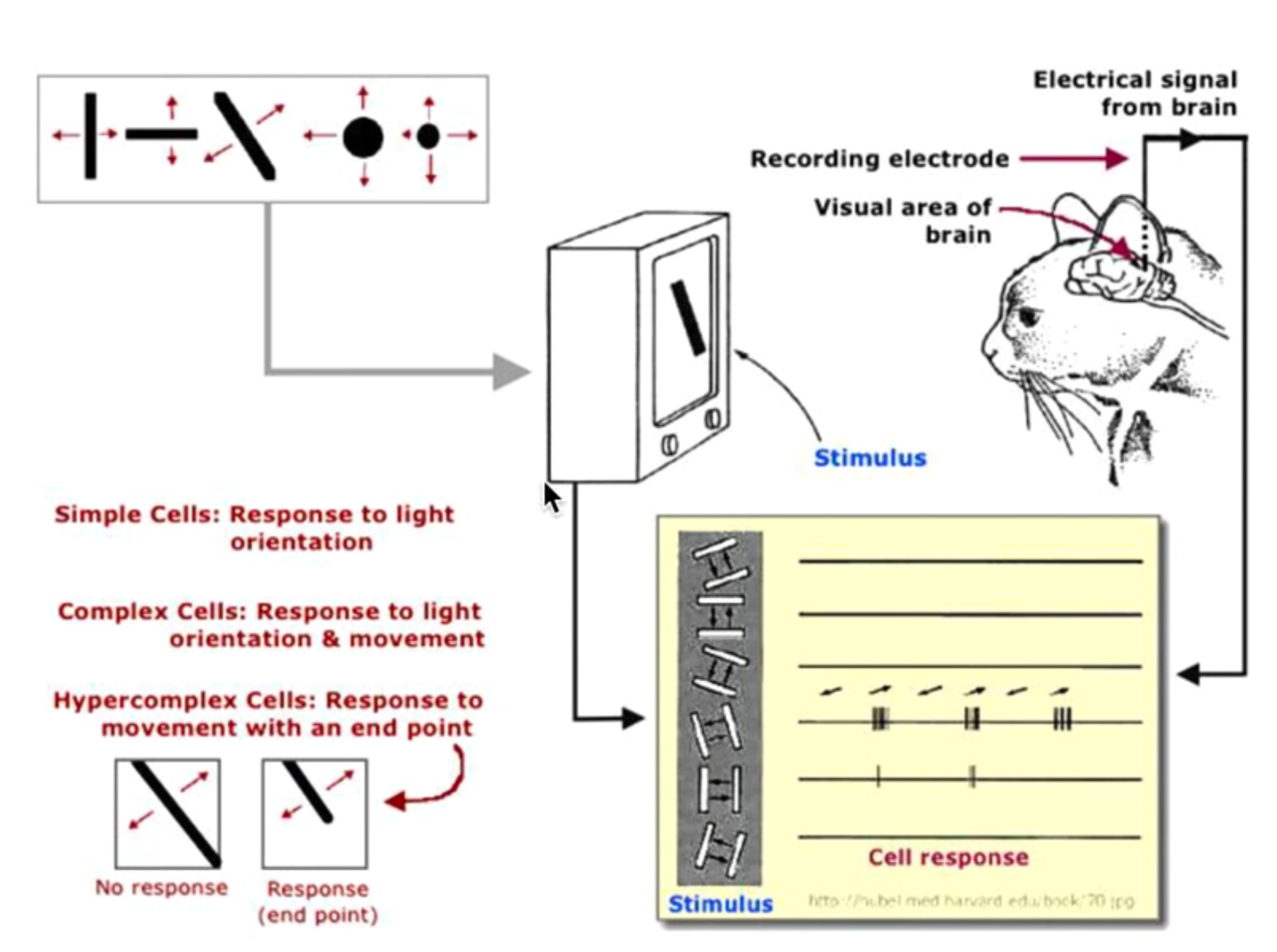
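Backpropagation is what made weights like these learnable. Below is a minimal sketch, assuming a 2-8-1 network with a tanh hidden layer, a sigmoid output, cross-entropy loss and plain full-batch gradient descent; the layer size, learning rate, step count and seed are illustrative choices, not taken from the original papers. The backward pass is simply the chain rule applied layer by layer, from the output back to the input.

```python
import numpy as np

def train_xor_backprop(hidden=8, lr=0.5, steps=10000, seed=0):
    """Train a 2-hidden-1 MLP on XOR with backpropagation + gradient descent."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    W1 = rng.normal(0.0, 1.0, (2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0, (hidden, 1))
    b2 = np.zeros(1)
    losses = []
    for _ in range(steps):
        # Forward pass: tanh hidden layer, sigmoid output probability.
        h = np.tanh(X @ W1 + b1)
        p = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
        p_safe = np.clip(p, 1e-12, 1 - 1e-12)
        losses.append(-np.mean(y * np.log(p_safe) + (1 - y) * np.log(1 - p_safe)))
        # Backward pass (chain rule).
        dz2 = (p - y) / len(X)              # dLoss/dlogit for sigmoid + cross-entropy
        dW2 = h.T @ dz2
        db2 = dz2.sum(axis=0)
        dz1 = (dz2 @ W2.T) * (1 - h ** 2)   # tanh'(z) = 1 - tanh(z)^2
        dW1 = X.T @ dz1
        db1 = dz1.sum(axis=0)
        # Gradient-descent update.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    preds = (p > 0.5).astype(int).ravel()   # from the last forward pass
    return preds, losses

preds, losses = train_xor_backprop()
print("predictions:", preds, "first loss:", round(losses[0], 3), "last loss:", round(losses[-1], 4))
```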

CNN

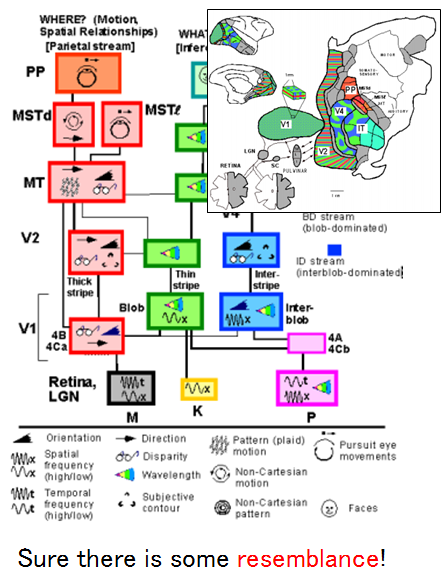

(inspired by Hubel & Wiesel's visual cortex experiments, 1959)

motivated by biological insights

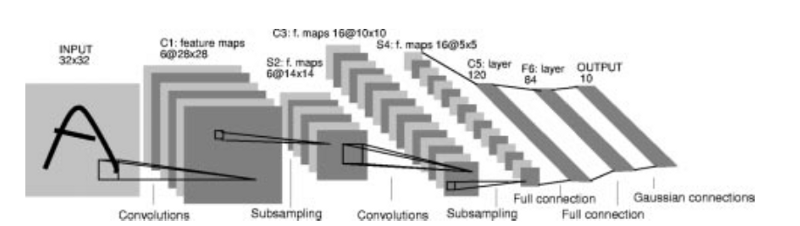

CNN

(LeNet-5, Yann LeCun, 1998)
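The core operation of a CNN layer can be sketched in a few lines of NumPy. This is a naive "valid" 2-D cross-correlation (what most deep learning libraries call convolution), written with explicit loops for clarity rather than speed; the function name and the edge-detecting kernel are illustrative. Each output value looks only at a small local patch (a receptive field), and the same kernel weights are reused at every position.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Naive 'valid' 2-D cross-correlation, as used in CNN layers."""
    kh, kw = kernel.shape
    oh = image.shape[0] - kh + 1
    ow = image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Weighted sum over one local receptive field; the same
            # kernel (shared weights) slides over every position.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# An image that is dark (0) on the left and bright (1) on the right.
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]], dtype=float)
# A tiny vertical-edge detector: responds where brightness increases left to right.
kernel = np.array([[-1.0, 1.0]])
print(conv2d_valid(image, kernel))  # 1 only in the column where dark turns bright
```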

CNN + Vision

"At some point in the late 1990s, one of these systems was reading 10 to 20% of all the checks in the US."

CNN + Self Driving Car

"NavLab 1984 ~ 1994 : ALVINN"

NN + Unsupervised Learning

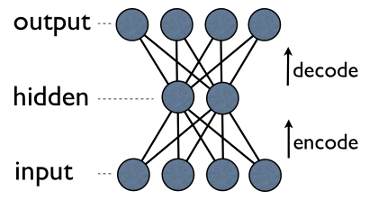

Autoencoder

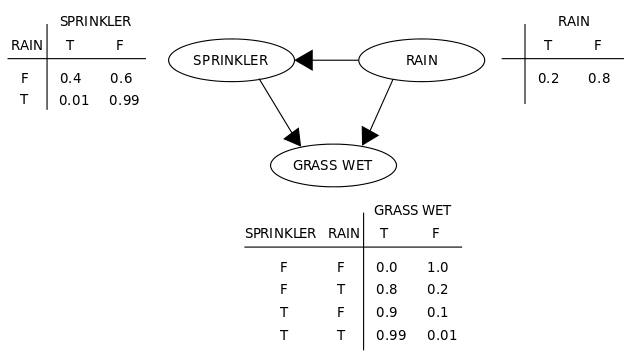

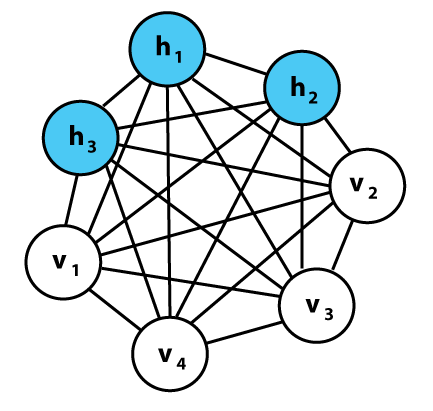

NN + Probability Distribution

Boltzmann Machine

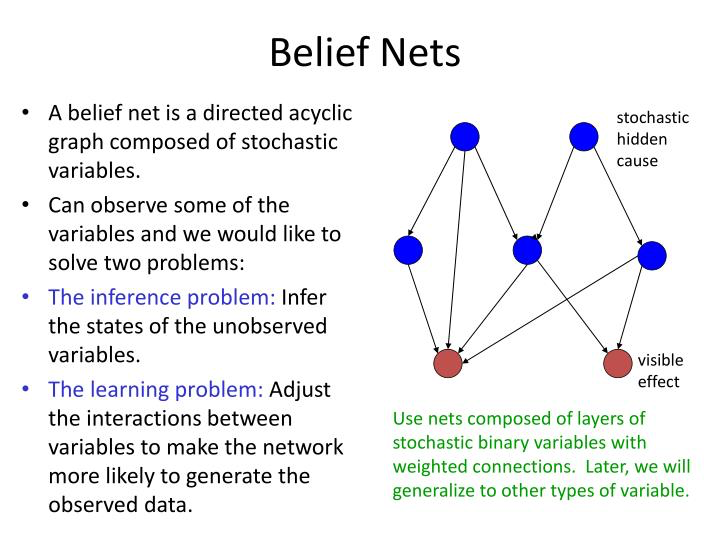

Belief Nets

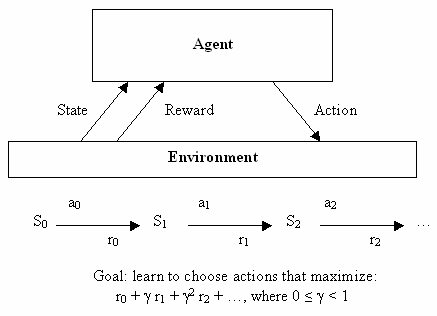

NN + Reinforcement Learning

Reinforcement Learning

Double pendulum control problem

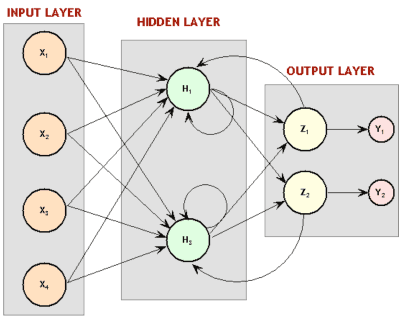

RNN

Recurrent Neural Network

Bengio wrote the 1993

Terminator 2 (1991)

BIG Problem

- Backpropagation just did not work well for ordinary neural nets with many layers.

- Other machine learning algorithms were on the rise: SVM, Random Forest, etc.

2nd Winter (1995)

1995 Paper

"Comparison of Learning Algorithms for Handwritten Digit Recognition"

"New Machine Learning approach worked better"

Yann LeCun, 1995

CIFAR

- The Canadian Institute for Advanced Research (CIFAR), which encourages basic research without direct application, was what motivated Hinton to move to Canada in 1987, and funded his work afterward.

CIFAR

- “But in 2004, Hinton asked to lead a new program on neural computation. The mainstream machine learning community could not have been less interested in neural nets.”

- “It was the worst possible time,” says Bengio, a professor at the Université de Montréal and co-director of the CIFAR program since it was renewed last year. “Everyone else was doing something different. Somehow, Geoff convinced them.”

- “We should give (CIFAR) a lot of credit for making that gamble.”

- CIFAR “had a huge impact in forming a community around deep learning,” adds LeCun, the CIFAR program’s other co-director. “We were outcast a little bit in the broader machine learning community: we couldn’t get our papers published. This gave us a place where we could exchange ideas.”

http://www.andreykurenkov.com/writing/a-brief-history-of-neural-nets-and-deep-learning-part-4/

Breakthrough (2006, 2007)

by Hinton and Bengio

- Neural networks with many layers really could be trained well, if the weights are initialized in a clever way rather than randomly. (By Hinton)

- Deep machine learning methods (that is, methods with many processing steps, or equivalently with hierarchical feature representations of the data) are more efficient for difficult problems than shallow methods (of which two-layer ANNs and support vector machines are examples). (By Bengio)

Rebranding to Deep Learning

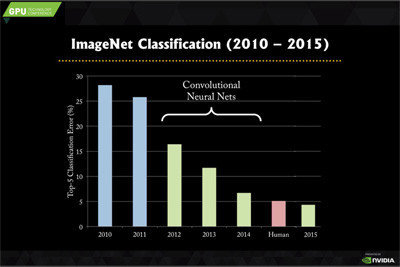

ImageNet

- Total number of non-empty synsets: 21841

- Total number of images: 14,197,122

- Number of images with bounding box annotations: 1,034,908

- Number of synsets with SIFT features: 1000

- Number of images with SIFT features: 1.2 million

- Object localization for 1000 categories.

- Object detection for 200 fully labeled categories.

- Object detection from video for 30 fully labeled categories.

- Scene classification for 365 scene categories on Places2 Database http://places2.csail.mit.edu.

- Scene parsing for 150 stuff and discrete object categories

Short history of ImageNet

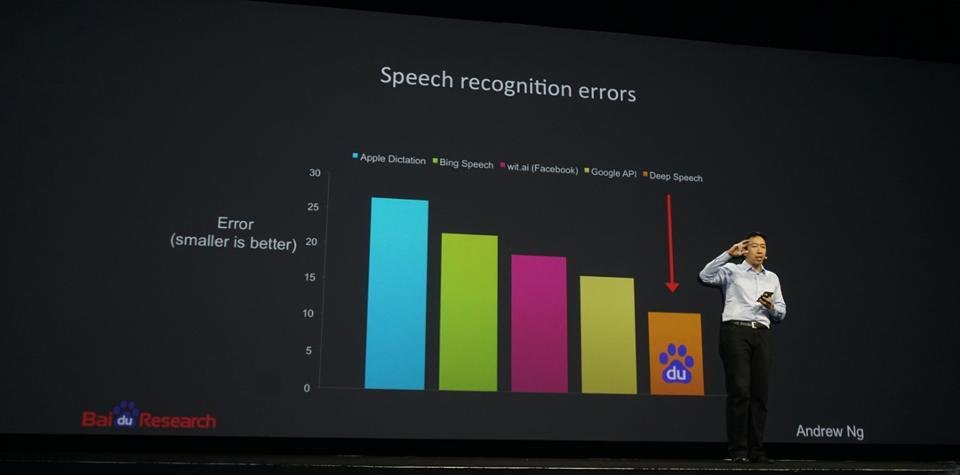

Speech Recognition

Geoffrey Hinton summarized the findings to date:

- Our labeled datasets were thousands of times too small.

- Our computers were millions of times too slow.

- We initialized the weights in a stupid way.

- We used the wrong type of non-linearity.

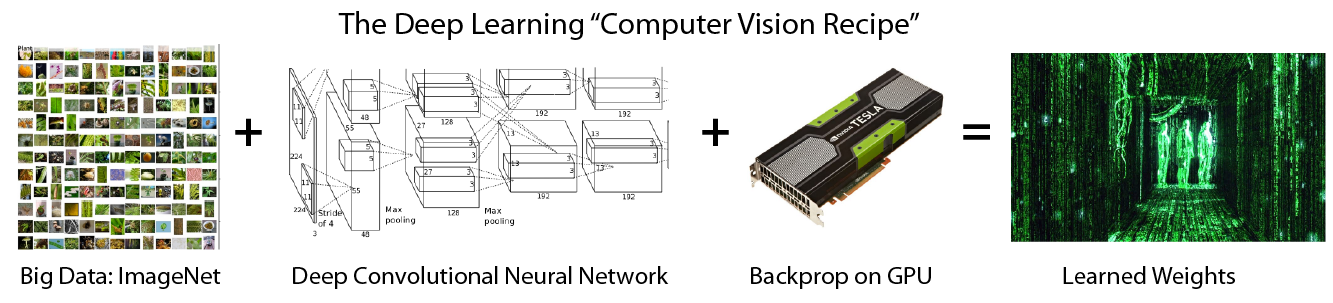

Deep Learning = Lots of training data + Parallel computation + Scalable, smart algorithms

Session 2

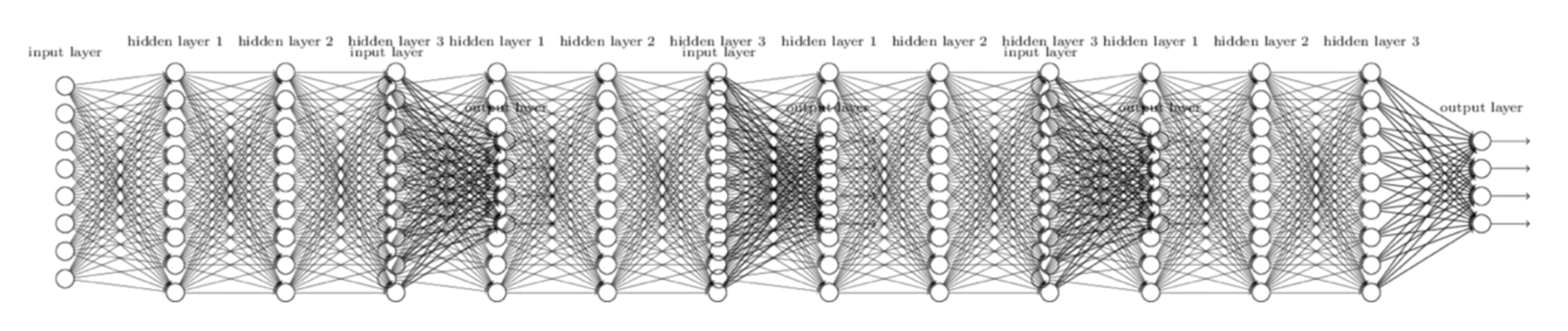

Neural Network


Linear Fitting → Nonlinear Transformation → Linear Fitting → Nonlinear Transformation → Linear Fitting → Nonlinear Transformation

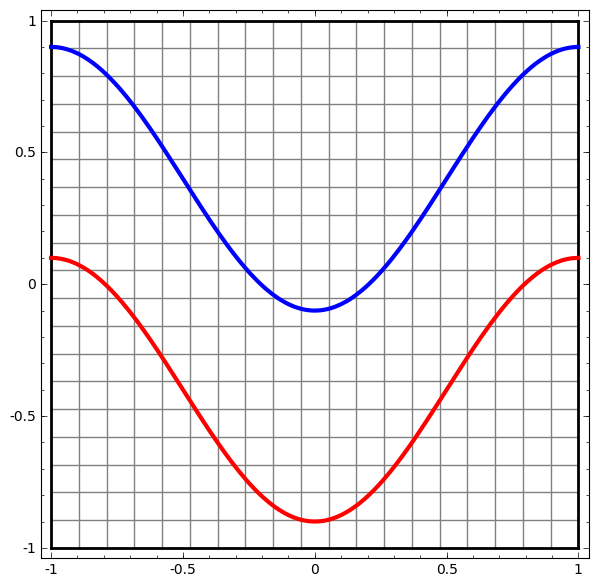

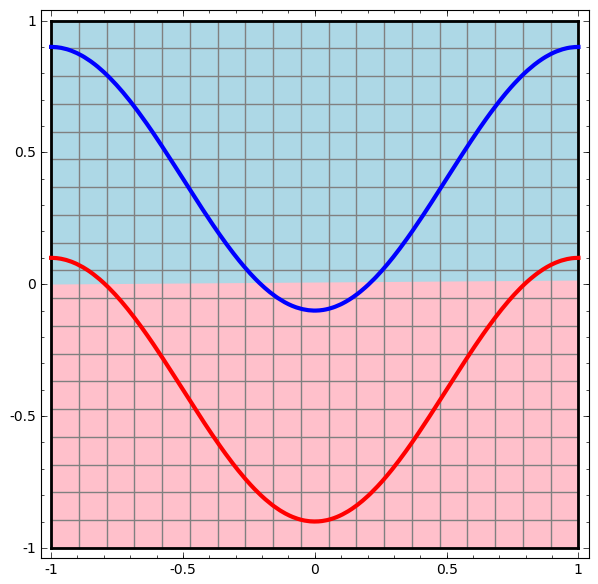

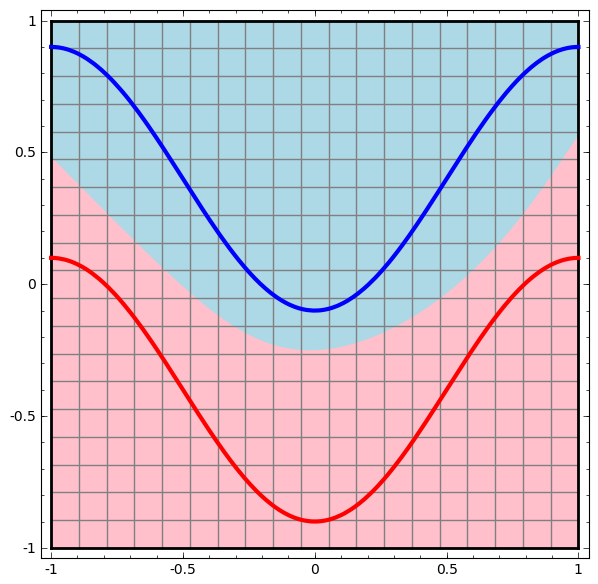

Neural Network

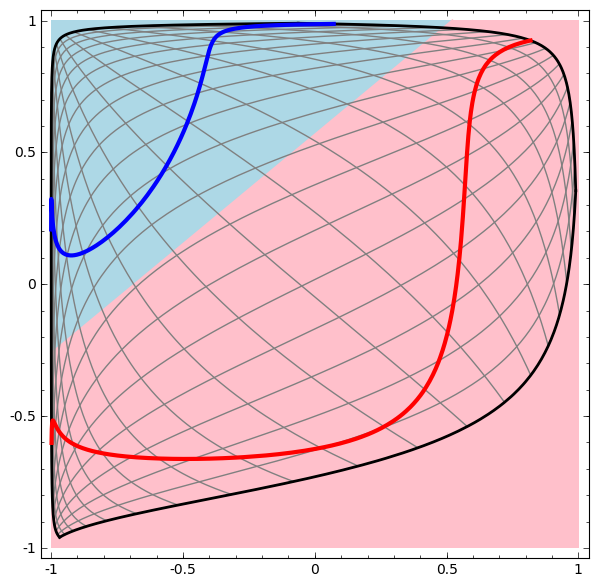

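A neural network can be viewed as a structure that repeatedly draws lines that separate the data well, warps the resulting spaces, and combines them.

Draw lines, crumple, combine; draw lines, crumple, combine; draw lines, crumple, combine ...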

Neural Network

By what rule does it draw the lines and warp the space?

Big Data

It learns by optimizing over a very large amount of data for a very long time.

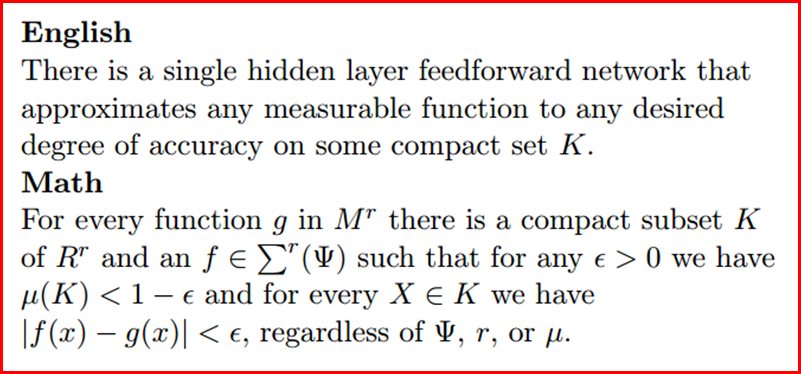

Neural Network

When many neurons (linear fitting + nonlinear transformation) are combined, they can approximate functions of very complex shape.
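A small sketch of the claim above, assuming a single hidden layer of ReLU units: each hidden neuron computes max(0, x - k) for a fixed "knot" k (a linear fit followed by a nonlinearity), and a weighted sum of these neurons approximates sin(x) on [0, 2π]. As a simplifying assumption, the hidden weights are fixed by hand and only the output layer is fitted, by least squares rather than gradient descent; the knot count and the target function are illustrative.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def hidden_layer(x, knots):
    # Bias column, one linear unit, and one ReLU "neuron" per knot k: max(0, x - k).
    return np.column_stack([np.ones_like(x), x] + [relu(x - k) for k in knots])

x = np.linspace(0.0, 2 * np.pi, 400)
target = np.sin(x)
knots = np.linspace(0.0, 2 * np.pi, 21)[1:-1]  # 19 interior knots

H = hidden_layer(x, knots)
# Fit only the output-layer weights, by least squares.
w_out, *_ = np.linalg.lstsq(H, target, rcond=None)
approx = H @ w_out

max_err = np.abs(approx - target).max()
print(f"max |error| with {len(knots)} ReLU units: {max_err:.4f}")
```

Adding more knots (more neurons) makes the piecewise-linear fit tighter, which is the intuition behind "more neurons, more complex functions".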

The Problem with Neural Networks

What happens if the optimization algorithm reaches a wrong optimum (a local minimum) instead of the true optimum?

Optimization

The Turnaround for Neural Networks

This can be solved by first pre-training each layer of the network with unsupervised learning, then stacking the pre-trained layers and running the optimization over the whole network.
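The "wrong optimum" question can be illustrated with a toy one-dimensional function instead of a real network loss: f(x) = x^4 - 3x^2 + x (an illustrative choice) has a deep minimum near x ≈ -1.30 and a shallower one near x ≈ 1.13. Plain gradient descent only follows the local slope, so where it ends up depends on where it starts. The learning rate and step count below are assumptions.

```python
def f(x):
    return x ** 4 - 3 * x ** 2 + x

def grad_f(x):
    return 4 * x ** 3 - 6 * x + 1

def gradient_descent(x0, lr=0.01, steps=1000):
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)  # move downhill along the local slope only
    return x

right = gradient_descent(2.0)   # settles in the shallower (local) minimum
left = gradient_descent(-2.0)   # settles in the deeper (global) minimum
print(f"start  2.0 -> x = {right:.3f}, f(x) = {f(right):.3f}")
print(f"start -2.0 -> x = {left:.3f}, f(x) = {f(left):.3f}")
```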

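The Turnaround for Neural Networks

Deep learning then swept machine learning competitions with overwhelming performance, establishing itself as the dominant technique, and research in image processing, speech recognition and other fields that had relied on other machine learning methods has been returning to deep learning...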

DL Success Point

1. Pre-processing (pre-training) with unsupervised learning

DL Success Point

2. Convolutional Neural Network

Traditional ML

- Data → Knowledge

- Data → Features → Knowledge

- Feature extraction is done by humans

Convolutional NN

- Feature extraction itself becomes part of the deep learning algorithm

- Major progress, especially in image recognition

DL Success Point

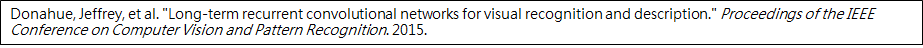

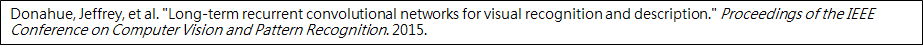

3. Recurrent Neural Network

RNN

- Time-series data

- The deepest architecture in DL (deep across time steps when unrolled)

- Vanishing Gradient Problem

- Long Short-Term Memory (LSTM)
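The vanishing gradient problem listed above can be seen in a scalar toy RNN, h_t = tanh(w * h_(t-1) + x); the weight, input and function name are illustrative assumptions. By the chain rule, the gradient of h_T with respect to h_0 is a product of T factors w * tanh'(·). With |w| < 1 every factor is below 1 in magnitude, so the product shrinks exponentially as the sequence gets longer. LSTM's gated, additive cell-state path was designed to keep this signal from dying out over long spans.

```python
import numpy as np

def rnn_gradient(w, T, x=0.5, h0=0.0):
    """Return |dh_T / dh_0| for the scalar RNN h_t = tanh(w * h_{t-1} + x)."""
    h, grad = h0, 1.0
    for _ in range(T):
        h = np.tanh(w * h + x)
        grad *= w * (1.0 - h ** 2)  # dh_t/dh_{t-1} = w * tanh'(pre-activation)
    return abs(grad)

for T in (1, 10, 50):
    print(f"T={T:2d}  |dh_T/dh_0| = {rnn_gradient(w=0.5, T=T):.3e}")
```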

DL Success Point

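4. The arrival of GPU parallel computing and advances in training methods

- Inexpensive hardware such as GPGPU (General-Purpose computing on Graphics Processing Units)

- Programming platforms that use GPUs efficiently (e.g., CUDA)

- A larger pool of datasets available for research: ImageNet, MNIST

- Algorithmic advances: the ReLU (Rectified Linear Unit) nonlinearity, and Dropout for selectively training large networks

Refer to "Deep Learning Tutorial" by Yann LeCun and others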
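The two algorithmic advances named above are short enough to sketch directly. The code below is a minimal NumPy version, assuming the common "inverted dropout" formulation (survivors are rescaled during training so nothing needs to change at inference time); frameworks differ in details, and the function names here are illustrative.

```python
import numpy as np

def relu(z):
    # Rectified Linear Unit: keeps positive values, sets negatives to 0.
    return np.maximum(z, 0.0)

def dropout(a, p_drop, rng, training=True):
    """Inverted dropout: zero each unit with probability p_drop while training,
    and scale survivors by 1 / (1 - p_drop) so the expected value is unchanged."""
    if not training or p_drop == 0.0:
        return a
    keep = rng.random(a.shape) >= p_drop
    return a * keep / (1.0 - p_drop)

rng = np.random.default_rng(0)
z = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])
print(relu(z))

a = np.ones(100_000)
dropped = dropout(a, p_drop=0.5, rng=rng)
print("fraction zeroed:", (dropped == 0).mean(), "mean:", dropped.mean())
```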

Session 3

Deep Learning


Wikipedia says:

“Deep learning is a branch of machine learning based on a set of algorithms that attempt to model high-level abstractions in data by using multiple processing layers, with complex structures or otherwise, composed of multiple non-linear transformations.”

Machine Learning


SCALE UP

Totally NEW?

- Neural Nets : McCulloch & Pitts, 1943

- Perceptron : Rosenblatt, 1958

- RNN : Grossberg, 1973

- CNN : Fukushima, 1979

- RBM : Hinton, 1999

- DBN : Hinton, 2006

- D-AE : Vincent, 2008

- AlexNet : Alex Krizhevsky, 2012

- GoogLeNet : Szegedy, 2015

Learning Representation

- ML/AI: how do we learn features?

- What is the fundamental rule?

- What is the learning algorithm?

- CogSci: how does the mind learn abstract concepts on top of less abstract ones?

- Neuroscience: how does the cortex learn perception?

- Deep learning: addresses the problem of learning hierarchical representations with a single algorithm.

Trainable Feature Transform

Inspired by Nature?

L'Avion III de Clement Ader, 1897

Do not go TOO FAR

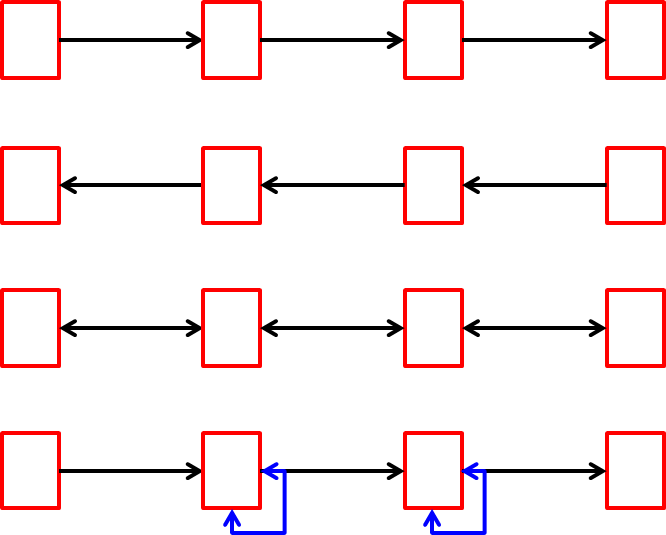

4 Types of Deep Architecture

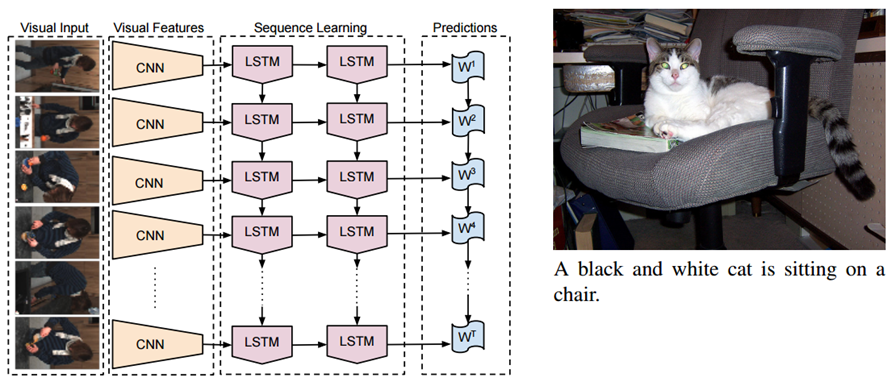

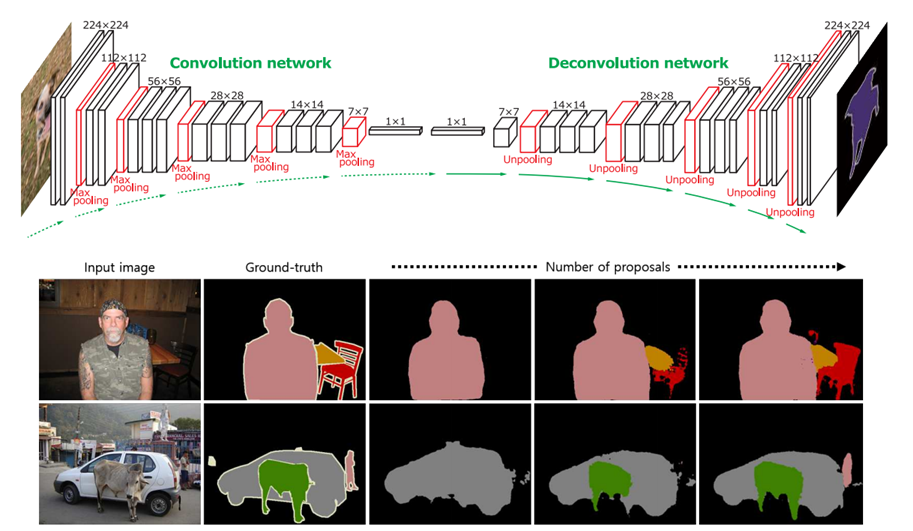

Feed-Forward : multilayer neural nets, convolutional nets

Feed-Back : Stacked Sparse Coding, Deconvolutional Nets

Bi-Directional : Deep Boltzmann Machine, Stacked Auto-Encoders

Recurrent : Recurrent Nets, Long Short-Term Memory

Really need DEEP?

What is the approach?

multi layers...

but...each layer has

complex model

with small data

The researchers say even they weren’t sure this new approach (152 layers!) was going to be successful – until it was.

“We even didn’t believe this single idea could be so significant,”

said Jian Sun, a principal research manager at Microsoft Research

Is DL Omnipotent?

Session 4

DL Applications

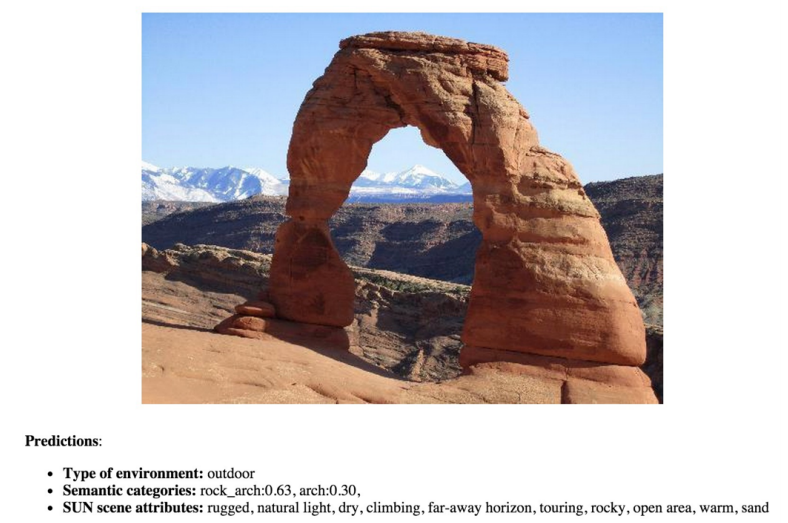

Scene Recognition (CNN)

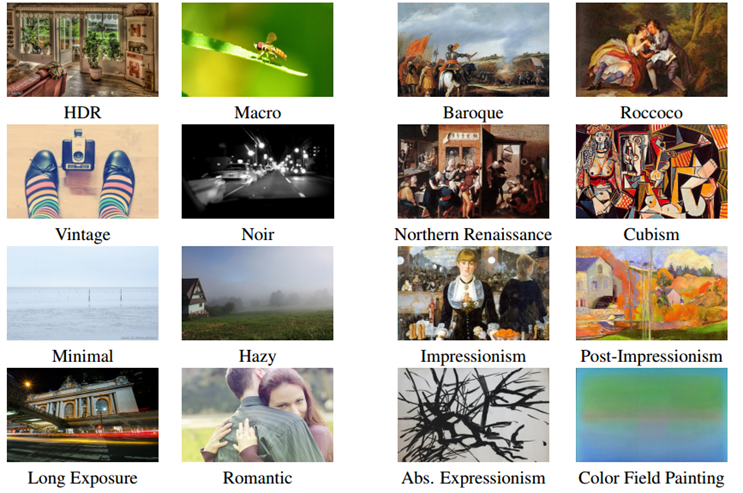

Visual Style Recognition (CNN)

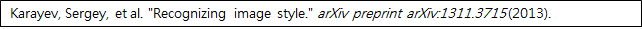

Object Detection (R-CNN)

Image Captioning (CNN+LSTM)

Segmentation (DeconvNet)

Deep Visuomotor Control

(CNN)

Image Generation

(GAN)

A Style-Based Generator Architecture for Generative Adversarial Networks

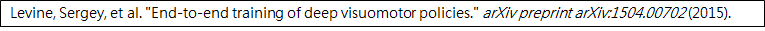

Im2Pencil (GAN)

- Proposes a controllable photo-to-pencil image translation method.

- Its advantage is that it can generate pencil images while varying the pencil outline (rough, clean) and the pencil shading (4 styles).

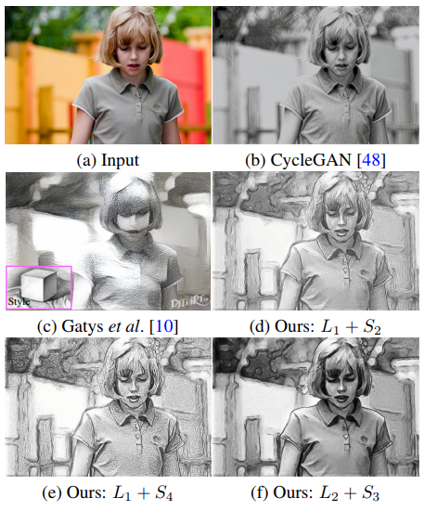

StoryGAN (GAN)

- Proposes a new task, Story Visualization, which extracts the key sentences from a story and generates an image for each sentence, together with a model called StoryGAN to learn it.

- Built from several components, including a Story Encoder, Context Encoder, Image Discriminator and Story Discriminator, and based on a sequential conditional GAN.

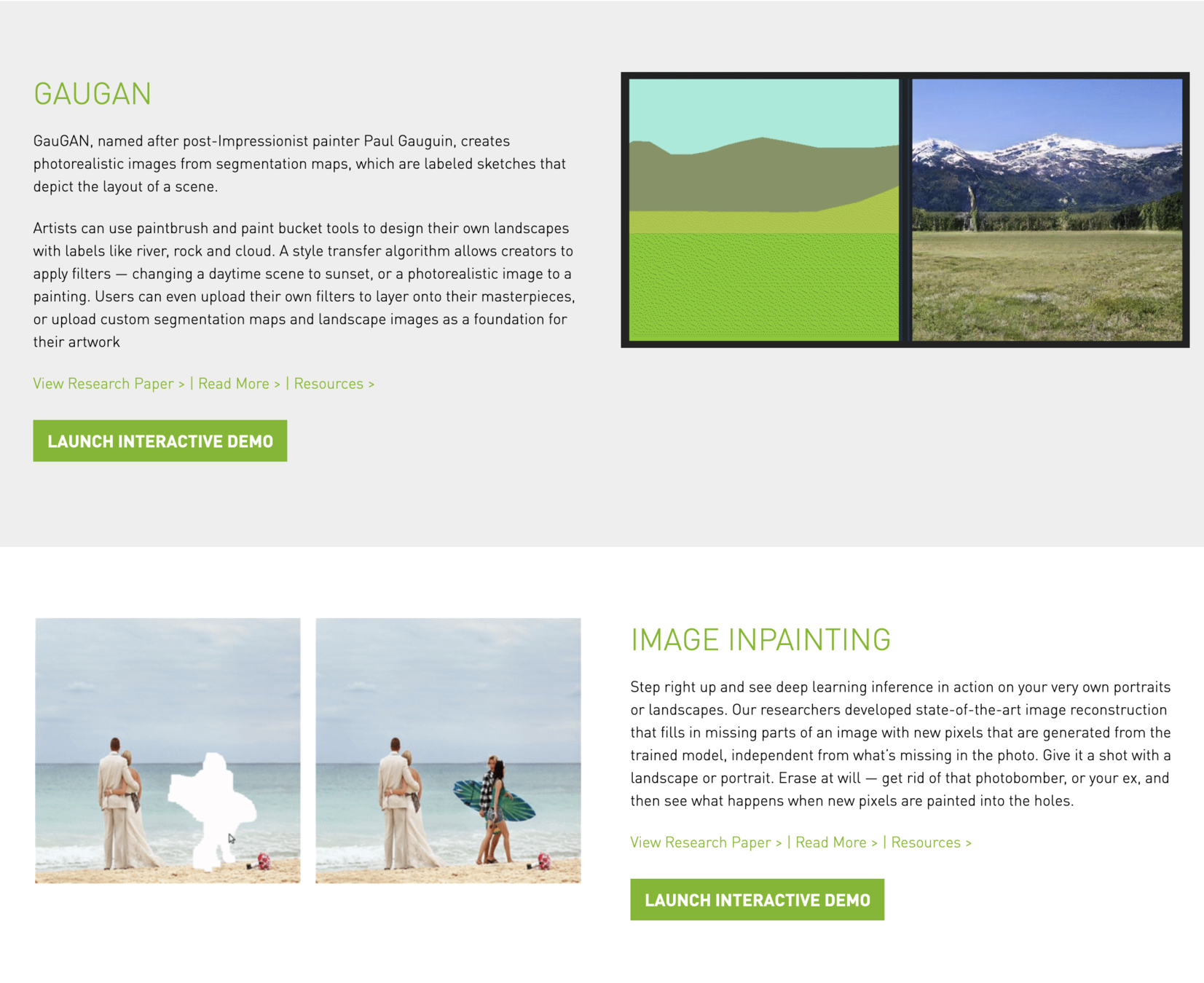

NVIDIA AI PLAYGROUND

Video Synthesis (GAN)

Few-Shot Adversarial Learning of Realistic Neural Talking Head Models http://arxiv.org/abs/1905.08233

Audio Video Synthesis

(LSTM, RNN)

Speech Translation

(End to End Speech to Speech Translation)

Deep Generative Models for Speech by Heiga Zen (Google Brain)

Speech Synthesis

Neosapience https://typecast.ai

AI Speaker

Naver Clova AI https://clova.ai/ko/events/celeb_voice/


AI + broadcasting

MoneyBrain http://www.moneybrain.ai

AI + Game

DeepMind https://deepmind.com

AI + Robotics

Boston Dynamics https://www.bostondynamics.com

AI + Self Driving Cars

AI + Music

- #AICAT : generates music using #Transformer, an architecture from NLP research

- Google #Magenta

- #Jukedeck : advanced from generating sheet music to producing finished tracks


AI + ART

- Professor Feynman: "What I cannot create, I do not understand."

- Deep Dream : 29 works painted by AI sold for about 100 million won

- Gemini : an ink-wash painting drawn by a robot arm sold for $13,000

AI + ART

(TeamVoid)

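- With financial support from Gentle Monster, they staged the robot-arm performance "Malfuction"... this was the start of their business

- Worked with Samsung Galaxy... exhibited simultaneously in 10 countries with just 2 people

- The invention of electricity let humanity experience simultaneity

- On days or in regions with poor air quality, the drawings come out better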

AI + ART

(Pulse9)

Deep Learning Trends

- Multi-modal(Image+Voice) -> 1 Label

- DL Best Practice -> Theory

The Era of Deep Learning !!!

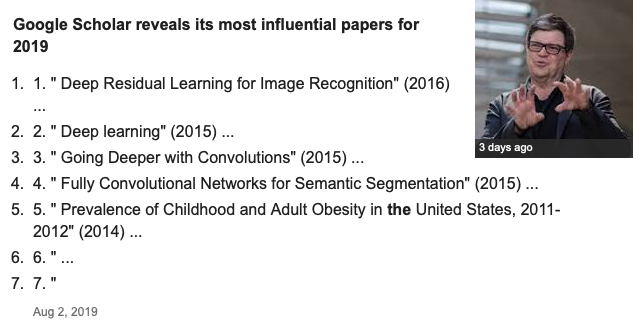

- For 2019, 4 of the 7 papers most cited by Nature are on deep learning.

- 3 of those 4 deep learning papers are on computer vision: ResNet, GoogLeNet, and FCN.

Session 5

How to learn DL?

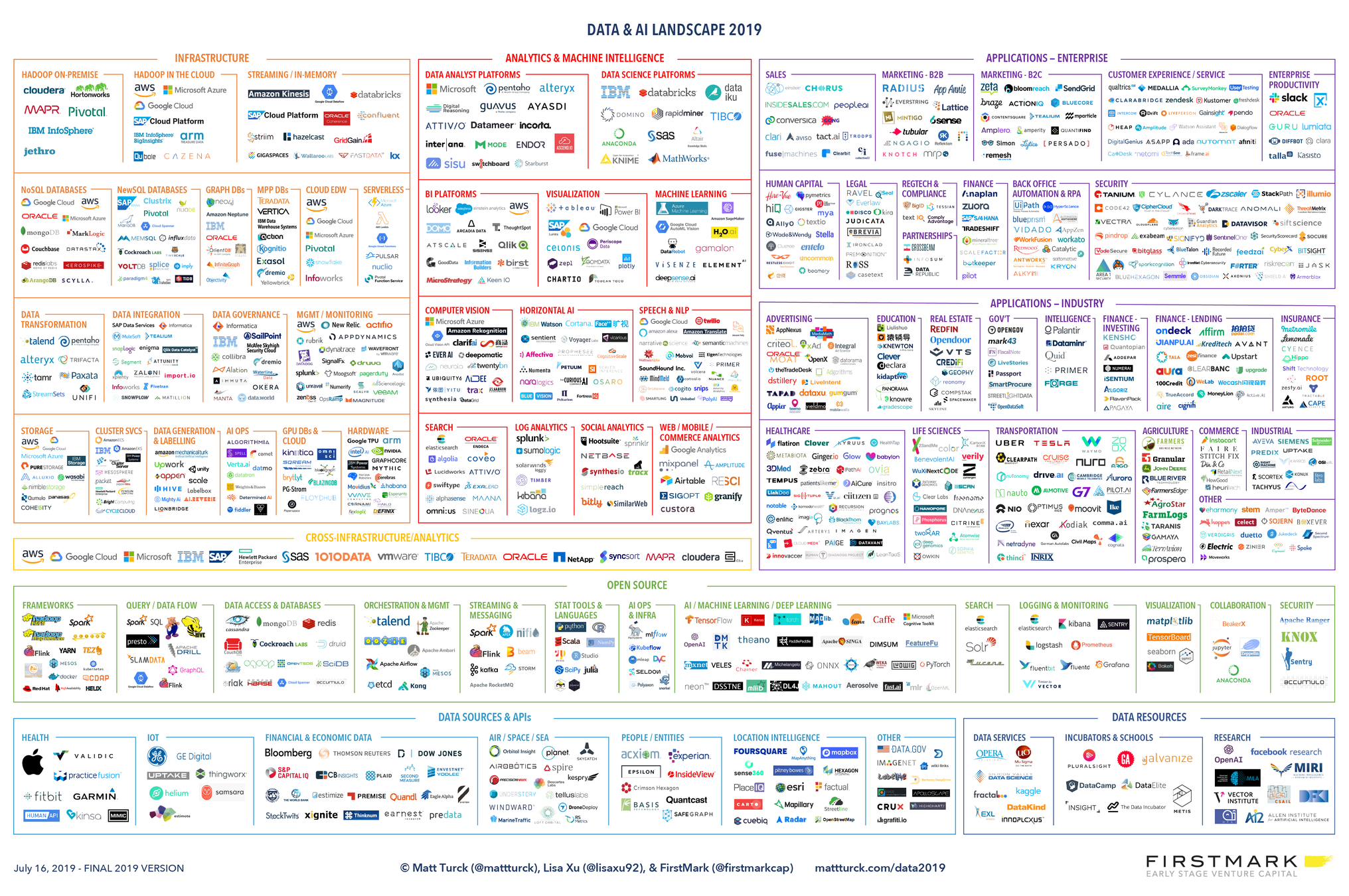

The 2019 Data & AI Landscape

Start Learning

with Python

Python

Scientific Ecosystem

[Figure: the Python scientific ecosystem, including PyMC and NIPY]

<Many more tools>

- Performance : Numba, Weave, Numexpr, Theano ...

- Visualization : Bokeh, Seaborn, Plotly, Chaco, mpld3, ggplot, MayaVi, vincent, toyplot, HoloViews ...

- Data Structures & Computation : Blaze, Dask, DistArray, XRay, Graphlab, SciDBppy, pySpark ...

- Packaging & Distribution : pip/wheels, conda, EPD, Canopy, Anaconda ...

<Recent Development>

1. Foundation

- Python 3

2. Visualization

- Matplotlib 1.4, 2.0

- Seaborn = Matplotlib + Pandas + statistical visualization

- Bokeh = Powerful interactive visualization, HTML5, JavaScript lib

3. Arrays & Data Structures

- Xray = NumPy + Pandas

- Dask = lightweight tool for general parallelized array storage and computation

4. Computation & Performance

- Numba = with a simple decorator, JIT-compiles Python functions via LLVM and executes them at near C/Fortran speed

5. Distribution & Packaging

- Anaconda
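To make the Numba entry concrete, here is a minimal sketch assuming Numba is installed; `numba.njit` is its nopython-mode JIT decorator. If the import fails, the code falls back to a hypothetical no-op decorator (not part of Numba) so the same loop still runs, just at interpreter speed. The function and data are illustrative.

```python
import numpy as np

try:
    from numba import njit  # JIT-compiles the decorated function to machine code via LLVM
except ImportError:
    def njit(func):         # hypothetical fallback: plain Python, no compilation
        return func

@njit
def sum_of_squares(arr):
    total = 0.0
    for i in range(arr.shape[0]):  # explicit loop: slow in CPython, fast once compiled
        total += arr[i] * arr[i]
    return total

data = np.arange(100_000, dtype=np.float64)
print(sum_of_squares(data))
```

The first call includes compilation time; later calls with the same argument types reuse the compiled code.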

<IPython & Jupyter>

So much happening ...

- The IPython/Jupyter split

- Widgets = awesome

- Docker-based backends

- Jupyter Hub

- New $6M grant (first week of July 2015)

<Why Python?>


- Python was created in the 1980s as a teaching language, and to bridge the gap between the shell and C.

Shell

C

<Why Python?>

- Guido van Rossum: "I thought we'd write small Python programs, maybe 10 lines, maybe 5, maybe 500 lines - that would be a big one"

Write Small

Be Big

<Why Python?>

- Python is not a scientific programming language

: Why did a "toy language" become the core of a scientific stack?

Python != Scientific Programming Language

<Why Python?>

- Python is a glue

- Python glues together this hodge-podge of scientific tools.

<Why Python?>

- High-level syntax wraps low-level C/Fortran libraries, which is (mostly) where the computation happens.
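A quick way to see "high-level syntax over low-level libraries" is to compute the same dot product twice: once with an interpreted Python loop, once with a single NumPy call whose loop runs in compiled code. Timings depend on the machine, so treat the numbers as indicative only; the array size and seeds are arbitrary.

```python
import time
import numpy as np

def dot_python(a, b):
    # Pure-Python loop: every multiply-add goes through the interpreter.
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total

n = 1_000_000
a = np.random.default_rng(0).random(n)
b = np.random.default_rng(1).random(n)

t0 = time.perf_counter()
slow = dot_python(a.tolist(), b.tolist())
t1 = time.perf_counter()
fast = np.dot(a, b)  # one Python call; the loop runs in compiled C/BLAS code
t2 = time.perf_counter()

print(f"python loop: {t1 - t0:.3f}s, numpy: {t2 - t1:.5f}s, same result: {np.isclose(slow, fast)}")
```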

<Why Python?>

- It is speed of development, not necessarily speed of execution, that has driven Python's popularity.

Speed of Development

Speed of Execution

<Why Python?>

Why don't you use C instead of Python? It's so much faster!

Why don't you commute by airplane instead of by car? It's so much faster!

<Why Python?>

- Python was created in the 1980s as a teaching language, and to bridge the gap between the shell and C.

- Guido Van Rossum "I thought we'd write small Python programs, maybe 10 lines, maybe 5, maybe 500 lines - that would be a big one"

- Python is not a scientific programming language

: Why did a "toy language" become the core of a scientific stack?

- Python is a glue

- Python glues together this hodge-podge of scientific tools.

- high-level syntax wraps low-level C/Fortran libraries, which is (mostly) where the computation happens.

- it is speed of development, not necessarily speed of execution. that has driven Python's popularity.

- "Why don't you use C instead of Python? it's so much faster!"

: "Why don't you commute by airplane instead of by car? it's so much faster!"

<But this efficiency depends on the Scientific Stack...>

[Timeline figure, 1995 to 2015: Numeric, Multipack, SciPy, IPython, Numarray, Matplotlib, NumPy, Pandas, Scikits, Notebook, Conda]

1995 : "Numeric" was an early Python scientific array library, largely written by Jim Hugunin. Numeric -> NumPy

1998 : "Multipack", built on Numeric, was a set of wrappers of Fortran packages written by Travis Oliphant. Multipack -> SciPy

2000 : Eric Jones, Travis Oliphant, Pearu Peterson, and others spun Multipack into the "SciPy" package, aiming for a full Python MATLAB replacement.

2001 : Fernando Perez started the "IPython" project, aiming for a Mathematica-style environment for scientific Python.

2002 : "Numarray" was created by Perry Greenfield, Paul Dubois, and others to address fundamental deficiencies in Numeric for larger datasets.

2002 : John Hunter wanted an open MATLAB replacement, and started "matplotlib" as an effort at MATLAB-style visualization.

2006 : In a herculean effort to head off this split in the community, Travis Oliphant incorporated the best parts of Numeric + Numarray into "NumPy".

2009 : Wes McKinney began "Pandas", eventually drawing in a much larger Python user base, especially in industry data science.

2009 : With SciPy's sheer size making fast development difficult, the community decided to promote "scikits" as an avenue for more specialized algorithms.

2012 : The IPython team released the "IPython Notebook", and the world has never been the same.

2012 : Continuum released "conda", a package manager for scientific computing.

<Lessons Learned>

1. No centralized leadership! What counts as "core" in the ecosystem evolves and is up to the community.

- Evolving computational core : Numba?

: Just as Cython matured into a core piece, perhaps Numba might as well? What might a JIT-enabled SciPy, scikit-learn, pandas, etc. look like?


<Lessons Learned>

2. To be most useful as an ecosystem, we must be willing for packages to adapt to the changing landscape.

- Evolving computational core : Pandas?

: Modern data is sparse, heterogeneous, and labeled, and NumPy arrays don't measure up : let's make Pandas a core dependency!

- Evolving computational core : pandas, Seaborn --> matplotlib

: With Pandas as a core dependency, what elements of Seaborn & Pandas could be moved into matplotlib?

- Evolving the core : SciPy

: SciPy's monolithic design was driven by packaging & distribution difficulties.


<Lessons Learned>

3. Interoperability with core pieces of other languages has been key to the success of the SciPy stack (e.g., C/Fortran libraries, the new Jupyter framework).

- Universal Plotting Serialization?

: Much of modern interactive plotting (d3, HTML5, Bokeh, ggvis, mpld3, etc) involves generating & processing plot serializations

: matplotlib -> {JSON} -> javascript --> plotting at web

: Doing this natively in matplotlib would open up extensibility!

- Universal DataFrames?

: R, Python, and Julia all use C/Fortran memory blocks

: R, Python, and Julia use RDataFrame, Pandas, and Dataframe.jl respectively

: In the future, will R, Python, and Julia share a so-called "Uber DataFrame"?


<Lessons Learned>

4. The stack was built from both continuity (e.g., Numeric/Numarray -> NumPy) and brand-new efforts (e.g., matplotlib, Pandas). Don't discount either approach!

- Considering the Future of Matplotlib (usual complaints about Matplotlib)

: Non-optimal stylistic defaults -> matplotlib 2.0

: Non-optimal API -> Seaborn, ggplot

: Difficulty exporting interactive plots -> Serialization to mpld3/Bokeh

: Difficulty with large datasets -> ???

- Lesson from Numeric/Numarray, etc.

: Stick with matplotlib & modify it (e.g., serialization to VisPy? Numba-driven backend? New backend architecture? etc.)

- Lesson from Pandas & Matplotlib, etc. : Start something from scratch; features will draw users! (e.g., VisPy, Bokeh, something new?)

Don't discount either approach!!!

Continuity

Brand-new Effort

[Timeline figure repeated, highlighting two paths]

Continuity : 2006, Numeric + Numarray -> NumPy

Brand-new Effort : 2002, matplotlib; 2009, Pandas

How to study deep learning?

First check whether you can get data for the research topic you are interested in!!!

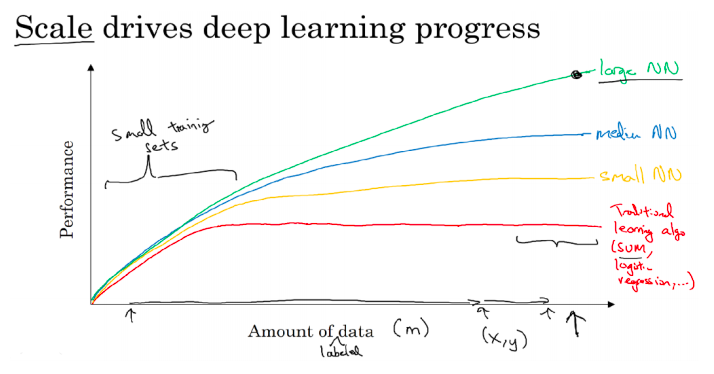

Why is DL taking off?

Deep learning is taking off due to the large amount of data available through the digitization of society, faster computation, and innovation in neural network algorithms.

Data + GPU H/W + Algorithm

1. Being able to train a big enough neural network

2. Huge amount of labeled data

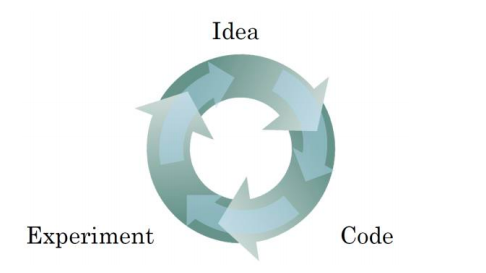

Iterative NN Training Process

Faster computation helps us iterate quickly and improve new algorithms

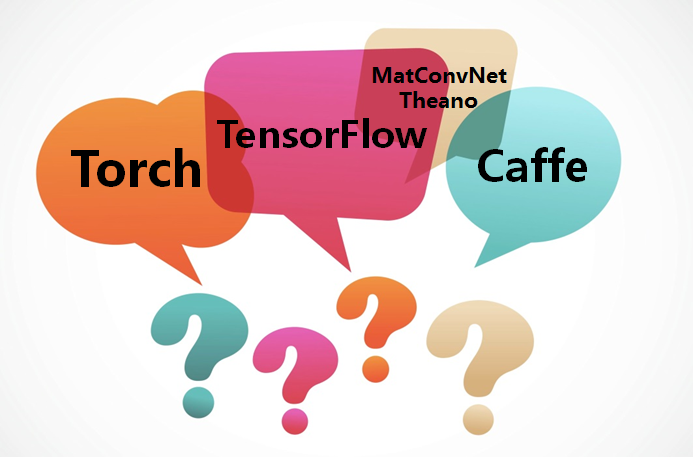

Use DL Tools

Refer to

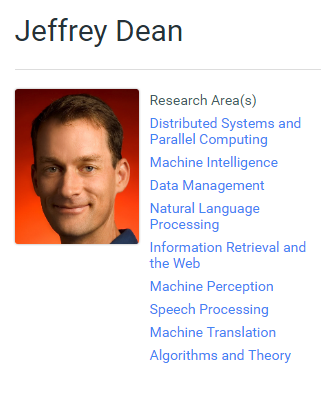

Interview

Geoffrey Hinton

- 1966 : In high school, a mathematically gifted friend introduced him to the idea that the brain stores memories like a hologram, which first sparked his interest (Lashley's experiments showed that memories are distributed across many parts of the brain)

- University : Started with physiology and physics... moved to philosophy... and finally chose psychology, trying to answer the question "What is intelligence?"

- Psychology did not give him the answer... so he worked as a carpenter

- PhD in AI @Edinburgh in Britain... couldn't get a job

- 1982 : Began working on the backprop algorithm @UCSD in California w/ David Rumelhart and Ron Williams (the original idea was actually Rumelhart's, but the first to publish it in a paper was Paul Werbos, and it received little attention)

- 1986 : Published the backprop paper in Nature

- 1990 : Began talking about word embeddings w/ Bengio

Geoffrey Hinton's Story

- The coolest thing : Discovering Boltzmann machines w/ Terry Sejnowski

- 2007 : Restricted Boltzmann machines played a big role in the resurgence of neural nets that led to today's deep learning

- 1993 : Published the first variational Bayesian learning paper w/ Van Camp

- 2014 : @Google, work on using ReLUs and initializing with the identity matrix

- 1987 : Recirculation algorithm w/ Jay McClelland (the same idea as the neuroscientists' spike-timing-dependent plasticity: in the learning rule, the new thing is good and the old thing is bad)

- 2015 : Multiple time scales w/ Jimmy Ba => LSTM

- Now : Has a small Google Brain team in Toronto => Unsupervised Learning, Wegstein algorithm, GAN

Hinton's Advice

- Keep on doing

- Never stop programming

- Go for it and find out

<To students>

- Find an advisor who shares your research interests.

<To working professionals>

- Every organization is slow to embrace a revolution.

- In-house education is important.

Paradigm Shift

Von Neumann

Symbolic(=Rule) AI

Current AI : reasoning

Logic

Symbolic Expression

Reasoning

Big Vector