logging techniques

with a touch of

Marcin Stożek "Perk"

How apps log things

- local file

- remote location

- stdout/stderr

stdout/stderr logging

Twelve-factor app compliant:

"A twelve-factor app never concerns itself with routing or storage of its output stream."

Problem with multi-line, unstructured logs
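One common way to soften the multi-line problem is to emit every event as a single structured line. The sketch below is only an illustration using Python's standard logging module; the formatter class, the logger name "demo" and the field names are assumptions, not something prescribed by the talk.

```python
import json
import logging
import sys


class JsonLineFormatter(logging.Formatter):
    """Render each record, traceback included, as one JSON object per line."""

    def format(self, record):
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # The traceback spans many lines; JSON encoding escapes the
            # newlines, so the whole event stays on a single output line.
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def build_logger(stream=sys.stdout):
    """Return a logger (hypothetical name "demo") that writes JSON lines."""
    logger = logging.getLogger("demo")
    logger.handlers.clear()
    logger.propagate = False
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonLineFormatter())
    logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_logger()
    try:
        1 / 0
    except ZeroDivisionError:
        log.exception("division failed")
```

The application still only writes to stdout, so it stays twelve-factor compliant; whatever collects the stream can treat one line as one event.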

How Docker manages logs

- Logging drivers (EE vs CE)

- $ docker logs

  - local

  - json-file

  - journald

- Logging driver dies sometimes

- Remote LaaS dies sometimes

How Docker stores logs with json-file

/var/lib/docker/containers/88f1c.../88f1c...-json.log

{
  "log":"2019-06-03 12:02:10 +0000 [info]: #0 delayed_timeout is overwritten by ack\n",
  "stream":"stdout",
  "time":"2019-06-03T12:02:10.252731041Z"
}
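To make the format concrete, here is a minimal sketch of reading such an entry back. It assumes each entry sits on a single line in the file, which is how the json-file driver normally writes it; the function name is hypothetical.

```python
import json
from datetime import datetime, timezone


def parse_json_file_line(line):
    """Decode one json-file entry into (timestamp, stream, message)."""
    entry = json.loads(line)
    # Docker writes nanosecond precision; datetime keeps microseconds only,
    # so trim the fractional part to six digits before building the value.
    stamp = entry["time"].rstrip("Z")
    head, _, frac = stamp.partition(".")
    parsed = datetime.strptime(head, "%Y-%m-%dT%H:%M:%S").replace(
        microsecond=int(frac[:6].ljust(6, "0")) if frac else 0,
        tzinfo=timezone.utc,
    )
    # The "log" field keeps the trailing newline the application printed.
    return parsed, entry["stream"], entry["log"].rstrip("\n")


sample = (
    '{"log":"2019-06-03 12:02:10 +0000 [info]: #0 delayed_timeout is '
    'overwritten by ack\\n","stream":"stdout",'
    '"time":"2019-06-03T12:02:10.252731041Z"}'
)
when, stream, message = parse_json_file_line(sample)
print(when.isoformat(), stream, message)
```

Note that the application's own timestamp lives inside "log" as plain text, while "time" is added by Docker when the line is captured.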

Kubernetes logging

Logging at the node level

✓ logs available through kubectl logs

✓ logs available locally on the node

❌ this is not really convenient (but helpful)

Cluster-level logging architectures

- Exposing logs directly from the application

- Sidecar container with a logging agent

- Node-level logging agent on every node

- Streaming sidecar container

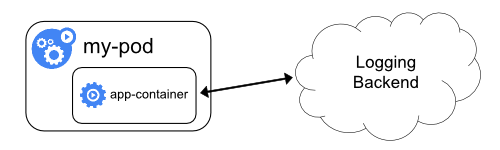

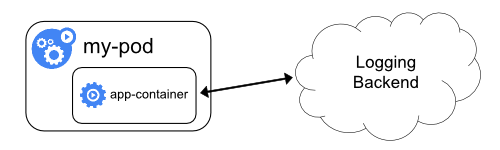

Logging from the application

Push logs directly from the applications running in the cluster.

kubectl logs no longer works.

Depends on available libraries.

Not twelve-factor compliant.

Works for multi-line logs.

Sidecar container with a logging agent

Same problems as with logging directly from the application.

Multi-line logs problem.

Who is rotating the log files?

Useful when the application can only log to a file.

Logging with node-agent

The application logs to stdout.

Every node runs a node-agent, deployed as a DaemonSet.

The node-agent collects the logs and pushes them somewhere else.

Logs are still available through the kubectl logs command.

Problem with multi-line logs.
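The multi-line problem arises because the agent sees the stream one line at a time, so a stack trace arrives as several unrelated records. A hedged sketch of the usual workaround: treat any line that does not look like the start of an event as a continuation of the previous one. The timestamp pattern and the function name below are assumptions chosen for the example; a real agent would need a pattern that matches the application's actual format.

```python
import re

# Assumption: a new log event starts with a "YYYY-MM-DD HH:MM:SS" timestamp.
EVENT_START = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}")


def join_multiline(lines):
    """Group raw lines into events; continuation lines join the previous event."""
    events = []
    for line in lines:
        if EVENT_START.match(line) or not events:
            events.append(line)
        else:
            events[-1] += "\n" + line
    return events


raw = [
    "2019-06-03 12:02:10 ERROR request failed",
    "Traceback (most recent call last):",
    '  File "app.py", line 3, in <module>',
    "ZeroDivisionError: division by zero",
    "2019-06-03 12:02:11 INFO recovered",
]
events = join_multiline(raw)
for event in events:
    print(repr(event))
```

The heuristic breaks as soon as two containers use different start-of-event formats, which is why unstructured multi-line output remains a problem for a shared node-agent.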

Streaming sidecar container

Use when your application can only log to a file.

Streaming container gets the logs and pushes them to stdout.

The rest is the node-agent scenario.

Disk space problem: the logs are stored twice, inside and outside the pod.

Multiple sidecars for multiple files.

spec:
  containers:
  - name: snowflake
    ...
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  - name: snowflake-log-1
    image: busybox
    args: [/bin/sh, -c, 'tail -n+1 -f /var/log/1.log']
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  - name: snowflake-log-2
    image: busybox
    args: [/bin/sh, -c, 'tail -n+1 -f /var/log/2.log']
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  volumes:
  - name: varlog
    emptyDir: {}

$ kubectl logs my-pod snowflake-log-1
0: Mon Jan 1 00:00:00 UTC 2001
1: Mon Jan 1 00:00:01 UTC 2001
2: Mon Jan 1 00:00:02 UTC 2001
...

$ kubectl logs my-pod snowflake-log-2
Mon Jan 1 00:00:00 UTC 2001 INFO 0
Mon Jan 1 00:00:01 UTC 2001 INFO 1
Mon Jan 1 00:00:02 UTC 2001 INFO 2
...

So how to log things?

That depends on the use case, but...

🚫 Exposing logs directly from the application

🚫 Sidecar container with a logging agent

✓ Streaming sidecar container

✓✓✓ Node-level logging agent on every node

Node-agent logging solutions

Fluentd is your friend here

A CNCF project, like Kubernetes itself

Fluentd Kubernetes Daemonset - plug and play

Recommended by the official k8s documentation

kind: DaemonSet
spec:
  template:
    spec:
      containers:
      - name: fluentd
        image: fluent/fluentd-kubernetes-daemonset:forward
        ...
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers

Should we log everything?

It's not cheap

Logs throttling

Using Kubernetes means you have many services.

We don't use k8s because it's fancy, right?

Many services means many log messages.

At a certain scale it doesn't make much sense to collect all the logs.

Fluentd can help here with fluent-plugin-throttle.
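The idea behind throttling can be sketched as a per-group rate limit: each group of records (for example, one container) may emit only so many records per time window, and the rest are dropped. The code below is a simple fixed-window counter written for illustration only; it is not the plugin's implementation, and the class, parameter and group names are all hypothetical.

```python
from collections import defaultdict


class GroupThrottle:
    """Allow at most `limit` records per group within each time window."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window_seconds = window_seconds
        # Maps group -> [window_start, records_seen_in_window].
        self._state = defaultdict(lambda: [None, 0])

    def allow(self, group, now):
        """Return True if a record for `group` at time `now` should be kept."""
        state = self._state[group]
        if state[0] is None or now - state[0] >= self.window_seconds:
            # Start a fresh window for this group.
            state[0], state[1] = now, 0
        state[1] += 1
        return state[1] <= self.limit


throttle = GroupThrottle(limit=2, window_seconds=60)
decisions = [throttle.allow("kafka", now=t) for t in (0, 1, 2, 61)]
print(decisions)  # [True, True, False, True]
```

Keeping the counters per group means one noisy service cannot use up the budget of the quiet ones.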

Where to store your logs

$ kubectl -n kube-system \
    create secret generic logsense-token \
    --from-literal=logsense-token=YOUR-LOGSENSE-TOKEN-HERE

$ kubectl apply -f logsense-daemonset.yaml

{
  "kubernetes":"{
    "container_name":"kafka",
    "namespace_name":"int",
    "pod_name":"kafka-kafka-0",
    "container_image":"strimzi/kafka:0.11.1-kafka-2.1.0",
    "container_image_id":"docker-pullable://strimzi/kafka@sha256:e741337...",
    "pod_id":"c8eeb49c-67cb-11e9-9b29-12f358b019b2",
    "labels":{
      "app":"kafka",
      "controller-revision-hash":"kafka-kafka-58dc7cdc78",
      "tier":"backend",
      "statefulset_kubernetes_io/pod-name":"kafka-kafka-0",
      "strimzi_io/cluster":"kafka",
      "strimzi_io/kind":"Kafka",
      "strimzi_io/name":"kafka-kafka"
    },
    "host":"ip-10-100-1-2.ec2.internal",
    "master_url":"https://172.20.0.1:443/api",
    "namespace_id":"7fe790af-23d2-11e9-8903-0edd77f6b554",
    "namespace_labels":{
      "name":"int"
    }
  }",
  "docker":"{
    "container_id":"e5254e89e508126dbdea587080ec6e01aab660bf62392e41b6e0..."
  }",
  "stream":"stdout",
  "log":" 2019-06-09 18:05:44,774 INFO Deleting segment 271347611 [kafka-scheduler-8] "
}

Links, lynx, wget, w3m, curl...

logger.info("Thank you!")