The LASSO:

least absolute shrinkage and selection operator

Pierre Ablin

12/03/2019

Parietal Tutorial

Overview

- Linear regression

- The Lasso model

- Non-smooth optimization: proximal operators

Supervised learning

GOAL: Learn \( f \) from some realizations of \( (\mathbf{x}, y) \)

Linear regression

GOAL: Learn \( f \) from some realizations of \( (\mathbf{x}, y) \)

Assumption:

\(f\) is linear: \( f(\mathbf{x}) = \mathbf{x}^{\top}\beta \) for some \( \beta \in \mathbb{R}^P \)

GOAL: Learn \( \beta \) from some realizations of \( (\mathbf{x}, y) \)

Maximum likelihood?

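For one sample, if we observe \( \mathbf{x}_{\text{obs}}\) and \( y_{\text{obs}} \), and assume Gaussian noise of variance \(\sigma^2\) around the linear model, we have:

$$ y_{\text{obs}} = \mathbf{x}_{\text{obs}}^{\top}\beta + \varepsilon, \quad \varepsilon \sim \mathcal{N}(0, \sigma^2) $$

i.e.:

$$ p(y_{\text{obs}} | \mathbf{x}_{\text{obs}}, \beta) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(y_{\text{obs}} - \mathbf{x}_{\text{obs}}^{\top}\beta)^2}{2\sigma^2}\right) $$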

If we observe a whole dataset of \(N\) i.i.d. samples

$$ (\mathbf{x}_1,y_1), \cdots, (\mathbf{x}_N, y_N) \enspace, $$

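the likelihood of this observation is:

$$ \prod_{n=1}^N p(y_n | \mathbf{x}_n, \beta) $$

Maximum likelihood estimator:

$$ \beta_{\text{MLE}} = \arg\max_{\beta} \prod_{n=1}^N p(y_n | \mathbf{x}_n, \beta) = \arg\min_{\beta} -\sum_{n=1}^N \log p(y_n | \mathbf{x}_n, \beta) $$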

Matrix interlude

Define the \(\ell_2\) norm of a vector:

$$|| \mathbf{v}|| = \sqrt{\sum_{n=1}^N v_n^2}$$

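And write in condensed matrix form:

$$ \mathbf{y} = [y_1,\cdots, y_N]^{\top} \in \mathbb{R}^N, \quad X = [\mathbf{x}_1,\cdots, \mathbf{x}_N]^{\top} \in \mathbb{R}^{N \times P} $$

Then, when the noise is Gaussian,

$$ \beta_{\text{MLE}} = \arg\min_{\beta} \frac12 ||\mathbf{y} - X\beta||^2 $$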
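With the samples stacked as the rows of \(X\), the Gaussian maximum likelihood estimate is a least-squares solution. A minimal NumPy sketch, assuming synthetic data (the sizes, seed, and noise level are illustrative choices, not taken from the tutorial):

```python
import numpy as np

rng = np.random.default_rng(0)
N, P = 200, 5                        # illustrative sizes (assumption)
X = rng.standard_normal((N, P))      # rows are the samples x_n
beta_true = rng.standard_normal(P)   # made-up ground truth
y = X @ beta_true + 0.1 * rng.standard_normal(N)

# Least-squares fit: minimizes ||y - X beta||^2 over beta
beta_mle, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.linalg.norm(beta_mle - beta_true))
```

With \(N\) much larger than \(P\), the printed estimation error should be small; it grows as \(N\) approaches \(P\).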

M.L.E.

Pros:

- Consistent: as \( N \rightarrow \infty \), \(\beta_{\text{MLE}} \rightarrow \beta^* \)

- Comes from a clear statistical framework

Cons:

- Can behave badly when the model is misspecified (e.g. \(f\) is not really linear)

- Lots of variance in \(\beta_{\text{MLE}}\) when \(N\) is not big enough

- Ill-posed problem when \(N < P\)!

What do we do when \(N < P\)?

Bet on sparsity / Ockham's razor: only a few coefficients in \( \beta \) play a role

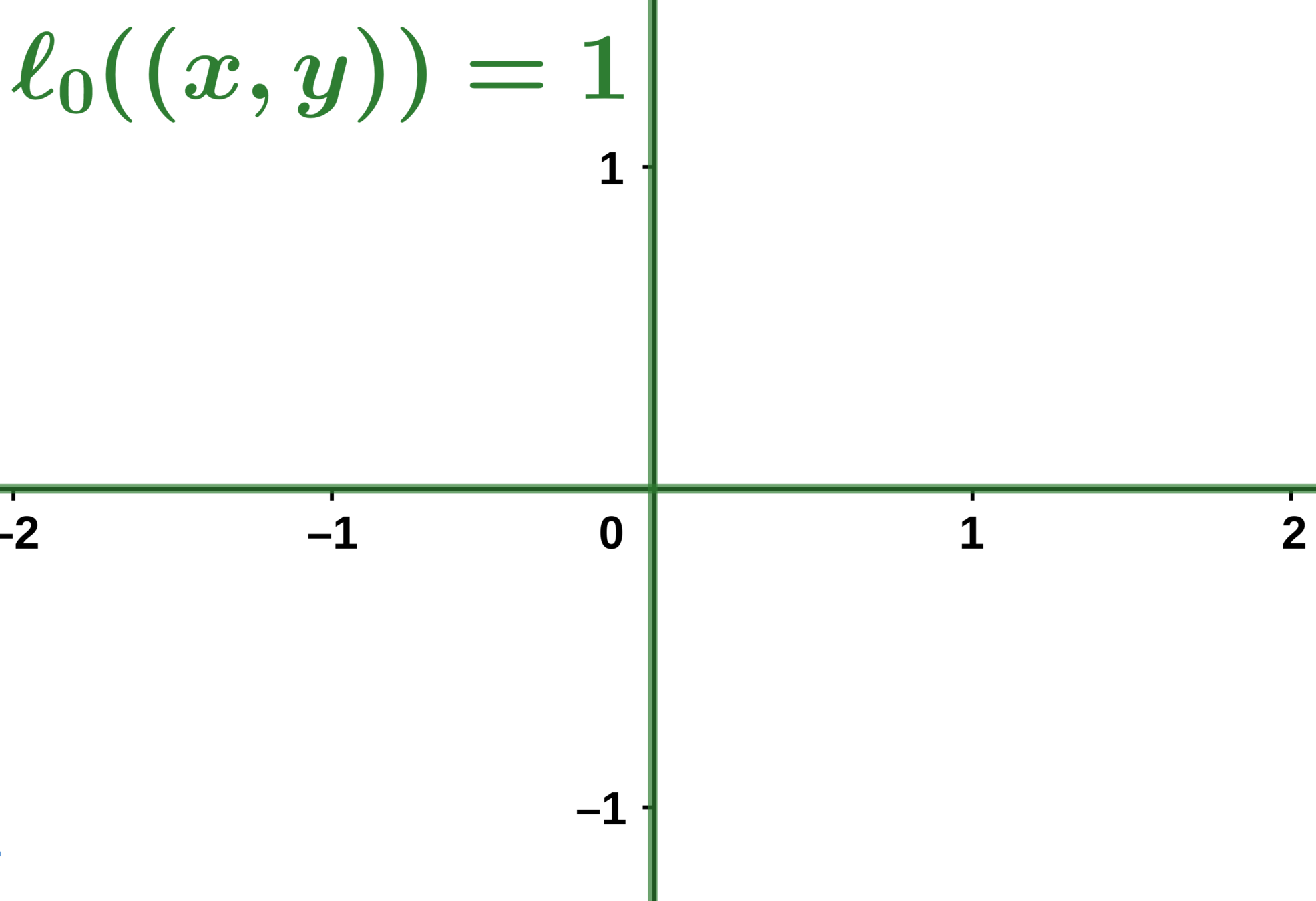

The \( \ell_0 \) Norm:

Example:
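The \(\ell_0\) norm of a vector counts its non-zero entries. As a small illustration with made-up coefficients (the values below are not from the tutorial):

```python
import numpy as np

beta = np.array([0.0, 1.5, 0.0, -2.0, 0.0])   # made-up coefficients
l0_norm = np.count_nonzero(beta)               # number of non-zero entries
support = np.flatnonzero(beta)                 # indices of the active coefficients
print(l0_norm, support)                        # prints: 2 [1 3]
```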

Subset selection/matching pursuit

Idea: select only a few active coefficients with the \( \ell_0 \) norm.

$$ \beta_{\ell_0} = \arg\min_{\beta} \frac12 ||\mathbf{y} - X\beta||^2 \quad \text{subject to} \quad ||\beta||_0 \leq t $$

Where \( t\) is an integer controlling the sparsity of the solution.

Problems:

- Non-convexity

- Instability: adding a sample may completely change \( \beta \) and its support.

- NP-hard (loooong to solve)

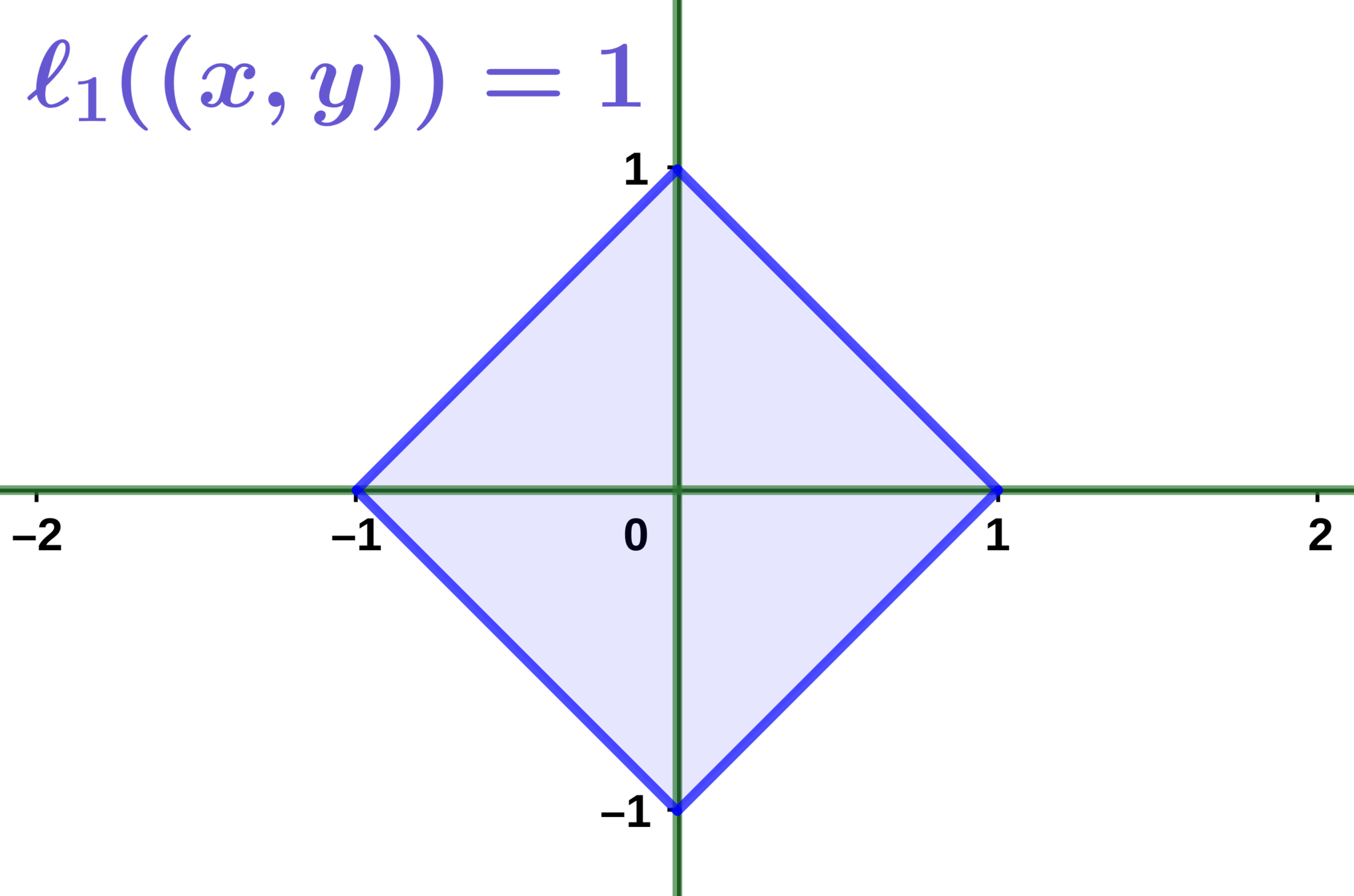

The Lasso

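Idea: relax the \(\ell_0\) norm to the \(\ell_1\) norm \( ||\beta||_1 = \sum_{p=1}^P |\beta_p| \) to obtain a convex problem

$$ \beta_{\text{lasso}} = \arg\min_{\beta} \frac12 ||\mathbf{y} - X\beta||^2 \quad \text{subject to} \quad ||\beta||_1 \leq t $$

Where \( t\) is a level controlling the sparsity of the solution.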

See notebook

The Lasso: Lagrange formulation

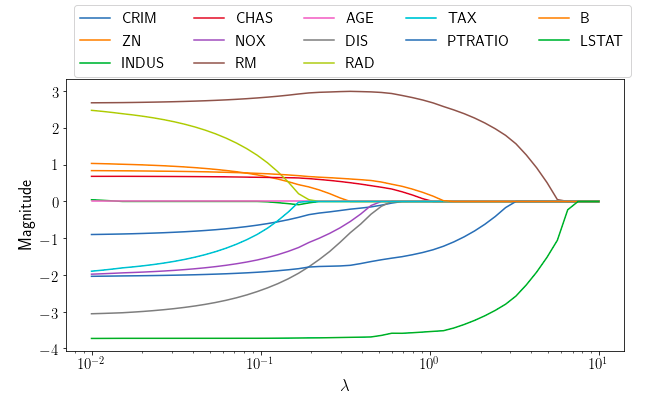

$$ \beta_{\text{lasso}} = \arg\min_{\beta} \frac12 ||\mathbf{y} - X\beta||^2 + \lambda ||\beta||_1 $$

Where \( \lambda \geq 0 \) is a level controlling the amount of sparsity.

- The relationship between \(\lambda\) and \(t\) depends on the data

- It promotes sparsity: there is a threshold \(\lambda_{\text{max}}\) such that \( \lambda > \lambda_{\text{max}} \) implies \(\beta_{\text{lasso}} = 0 \)

Derivation

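\(\beta = 0\) is a solution to the lasso problem \( \min_{\beta} \frac12 ||\mathbf{y} - X\beta||^2 + \lambda ||\beta||_1 \) if and only if:

$$ 0 \in \partial \left( \frac12 ||\mathbf{y} - X\beta||^2 + \lambda ||\beta||_1 \right)\Big|_{\beta = 0} = -X^{\top}\mathbf{y} + \lambda [-1, 1]^P $$

Where \(\partial \) is the subdifferential (the set of subgradients).

So the condition writes:

$$ \lambda \geq ||X^{\top}\mathbf{y}||_{\infty} = \lambda_{\text{max}} $$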
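A quick numerical sanity check of this threshold, assuming the objective is scaled as \( \frac12 ||\mathbf{y} - X\beta||^2 + \lambda ||\beta||_1 \) (with a different scaling, e.g. a \(1/N\) factor, \(\lambda_{\text{max}}\) changes accordingly). The data are random and the helper name `lasso_objective` is an illustrative choice:

```python
import numpy as np

def lasso_objective(beta, X, y, lam):
    """0.5 * ||y - X beta||^2 + lam * ||beta||_1 (scaling assumed here)."""
    return 0.5 * np.sum((y - X @ beta) ** 2) + lam * np.sum(np.abs(beta))

rng = np.random.default_rng(0)
N, P = 50, 20                        # illustrative sizes (assumption)
X = rng.standard_normal((N, P))
y = rng.standard_normal(N)

lam_max = np.max(np.abs(X.T @ y))    # infinity norm of X^T y

# At lam = lam_max, beta = 0 is optimal, so no perturbation should do better.
zero_value = lasso_objective(np.zeros(P), X, y, lam_max)
trial_values = [lasso_objective(1e-3 * rng.standard_normal(P), X, y, lam_max)
                for _ in range(100)]
print(zero_value <= min(trial_values))
```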

Lasso is useful because...

- It performs model selection and estimation at the same time: the non-zero coefficients of \(\beta\) tell you which features of \(\mathbf{x}\) are important.

Boston dataset:

Lasso is useful because...

- Leveraging sparsity enables fast solvers

Intuition:

If \(P = 1000 \) and \(N = 1000 \), computing \(X\beta\) takes \(\sim N \times P = 10^6 \) operations

But if we know that there are only \(10 \) non-zero coefficients in \(\beta\), it takes only \(\sim N \times 10 = 10^4\) operations

The same reasoning applies to most quantities useful to estimate the model
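The operation count can be made concrete with a short NumPy sketch that restricts the product to the support of \(\beta\). The random data and the choice of 10 active coefficients are illustrative assumptions:

```python
import numpy as np

rng = np.random.default_rng(0)
N, P, k = 1000, 1000, 10             # k = number of non-zero coefficients
X = rng.standard_normal((N, P))

beta = np.zeros(P)
support = rng.choice(P, size=k, replace=False)   # indices of the non-zero coefficients
beta[support] = rng.standard_normal(k)

dense = X @ beta                         # about N * P multiplications
sparse = X[:, support] @ beta[support]   # about N * k multiplications
print(np.allclose(dense, sparse))
```

Both products give the same vector; only the second one scales with the number of active coefficients rather than with \(P\).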

Lasso estimation

How do we fit the model?

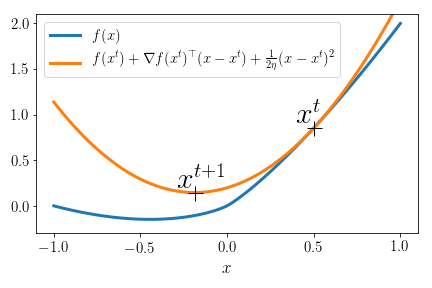

Gradient descent 101

Minimize \( f(\mathbf{x})\) where \(f\) is differentiable.

Iterate \(\mathbf{x}^{t+1} = \mathbf{x}^t - \eta \nabla f(\mathbf{x}^t) \)

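Equivalent to minimizing a quadratic surrogate:

$$ \mathbf{x}^{t+1} = \arg\min_{\mathbf{x}} f(\mathbf{x}^t) + \langle \nabla f(\mathbf{x}^t), \mathbf{x} - \mathbf{x}^t \rangle + \frac{1}{2\eta} ||\mathbf{x} - \mathbf{x}^t||^2 $$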
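A minimal sketch of the gradient descent iteration, assuming a toy quadratic objective chosen only for illustration (the function name `gradient_descent`, the step size, and the target `c` are not from the tutorial):

```python
import numpy as np

def gradient_descent(grad, x0, eta, n_iter=100):
    """Iterate x <- x - eta * grad(x) for a fixed number of steps."""
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):
        x = x - eta * grad(x)
    return x

# Toy objective (assumption): f(x) = 0.5 * ||x - c||^2, whose gradient is x - c.
c = np.array([1.0, -2.0])
x_hat = gradient_descent(lambda x: x - c, x0=np.zeros(2), eta=0.5)
print(x_hat)
```

On this toy problem each step halves the distance to the minimizer `c`, so the iterates converge quickly.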

The simplest lasso:

\(P=1, N = 1, X = 1:\) can we minimize \(\frac12 (y - \beta)^2 + \lambda |\beta|\) ?

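Yes: proximity operator

$$ \text{prox}_{\lambda |\cdot|}(y) = \arg\min_{\beta} \frac12 (y - \beta)^2 + \lambda |\beta| $$

Soft-thresholding: the minimizer \( \text{ST}(y, \lambda) \) equals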

- \(0\) if \(|y|\leq \lambda\)

- \(y- \lambda\) if \(y>\lambda\)

- \(y + \lambda\) if \(y < -\lambda\)

Soft thresholding

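Separability: the \(\ell_1\) norm is a sum over coordinates, so the vector problem splits into independent scalar problems, each solved by soft-thresholding (denoted \(\text{ST}\)):

$$ \left[\arg\min_{\beta} \frac12 ||\mathbf{v} - \beta||^2 + \lambda ||\beta||_1\right]_i = \text{ST}(v_i, \lambda) $$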
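The three-case soft-thresholding rule fits in one vectorized line, which also shows separability since it acts entry by entry. A minimal NumPy sketch (the function name `soft_threshold` is an illustrative choice):

```python
import numpy as np

def soft_threshold(v, lam):
    """Entrywise soft-thresholding: 0 if |v| <= lam, else shrink |v| by lam."""
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

v = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
print(soft_threshold(v, 1.0))   # entries with |v| <= 1 become zero
```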

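ISTA: Iterative soft thresholding algorithm

Gradient of the smooth term:

$$ \nabla_{\beta} \frac12 ||\mathbf{y} - X\beta||^2 = X^{\top}(X\beta - \mathbf{y}) $$

ISTA:

$$ \beta^{t+1} = \text{ST}\left(\beta^t - \eta X^{\top}(X\beta^t - \mathbf{y}), \eta\lambda\right) $$

where \(\text{ST}\) is the soft-thresholding operator, applied to each coordinate, and \(\eta\) is the step size.

Comes from:

$$ \beta^{t+1} = \arg\min_{\beta} \langle X^{\top}(X\beta^t - \mathbf{y}), \beta - \beta^t \rangle + \frac{1}{2\eta}||\beta - \beta^t||^2 + \lambda ||\beta||_1 $$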

ISTA: Iterative soft thresholding algorithm

- Some coefficients are \(0\) because of the prox

- One iteration takes \(O(\min(N, P) \times P)\)

Can be problematic for large \(P\) !
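One way to sketch ISTA in NumPy, assuming the objective \( \frac12 ||\mathbf{y} - X\beta||^2 + \lambda ||\beta||_1 \) and a constant step size \(1/||X||_2^2\). The step-size rule, the function names, and the synthetic data are assumptions made for this illustration, not taken from the tutorial:

```python
import numpy as np

def soft_threshold(v, lam):
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

def ista(X, y, lam, n_iter=500):
    """Proximal gradient on 0.5 * ||y - X beta||^2 + lam * ||beta||_1."""
    eta = 1.0 / np.linalg.norm(X, ord=2) ** 2    # 1 / largest eigenvalue of X^T X
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (X @ beta - y)              # gradient of the smooth term
        beta = soft_threshold(beta - eta * grad, eta * lam)
    return beta

# Synthetic sparse problem (assumption): 3 active coefficients out of 30.
rng = np.random.default_rng(0)
N, P = 100, 30
X = rng.standard_normal((N, P))
beta_true = np.zeros(P)
beta_true[:3] = [2.0, -1.5, 1.0]
y = X @ beta_true + 0.1 * rng.standard_normal(N)

lam = 0.1 * np.max(np.abs(X.T @ y))
beta_hat = ista(X, y, lam)
print(np.count_nonzero(beta_hat), "non-zero coefficients out of", P)
```

Each iteration here recomputes \(X^{\top}(X\beta - \mathbf{y})\) in full, which is the per-iteration cost that becomes a concern when \(P\) is large.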

Coordinate descent

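Idea: update only one coefficient of \( \beta \) at each iteration. For the objective \( \frac12 ||\mathbf{y} - X\beta||^2 + \lambda ||\beta||_1 \), minimizing exactly over coordinate \(j\) gives

$$ \beta^{t+1}_j = \text{ST}\left(\beta^t_j - \frac{X_j^{\top}\mathbf{r}}{||X_j||^2}, \frac{\lambda}{||X_j||^2}\right) $$

where \(X_j\) is the \(j\)-th column of \(X\) and \(\text{ST}\) is soft-thresholding.

- If we know the residual \( \mathbf{r} =X\beta^{t} - \mathbf{y}\), each update is \(O(N) \) :)

Residual update:

After updating the coordinate \(j\), \( \beta^{t+1}_i = \beta^{t}_i \) for \( i \enspace \ne j \), so

$$ \mathbf{r}^{t+1} = \mathbf{r}^{t} + (\beta^{t+1}_j - \beta^{t}_j) X_j $$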
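A hedged sketch of cyclic coordinate descent with an incrementally maintained residual, assuming the objective \( \frac12 ||\mathbf{y} - X\beta||^2 + \lambda ||\beta||_1 \) and columns of \(X\) that are not identically zero. The cyclic ordering, the function names, and the synthetic data are illustrative assumptions:

```python
import numpy as np

def soft_threshold(v, lam):
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

def coordinate_descent(X, y, lam, n_epochs=100):
    """Cyclic coordinate descent on 0.5 * ||y - X beta||^2 + lam * ||beta||_1."""
    N, P = X.shape
    beta = np.zeros(P)
    r = X @ beta - y                         # residual, kept up to date
    col_sq_norms = np.sum(X ** 2, axis=0)    # squared column norms (assumed non-zero)
    for _ in range(n_epochs):
        for j in range(P):
            old = beta[j]
            # Exact minimization over coordinate j, O(N) given r
            beta[j] = soft_threshold(old - X[:, j] @ r / col_sq_norms[j],
                                     lam / col_sq_norms[j])
            if beta[j] != old:
                r += (beta[j] - old) * X[:, j]   # O(N) residual update
    return beta, r

# Synthetic sparse problem (assumption): 3 active features out of 30.
rng = np.random.default_rng(0)
X = rng.standard_normal((100, 30))
y = X[:, :3] @ np.array([2.0, -1.5, 1.0]) + 0.1 * rng.standard_normal(100)
lam = 0.1 * np.max(np.abs(X.T @ y))

beta_cd, r = coordinate_descent(X, y, lam)
print(np.allclose(r, X @ beta_cd - y))   # the maintained residual matches a fresh one
```

Skipping the residual update when a coordinate does not move means that coefficients stuck at zero cost only one inner product per visit.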