A Software Engineer's Toolkit for Quant. Research

Patrick Laub

QMNET, 4th June 2021

Outline

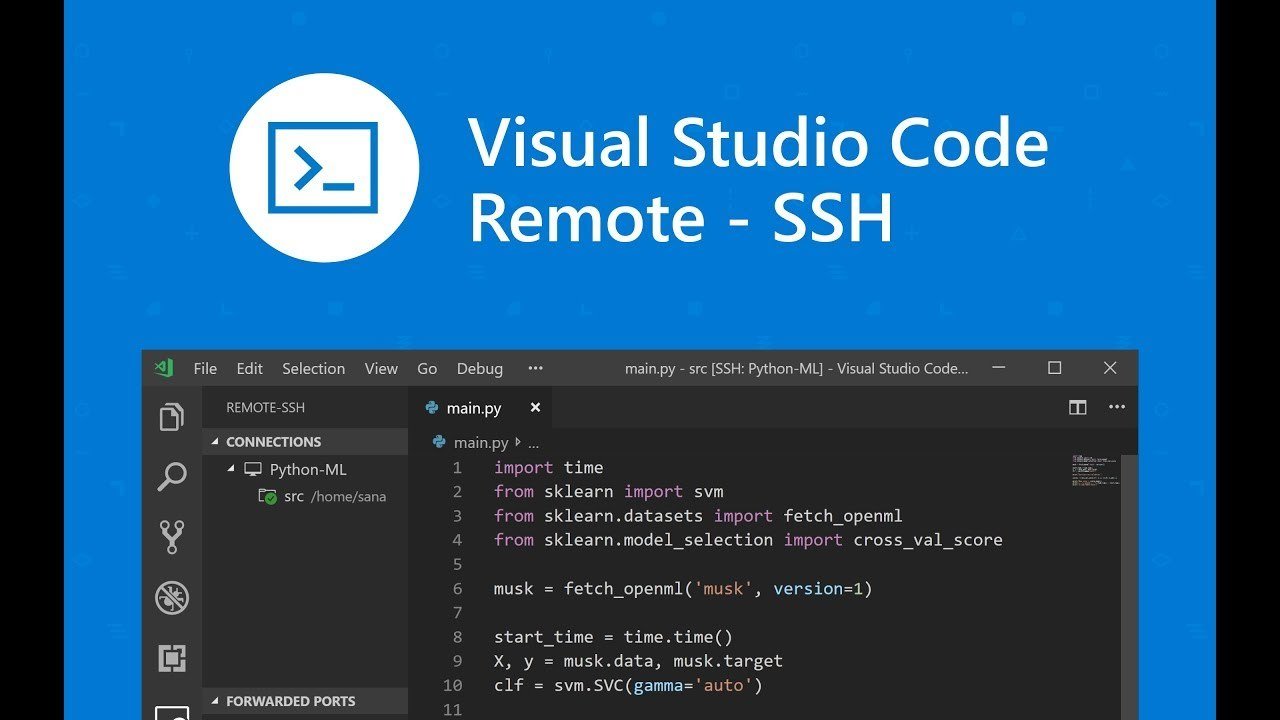

Demo 1: Python & Jupyter remotely

- SSH

- JIT-compiling & parallelisation with numba

- Slideshow with Reveal.js & Markdown

Demo 2: Mathematica

Demo 3: LaTeX

- Automatic bibliography with BibTeX

Demo 4: Make a basic website

- Git, Jekyll, Github pages

Work

Home

Melbourne Research Cloud

Spartan cluster

Amazon AWS

Background

- UQ Software engineering & math

- PhD (Aarhus & UQ): Computational applied probability

- Post-doc #1 (ISFA): Insurance applications

- Post-doc #2 (UoM): Empirical dynamic modelling

Lifewire / Tim Liedtke

Chrome Remote Desktop

TeamViewer

Windows Terminal Preview v 1.9

'Quake mode' (Win/Cmd + `)

All have a terminal

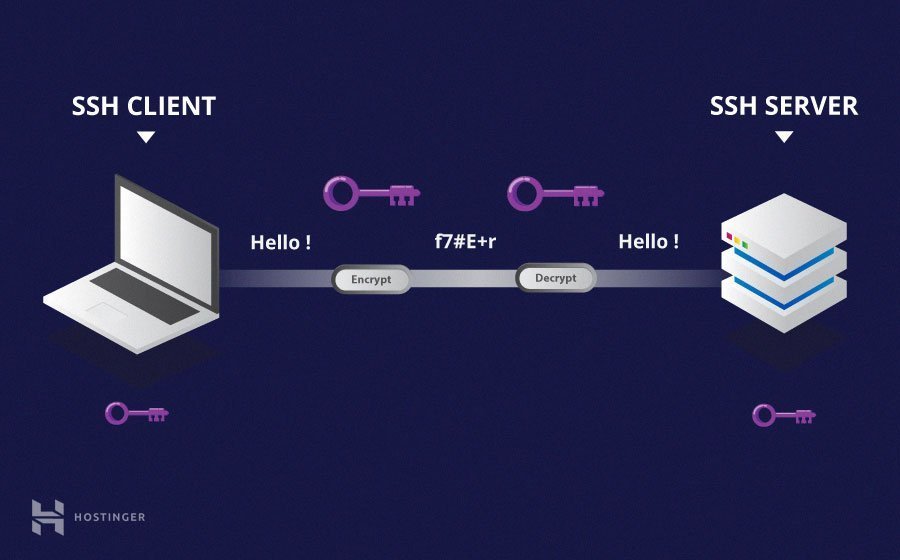

Secure shell

(Use "mosh" for cellular connections)

Secure shell

ssh username@ip-address

<enter password>

~/.ssh/config

Host mrc

HostName 123.123.123.123

User plaub

ssh-keygen

- Makes "id_rsa" private key

- Makes "id_rsa.pub" public key

(Use "mosh" for cellular connections)

Terminal commands

Moving around the filesystem

ls = list files

cd = change directory

pwd = print working directory

Forgotten something?

<Ctr> + <r> = reverse search

Installing a program

sudo apt-get install ...

conda install ...

brew install ...

winget install ...

File stuff

cp = copy

mv = move (= rename)

Terminal commands

Moving around the filesystem

ls = list files

cd = change directory

pwd = print working directory

Special folders

. = current directory

~ = home directory

Flags

ls -ltrh

Help me!

man bla

Things that aren't flags

ls ./My Project

ls "./My Project"

ls ./My\ Project

Forgotten something?

<Ctr> + <r> = reverse search

Installing a program

sudo apt-get install ...

conda install ...

winget install ...

/ = Linux/Mac separator

\ = Windows separator

File stuff

cp = copy

mv = move (= rename)

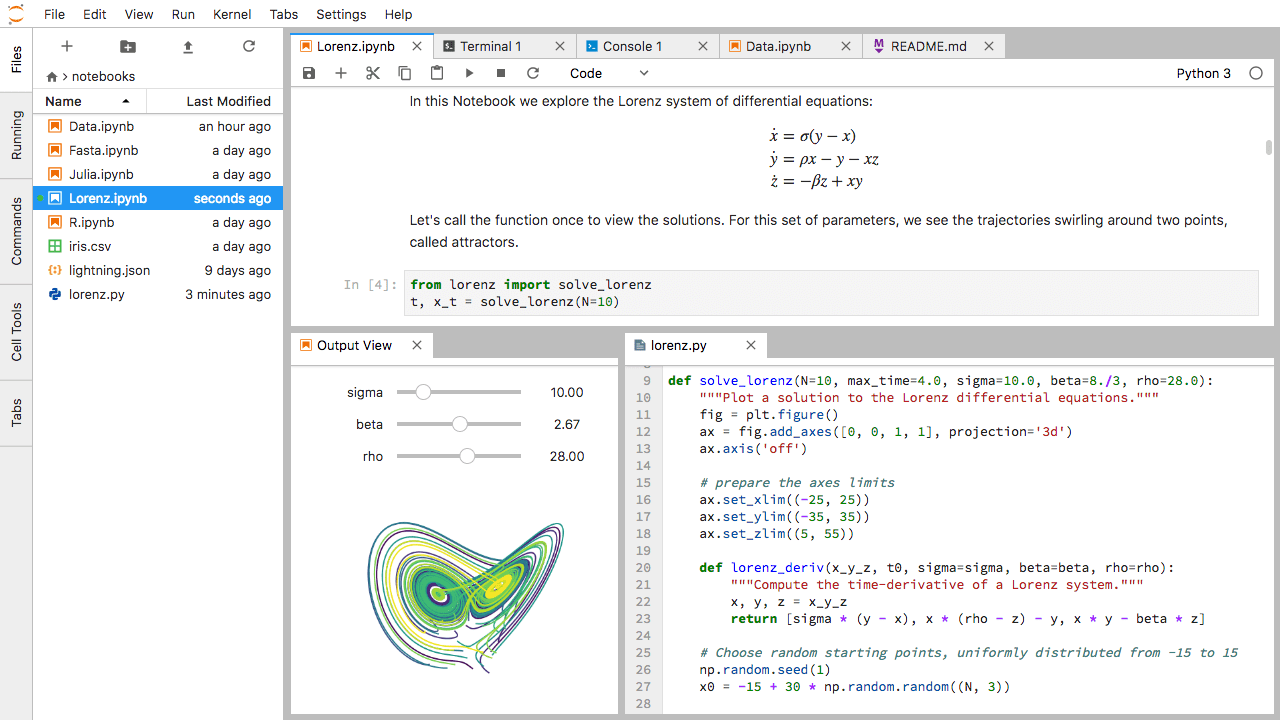

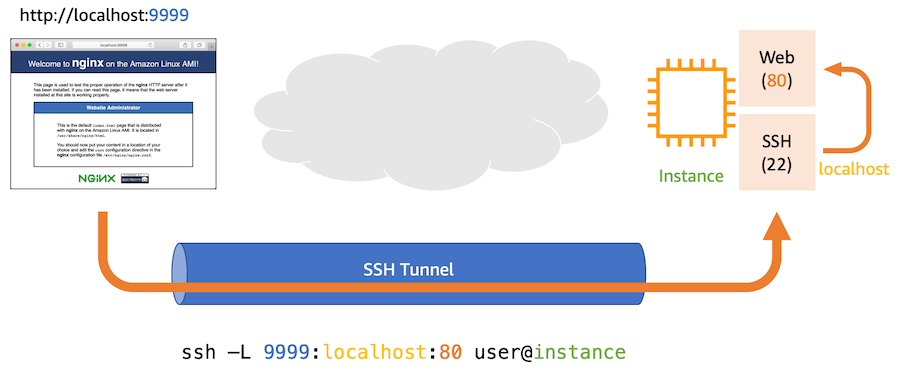

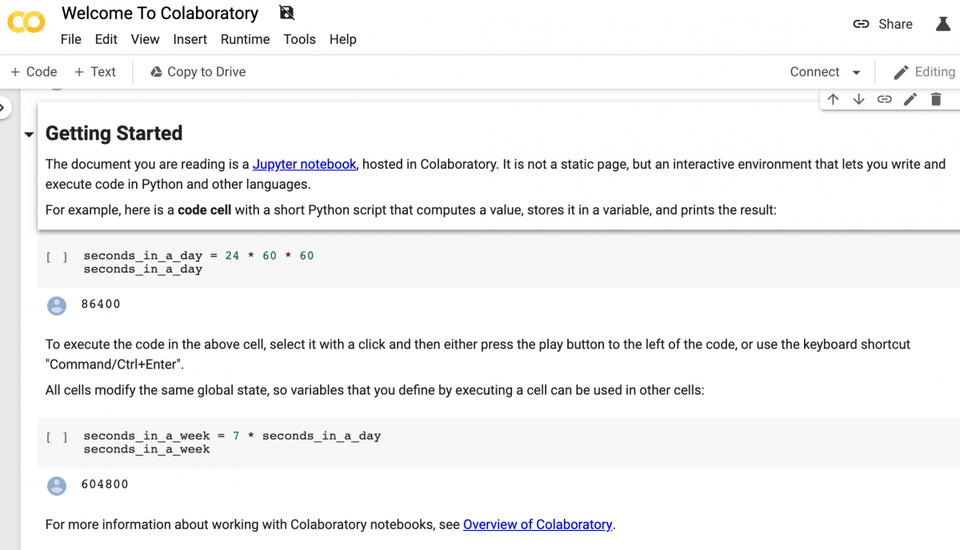

Jupyter

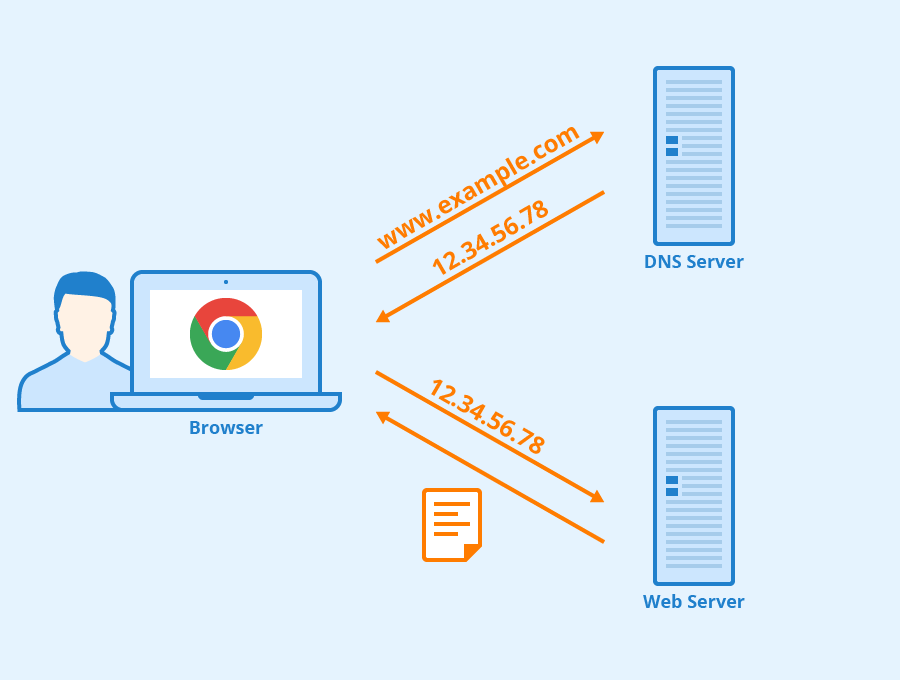

Port

IP Address

(Open ports to firewall)

Hidden IP Address'

Google Colab

reveal.js

-

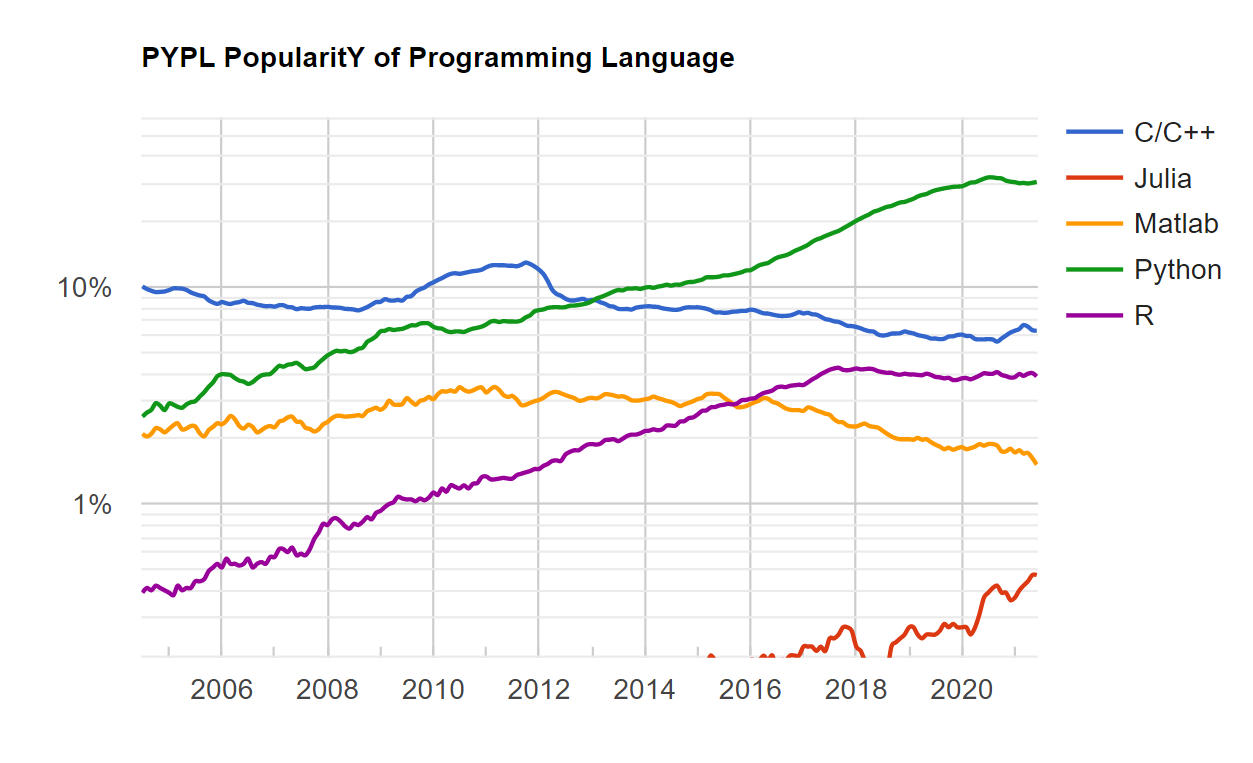

Python 3.9 (October 2020)

-

R 4.1.0 (May 2021)

-

C++ (2020)

-

Julia 1.6.1 (April 2021)

-

Mathematica 12.3 (May 2021)

Software experience

- Assembly

- Bash

- C

- C#

- C++

- CSS

- Dart

- HTML

- Java

- JavaScript

- Jekyll (Markdown)

- Julia

- LaTeX

- Mathematica

- Matlab

- Perl

- PHP

- Python

- R

- Ruby (on Rails)

- Stata

- SQL

- Visual Basic

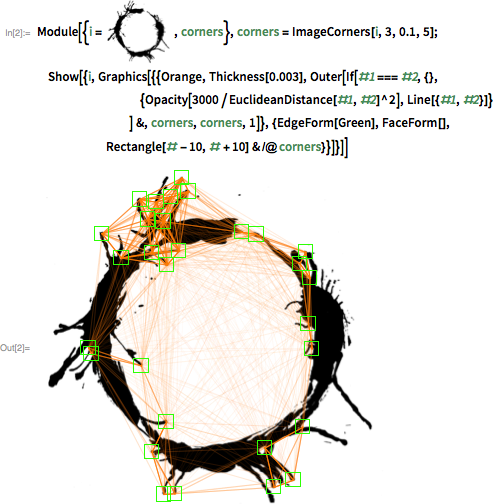

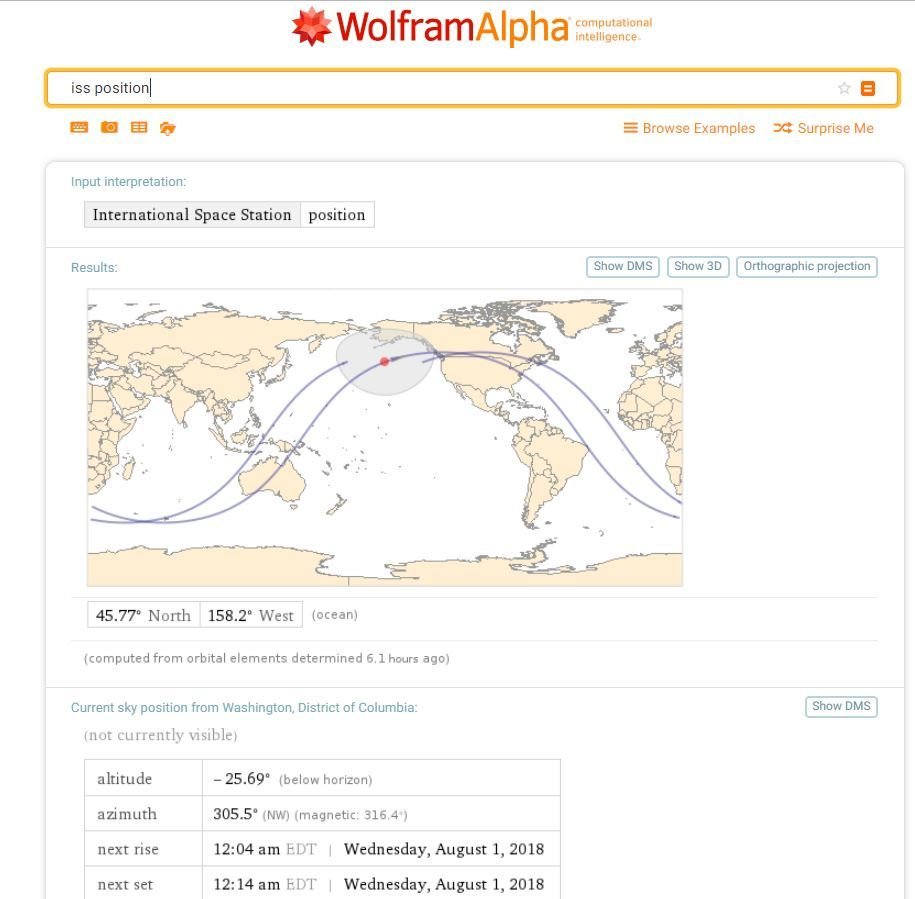

Mathematica

https://writings.stephenwolfram.com/2016/11/quick-how-might-the-alien-spacecraft-work/

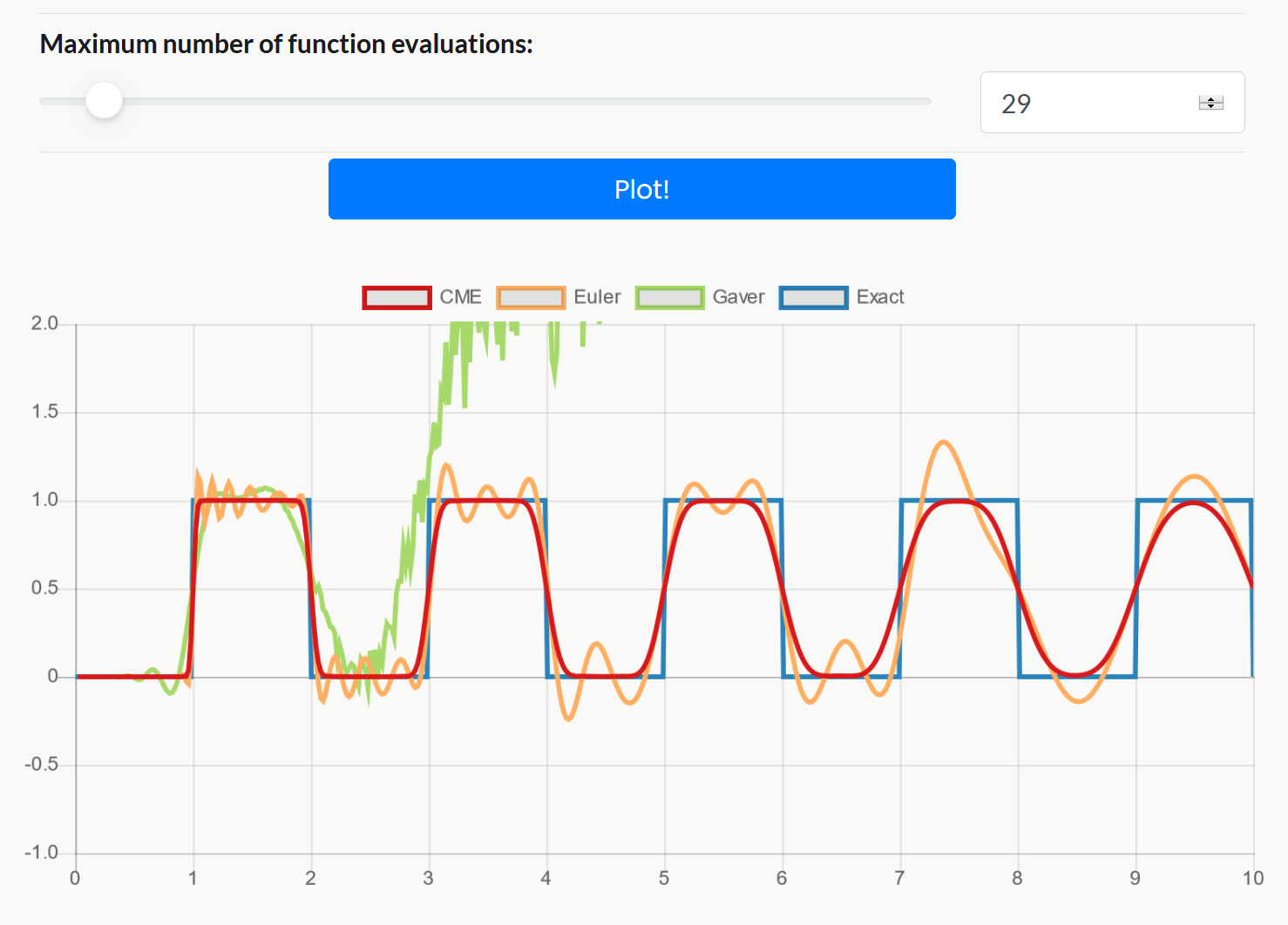

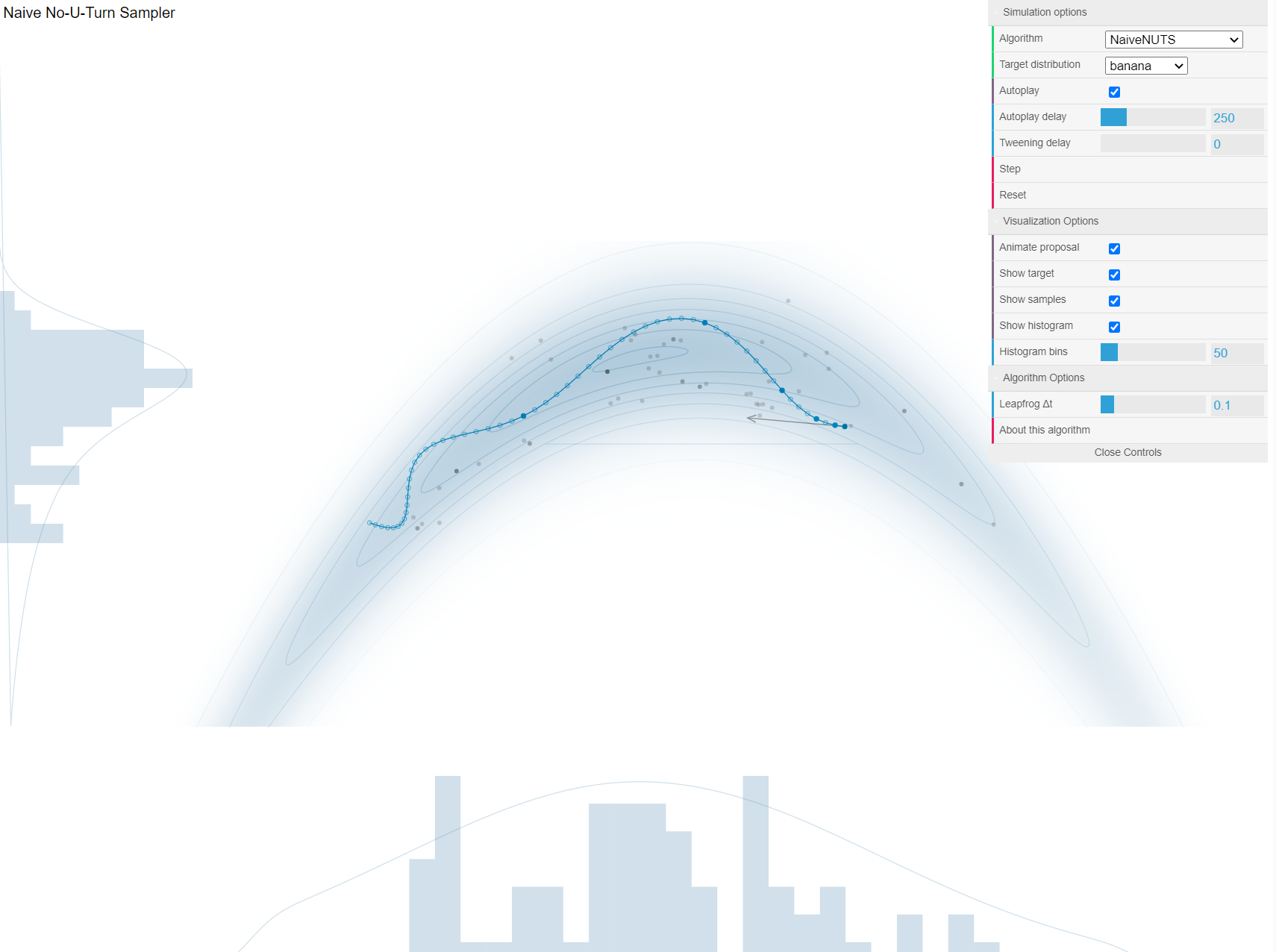

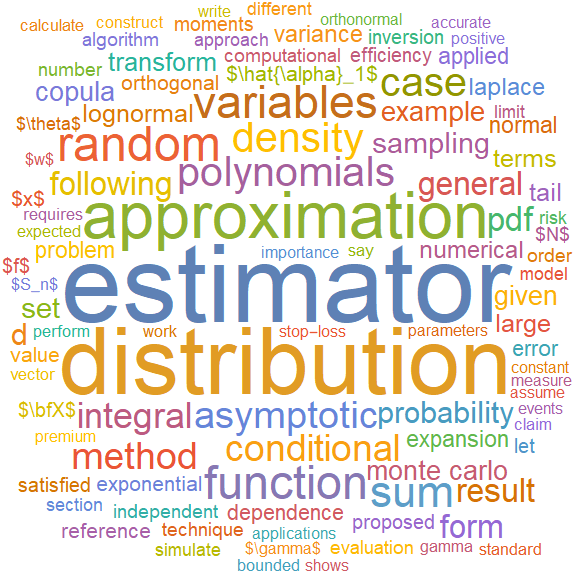

Monte Carlo

My Thesis

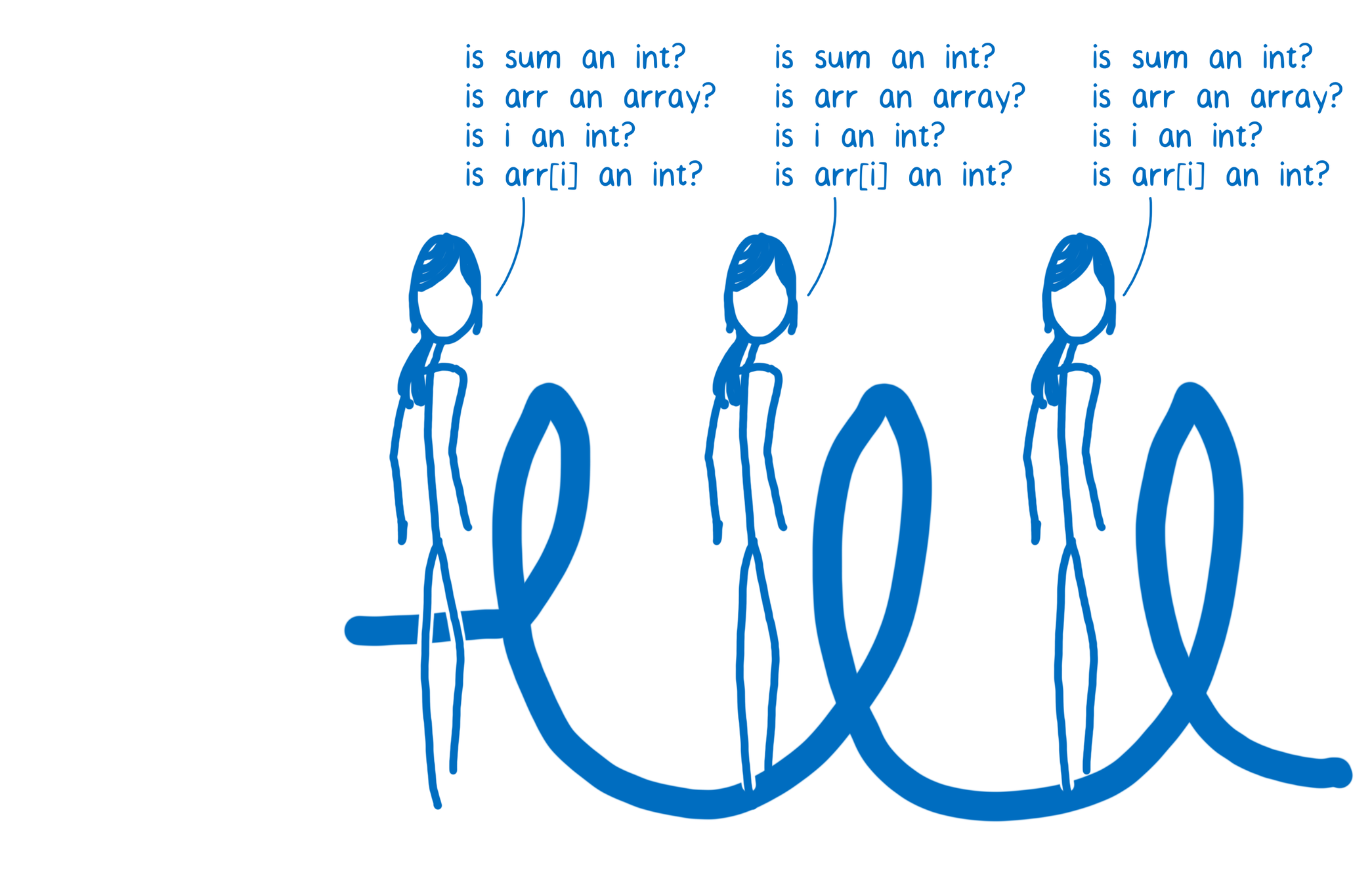

C.f. Lin Clark (2017), A crash course in JIT compilers

https://hacks.mozilla.org/2017/02/a-crash-course-in-just-in-time-jit-compilers/

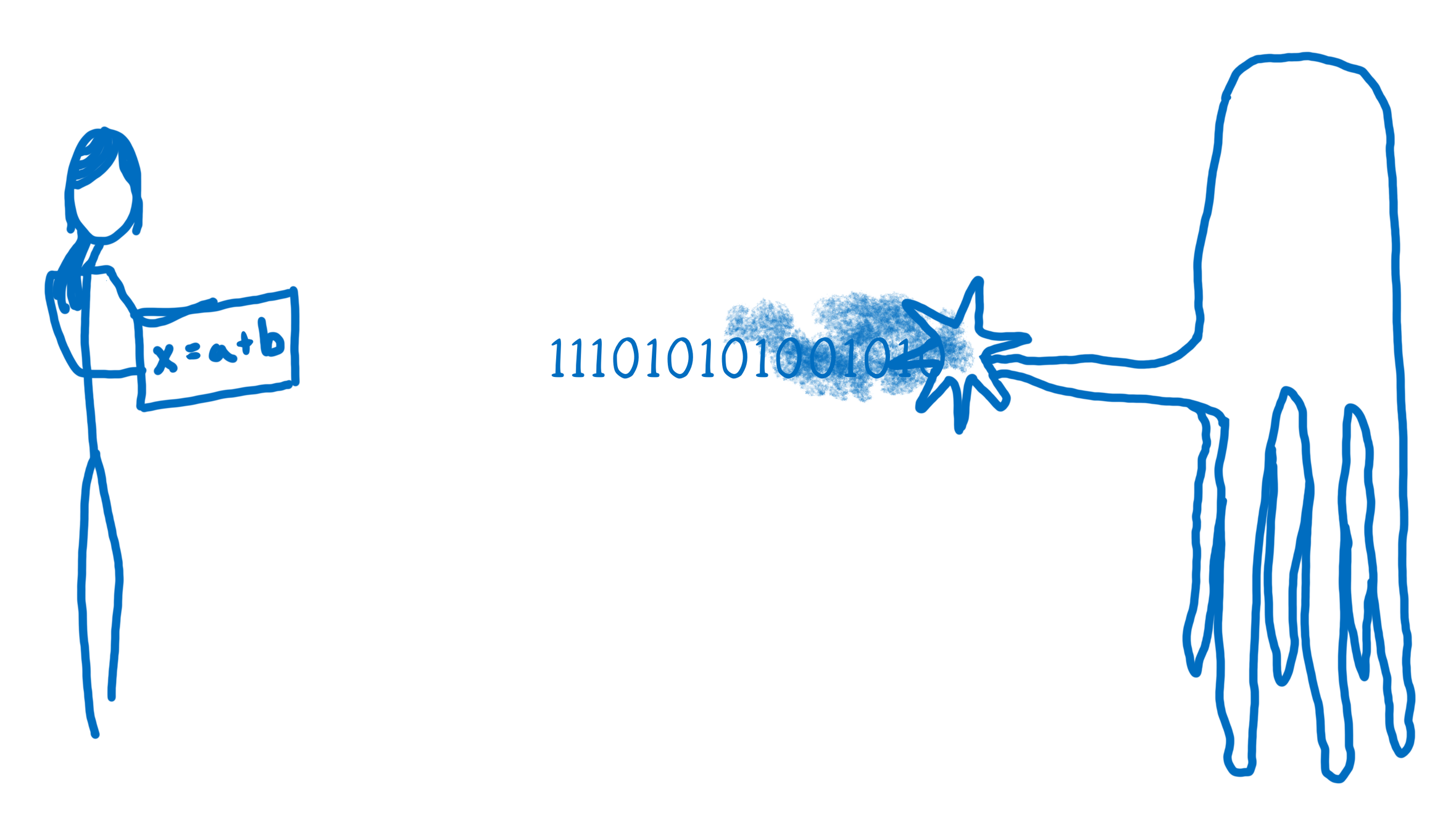

Talking to a computer

What does a machine understand?

Intel i5 (2010): add (0101), subtract (1111), ...

Intel i7 (2020): add (0101), subtract (1111), add_vectors (1011), ...

Apple M1 (2020): add (100), subtract (111), ...

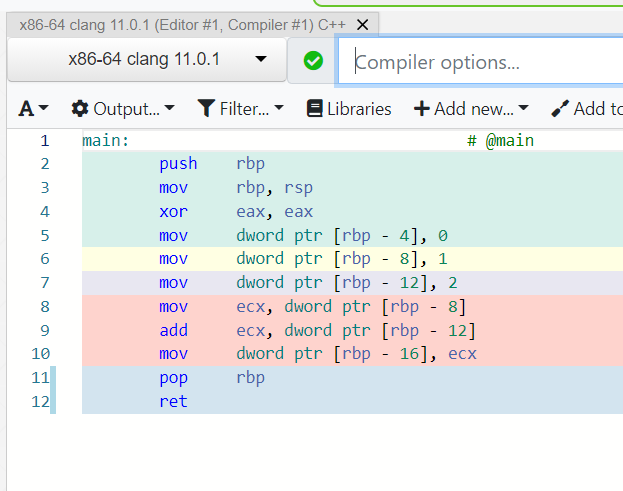

movl $0x1, -0x8(%rbp)int x = 1;Instruction set architecture (ISA), e.g. x86-64

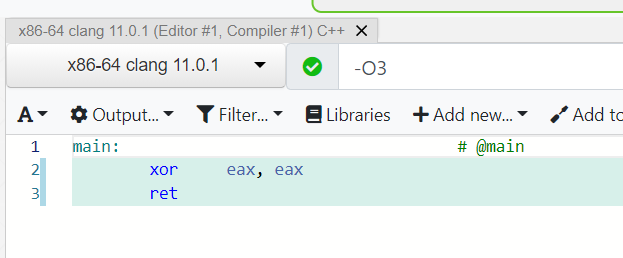

Try Compiler Explorer https://godbolt.org/z/dTv78M

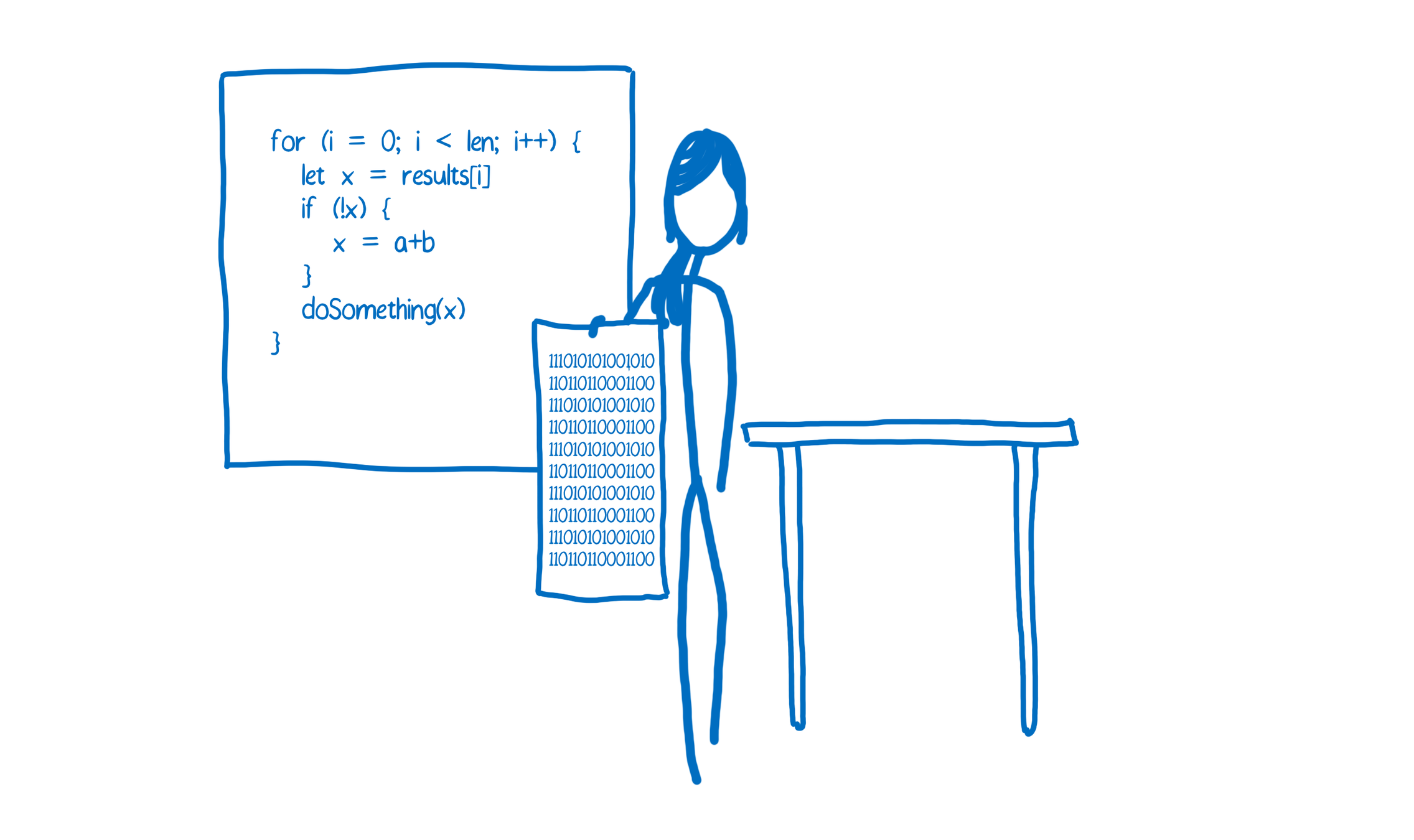

Interpreters & compilers

C.f. Lin Clark (2017), A crash course in JIT compilers

Why is (raw) Python slow?

x = 1

y = 2

z = x + yint x = 1;

int y = 2;

int z = x + y;// x = 1

if (PyDict_SetItem(__pyx_d, __pyx_n_s_x, __pyx_int_1) < 0) {

__PYX_ERR(0, 2, __pyx_L1_error);

}

// y = 2

if (PyDict_SetItem(__pyx_d, __pyx_n_s_y, __pyx_int_2) < 0) {

__PYX_ERR(0, 3, __pyx_L1_error);

}

// z = x + y

__Pyx_GetModuleGlobalName(__pyx_t_1, __pyx_n_s_x);

if (unlikely(!__pyx_t_1)) {

__PYX_ERR(0, 4, __pyx_L1_error);

}

__Pyx_GOTREF(__pyx_t_1);

__Pyx_GetModuleGlobalName(__pyx_t_2, __pyx_n_s_y);

if (unlikely(!__pyx_t_2)) {

__PYX_ERR(0, 4, __pyx_L1_error);

}

__Pyx_GOTREF(__pyx_t_2);

__pyx_t_3 = PyNumber_Add(__pyx_t_1, __pyx_t_2);

if (unlikely(!__pyx_t_3)) {

__PYX_ERR(0, 4, __pyx_L1_error);

}

__Pyx_GOTREF(__pyx_t_3);

__Pyx_DECREF(__pyx_t_1);

__pyx_t_1 = 0;

__Pyx_DECREF(__pyx_t_2);

__pyx_t_2 = 0;

if (PyDict_SetItem(__pyx_d, __pyx_n_s_z, __pyx_t_3) < 0) {

__PYX_ERR(0, 4, __pyx_L1_error);

}

__Pyx_DECREF(__pyx_t_3);

__pyx_t_3 = 0;Python

C++

Try Compiler Explorer https://godbolt.org/z/dTv78M

def arraySum(arr):

sum = 0;

for i in range(len(arr)):

sum += arr[i];

return sum

C.f. Lin Clark (2017), A crash course in JIT compilers

https://hacks.mozilla.org/2017/02/a-crash-course-in-just-in-time-jit-compilers/

def sample_geometric_exponential_sums(T, p, μ):

X = np.zeros(T)

N = rnd.geometric(p, size=T)

U = rnd.exponential(μ, size=N.sum())

i = 0

for t in range(T):

X[t] = U[i:i+N[t]].sum()

i += N[t]

return XVanilla Python

from numba import njit

@njit()

def sample_geometric_exponential_sums(T, p, μ):

X = np.zeros(T)

N = rnd.geometric(p, size=T)

U = rnd.exponential(μ, size=N.sum())

i = 0

for t in range(T):

X[t] = U[i:i+N[t]].sum()

i += N[t]

return XFirst run is compiling (500 ms), but after we are

down from 2.7 ms to 164 μs (16x speedup)

JIT-compilation

def minimise(f, x0 = 0.0, step = 0.1, maxIters = 100):

epsilon = 1e-2

x = x0

for iter in range(maxIters):

fDash = (f(x + epsilon) - f(x)) / epsilon

x -= step * fDash

return x, f(x)

if __name__ == "__main__":

f = lambda x: (x-4)**2

minimiser, minValue = minimise(f)

print(f"Minimum value of {minValue} attained at {minimiser}")36.63 s

(1,000,000 repetitions)

Going to C++

#include <iostream>

auto minimise(double f(double), double x0 = 0.0,

double step = 0.1, int maxIters = 100) {

double x = x0;

double epsilon = 1e-2;

for (int iter = 0; iter < maxIters; iter++) {

double fDash = (f(x + epsilon) - f(x)) / epsilon;

x -= step * fDash;

}

return std::make_pair(x, f(x));

}

int main(void) {

auto f = [](double x) { return (x-4)*(x-4); };

auto [minimiser, minValue] = minimise(f);

std::cout << "Minimum value of " << minValue

<< " attained at " << minimiser << std::endl;

return 0;

}clang++ gradient-descent.cpp -std=c++17 -O31.18 s

(1,000,000 repetitions)

double arr[3] = { 1.0, 2.0, 3.0 };

for (auto& x : arr) {

std::cout << x << std::endl;

}For-each loops & auto types

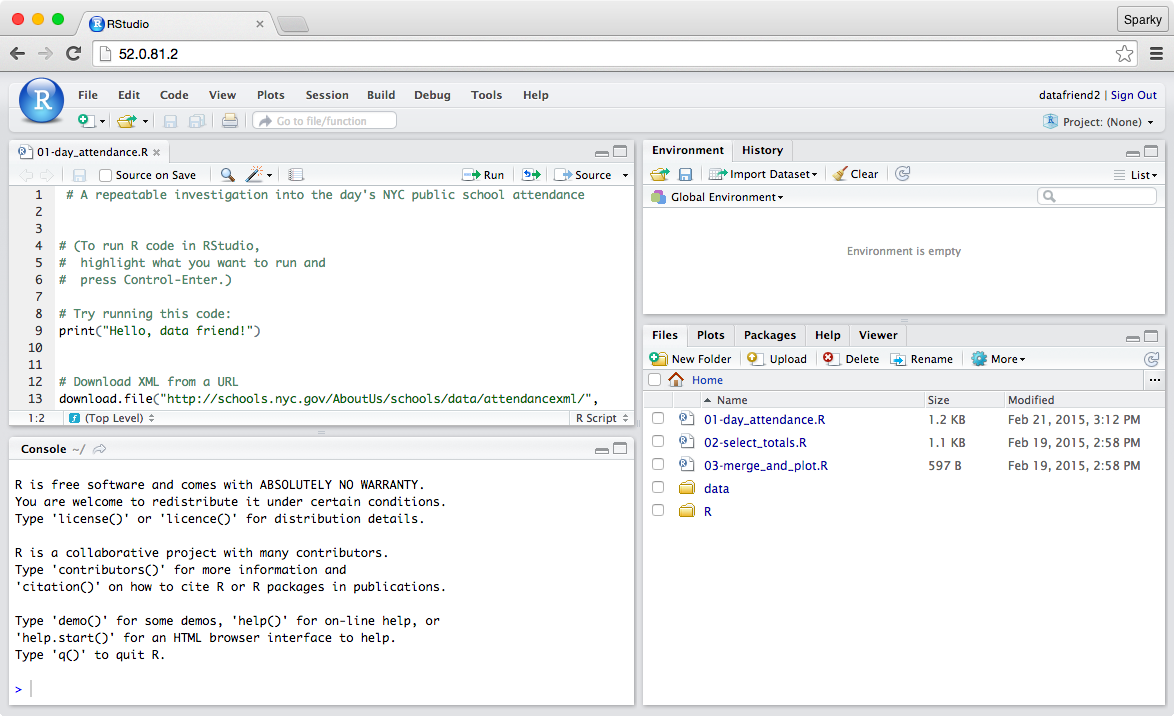

RStudio server

# Import `dplyr` library

library(dplyr)

# Load the data

data(babynames)

# Count how many young boys with the name "Taylor" are born

sum(select(filter(babynames, sex=="M", name=="Taylor"), n))

# Do the same but now with `%>%`

babynames %>%

filter(sex=="M", name=="Taylor") %>%

select(n) %>%

sumPipes

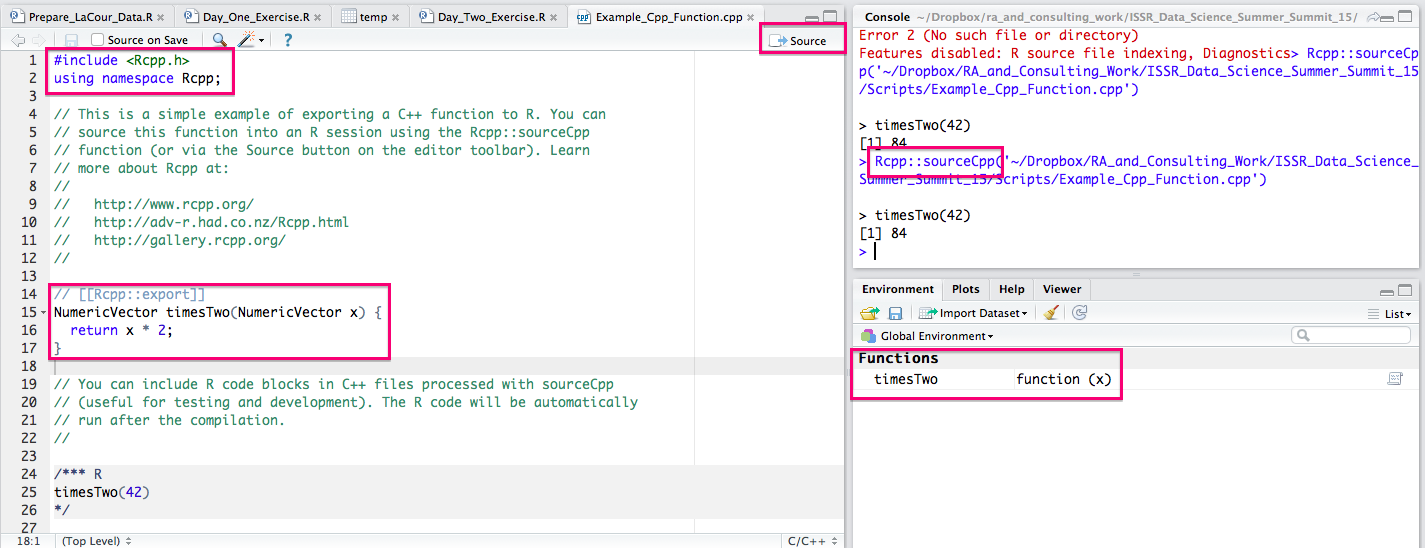

Escaping R for C++...

The "two language problem"

Matlab Julia

C to Julia

void rungekutta(int p, double *avector, double *gvector, double *bvector,

double **cmatrix, double dt, double h, double **T, double *t,

double **ka, double **kg, double **kb, double ***kc)

{

int i, j, k, m;

double eps, h2, sum;

i = dt/h;

h2 = dt/(i+1);

init_matrix(ka, 4, p);

init_matrix(kb, 4, p);

init_3dimmatrix(kc, 4, p, p);

if (kg != NULL)

init_matrix(kg, 4, p);

...

for (i=0; i < p; i++) {

avector[i] += (ka[0][i]+2*ka[1][i]+2*ka[2][i]+ka[3][i])/6;

bvector[i] += (kb[0][i]+2*kb[1][i]+2*kb[2][i]+kb[3][i])/6;

for (j=0; j < p; j++)

cmatrix[i][j] +=(kc[0][i][j]+2*kc[1][i][j]+2*kc[2][i][j]+kc[3][i][j])/6;

}

}

}

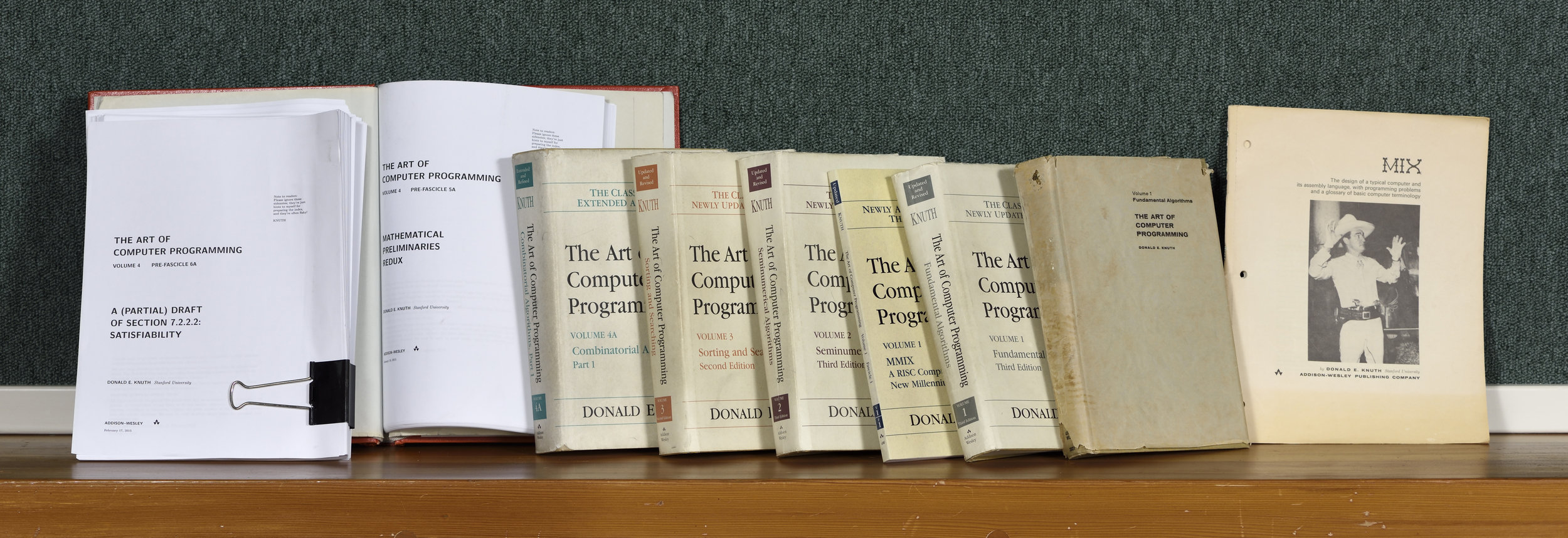

This function: 116 lines of C, built-in to Julia

Whole program: 1700 lines of C, 300 lines of Julia

# Run the ODE solver.

u0 = zeros(p*p)

pf = ParameterizedFunction(ode_observations!, fit)

prob = ODEProblem(pf, u0, (0.0, maximum(s.obs)))

sol = solve(prob, OwrenZen5())https://github.com/Pat-Laub/EMpht.jl

EMPht.jl

using Pkg

using EMpht

lt = EMpht.parse_settings("life_table.json")[1]

phCF200 = empht(lt, p=200, ph_structure="CanonicalForm1")LaTeX

New York Times, "Donald Knuth, The Yoda of Silicon Valley"

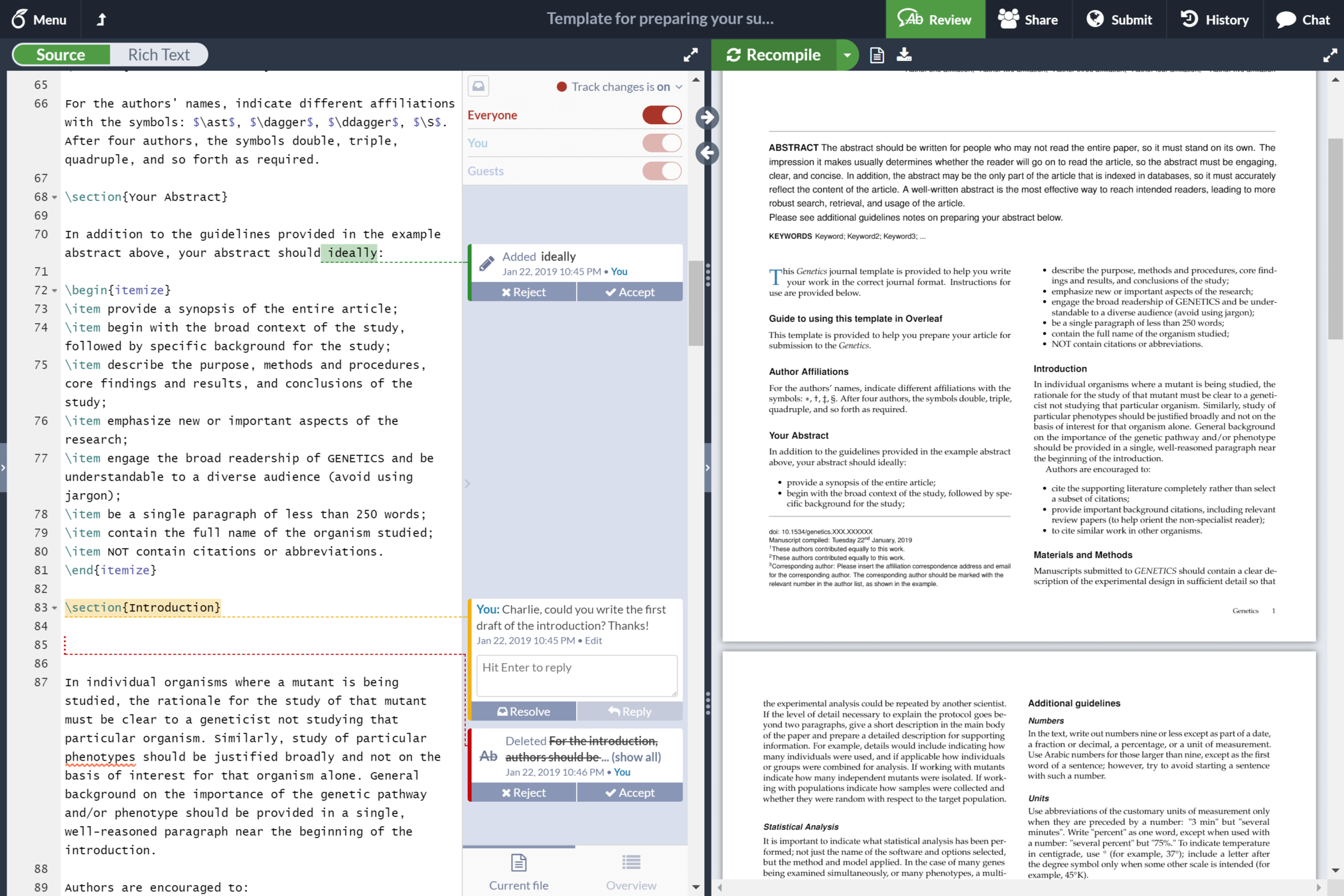

Overleaf

(Free for Uni Melb)

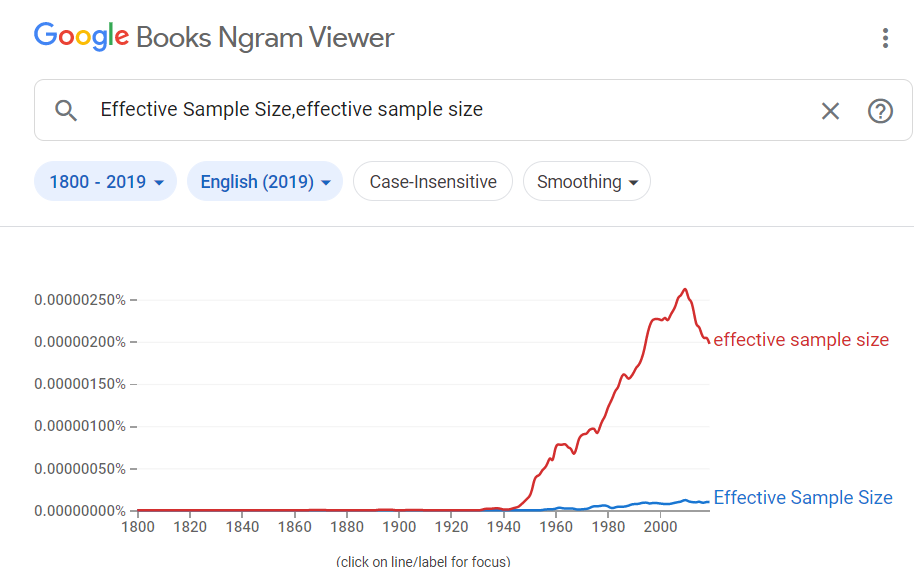

Google Ngrams

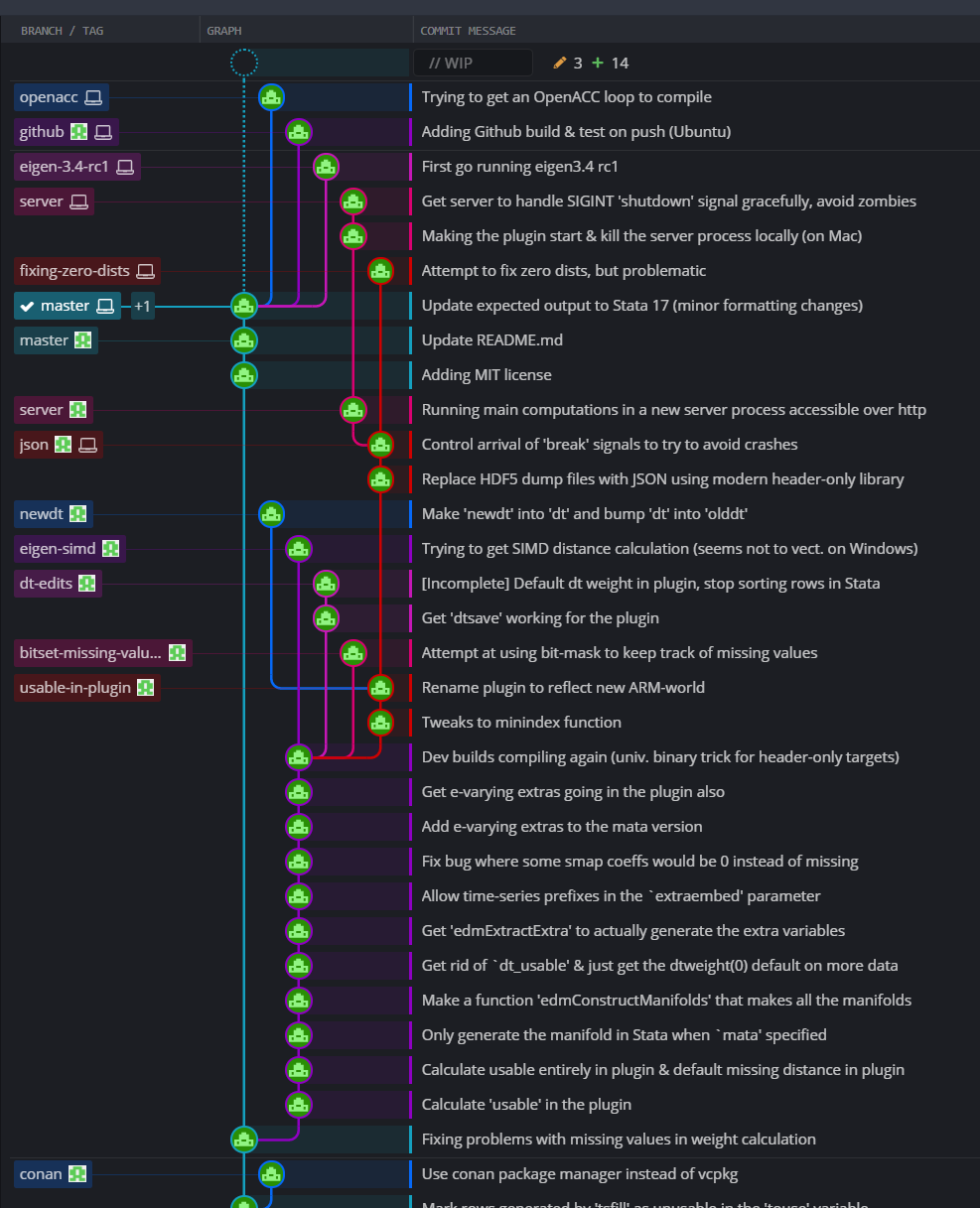

Versioning & code review

(Git & Github)

Gitkraken

Take home messages

On your next project

be brave & try one new thing..

Appendix

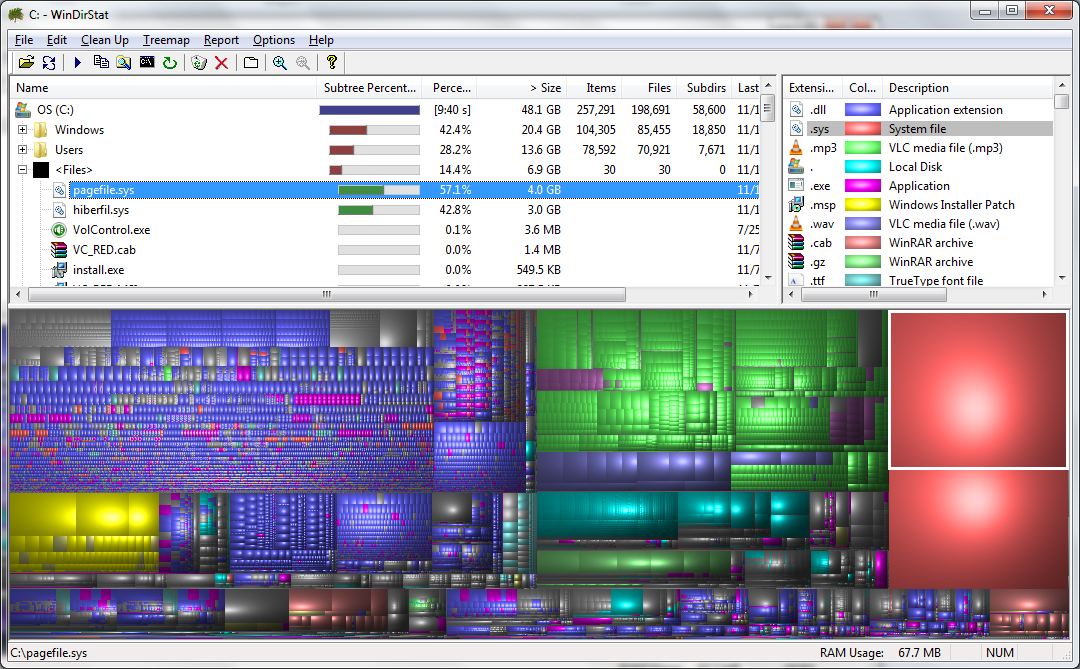

WinDirStat

Regex

https://alf.nu/RegexGolf

Windows, Mac, and Linux

Linux \(\approx\) Unix \(\approx\) Ubuntu

Live in Mac/Windows, work on Linux

iPadOS / ChromeOS?

Linux is everywhere:

- Most phones (Android)

- High performance clusters

- Remote access to, e.g. WRDS

Remote access to private data

python experiment1.py

python experiment2.py

...

Extra shell tips

A long-running program

screen

./run_everything.sh

<disconnect>

Put it in a "run-everything.sh" file

./run-everything.sh

pdflatex paper.tex

bibtex paper

pdflatex paper.tex

pdflatex paper.tex

Put it in a "Makefile" file

make

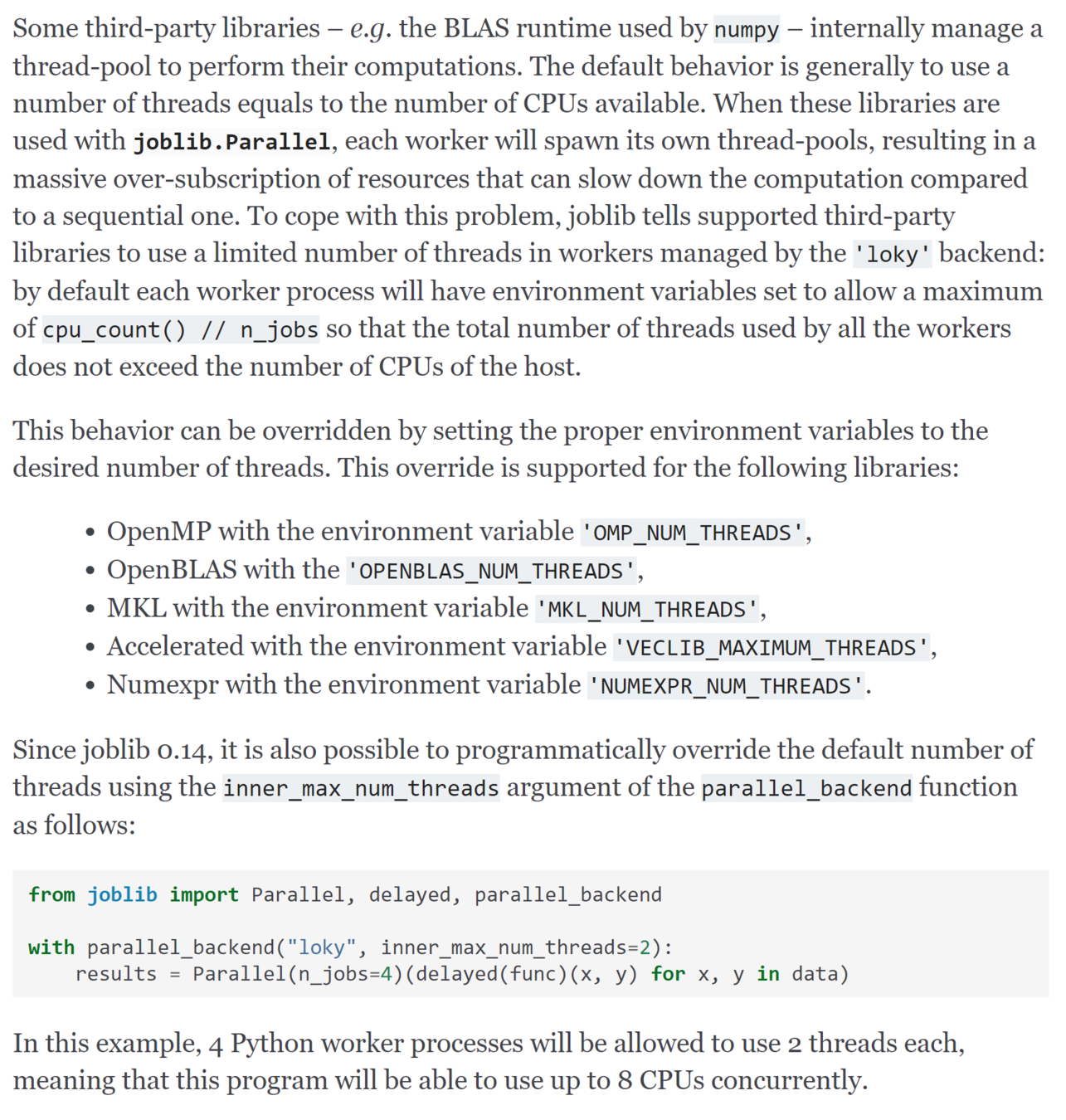

Parallel Python

results = []

for i in range(N):

result_i = slow_function(i)

results.append(result_i)

from joblib import Parallel, delayed

with Parallel(n_jobs=40) as parallel:

fastResults = (delayed(slow_function)(i) for i in range(N))Use joblib

Multiple threads or multiple processes?

Use multiple processes in Python,

probably should use multiple threads elsewhere.

Baumann et al. (2019), A fork() in the road

17th Workshop on Hot Topics in Operating Systems

In practice..

Either:

- too much parallelism for the computer

- a problem which is too small for the parallelism

# This tells Python to just use one thread for internal

# math operations. When it tries to be smart and do multithreading

# it usually just stuffs everything up and slows us down.

import os

for env in ["MKL_NUM_THREADS", "NUMEXPR_NUM_THREADS", "OMP_NUM_THREADS"]:

os.environ[env] = "1"

sample_abc_population(N)

sample_one()

sample_one()

sample_one()

sample_one()

np.solve(A, x)