Serverless, VMs & Containers

Architecture & Economics

Agenda

- Overview

- Scenarios - what makes sense when

- Frugality - Engineering decisions

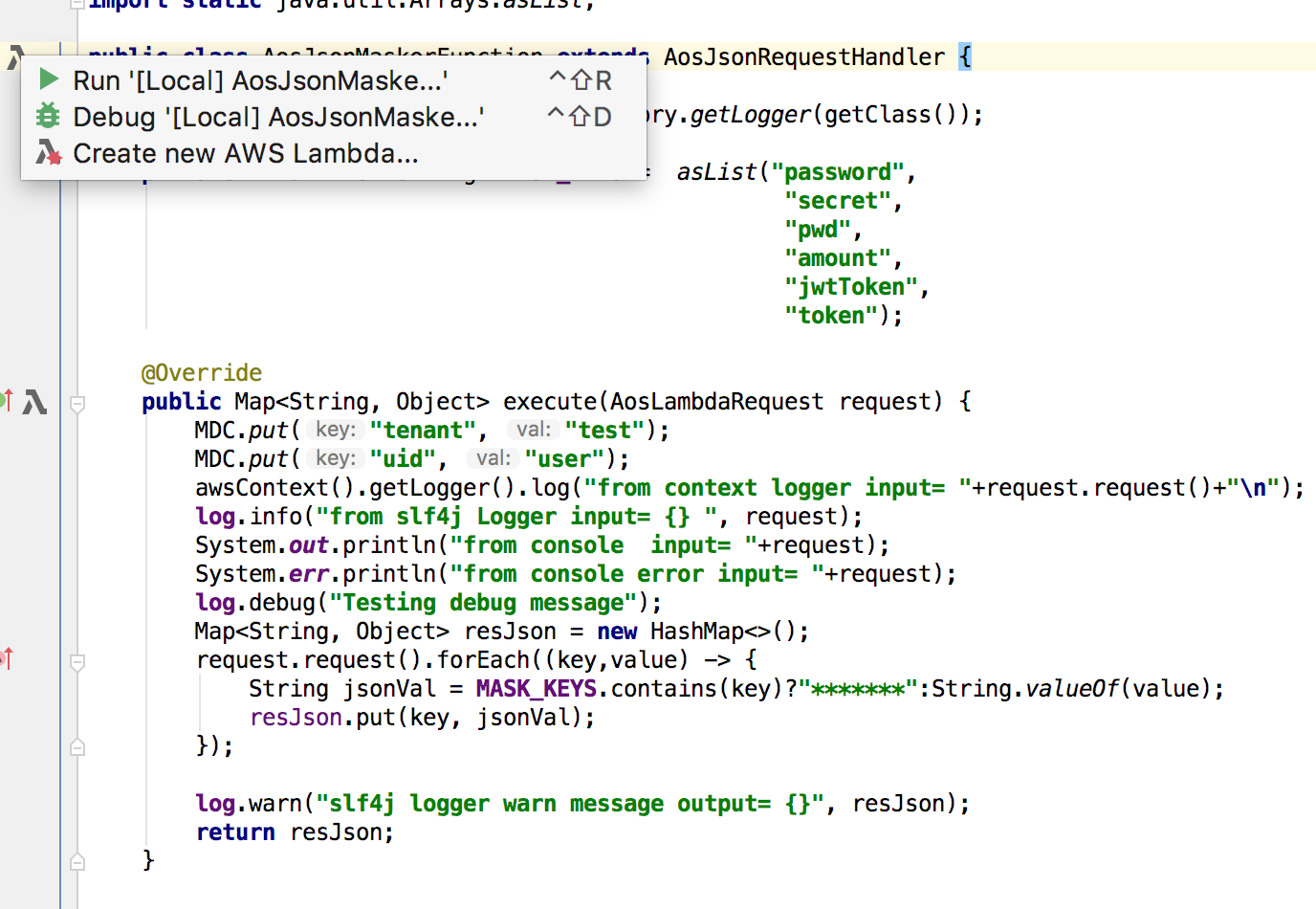

- AWS Lambda - Demo

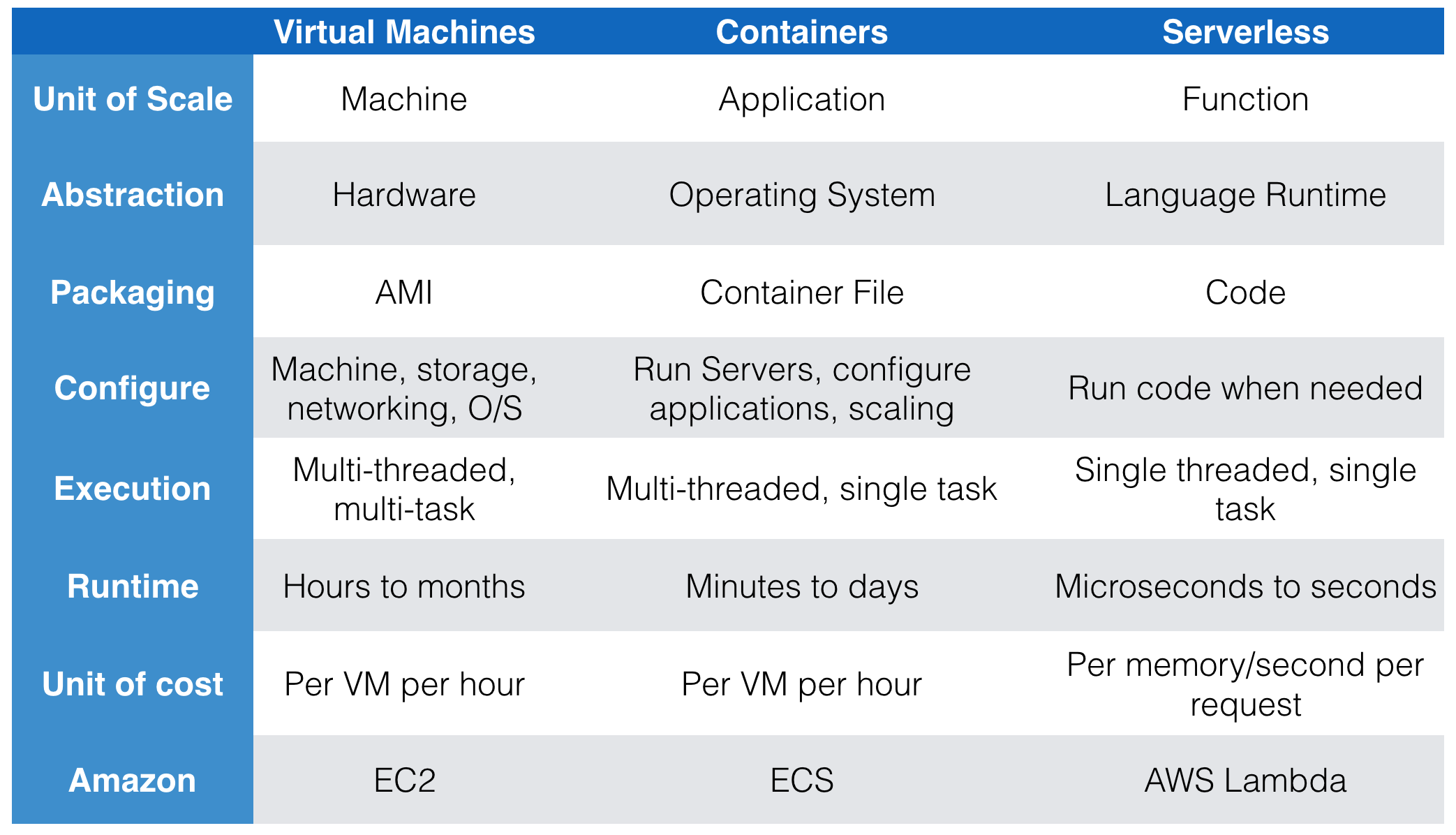

VMs, Containers, Lambdas

What is Serverless?

Application developers are no longer in control of the ‘server’ process that listens to a TCP socket, hence the name ‘serverless’.

AWS Lambda is a

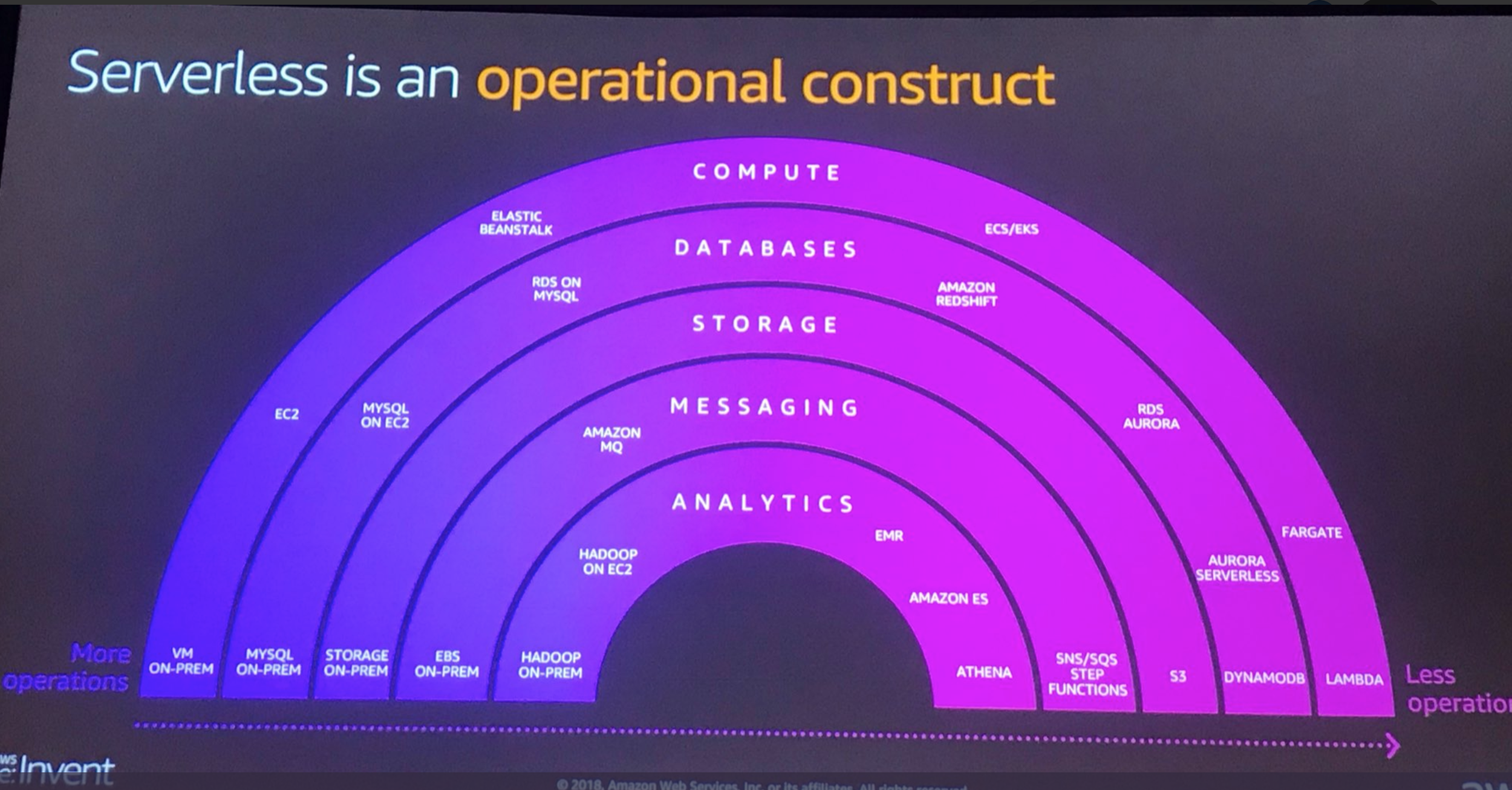

How AWS sees it

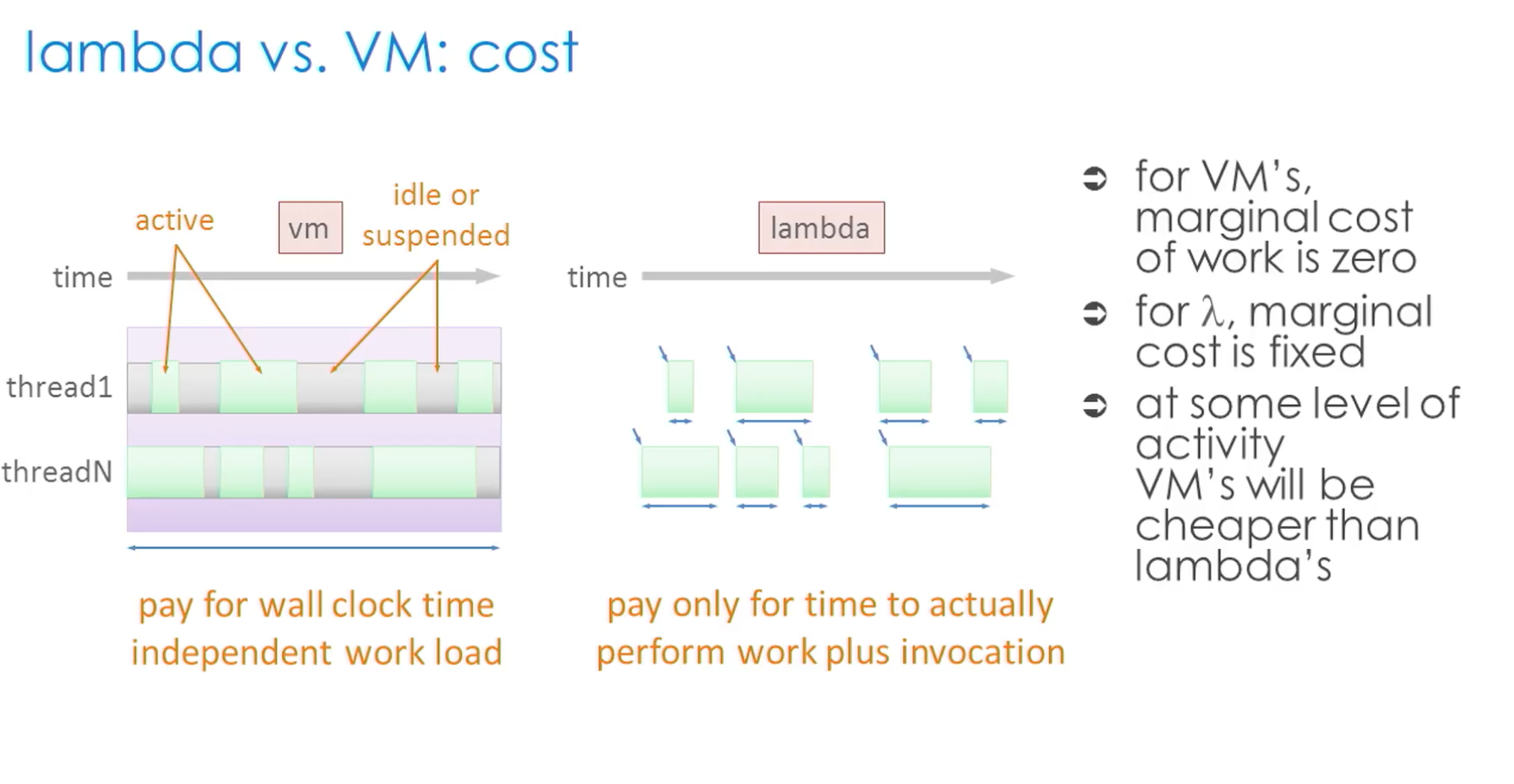

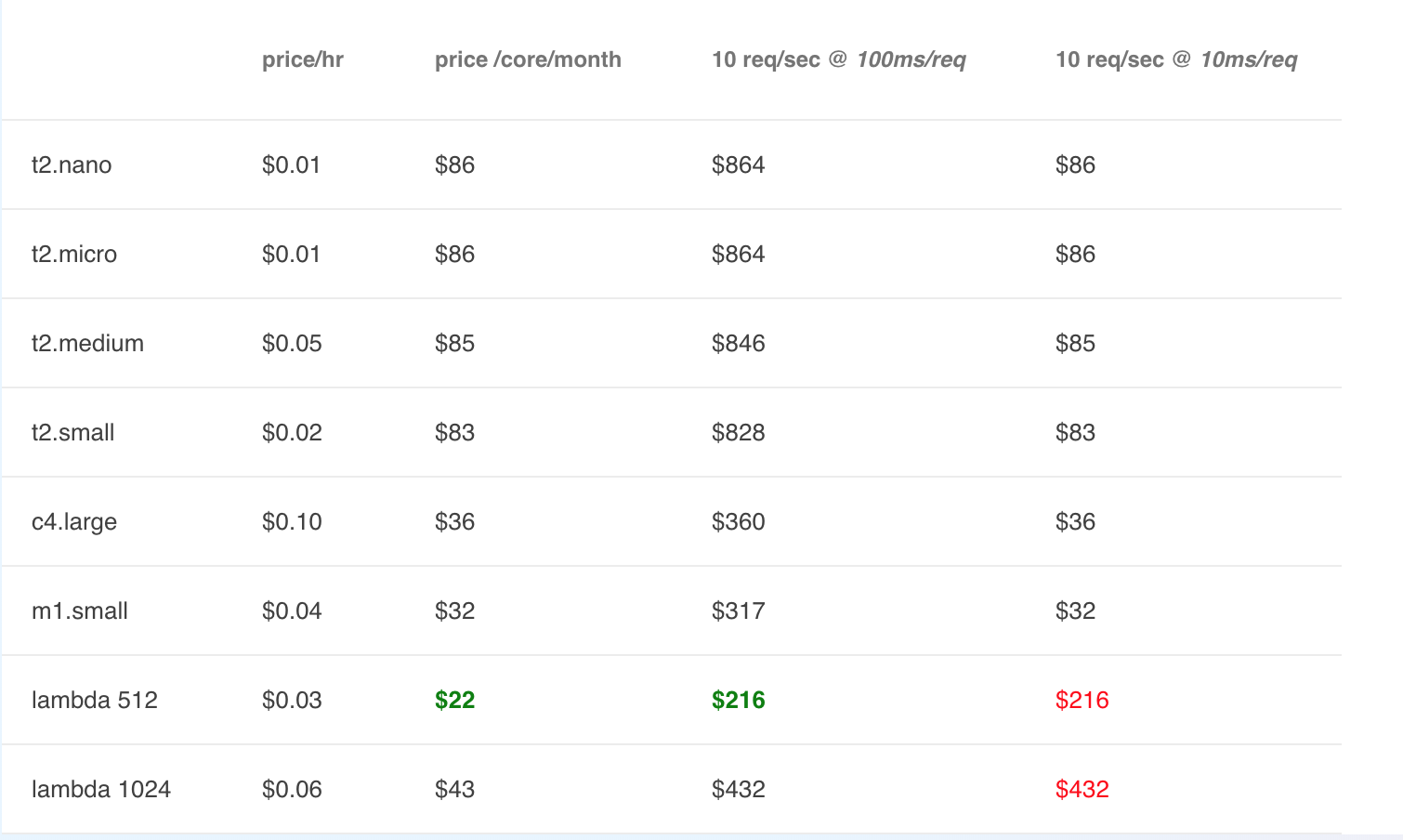

EC2 Vs Lambdas

REPORT RequestId: 17d0ab6c-498c-41be-bbc1-e28694b2e4d9 Duration: 134.46 ms Billed Duration: 200 ms Memory Size: 128 MB Max Memory Used: 8 MB

REPORT RequestId: a8629095-0f21-11e9-b23e-2d156c30c368 Duration: 1146.18 ms Billed Duration: 1200 ms Memory Size: 128 MB Max Memory Used: 63 MB
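
The two REPORT lines above carry the numbers that matter for billing: actual duration, billed duration, and memory configured versus used. As a minimal sketch (the regular expression and the `parse_report` helper are illustrative, written only against the fields visible in those two lines), they can be pulled apart like this:

```python
import re

# Matches only the fields that appear in the REPORT lines shown above.
REPORT_RE = re.compile(
    r"REPORT RequestId: (?P<request_id>\S+)\s+"
    r"Duration: (?P<duration_ms>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed_ms>\d+) ms\s+"
    r"Memory Size: (?P<memory_mb>\d+) MB\s+"
    r"Max Memory Used: (?P<max_used_mb>\d+) MB"
)


def parse_report(line):
    """Return the REPORT fields as a dict, or None if the line does not match."""
    m = REPORT_RE.search(line)
    if m is None:
        return None
    return {
        "request_id": m["request_id"],
        "duration_ms": float(m["duration_ms"]),
        "billed_ms": int(m["billed_ms"]),
        "memory_mb": int(m["memory_mb"]),
        "max_used_mb": int(m["max_used_mb"]),
    }


line = (
    "REPORT RequestId: 17d0ab6c-498c-41be-bbc1-e28694b2e4d9 "
    "Duration: 134.46 ms Billed Duration: 200 ms "
    "Memory Size: 128 MB Max Memory Used: 8 MB"
)
report = parse_report(line)
```

In the first invocation only 8 MB of the 128 MB allocation was used, and 134.46 ms of work was billed as 200 ms.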

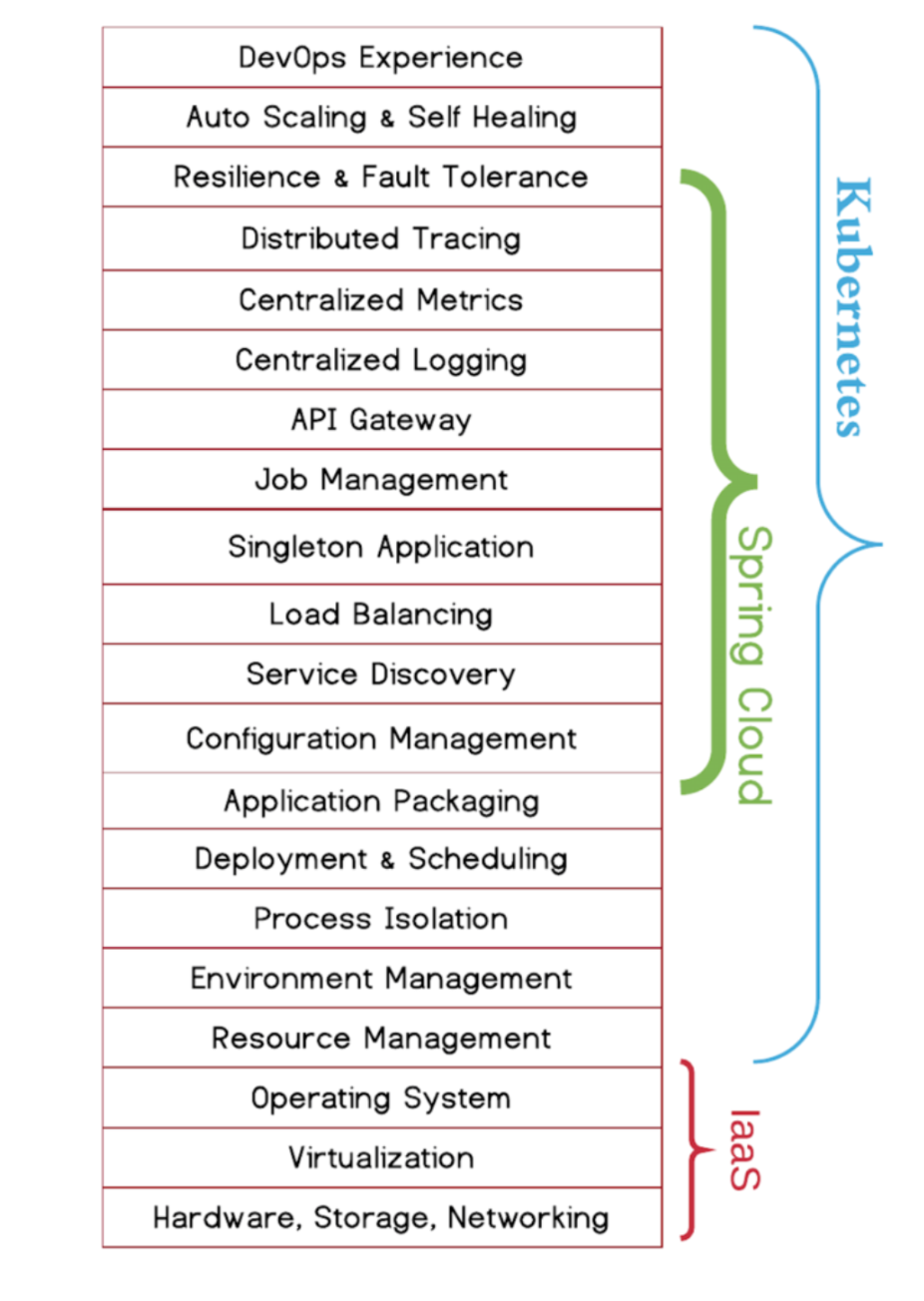

Spring Cloud - Kubernetes

A microservice is:

- Independently deployable

- Implementing a business capability

- Doing one thing, and doing it well

- Communicating over the network

Understand the sweetspots

AWS EC2 offers 2 types of instances: "burst" (t2) and "fixed" (m4, c4, etc.)

Burst or "T-class" instances are pitched for burst use cases. They're typically half the price of a "fixed performance" instance (m4, c4, etc.), but you'll only get about 20% of the sustained throughput. You also need to configure and maintain autoscaling, or you risk depleting your CPU "credits", at which point AWS throttles the instance to almost nothing.

https://www.ec2instances.info/

Serverless

- No management of server hosts or server processes

- Self auto-scaling and auto-provisioning based upon load

- Costs based on precise usage, with zero usage implying approximately zero cost

- Performance capabilities defined in terms other than host size and count

- Implicit high availability

Servers

- No vendor lock-in; running all operations on AWS Lambda risks it (the new Oracle?)

- Backup and fail-over support

- Caching, long-running processes

- No loss of control

- Tooling, no implementation limitations

P.S. Serverless doesn't mean there are no servers or machines; it means cloud companies have found an efficient way to "spin up an instance when an individual function is actually called to execute and return the results".

Amazon Web Services unveiled its ‘Lambda’ platform in late 2014, and it is now becoming more streamlined.

Putting all your eggs in one AWS basket carries risk.

Separate Lambda handler from core logic

Use Lambda for "transform", not "transport"

Read only what you need

No orchestration - avoid chaining Lambdas; use dedicated tools for this

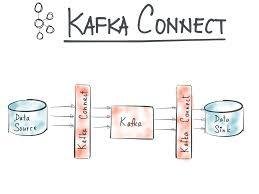

Retry/throttle - use Kafka

Avoid sleep OR threads
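
The first guideline above, separating the Lambda handler from core logic, could look roughly like the sketch below. The event shape (`records` with a `name` field) and the function names are hypothetical and chosen only for illustration; the `handler(event, context)` signature is the standard one for Python Lambda functions.

```python
import json


def to_upper_names(records):
    """Core logic: a pure function with no Lambda types, so it can be tested locally."""
    return [r["name"].upper() for r in records if "name" in r]


def handler(event, context):
    """Thin Lambda entry point: unpack the event, delegate, shape the response."""
    records = event.get("records", [])  # hypothetical event shape
    return {"statusCode": 200, "body": json.dumps(to_upper_names(records))}


# Local check without deploying anything: pass a plain dict and no context.
response = handler({"records": [{"name": "a"}, {"id": 1}]}, None)
```

Keeping the handler this thin also addresses the local-testing concern: `to_upper_names` can be unit-tested without any AWS tooling.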

EC2 - Billing

On Demand

Spot Pricing

Reserved

Dedicated.

Instance types

General Purpose

Memory Optimized

Accelerated Computing Instances

Storage Optimized

Server-full code - Spring Boot, Spring libraries

Monitoring code is missing

No local testing/manual deployment

Fault containment

Functions as future

All cloud companies are embracing it, and the trend looks unstoppable

Reducing DevOps and Maintenance Costs

The speed of development

Frugality

Security

Elasticity

Lambda Languages

A Lambda function with 512 MB of memory run for 1 hour (or, more likely, as several calls of the same function adding up to an hour of uptime) costs $0.030024, while an on-demand EC2 server with the same statistics (a t2.nano server with 0.5 GB of memory) costs $0.0059 per hour.
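
The comparison above can be worked through as simple arithmetic. This sketch uses only the two prices quoted on the slide (they may not match current AWS pricing) and ignores per-request charges and any free tier; the function names are illustrative.

```python
# Prices as quoted on the slide, in USD.
LAMBDA_COST_PER_BUSY_HOUR = 0.030024  # 512 MB function, one hour of execution time
EC2_T2_NANO_PER_HOUR = 0.0059         # on-demand t2.nano, billed whether busy or idle


def lambda_cost(busy_hours):
    """Lambda is charged only for the time the function actually runs."""
    return busy_hours * LAMBDA_COST_PER_BUSY_HOUR


def ec2_cost(wall_clock_hours):
    """EC2 is charged for every hour the instance is up."""
    return wall_clock_hours * EC2_T2_NANO_PER_HOUR


def break_even_utilization():
    """Fraction of wall-clock time the function must be busy for the costs to match."""
    return EC2_T2_NANO_PER_HOUR / LAMBDA_COST_PER_BUSY_HOUR


price_ratio = LAMBDA_COST_PER_BUSY_HOUR / EC2_T2_NANO_PER_HOUR
print(f"Lambda costs about {price_ratio:.2f}x EC2 per busy hour")
print(f"Break-even utilization: about {break_even_utilization():.1%}")
```

With these figures, Lambda is roughly five times more expensive per busy hour, so it only comes out cheaper when the function is busy less than about a fifth of the time.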

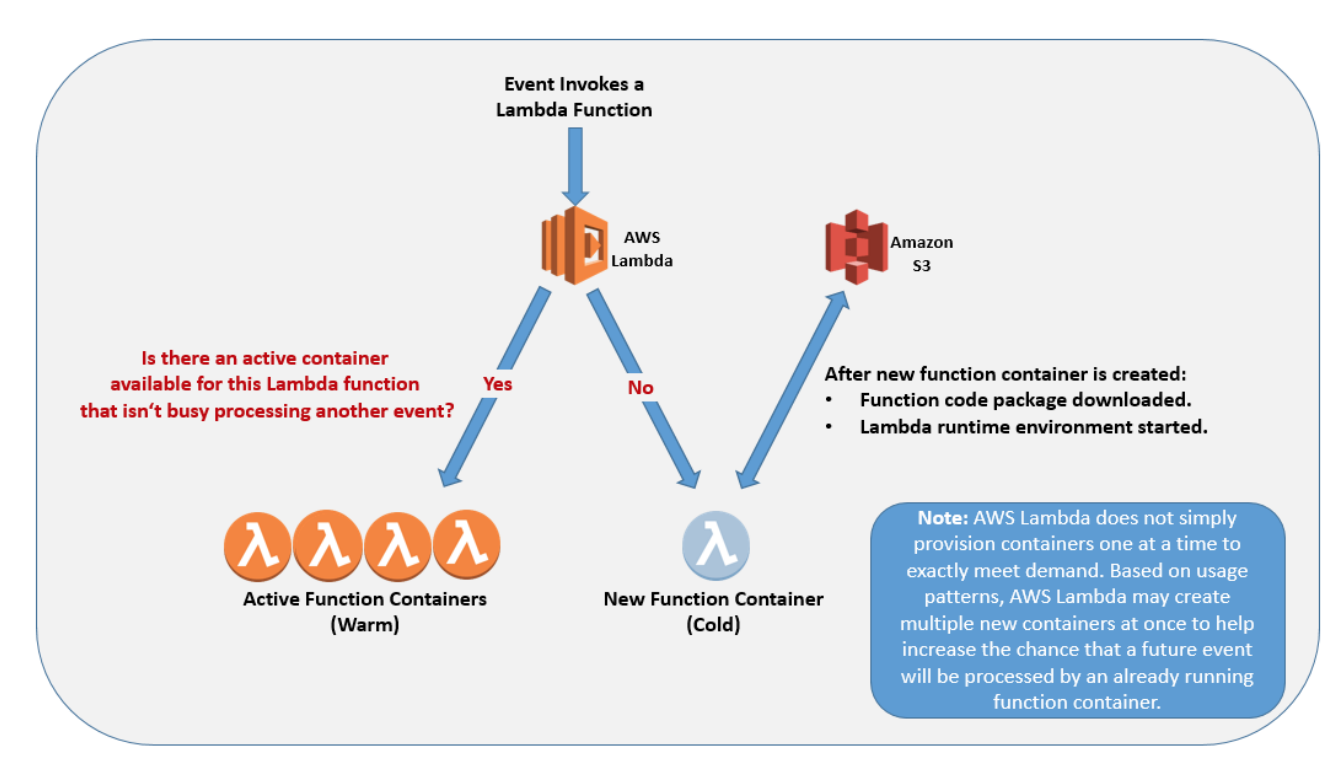

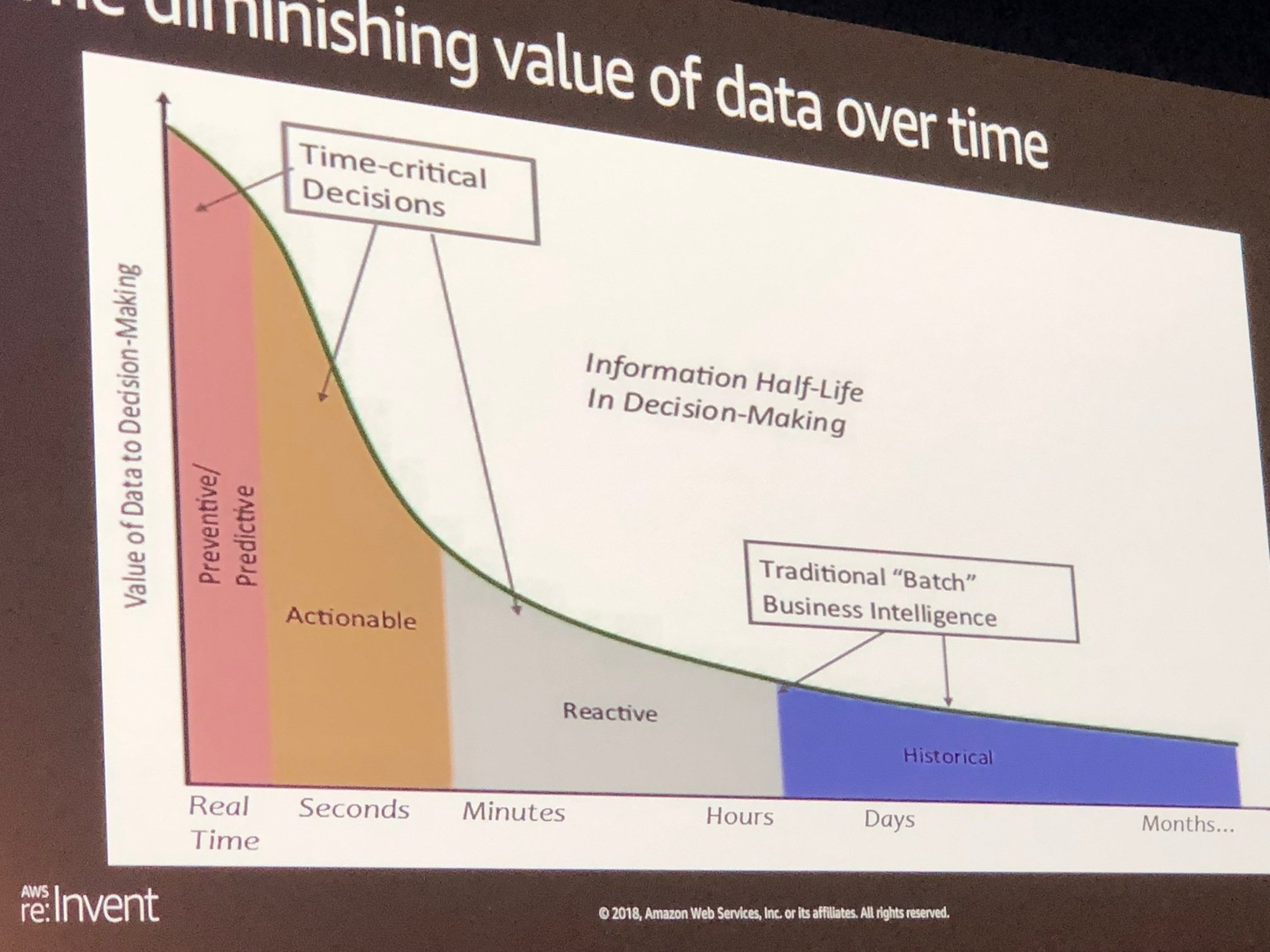

Cold Start Vs Warm start

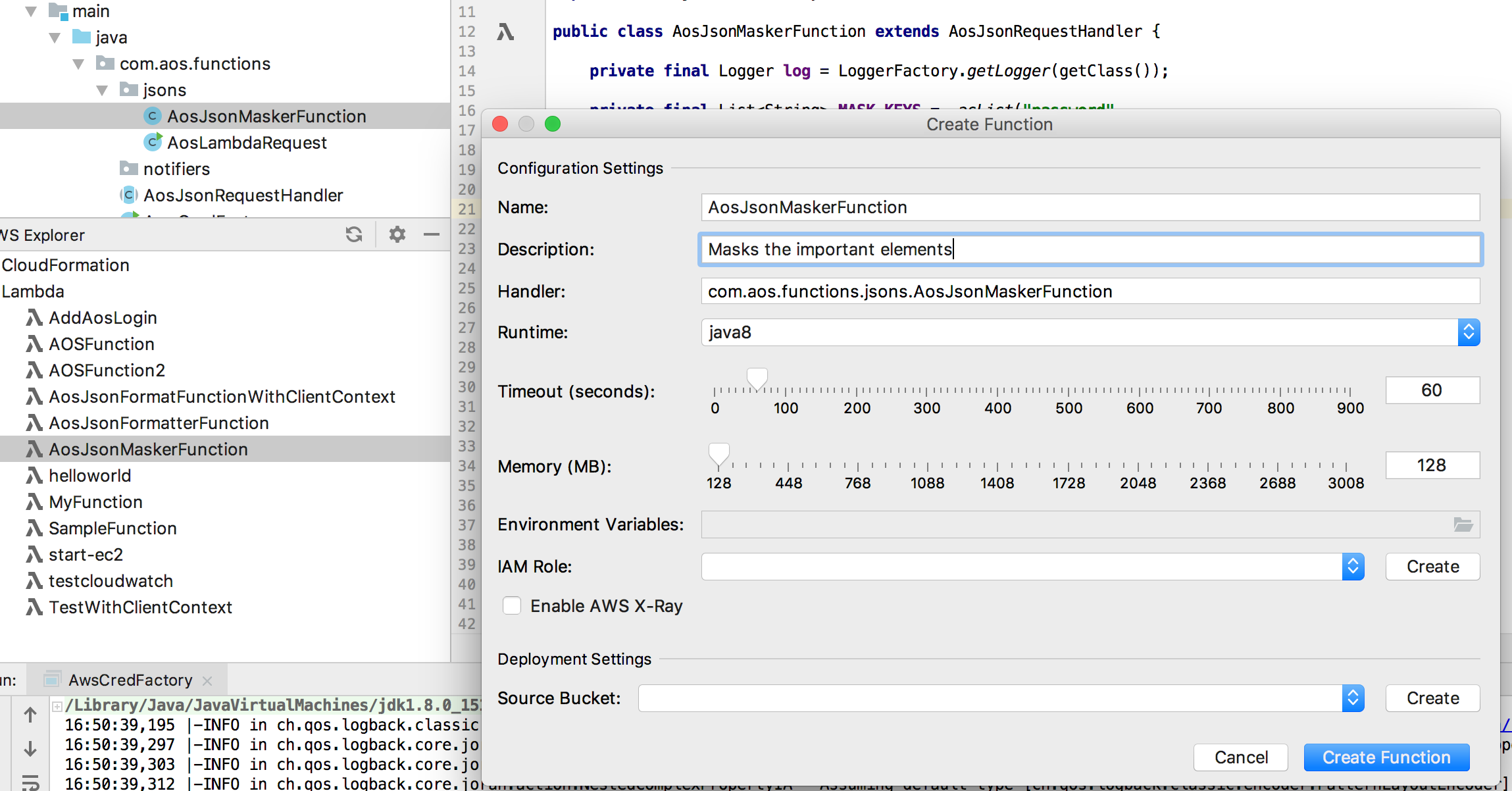

Deploying new lambda function

Updating lambda configuration

Increasing lambda concurrency

Invoking lambda functionality after a period of inactivity (cold starts happen when a function is idle for ~5 mins)
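
One practical consequence of the cold/warm distinction: code at module level runs once per execution environment (on a cold start), while the handler runs on every invocation. The sketch below simulates that locally; `_expensive_init` and the dict it returns are hypothetical stand-ins for something like a database client or loaded configuration.

```python
import time

# Counts how many times the expensive setup has run in this environment.
INIT_COUNT = 0


def _expensive_init():
    """Stand-in for slow setup work that should happen once per cold start."""
    global INIT_COUNT
    INIT_COUNT += 1
    return {"created_at": time.time()}


# Module-level: executed when the environment is created, not on every invocation.
CLIENT = _expensive_init()


def handler(event, context):
    # Warm invocations reuse CLIENT instead of paying the setup cost again.
    return {"init_count": INIT_COUNT, "client_created_at": CLIENT["created_at"]}


first = handler({}, None)
second = handler({}, None)  # same process, so this behaves like a warm start
```

Both calls report the same `init_count` and the same `client_created_at`, because the setup ran only once.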

Lambda bills in 100ms increments, so 10ms workloads probably don't make sense on Lambda
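
To see why very short workloads are a poor fit, here is a minimal sketch of rounding up to the 100 ms increment stated above. The function name and the `increment_ms` parameter are illustrative; the two durations come from the REPORT lines earlier in the deck.

```python
import math


def billed_duration_ms(duration_ms, increment_ms=100):
    """Round the actual duration up to the next billing increment."""
    return math.ceil(duration_ms / increment_ms) * increment_ms


# Durations taken from the REPORT lines earlier in the deck.
assert billed_duration_ms(134.46) == 200
assert billed_duration_ms(1146.18) == 1200

# A 10 ms workload is billed as 100 ms, i.e. ten times the time it actually used.
overhead_factor = billed_duration_ms(10) / 10
```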

If the workload is between 70ms and 300s, and you can tolerate some cold starts (up to 4s delay), then Lambda is the rational choice.

The pricing sweet spot is for workloads with memory <= 512MB and duration >= 70ms
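
The two rules of thumb above can be written down as a small checker. This is only a sketch of the slide's heuristics, not an official sizing rule: the thresholds are the ones quoted in the text, and the function names are illustrative.

```python
def lambda_is_rational_choice(duration_ms, tolerates_cold_starts):
    """Workload runs between 70 ms and 300 s and can tolerate cold starts."""
    return 70 <= duration_ms <= 300_000 and tolerates_cold_starts


def in_pricing_sweet_spot(duration_ms, memory_mb):
    """Memory <= 512 MB and duration >= 70 ms, as stated on the slide."""
    return memory_mb <= 512 and duration_ms >= 70


# Example: a 250 ms job at 256 MB that can tolerate an occasional slow start.
fits = lambda_is_rational_choice(250, True) and in_pricing_sweet_spot(250, 256)
```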

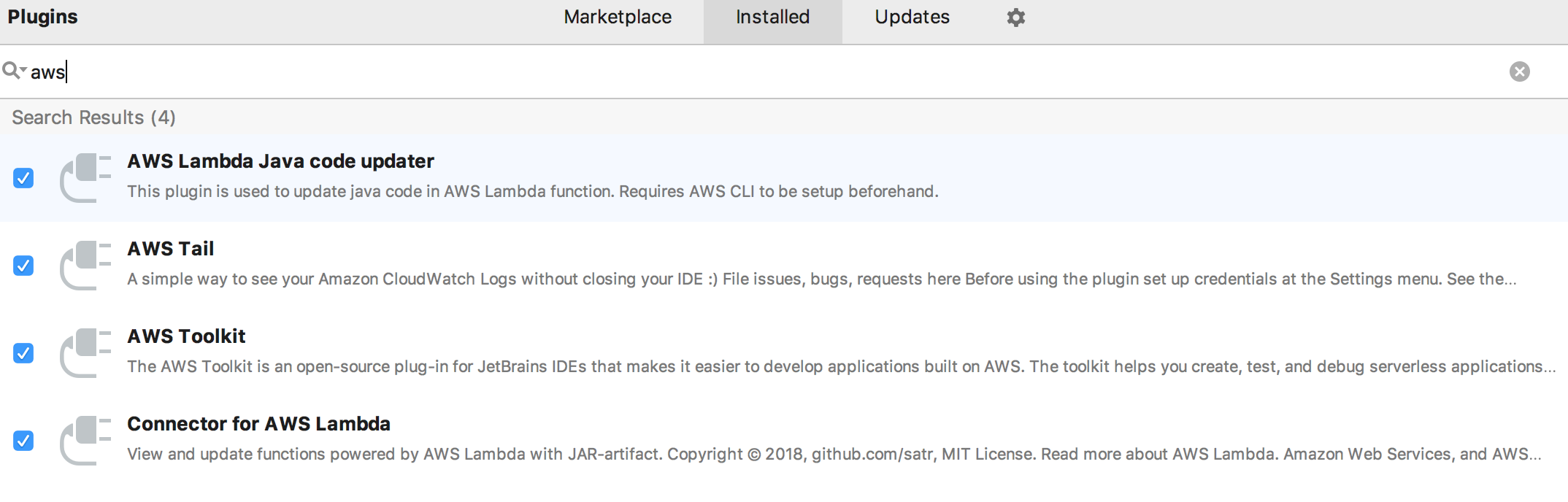

Install plugins

Dev setup (IntelliJ as an example)

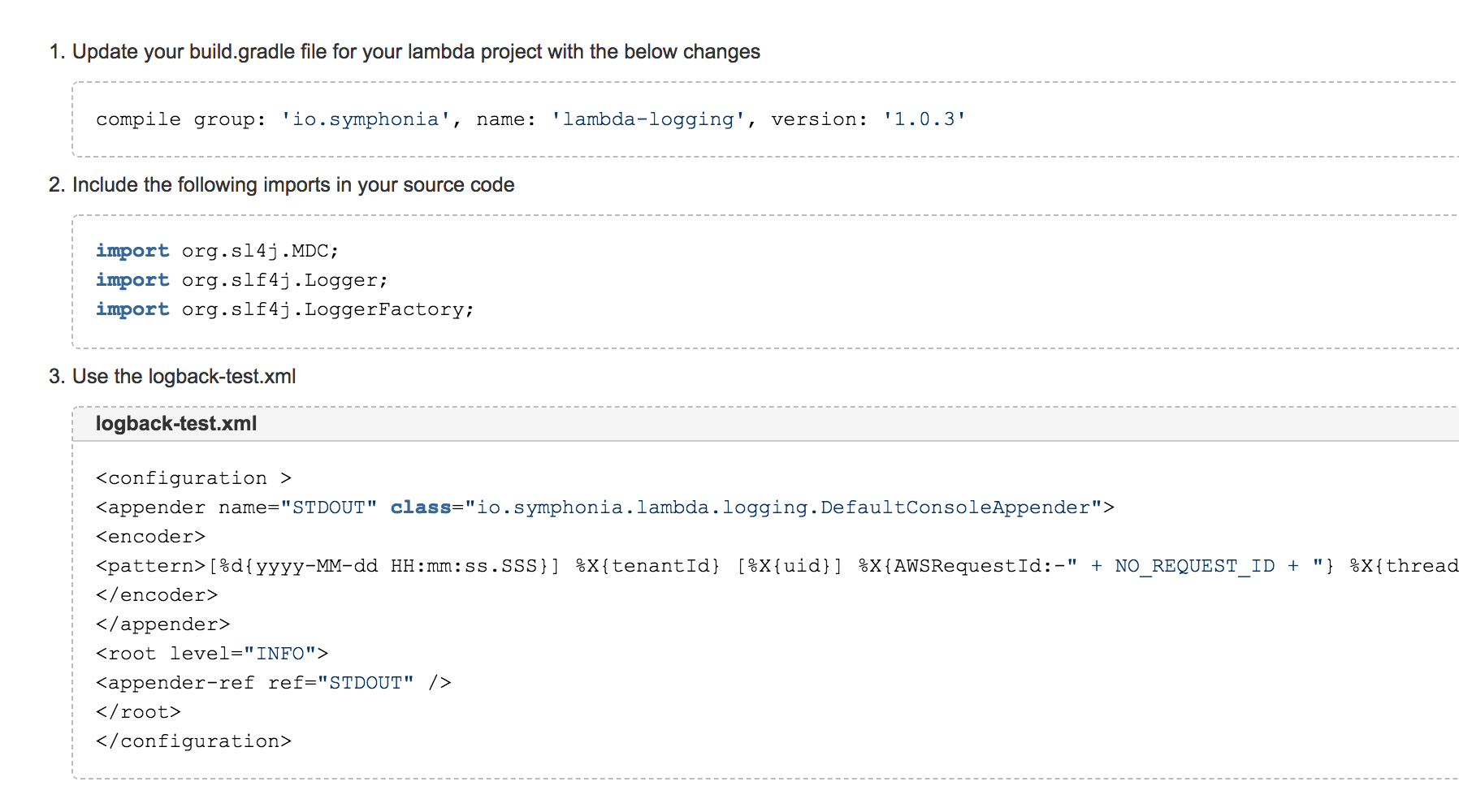

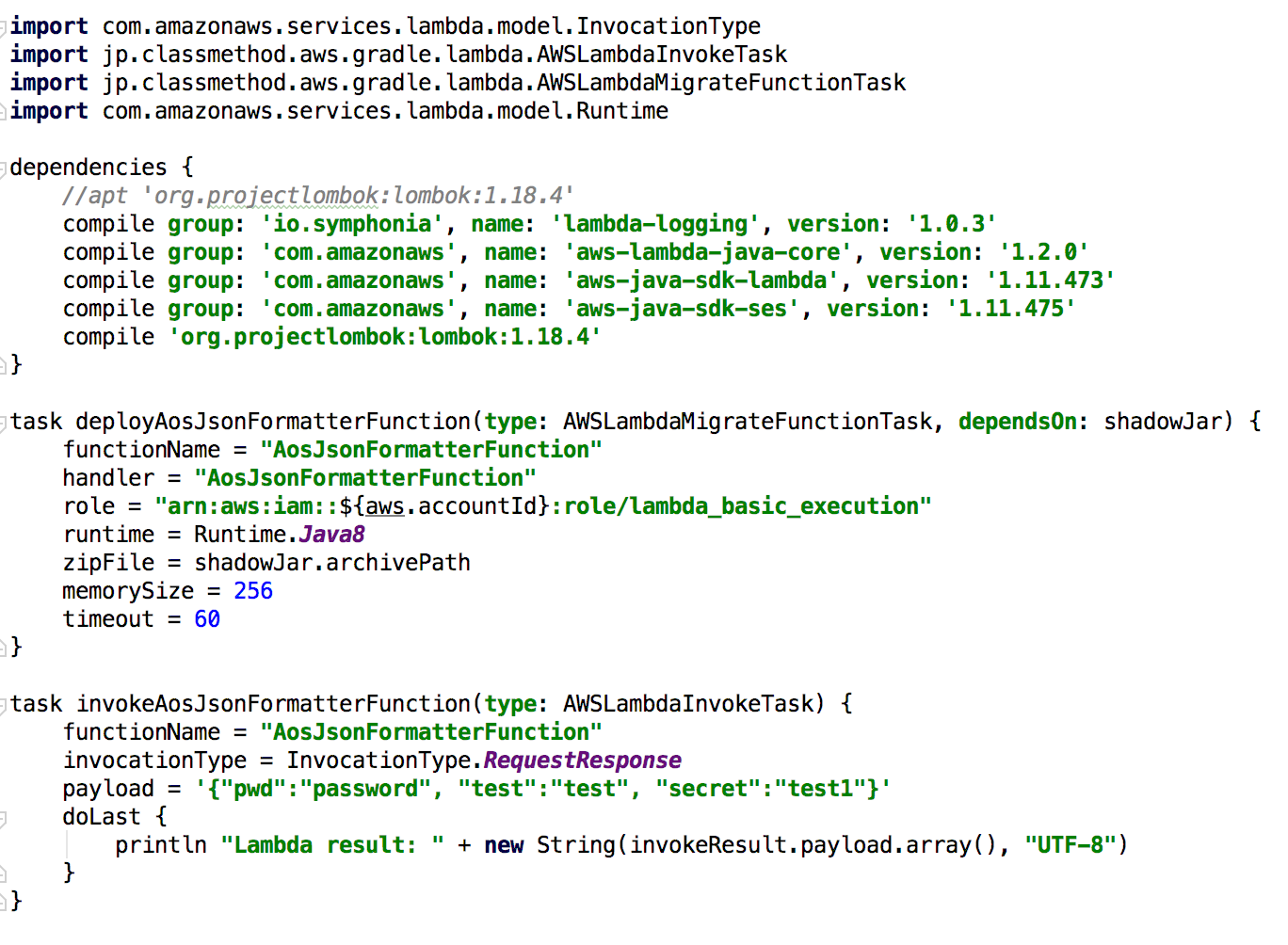

Gradle and config changes

Orchestrations

Long-lived

Data-driven

Durable

Unified Logging

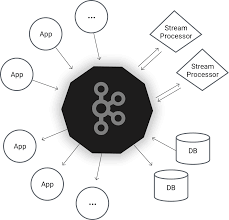

Eventing

Loosely coupled

Async

Buffered

Durable

APIs

Tightly Coupled

Contract Driven

Synchronous

Fast

Integration Patterns

Docker as the primitive: everything is a Docker image

How about Orchestration?

Docker Compose -> pods (EKS)

Docker Compose -> task definitions (ECS)

Dev effort only with docker-compose

Same orchestration on dev/testing/performance/production with different flavors (CPU, memory)

Multi-cloud with Kubernetes

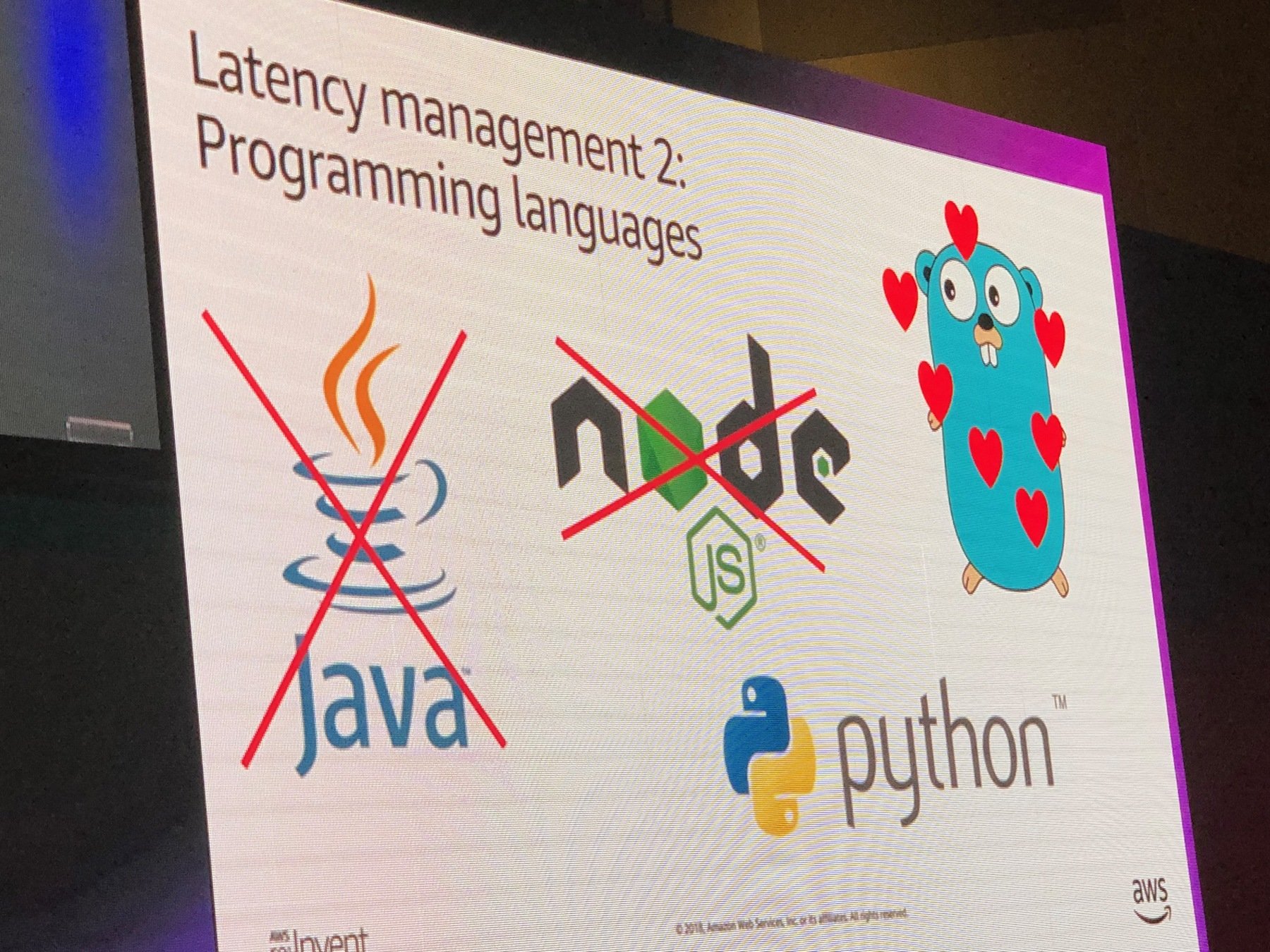

Lambda Languages

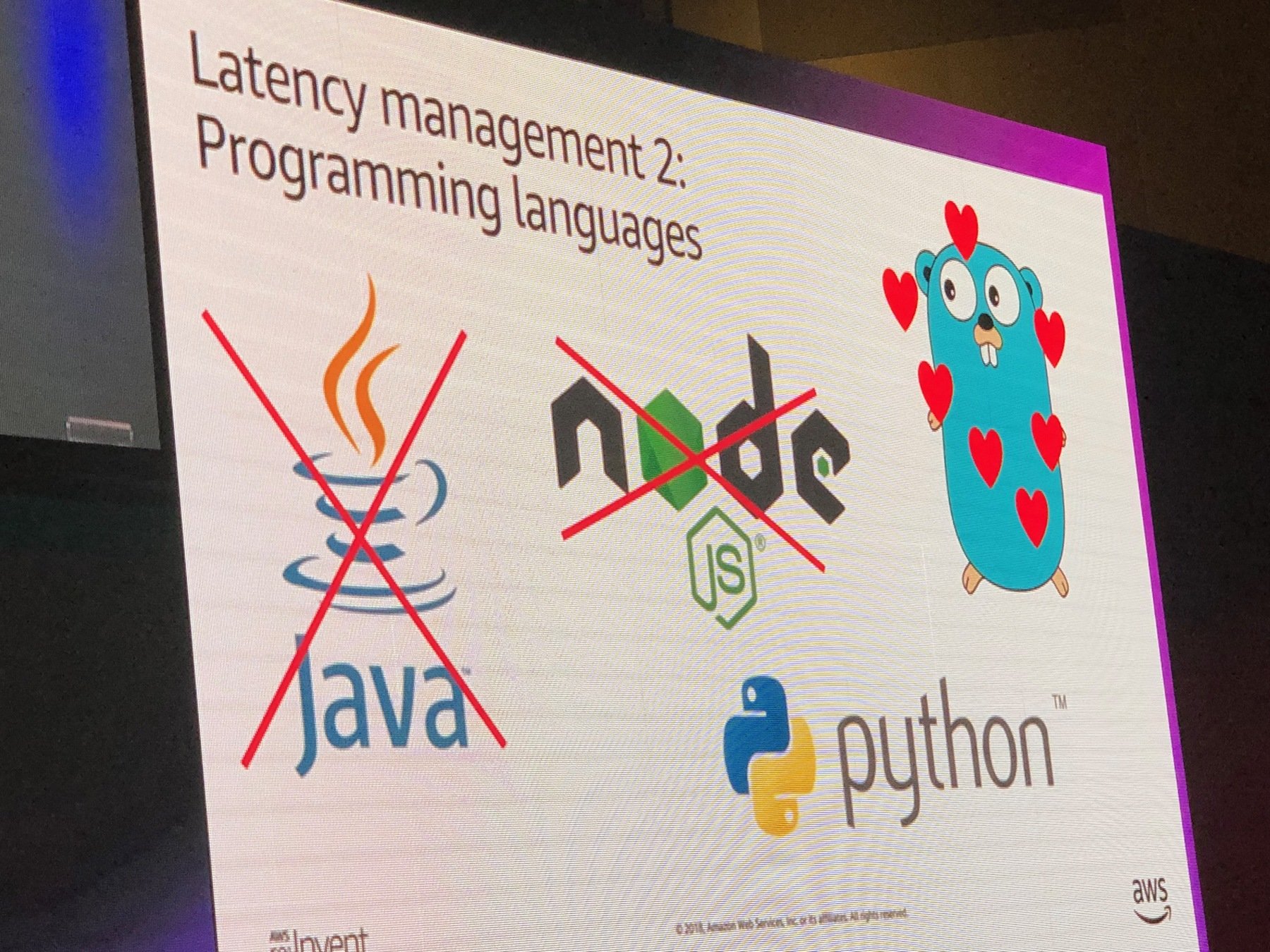

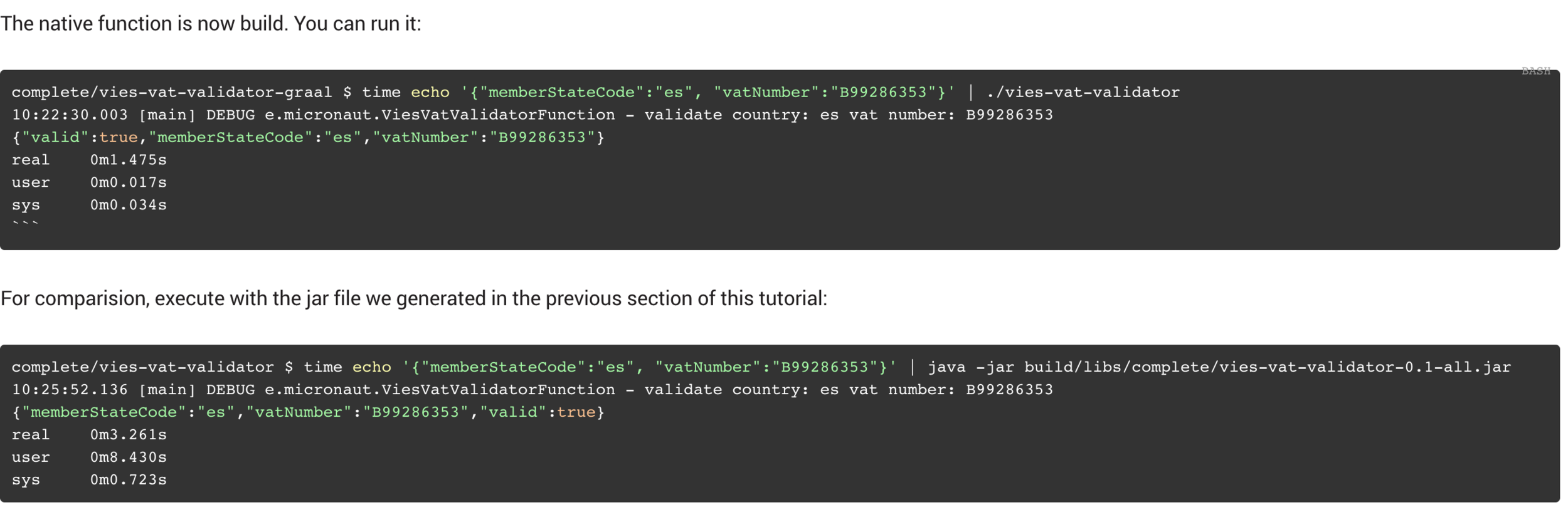

Go outperforms the other runtimes across memory limits in most cases

Java has the highest cold-start invocation time

Lambda is a silver bullet when there are many CPU-bound jobs needing < 1 GB of RAM and concurrency matters.

http://guides.micronaut.io/micronaut-function-aws-lambda/guide/index.html

Which Integration Pattern to pick?

https://docs.aws.amazon.com/lambda/latest/dg/retries-on-errors.html - Retry behaviour

https://www.ec2instances.info/?cost_duration=monthly&selected=m1.xlarge - EC2 Information