Evaluation of Key-Value Stores for Distributed Locking Purposes

Piotr Grzesik

dr hab. inż. Dariusz Mrozek

Agenda

- Why do we need distributed locks?

- Which properties are important when evaluating distributed locking mechanisms?

- Selected key-value stores

- Related works

- Simulation environment

- Implementation details for selected solutions

- Performance experiments

- Results and concluding remarks

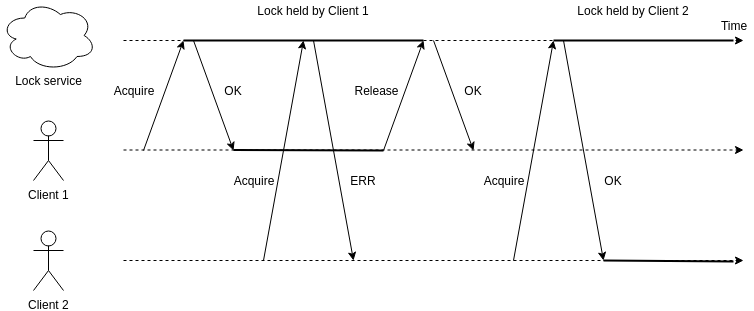

Distributed locks

Distributed locks are used to ensure that a certain resource is used by only one process at any given point in time, where that resource can be a database, an email server or a mechanical appliance.

Distributed locks

- Safety - property that satisfies the requirement of mutual exclusion, ensuring that only one client can acquire and hold the lock at any given moment

- Deadlock-free - property that ensures that, eventually, it will be possible to acquire and hold a lock, even if the client currently holding the lock becomes unavailable

- Fault tolerance - property ensuring that as long as the majority of the nodes of the underlying distributed locking mechanism are available, it is possible to acquire, hold, and release locks

Selected Key-Value stores

- etcd - open source, distributed key-value store written in Go, currently developed under Cloud Native Computing Foundation. Uses Raft as underlying consensus algorithm.

- Consul - open source, distributed key-value store written in Go, developed by Hashicorp. Uses Raft as underlying consensus algorithm.

- Zookeeper - open source, highly available coordination system, written in Java, initially developed by Yahoo, currently maintained by Apache Software Foundation. Uses the Zab atomic broadcast protocol.

- Redis - open source, in-memory key-value database that offers optional durability. It was developed by Salvatore Sanfilippo and is written in ANSI C. The Redlock algorithm is used with Redis to implement distributed locking.

Related works

- In his research, Gyu-Ho Lee compared the key write performance of Zookeeper, etcd and Consul with regard to disk bandwidth, network traffic, CPU utilization and memory usage

- Patrick Hunt, in his analysis, evaluated Zookeeper server latency under varying loads and configurations, observing that for a standalone server, adding CPU cores did not provide significant performance gains. He also observed that, in general, Zookeeper is able to handle more operations per second with a higher number of clients (around 4 times more operations per second when going from 1 to 10 clients)

Related works

The liveness and safety properties of Raft were presented in the paper by Diego Ongaro, creator of the Raft consensus algorithm. The same properties of Zab, the algorithm underpinning Zookeeper, were presented by Junqueira, Reed and Serafini.

The Redlock algorithm was developed and described by Salvatore Sanfilippo. Its safety property was later disputed by Martin Kleppmann, who argued in his article that under certain conditions the safety property of Redlock might not hold.

Kyle Kingsbury in his works evaluated etcd, Consul and Zookeeper with Jepsen, a framework for distributed system verification. During that process, it was revealed that Consul and etcd experienced stale reads. This research prompted the maintainers of both etcd and Consul to provide mechanisms that enforce consistent reads.

Simulation environment

-

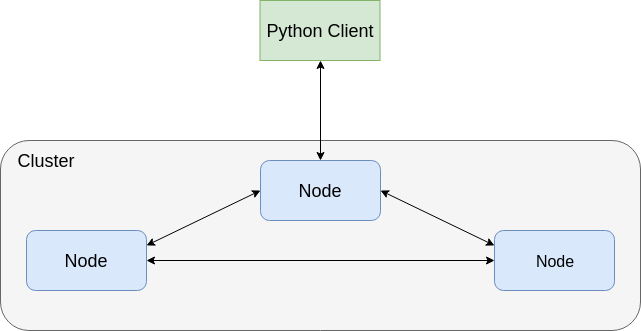

Infrastructure deployed in Amazon Web Services cloud offering

-

All solutions were developed as 3-node clusters, with one additional machine to act as client process

-

Each cluster node was deployed in different availability zone in the same geographical region, eu-central-1, to ensure fault tolerance of a single availability zone, while maintaining latency between instances in sub-milliseconds range

-

Client application used for simulations was developed in Python 3.7, that was able to acquire lock, simulate a short computation and release the lock and measure the time taken to acquire lock
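The acquire, compute, release, measure cycle of the client can be sketched roughly as follows. This is a minimal sketch, not the client used in the experiments: `timed_lock_cycle` and `run_client` are hypothetical names, and `threading.Lock` is only a local stand-in for a distributed lock object that exposes `acquire()` and `release()`.

```python
import threading
import time


def timed_lock_cycle(lock, work_seconds=0.001):
    """Acquire the lock, simulate a short computation, release the lock,
    and return the time spent acquiring it, in milliseconds."""
    start = time.perf_counter()
    lock.acquire()
    waited_ms = (time.perf_counter() - start) * 1000.0
    try:
        time.sleep(work_seconds)  # stand-in for the short computation
    finally:
        lock.release()
    return waited_ms


def run_client(lock, iterations, work_seconds=0.001):
    """Run several lock cycles and collect the acquire times."""
    return [timed_lock_cycle(lock, work_seconds) for _ in range(iterations)]


# Local stand-in: threading.Lock has the same acquire()/release() shape.
samples = run_client(threading.Lock(), iterations=5)
```

Only the wait before `acquire()` returns is timed, so the simulated computation does not inflate the measured acquire time.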

Consul, etcd and Zookeeper configuration

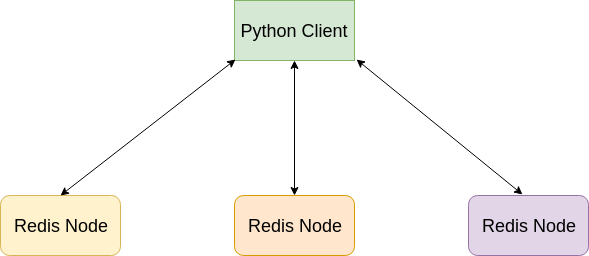

Redis with Redlock configuration

etcd implementation details

- etcd in version 3.3.9 was used, compiled with Go version 1.10.3

- Cluster was configured to ensure sequential consistency model, to satisfy safety property

- Client application was developed to use python-etcd3 library, which implements locks using the atomic compare-and-swap mechanism

- To ensure deadlock-free property, TTL for a lock is set, which releases the lock if the lock-holding client crashes or is network partitioned away

- Underlying Raft consensus algorithm ensures that fault tolerance property is satisfied
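How compare-and-swap and a TTL combine into a lock can be illustrated without an etcd cluster. The sketch below is an in-memory stand-in, not the python-etcd3 implementation: `TinyKV`, `put_if_absent` and `delete_if_equals` are hypothetical names, and the clock is injected so that expiry can be shown deterministically.

```python
import time


class TinyKV:
    """In-memory stand-in for a key-value store with per-key expiry."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def _live_value(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # TTL elapsed: the key disappears
            return None
        return value

    def put_if_absent(self, key, value, ttl):
        """Compare-and-swap: write only if the key does not exist."""
        if self._live_value(key) is not None:
            return False
        self._data[key] = (value, self._clock() + ttl)
        return True

    def delete_if_equals(self, key, value):
        """Compare-and-swap: delete only if the caller still owns the key."""
        if self._live_value(key) != value:
            return False
        del self._data[key]
        return True


now = [0.0]  # manual clock for the demonstration
kv = TinyKV(clock=lambda: now[0])
first = kv.put_if_absent("lock/a", "client-1", ttl=10)   # lock is free
second = kv.put_if_absent("lock/a", "client-2", ttl=10)  # lock is held
now[0] = 11.0  # client-1 never released; its TTL elapses
third = kv.put_if_absent("lock/a", "client-2", ttl=10)
```

The conditional write gives mutual exclusion, and the expiry is what lets a second client make progress after the first one disappears.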

Consul implementation details

- Consul in version 1.4.0 was used, compiled with Go version 1.11.1

- Cluster was configured with "consistent" consistency mode, to satisfy safety property

- Client application was developed to use Python consul-lock library, which implements locks by using sessions mechanism with the check-and-set operation

- According to the Consul documentation, depending on the selected health-checking mechanism of sessions, either safety or liveness property might be sacrificed. Selected implementation uses only session TTL health check, which guarantees that both properties hold

- TTL is applied at the session level and session timeout either deletes or releases all locks related to the session

- Underlying Raft consensus algorithm ensures that fault tolerance property is satisfied
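The session-level TTL described above differs from a per-lock TTL in that one expiry frees every lock the session holds. The following in-memory sketch illustrates that behaviour only; it does not use the Consul API or the consul-lock library, and `SessionStore` with its methods and the manual `now` clock are hypothetical.

```python
class SessionStore:
    """In-memory stand-in: locks belong to sessions, sessions have a TTL."""

    def __init__(self):
        self.now = 0.0        # manual clock, advanced by the caller
        self._sessions = {}   # session id -> expiry time
        self._locks = {}      # lock key -> owning session id

    def create_session(self, session_id, ttl):
        self._sessions[session_id] = self.now + ttl

    def _expire_sessions(self):
        dead = {s for s, t in self._sessions.items() if self.now >= t}
        for s in dead:
            del self._sessions[s]
        # Release every lock held by an expired session.
        self._locks = {k: s for k, s in self._locks.items() if s not in dead}

    def acquire(self, key, session_id):
        """Check-and-set: take the key only if no live session owns it."""
        self._expire_sessions()
        if session_id not in self._sessions:
            return False
        return self._locks.setdefault(key, session_id) == session_id

    def release(self, key, session_id):
        self._expire_sessions()
        if self._locks.get(key) != session_id:
            return False
        del self._locks[key]
        return True


store = SessionStore()
store.create_session("s1", ttl=15)
held_a = store.acquire("lock/a", "s1")
held_b = store.acquire("lock/b", "s1")
store.now = 10.0
store.create_session("s2", ttl=15)
blocked = store.acquire("lock/a", "s2")   # s1 is still alive
store.now = 20.0                           # s1 timed out, s2 has not
took_a = store.acquire("lock/a", "s2")
took_b = store.acquire("lock/b", "s2")
```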

Zookeeper implementation details

- Zookeeper in version 3.4.13 was used, running on Java 1.7, with OpenJDK Runtime Environment

- Client application was developed to use Python kazoo library, which offers a lock recipe, taking advantage of Zookeeper's ephemeral and sequential znodes

- On lock acquire, a client creates a znode with the ephemeral and sequential flags and checks whether its sequence number is the lowest. If it is, the client acquires the lock; otherwise it can watch the znode and be notified when the lock is released

- Usage of ephemeral nodes ensures deadlock-free property

- Underlying Zab algorithm ensures that fault tolerance and safety properties are satisfied
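The "lowest sequence number wins" rule of the recipe can be sketched in memory. This is not kazoo code and it does not talk to Zookeeper: `LockQueue` and its methods are hypothetical, and watching the next-lower node in `predecessor` is an assumption taken from the commonly described form of the recipe.

```python
import itertools


class LockQueue:
    """In-memory stand-in for the children of one lock znode."""

    def __init__(self):
        self._counter = itertools.count()
        self._nodes = {}  # sequence number -> client id

    def enqueue(self, client_id):
        """Mimic creating an ephemeral, sequential node; return its number."""
        seq = next(self._counter)
        self._nodes[seq] = client_id
        return seq

    def holds_lock(self, seq):
        """The node with the lowest sequence number holds the lock."""
        return seq in self._nodes and seq == min(self._nodes)

    def predecessor(self, seq):
        """Node a waiting client would watch (assumed: the next-lower one)."""
        lower = [s for s in self._nodes if s < seq]
        return max(lower) if lower else None

    def remove(self, seq):
        """Explicit release, or session loss deleting the ephemeral node."""
        self._nodes.pop(seq, None)


queue = LockQueue()
a = queue.enqueue("client-1")
b = queue.enqueue("client-2")
a_holds = queue.holds_lock(a)
b_waits_on = queue.predecessor(b)
queue.remove(a)  # client-1 releases, or its session ends
b_holds = queue.holds_lock(b)
```

Because the node is removed both on release and on session loss, a crashed holder cannot block the queue, which is the deadlock-free argument made above.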

Redis with Redlock implementation details

- Redis in version 5.0.3 was used

- Client application was developed to use Python redlock library

- Redlock algorithm works by trying to sequentially acquire lock on all independent Redis instances

- Lock is acquired if it was successfully acquired on majority of the nodes

- In most cases, the majority requirement ensures safety property

- In specific circumstances, such as those described by Martin Kleppmann, safety property can be lost

- Deadlock-free property is satisfied by using lock timeouts

- Fault tolerance is satisfied: a majority-based scheme tolerates up to ⌈N/2⌉ - 1 failures of independent Redis nodes
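The majority rule can be sketched with in-memory stand-ins for the independent instances. This is a simplified illustration, not the Python redlock library and not the full Redlock algorithm: lock timeouts and validity-time checks are deliberately left out, and `FakeInstance` and `acquire_majority` are hypothetical names.

```python
class FakeInstance:
    """In-memory stand-in for one independent Redis instance."""

    def __init__(self, available=True):
        self.available = available
        self.keys = {}

    def set_if_absent(self, key, token):
        if not self.available or key in self.keys:
            return False
        self.keys[key] = token
        return True

    def delete_if_owner(self, key, token):
        if self.available and self.keys.get(key) == token:
            del self.keys[key]


def acquire_majority(instances, key, token):
    """Try each instance in turn; succeed only with a strict majority."""
    quorum = len(instances) // 2 + 1
    granted = sum(inst.set_if_absent(key, token) for inst in instances)
    if granted >= quorum:
        return True
    # Not enough instances agreed: undo the partial acquisition.
    for inst in instances:
        inst.delete_if_owner(key, token)
    return False


# One of three instances down: 2 of 3 is still a majority.
nodes = [FakeInstance(), FakeInstance(), FakeInstance(available=False)]
got_first = acquire_majority(nodes, "lock/a", "token-1")
got_second = acquire_majority(nodes, "lock/a", "token-2")

# Two of three instances down: no majority is reachable.
degraded = [FakeInstance(), FakeInstance(False), FakeInstance(False)]
got_degraded = acquire_majority(degraded, "lock/a", "token-3")
```

With 3 instances the quorum is 2, so one failed instance is tolerated and two are not.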

Performance experiments

- Simulation for a workload where all processes try to acquire different lock keys

- Simulation for a workload where all processes try to acquire the same lock key

- Both simulations were performed for 1, 3 and 5 clients
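The two workloads differ only in which key each client contends for. One way to enumerate the tested combinations is sketched below; the key names and the helpers `lock_key` and `experiment_matrix` are hypothetical and only illustrate the setup described above.

```python
CLIENT_COUNTS = (1, 3, 5)
WORKLOADS = ("different", "same")


def lock_key(workload, client_index):
    """Pick the lock key a client contends for under a given workload."""
    if workload == "different":
        return "lock-{}".format(client_index)  # one key per client
    if workload == "same":
        return "lock-shared"                   # every client shares one key
    raise ValueError("unknown workload: {}".format(workload))


def experiment_matrix():
    """Yield (workload, client_count, keys) for every tested combination."""
    for workload in WORKLOADS:
        for count in CLIENT_COUNTS:
            keys = [lock_key(workload, i) for i in range(count)]
            yield workload, count, keys


matrix = list(experiment_matrix())
```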

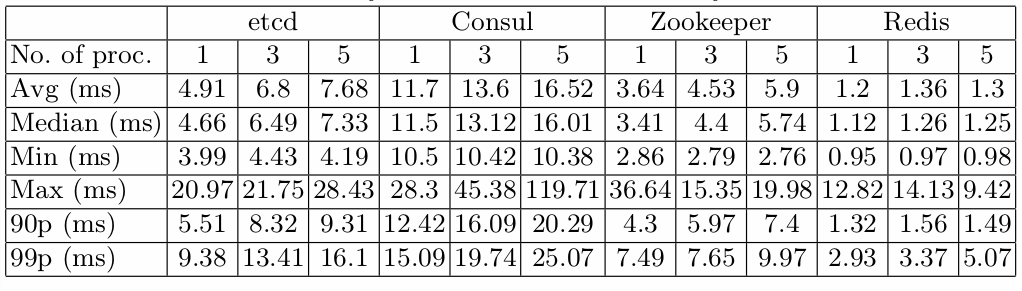

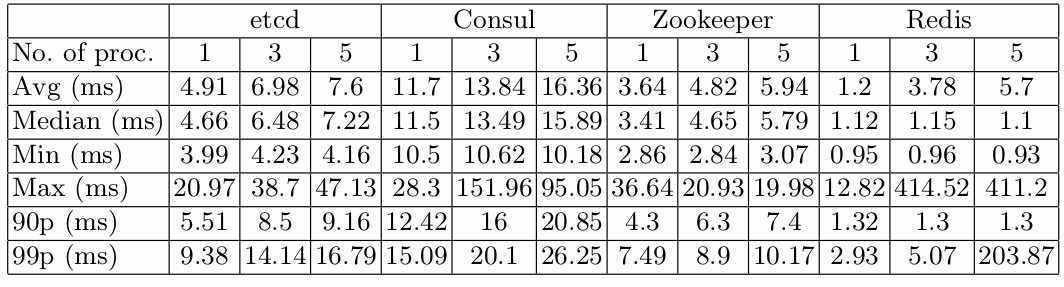

Summary of results for different keys simulation

Summary of results for same keys simulation

Results summary

- Zookeeper, Consul and etcd show very little difference in performance between the two workloads

- Redis offers stable and the best performance when clients access different lock keys, but becomes unstable for the workload in which multiple clients contend for the same key: the 99th percentile of lock acquire time for 5 processes is as high as 203.87 ms, over 10 times more than for the other tested solutions

- Considering both workloads, the best-performing solution is Zookeeper, which in the worst-case scenario (5 processes, different lock keys) offers an average lock time of 5.94 ms, with a maximum of 19.98 ms, a 90th percentile of 7.4 ms and a 99th percentile of 10.17 ms

- If the upper bound on lock time is not essential for a given application, then Redis is the best-performing solution, offering a 90th percentile of lock acquire time of 1.3 ms for 5 concurrent processes
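The reported statistics (average, maximum, 90th and 99th percentile) can be derived from a list of measured acquire times along these lines. The nearest-rank percentile definition is an assumption, since the slides do not state which definition was used, and `percentile` and `summarize` are hypothetical helper names.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile for 0 < p <= 100 (assumed definition)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100.0)
    return ordered[max(rank, 1) - 1]


def summarize(samples):
    """Summarize lock acquire times given in milliseconds."""
    return {
        "avg": sum(samples) / len(samples),
        "max": max(samples),
        "p90": percentile(samples, 90),
        "p99": percentile(samples, 99),
    }


# Synthetic input for illustration only, not measured data.
summary = summarize([float(ms) for ms in range(1, 101)])
```

A high 99th percentile next to a low 90th percentile, as reported for Redis under same-key contention, points to a small number of very slow acquisitions rather than uniformly slow ones.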

Bibliography

- https://www.consul.io/docs/index.html

- https://etcd.readthedocs.io/en/latest/

- Hunt, P.: Zookeeper service latencies under various loads and configurations, https://cwiki.apache.org/confluence/display/ZOOKEEPER/ServiceLatencyOverview

- Junqueira, F., Reed, B., Hunt, P., Konar, M.: Zookeeper: Wait-free coordination for internet-scale systems. In: Proceedings of the 2010 USENIX conference on USENIX Annual Technical Conference (June 2010)

- Junqueira, F., Reed, B., Serafini, M.: Zab: High-performance broadcast for primary-backup systems. In: IEEE/IFIP 41st International Conference on Dependable Systems and Networks (DSN). pp. 245–256 (June 2011)

- Kingsbury, K.: Jepsen: etcd and Consul (accessed on January 9th, 2019), https://aphyr.com/posts/316-call-me-maybe-etcd-and-consul

Bibliography

- Kingsbury, K.: Jepsen: Zookeeper, https://aphyr.com/posts/291-call-me-maybe-zookeeper

- Kleppmann, M.: How to do distributed locking, http://martin.kleppmann.com/2016/02/08/how-to-do-distributed-locking.html

- Lee, G.H.: Exploring performance of etcd, zookeeper and consul consistent key-value datastores, https://coreos.com/blog/performance-of-etcd.html

- Ongaro, D., Ousterhout, J.: In search of an understandable consensus algorithm. In: Proceedings of the 2014 USENIX conference on USENIX Annual Technical Conference. pp. 305–320 (June 2014)

- Distributed locks with Redis, https://redis.io/topics/distlock