Lesson 15:

Proximal Policy Optimization

Key concepts

The main idea of Proximal Policy Optimization (PPO) is to avoid policy updates that are too large.

The problem lies in choosing the step size of the policy update:

- Too small: training progresses too slowly;

- Too large: training becomes unstable, with high variance between updates.

The ordinary policy-gradient objective is

L(\theta) = E_{t}[\log \pi_{\theta}(a_{t} | s_{t}) A_{t}]

where:

- L(\theta) is the policy loss;

- \log \pi_{\theta}(a_{t} | s_{t}) is the log-probability of taking action a_{t} in state s_{t};

- A_{t} is the advantage: if A_{t} > 0, the action is better, on average, than the other actions available in that state.
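As a rough illustration of the policy-gradient objective above, the sketch below evaluates it with NumPy on a tiny batch. The helper name `policy_gradient_objective` and the sample numbers are assumptions made for this example; they are not part of the lesson code.

```python
import numpy as np

def policy_gradient_objective(action_probs, advantages):
    """Mean of log pi(a_t | s_t) * A_t over a batch (hypothetical helper)."""
    action_probs = np.asarray(action_probs, dtype=np.float64)
    advantages = np.asarray(advantages, dtype=np.float64)
    return float(np.mean(np.log(action_probs) * advantages))

# Probabilities the policy assigned to the actions actually taken (made-up values).
probs = [0.5, 0.25]
adv = [1.0, -2.0]
objective = policy_gradient_objective(probs, adv)

# Raising the probability of the positive-advantage action raises the objective.
better = policy_gradient_objective([0.6, 0.25], adv)
```

Nothing in this objective limits how far a single update can move the probabilities, which is the step-size problem that PPO addresses.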

Clipped surrogate objective function

r_{t}(\theta) denotes the probability ratio between the new (current) policy and the old policy:

r_{t}(\theta) = \frac{\pi_{\theta}(a_{t} | s_{t})}{\pi_{\theta_{old}}(a_{t} | s_{t})}

- If r_{t}(\theta) > 1, the action is more likely under the current policy than under the old one;

- If r_{t}(\theta) is between 0 and 1, the action is less likely under the current policy than under the old one.

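Replacing the log-probability with the probability ratio r_{t}(\theta) gives the unclipped surrogate objective:

L(\theta) = E_{t}[\frac{\pi_{\theta}(a_{t} | s_{t})}{\pi_{\theta_{old}}(a_{t} | s_{t})} A_{t}] = E_{t}[r_{t}(\theta) A_{t}]

PPO clips the ratio inside this objective:

L_{CLIP}(\theta) = E_{t}[\min(r_{t}(\theta) A_{t}, clip(r_{t}(\theta), 1 - \varepsilon, 1 + \varepsilon) A_{t})]

- clip(...) restricts r_{t}(\theta) to the interval [1 - \varepsilon, 1 + \varepsilon];

- the minimum is taken between this clipped term and the same unclipped term r_{t}(\theta) A_{t} as in the previous objective.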
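The clipped objective can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the probabilities of the taken actions under both policies are already available; the helper name `clipped_surrogate` and the batch values are hypothetical.

```python
import numpy as np

def clipped_surrogate(new_probs, old_probs, advantages, eps=0.1):
    """Batch estimate of L_CLIP (hypothetical helper, NumPy only)."""
    new_probs = np.asarray(new_probs, dtype=np.float64)
    old_probs = np.asarray(old_probs, dtype=np.float64)
    advantages = np.asarray(advantages, dtype=np.float64)
    ratio = new_probs / old_probs                     # r_t(theta)
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps)    # clip(r_t, 1 - eps, 1 + eps)
    return float(np.mean(np.minimum(ratio * advantages, clipped * advantages)))

# Made-up batch: the first action became more likely, the second less likely.
value = clipped_surrogate([0.6, 0.2], [0.4, 0.4], [1.0, -1.0], eps=0.1)
```

Here the ratios are 1.5 and 0.5. The first sample is capped at 1.1, while the second keeps its clipped value of -0.9 because that is the smaller of the two terms, so the mean is roughly 0.1.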

[Figure: L_{CLIP} as a function of the probability ratio r, shown in two panels, A > 0 and A < 0; the r axis is marked at 0, 1 and 1 + \epsilon.]

- With a positive advantage, we want to increase the probability of taking this action at this step, but not by too much;

- With a negative advantage, we want to decrease the probability of taking this action at this step, but not by too much.
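The two cases above can be checked numerically. The sketch below, with a hypothetical per-sample helper `l_clip` and an assumed \varepsilon of 0.1, evaluates the clipped term over a range of ratios for a positive and a negative advantage.

```python
import numpy as np

def l_clip(r, advantage, eps=0.1):
    """Per-sample clipped term min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    r = np.asarray(r, dtype=np.float64)
    return np.minimum(r * advantage, np.clip(r, 1.0 - eps, 1.0 + eps) * advantage)

r = np.array([0.5, 0.9, 1.0, 1.1, 1.5])
positive = l_clip(r, advantage=1.0)    # grows with r, then stays flat above 1 + eps
negative = l_clip(r, advantage=-1.0)   # stays flat below 1 - eps, then falls with r
```

With A > 0 the term stops growing once r exceeds 1 + \varepsilon, so there is no incentive to raise the probability further. With A < 0 it stops changing once r drops below 1 - \varepsilon, so there is no incentive to lower it further.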

Code

import os

import gym
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import Input, Dense, Lambda
from tensorflow.keras.models import Model, load_model

# Assumption: float64 is used throughout so that the network outputs match
# the float64 one-hot actions and NumPy batches used during training.
tf.keras.backend.set_floatx('float64')

# gamma - discount factor
# lambda_1 - GAE smoothing parameter
# clip_ratio - clipping range (epsilon) for the probability ratio
gamma = 0.95
lambda_1 = 0.99
clip_ratio = 0.1

# Model weights are saved to the Models folder.
Save_Path = 'Models'
if not os.path.exists(Save_Path):
    os.makedirs(Save_Path)
path = '{}_PPO'.format("Name")
Model_name = os.path.join(Save_Path, path)

Actor class code

class Actor:
    def __init__(self, state_dim, action_dim):
        self.state_dim = state_dim
        self.action_dim = action_dim
        self.model = self.create_model()
        self.opt = tf.keras.optimizers.Adam(learning_rate=0.001)

    # Neural network architecture for the discrete case:
    # the softmax output gives one probability per action.
    def create_model(self):
        return tf.keras.Sequential([
            Input((self.state_dim,)),
            Dense(32, activation='relu'),
            Dense(16, activation='relu'),
            Dense(self.action_dim, activation='softmax')
        ])

    def compute_loss(self, old_policy, new_policy, actions, gaes):
        gaes = tf.stop_gradient(gaes)
        # actions is one-hot, so summing over axis 1 selects the probability
        # of the action actually taken, one value per sample.
        old_log_p = tf.math.log(
            tf.reduce_sum(old_policy * actions, axis=1, keepdims=True))
        old_log_p = tf.stop_gradient(old_log_p)
        log_p = tf.math.log(
            tf.reduce_sum(new_policy * actions, axis=1, keepdims=True))
        # PPO uses the ratio between the newly updated policy
        # and the old policy at the update step.
        ratio = tf.math.exp(log_p - old_log_p)
        clipped_ratio = tf.clip_by_value(
            ratio, 1 - clip_ratio, 1 + clip_ratio)
        # Negated because the optimizer minimizes, while L_CLIP is maximized.
        surrogate = -tf.minimum(ratio * gaes, clipped_ratio * gaes)
        return tf.reduce_mean(surrogate)

    def train(self, old_policy, states, actions, gaes):
        # Convert action indices to one-hot vectors of shape (batch, action_dim).
        actions = tf.one_hot(actions, self.action_dim)
        actions = tf.reshape(actions, [-1, self.action_dim])
        actions = tf.cast(actions, tf.float64)
        with tf.GradientTape() as tape:
            # The softmax output layer returns probabilities, not raw logits.
            probs = self.model(states, training=True)
            loss = self.compute_loss(old_policy, probs, actions, gaes)
        grads = tape.gradient(loss, self.model.trainable_variables)
        self.opt.apply_gradients(zip(grads,
                                     self.model.trainable_variables))
        return loss

    def load(self, Model_name):
        self.model = load_model(Model_name + ".h5", compile=True)

    def save(self, Model_name):
        self.model.save(Model_name + ".h5")

Critic class code

class Critic:
    def __init__(self, state_dim):
        self.state_dim = state_dim
        self.model = self.create_model()
        self.opt = tf.keras.optimizers.Adam(learning_rate=0.001)

    # The critic outputs a single value estimate V(s) per state.
    def create_model(self):
        return tf.keras.Sequential([
            Input((self.state_dim,)),
            Dense(32, activation='relu'),
            Dense(16, activation='relu'),
            Dense(16, activation='relu'),
            Dense(1, activation='linear')
        ])

    def compute_loss(self, v_pred, td_targets):
        mse = tf.keras.losses.MeanSquaredError()
        return mse(td_targets, v_pred)

    def train(self, states, td_targets):
        with tf.GradientTape() as tape:
            v_pred = self.model(states, training=True)
            loss = self.compute_loss(v_pred, tf.stop_gradient(td_targets))
        grads = tape.gradient(loss, self.model.trainable_variables)
        self.opt.apply_gradients(zip(grads,
                                     self.model.trainable_variables))
        return loss

    def load(self, Model_name):
        self.model = load_model(Model_name + ".h5", compile=True)

    def save(self, Model_name):
        self.model.save(Model_name + ".h5")

Agent class code

class Agent:
    def __init__(self, env):
        self.env = env
        self.state_dim = self.env.observation_space.shape[0]
        self.action_dim = self.env.action_space.n
        self.actor = Actor(self.state_dim, self.action_dim)
        self.critic = Critic(self.state_dim)

    def gae_target(self, rewards, v_values, next_v_value, done):
        n_step_targets = np.zeros_like(rewards)
        gae = np.zeros_like(rewards)
        gae_cumulative = 0
        forward_val = 0
        # Bootstrap from the critic's estimate unless the episode has ended.
        if not done:
            forward_val = next_v_value
        # Walk backwards through the batch, accumulating discounted TD errors.
        for k in reversed(range(0, len(rewards))):
            delta = rewards[k] + gamma * forward_val - v_values[k]
            gae_cumulative = gamma * lambda_1 * gae_cumulative + delta
            gae[k] = gae_cumulative
            forward_val = v_values[k]
            n_step_targets[k] = gae[k] + v_values[k]
        return gae, n_step_targets

    def list_to_batch(self, items):
        # Stack a list of (1, n) arrays into one (batch, n) array.
        batch = items[0]
        for elem in items[1:]:
            batch = np.append(batch, elem, axis=0)
        return batch

    def train(self, max_episodes=100):
        # Resume from saved weights if both model files exist.
        if os.path.exists("Models/Name_PPO_Actor.h5") and os.path.exists("Models/Name_PPO_Critic.h5"):
            self.actor.load("Models/Name_PPO_Actor")
            self.critic.load("Models/Name_PPO_Critic")
            print("Models loaded")
        for ep in range(max_episodes):
            state_batch = []
            action_batch = []
            reward_batch = []
            old_policy_batch = []
            episode_reward, done = 0, False
            state = self.env.reset()
            while not done:
                # Sample an action from the current policy.
                probs = self.actor.model.predict(
                    np.reshape(state, [1, self.state_dim]))
                action = np.random.choice(self.action_dim, p=probs[0])
                next_state, reward, done, _ = self.env.step(action)
                state = np.reshape(state, [1, self.state_dim])
                action = np.reshape(action, [1, 1])
                next_state = np.reshape(next_state, [1, self.state_dim])
                reward = np.reshape(reward, [1, 1])
                state_batch.append(state)
                action_batch.append(action)
                # Rewards are scaled by 0.01 before being used for training.
                reward_batch.append(reward * 0.01)
                old_policy_batch.append(probs)
                # Update every 5 steps or at the end of the episode.
                if len(state_batch) >= 5 or done:
                    states = self.list_to_batch(state_batch)
                    actions = self.list_to_batch(action_batch)
                    rewards = self.list_to_batch(reward_batch)
                    old_policys = self.list_to_batch(old_policy_batch)
                    v_values = self.critic.model.predict(states)
                    next_v_value = self.critic.model.predict(next_state)
                    gaes, td_targets = self.gae_target(
                        rewards, v_values, next_v_value, done)
                    # Several epochs of updates on the same batch.
                    for epoch in range(3):
                        actor_loss = self.actor.train(
                            old_policys, states, actions, gaes)
                        critic_loss = self.critic.train(states, td_targets)
                    state_batch = []
                    action_batch = []
                    reward_batch = []
                    old_policy_batch = []
                episode_reward += reward[0][0]
                state = next_state[0]
            print('Episode {}; episode reward = {}'.format(ep, episode_reward))
            # Save the weights every 5 episodes.
            if ep % 5 == 0:
                self.actor.save("Models/Name_PPO_Actor")
                self.critic.save("Models/Name_PPO_Critic")
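The `gae_target` method of the agent accumulates TD errors backwards through a batch. As a standalone sketch of the same recursion, the NumPy function below can be run without an environment or a network. The names `gae` and `lam` and the sample numbers are assumptions made for this illustration.

```python
import numpy as np

def gae(rewards, values, next_value, done, gamma=0.95, lam=0.99):
    """Generalized advantage estimates and critic targets for one batch."""
    rewards = np.asarray(rewards, dtype=np.float64)
    values = np.asarray(values, dtype=np.float64)
    advantages = np.zeros_like(rewards)
    running = 0.0
    # Value of the state after the last step; zero if the episode ended there.
    forward_value = 0.0 if done else float(next_value)
    for k in reversed(range(len(rewards))):
        delta = rewards[k] + gamma * forward_value - values[k]   # TD error
        running = gamma * lam * running + delta
        advantages[k] = running
        forward_value = values[k]
    targets = advantages + values   # regression targets for the critic
    return advantages, targets

# Two steps with reward 1 each, critic estimates of 0.5, episode finished.
adv, targets = gae([1.0, 1.0], [0.5, 0.5], next_value=0.0, done=True,
                   gamma=0.5, lam=1.0)
```

With `lam=1.0` the targets reduce to the discounted returns of the batch (1.5 and 1.0 in this example), while smaller values of `lam` lean more heavily on the critic's estimates.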

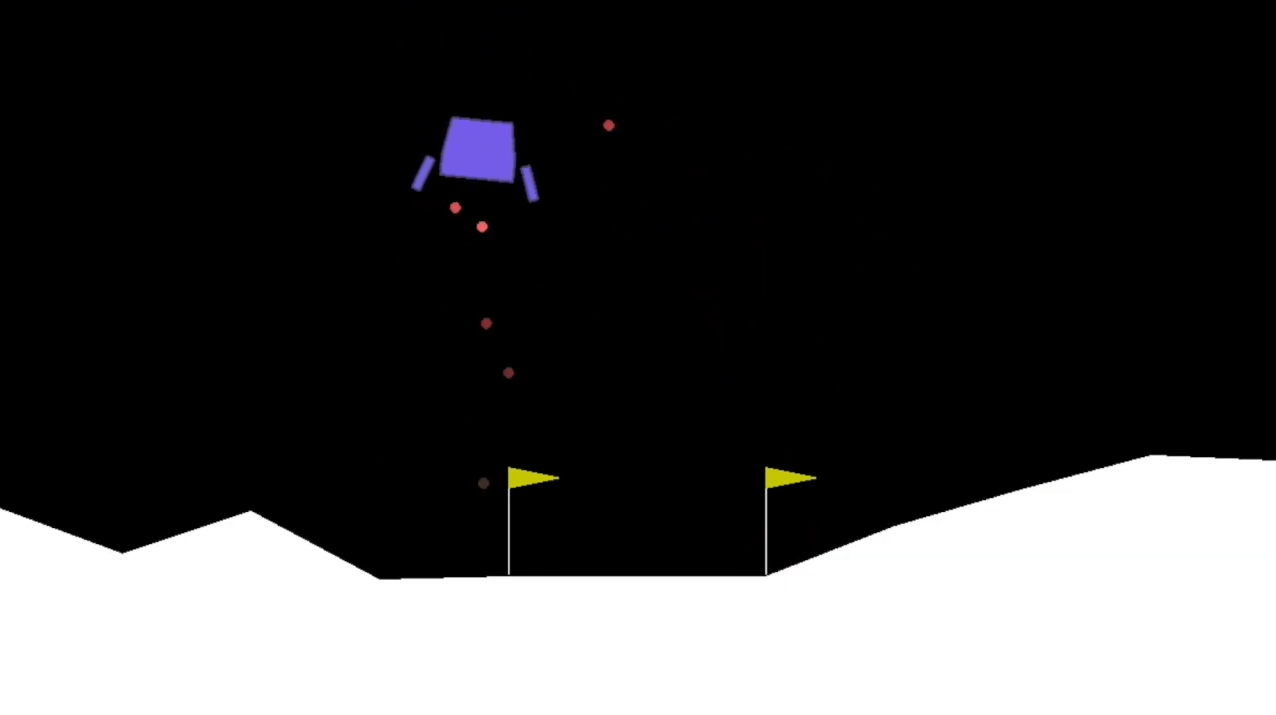

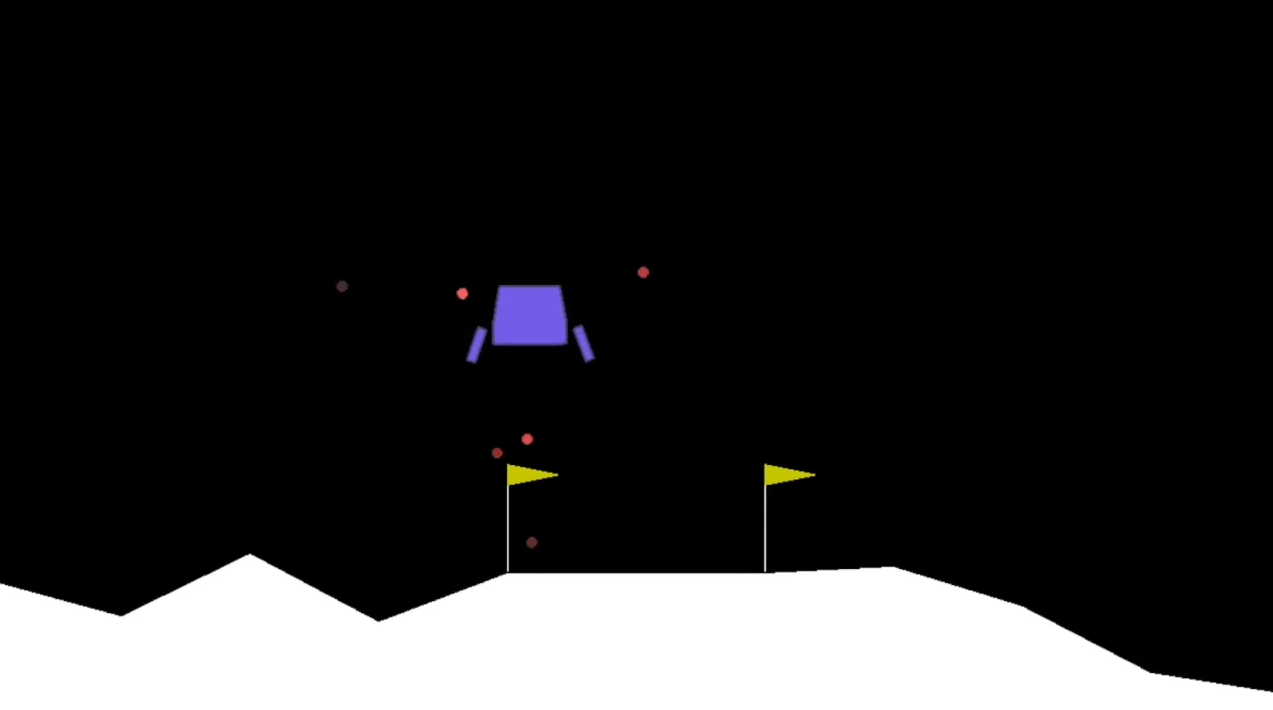

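# Note: Agent assumes a flat observation vector (observation_space.shape[0]
# features) and a discrete action space. 'Pong-v0' returns image frames, so
# the observations would need preprocessing before this network can use them.
env_name = 'Pong-v0'
env = gym.make(env_name)
env = gym.wrappers.Monitor(env, "recording", force=True)
agent = Agent(env)
agent.train()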

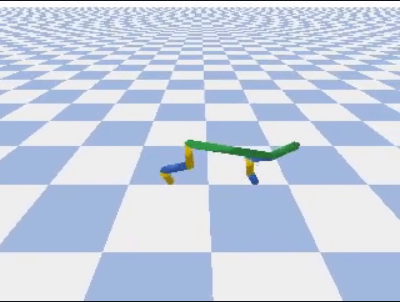

Result

Continuous environment

# Changes to the program for a continuous environment

# gamma - discount factor
# lambda_1 - GAE smoothing parameter
gamma = 0.95
lambda_1 = 0.99
clip_ratio = 0.1

# Model weights are saved to the Models folder.
Save_Path = 'Models'
if not os.path.exists(Save_Path):
    os.makedirs(Save_Path)
path = '{}_PPO'.format("Name")
Model_name = os.path.join(Save_Path, path)

class Actor:
    def __init__(self, state_dim, action_dim, action_bound, std_bound):
        self.state_dim = state_dim
        self.action_dim = action_dim
        # Bounds on the action values and on the standard deviation
        self.action_bound = action_bound
        self.std_bound = std_bound
        self.model = self.create_model()
        self.opt = tf.keras.optimizers.Adam(learning_rate=0.001)

    # See task No. 2
    def get_action(self, state):
        state = np.reshape(state, [1, self.state_dim])
        # The model returns the mean and standard deviation of a Gaussian policy.
        mu, std = self.model.predict(state)
        action = np.random.normal(mu[0], std[0], size=self.action_dim)
        '''
        action =
        log_policy =
        '''
        return log_policy, action

    def log_pdf(self, mu, std, action):
        # Keep the standard deviation within the allowed bounds.
        std = tf.clip_by_value(std, self.std_bound[0], self.std_bound[1])
        var = std ** 2
        log_policy_pdf = (-0.5 * (action - mu) ** 2 / var
                          - 0.5 * tf.math.log(var * 2 * np.pi))
        # Sum over action dimensions: log-density of the whole action vector.
        return tf.reduce_sum(log_policy_pdf, 1, keepdims=True)

    # See task No. 3
    def compute_loss(self, log_old_policy, log_new_policy, actions, gaes):
        # ratio =
        gaes = tf.stop_gradient(gaes)
        '''
        clipped_ratio =
        surrogate =
        '''
        return tf.reduce_mean(surrogate)

    # See task No. 4
    def train(self, log_old_policy, states, actions, gaes):
        with tf.GradientTape() as tape:
            '''
            mu, std =
            log_new_policy =
            loss =
            '''
        grads = tape.gradient(loss, self.model.trainable_variables)
        self.opt.apply_gradients(zip(grads,
                                     self.model.trainable_variables))
        return loss

    def load(self, Model_name):
        self.model = load_model(Model_name + ".h5", compile=True)

    def save(self, Model_name):
        self.model.save(Model_name + ".h5")
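The `log_pdf` method of the continuous Actor evaluates the log-density of a diagonal Gaussian policy. The NumPy sketch below mirrors that formula so it can be checked by hand; the function name `gaussian_log_pdf` and the default `std_bound` are assumptions chosen for this example.

```python
import math
import numpy as np

def gaussian_log_pdf(mu, std, action, std_bound=(1e-2, 1.0)):
    """Log-density of `action` under N(mu, std^2), summed over action dims."""
    mu = np.asarray(mu, dtype=np.float64)
    action = np.asarray(action, dtype=np.float64)
    # Clip the standard deviation to the allowed range before using it.
    std = np.clip(np.asarray(std, dtype=np.float64), std_bound[0], std_bound[1])
    var = std ** 2
    log_pdf = -0.5 * (action - mu) ** 2 / var - 0.5 * np.log(var * 2 * np.pi)
    return np.sum(log_pdf, axis=1, keepdims=True)

# One two-dimensional action sitting exactly at the mean of a unit Gaussian.
log_p = gaussian_log_pdf(mu=[[0.0, 0.0]], std=[[1.0, 1.0]], action=[[0.0, 0.0]])
```

For this input each dimension contributes -0.5 * log(2 * pi), so the result equals -log(2 * pi). Because the policy is stored in log form, the probability ratio can be formed as the exponential of a difference of log-densities.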

class Agent:
    def __init__(self, env):
        self.env = env
        self.state_dim = self.env.observation_space.shape[0]
        # Continuous action space: the dimension comes from its shape.
        self.action_dim = self.env.action_space.shape[0]
        self.action_bound = self.env.action_space.high[0]
        self.std_bound = [1e-2, 1.0]
        self.actor_opt = tf.keras.optimizers.Adam(learning_rate=0.0005)
        self.critic_opt = tf.keras.optimizers.Adam(learning_rate=0.001)
        self.actor = Actor(self.state_dim, self.action_dim,
                           self.action_bound, self.std_bound)
        self.critic = Critic(self.state_dim)

class Agent:
    '''
    '''
    def train(self, max_episodes=100):
        '''
        '''
        while not done:
            log_old_policy, action = self.actor.get_action(state)
            next_state, reward, done, _ = self.env.step(action)
            state = np.reshape(state, [1, self.state_dim])
            # In a continuous environment the action is a vector of action_dim values.
            action = np.reshape(action, [1, self.action_dim])
            next_state = np.reshape(next_state, [1, self.state_dim])
            reward = np.reshape(reward, [1, 1])
            log_old_policy = np.reshape(log_old_policy, [1, 1])

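'''
'''
# Assumption: importing pybullet_envs registers the Bullet environments
# (including HalfCheetahBulletEnv-v0) with gym.
import pybullet_envs

env_name = 'HalfCheetahBulletEnv-v0'
env = gym.make(env_name)
env = gym.wrappers.Monitor(env, "recording", force=True)
agent = Agent(env)
agent.train()

Result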