Gaia Network

Planetary-scale science and decision infrastructure

VAISU

May 24, 2024

Rafael Kaufmann

The Metacrisis is here

Everything is connected (climate, food, security, biodiversity)

× Self-evolving tech (including runaway AI)

× Coordination failure ("Moloch")

= Total risk for humanity and biosphere

Schmachtenberger: hypothesized attractors

- Chaotic breakdown

- Oppressive authoritarian control

Designing a third attractor

The Gaia Network

A "web of models" for scalable decision-making and automation

Goal: Help people, organizations and AI agents with:

- Making sense of a complex world

- Grounding decisions, conversations and negotiations

How: A shared source of quantitative knowledge and model-based prediction

Where: Applications in:

- Climate policy and investment

- Participatory planning for cities

- Sustainability and risk mitigation in supply chains

- Safe AI for automation in the physical world

- Etc.

How Gaia works (in a nutshell)

1. Users contribute quantitative knowledge claims

   - Observational/experimental data + statistical models -> analytical data

2. Gaia scores contributions by quality and impact

   - Using a simple protocol based on Bayesian probability theory

3. Gaia answers queries by aggregating across contributions

   - Full chain of data and analysis, with attribution

4. Users disagree by contributing competing analyses

   - Over time, Gaia converges on the best models, crediting the best contributors

5. Queries are monetized to finance the network

   - Incentives for targeted, high-quality data collection, experiments and analysis

1. Users contribute quantitative knowledge claims

Just as the Web is made up of websites that host content, Gaia is made up of nodes that host claims (which we call beliefs) about the world:

(*) These examples are all about climate, but the system works for any quantifiable domain

| Beliefs about | Example (*) |

|---|---|

| Facts on the ground | "How much carbon was captured over time in this specific farm?" |

| Effects of interventions | "What is the effect of regenerative practices on agricultural carbon emissions?" |

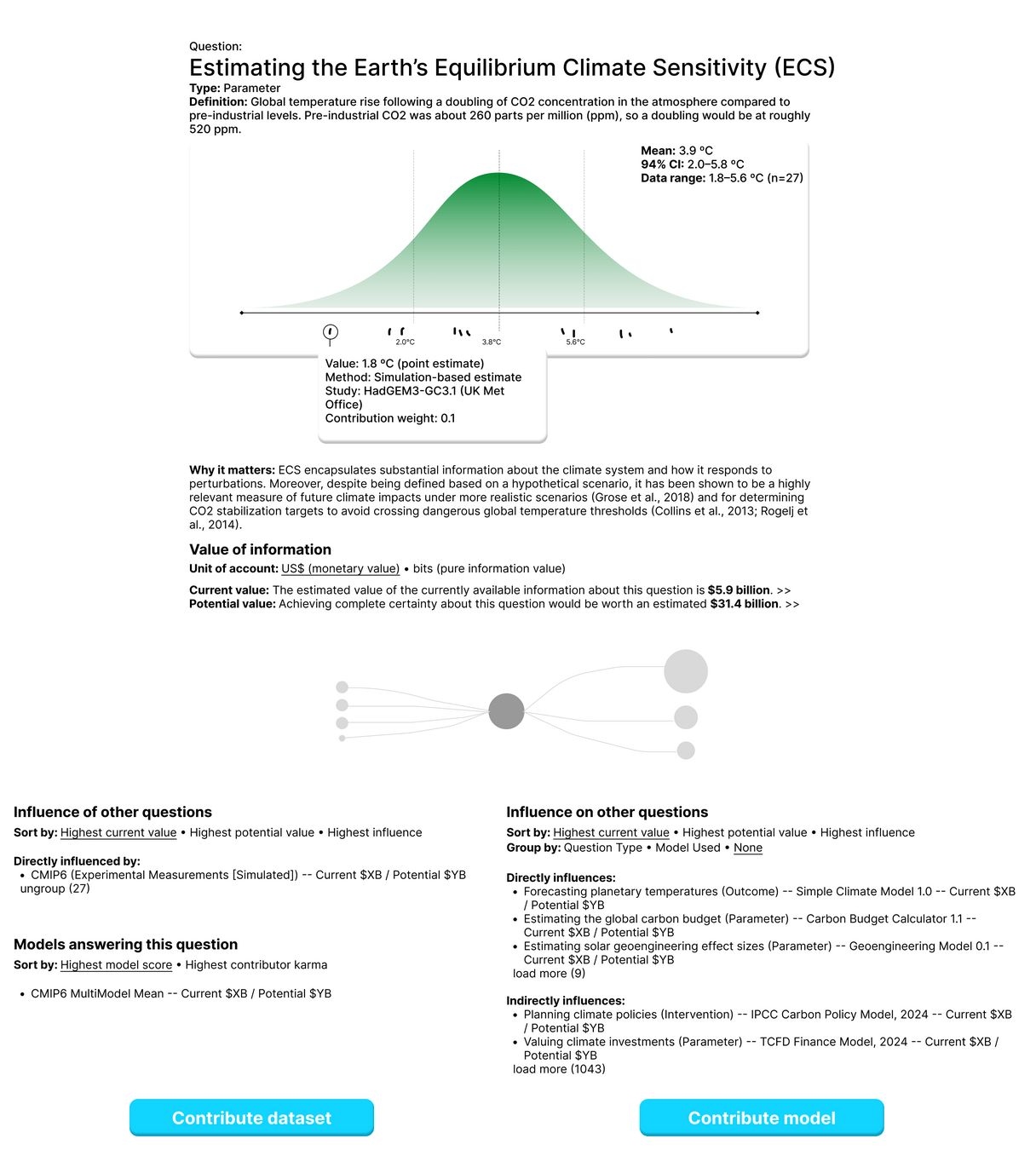

| Scientific parameters | "What is the Earth's climate sensitivity?" |

| Predicted outcomes | "When will (or did) the world reach the 1.5 degree warming mark?" |

2. Gaia scores contributions by quality and impact

Just as a website can host any content, a node can host any beliefs. However, some beliefs are more useful than others: those that agree with the available evidence and help predict future data, which is the standard set by Bayesian probability theory (“the logic of science”).
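One common way to make "agrees with the evidence and helps predict future data" quantitative is the log predictive score: the log-probability a belief assigned to data that arrived later. The sketch below compares two hypothetical Gaussian beliefs about a farm's annual carbon capture. The numbers and the choice of the log score are illustrative assumptions, not Gaia's published protocol.

```python
import math

def gaussian_logpdf(x: float, mean: float, sd: float) -> float:
    """Log density of a normal distribution N(mean, sd^2) at x."""
    z = (x - mean) / sd
    return -0.5 * z * z - math.log(sd * math.sqrt(2 * math.pi))

def log_score(belief: tuple[float, float], observations: list[float]) -> float:
    """Sum of log predictive densities: higher means the belief predicted the data better."""
    mean, sd = belief
    return sum(gaussian_logpdf(x, mean, sd) for x in observations)

# Hypothetical beliefs (mean, sd) about tonnes of carbon captured per year on one farm.
belief_a = (12.0, 2.0)
belief_b = (20.0, 2.0)
observed = [11.5, 12.8, 10.9]  # hypothetical new measurements

score_a = log_score(belief_a, observed)
score_b = log_score(belief_b, observed)
# For two fixed predictive beliefs, the score difference is the log Bayes factor:
# how strongly the observed data favour belief A over belief B.
print(f"log Bayes factor A vs B: {score_a - score_b:.1f}")  # prints: log Bayes factor A vs B: 25.6
```

A positive difference means the data were far more probable under belief A, so A would earn the higher usefulness score.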

Gaia scores beliefs by their Bayesian usefulness, using the following protocol:

- Beliefs are published together with rationales (data + probabilistic models). One node’s beliefs can be another node’s data, forming a graph of rationales.

- Nodes score each other on the basis of how useful and trustworthy they are (attribution). Scores “trickle down” the graph, providing a way to account for the value of beliefs.

Nodes with low-usefulness beliefs get attributed low scores by their peers, and thus tend to become ignored.
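The "trickle-down" accounting can be pictured as credit flowing through the graph of rationales. In this minimal sketch we assume that each node keeps a fixed share of the credit it receives and splits the rest among its sources in proportion to the attribution weights it assigns them. The node names, weights and the 50% retention rate are all hypothetical.

```python
from collections import defaultdict

# Hypothetical rationale graph: node -> {upstream source: attribution weight}.
# Weights express how much each source contributed to the node's beliefs.
RATIONALES: dict[str, dict[str, float]] = {
    "regional_policy_model": {"farm_carbon_model": 0.7, "climate_sensitivity": 0.3},
    "farm_carbon_model": {"soil_sensor_data": 1.0},
    "climate_sensitivity": {},
    "soil_sensor_data": {},
}

def trickle_down(root: str, credit: float, retain: float = 0.5) -> dict[str, float]:
    """Distribute `credit` from `root` through the rationale graph (assumes no cycles)."""
    totals: dict[str, float] = defaultdict(float)

    def visit(node: str, amount: float) -> None:
        sources = RATIONALES.get(node, {})
        weight_sum = sum(sources.values())
        if weight_sum == 0:
            totals[node] += amount  # a node with no sources keeps everything it receives
            return
        totals[node] += amount * retain  # keep a share for this node's own work
        for source, weight in sources.items():
            # Pass the remainder upstream, proportionally to attribution weights.
            visit(source, amount * (1 - retain) * weight / weight_sum)

    visit(root, credit)
    return dict(totals)

print(trickle_down("regional_policy_model", 100.0))
```

The total credit is conserved, so a node whose beliefs are never used as anyone's rationale receives nothing from this flow, matching the idea that low-usefulness nodes tend to be ignored.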

3. Gaia answers queries by aggregating across contributions

Gaia answers users' pivotal questions in high-stakes decisions:

- Where to prioritize climate action?

- How to value some real estate in light of climate and nature issues?

- Is this autonomous system capable of causing harm?

Questions are expressed as probabilistic queries to a Gaia node, which in turn queries all the other relevant nodes it needs in order to update its beliefs. This is similar to how Google aggregates knowledge across billions of sources and queries that database on the fly to answer user queries.

Unlike Google's, this computation is distributed across the nodes, which simulate thousands of possible scenarios. The simulation results are then aggregated back at the querying node to form summary beliefs.
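The scenario-based answer described above resembles Monte Carlo propagation. In this sketch, two hypothetical upstream nodes each answer by returning samples from their beliefs, and the querying node pushes every sampled scenario through its own model before summarizing. The network calls are collapsed into plain function calls in a single process, and the distributions are illustrative assumptions.

```python
import random
import statistics

# Hypothetical upstream nodes: each answers a query by returning samples from its belief.
def baseline_emissions_node(n: int, rng: random.Random) -> list[float]:
    """Belief about a farm's baseline emissions (tCO2e/yr), assumed Normal(100, 10)."""
    return [rng.gauss(100.0, 10.0) for _ in range(n)]

def practice_effect_node(n: int, rng: random.Random) -> list[float]:
    """Belief about the fractional reduction from regenerative practices, assumed Uniform(0.1, 0.3)."""
    return [rng.uniform(0.1, 0.3) for _ in range(n)]

def answer_query(n_scenarios: int = 10_000, seed: int = 0) -> dict[str, float]:
    """Querying node: gather scenario samples upstream, run its own model, summarize."""
    rng = random.Random(seed)
    baselines = baseline_emissions_node(n_scenarios, rng)
    effects = practice_effect_node(n_scenarios, rng)
    # The querying node's own model: emissions after adopting the practices, per scenario.
    outcomes = sorted(b * (1 - e) for b, e in zip(baselines, effects))
    return {
        "mean": statistics.fmean(outcomes),
        "p05": outcomes[int(0.05 * n_scenarios)],  # 5th percentile
        "p95": outcomes[int(0.95 * n_scenarios)],  # 95th percentile
    }

print(answer_query())
```

The summary belief is a distribution rather than a single number, so downstream nodes can keep propagating uncertainty in the same way.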

4. Users disagree by contributing competing analyses

If you think a node's output is wrong, then instead of trying to argue and convince people on Twitter, you simply set up your own node using an alternative model and publish its beliefs to the network.

Your peer nodes will compare the alternative models and score them by their usefulness and truthfulness.

This is how Gaia evolves and is resilient to error and misinformation.
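To illustrate the convergence, here is a sketch that assumes peers compare competing nodes by Bayesian model comparison. Each node's predictive belief carries a posterior weight, and that weight is updated as new observations arrive. The node names, beliefs and data are hypothetical.

```python
import math

def normal_pdf(x: float, mean: float, sd: float) -> float:
    """Density of N(mean, sd^2) at x."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

# Two hypothetical competing nodes, each publishing a predictive belief (mean, sd).
models = {"original_node": (20.0, 3.0), "challenger_node": (12.0, 3.0)}
weights = {name: 0.5 for name in models}  # equal prior credence

observations = [13.1, 11.7, 12.4, 12.9]  # hypothetical data arriving over time
for x in observations:
    # Bayes' rule: scale each weight by how well that model predicted x, then renormalize.
    for name, (mean, sd) in models.items():
        weights[name] *= normal_pdf(x, mean, sd)
    total = sum(weights.values())
    weights = {name: w / total for name, w in weights.items()}
    print(x, {name: round(w, 3) for name, w in weights.items()})
```

After only a few observations almost all the weight sits on the challenger, so a mistaken node loses influence without anyone having to win an argument.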

5. Queries are monetized to finance the network (*)

Gaia’s contribution accounting can be used to incentivize users to quickly publish lots of high-quality knowledge.

A Gaia user could pay a node for the value of the information it provides (for instance, by helping reduce risk in a high-stakes investment), and the node would distribute payments upstream to its own information providers, and so on, creating a data value chain.

(*) This part is more speculative: while accounting is straightforward and objective, pricing isn’t.
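The "value of the information" a node provides has a standard decision-theoretic reading: how much better a decision gets, in expectation, when it is made after seeing the node's forecast. The sketch computes this for a hypothetical go/no-go investment. The payoffs, probabilities and forecast accuracies are made up, and this is not a proposal for how Gaia would price queries, which remains the speculative part.

```python
# Hypothetical investment decision: invest (payoff depends on success) or don't (payoff 0).
P_SUCCESS = 0.6
PAYOFF = {"success": 100.0, "failure": -150.0}

def best_expected_value(p_success: float) -> float:
    """Expected payoff of the better action (invest vs. don't) given P(success)."""
    invest = p_success * PAYOFF["success"] + (1 - p_success) * PAYOFF["failure"]
    return max(invest, 0.0)

def value_of_signal(p: float, sensitivity: float, specificity: float) -> float:
    """Expected payoff when deciding after a binary forecast, minus deciding without it.

    sensitivity = P(forecast says "success" | success)
    specificity = P(forecast says "failure" | failure)
    """
    p_pos = sensitivity * p + (1 - specificity) * (1 - p)  # P(forecast says "success")
    # Posterior P(success) after each forecast; a forecast that never occurs contributes nothing.
    post_pos = sensitivity * p / p_pos if p_pos > 0 else 0.0
    post_neg = (1 - sensitivity) * p / (1 - p_pos) if p_pos < 1 else 0.0
    with_info = p_pos * best_expected_value(post_pos) + (1 - p_pos) * best_expected_value(post_neg)
    return with_info - best_expected_value(p)

print(value_of_signal(P_SUCCESS, 1.0, 1.0))  # perfect information
print(value_of_signal(P_SUCCESS, 0.9, 0.8))  # a good but imperfect forecast
```

With these numbers, perfect information is worth about 60 and the imperfect forecast about 42. That amount is an upper bound on what a rational buyer would pay, and it could then be split among upstream providers.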

Great, but...

Will they use it?

Target opportunity 1: high-stakes complex systems like climate, epidemiology, ecology, infrastructure management and much of public policy, where pivotal questions are often the hardest to answer and controversy is rife.

There’s a strong desire from both knowledge creators and decision-makers to ensure decisions are well-grounded.

But the state of the art is primitive:

- Publishing knowledge: PDF papers and websites

- Turning knowledge into decisions: Google Search + spreadsheets and documents

This knowledge cycle is slow, expensive, non-scalable and prone to bias.

Gaia can help users systematically ground their decisions on all the relevant knowledge, with rationales tracing back to the relevant sources and assumptions, making decisions more efficient, transparent and trustworthy.

Sample App #1

Collaborative climate science to unlock action and direct research.

Sample App #2

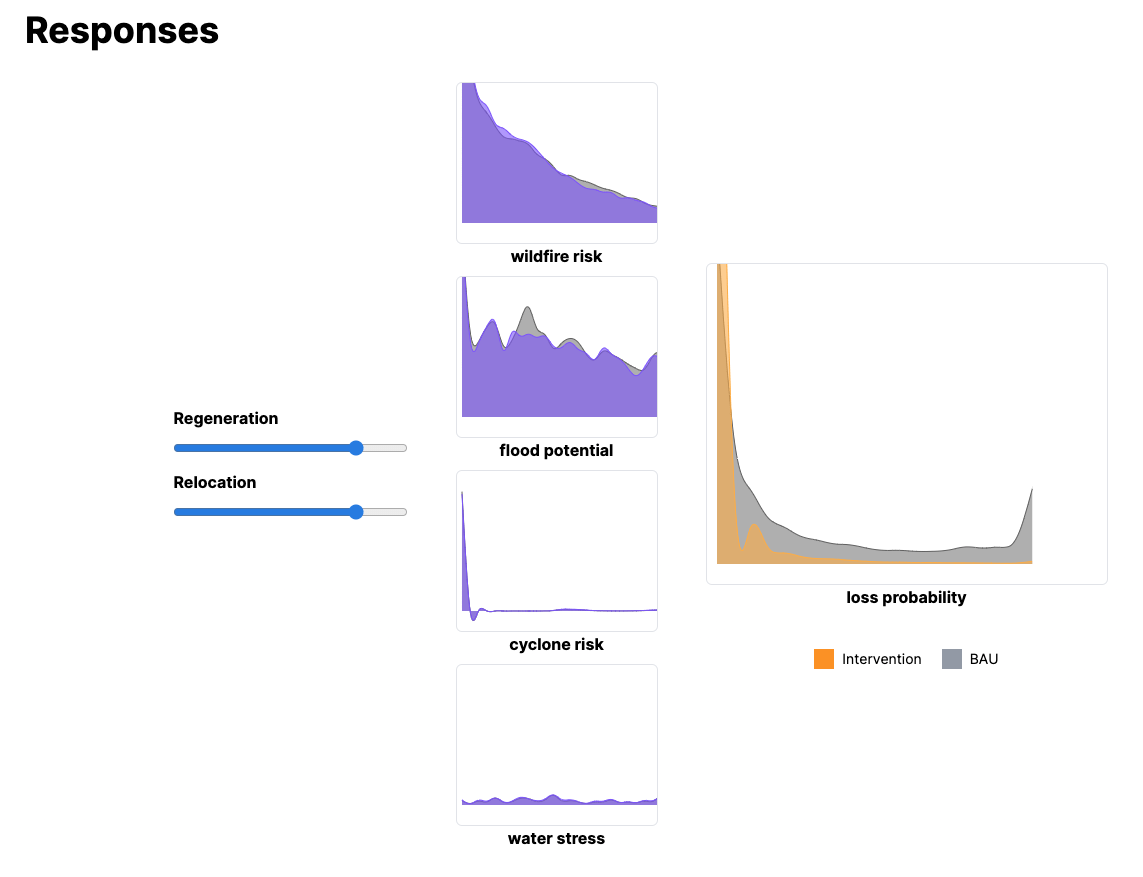

Analyze and mitigate nature risk to food supply. (Real-world)

Sample App #3

Estimate and forecast the impact of regeneration projects. (Real-world)

Target opportunity 2: AI safety in real-world systems. AI is proliferating across all kinds of applications, increasingly in the form of autonomous agents. These complex, highly capable and black-box AI systems can't be adequately supervised by humans, leading to mounting risk.

Leading AI researchers (Bengio, Legg...) have called for safeguarding AI deployments through model-based oracles or gatekeepers.

Gaia-based gatekeepers can vet and constrain AI agent actions at any spatiotemporal scale, with built-in explainability and governance.
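A minimal sketch of a model-based gatekeeper, assuming it estimates the probability that a proposed action leads to a safety violation and approves the action only below a risk limit. The function names, the 5% limit and the fishing model (a proposed catch against a fish stock with uncertain regrowth) are hypothetical, chosen to echo the fishing use case.

```python
import random
from dataclasses import dataclass
from typing import Callable

@dataclass
class Verdict:
    approved: bool
    p_violation: float
    rationale: str  # human-readable explanation of the decision

def gatekeeper(
    action: float,
    simulate: Callable[[float, random.Random], float],
    is_violation: Callable[[float], bool],
    max_risk: float = 0.05,
    n: int = 5_000,
    seed: int = 0,
) -> Verdict:
    """Approve `action` only if the estimated probability of a violation is <= max_risk."""
    rng = random.Random(seed)
    violations = sum(is_violation(simulate(action, rng)) for _ in range(n))
    p = violations / n
    rationale = f"P(violation) ~= {p:.3f} from {n} simulated scenarios (limit {max_risk})"
    return Verdict(p <= max_risk, p, rationale)

# Hypothetical fishing model: the action is a proposed catch (tonnes), and the outcome
# is next season's stock under an uncertain regrowth rate.
def simulate_stock(catch: float, rng: random.Random) -> float:
    stock = 1_000.0
    growth = rng.gauss(0.2, 0.05)
    return (stock - catch) * (1 + growth)

def below_safe_level(stock: float) -> bool:
    return stock < 900.0

print(gatekeeper(100.0, simulate_stock, below_safe_level))
print(gatekeeper(300.0, simulate_stock, below_safe_level))
```

The AI agent proposes actions, but the decision to allow them rests on an explicit model with a stated risk limit. The rationale string is what makes each verdict explainable and auditable.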

Sample App #4

Calculate and enforce sustainable fishing practices to safeguard AI-augmented fishing vessels.

Demo coming May/2024

What's next?

- More real-world use cases

- More models

- Generalize existing infrastructure

- Complete development

Some of our contributors (so far)!

Help us build the planetary web of models

rafael.k@digitalgaia.earth