Throwing Light on PyTorch

The Pythonic Deep Learning Framework

The What and Why of PyTorch

- Python-based Deep Learning Framework

- Developed by Facebook AI Research (FAIR)

- Dynamic Computational Graphs

- Easy Debugging

- More Pythonic

What?

Why?

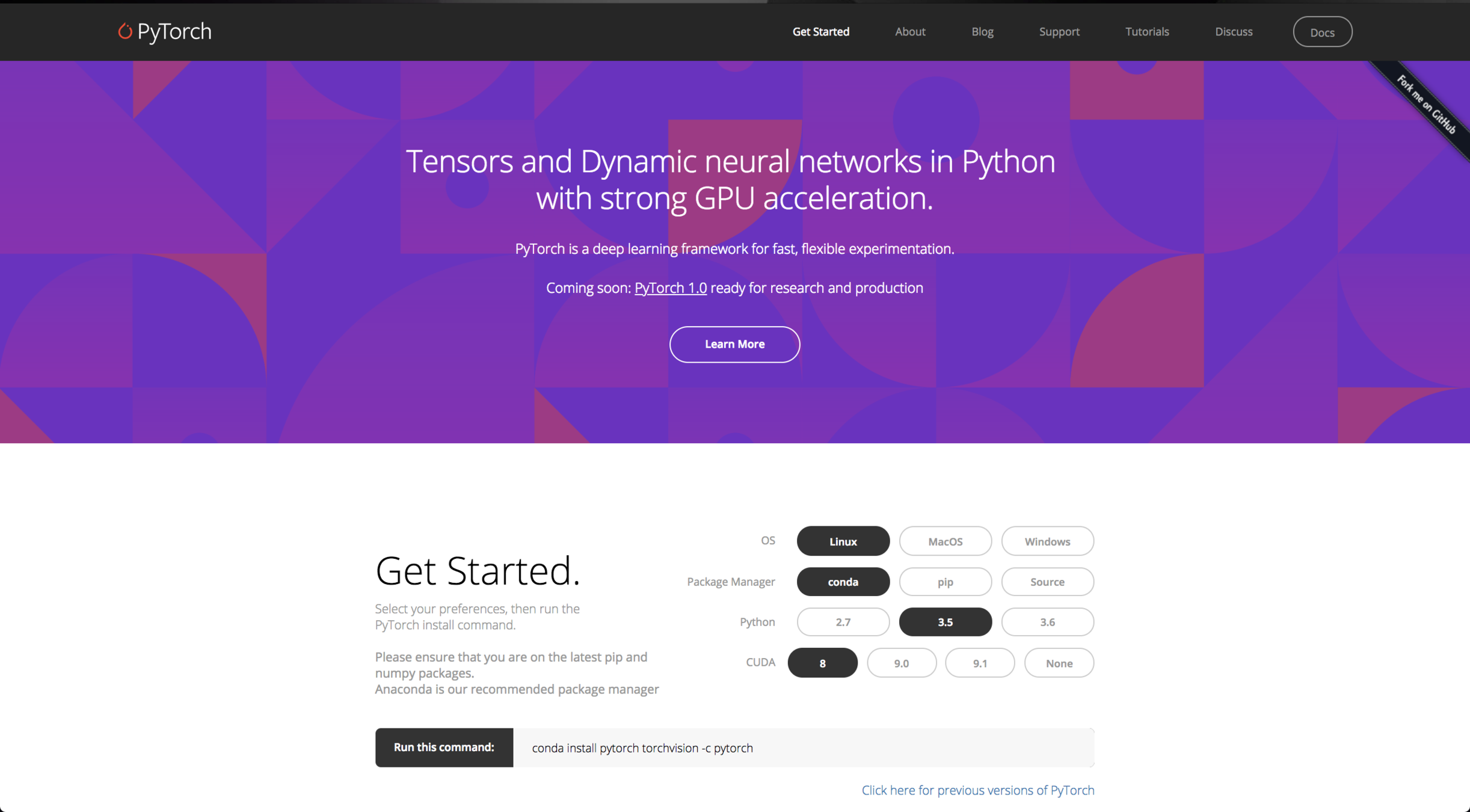

Getting Started with PyTorch

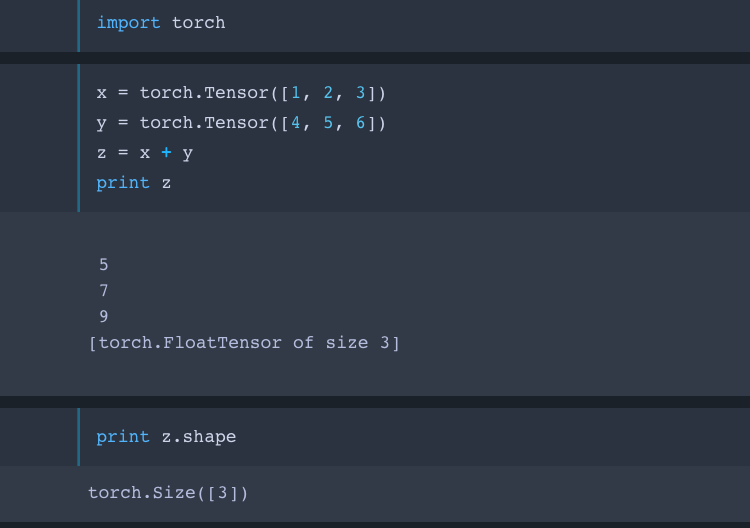

Tensors: Lego Blocks of Neural Networks

NumPy Arrays on GPU Steroids

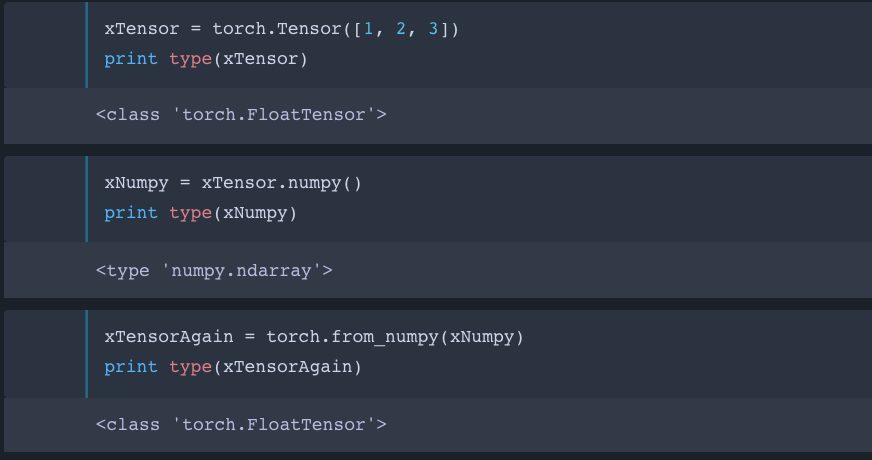
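A minimal sketch of creating tensors and operating on them much like NumPy arrays. The shapes and values are illustrative assumptions, and the GPU step only runs when CUDA is available on the machine.

```python
import torch

# Create tensors much as you would NumPy arrays
x = torch.ones(2, 3)                 # 2x3 tensor of ones (float32 by default)
y = torch.arange(6.0).reshape(2, 3)  # [[0., 1., 2.], [3., 4., 5.]]

# Elementwise arithmetic and matrix multiplication mirror NumPy
z = x + y                            # elementwise addition
w = x @ y.T                          # (2x3) @ (3x2) -> 2x2

# The "GPU steroids": run the same computation on a GPU when one exists
if torch.cuda.is_available():
    z_gpu = x.cuda() + y.cuda()      # computed on the GPU
    print(z_gpu.device)

print(z)
print(w)
```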


NumPy Conversion Bridge
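A short sketch of the bridge in both directions, with illustrative values: torch.from_numpy() and Tensor.numpy() share the underlying memory on the CPU, so an in-place change on one side shows up on the other.

```python
import numpy as np
import torch

# NumPy -> PyTorch: the tensor shares memory with the array
a = np.ones(3)               # float64 NumPy array
t = torch.from_numpy(a)      # float64 tensor backed by the same buffer
a += 1                       # in-place change to the array...
print(t)                     # ...is visible through the tensor (all 2s)

# PyTorch -> NumPy: works for CPU tensors that do not require grad
b = torch.zeros(3)           # float32 CPU tensor
n = b.numpy()                # NumPy array backed by the same buffer
b.add_(5)                    # in-place tensor op
print(n)                     # the array now holds 5s
```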


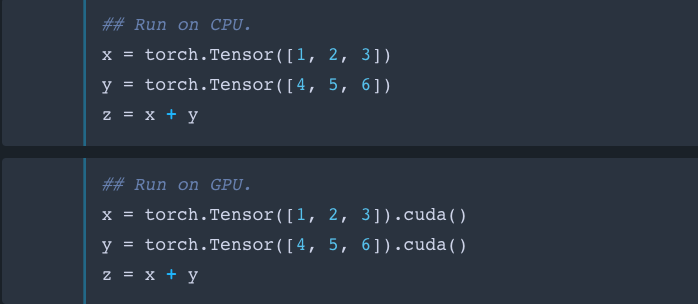

Easy Switching Between CPU and GPU
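One common pattern, sketched here with an arbitrary small layer and random input: choose a device once, move both data and modules with .to(device), and move results back to the CPU when needed.

```python
import torch
import torch.nn as nn

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.randn(4, 3).to(device)      # move data to the chosen device
layer = nn.Linear(3, 2).to(device)    # modules move the same way
out = layer(x)                        # computed wherever x and layer live

# Bring results back to the CPU, e.g. before calling .detach().numpy()
out_cpu = out.cpu()
print(out.device, out_cpu.device)
```

The same script runs unchanged on machines with and without a GPU, which is the main appeal of the device pattern.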

Neural Networks

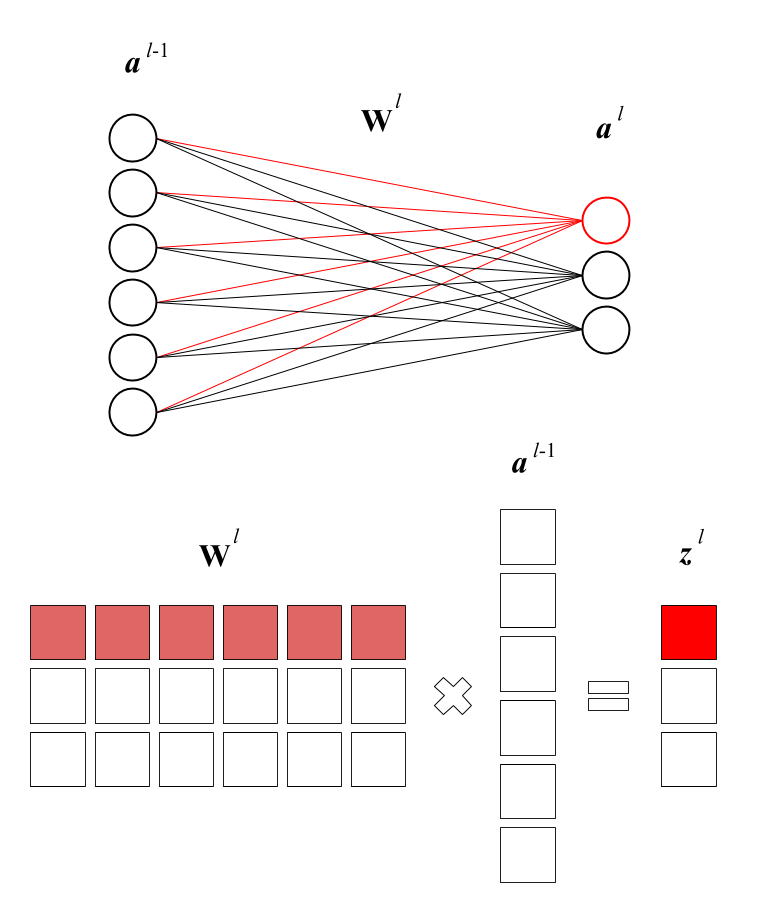

Forward Propagation
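A minimal sketch of forward propagation through a two-layer network using plain tensors. The sizes (N, D_in, H, D_out), the random weights and the ReLU activation are illustrative assumptions, not taken from the slides.

```python
import torch

torch.manual_seed(0)

# Hypothetical sizes: batch N, input D_in, hidden H, output D_out
N, D_in, H, D_out = 4, 3, 5, 2

x = torch.randn(N, D_in)     # input batch
w1 = torch.randn(D_in, H)    # first-layer weights
w2 = torch.randn(H, D_out)   # second-layer weights

# Forward propagation: linear -> ReLU -> linear
h = x @ w1                   # hidden pre-activation, shape (N, H)
h_relu = h.clamp(min=0)      # ReLU zeroes negative values
y_pred = h_relu @ w2         # network output, shape (N, D_out)
print(y_pred.shape)
```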


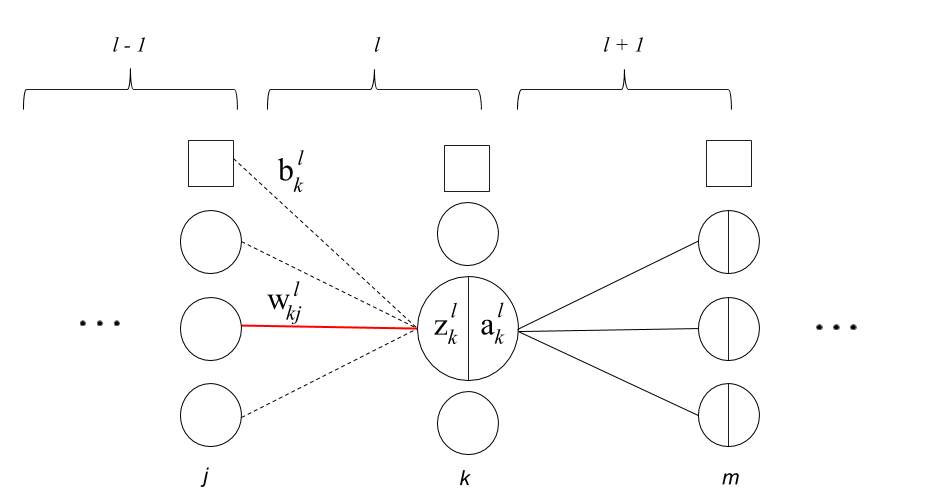

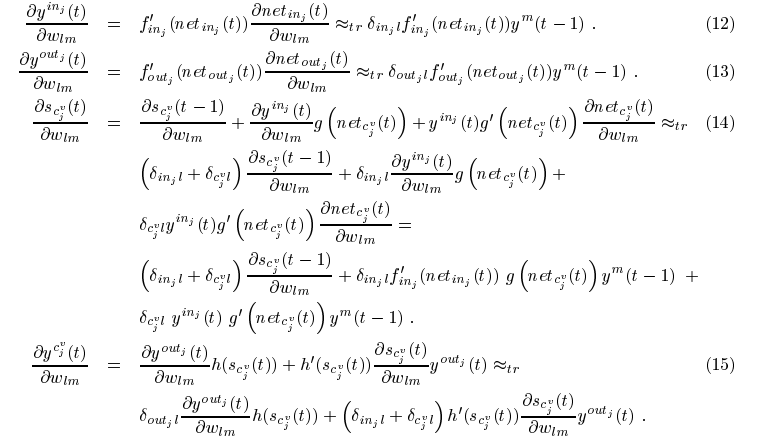

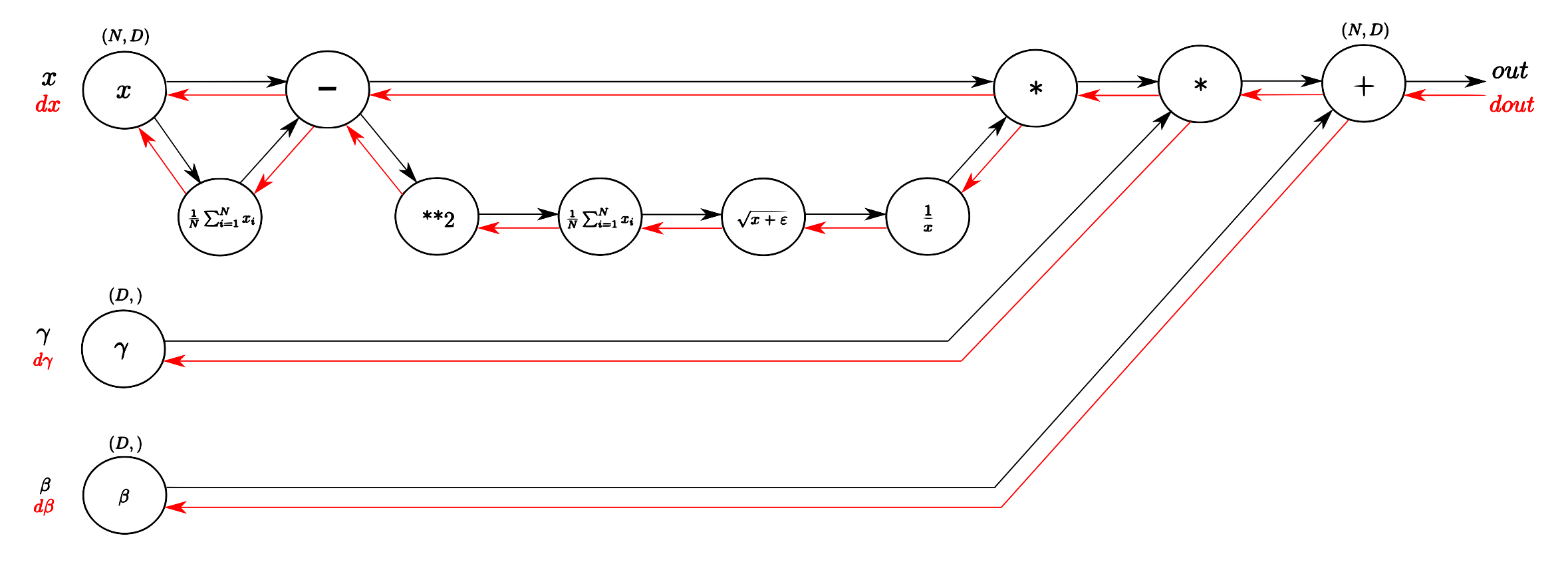

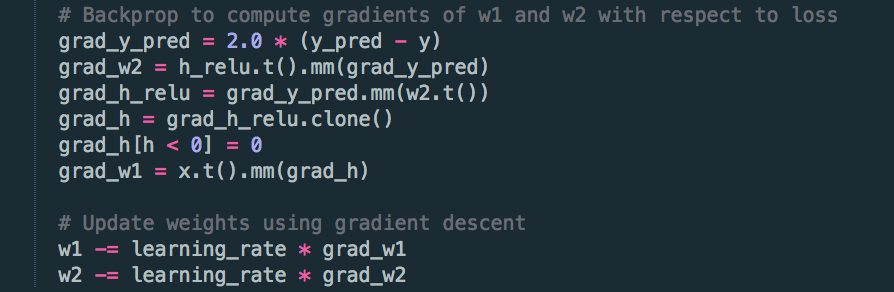

Backpropagation
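A sketch of backpropagation done by hand for a small two-layer network, assuming a sum-of-squares loss and random targets (both illustrative). The chain rule is applied layer by layer, and the hand-derived gradients are cross-checked against autograd. Deriving and coding such formulas manually is what becomes tedious as networks grow.

```python
import torch

torch.manual_seed(0)
N, D_in, H, D_out = 4, 3, 5, 2
x = torch.randn(N, D_in)
y = torch.randn(N, D_out)                      # hypothetical targets
w1 = torch.randn(D_in, H, requires_grad=True)
w2 = torch.randn(H, D_out, requires_grad=True)

# Forward pass and sum-of-squares loss
h = x @ w1
h_relu = h.clamp(min=0)
y_pred = h_relu @ w2
loss = (y_pred - y).pow(2).sum()

# Backpropagation by hand: chain rule from the loss back to each weight
with torch.no_grad():
    grad_y_pred = 2.0 * (y_pred - y)           # dL/dy_pred
    grad_w2 = h_relu.T @ grad_y_pred           # dL/dw2
    grad_h_relu = grad_y_pred @ w2.T           # dL/dh_relu
    grad_h = grad_h_relu.clone()
    grad_h[h < 0] = 0                          # ReLU blocks gradient where h < 0
    grad_w1 = x.T @ grad_h                     # dL/dw1

# Cross-check against autograd
loss.backward()
print(torch.allclose(grad_w1, w1.grad, atol=1e-6),
      torch.allclose(grad_w2, w2.grad, atol=1e-6))
```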


Problem


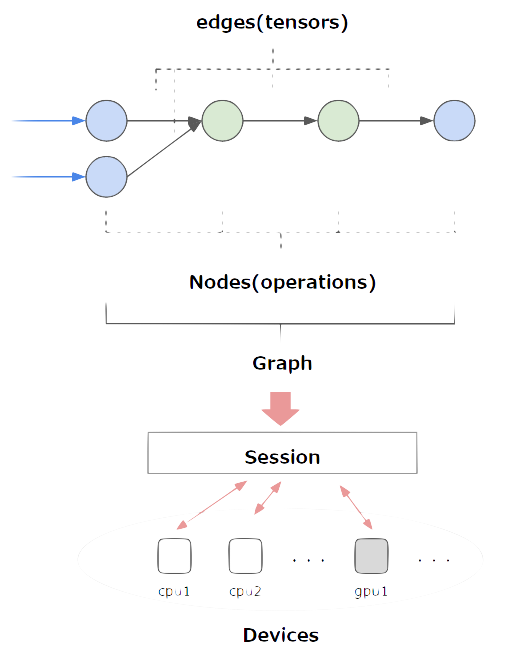

Solution: Computational Graphs

Static Computational Graphs

- Define the graph structure beforehand

- No actual data, only placeholders

- Used by TensorFlow (1.x)

Dynamic Computational Graphs

- Define the graph on the fly

- Feed in actual data

- Used by PyTorch

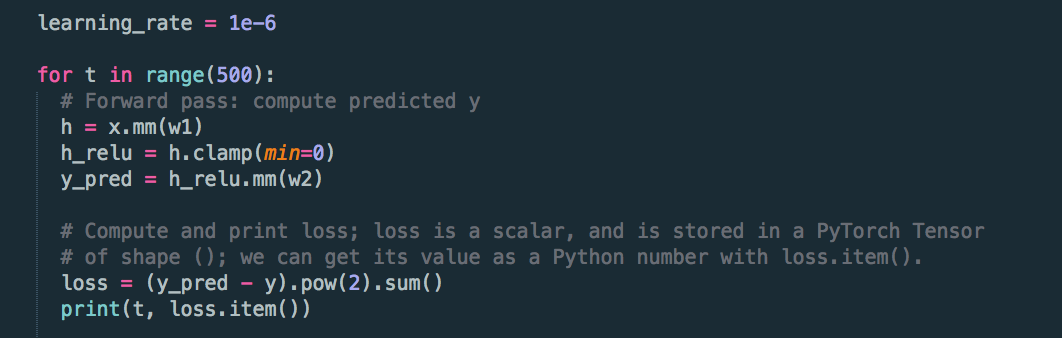
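A small sketch of what "define the graph on the fly" means in practice. The function dynamic_net and its stopping rule are hypothetical: the number of layers applied depends on the input data, so each call can build a different graph, and backward() still follows whichever path actually ran.

```python
import torch

def dynamic_net(x, w):
    # Hypothetical model: keep applying a residual layer until the
    # activation norm reaches 10, so the graph depth depends on the data.
    steps = 0
    while x.norm() < 10 and steps < 100:
        x = torch.relu(x @ w) + x
        steps += 1
    return x.sum(), steps

w = torch.eye(3, requires_grad=True)

out, steps = dynamic_net(torch.ones(3), w)           # starts small: several steps
out2, steps2 = dynamic_net(torch.full((3,), 5.0), w)  # starts larger: fewer steps

out.backward()   # gradients flow through however many steps actually ran
print(steps, steps2, w.grad.shape)
```

A static-graph framework would need special control-flow operators for the while loop; here it is ordinary Python.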

Simple Neural Network in PyTorch


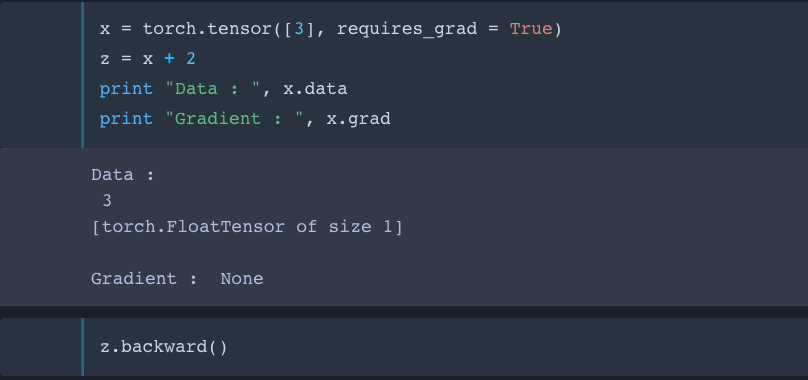

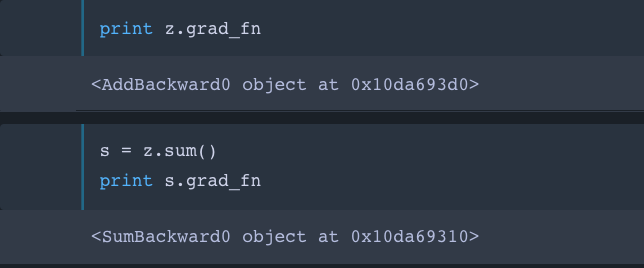
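A minimal sketch of training a simple network end to end. The toy data (y = 3x + 1 plus noise), the layer sizes, the learning rate and the epoch count are all illustrative assumptions; the loop shows the usual forward, loss, zero_grad, backward, step cycle.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy regression data: y = 3x + 1 with a little noise
x = torch.randn(64, 1)
y = 3 * x + 1 + 0.1 * torch.randn(64, 1)

model = nn.Sequential(nn.Linear(1, 16), nn.ReLU(), nn.Linear(16, 1))
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.02)

losses = []
for epoch in range(200):
    y_pred = model(x)            # forward pass
    loss = loss_fn(y_pred, y)    # compute the loss
    optimizer.zero_grad()        # clear gradients from the previous step
    loss.backward()              # backpropagation via autograd
    optimizer.step()             # gradient-descent update
    losses.append(loss.item())

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```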

Autograd (Automatic Differentiation)

The autograd package is central to all neural networks in PyTorch.

It provides classes and functions implementing automatic differentiation of arbitrary scalar-valued functions.

It requires minimal changes to existing code: you only need to declare the tensors whose gradients should be computed with requires_grad=True.
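A minimal sketch of autograd on an arbitrary scalar-valued function; the function y = x^3 + 2x and the point x = 2 are chosen only for illustration.

```python
import torch

# Ask autograd to track operations on x
x = torch.tensor(2.0, requires_grad=True)

# An arbitrary scalar-valued function of x
y = x ** 3 + 2 * x

y.backward()       # compute dy/dx
print(x.grad)      # dy/dx = 3x^2 + 2, which is 14 at x = 2
```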

Tensors Continued (Previously Variables)

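- Since PyTorch 0.4, the autograd Variable has been merged into Tensor, so autograd tracks tensors directly.

- Setting requires_grad=True on a tensor lets autograd compute gradients with respect to it.

- Unlike symbolic TensorFlow (1.x) graph tensors, PyTorch tensors hold actual data.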

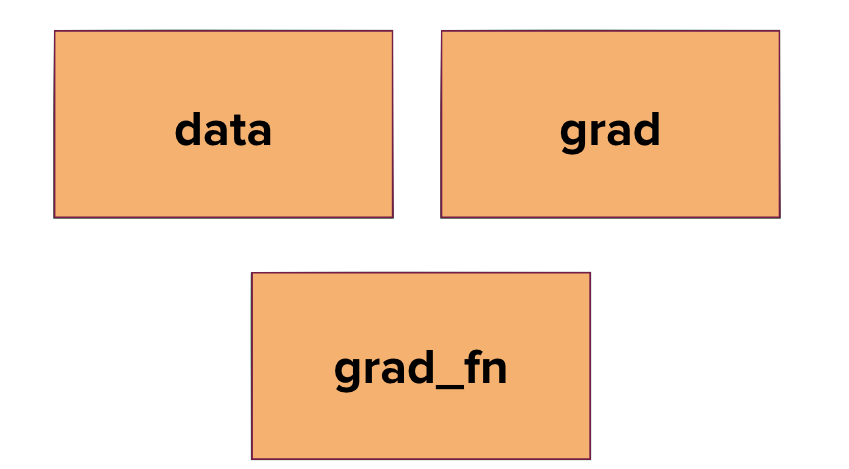

Tensor Frame
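A short sketch of the autograd-related attributes a tensor carries (data, requires_grad, grad, grad_fn, is_leaf), using arbitrary values.

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)  # leaf tensor created by the user
b = (a * a).sum()                                       # produced by operations on a

print(a.data)              # the underlying values
print(a.requires_grad)     # True: autograd records operations on a
print(a.is_leaf, b.is_leaf)
print(b.grad_fn)           # the backward function that created b
print(a.grad)              # None until backward() runs

b.backward()
print(a.grad)              # db/da = 2a
```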


Neural Networks in PyTorch

- They are essentially computational graphs.

- PyTorch builds these graphs dynamically.

- They offer varying levels of abstraction to suit the use case and the user's expertise.
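A small sketch of the same linear layer at three levels of abstraction, with arbitrary sizes: an nn.Module that owns its parameters, the functional F.linear with parameters passed explicitly, and raw tensor operations. The result also records its place in the computational graph through grad_fn.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(4, 3)

# Higher level: a module that creates and owns its parameters
layer = nn.Linear(3, 2)
out_module = layer(x)

# Lower level: the functional form, parameters passed in explicitly
out_functional = F.linear(x, layer.weight, layer.bias)

# Lowest level: the same computation with raw tensor ops
out_raw = x @ layer.weight.T + layer.bias

print(torch.allclose(out_module, out_functional),
      torch.allclose(out_module, out_raw, atol=1e-6))
print(out_module.grad_fn)   # node in the computational graph
```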


```python
from collections import OrderedDict

import torch.nn as nn

# Example of using Sequential
model = nn.Sequential(
    nn.Conv2d(1, 20, 5),
    nn.ReLU(),
    nn.Conv2d(20, 64, 5),
    nn.ReLU()
)

# Example of using Sequential with OrderedDict (gives each layer a name)
model = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(1, 20, 5)),
    ('relu1', nn.ReLU()),
    ('conv2', nn.Conv2d(20, 64, 5)),
    ('relu2', nn.ReLU())
]))
```

nn.Sequential: the Keras of PyTorch
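A usage sketch for the OrderedDict variant, assuming a single 28x28 grayscale input chosen for illustration. Each unpadded 5x5 convolution trims 4 pixels from each spatial dimension, and the named layers are reachable as attributes as well as by index.

```python
from collections import OrderedDict

import torch
import torch.nn as nn

model = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(1, 20, 5)),
    ('relu1', nn.ReLU()),
    ('conv2', nn.Conv2d(20, 64, 5)),
    ('relu2', nn.ReLU()),
]))

x = torch.randn(1, 1, 28, 28)   # hypothetical batch: one 28x28 grayscale image
out = model(x)
print(out.shape)                # 28 -> 24 -> 20 spatially, 64 channels

# Named layers are attributes; the same module is also model[0]
print(model.conv1)
```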


```python
import torch.nn as nn
import torch.nn.functional as F

class MnistModel(nn.Module):
    def __init__(self):
        super(MnistModel, self).__init__()
        # input is 28x28
        # padding=2 gives "same" padding for a 5x5 kernel
        self.conv1 = nn.Conv2d(1, 32, 5, padding=2)
        # feature map is 14x14 after 2x2 max pooling
        # padding=2 gives "same" padding
        self.conv2 = nn.Conv2d(32, 64, 5, padding=2)
        # feature map is 7x7 after 2x2 max pooling
        self.fc1 = nn.Linear(64 * 7 * 7, 1024)
        self.fc2 = nn.Linear(1024, 10)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = x.view(-1, 64 * 7 * 7)                 # flatten for the fully connected layers
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)   # dropout is active only in training mode
        x = self.fc2(x)
        return x
```
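The shape comments in MnistModel can be checked by tracing a dummy input through the same two convolution and pooling stages. This sketch rebuilds just those layers standalone, with a hypothetical batch of 8 MNIST-sized images.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# The same two conv layers as MnistModel
conv1 = nn.Conv2d(1, 32, 5, padding=2)
conv2 = nn.Conv2d(32, 64, 5, padding=2)

x = torch.randn(8, 1, 28, 28)              # hypothetical batch of 8 images
x = F.max_pool2d(F.relu(conv1(x)), 2)      # padding keeps 28x28, pooling halves it
print(x.shape)                             # (8, 32, 14, 14)
x = F.max_pool2d(F.relu(conv2(x)), 2)
print(x.shape)                             # (8, 64, 7, 7)
flat = x.view(-1, 64 * 7 * 7)              # 3136 features per image, matching fc1
print(flat.shape)
```

Because forward() passes training=self.training to F.dropout, calling model.eval() before inference disables dropout, and model.train() re-enables it.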

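Saving and Loading Neural Nets in PyTorch

```python
import torch

# model, modelParamPath and modelPath are placeholders for your own objects

## Saving only model parameters (the state_dict).
torch.save(model.state_dict(), modelParamPath)

## Pickling the entire model.
torch.save(model, modelPath)

## Loading model parameters into a model built with the same architecture.
model.load_state_dict(torch.load(modelParamPath))

## Loading the entire model.
## From PyTorch 2.6, torch.load defaults to weights_only=True, so a pickled model needs weights_only=False.
model = torch.load(modelPath, weights_only=False)
```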
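A self-contained sketch of the state_dict round trip, using a small nn.Linear as a stand-in for any model and a temporary file with a hypothetical name. The loaded parameters are compared against the originals.

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(4, 2)                     # stand-in for any nn.Module

with tempfile.TemporaryDirectory() as tmp:
    param_path = os.path.join(tmp, "model_params.pt")   # hypothetical file name

    # Save only the parameters
    torch.save(model.state_dict(), param_path)

    # Build a model with the same architecture, then copy the weights in
    restored = nn.Linear(4, 2)
    restored.load_state_dict(torch.load(param_path))

same = all(torch.equal(p, q) for p, q in zip(model.parameters(), restored.parameters()))
print(same)
```

Saving the state_dict is generally the more portable choice: pickling the whole model ties the saved file to the exact class definitions and directory structure used when it was saved.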

Questions?

rahulbaboota
