Generative Agents: Interactive Simulacra of Human Behavior

Motivation

- LLMs are everywhere and are being applied to almost every kind of task.
- The idea is to develop "generalized" agents, where "generalized" captures the following:
  - Long-term coherence
  - Retrieval of relevant events
  - Reflection on events to draw high-level inferences
  - Planning and reacting sensibly (actions make sense both now and in the future)

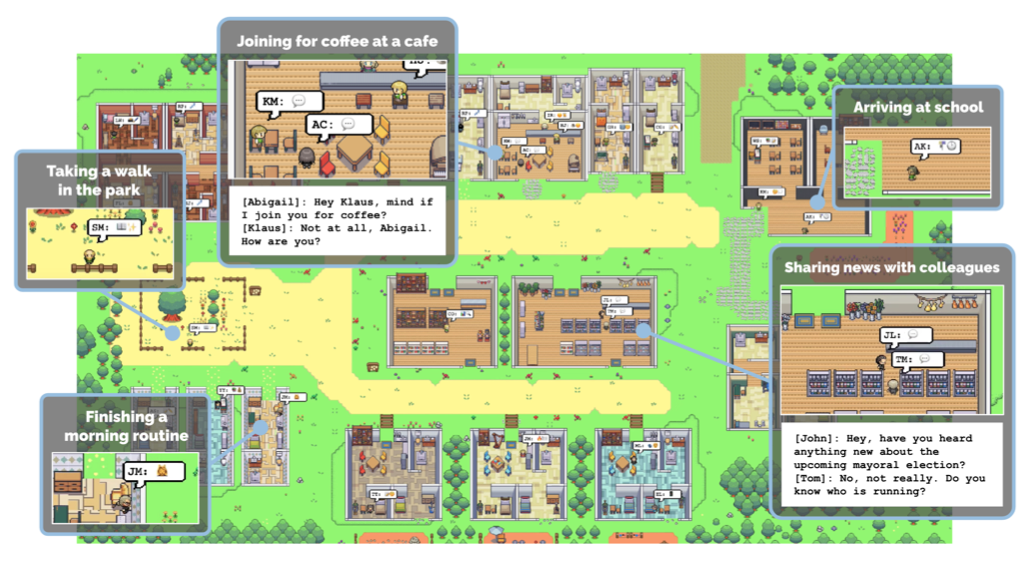

Experiment Setup

- 25 agents are initialized; each agent is driven by an LLM.

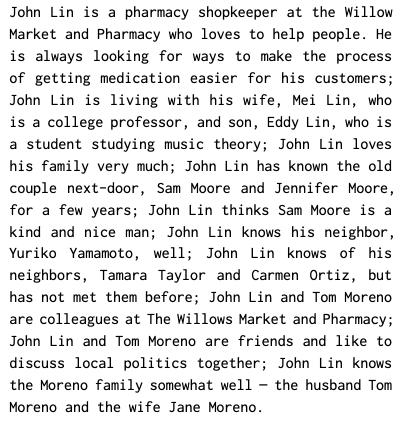

- Each agent is initialized with a natural-language paragraph containing:
  - Identity (name, occupation, nature)
  - Information about its social and physical environment
  - Information about its relationships
  - An initial directive that guides its actions
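Below is a minimal sketch of how the seed paragraph could be assembled. It assumes the four kinds of information are kept as separate fields and then joined into one prompt-ready paragraph. The `AgentSeed` class, its field names, and the example values are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSeed:
    """Hypothetical container for the seed information listed on the slide."""

    name: str
    occupation: str  # e.g. "a cafe owner"
    nature: str
    environment: str  # social and physical environment
    relationships: list[str] = field(default_factory=list)
    directive: str = ""  # initial directive guiding the agent's actions

    def to_paragraph(self) -> str:
        """Join the seed facts into a single natural-language paragraph."""
        sentences = [
            f"{self.name} is {self.occupation} who is {self.nature}.",
            self.environment,
            *self.relationships,
            self.directive,
        ]
        # Drop empty entries so a missing directive leaves no stray space.
        return " ".join(s for s in sentences if s)


seed = AgentSeed(
    name="Isabella Rodriguez",
    occupation="a cafe owner",
    nature="friendly and outgoing",
    environment="Isabella runs a cafe in a small town.",
    relationships=["Isabella is good friends with Maria."],
    directive="Isabella wants to organize a Valentine's Day party.",
)
print(seed.to_paragraph())
```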

- Agents communicate with the environment and with other agents in natural language.
- With the environment:
  - "Isabella Rodriguez is checking her emails"
  - "Isabella Rodriguez is talking with her family on the phone"
- With other agents:

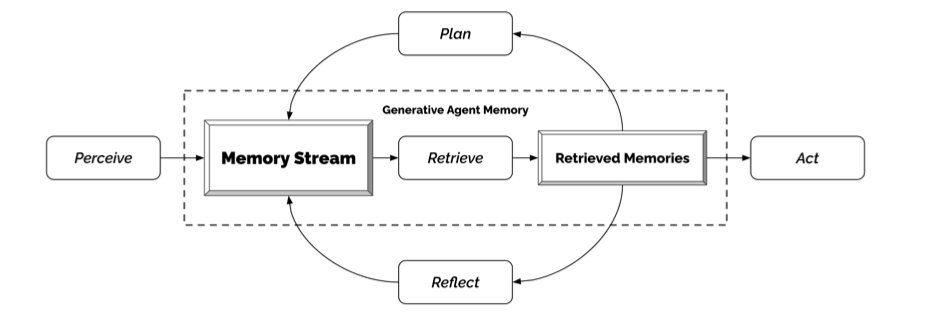

Generative Agent Architecture

- Because the LLM's context window is finite, we cannot simply put the agent's entire experience into the prompt.
- The challenge is to retrieve the relevant pieces of memory when they are needed.
- Their approach: a "Memory Stream" combined with a retrieval mechanism.
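The sketch below makes the context-length constraint concrete: it keeps the best memories that fit within a fixed budget. It assumes the memories are already ranked best-first. Word count serves as a rough, hypothetical stand-in for tokens. `pack_memories` and `budget_words` are illustrative names, not taken from the paper.

```python
def pack_memories(ranked_memories: list[str], budget_words: int) -> list[str]:
    """Greedily keep the highest-ranked memories that fit within a word budget.

    Word count is a crude, hypothetical proxy for the model's token limit.
    """
    packed: list[str] = []
    used = 0
    for memory in ranked_memories:
        cost = len(memory.split())
        if used + cost > budget_words:
            continue  # skip memories that would overflow; a shorter one may still fit
        packed.append(memory)
        used += cost
    return packed


memories = [
    "Klaus Mueller is writing a research paper",  # 7 words
    "Klaus Mueller enjoys reading a book on gentrification",  # 8 words
    "Klaus Mueller ate breakfast",  # 4 words (illustrative)
]
print(pack_memories(memories, budget_words=12))
# -> ['Klaus Mueller is writing a research paper', 'Klaus Mueller ate breakfast']
```

When a memory does not fit, the sketch skips it rather than stopping. This lets a shorter, lower-ranked memory still use the remaining space.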

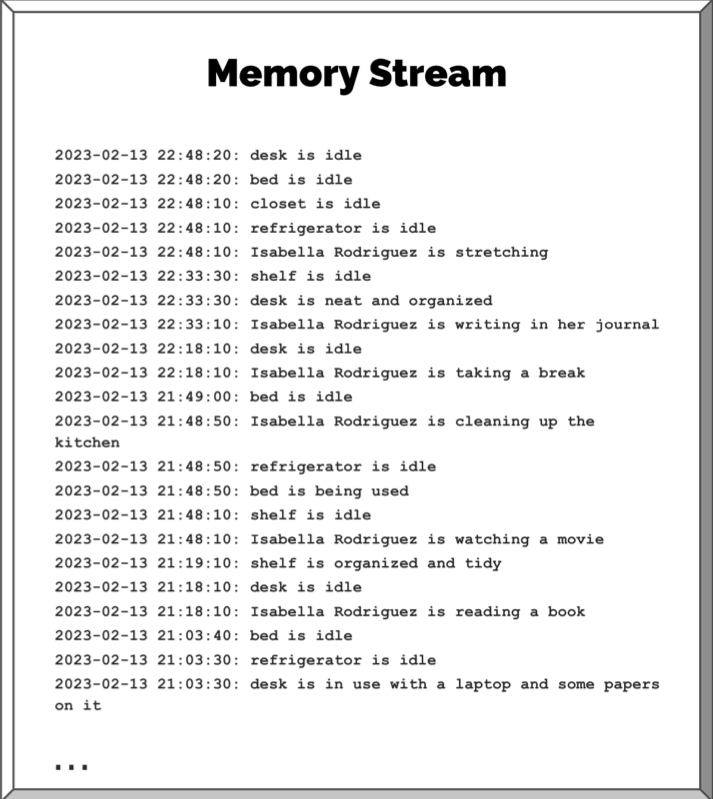

Memory Stream

Observations

- Observations are events directly perceived by an agent.
- They can be behaviors performed by the agent itself or behaviors perceived from other agents.
- Each observation is stored in the Memory Stream with:
  - A natural-language description
  - A creation timestamp
  - A most-recently-accessed timestamp
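One way to model the memory stream as a data structure is sketched below, assuming a simple in-memory list. `MemoryObject`, `MemoryStream`, and their methods are hypothetical names. Treating a new memory as accessed at the moment it is created is also an assumption.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class MemoryObject:
    """One memory-stream entry with the three fields listed on the slide."""

    description: str  # natural-language description
    created_at: datetime  # creation timestamp
    last_accessed: datetime  # most recently accessed timestamp


class MemoryStream:
    """Hypothetical append-only store of memory objects."""

    def __init__(self) -> None:
        self.memories: list[MemoryObject] = []

    def add_observation(self, description: str, now: datetime) -> MemoryObject:
        # Assumption: a brand-new memory counts as accessed when it is created.
        memory = MemoryObject(description, created_at=now, last_accessed=now)
        self.memories.append(memory)
        return memory

    def mark_accessed(self, memory: MemoryObject, now: datetime) -> None:
        # Retrieving a memory refreshes its access time (this feeds recency).
        memory.last_accessed = now


stream = MemoryStream()
obs = stream.add_observation(
    "Isabella Rodriguez is checking her emails", datetime(2024, 1, 1, 8, 0)
)
stream.mark_accessed(obs, datetime(2024, 1, 1, 9, 30))
print(obs.created_at, obs.last_accessed)
```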

Reflections

- Reflections are higher-level, more abstract thoughts generated by the agent.
- They are generated periodically by prompting the model with insight-extracting prompts.
- Example:
  - Klaus Mueller is writing a research paper.
  - Klaus Mueller enjoys reading a book on gentrification [.....]
  - Question: "What 5 high-level insights can you infer from the above statements?"
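The insight-extracting prompt can be sketched as a small prompt builder plus a parser for the model's answer. The sketch assumes the statements are numbered and that the LLM replies with a numbered list. The function names and the sample response are hypothetical, and no real LLM is called.

```python
def build_reflection_prompt(statements: list[str], num_insights: int = 5) -> str:
    """Number the statements and append the insight-extracting question."""
    numbered = [f"{i}. {s}" for i, s in enumerate(statements, start=1)]
    question = (
        f"What {num_insights} high-level insights can you infer "
        "from the above statements?"
    )
    return "\n".join(numbered + [question])


def parse_insights(llm_response: str) -> list[str]:
    """Split a numbered-list response into one reflection per line."""
    insights = []
    for line in llm_response.splitlines():
        line = line.strip()
        if not line:
            continue
        # Strip a leading list marker such as "1." or "2)" if present.
        head, sep, tail = line.partition(" ")
        if sep and head.rstrip(".)").isdigit():
            line = tail
        insights.append(line)
    return insights


prompt = build_reflection_prompt([
    "Klaus Mueller is writing a research paper.",
    "Klaus Mueller enjoys reading a book on gentrification.",
])
print(prompt)

# A made-up response standing in for the LLM's answer.
fake_response = (
    "1. Klaus Mueller is dedicated to his research.\n"
    "2. Klaus Mueller is interested in gentrification."
)
print(parse_insights(fake_response))
```

Each parsed insight would then be added back to the memory stream as a reflection.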

Memory Stream

-

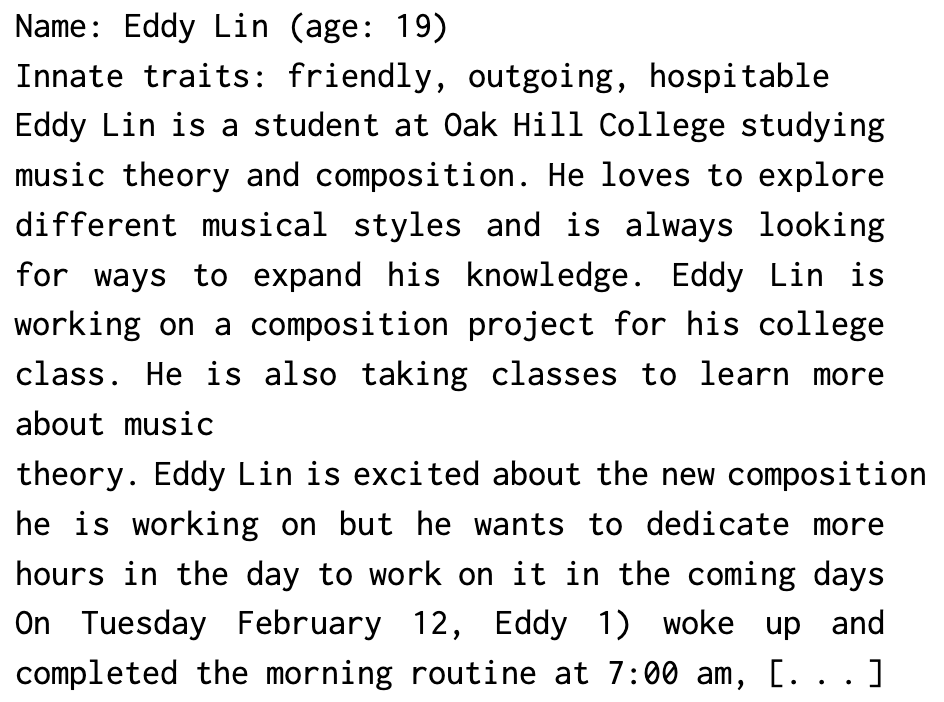

Plans describe a future sequence of actions for the agent. -

A Plan includes the following:-

Location -

Starting Time -

Duration

-

-

Plans are created top-down and recursively-

Prompt the model with agent summary and their previous day. -

LLM gives a brief plan for the entire day. -

Recursively break down the plan for the day into granular time scales.

-

Plans
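The top-down, recursive decomposition can be sketched as follows. The `Plan` fields mirror the slide (location, starting time, duration). The `decompose` function and the granularity list are hypothetical. Where the real system would ask the LLM to write each finer-grained step, this stub simply splits the time evenly.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Plan:
    """A plan with the slide's fields (location, start, duration) plus sub-steps."""

    description: str
    location: str
    start: datetime
    duration: timedelta
    steps: list["Plan"] = field(default_factory=list)


def decompose(plan: Plan, granularities: list[timedelta]) -> Plan:
    """Recursively split a plan into chunks at successively finer time scales.

    Hypothetical stub: the real system would prompt the LLM for each sub-step;
    here the interval is simply cut into equal pieces to show the recursion.
    """
    if not granularities:
        return plan
    chunk, finer = granularities[0], granularities[1:]
    if plan.duration <= chunk:
        # Already no longer than this time scale; try the next, finer one.
        return decompose(plan, finer)
    t, end = plan.start, plan.start + plan.duration
    while t < end:
        length = min(chunk, end - t)
        sub = Plan(plan.description, plan.location, t, length)
        plan.steps.append(decompose(sub, finer))
        t += length
    return plan


day = Plan("Work at the cafe", "cafe", datetime(2024, 1, 1, 9, 0), timedelta(hours=3))
decompose(day, [timedelta(hours=1), timedelta(minutes=15)])
for hour in day.steps:
    print(f"{hour.start:%H:%M}", hour.description, "->", len(hour.steps), "sub-steps")
```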

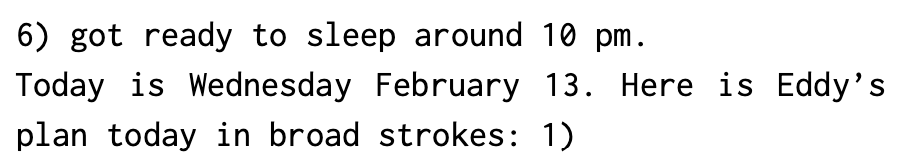

Retrieval

- How do we retrieve relevant experiences from the agent's memory stream?
- The retrieval function considers 3 factors:
  - Recency: memory objects that were accessed more recently get higher scores (exponential decay function).
  - Importance: simple, just ask the LLM to rate it on a 1 to 10 scale, e.g.
    - Brushing teeth: 1 (mundane task)
    - Breakup: 10 (big event)
  - Relevance: how related a memory is to the current context. Use an LLM to generate embeddings for every object in the memory stream and for every incoming query, then compare the query embedding with each memory embedding (e.g. cosine similarity).
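The three factors can be sketched as separate scoring functions, under three assumptions:

- Recency decays exponentially per hour since last access, with an illustrative factor of 0.995.
- Importance is the LLM's 1 to 10 rating, rescaled to [0, 1].
- Relevance is the cosine similarity between embedding vectors.

Here the embeddings are given as plain lists, and no embedding model is called.

```python
import math
from datetime import datetime


def recency_score(last_accessed: datetime, now: datetime, decay: float = 0.995) -> float:
    """Exponential decay over the hours since the memory was last accessed.

    The per-hour decay factor of 0.995 is an illustrative assumption.
    """
    hours = (now - last_accessed).total_seconds() / 3600
    return decay ** hours


def importance_score(llm_rating: int) -> float:
    """Rescale the LLM's 1-10 rating to [0, 1], clamping out-of-range answers."""
    rating = min(max(llm_rating, 1), 10)
    return (rating - 1) / 9


def relevance_score(memory_vec: list[float], query_vec: list[float]) -> float:
    """Cosine similarity between a memory embedding and a query embedding."""
    dot = sum(a * b for a, b in zip(memory_vec, query_vec))
    norm = math.sqrt(sum(a * a for a in memory_vec)) * math.sqrt(
        sum(b * b for b in query_vec)
    )
    return dot / norm if norm else 0.0


now = datetime(2024, 1, 1, 12, 0)
print(recency_score(datetime(2024, 1, 1, 10, 0), now))  # two hours old
print(importance_score(1), importance_score(10))  # brushing teeth vs. breakup
print(relevance_score([1.0, 0.0], [1.0, 1.0]))  # toy 2-D "embeddings"
```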

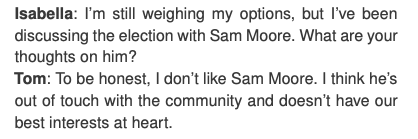
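The slide lists the three factors without saying how they are combined. One plausible approach, assumed here, has two steps:

- Min-max normalize each factor across the candidate memories.
- Rank the memories by a weighted sum of the normalized factors (equal weights by default).

`retrieve`, `min_max`, and all the example numbers are hypothetical.

```python
def min_max(values: list[float]) -> list[float]:
    """Rescale scores to [0, 1]; if all are equal, treat them all as 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(
    memories: list[str],
    recency: list[float],
    importance: list[float],
    relevance: list[float],
    k: int = 2,
    weights: tuple[float, float, float] = (1.0, 1.0, 1.0),
) -> list[str]:
    """Rank memories by a weighted sum of normalized recency, importance, relevance."""
    w_rec, w_imp, w_rel = weights
    scores = [
        w_rec * r + w_imp * i + w_rel * v
        for r, i, v in zip(min_max(recency), min_max(importance), min_max(relevance))
    ]
    # Sort on the score only, so ties never fall back to comparing strings.
    ranked = sorted(zip(scores, memories), key=lambda pair: pair[0], reverse=True)
    return [memory for _, memory in ranked[:k]]


# Made-up scores for three memories.
memories = ["brushed teeth", "went through a breakup", "started a photography project"]
recency = [0.99, 0.60, 0.80]
importance = [1.0, 10.0, 6.0]
relevance = [0.10, 0.40, 0.90]
print(retrieve(memories, recency, importance, relevance, k=2))
# -> ['started a photography project', 'went through a breakup']
```

Raising one weight, for example the relevance weight, shifts retrieval toward that factor without changing the rest of the pipeline.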

Emergent Social Behaviors

- Three prominent social behaviors were observed:
  - Information diffusion: Sam tells Tom about his candidacy in the local election, which soon becomes the talk of the town.
  - Relationship memory: Sam and Latoya do not know each other, but when they first meet, Latoya tells Sam she is working on a photography project. In a later interaction, Sam asks Latoya how her project is progressing.
  - Coordination: Isabella is initialized with the intent to organize a Valentine's Day party. She asks her friend Maria for help, Maria agrees, and together they successfully organize the party and invite many guests.

QUESTIONS?

BabootaRahul

rahulbaboota