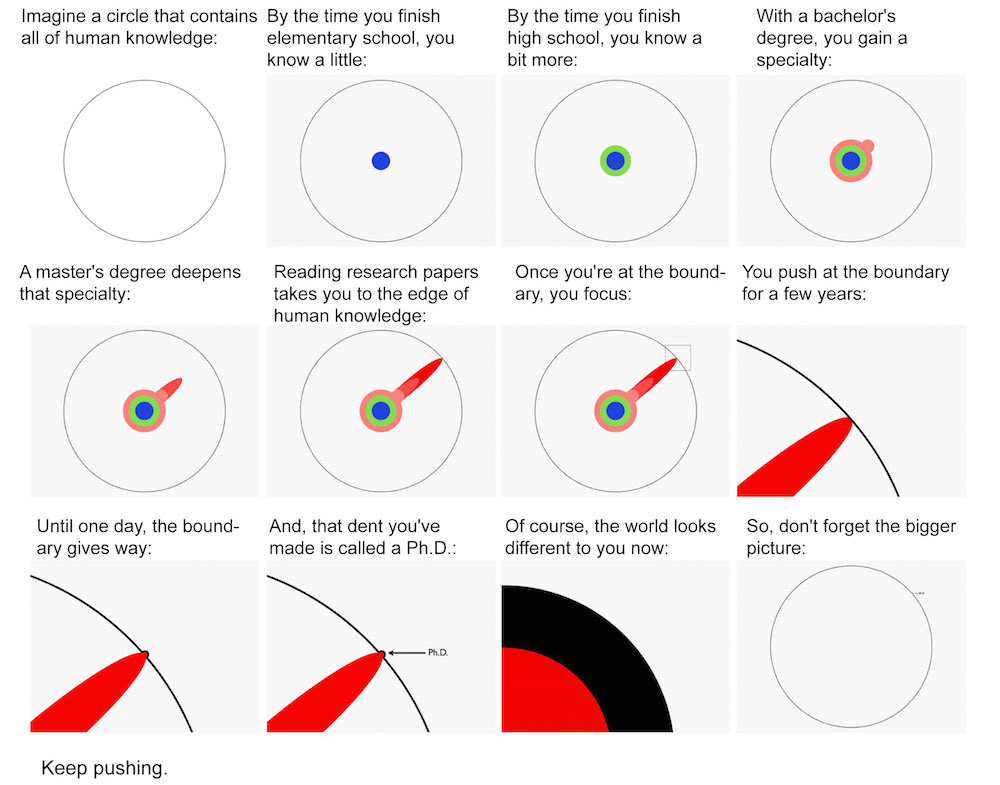

1. light enters your eyes
2. signals travel to visual cortex
3. cortical processing
4. perceptual experience

How can we understand visual representations?


“ambient” dimensions

- single neurons

- voxels from fMRI

- EEG/MEG sensors

- abstract dimensions

Figure: a stimulus evokes a neural response, described as a vector of neuron responses (neuron 1, ..., neuron N).

Figure: each neuron (neuron 1, neuron 2, ..., neuron N) defines one ambient dimension; all axes are orthogonal (90°).

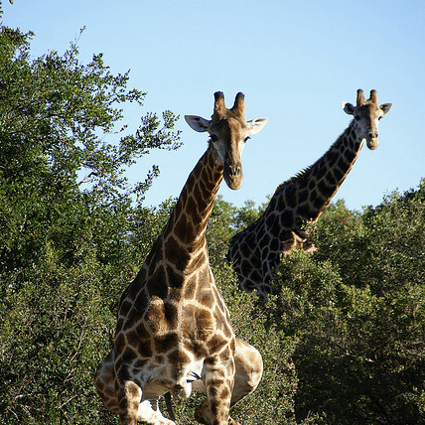

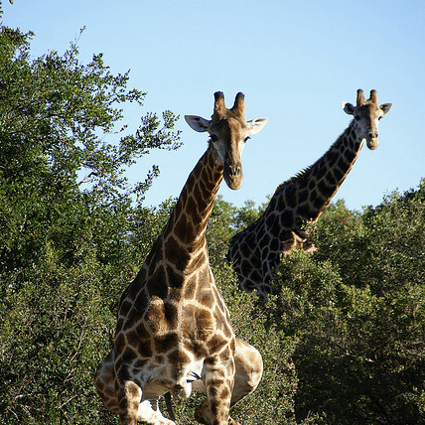

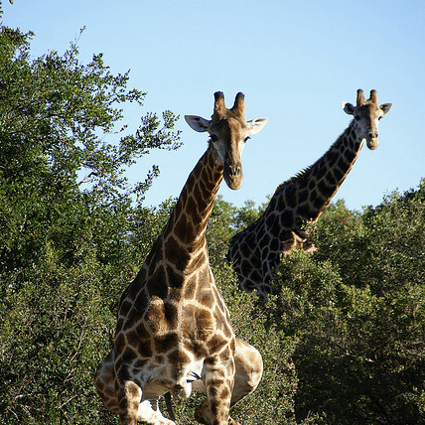

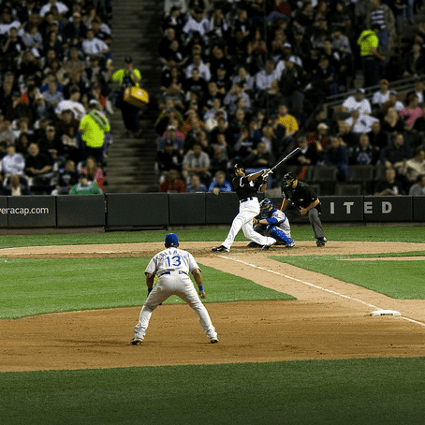

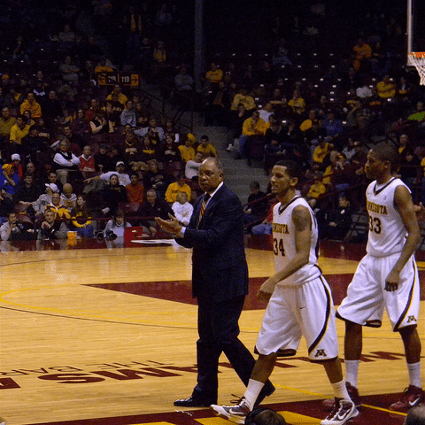

A common approach is to study latent dimensions.

Figure: a “latent” dimension running from images that have no people to images that have many people.


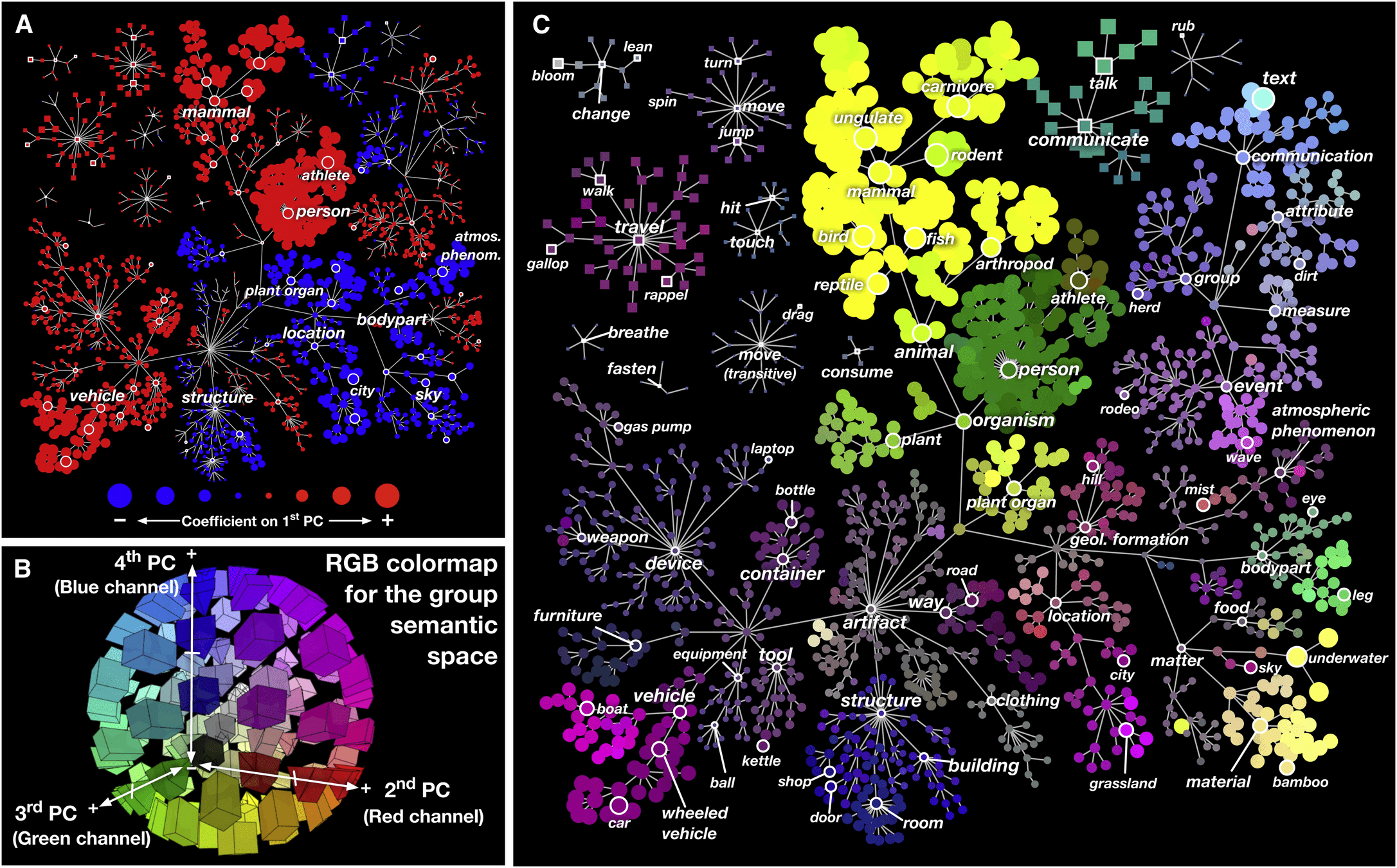

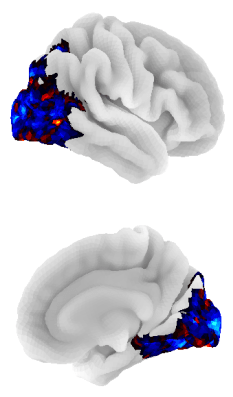

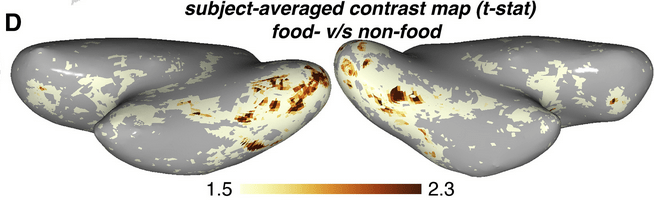

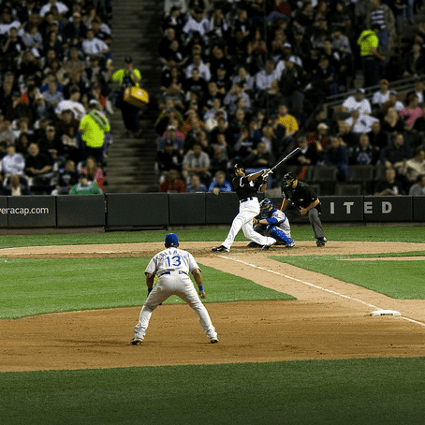

“animacy” dimension in human visual cortex


“food” dimension in human visual cortex


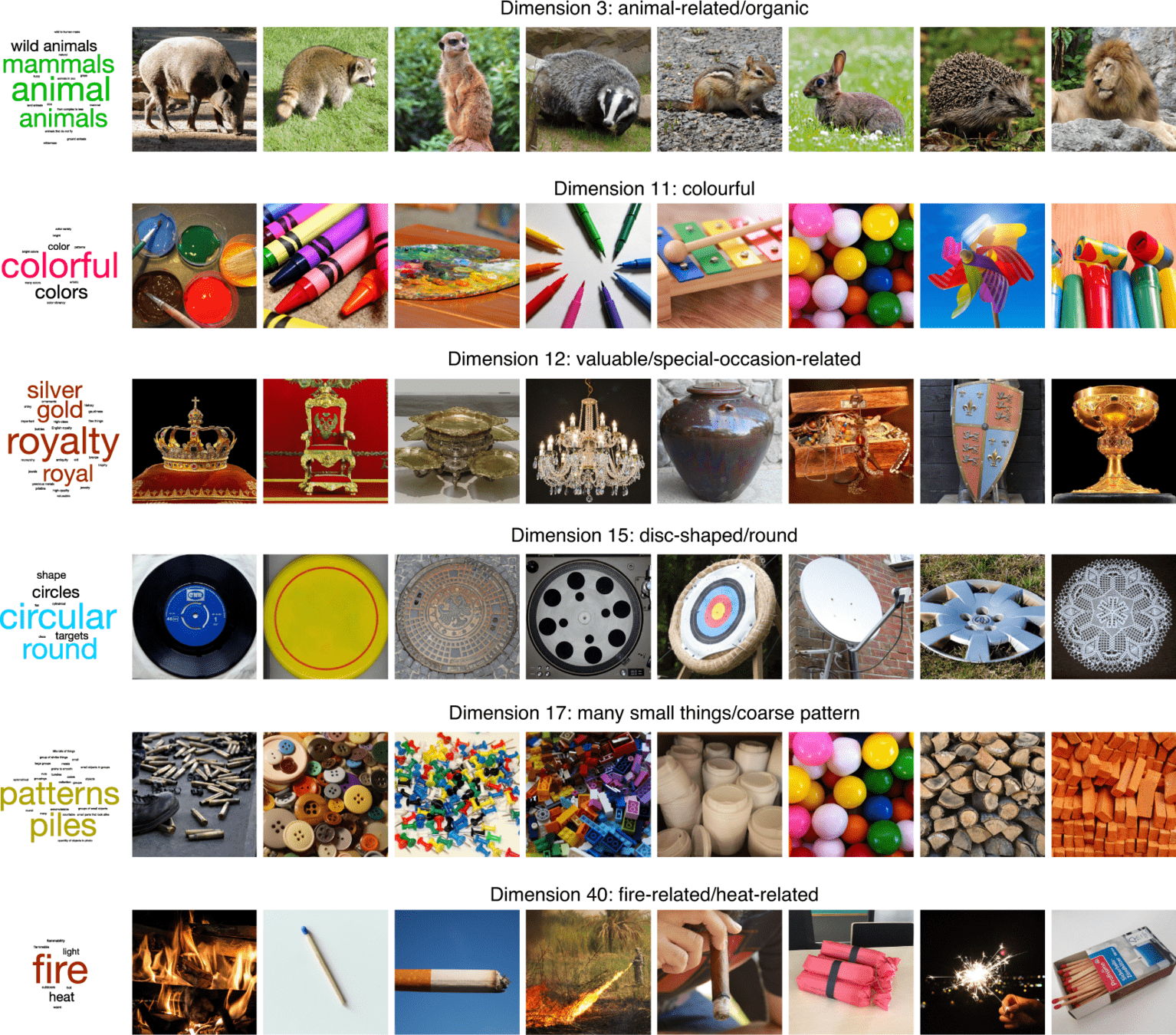

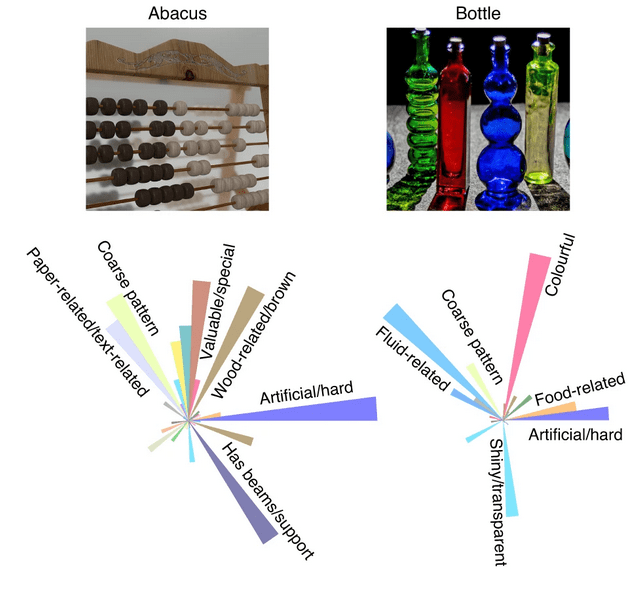

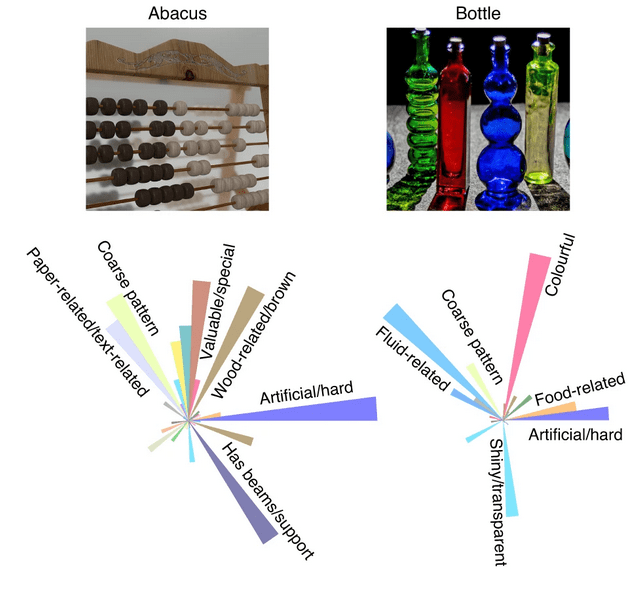

66 dimensions of mental object representations (inferred from behavioral data)


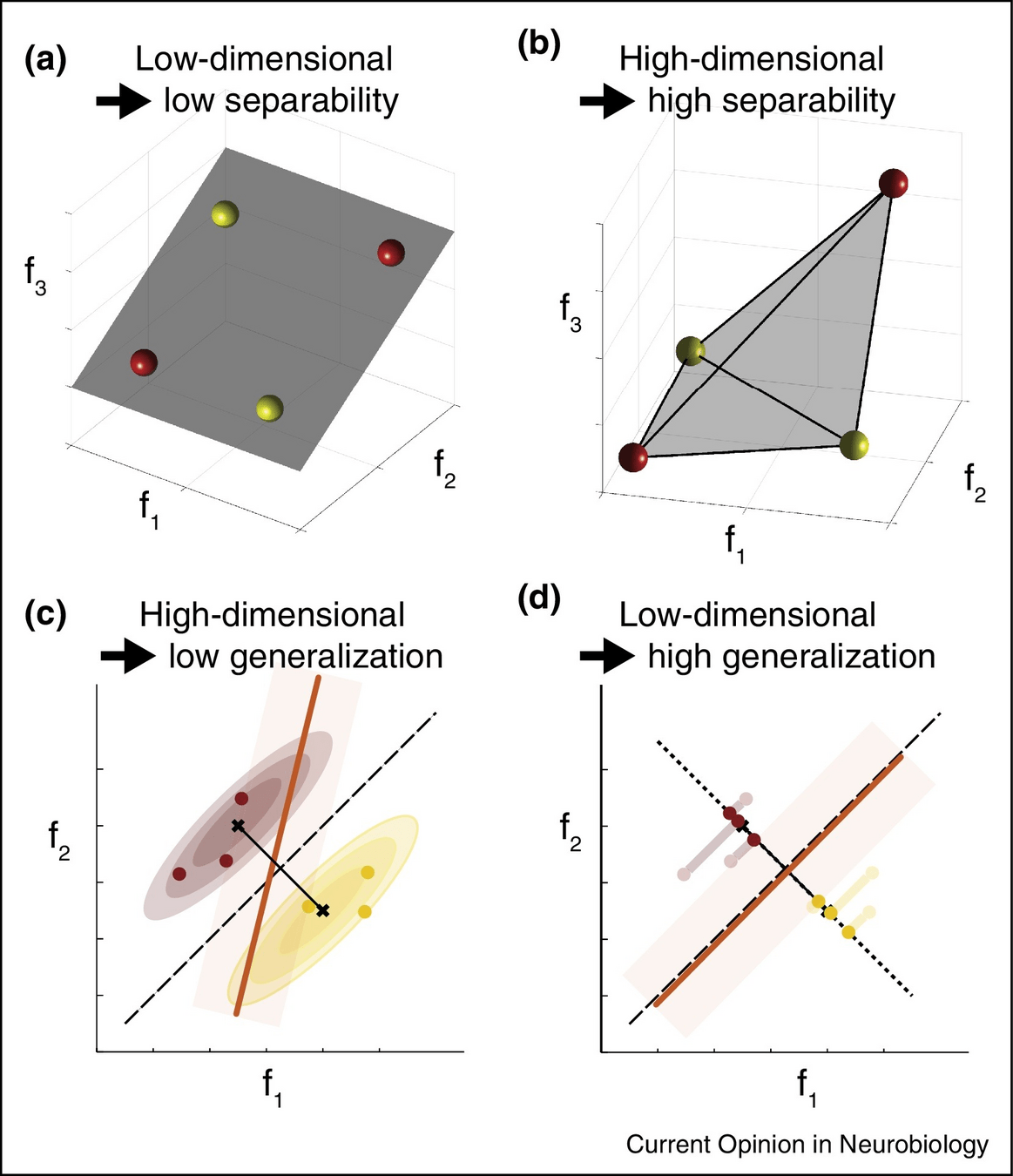

When does this approach make sense?

- data manifold spans a low-dimensional subspace
- data spans both ambient dimensions
- data lives in a 1-D subspace (a “latent dimension”)


Catalog all the latent dimensions!

When the data manifold spans a low-dimensional subspace, latent dimensions let us:

- remove noise

- visualize data

- extract salient patterns

“[...] the topographies in ventrotemporal cortex (VTC) that support a wide range of stimulus distinctions [...] can be modeled with 35 basis functions”

The visual code is found to be low-dimensional.

“Thus, our fMRI data are sufficient to recover semantic spaces for individual subjects that consist of 6 to 8 dimensions.”


Figure labels: 66 dimensions; methods include Principal Component Analysis (PCA), Non-negative Matrix Factorization (NMF), and Sparse Positive Similarity Embedding (SPoSE).

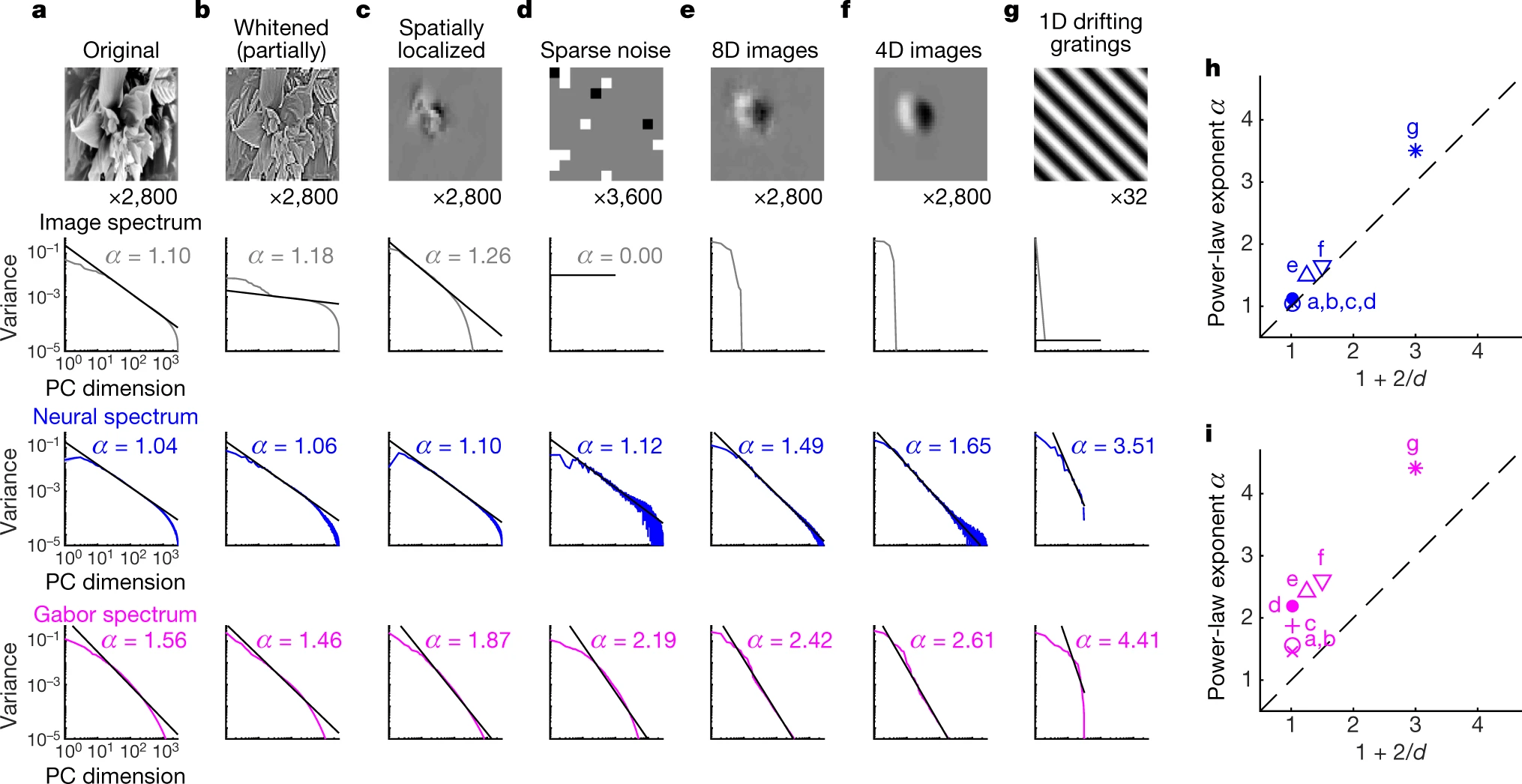

| dimensions | stimuli | system | method | |

|---|---|---|---|---|

| Haxby et al. (2011) | 35 | movies | human ventrotemporal cortex | PCA |

| Huth et al. (2012) | 6-8 (4 shared) | movies | human cortex | PCA |

| Lehky et al. (2014) | 60 (~87 estimate) | objects | macaque inferotemporal cortex | PCA |

| Tarhan et al. (2020) | 5 | visual actions | human cortex | k-means |

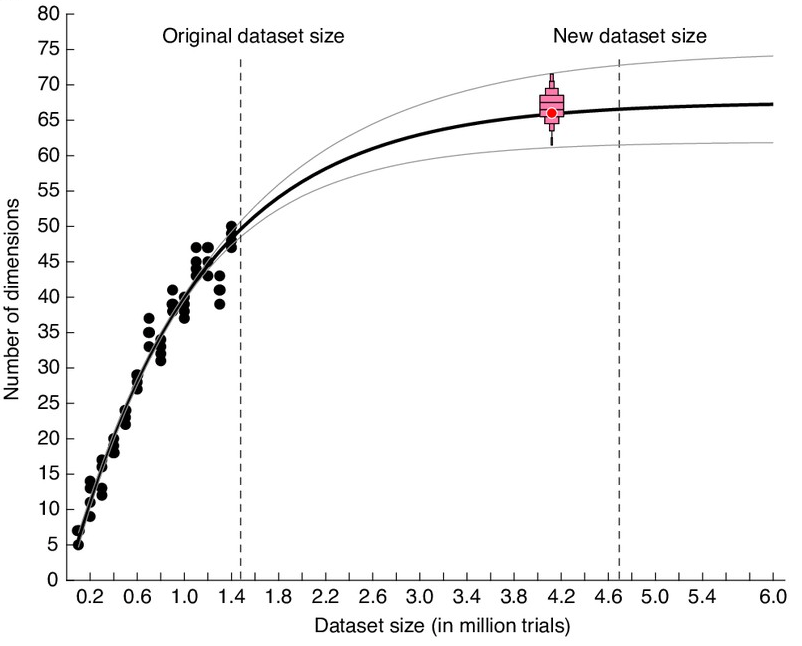

| Hebart et al. (2020) | 49 | objects | human behavior | SPoSE |

| Khosla et al. (2022) | 20 (5 reliable) | scenes | human ventral visual stream | NMF |

| Hebart et al. (2023) | 66 | objects | human behavior | SPoSE |


Converging evidence across stimuli, species, and methods suggests that visual representations are low-dimensional.

~87 dimensions of object representations in monkey inferotemporal (IT) cortex

“A progressive shrinkage in the intrinsic dimensionality of object response manifolds at higher cortical levels might simplify the task of discriminating different objects or object categories.”

Computational goal of cortex: compress dimensionality.

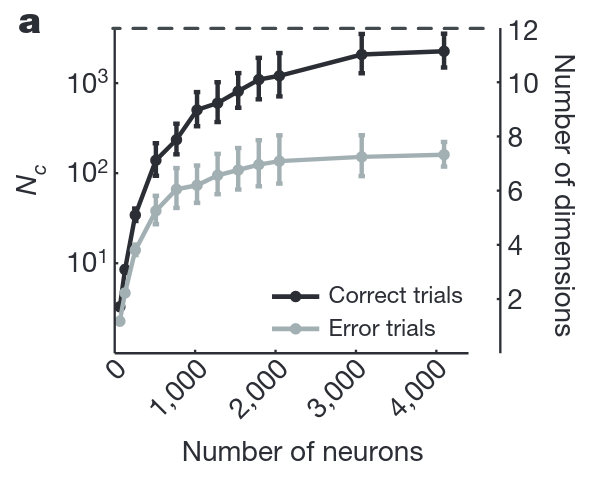

Previous work has found that dimensionality is bounded.

neural representations

mental representations

What if dimensionality doesn't saturate with dataset size?

But high-dimensional codes have benefits too.

high representational capacity, expressive

makes learning new tasks easier

Could visual representations be more high-dimensional than previously found?

We now have very large-scale, high-quality datasets.

Outline of today's talk

1. Visual cortex representations are high-dimensional.
2. They are self-similar over a huge range of spatial scales.
3. The same geometry underlies mental representations of images.

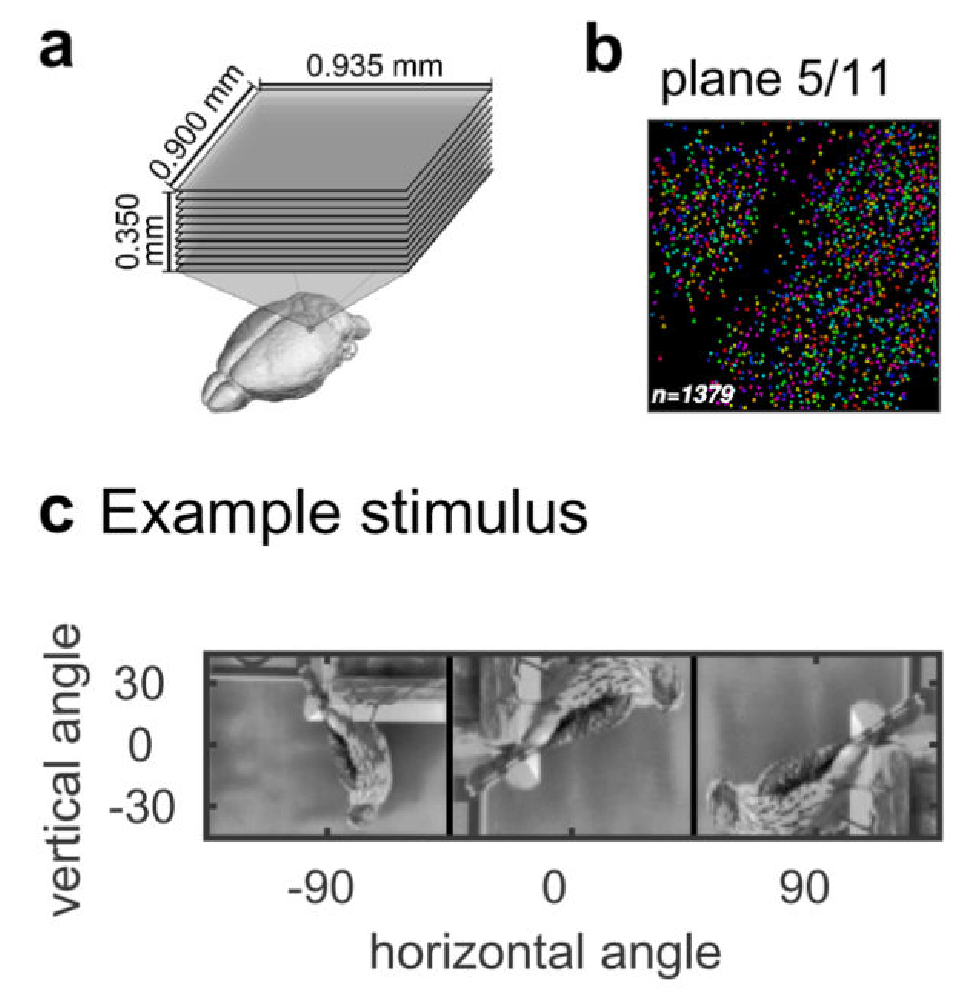

Gauthaman, Ménard & Bonner, in preparation

- 8 subjects
- 7 T fMRI
- continuous recognition task: "Have you seen this image before?"

Credit: NordicNeuroLab

fMRI (functional magnetic resonance imaging) is a coarse measure of neural activity, with a resolution of ~1 mm in space and ~3 s in time.

Ideal dataset to characterize dimensionality:

- very large-scale
- naturalistic stimuli
- complex scenes

How can we estimate dimensionality?

Principal Component Analysis is a rotation from ambient dimensions to latent dimensions:

- same geometry
- new perspective

Latent dimensions are ranked by variance: rank 1 has high variance, rank 2 has low variance.

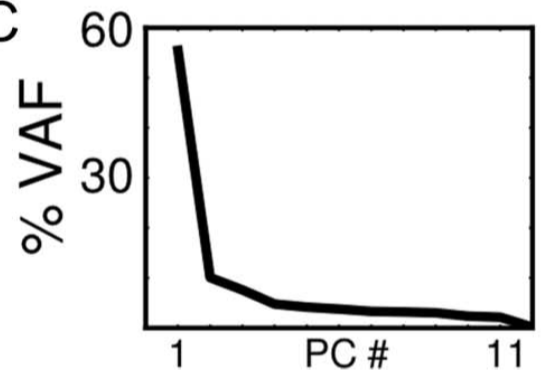

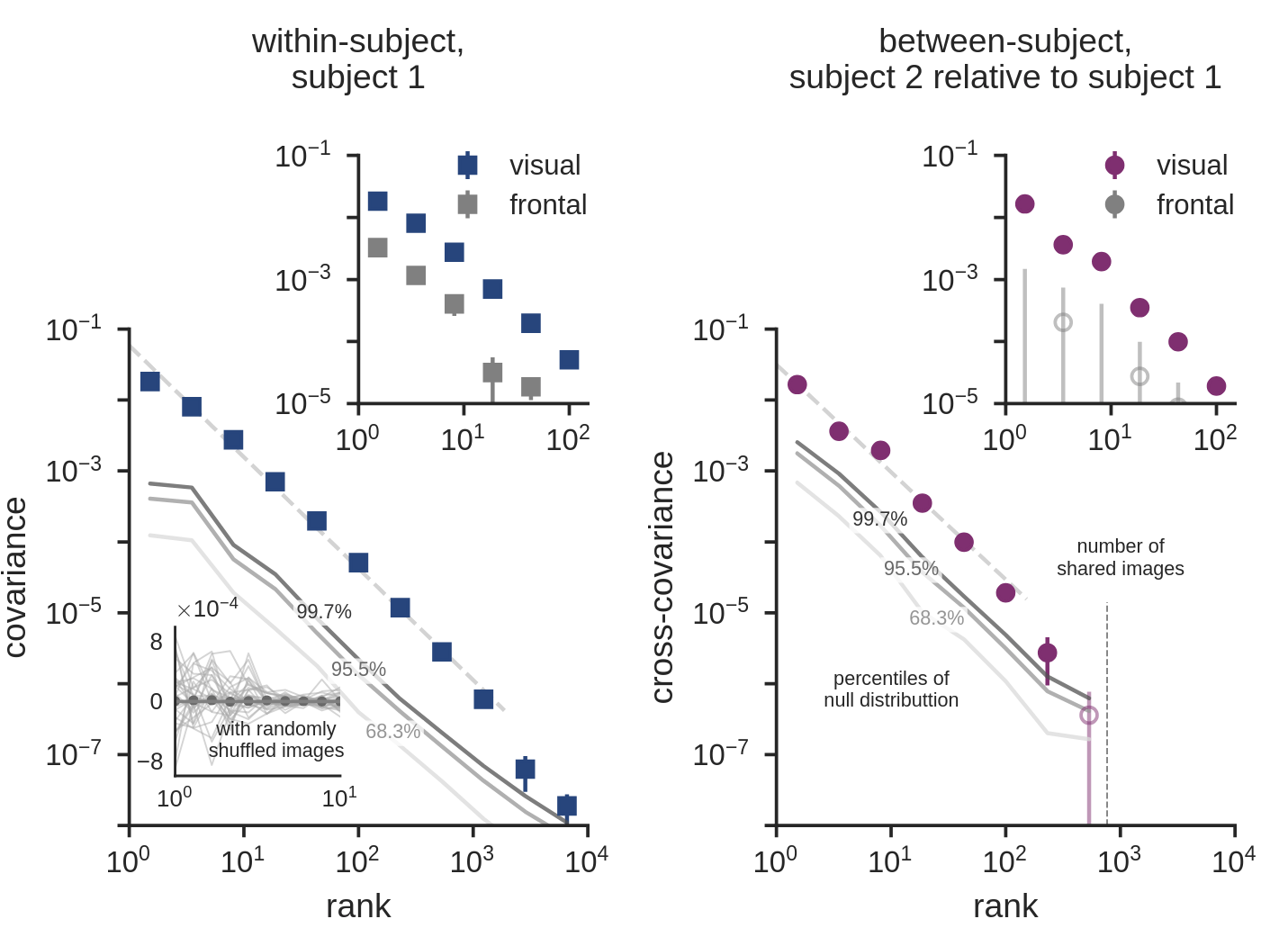

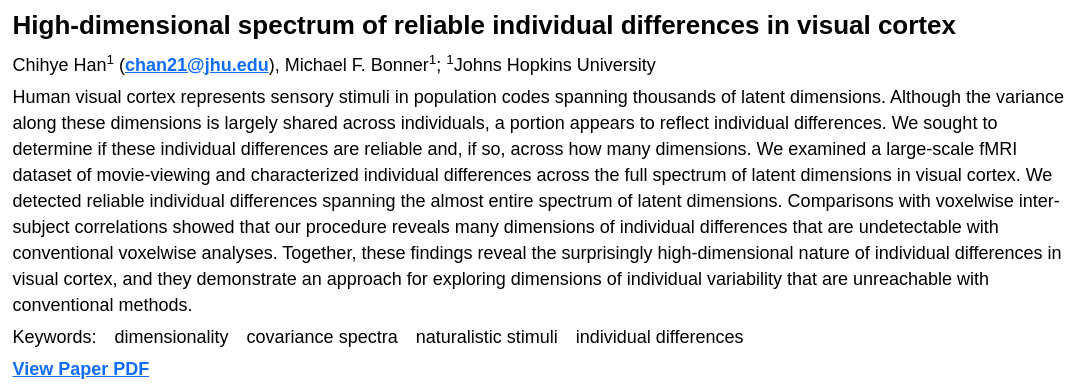

Key statistic: the covariance spectrum

The spectrum plots the variance along each latent dimension against the latent dimensions sorted by variance, on logarithmic scales spanning multiple orders of magnitude.

- low-dimensional code: a "core subset" of relevant dimensions, with no variance along other dimensions
- high-dimensional code: variance along many dimensions
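As a minimal sketch (not the authors' code), a covariance spectrum can be computed with NumPy by eigendecomposing the neuron-by-neuron covariance of a stimuli × neurons matrix. The function name `covariance_spectrum` and both simulated codes are hypothetical: one puts all variance in a 3-D "core subset", the other spreads variance over every dimension with a power-law (~1/rank) profile.

```python
import numpy as np


def covariance_spectrum(X):
    """Variance along each latent (principal) dimension, sorted in descending order.

    X: array of shape (n_stimuli, n_neurons).
    """
    Xc = X - X.mean(axis=0)                  # center each neuron (or voxel)
    cov = Xc.T @ Xc / (Xc.shape[0] - 1)      # neuron-by-neuron covariance matrix
    eigvals = np.linalg.eigvalsh(cov)        # eigenvalues of a symmetric matrix, ascending
    return eigvals[::-1]                     # latent dimensions sorted by variance


rng = np.random.default_rng(0)
n_stimuli, n_neurons = 2000, 50

# Low-dimensional code (hypothetical): all variance lies in a 3-D "core subset".
low_d = rng.standard_normal((n_stimuli, 3)) @ rng.standard_normal((3, n_neurons))

# High-dimensional code (hypothetical): variance ~ 1/rank along 50 orthogonal latent dimensions.
latent_std = np.arange(1, n_neurons + 1) ** -0.5
basis, _ = np.linalg.qr(rng.standard_normal((n_neurons, n_neurons)))
high_d = (rng.standard_normal((n_stimuli, n_neurons)) * latent_std) @ basis.T

low_spectrum = covariance_spectrum(low_d)
high_spectrum = covariance_spectrum(high_d)
```

On log-log axes, the first spectrum drops to numerical zero after rank 3, while the second declines roughly linearly across all 50 ranks.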

Should we just apply PCA?

Neural responses mix stimulus-related signal with trial-specific noise. We want the signal that generalizes:

- ... across stimulus repetitions
- ... to novel images

Figure: responses to the same stimuli on trial 1 and trial 2, each a stimuli × neurons (or voxels) matrix.

- Step 1: Learn latent dimensions
- Step 2: Evaluate reliable variance

If there is no reliable signal in the data, the expected value is 0.

Cross-decomposition measures stimulus-related signal.
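A sketch of the two-step procedure, assuming two repetitions (`trial1`, `trial2`) of the same stimuli and a random split of stimuli into training and test sets. The function name and the simulated data are hypothetical, and the talk does not specify the implementation. Step 1 takes the SVD of the training cross-covariance; Step 2 measures, on held-out stimuli, the covariance between the two repetitions' projections onto each pair of singular vectors. Because trial-specific noise is independent across repetitions, it contributes zero in expectation.

```python
import numpy as np


def cross_decomposition_spectrum(trial1, trial2, train_idx, test_idx):
    """Cross-validated covariance spectrum (sketch).

    trial1, trial2: arrays of shape (n_stimuli, n_voxels) holding responses to the
    same stimuli on two repetitions. train_idx, test_idx: disjoint stimulus indices.
    """
    def center(a):
        return a - a.mean(axis=0)

    # Step 1: learn latent dimensions from the training cross-covariance.
    x_train, y_train = center(trial1[train_idx]), center(trial2[train_idx])
    cross_cov = x_train.T @ y_train / (len(train_idx) - 1)
    u, _, vt = np.linalg.svd(cross_cov)

    # Step 2: project held-out stimuli onto each pair of singular vectors and
    # measure the covariance between the two repetitions (reliable variance).
    x_test, y_test = center(trial1[test_idx]), center(trial2[test_idx])
    proj_x = x_test @ u
    proj_y = y_test @ vt.T
    return (proj_x * proj_y).sum(axis=0) / (len(test_idx) - 1)


# Hypothetical data: a shared stimulus-related signal with variance ~1/rank,
# plus independent trial-specific noise (variance 1) on each repetition.
rng = np.random.default_rng(1)
n_stimuli, n_voxels = 4000, 40
signal = rng.standard_normal((n_stimuli, n_voxels)) * np.arange(1, n_voxels + 1) ** -0.5
trial1 = signal + rng.standard_normal((n_stimuli, n_voxels))
trial2 = signal + rng.standard_normal((n_stimuli, n_voxels))

order = rng.permutation(n_stimuli)
spectrum = cross_decomposition_spectrum(trial1, trial2, order[:2000], order[2000:])
```

In this toy example the estimated spectrum starts near 1 and decays with rank, tracking the shared signal; the unit-variance noise on each repetition does not inflate it.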

The math of cross-decomposition

- the (cross-)covariance matrix
- its singular value decomposition: "splitting a matrix into the sum of rank-1 matrices"

- Procrustes

- PLS-SVD (Partial Least Squares Singular Value Decomposition)

- hyperalignment (Haxby et al., 2011)

- the optimal rotation that aligns X to Y

- preserves geometry (shape; spectrum)

- "spectral decomposition of the cross-covariance"
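A small NumPy check of these statements, on hypothetical data where `Y` is an orthogonally transformed, slightly noisy copy of `X`. The SVD writes the cross-covariance as a sum of rank-1 matrices, and U V^T from that SVD is the orthogonal Procrustes solution, the orthogonal transformation (a rotation, possibly with a reflection) that best aligns X to Y. Because it is orthogonal, it preserves the singular values (the spectrum) of X. This is an illustration, not the authors' implementation.

```python
import numpy as np

rng = np.random.default_rng(2)
n, d = 500, 6
X = rng.standard_normal((n, d))
Q, _ = np.linalg.qr(rng.standard_normal((d, d)))     # hypothetical "true" orthogonal transform
Y = X @ Q + 0.01 * rng.standard_normal((n, d))      # Y: transformed, slightly noisy copy of X

Xc, Yc = X - X.mean(axis=0), Y - Y.mean(axis=0)
C = Xc.T @ Yc / (n - 1)                             # cross-covariance matrix (d x d)
U, s, Vt = np.linalg.svd(C)

# The SVD splits C into a sum of rank-1 matrices s_i * u_i v_i^T.
rank1_sum = sum(s[i] * np.outer(U[:, i], Vt[i]) for i in range(d))

# Orthogonal Procrustes: R = U V^T is the orthogonal matrix minimizing ||Xc R - Yc||_F.
R = U @ Vt
```

Here `R` recovers `Q` closely, and `X @ R` has exactly the same singular values as `X`.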

PCA vs cross-decomposition (logarithmic binning + 8-fold cross-validation)

If there is no stimulus-related signal, PCA still returns a spectrum of positive variances fit to the noise, but cross-decomposition has an expected value of 0.
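A toy simulation of this null case (our own illustration, with independent Gaussian noise standing in for two repetitions that share no stimulus-related signal). PCA of one repetition still yields strictly positive variances, while the cross-decomposition values on held-out stimuli scatter around 0.

```python
import numpy as np

rng = np.random.default_rng(3)
n_stimuli, n_voxels = 2000, 30

# Null case: two "repetitions" of pure, independent noise (no stimulus-related signal).
trial1 = rng.standard_normal((n_stimuli, n_voxels))
trial2 = rng.standard_normal((n_stimuli, n_voxels))
train, test = slice(0, 1000), slice(1000, None)

# PCA on one repetition: every eigenvalue is positive, so noise alone produces a spectrum.
pca_spectrum = np.linalg.eigvalsh(np.cov(trial1[train], rowvar=False))[::-1]

# Cross-decomposition: learn paired dimensions on training stimuli (the noise is
# zero-mean by construction, so centering is skipped) ...
u, _, vt = np.linalg.svd(trial1[train].T @ trial2[train])
# ... then measure the covariance of held-out projections; its expected value is 0.
cross_spectrum = np.mean((trial1[test] @ u) * (trial2[test] @ vt.T), axis=0)
```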

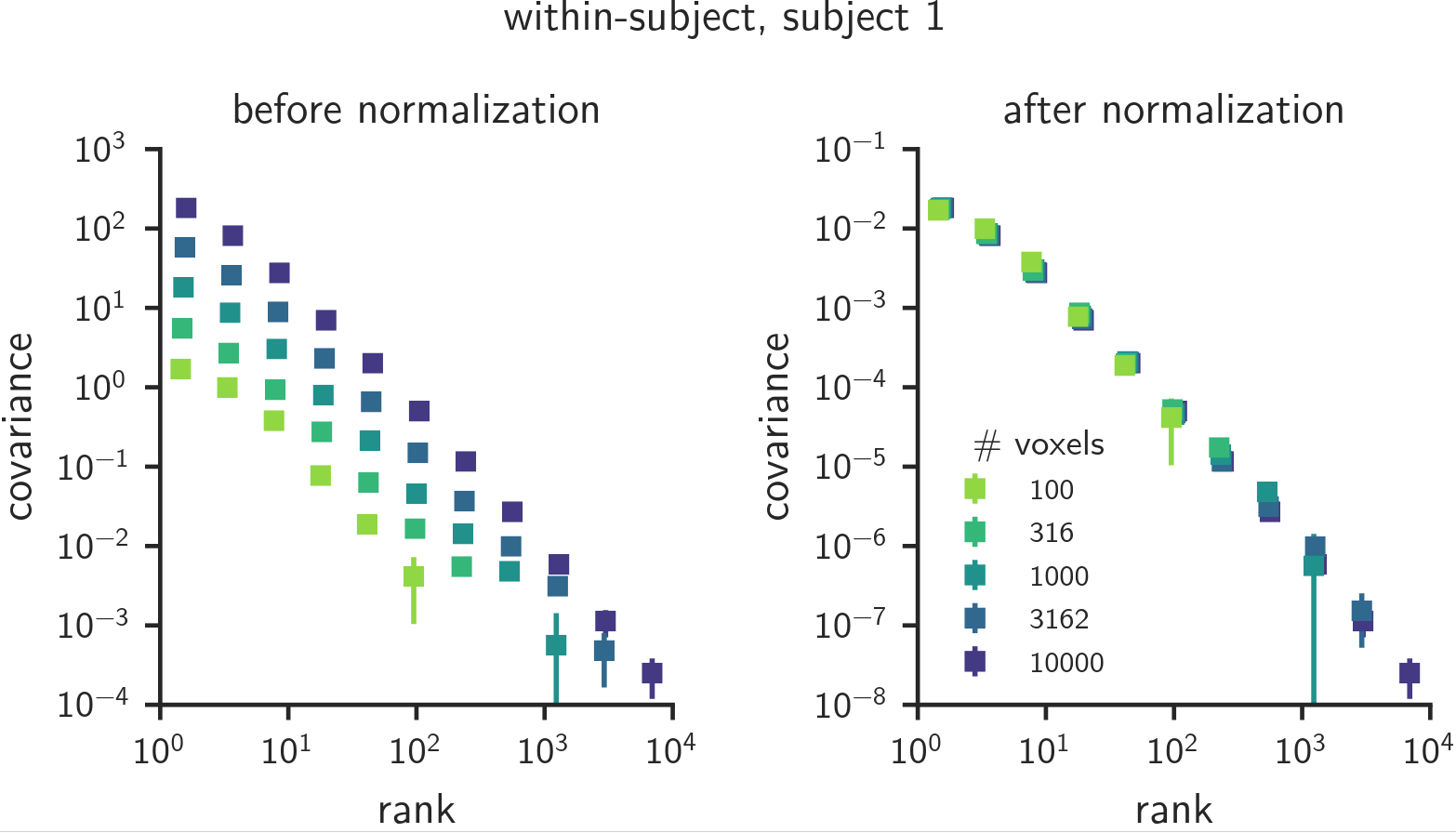

The covariance is normalized by the number of voxels.

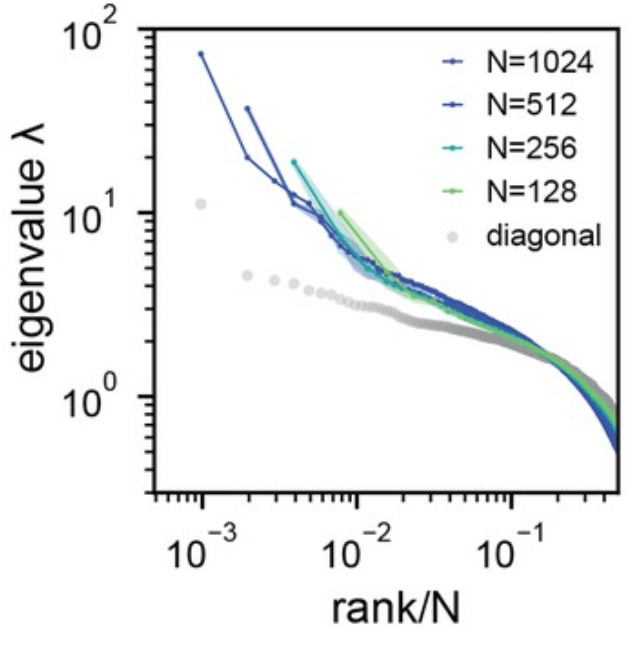

The spectrum depends on the size of the dataset.

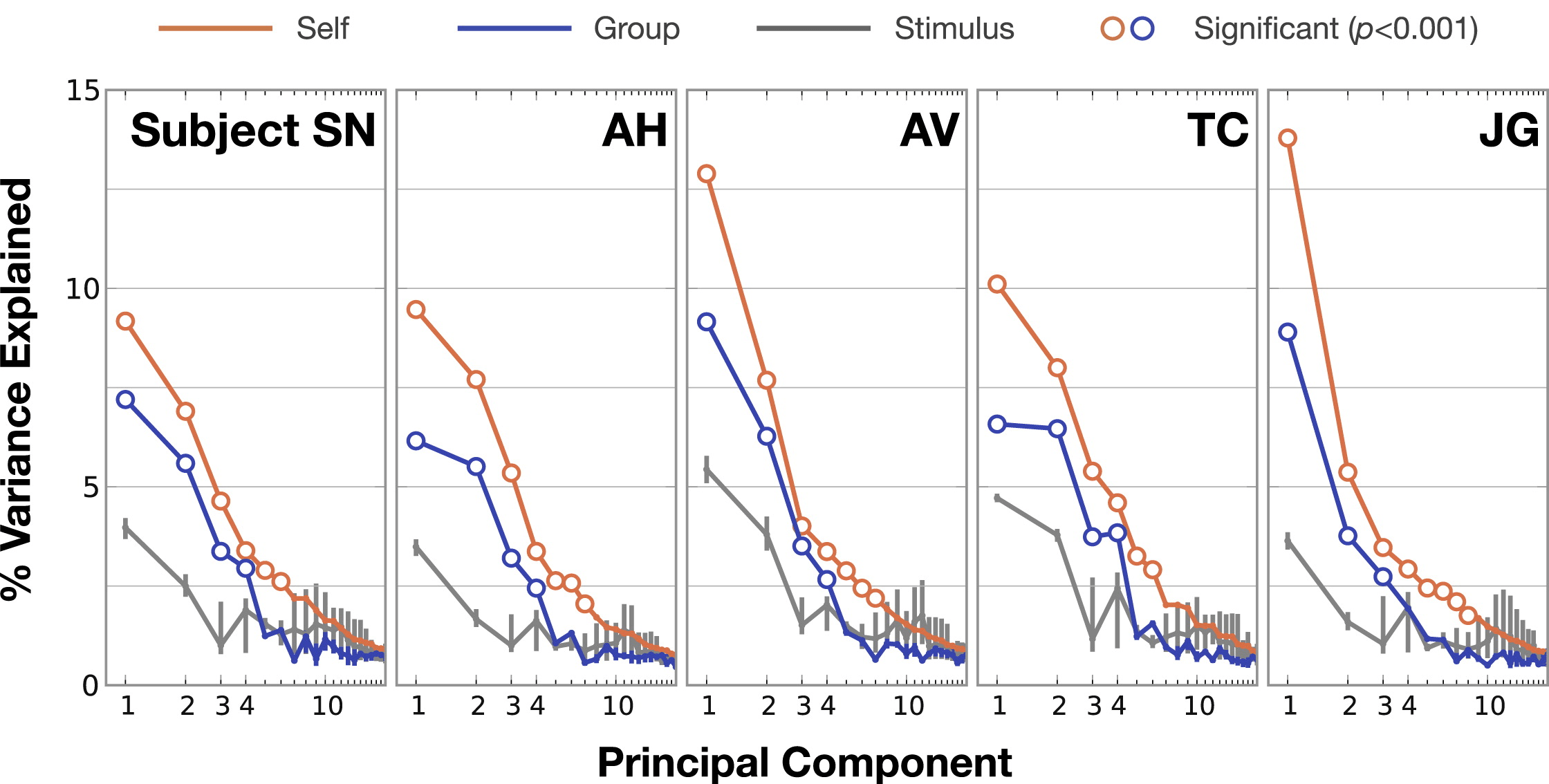

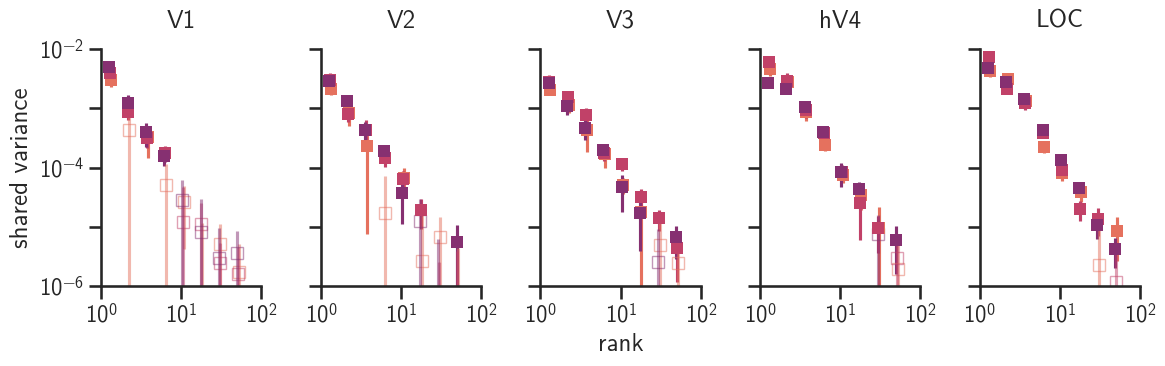

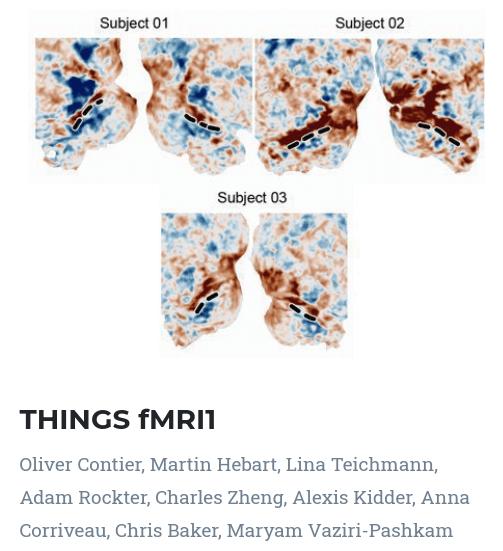

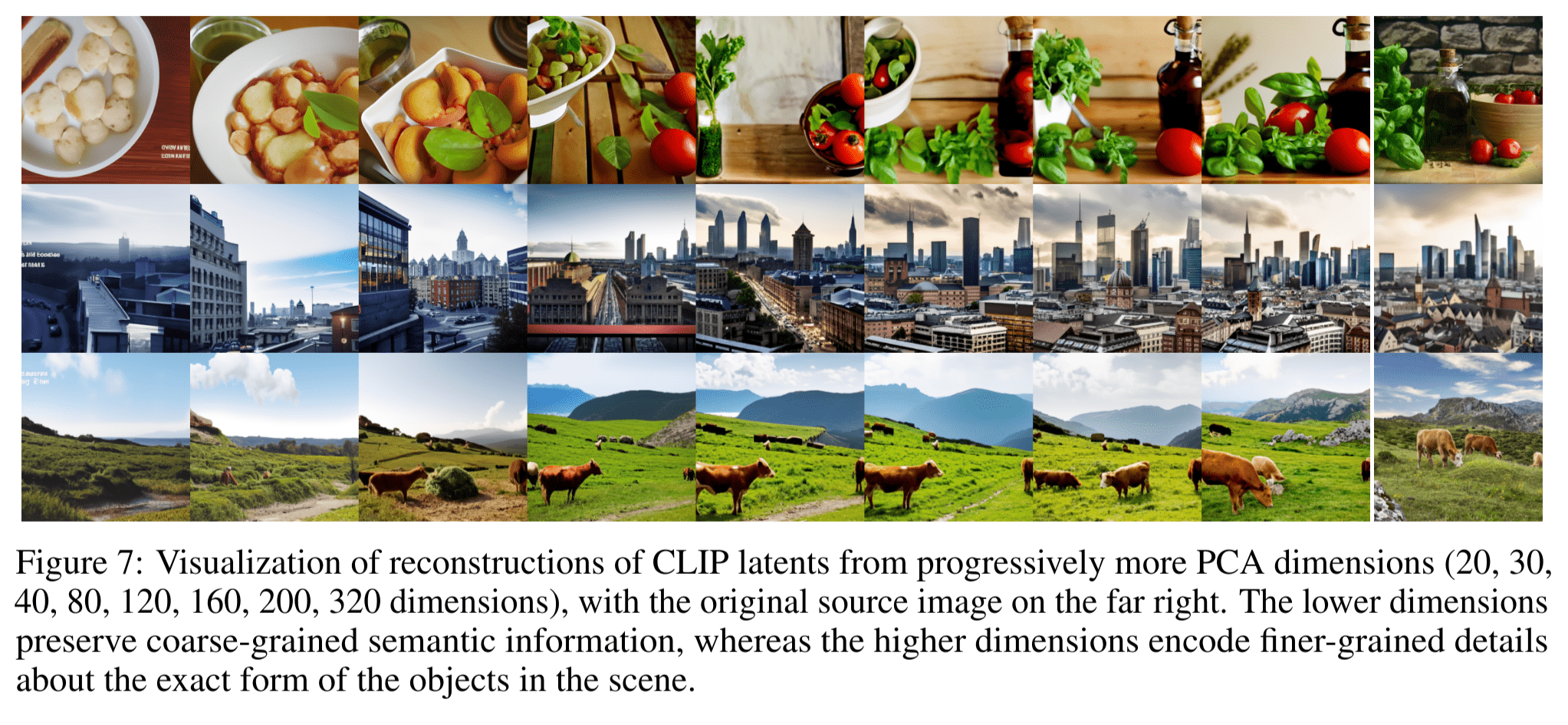

Our cross-validated covariance spectra

- x-axis: latent dimensions sorted by variance on the training set
- y-axis: reliable variance on the test set
- binned logarithmically to increase SNR
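One plausible way to bin a spectrum logarithmically is sketched below; the bin count, the `log_bin` name, and the use of the geometric-mean rank as the bin center are our assumptions. Values whose ranks fall in the same log-spaced bin are averaged, so many noisy high-rank values collapse into one point with higher SNR, and low-rank bins that contain no integer rank are skipped.

```python
import numpy as np


def log_bin(spectrum, n_bins=10):
    """Average a spectrum within logarithmically spaced rank bins.

    Returns (bin_centers, bin_means); ranks start at 1 and empty bins are skipped.
    """
    ranks = np.arange(1, len(spectrum) + 1)
    edges = np.logspace(0, np.log10(len(spectrum) + 1), n_bins + 1)
    centers, means = [], []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (ranks >= lo) & (ranks < hi)
        if in_bin.any():
            centers.append(np.exp(np.log(ranks[in_bin]).mean()))  # geometric-mean rank
            means.append(spectrum[in_bin].mean())
    return np.array(centers), np.array(means)


# Hypothetical noisy power-law spectrum over 1,000 latent dimensions.
rng = np.random.default_rng(4)
ranks = np.arange(1, 1001)
noisy = ranks ** -1.0 * (1 + 0.5 * rng.standard_normal(ranks.size))
centers, means = log_bin(noisy)
```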

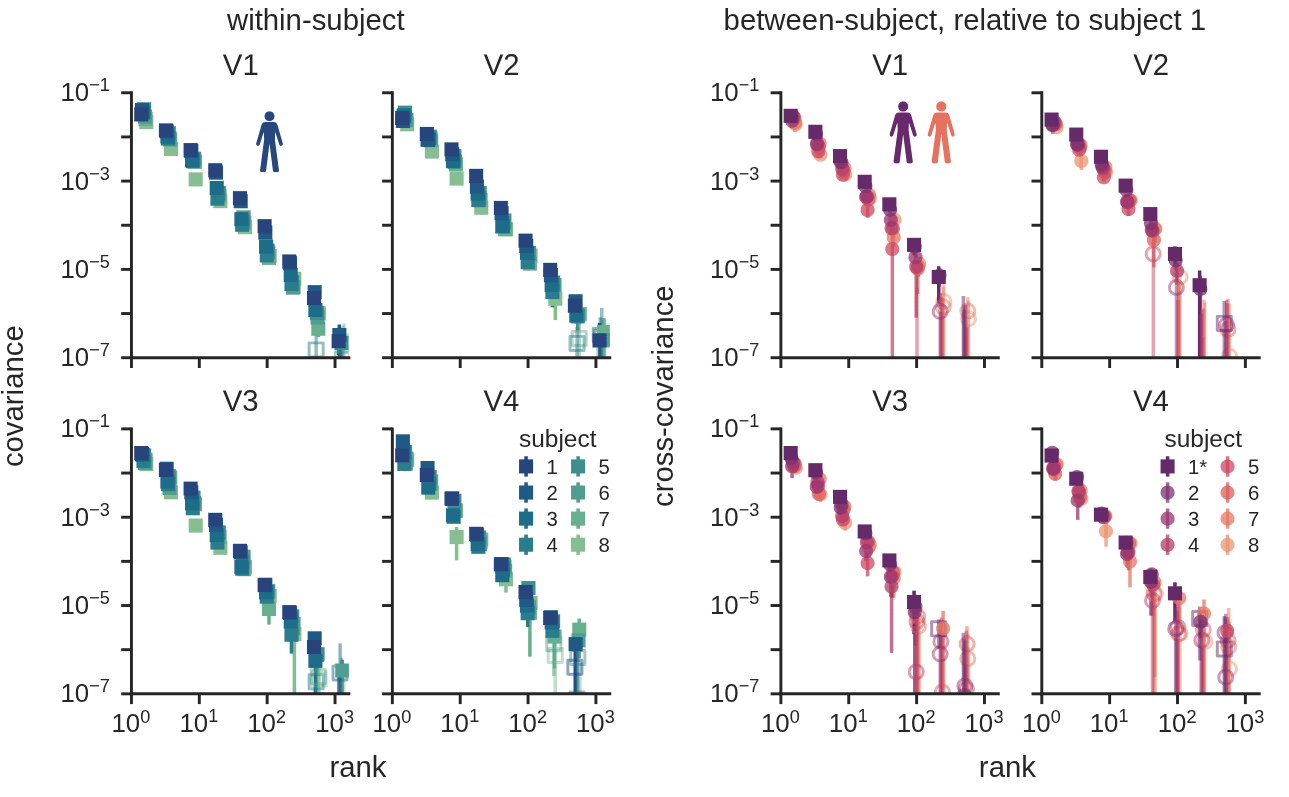

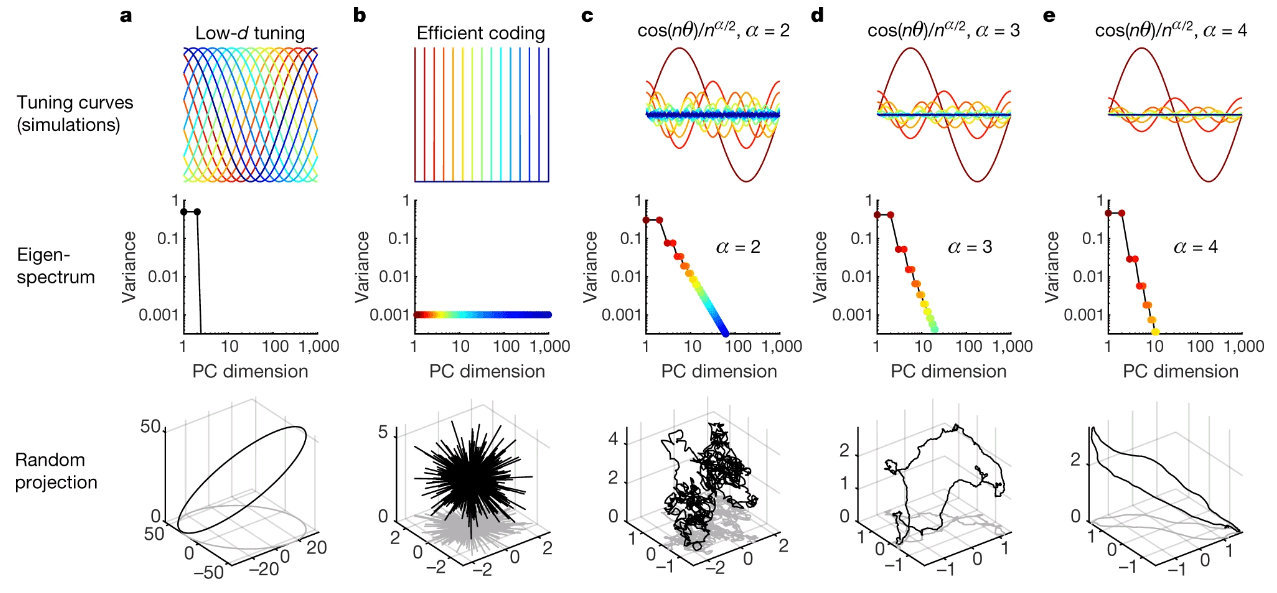

Visual cortex has power-law covariance spectra.

- Not limited to a “core subset”: all dimensions are used, but weighted differently.
- Scale-free: all dimensions have variance distributed in the same way.
- No evidence of a break: more data, more dimensions?
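A simple way to quantify a power law is a least-squares line fit in log-log coordinates, variance ≈ c · rank^(−α). This is a sketch with a hypothetical `fit_power_law` helper, not necessarily the estimator used in the talk; log-log regression is sensitive to the noisy tail, so in practice one would fit binned, cross-validated spectra over a chosen rank range.

```python
import numpy as np


def fit_power_law(spectrum, min_rank=1, max_rank=None):
    """Estimate alpha and c in variance ~ c * rank**(-alpha) by a least-squares
    line fit in log-log coordinates. Assumes all fitted values are positive."""
    ranks = np.arange(1, len(spectrum) + 1)
    max_rank = len(spectrum) if max_rank is None else max_rank
    keep = (ranks >= min_rank) & (ranks <= max_rank)
    slope, intercept = np.polyfit(np.log10(ranks[keep]), np.log10(spectrum[keep]), deg=1)
    return -slope, 10 ** intercept


ranks = np.arange(1, 1001)
rng = np.random.default_rng(5)
# Hypothetical spectrum: exact power law with index 1, times log-normal noise.
spectrum = 2.0 * ranks ** -1.0 * np.exp(0.1 * rng.standard_normal(ranks.size))
alpha, c = fit_power_law(spectrum)
```

A "break" would show up as a systematic deviation from the fitted line at high ranks; restricting `min_rank`/`max_rank` lets one compare fits on either side of a suspected break.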

Is this an artifact of analyzing a large ROI?

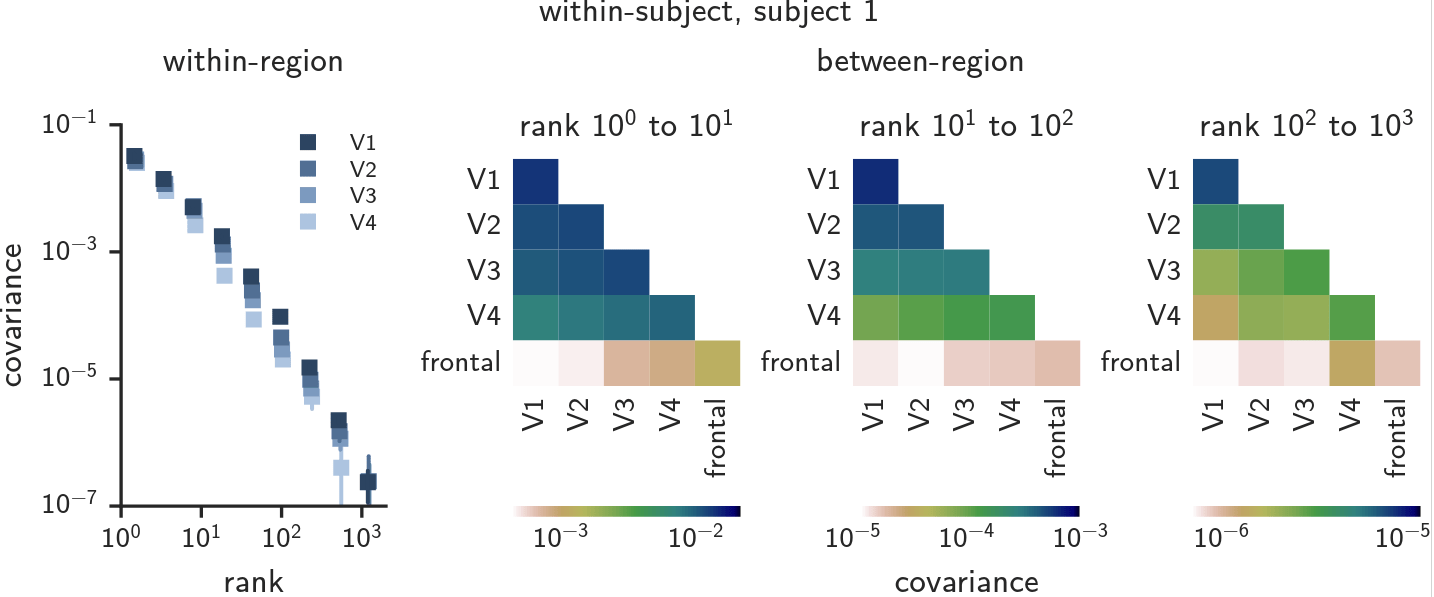

High-dimensional across multiple levels of the visual hierarchy

| study | dimensions | stimuli | system | method |
|---|---|---|---|---|
| Huth et al. (2012) | 6-8 (4 shared) | movies | human cortex | PCA |
| Khosla et al. (2022) | 20 (5 reliable) | scenes | human ventral visual stream | NMF |

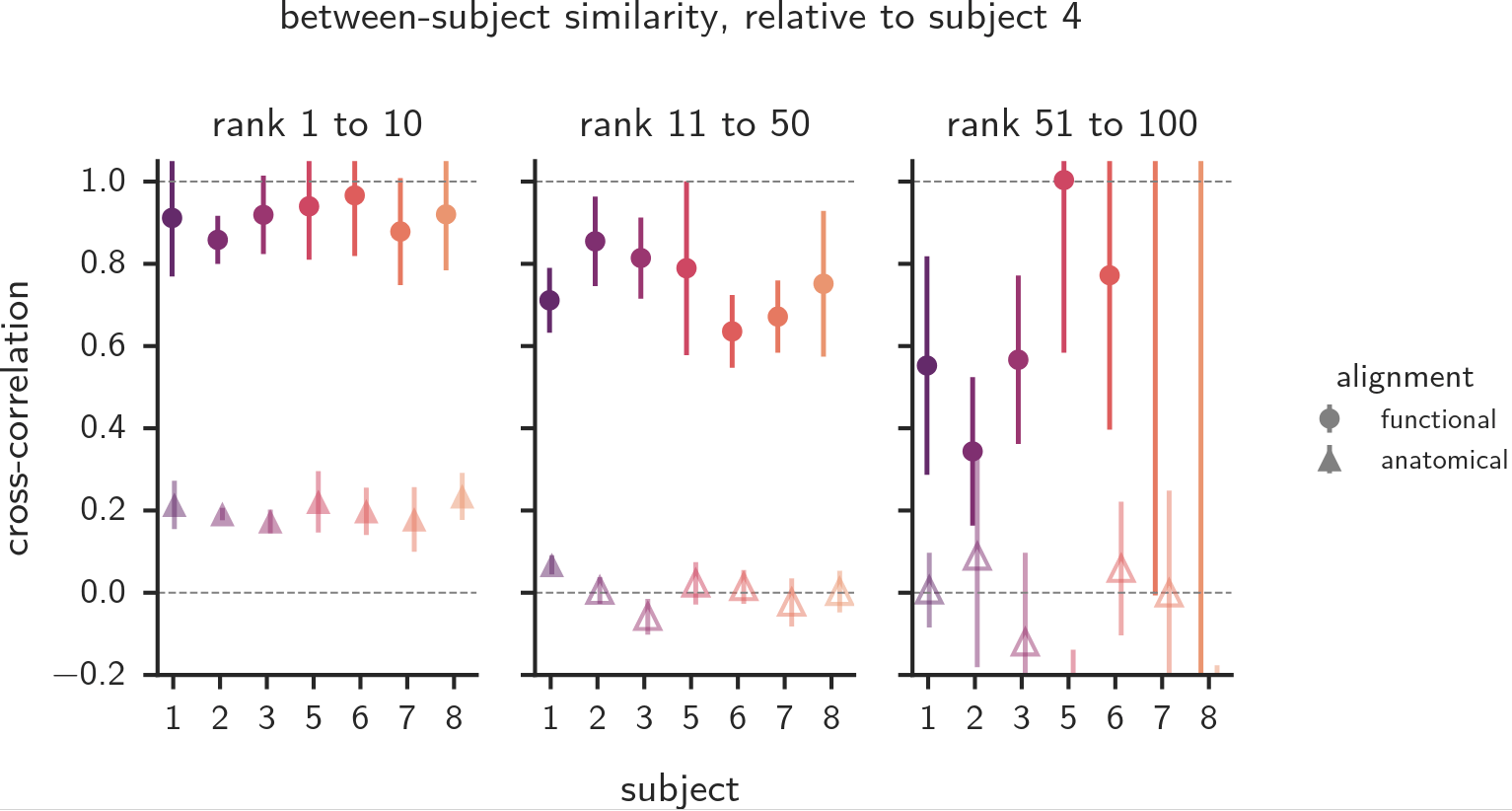

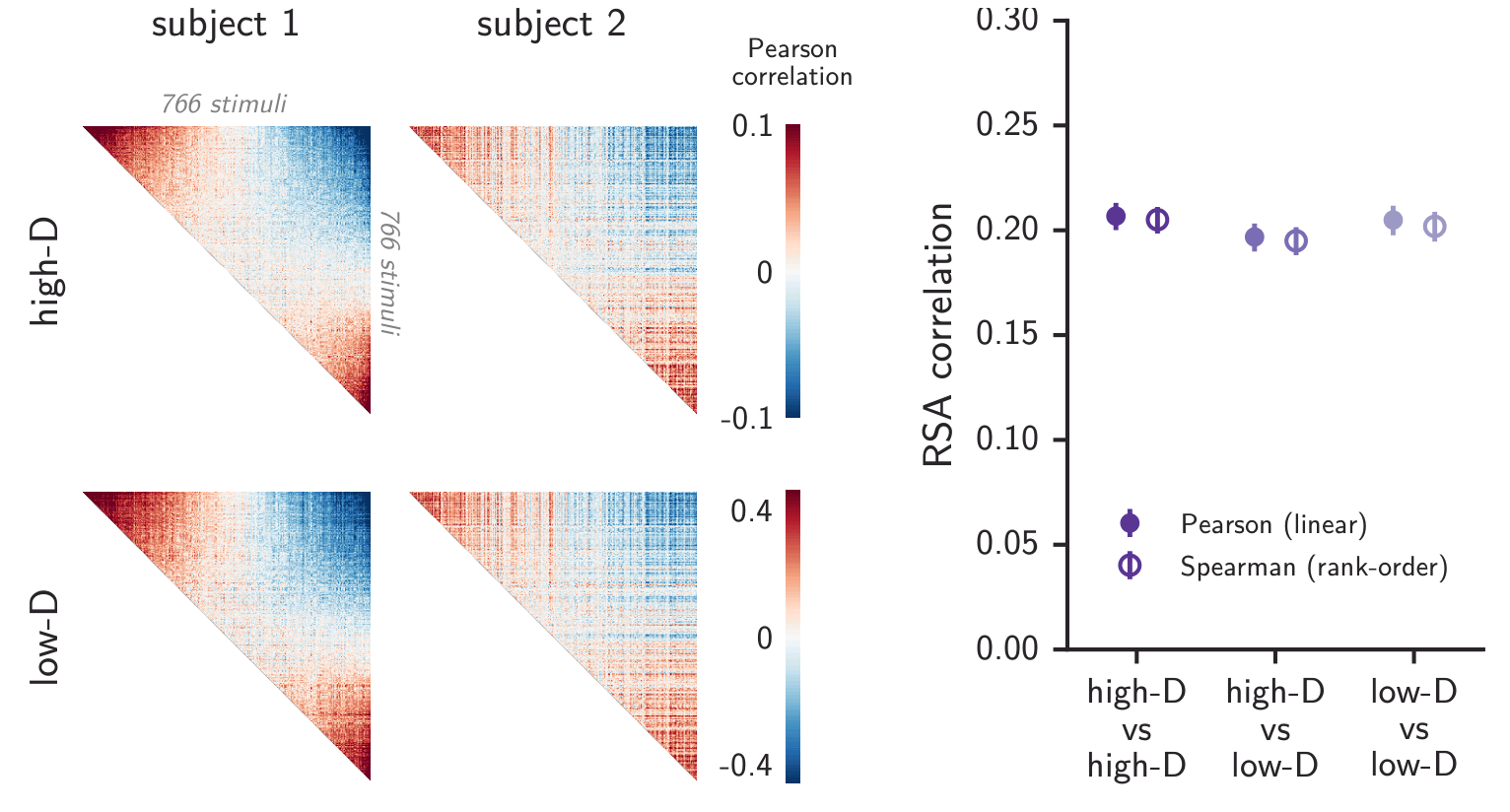

Is this high-dimensional structure shared across people?

- reliable, and shared
- reliable, but idiosyncratic

Cross-decomposition still works! We want the signal that generalizes:

- ... across stimulus repetitions
- ... to novel images
- ... across participants

Figure: responses to the same stimuli in subject 1 and subject 2, each a stimuli × neurons (or voxels) matrix.

- Step 1: Learn latent dimensions
- Step 2: Evaluate reliable variance

Functional – not anatomical – alignment is required

Cross-individual covariance spectra

Shared high-dimensional structure across individuals

reliable, and shared

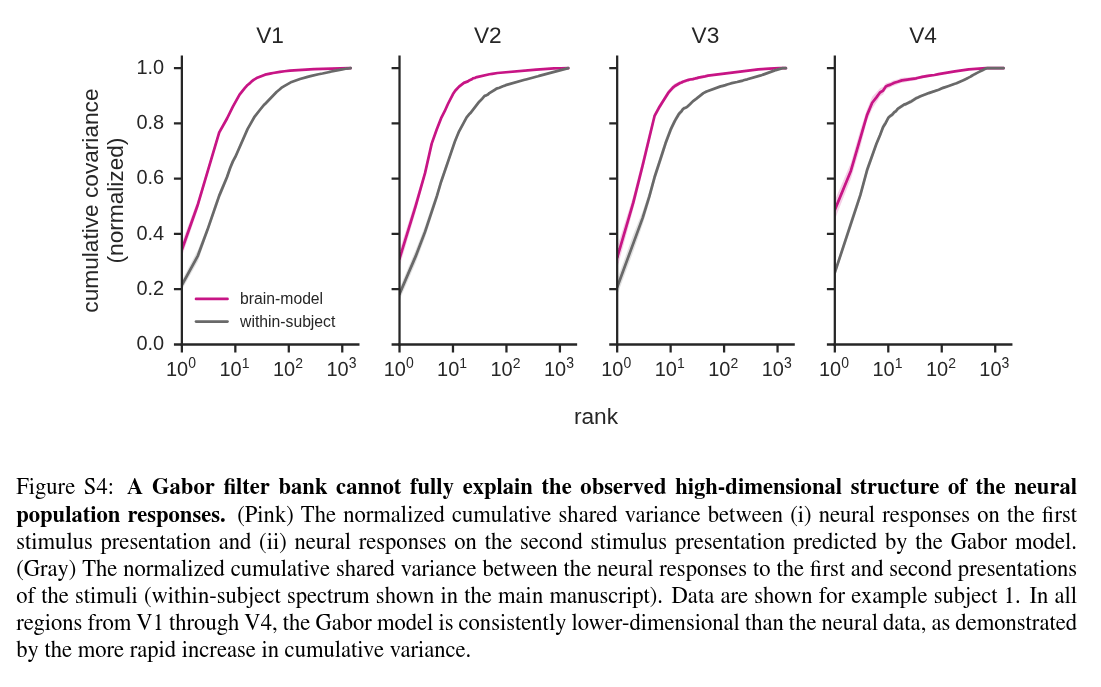

Are these spectra just an artifact of the method used?

No, they represent real high-dimensional signal!

1. Visual cortex representations are high-dimensional.

- high-dimensional
- power-law covariance
- striking universality across individuals

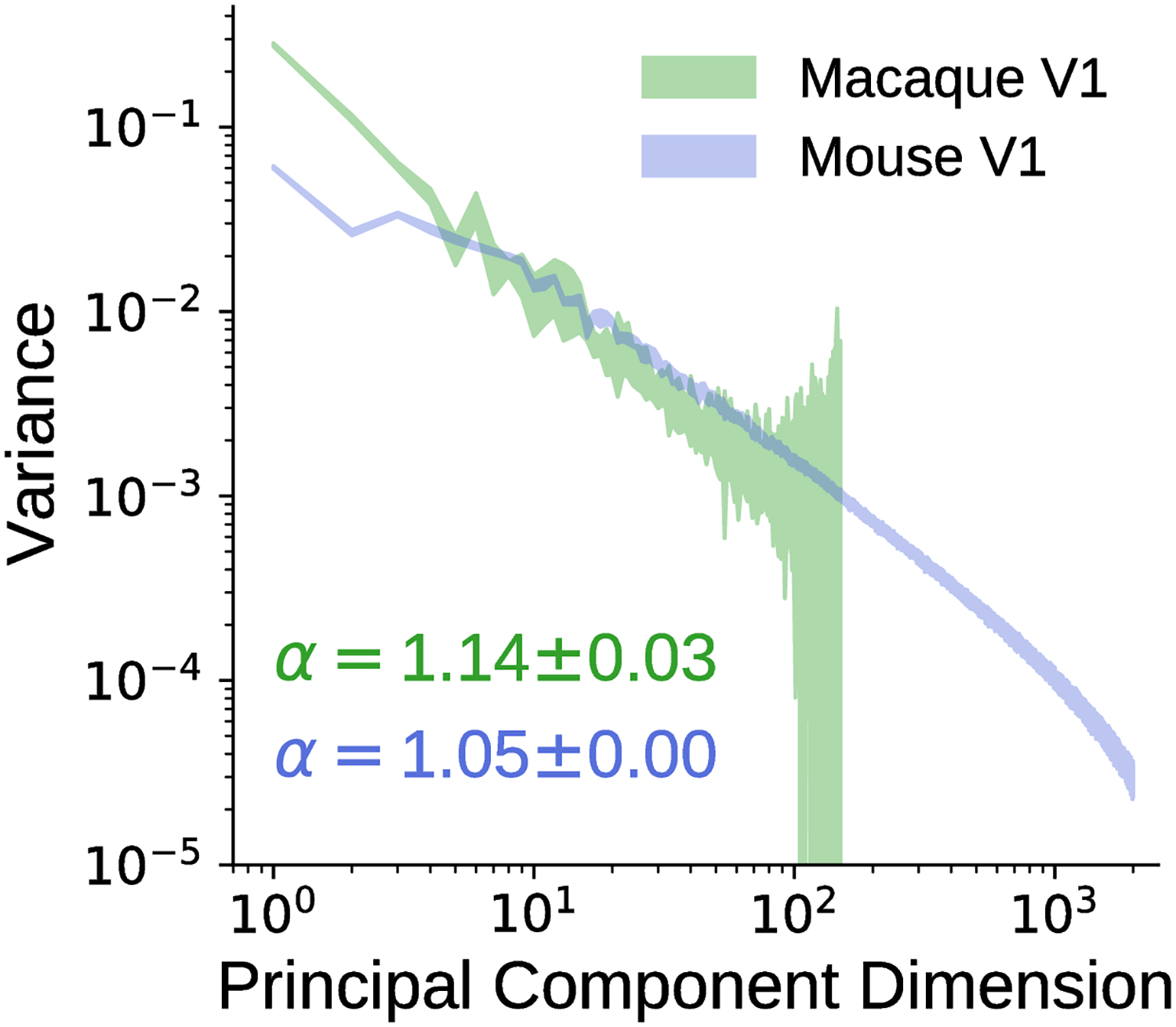

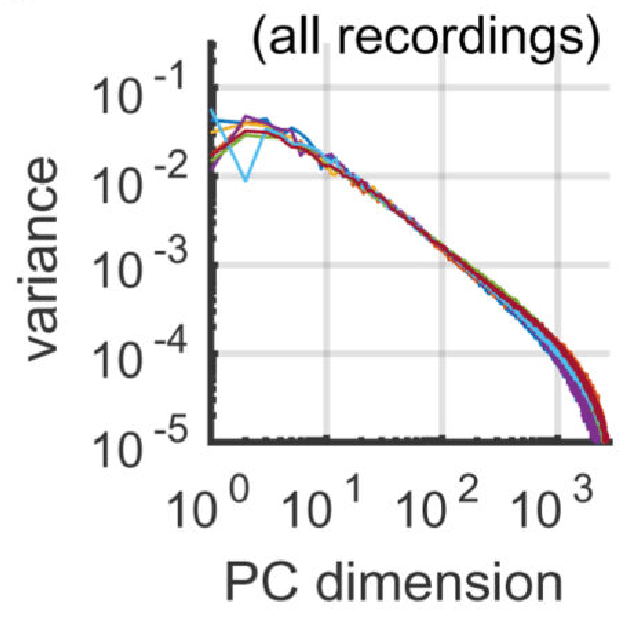

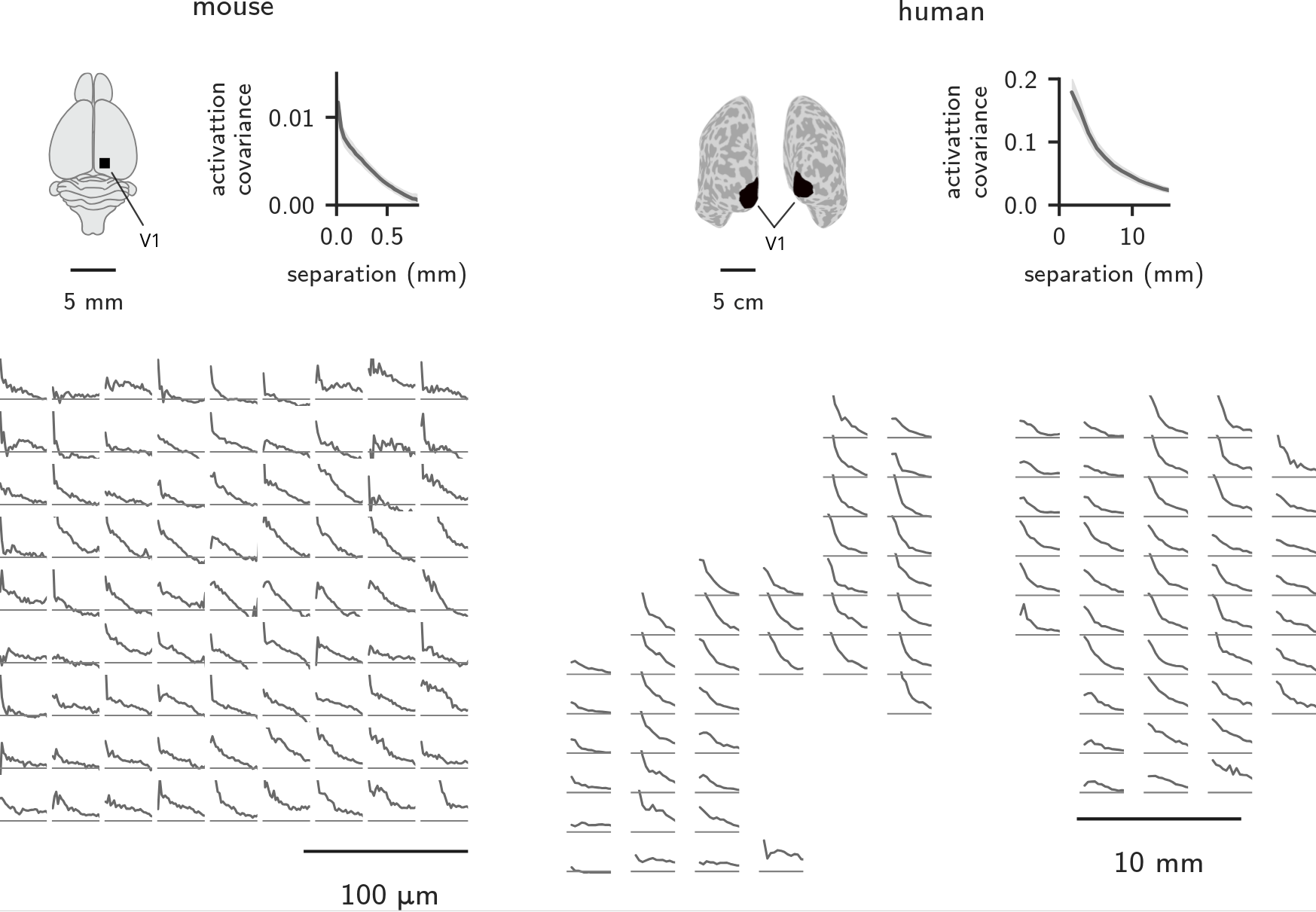

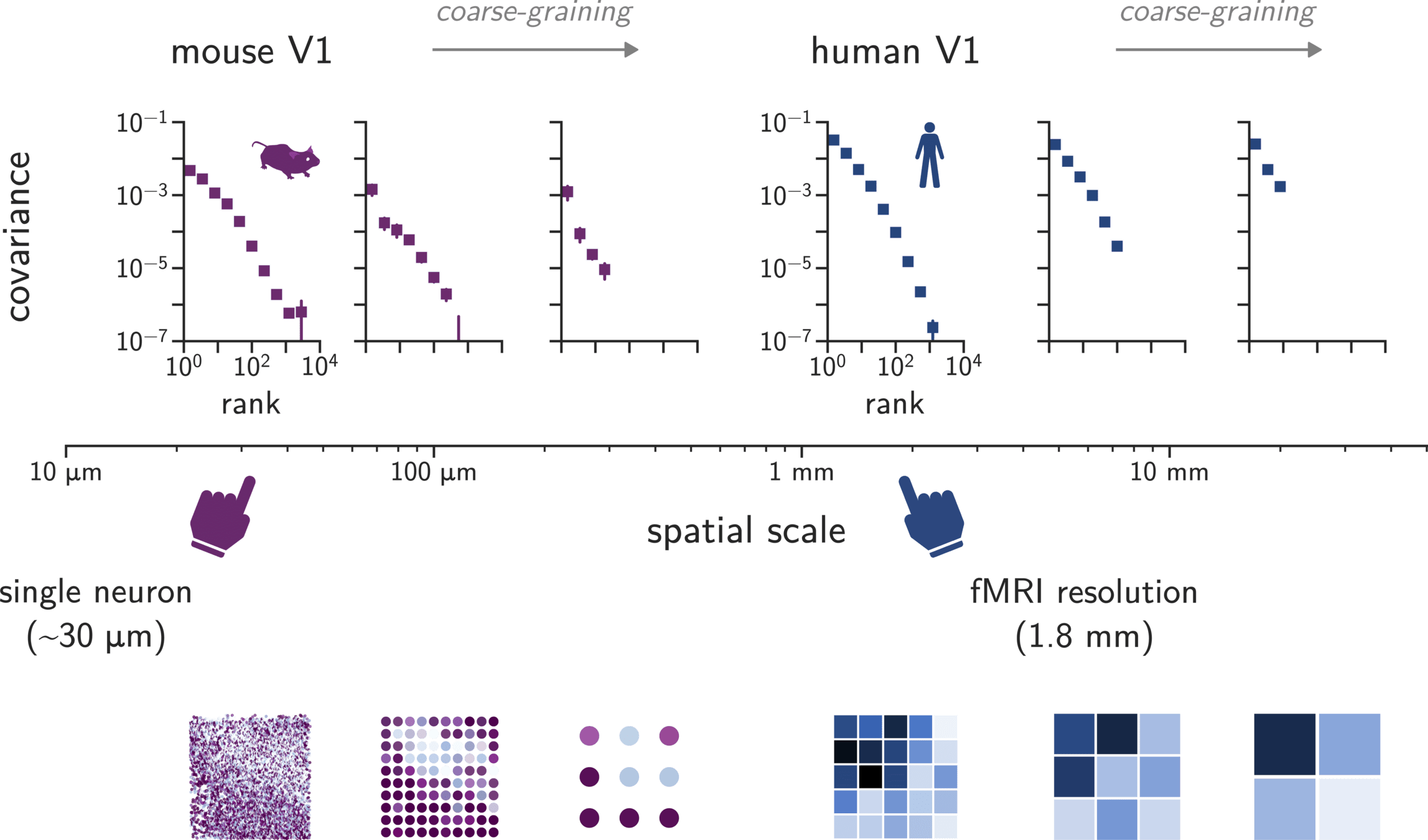

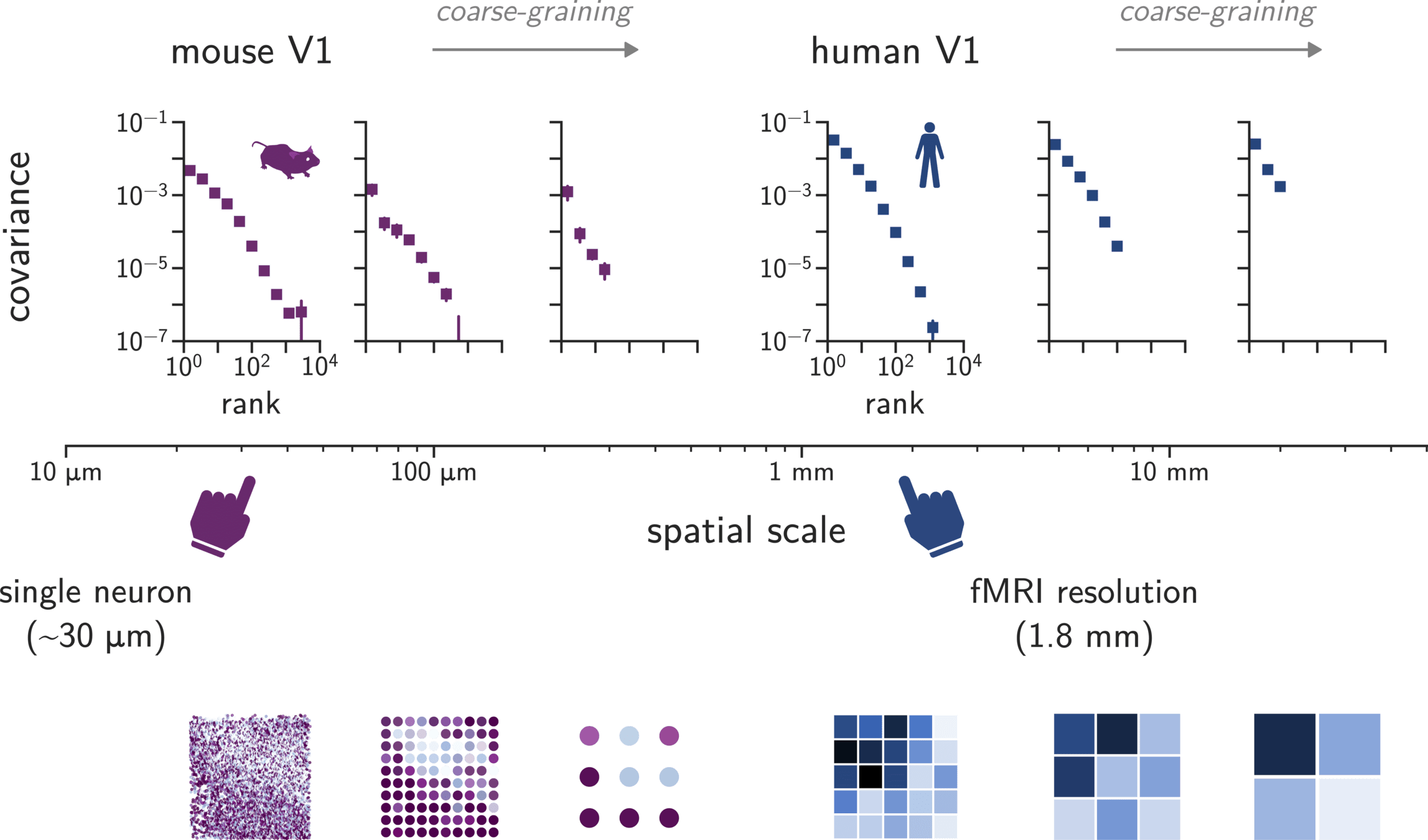

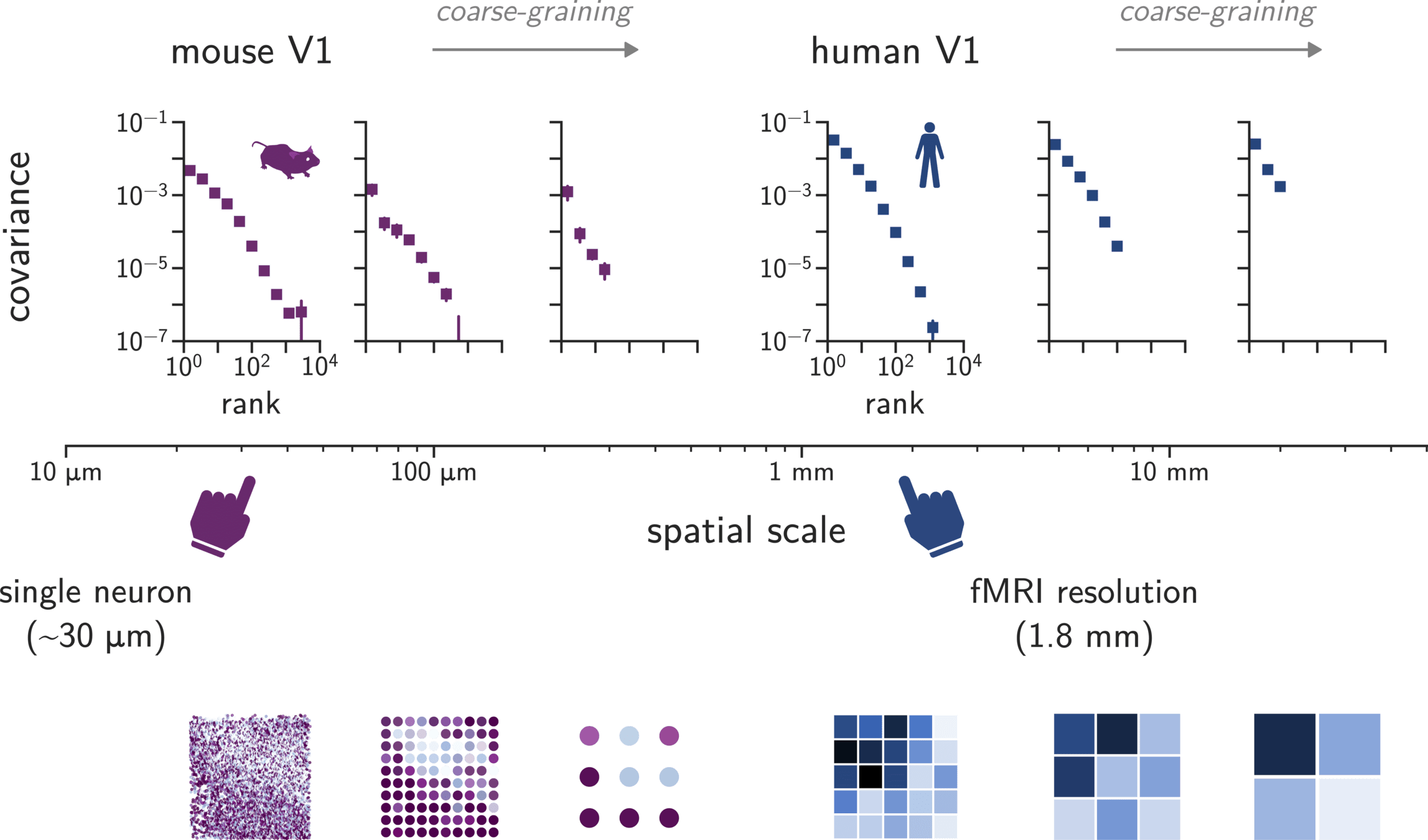

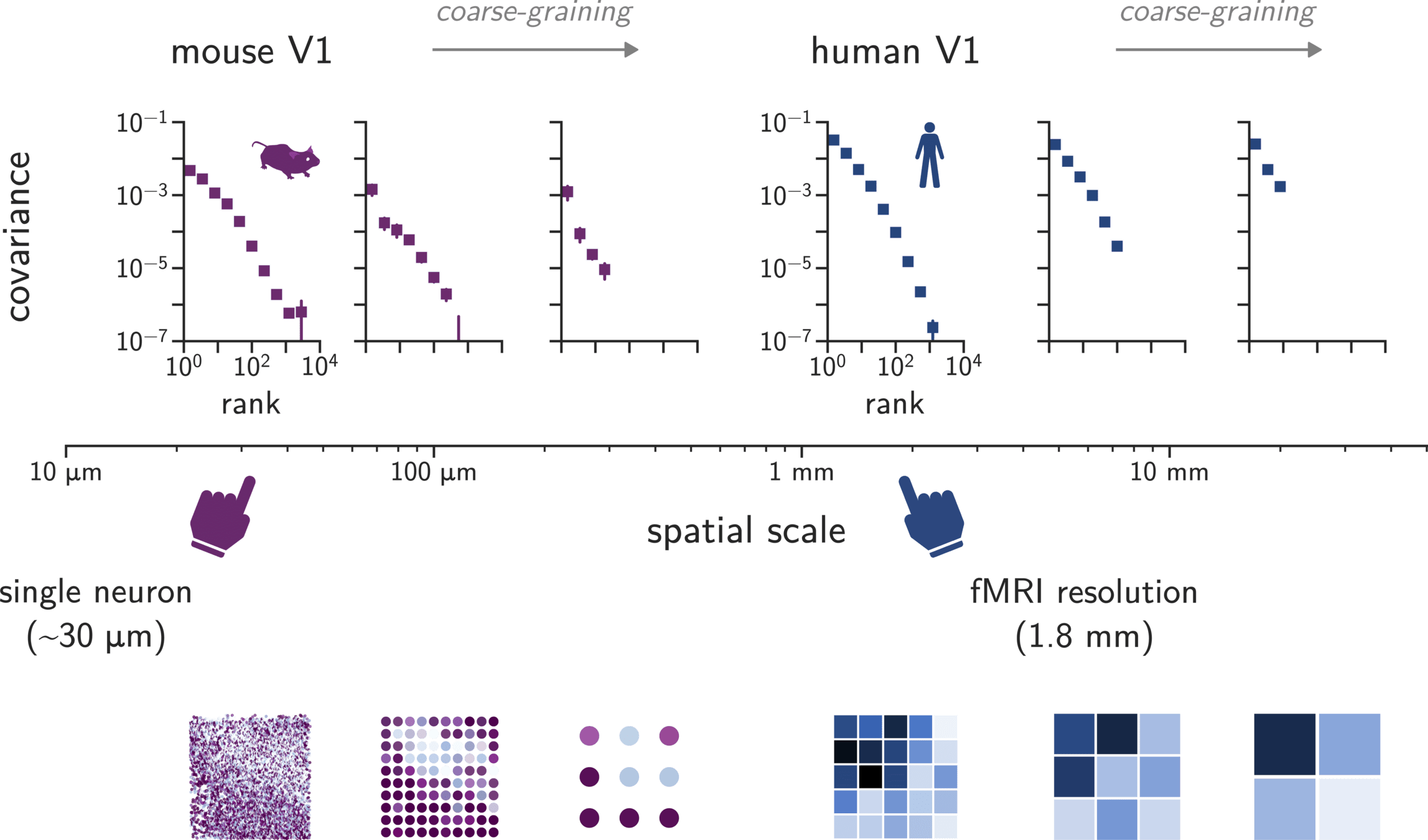

Similar power-law spectra in mouse V1

- 6 mice
- calcium imaging
- ~10,000 V1 neurons
- ~2,800 natural images

Similar neural population statistics

- in different species,

- across imaging methods,

- at very different resolutions.

Why does this happen?

also shared across individuals!

Outline of today's talk

Visual cortex representations are high-dimensional.

1

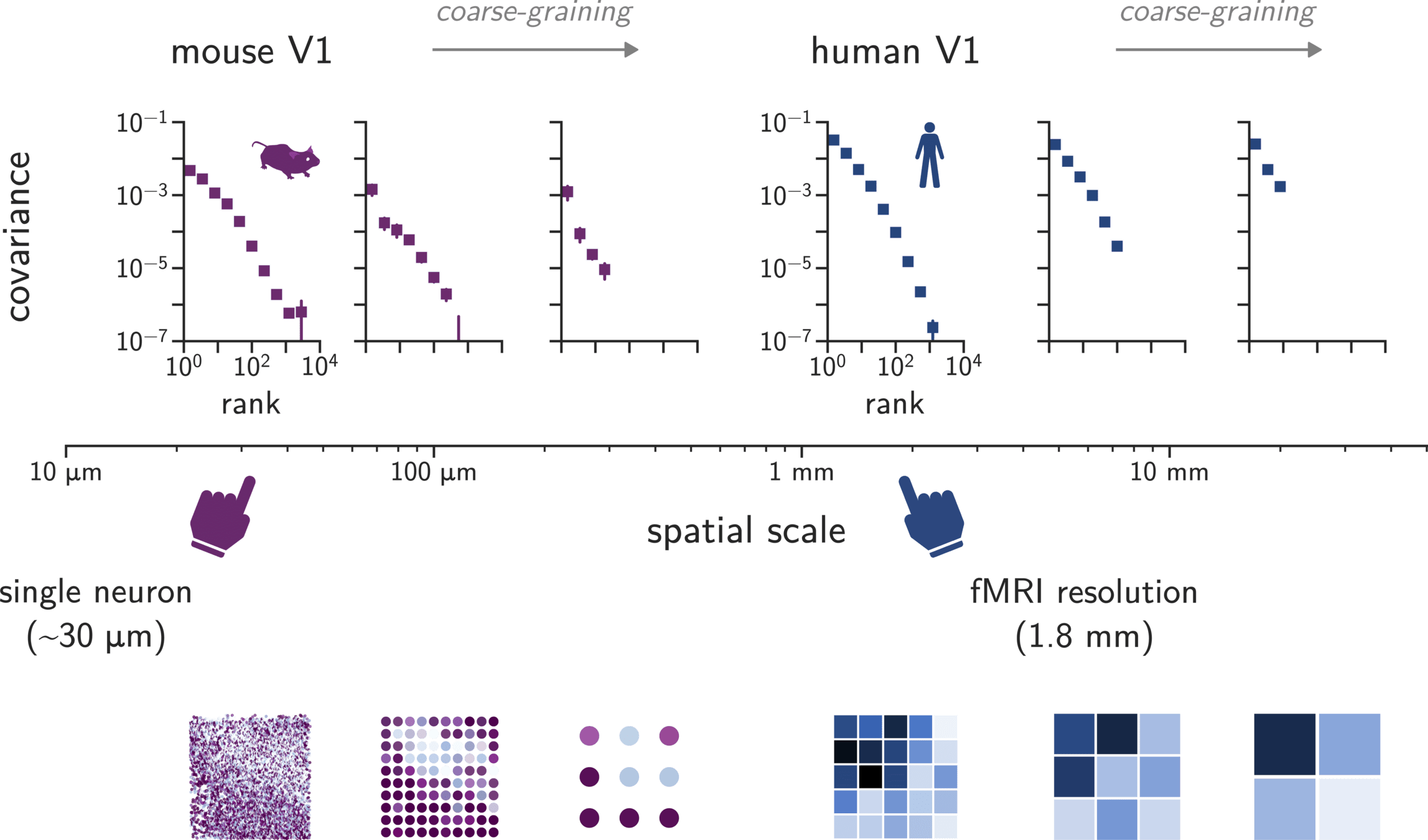

They are self-similar over a huge range of spatial scales.

2

The same geometry underlies mental representations of images.

3


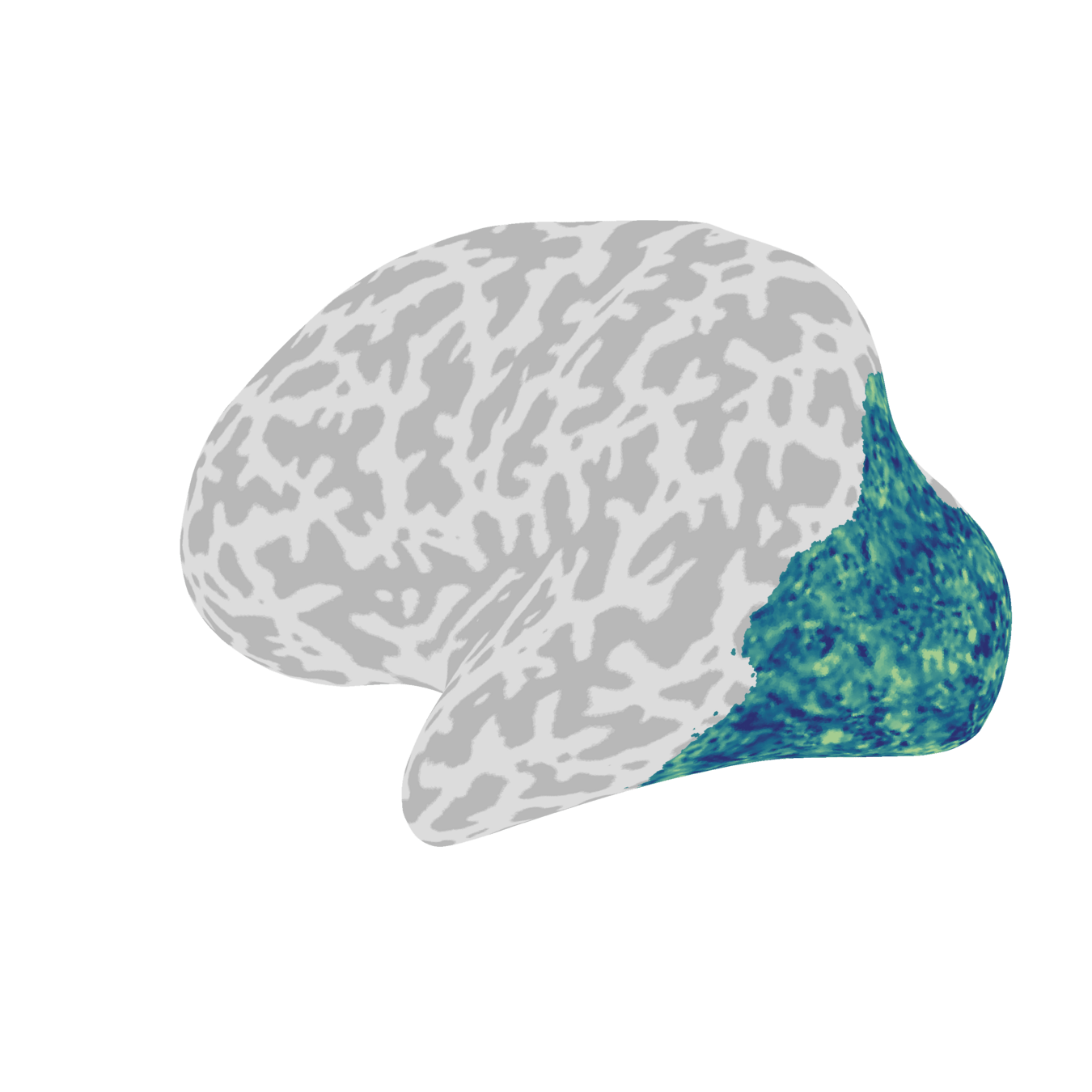

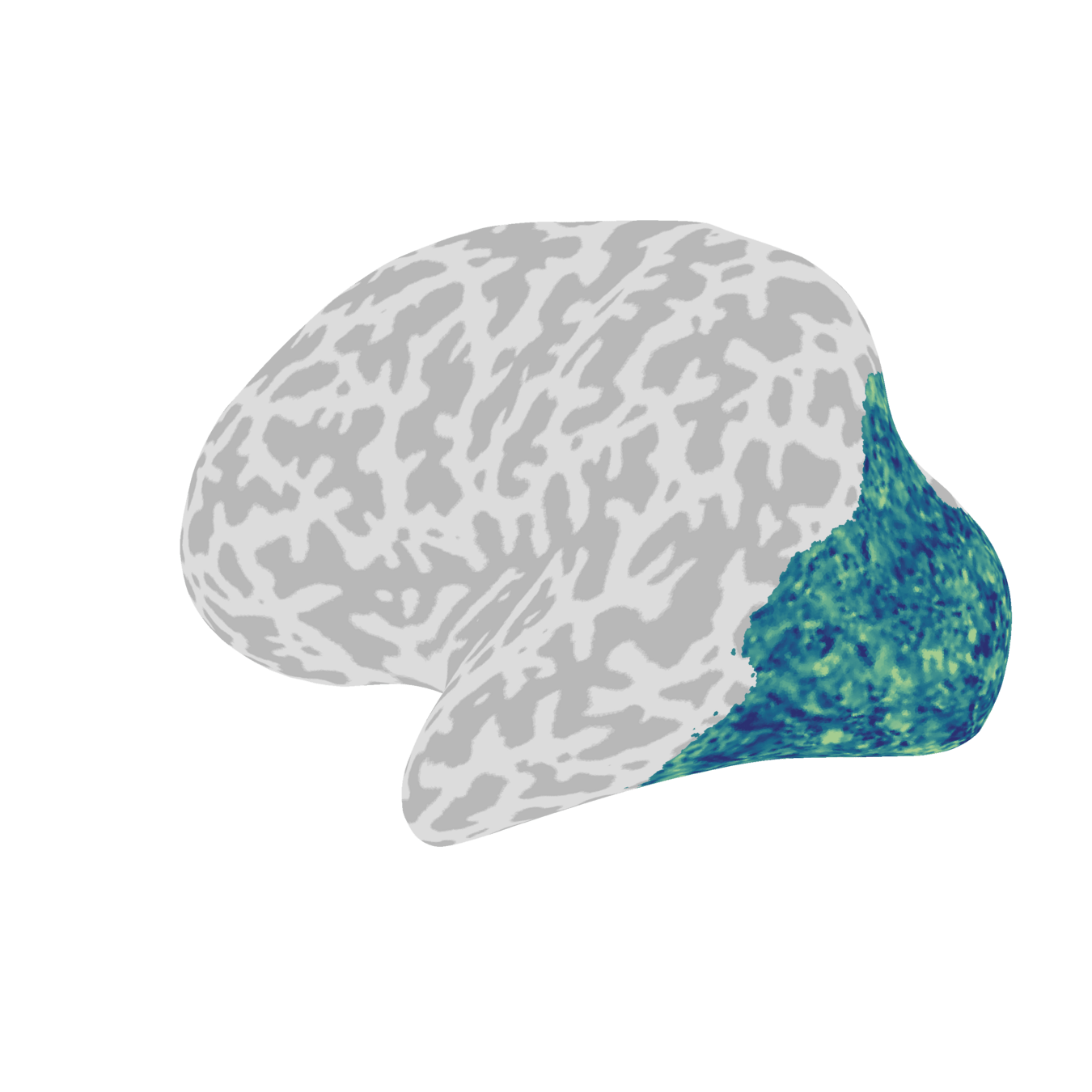

Step 1: Learn latent dimensions. Each is a linear combination of voxels (voxel 1, ..., voxel N).

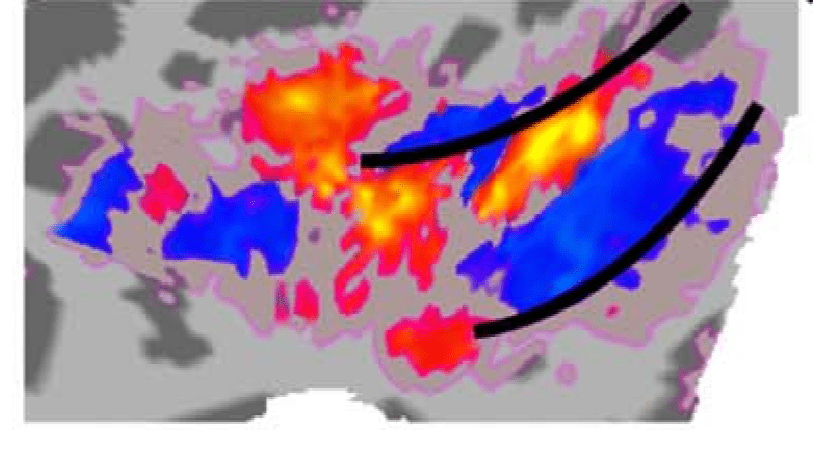

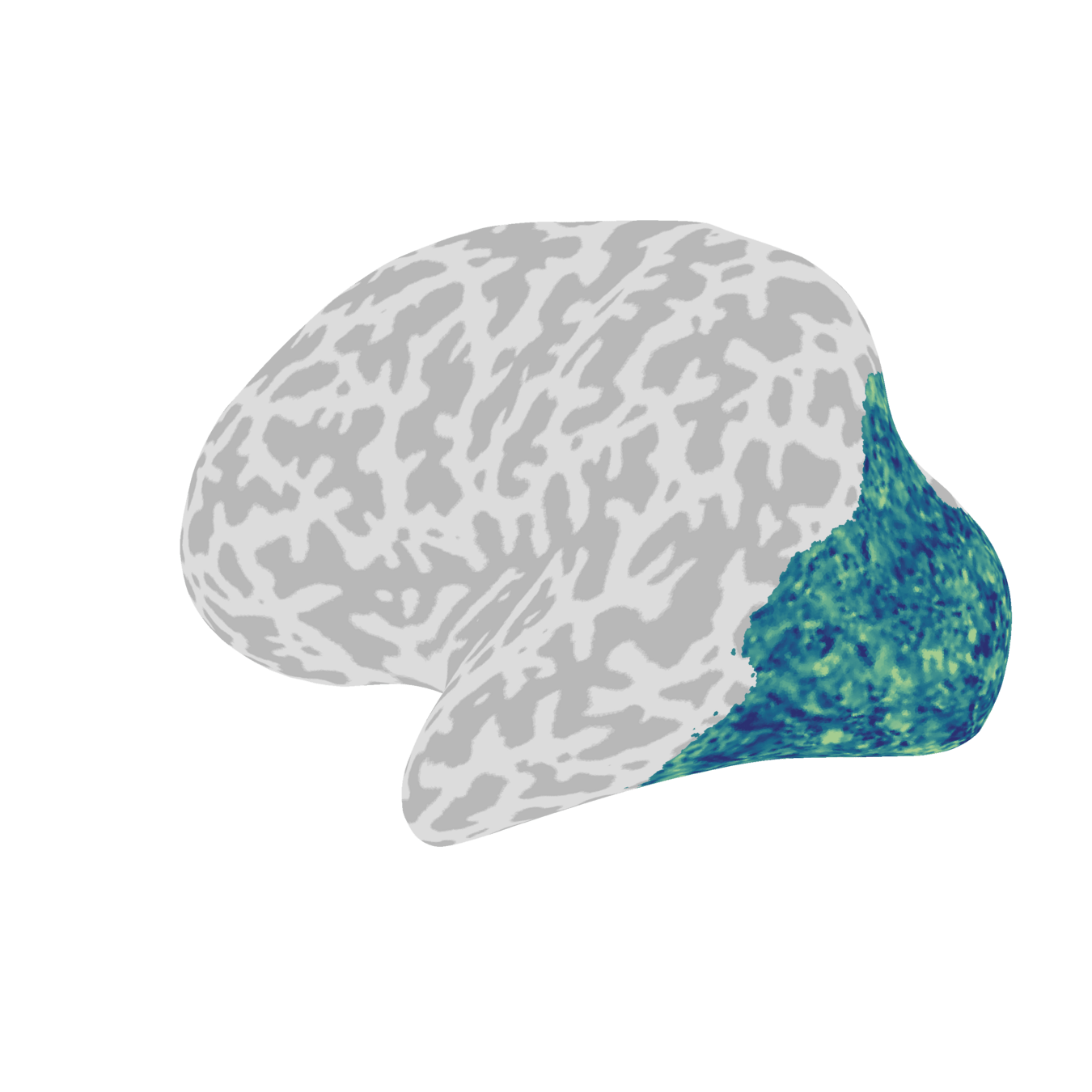

Latent dimensions are spatially distributed on cortex.

They show a rank-dependent spatial pattern, from low-rank dimensions at a large spatial scale to high-rank dimensions at a small spatial scale (examples at ranks 1, 10, 100, and 1,000; 30 mm scale).


We can measure the characteristic spatial scale of each latent dimension.

Figure panels: human; mouse (10,000 neurons in primary visual cortex, V1).

Variance is distributed consistently over spatial scales in both species.

Most variance in the data is on large spatial scales.

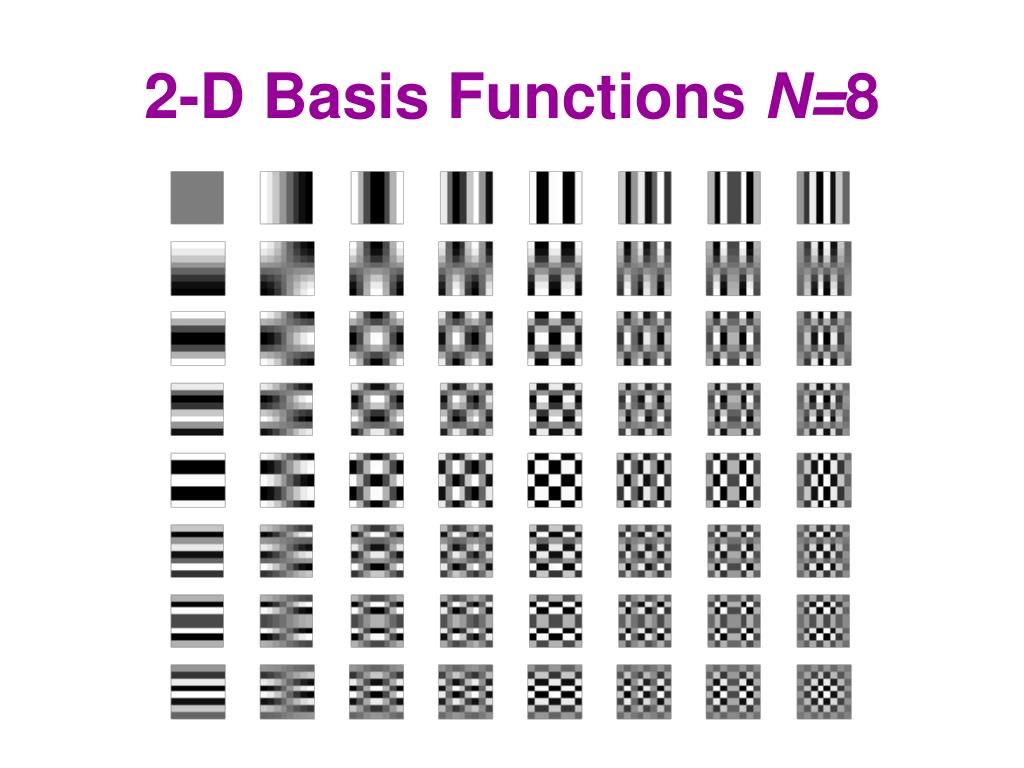

Reminiscent of a Fourier basis (1-D and 2-D Fourier bases shown) ...

When do latent dimensions look like a Fourier basis? Answer: when data are translationally invariant.

V1 correlation functions are ~translationally invariant.
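Why translation invariance yields Fourier-like latent dimensions can be checked numerically. In this toy 1-D example (a ring of 64 hypothetical neurons with an exponentially decaying correlation function, both our assumptions), the covariance depends only on the distance between neurons, so it is a circulant matrix; its eigenvectors are Fourier modes and its eigenvalues are the discrete Fourier transform of the correlation function.

```python
import numpy as np

n = 64                                                 # hypothetical neurons on a ring
offsets = np.arange(n)
distance = np.minimum(offsets, n - offsets)            # ring distance from neuron 0
corr_fn = np.exp(-distance / 5.0)                      # depends only on distance

# Translation-invariant (circulant) covariance: C[i, j] = corr_fn[(j - i) mod n].
C = np.array([np.roll(corr_fn, i) for i in range(n)])

# Eigenvalues of a symmetric circulant matrix are the DFT of its first row.
fourier_eigvals = np.fft.fft(corr_fn).real

# Check that a cosine (Fourier) mode at spatial frequency k is an eigenvector.
k = 3
mode = np.cos(2 * np.pi * k * offsets / n)
```

Because the correlation function here is positive and decaying, the largest eigenvalue sits at spatial frequency 0, the largest spatial scale.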

Figure labels: number of neurons in human V1; spacing between neurons; a code that leverages all latent dimensions.

Spatial scale: a potential organizing principle?

- Most variance in the data is on large spatial scales, in both humans and mice

- Even low-resolution imaging should capture most of the variance in the data

Coarse-graining should preserve covariance spectra.

Figure: spatial binning turns high-resolution data into simulated low-resolution data.
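A toy version of the coarse-graining argument (an analytic illustration, not the simulation in the talk): build a translation-invariant covariance from 20 cosine modes whose variance falls as 1/frequency on a ring of 256 hypothetical units, average neighboring pairs of units, and compare the top of the spectrum, with eigenvalues normalized by the number of units. Because the high-variance modes vary slowly in space, binning barely changes them.

```python
import numpy as np

n = 256                                           # hypothetical units on a ring
positions = np.arange(n)
freqs = np.arange(1, 21)

# Translation-invariant toy code: unit-norm cosine modes with variance 1/frequency.
modes = np.array([np.cos(2 * np.pi * k * positions / n) for k in freqs])
modes /= np.linalg.norm(modes, axis=1, keepdims=True)
C = modes.T @ np.diag(1.0 / freqs) @ modes       # (n, n) covariance matrix


def normalized_spectrum(cov):
    """Eigenvalues in descending order, normalized by the number of units."""
    return np.linalg.eigvalsh(cov)[::-1] / cov.shape[0]


# Spatial binning: average each pair of neighboring units (256 -> 128 units).
B = np.kron(np.eye(n // 2), np.array([[0.5, 0.5]]))
C_coarse = B @ C @ B.T

ratio = normalized_spectrum(C_coarse)[:10] / normalized_spectrum(C)[:10]
```

In this toy, each ratio equals cos²(πk/256) for spatial frequency k, so all of the top ten stay above 0.98.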

2. V1 is self-similar over a wide range of spatial scales.

- High-resolution neuroimaging probes low-variance dimensions.
- Invariance to spatial scale explains similar findings across fields.

Outline of today's talk

1. Visual cortex representations are high-dimensional.
2. They are self-similar over a huge range of spatial scales.
3. The same geometry underlies mental representations of images.


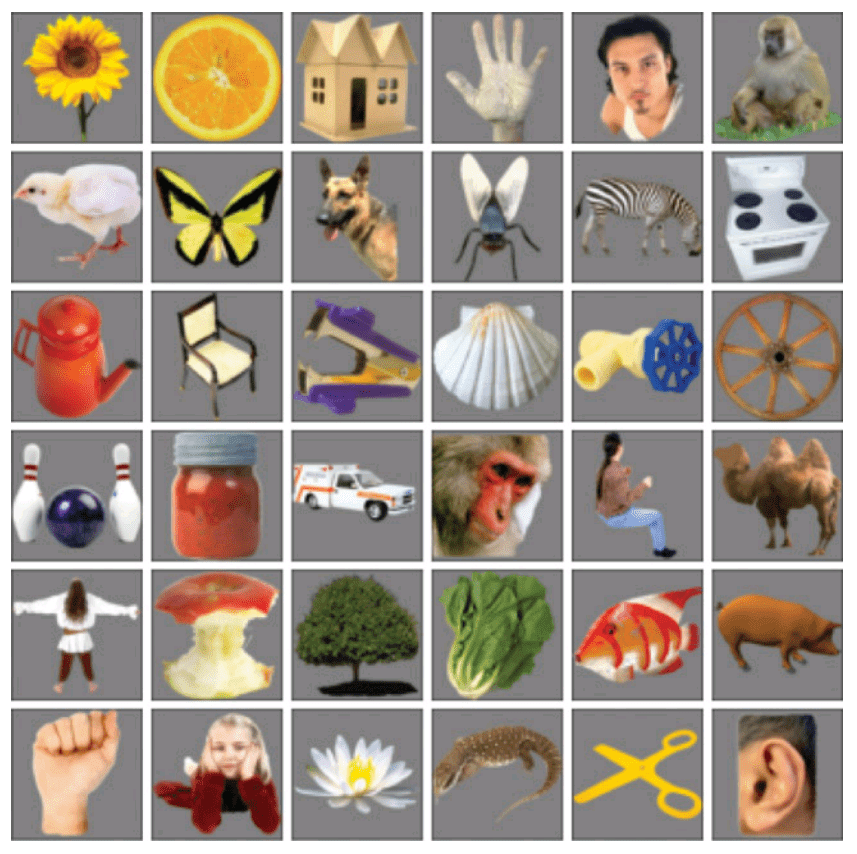

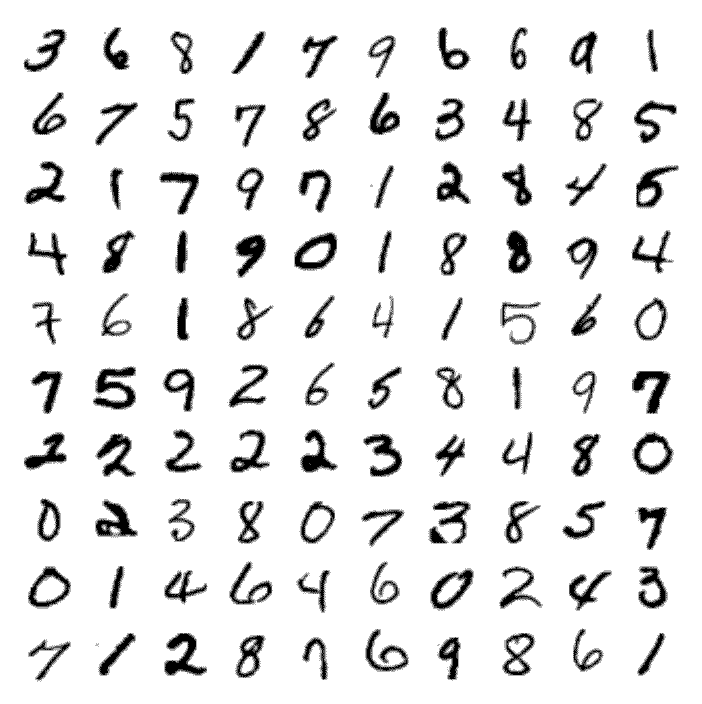

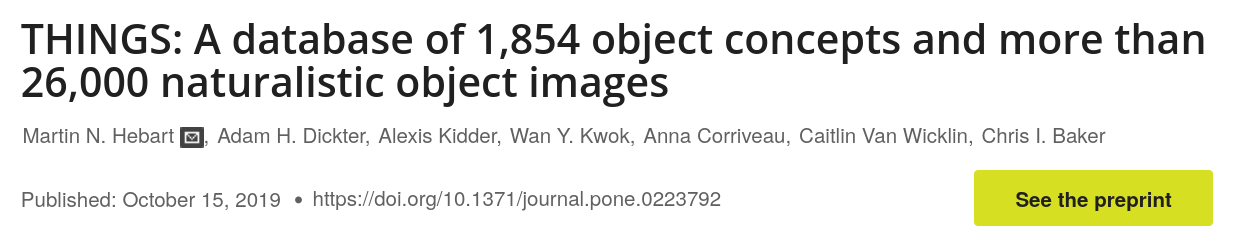

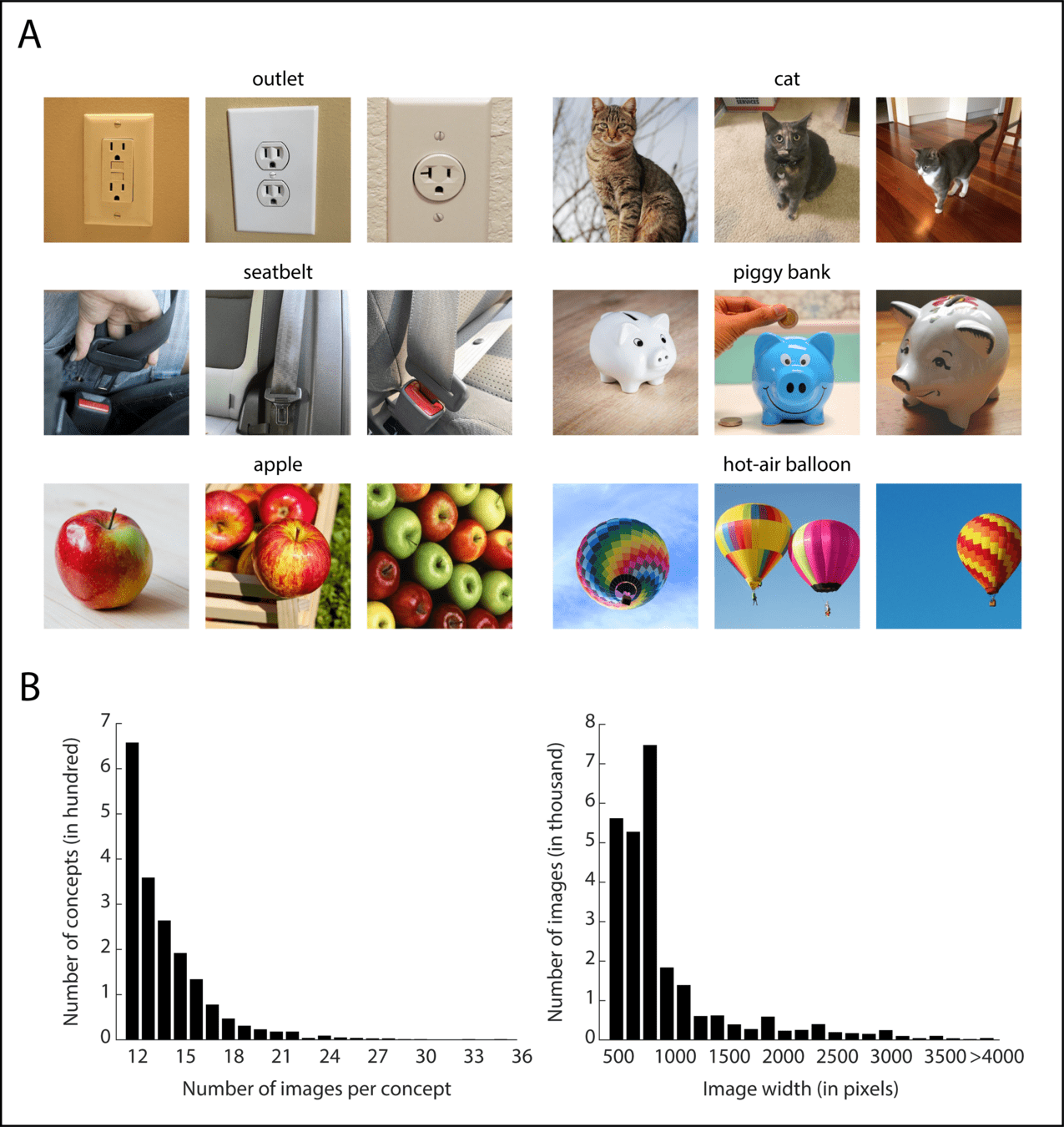

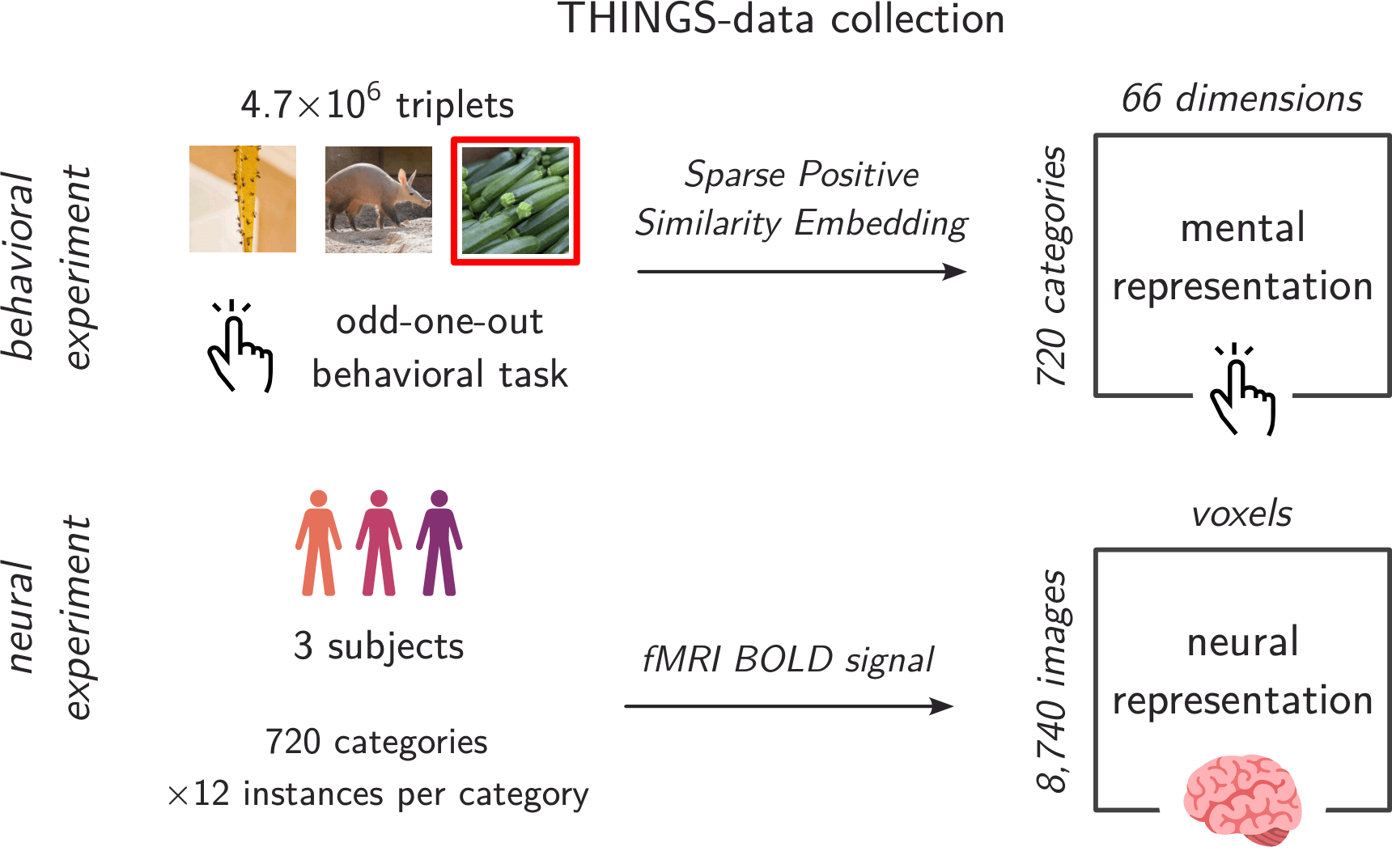

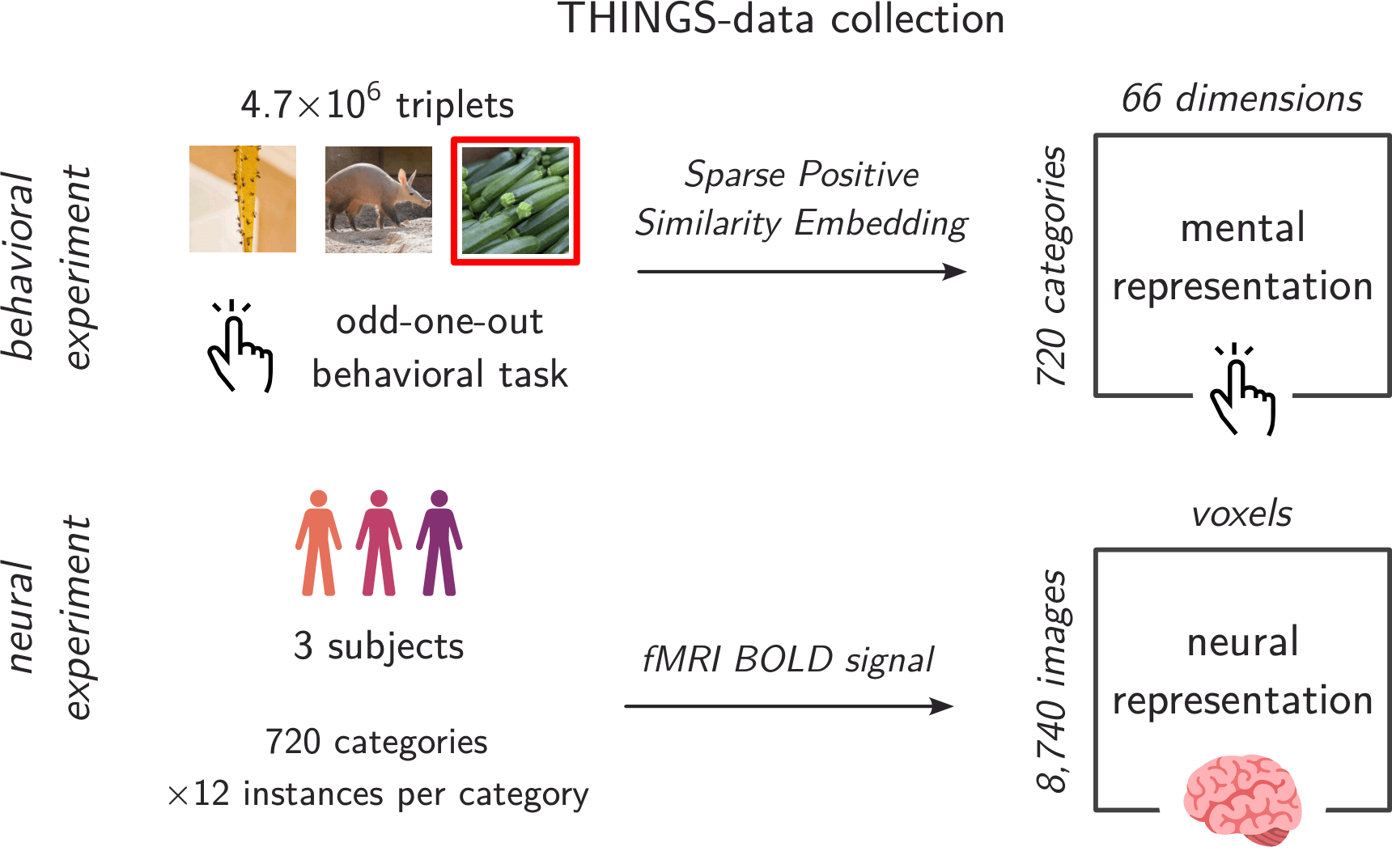

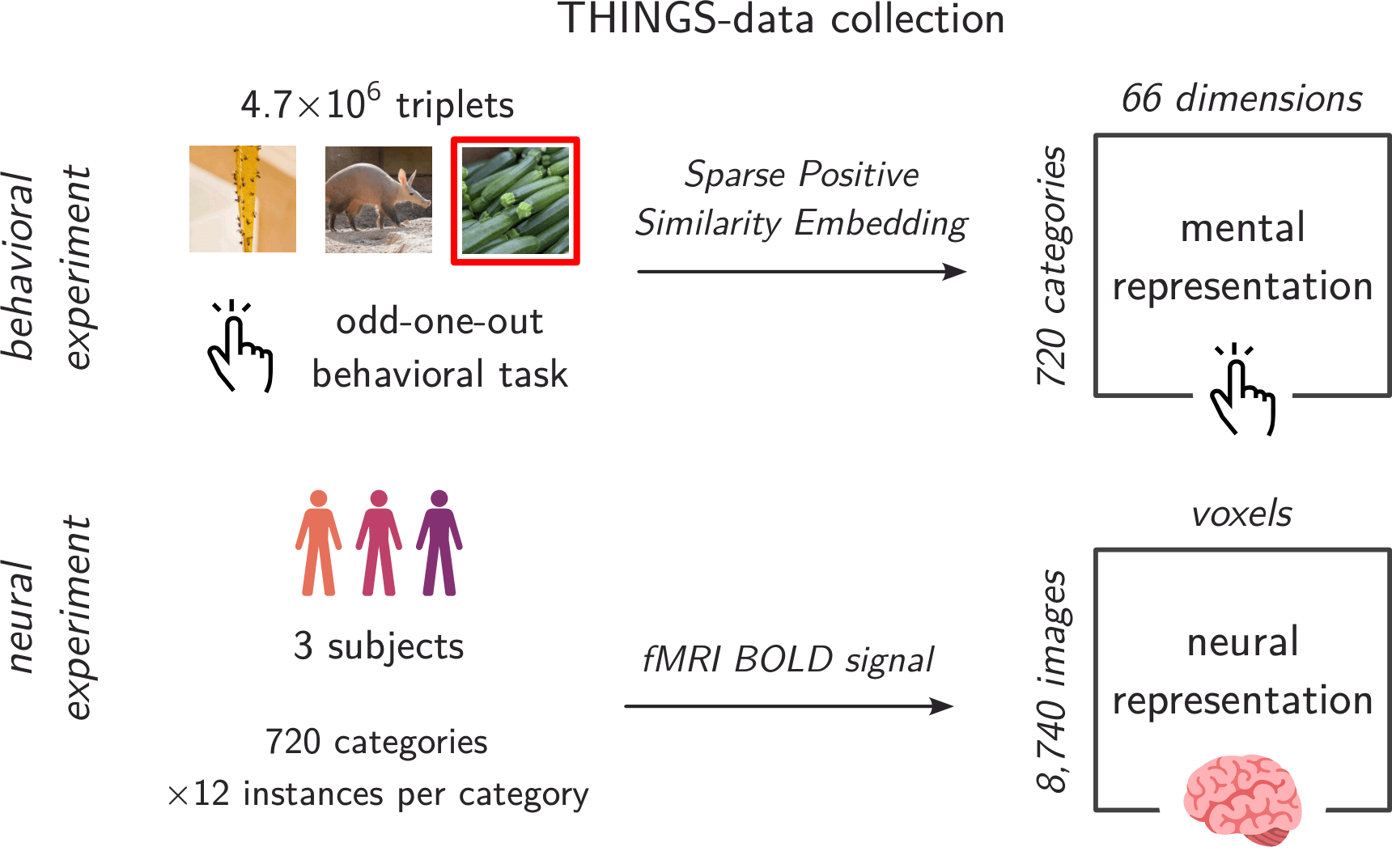

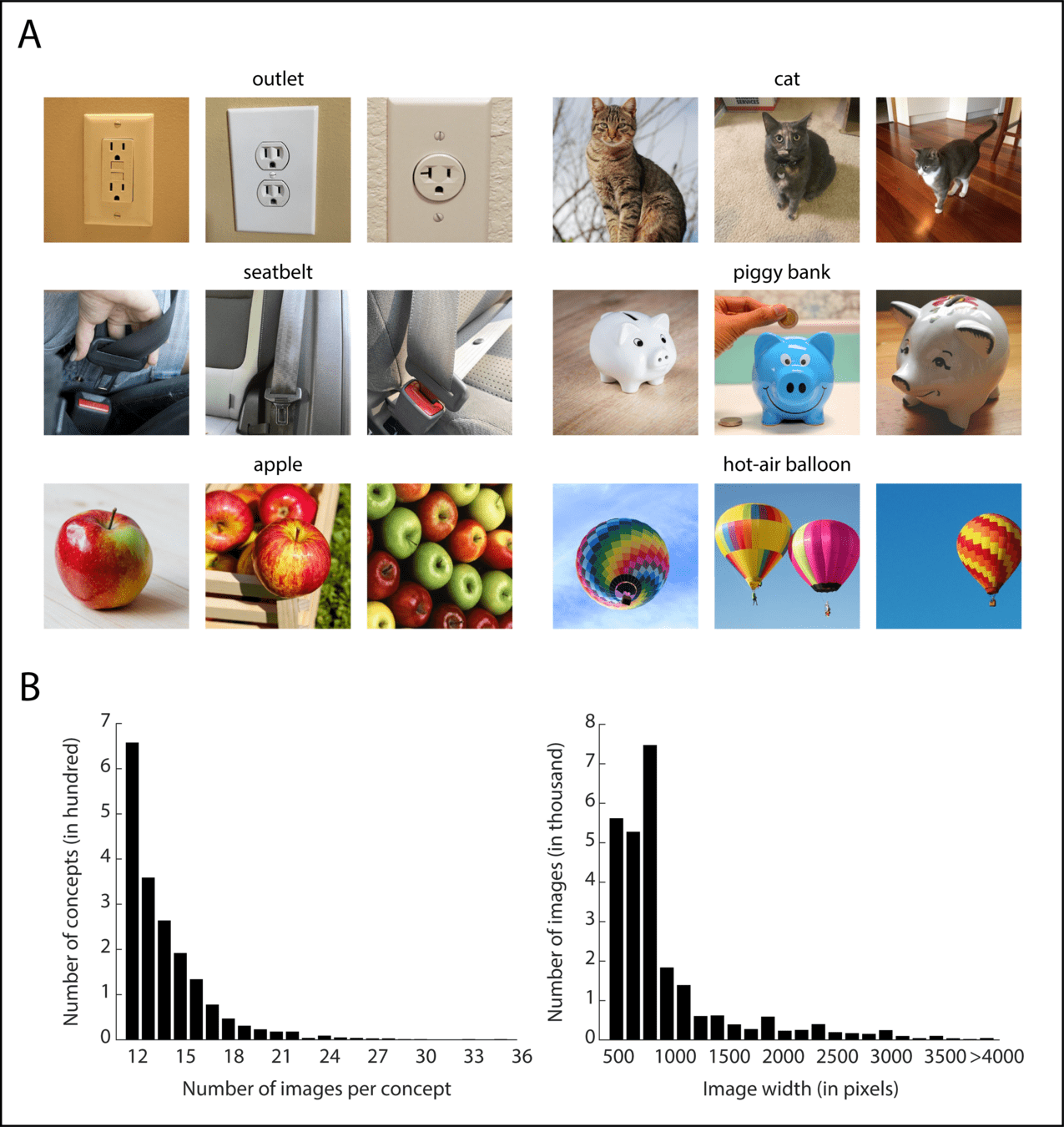

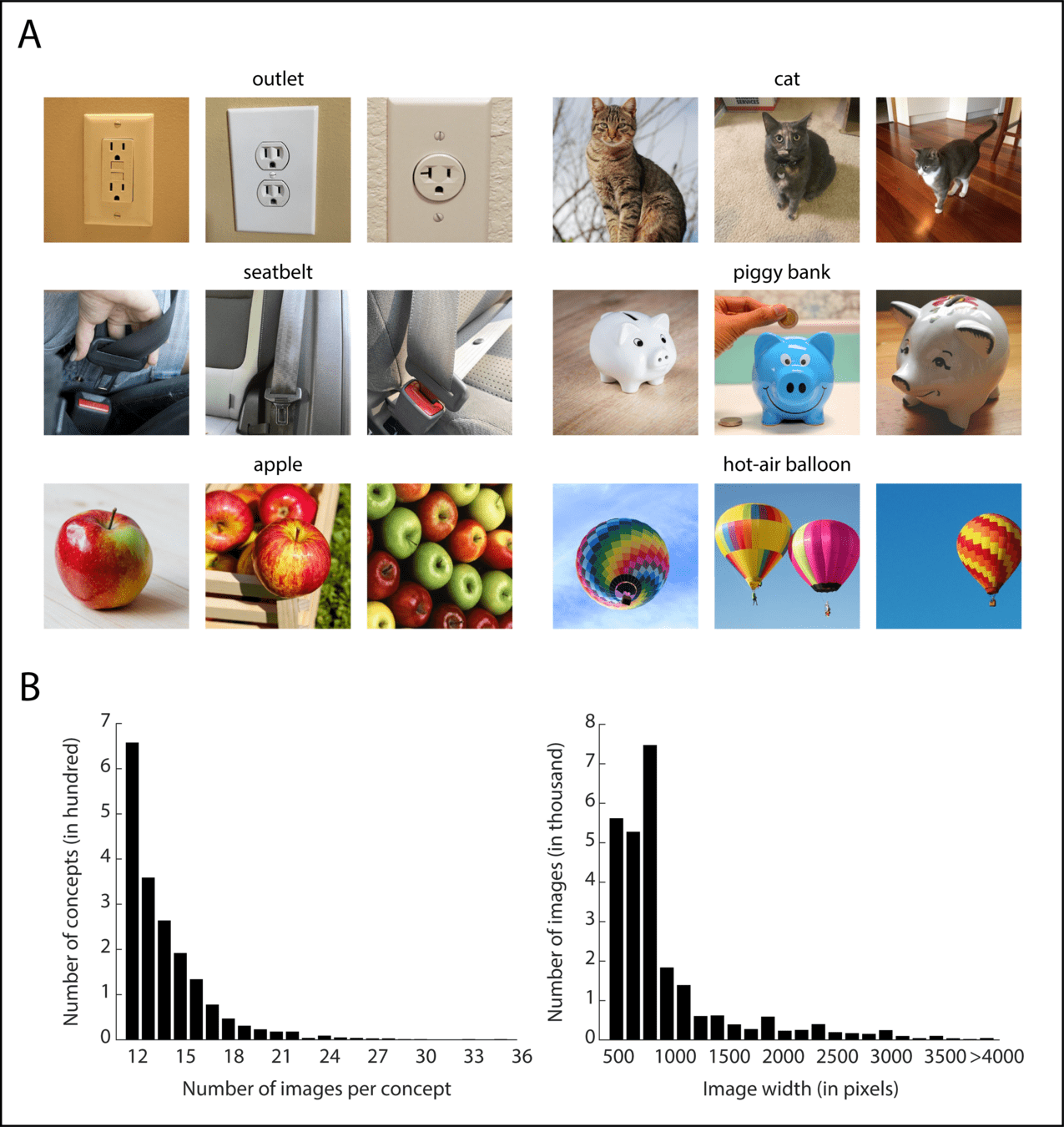

The THINGS initiative: same stimuli, many datasets

The THINGS database has many diverse object categories:

- ~26,000 images
- 1,854 categories of nameable, picturable nouns

What is the dimensionality of these neural representations of objects?

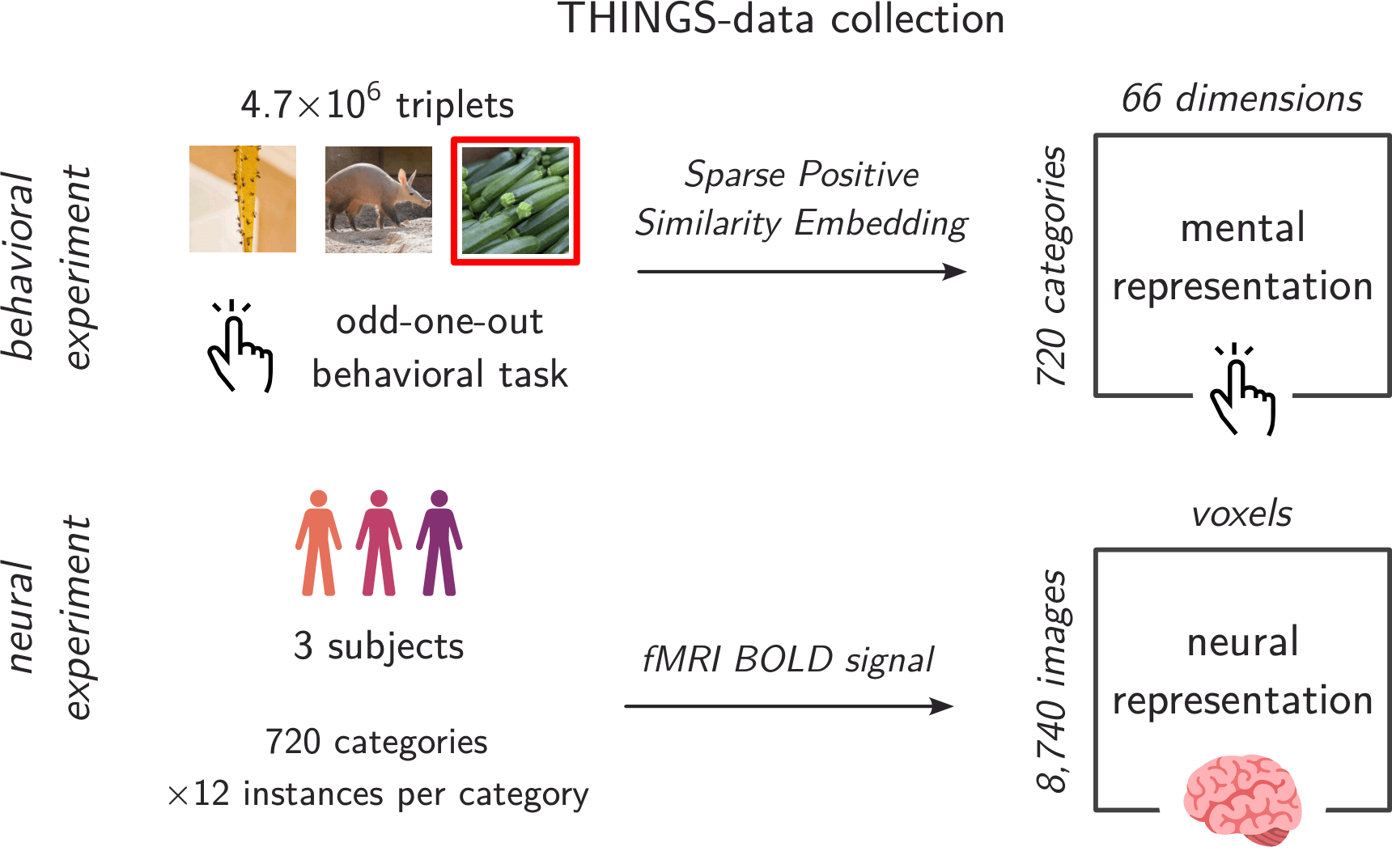

A large-scale fMRI dataset was collected on these objects.

Objects also evoke high-dimensional representations!

We replicate our earlier findings in the Natural Scenes Dataset.

increasingly abstract object representations

Which is the odd-one-out?

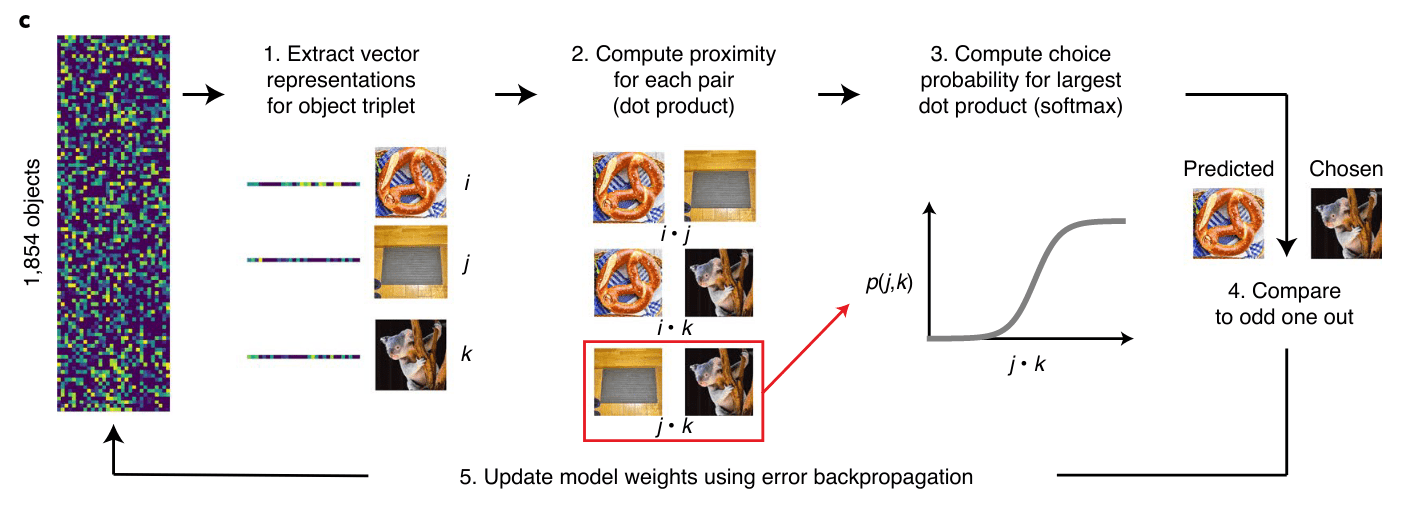

Large-scale similarity judgments were also collected.


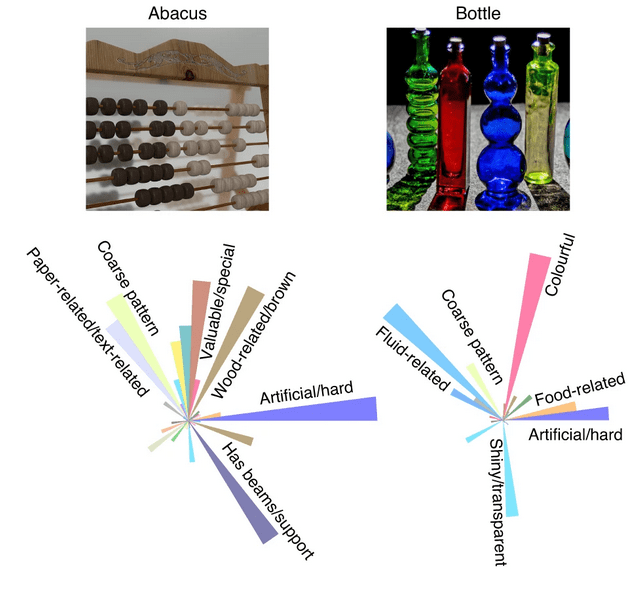

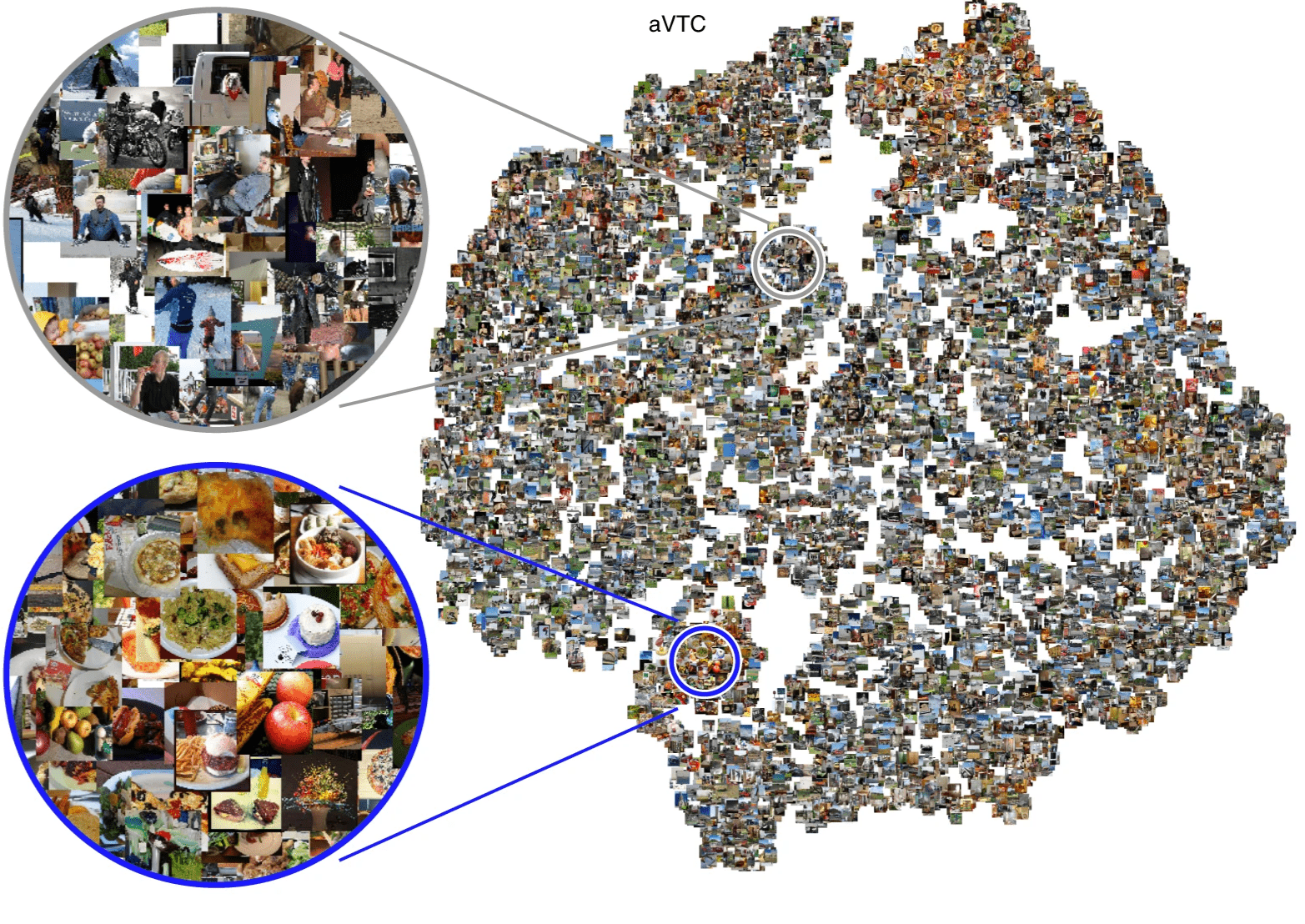

... and used to learn an embedding capturing the mental representations that participants use when performing the odd-one-out task: Sparse Positive Similarity Embedding (SPoSE).
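A sketch of the choice rule behind a SPoSE-style embedding, assuming the usual formulation: each object has a non-negative embedding vector, pairwise similarity is a dot product, and the probability that an object is the odd one out is a softmax over the similarities of the pair that excludes it. The toy 2-D embedding (hypothetical "animal" and "food" dimensions) and the helper name are ours, and the sparsity (L1) penalty used when fitting the embedding is omitted.

```python
import numpy as np


def odd_one_out_probs(embedding, i, j, k):
    """P(odd one out = i), P(= j), P(= k) for one triplet under a SPoSE-style rule.

    embedding: non-negative array of shape (n_objects, n_dims). Similarity is the
    dot product; the pair judged most similar leaves the third object as odd one out.
    """
    x = embedding
    # Similarity of the pair that excludes each object in turn.
    logits = np.array([x[j] @ x[k], x[i] @ x[k], x[i] @ x[j]])
    logits = logits - logits.max()            # for numerical stability
    p = np.exp(logits)
    return p / p.sum()


# Hypothetical 2-D embedding with "animal" and "food" dimensions.
objects = ["cat", "dog", "apple"]
embedding = np.array([[2.0, 0.0],    # cat
                      [1.8, 0.1],    # dog
                      [0.0, 2.0]])   # apple
probs = odd_one_out_probs(embedding, 0, 1, 2)
print(dict(zip(objects, probs.round(3))))
```

Fitting the embedding would maximize the log-probability of the choices participants actually made, summed over all collected triplets.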

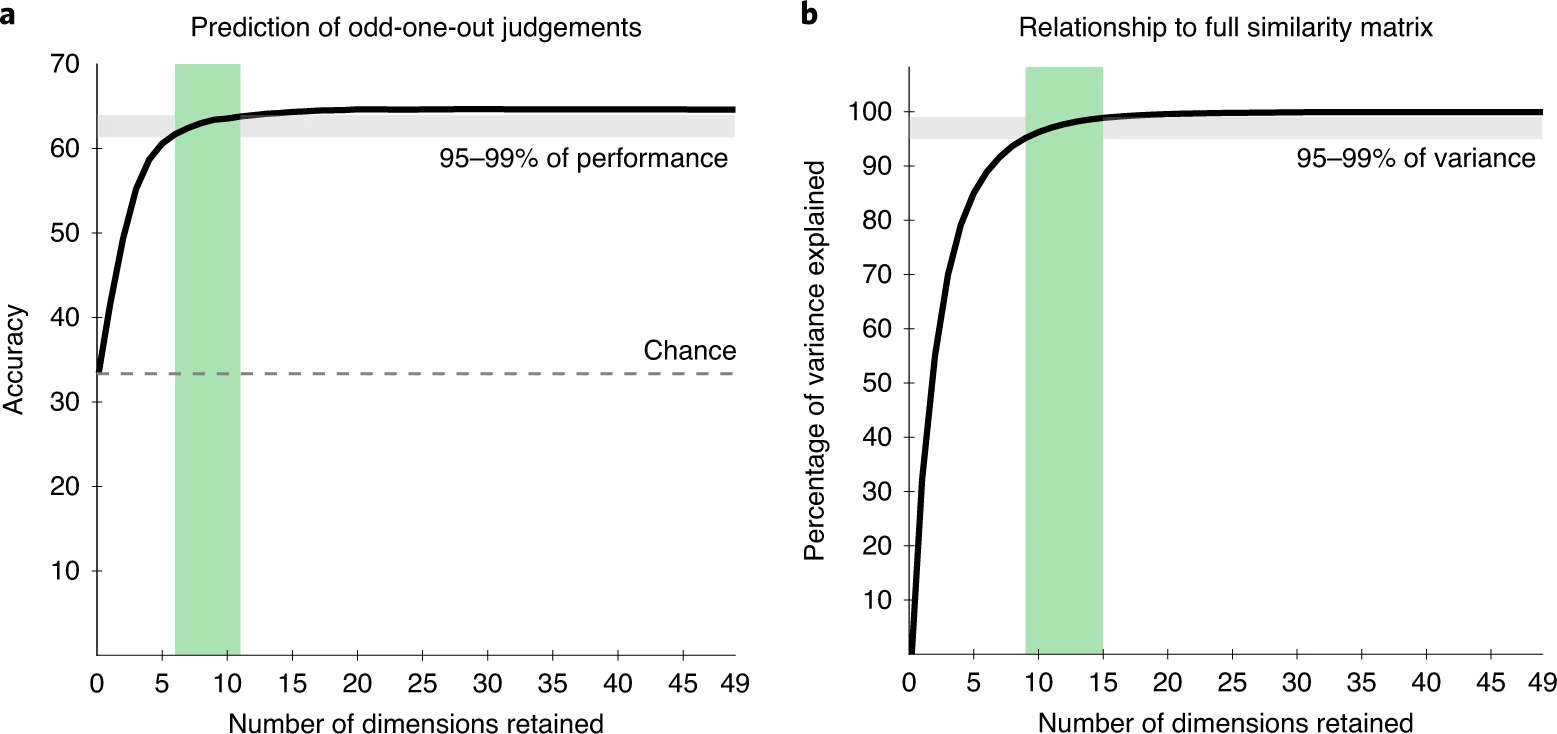

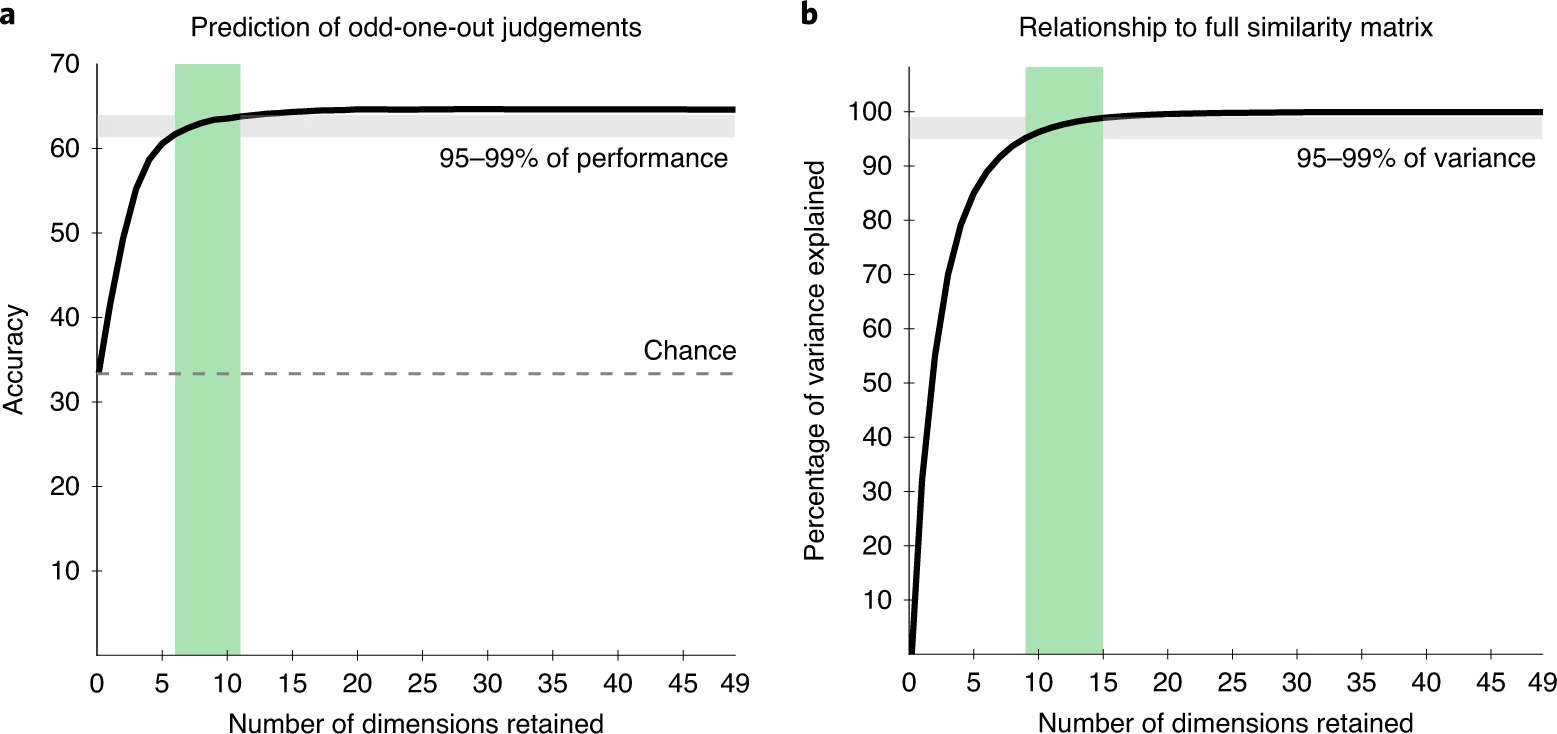

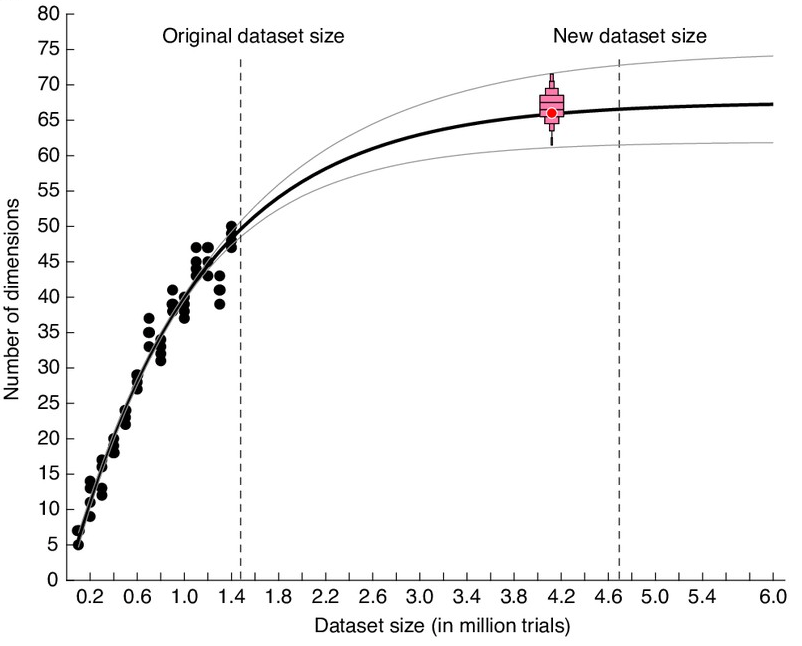

Mental representations appear low-dimensional.

What is the underlying structure of these mental representations?

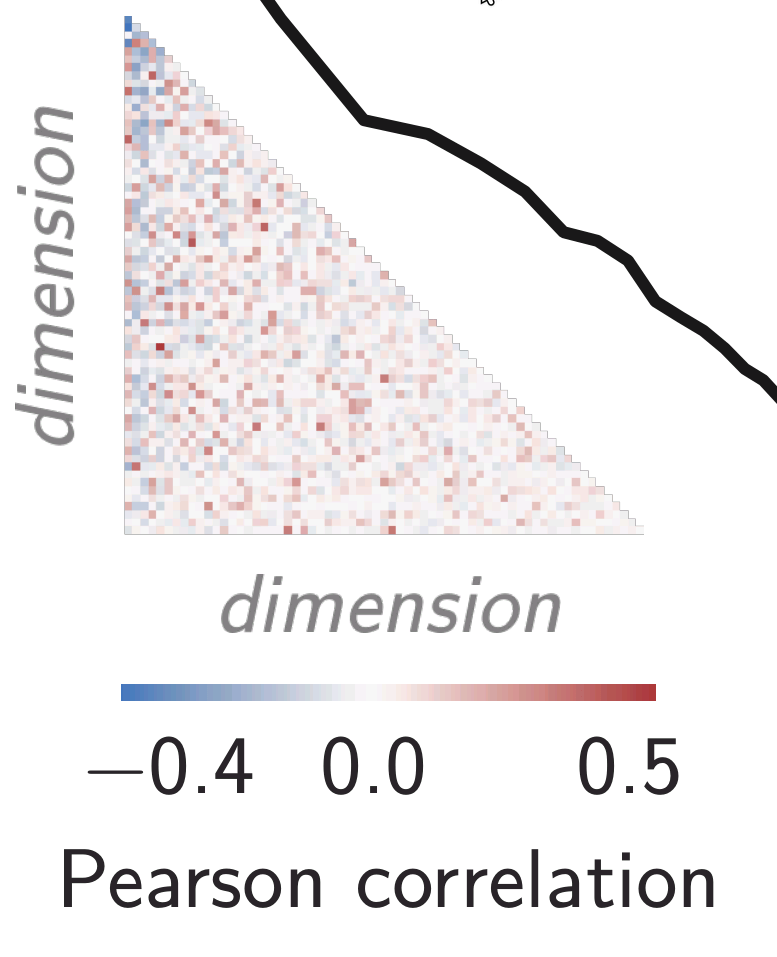

Covariance decays as a power-law

Latent dimensions have significant correlations

Mental representations have a power-law spectrum too!

There are power-law spectra in both cortical and behavioral data, but are they the same underlying representation?

How are neural and mental representations related?

Cross-decomposition still works! We want the signal that generalizes:

- ... to novel images
- ... across experiments

Figure: mental and neural representations of the same stimuli (neural data: stimuli × neurons or voxels).

- Step 1: Learn latent dimensions
- Step 2: Evaluate reliable variance

Systematic increase in shared dimensionality between neural and mental representations!


increasingly abstract object representations

Only 66 dimensions of mental representations were detected.

Why are mental representations so low-dimensional?

Scaling up the dataset doesn't help much: from 49-D to 66-D.

A potential cause: task complexity?


- between-category triplets (only coarse distinctions required)
- within-category triplets (fine distinctions required)

also has power-law covariance structure

Can we increase dimensionality using harder triplets?

coarse distinctions

fine distinctions

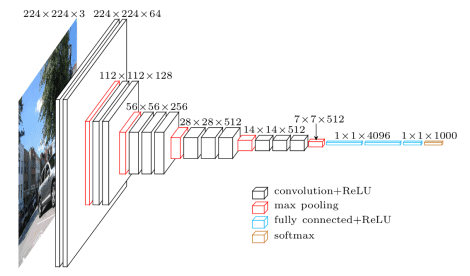

VGG-16 deep neural network

Can we increase dimensionality using harder triplets?

?

DNN

Yes, object representations increase in dimensionality when a DNN is forced to learn a more difficult task.

Can we increase dimensionality using harder triplets?

1. Visual cortex representations are high-dimensional.
   - power-law covariance spectra
   - shared across subjects
   - remarkably similar across species
2. They are self-similar over a huge range of spatial scales.
   - invariant to spatial scale
3. The same geometry underlies mental representations of images.
   - shared neural and mental representations
   - higher ranks encode finer distinctions

Thank you!

The Bonner Lab

The Isik Lab

The research presented in this dissertation was supported in part by:

- a Johns Hopkins Catalyst Award to Michael F. Bonner,
- Institute for Data Intensive Engineering and Science Seed Funding to Michael F. Bonner and Brice Ménard, and
- grant NSF PHY-2309135 to the Kavli Institute for Theoretical Physics.

“Thank you for paying us to do what we would gladly pay to do.”

– S P Arun, Vision Lab, Indian Institute of Science

Discussion

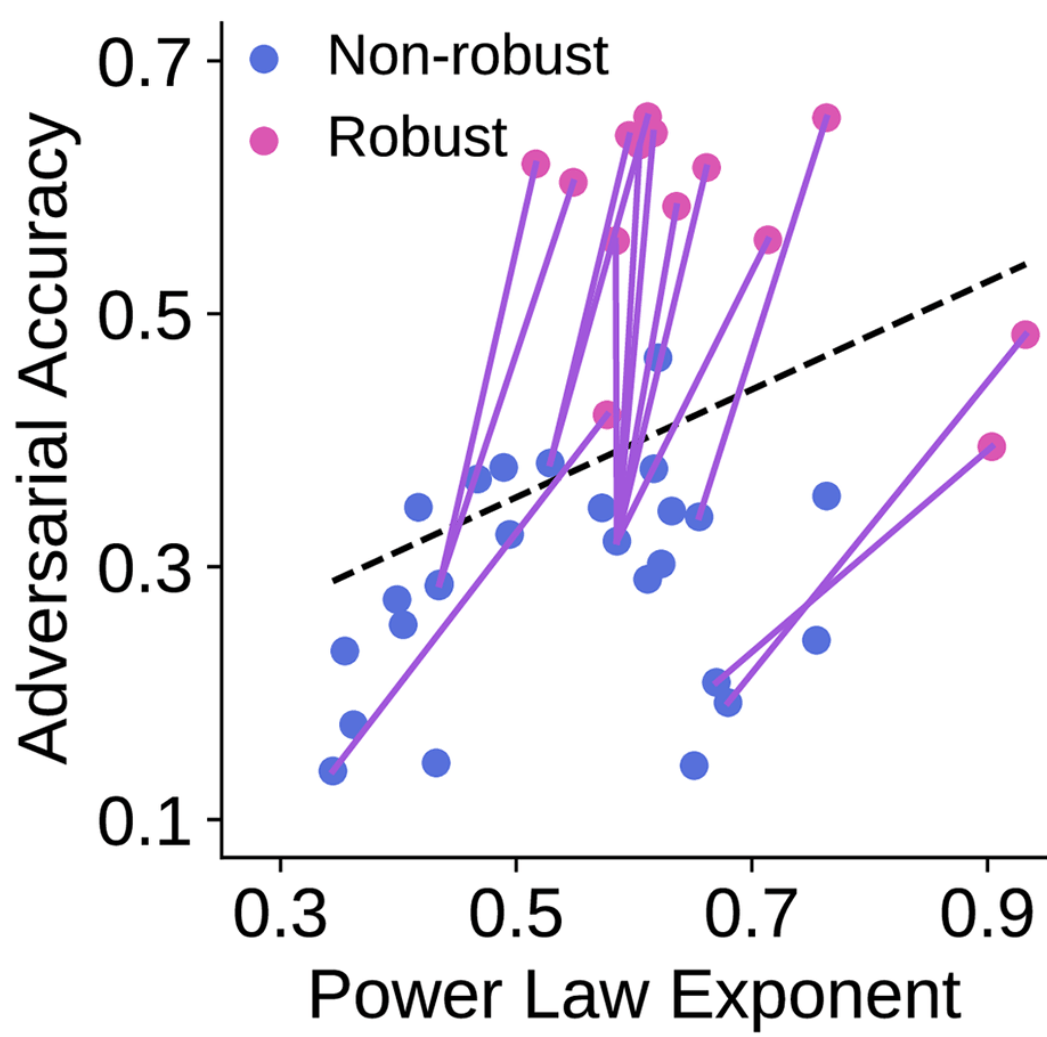

Emerging evidence supports a high-dimensional view.

across species, in systems neuroscience

artificial neural networks

Emerging evidence supports a high-dimensional view.

out-of-distribution classification accuracy

adversarial robustness

Emerging evidence supports a high-dimensional view.

outside the visual system

prefrontal cortex

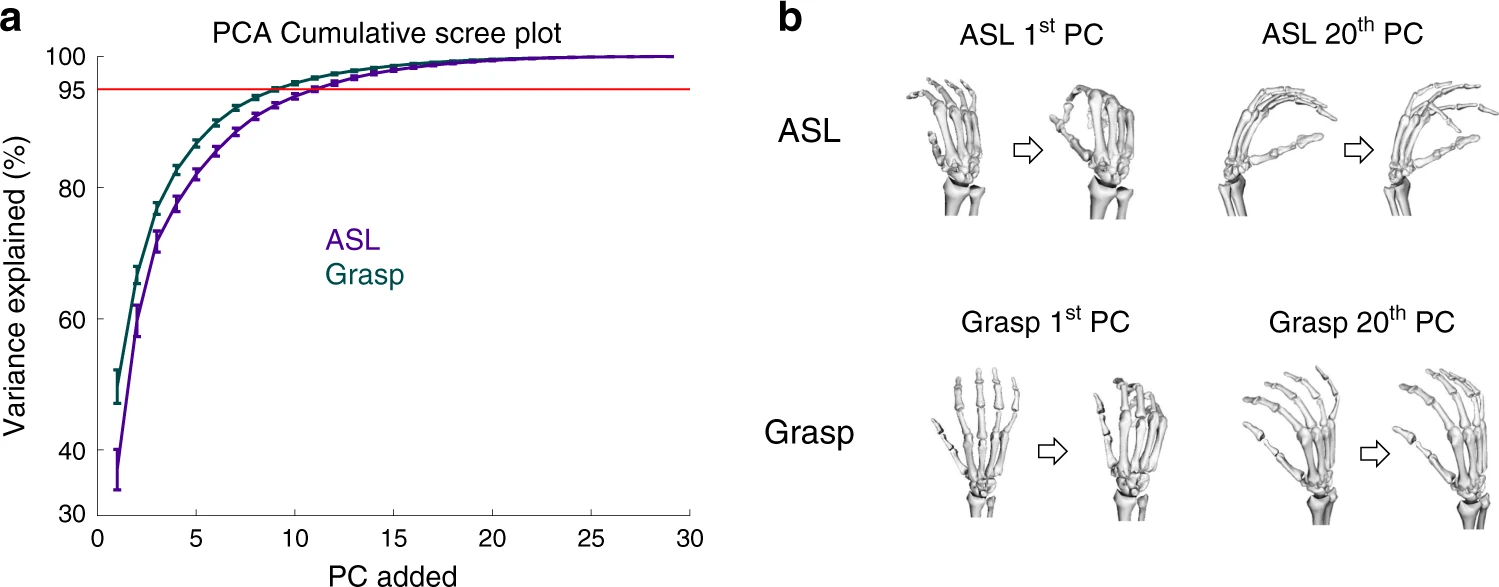

hand kinematics

Catalog all the latent dimensions!

Understand the generative mechanisms!

Not helpful to interpret every latent dimension

Cognitive visual neuroscience needs new approaches.

We want theory!


Figure: responses to the same stimuli on trial 1 and trial 2, each a stimuli × neurons (or voxels) matrix.

Limitations of cross-decomposition:

- linear view of the system

- second-order (Gaussian) description

- not informative about visual features

We need new tools:

- nonlinear dimensionality metrics

- information-theoretic metrics

- ???

Standard methods are insensitive to high-dimensional structure.

Potential future directions

More variety in

- species

- neuroimaging techniques

- subject populations

For example,

- Are kids' representations more low-dimensional?

- What about patients with visual agnosia?

Potential future directions

- Are there systematic variations across regions of the brain?

- Does this generalize to other sensory or cognitive systems?

Potential future directions

- Using artificial vision systems, such as deep neural networks (which also have power-law covariance structure), to study the role of dimensionality, especially with causal manipulations

Potential future directions

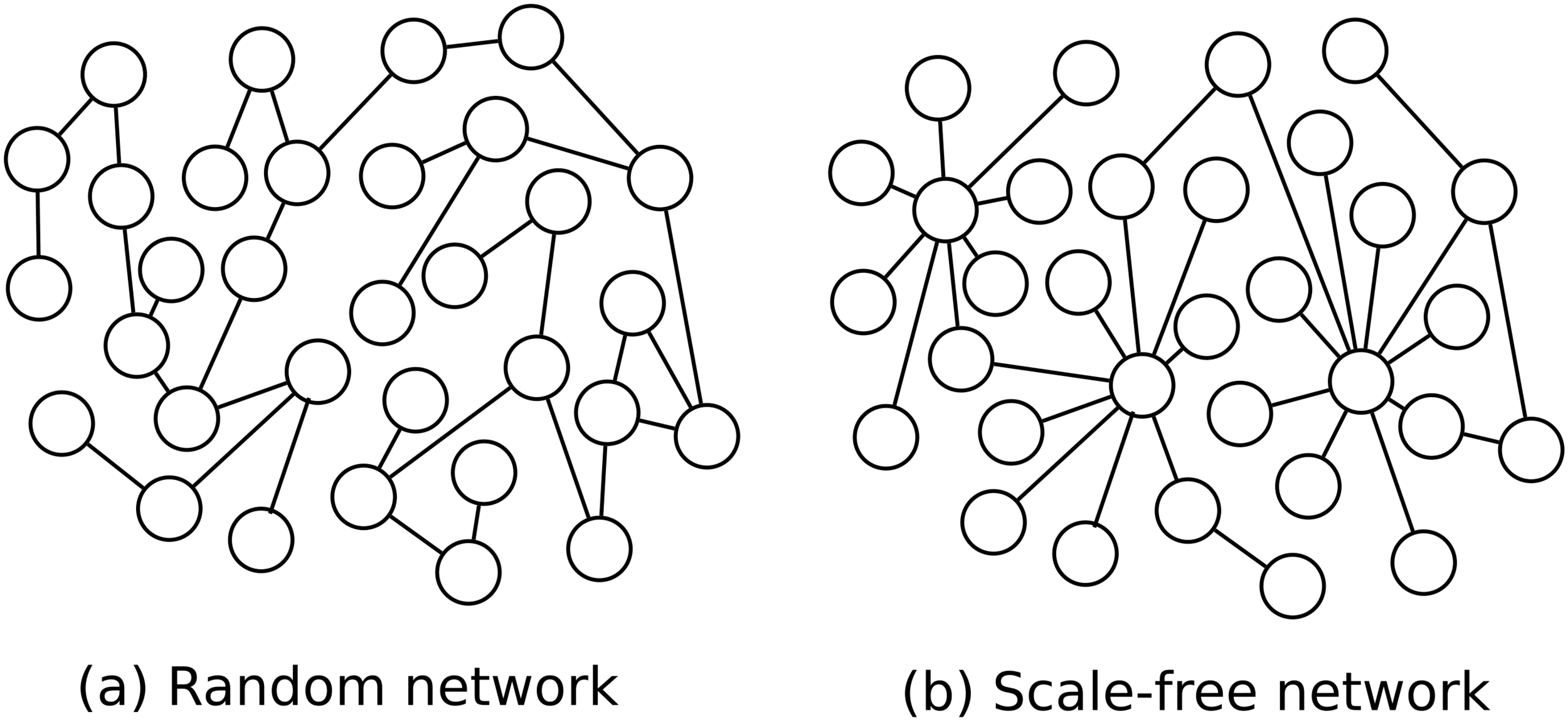

How are other scale-free aspects of cortex

- spatial organization

- temporal signals

- connectivity patterns

related to the scale-free representations we find here?

Chihye (Kelsey) Han

Are humans behaviorally sensitive to low-variance visual information present in the tail of the spectrum?

Individual differences in vision are high-dimensional.

FAQs

Why is dimensionality a useful measure?

- expressivity: How much information about the stimulus can be encoded?
  - animate vs inanimate
  - stubby/boxy vs thin/elongated
- robustness: How stable is the representation to irrelevant perturbations?
  - invariant recognition
  - smoothness of the code

Figure axis: low-D to high-D.

Power-law index* of −1: a critical point

Is the power law simply due to stimulus statistics?

Why should we care about low-variance dimensions?

i love sci-fi and am willing to put up with a lot. Sci-fi movies/tv are usually underfunded, under-appreciated and misunderstood. I tried to like this, I really did, but it is to good tv sci-fi as babylon 5 is to star trek (the original). Silly prosthetics, cheap cardboard sets, stilted dialogues, cg that doesn't match the background, and painfully one-dimensional characters cannot be overcome with a'sci-fi'setting. (I'm sure there are those of you out there who think babylon 5 is good sci-fi tv. It's not. It's clichéd and uninspiring.) while us viewers might like emotion and character development, sci-fi is a genre that does not take itself seriously (cf. Star trek). [...]

i love and to up with a lot. Are, and. I to like this, I really did, but it is to good as is to (the).,,, that doesn't the, and characters be with a''. (I'm there are those of you out there who think is good. It's not. It's and.) while us like and character, is a that does not take [...]

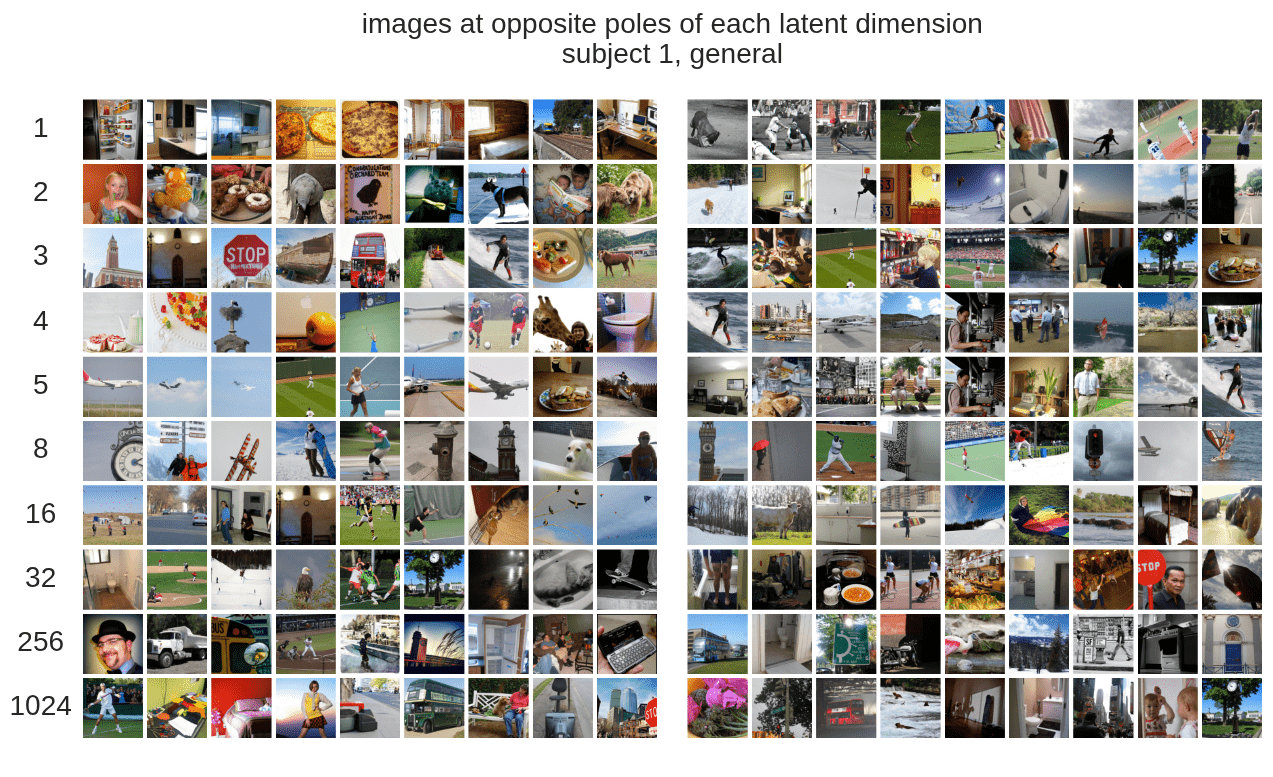

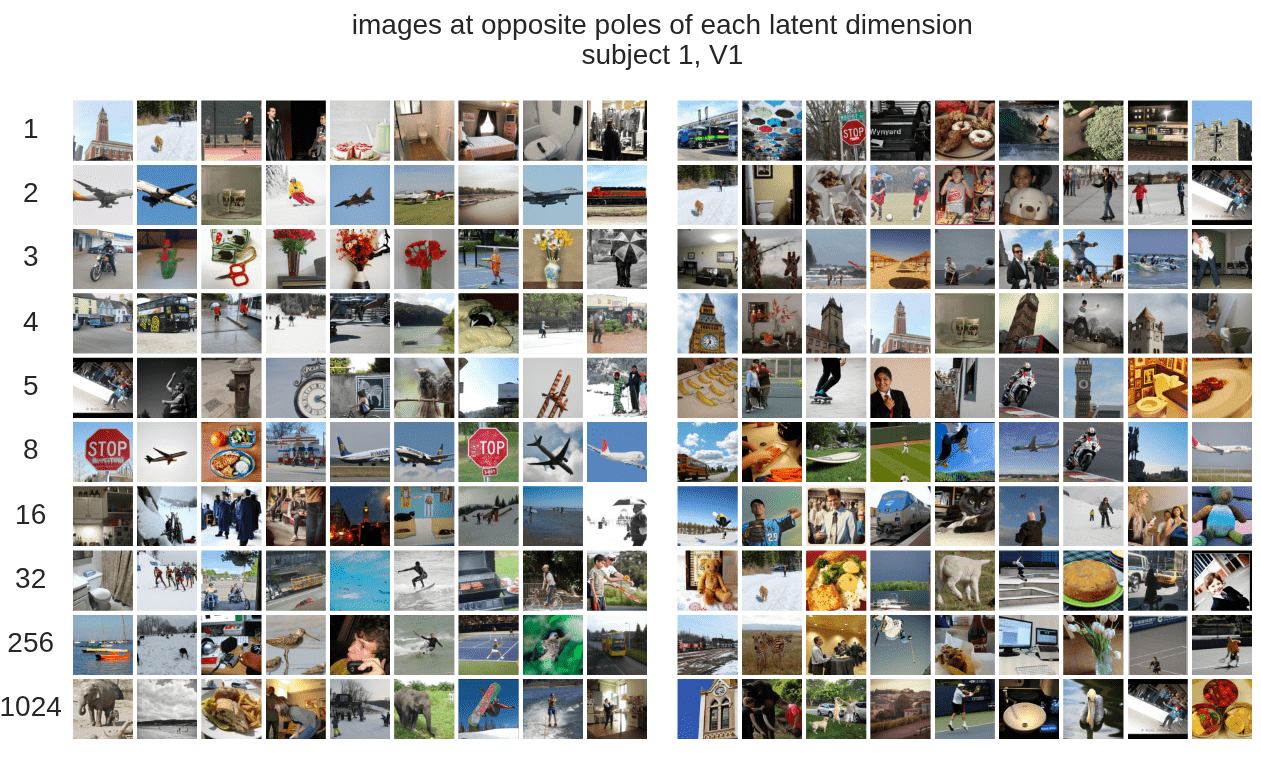

What are these latent dimensions encoding?

Where can I learn more about this fascinating stuff? ;)