Explainability in Machine Learning

Ramon Perez

From London with ❤️

September 15, 2023

Agenda

I. What is Explainability in Machine Learning? 🦾📝

II. Why is it Important? 🤔

III. How do we get started? 🛠️

IV. Demo 🤓

V. Explainability Recap 🏁

I. Explainability in Machine Learning 🦾📝


1 - What is it? 🤔

2 - Interpretable ML vs Explainable ML

3 - What do we want from ML?

4 - Which methods exist? 🤖

1 - What is it? 🤔

Explainability in ML refers to a set of tools and methods for evaluating ML models and the data used to train them during and after development.

2 - Interpretable ML vs Explainable ML

Industry: Interpretable ML == Explainable ML

Academia:

Interpretable ML is focused on understanding the inner workings of the system.

Explainable ML is focused on providing insights into the reasoning behind the system's output. It includes interpretable ML by design.

3 - What do we want from ML?

Fairness: Are predictions being made without any discernible bias?

Accountability: Can we trace these predictions reliably back to something or someone?

Transparency: Can we explain how and why predictions are made?

4 - Which methods exist? 🤖

ML as a Service

[Diagram: Lola, a Server, AWS, and an ML Model]

One Kind of Model

ML Model

Black Box Models

A black box model is a model that takes inputs and produces outputs, but its inner workings or the relationship between the inputs and outputs are not transparent and may be difficult to understand.

Examples of BB Models


RNN

DNN

CNN

Tree Ensembles

Explainability Methods for BBs


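Accumulated Local Effects (ALE) show how a single feature influences the model's prediction on average: they split the feature's range into intervals, measure how much predictions change as the feature moves across each interval (using only the data points that fall inside it), and accumulate those changes. Because they only use observed points within each interval, they avoid the unrealistic feature combinations that partial dependence plots can create when features are correlated.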
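A minimal first-order ALE sketch for one numeric feature, in NumPy. Assumptions: `predict` is any function mapping an (n, d) array to n predictions, bins are quantile-based, and the curve is centred with one common convention (count-weighted mean of bin midpoints). `ale_1d` and `toy_model` are hypothetical names, not Alibi's `ALE` implementation.

```python
import numpy as np

def ale_1d(predict, X, feature, n_bins=10):
    """First-order ALE for one numeric feature (a sketch, not Alibi's implementation)."""
    x = X[:, feature]
    # Quantile-based bin edges so each interval holds a similar number of points.
    edges = np.unique(np.quantile(x, np.linspace(0, 1, n_bins + 1)))
    n_intervals = len(edges) - 1
    # Point in (edges[k], edges[k+1]] goes to bin k; the minimum goes to bin 0.
    bin_idx = np.clip(np.searchsorted(edges, x, side="left") - 1, 0, n_intervals - 1)
    local_effects = np.zeros(n_intervals)
    counts = np.zeros(n_intervals)
    for k in range(n_intervals):
        members = X[bin_idx == k]
        if len(members) == 0:
            continue
        lower, upper = members.copy(), members.copy()
        lower[:, feature] = edges[k]
        upper[:, feature] = edges[k + 1]
        # Average change in prediction across this interval, using only points inside it.
        local_effects[k] = np.mean(predict(upper) - predict(lower))
        counts[k] = len(members)
    # Accumulate the local effects, then centre the curve around zero.
    ale = np.concatenate([[0.0], np.cumsum(local_effects)])
    midpoints = (ale[:-1] + ale[1:]) / 2
    ale -= np.sum(midpoints * counts) / np.sum(counts)
    return edges, ale

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(1000, 2))

def toy_model(X):
    # Hypothetical model: linear in feature 0, quadratic in feature 1.
    return 2 * X[:, 0] + X[:, 1] ** 2

edges, ale = ale_1d(toy_model, X, feature=0)
```

For this toy model the ALE curve of feature 0 rises with slope 2, regardless of feature 1.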

Permutation Importance randomly shuffles the values of a single feature across the dataset and measures how much the model's performance (e.g. its accuracy) drops as a result; the larger the drop, the more the model relies on that feature.
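A from-scratch sketch of permutation importance, assuming any `predict` function and a metric where higher is better. The classifier and data are hypothetical; in practice Alibi's `PermutationImportance` or scikit-learn's `sklearn.inspection.permutation_importance` would be used instead.

```python
import numpy as np

def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Average drop in `metric` when each feature column is shuffled (a sketch)."""
    rng = np.random.default_rng(seed)
    baseline = metric(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling one column breaks its link to the target but keeps its distribution.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - metric(y, predict(X_perm)))
        importances[j] = np.mean(drops)
    return importances

def accuracy(y_true, y_pred):
    return np.mean(y_true == y_pred)

def toy_classifier(X):
    # Hypothetical classifier that only looks at feature 0.
    return (X[:, 0] > 0).astype(int)

rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))
y = (X[:, 0] > 0).astype(int)
scores = permutation_importance(toy_classifier, X, y, accuracy)
```

Features the model never reads get an importance of exactly zero, while shuffling feature 0 drops accuracy to roughly chance level.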

Anchors are if-then rules describing a condition on the input data that, when satisfied, makes it highly likely that the model keeps the prediction being explained.

Another Kind of Model

ML Model

White Box Models

A white box model is a model whose internal logic, workings, and programming steps are transparent and can be easily understood and interpreted by a user.

Examples of WB Models


Linear Regression

Decision Trees

Generalized Additive Models

Logistic Regression
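To make "transparent" concrete: the explanation of a linear regression is its fitted coefficients. A minimal NumPy least-squares sketch on hypothetical, noiseless synthetic data generated from y = 1 + 3·x1 − 2·x2.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
# Hypothetical ground truth: y = 1 + 3*x1 - 2*x2 (no noise, for clarity).
y = 1 + 3 * X[:, 0] - 2 * X[:, 1]

# Prepend a column of ones so the intercept is learned as the first coefficient.
X_design = np.column_stack([np.ones(len(X)), X])
coef, *_ = np.linalg.lstsq(X_design, y, rcond=None)

for name, value in zip(["intercept", "x1", "x2"], coef):
    # Each coefficient is the change in prediction per unit change in that feature.
    print(f"{name}: {value:.2f}")
```

The whole reasoning of the fitted model is contained in these three numbers, which is what separates it from a black box.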

Explainability Methods for WBs

The methods below need access to a model's internals, such as its gradients or its tree structure.


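Similarity Explanations are instance-based explanations that justify a model's prediction on a test instance by pointing to the most similar training data points; Alibi's GradientSimilarity, for example, measures similarity between the gradients of the model's loss with respect to its parameters.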
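A minimal NumPy sketch of gradient-based similarity for a logistic regression with hypothetical fixed weights. For binary cross-entropy, the loss gradient with respect to the weights is (p − y)·x. Labelling the test point with the model's own prediction and using cosine similarity are assumptions of this sketch; `most_similar` is a hypothetical helper, not Alibi's API.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_grad(w, x, y):
    """Gradient of binary cross-entropy w.r.t. the weights w, for one example."""
    return (sigmoid(x @ w) - y) * x

def most_similar(w, X_train, y_train, x_test, top_k=3):
    # Label the test point with the model's own prediction (an assumption).
    y_hat = float(sigmoid(x_test @ w) >= 0.5)
    g_test = loss_grad(w, x_test, y_hat)
    g_train = np.array([loss_grad(w, x, y) for x, y in zip(X_train, y_train)])
    # Cosine similarity between the test gradient and each training gradient.
    norms = np.linalg.norm(g_train, axis=1) * np.linalg.norm(g_test) + 1e-12
    sims = g_train @ g_test / norms
    order = np.argsort(-sims)[:top_k]
    return order, sims[order]

w = np.array([1.0, -1.0])  # hypothetical trained weights
X_train = np.array([[2.0, 0.0], [0.0, 2.0], [1.5, 0.2], [-1.0, 1.0]])
y_train = np.array([1, 0, 1, 0])
order, sims = most_similar(w, X_train, y_train, np.array([1.8, 0.1]))
```

The two training points returned first are the class-1 examples whose gradients point the same way as the test instance's, which is the kind of evidence a similarity explanation presents.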

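Interventional Tree SHAP computes exact Shapley values for tree-based models, replacing the features that are "absent" from a coalition with values taken from a background dataset. Drawing those values independently of the explained instance breaks the dependencies between correlated features, which corresponds to intervening on them.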
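The quantity that interventional Tree SHAP computes can be written down by brute force: the value of a coalition S is the average model output when S's features are fixed to the instance and the rest come from background rows. The sketch below enumerates all subsets, so it only works for a handful of features; Tree SHAP gets the same values efficiently from the tree structure. The depth-2 tree and data are hypothetical.

```python
import itertools
import math
import numpy as np

def interventional_shap(f, x, background):
    """Exact interventional Shapley values by enumerating feature subsets (exponential cost)."""
    d = len(x)

    def value(subset):
        Z = background.copy()
        idx = list(subset)
        if idx:
            Z[:, idx] = x[idx]  # fix the coalition's features to the instance's values
        return f(Z).mean()      # other features keep their background values

    phi = np.zeros(d)
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for size in range(d):
            for S in itertools.combinations(others, size):
                weight = math.factorial(size) * math.factorial(d - size - 1) / math.factorial(d)
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi

def toy_tree(X):
    # Hypothetical depth-2 tree: split on feature 0, then on feature 1 (feature 2 unused).
    return np.where(X[:, 0] > 0.5, np.where(X[:, 1] > 0.5, 3.0, 1.0), 0.0)

rng = np.random.default_rng(0)
background = rng.uniform(0, 1, size=(100, 3))
x = np.array([0.9, 0.8, 0.1])
phi = interventional_shap(toy_tree, x, background)
```

The attributions add up to f(x) minus the average prediction on the background data, and the unused feature gets exactly zero.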

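Integrated Gradients attributes a prediction to each feature by integrating the model's partial derivative with respect to that feature along the straight-line path from a baseline input to the instance, then multiplying by the feature's distance from the baseline.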
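A minimal sketch of Integrated Gradients for a hypothetical logistic-regression model whose gradient is known in closed form, so no deep learning framework is needed. The path integral IG_i(x) = (x_i − x'_i) · ∫₀¹ ∂F(x' + α(x − x'))/∂x_i dα is approximated with a midpoint Riemann sum; the weights, bias, and all-zeros baseline are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical differentiable model: F(x) = sigmoid(w . x + b).
w = np.array([2.0, -1.0, 0.5])
b = -0.5

def F(x):
    return sigmoid(x @ w + b)

def grad_F(x):
    # dF/dx = sigmoid'(z) * w, with sigmoid'(z) = s * (1 - s).
    s = F(x)
    return s * (1 - s) * w

def integrated_gradients(x, baseline, steps=200):
    # Midpoint Riemann sum over points on the straight path from baseline to x.
    alphas = (np.arange(steps) + 0.5) / steps
    path = baseline + alphas[:, None] * (x - baseline)
    avg_grad = np.mean([grad_F(p) for p in path], axis=0)
    return (x - baseline) * avg_grad

x = np.array([1.0, 0.5, 2.0])
baseline = np.zeros(3)
attributions = integrated_gradients(x, baseline)
```

A useful sanity check is completeness: the attributions should sum to F(x) − F(baseline), up to the error of the numerical integration.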

Local Insights

ML Model

| v1 | v2 | v3 | v4 | v5 |
|---|---|---|---|---|
| 12 | 33 | 41 | 21 | 90 |

| Target |
|---|
| 0 |

Local interpretability techniques aim to give a better understanding of the model prediction for a specific instance or example.

Global Insights

ML Model

Global interpretability techniques aim to give a better understanding of the model as a whole and of the global effects of the input features on the model's predictions.
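Local and global views can come from the same model. In this sketch, which assumes a hypothetical linear scorer over v1..v5 and hypothetical data, each feature contributes w_i·(x_i − mean_i). The local explanation covers the single instance in the Local Insights table, and the global one averages the magnitude of those contributions over the whole dataset.

```python
import numpy as np

# Hypothetical linear scoring model over features v1..v5.
weights = np.array([0.5, -0.2, 0.1, 0.0, 0.05])
rng = np.random.default_rng(0)
X = rng.uniform(0, 100, size=(1000, 5))  # hypothetical dataset
feature_means = X.mean(axis=0)

def contributions(rows):
    # For a linear model, feature i shifts the score by w_i * (x_i - mean_i)
    # relative to the score at the feature means.
    return weights * (rows - feature_means)

# Local: explain one instance (v1..v5 from the Local Insights table).
instance = np.array([12, 33, 41, 21, 90])
local = contributions(instance)

# Global: average magnitude of each feature's contribution over the dataset.
global_importance = np.abs(contributions(X)).mean(axis=0)
```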

In Reality, Explainability Techniques can be...

Intrinsic: ...for models that are inherently transparent

Post hoc: ...applied after model training, for models that are typically more complex

Model-Specific: ...dependent on the model

Model-Agnostic: ...independent of the model

Local: ...to understand the model prediction for a specific example

Global: ...to understand the global effects of the input features on the model prediction

II. Why is it Important? 🤔

Testing

Functionality

Research

III. How do we get started? 🛠️

Tools

Alibi

Alibi Explain is an open source Python library aimed at machine learning model inspection and interpretation. The focus of the library is to provide high-quality implementations of black-box, white-box, local and global explanation methods for classification and regression models.

Alibi Explain

Getting Started

pip install alibi
# or
conda install -c conda-forge alibi

import alibi
alibi.explainers.__all__

# outputs
[
'ALE', 'AnchorTabular', 'DistributedAnchorTabular',
'AnchorText', 'AnchorImage', 'CEM',
'Counterfactual', 'CounterfactualProto', 'CounterfactualRL',
'CounterfactualRLTabular', 'PartialDependence', 'TreePartialDependence',
'PartialDependenceVariance', 'PermutationImportance', 'plot_ale',
'plot_pd', 'plot_pd_variance', 'plot_permutation_importance',
'IntegratedGradients', 'KernelShap', 'TreeShap',
'GradientSimilarity'
]

Method Walkthrough

from alibi.explainers import AnchorTabular  # the main method
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

Anchors explain the behaviour of complex models with high-precision if-then rules called anchors. An anchor is a locally sufficient condition: whenever it holds, the model makes the same prediction as for the explained instance with a high degree of confidence.

For example, an anchor attached to an income classifier might say: "Hugo makes more than £50,000 because he is married and his age is between 35 and 45 years."

Method Walkthrough

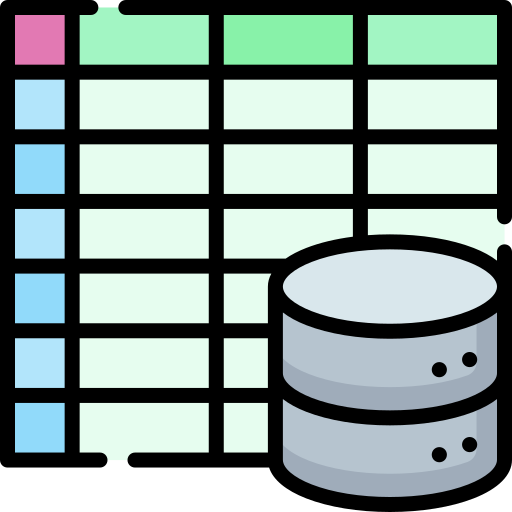

df = pd.read_csv("iris_data.csv")

df.head()| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | target |

|---|---|---|---|---|

| 5.1 | 3.8 | 1.5 | 0.3 | 0 |

| 6.0 | 2.2 | 5.0 | 1.5 | 2 |

| 4.4 | 3.2 | 1.3 | 0.2 | 0 |

| 6.3 | 2.9 | 5.6 | 1.8 | 2 |

idx = 145

X_train, y_train = dataset.data[:idx, :], dataset.target[:idx]

X_test, y_test = dataset.data[idx:, :], dataset.target[idx:]1 - Let's load our dataset

2 - Create a training and testing dataset

3 - Instantiate a classifier

clf = RandomForestClassifier(n_estimators=100, random_state=0)

clf.fit(X_train, Y_train)Method Walkthrough

4 - Instantiate our Alibi Explainer and fit it to the training set

explainer = AnchorTabular(clf.predict_proba, feature_names)
# disc_perc: percentiles used to discretise the numerical features
explainer.fit(X_train, disc_perc=(25, 50, 75))

AnchorTabular(meta={
'name': 'AnchorTabular',
'type': ['blackbox'],
'explanations': ['local'],
'params': {'seed': None, 'disc_perc': (25, 50, 75)}
})

5 - Get an Anchor for a prediction in the test set

idx = 0  # explain the first instance of the test set
row = X_test[idx].reshape(1, -1)
predicted_class = explainer.predictor(row)[0]
print('Prediction: ', class_names[predicted_class])

Prediction: virginica

Method Walkthrough

6 - Set a precision threshold and explain a prediction

explanation = explainer.explain(X_test[idx], threshold=0.95)
print('Anchor: %s' % (' AND '.join(explanation.anchor)))
print('Precision: %.2f' % explanation.precision)
print('Coverage: %.2f' % explanation.coverage)

Anchor: petal width (cm) > 1.80 AND sepal width (cm) <= 2.80
Precision: 0.98
Coverage: 0.32

Interpretation: A threshold of 0.95 asks for an anchor whose estimated precision is at least 0.95, meaning that on observations where the anchor holds, the model's prediction matches the prediction on the explained instance at least 95% of the time (the anchor found here reaches 0.98). A coverage of 0.32 means the anchor applies to roughly a third of the samples drawn from the training data.

Anchors Explained

The anchors method explains individual predictions of any black box classification model by finding a decision rule that “anchors” the prediction sufficiently.

A rule anchors a prediction if changes in other feature values do not affect the prediction.

Anchors use reinforcement learning techniques in combination with a graph search algorithm to reduce the number of model calls to a minimum while still being able to recover from local optima.

Anchors include the notion of coverage, stating precisely to which other, possibly unseen, instances they apply.
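Precision and coverage are easy to state for a fixed dataset. This simplified sketch evaluates a rule on a hypothetical array, whereas Alibi estimates precision on perturbation samples with a bandit-style search. The rule mirrors the one from the walkthrough, while the data, the model, and the `rule_precision_coverage` helper are hypothetical.

```python
import numpy as np

def rule_precision_coverage(predict, X, rule, target_class):
    """Empirical precision and coverage of an anchor-style rule (a sketch).

    rule: list of (feature_index, operator, threshold) conditions joined by AND.
    """
    ops = {">": np.greater, "<=": np.less_equal}
    holds = np.ones(len(X), dtype=bool)
    for feature, op, threshold in rule:
        holds &= ops[op](X[:, feature], threshold)
    coverage = holds.mean()  # share of rows the rule applies to
    if not holds.any():
        return 0.0, 0.0
    # Among rows where the rule holds, how often does the model keep the explained prediction?
    precision = np.mean(predict(X[holds]) == target_class)
    return precision, coverage

# Hypothetical data: column 0 = petal width (cm), column 1 = sepal width (cm).
X = np.array([[2.0, 2.5], [1.9, 2.7], [2.2, 3.0], [1.0, 2.0], [1.5, 2.5]])

def toy_predict(X):
    # Hypothetical model: class 2 only when petal width exceeds 1.95, else class 1.
    return np.where(X[:, 0] > 1.95, 2, 1)

rule = [(0, ">", 1.8), (1, "<=", 2.8)]
precision, coverage = rule_precision_coverage(toy_predict, X, rule, target_class=2)
```

Here the rule covers 2 of the 5 rows but keeps the prediction in only one of them, so it would fail a 0.95 precision threshold and the search would need a tighter rule.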

Source: Molnar, C. (2022). Interpretable machine learning: A guide for making Black Box models explainable. Christoph Molnar.

IV. Demo Time 🤓

V. Explainability Recap

Any System is Prone to Errors, so Question the System

Relying on "Metrics" alone can be a recipe for disaster: understand the "Why" instead

Data is biased, and therefore so are machine learning models