Automated Docker Deployment

what

The goal is a self-service mechanism that lets developers, testers, and operations deploy Docker-based solutions. In this context, AL, together with its collection of 50+ containers, is considered a single solution.

why

As an organization, we are not ready to deploy pieces of a solution individually. We need a gating mechanism that allows users to deploy a unique combination of containers as a single entity. QA, in particular, needs to be in complete control of what is deployed so that defects can be associated with the correct build.

how

- tie the generation of deployment fragments into the automated Bamboo builds

- create a Docker Compose registry that holds the descriptors that are assembled as a result of the individual container builds

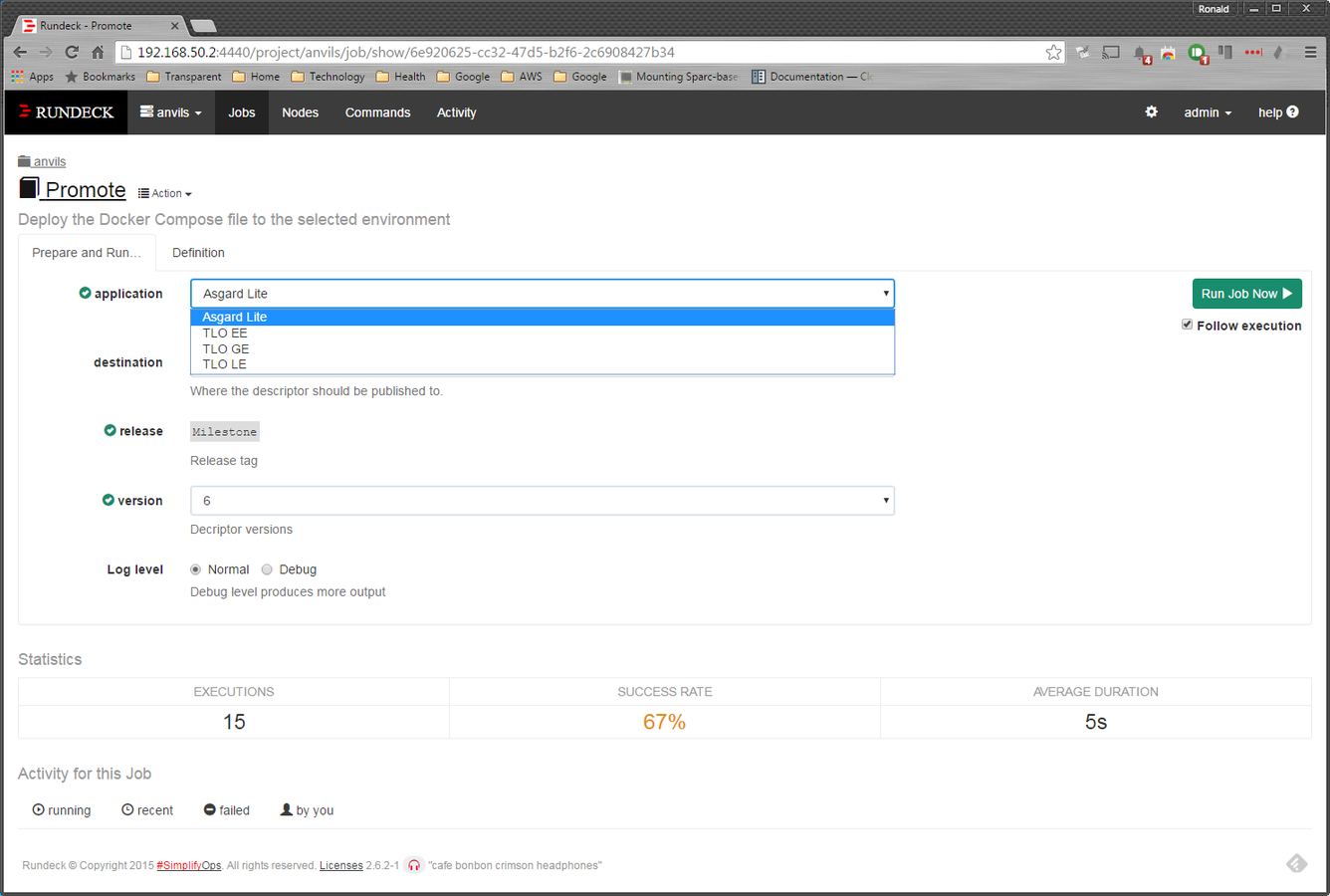

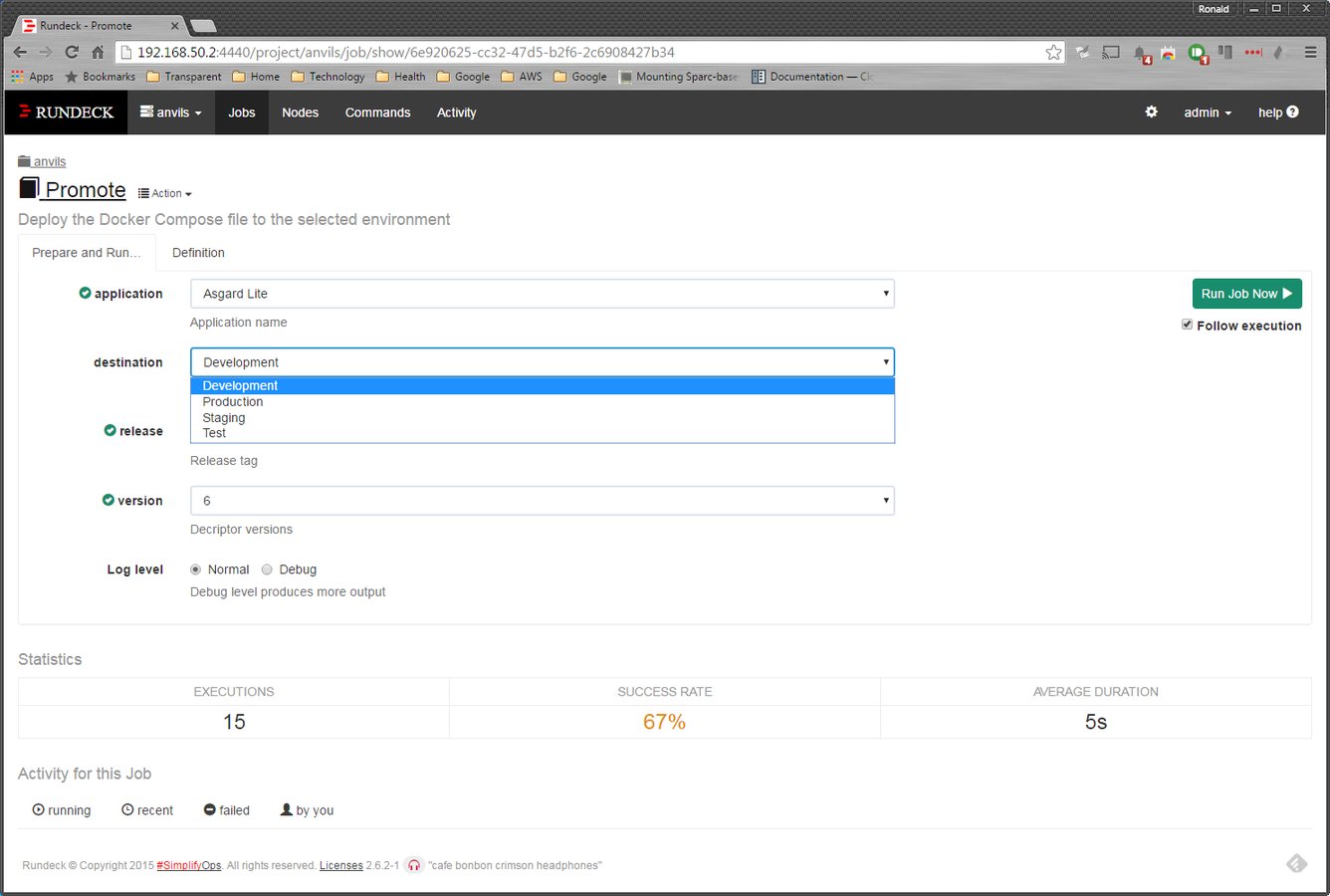

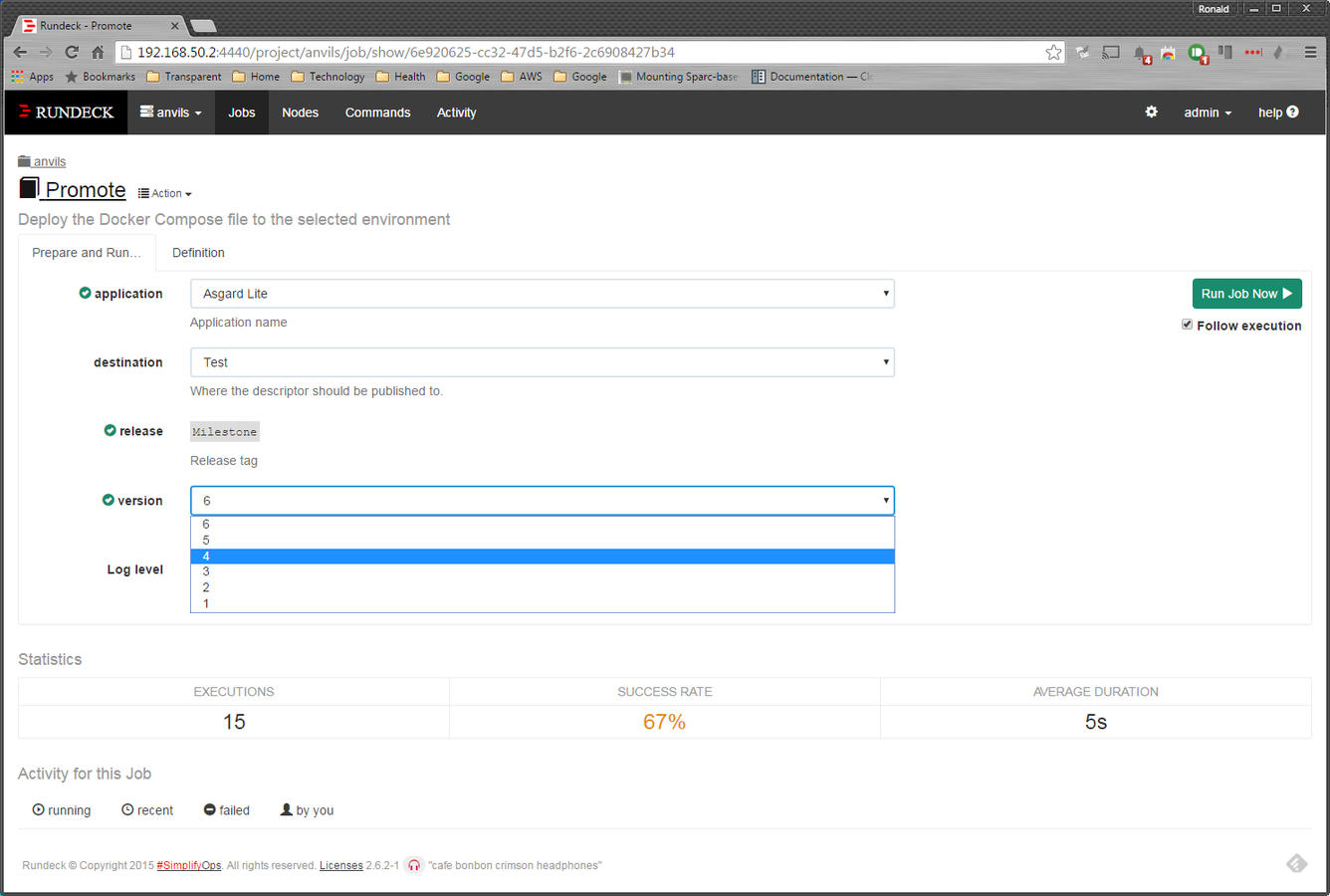

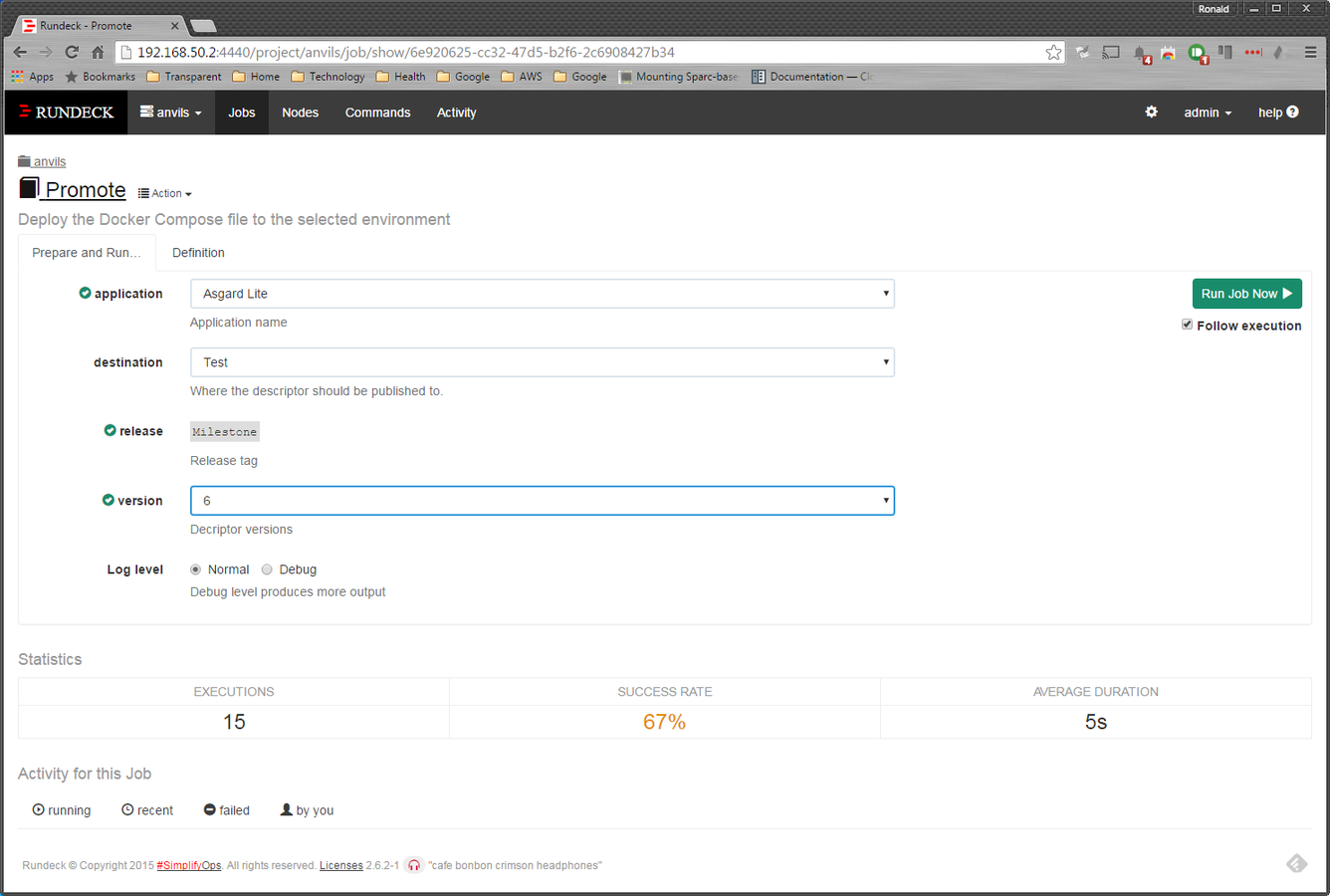

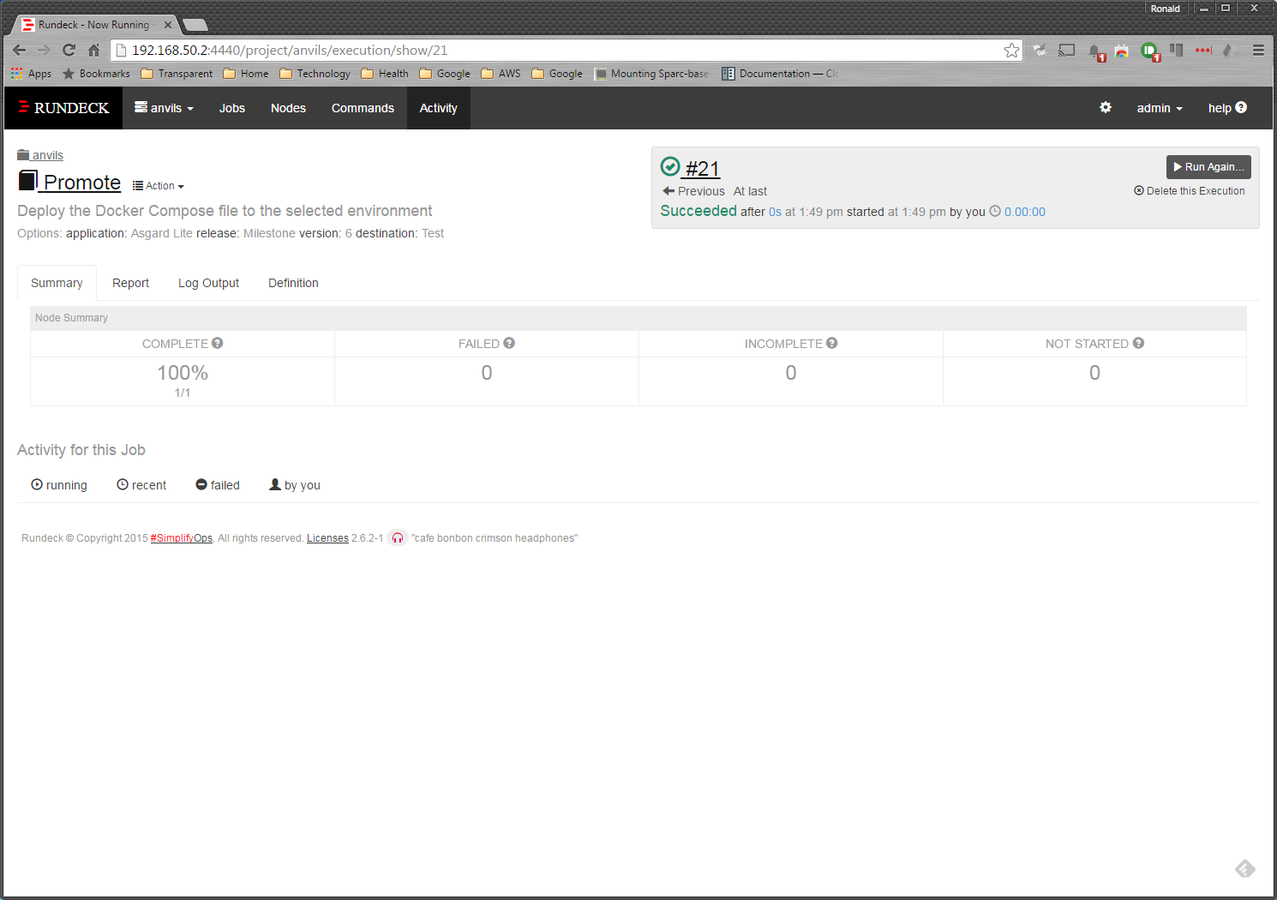

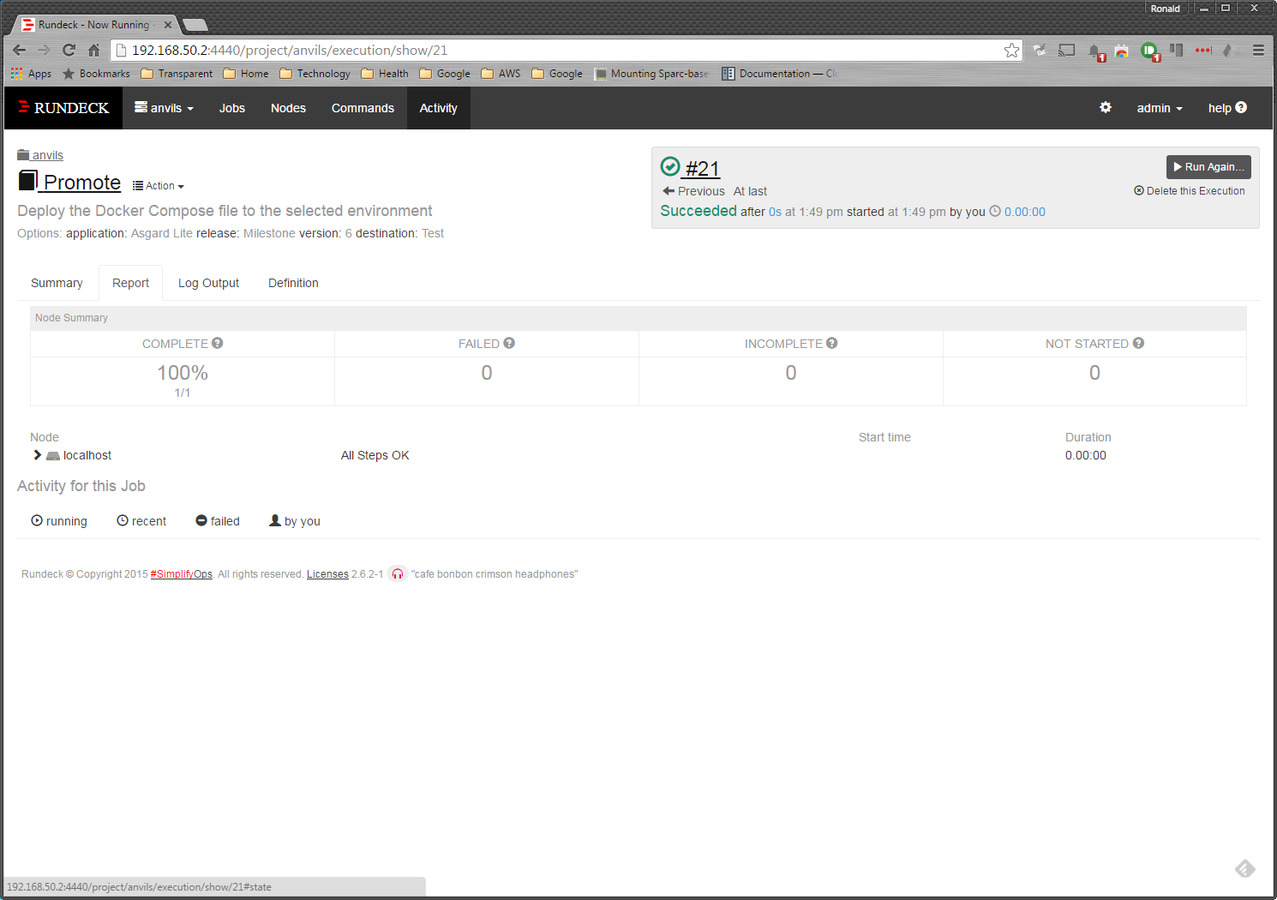

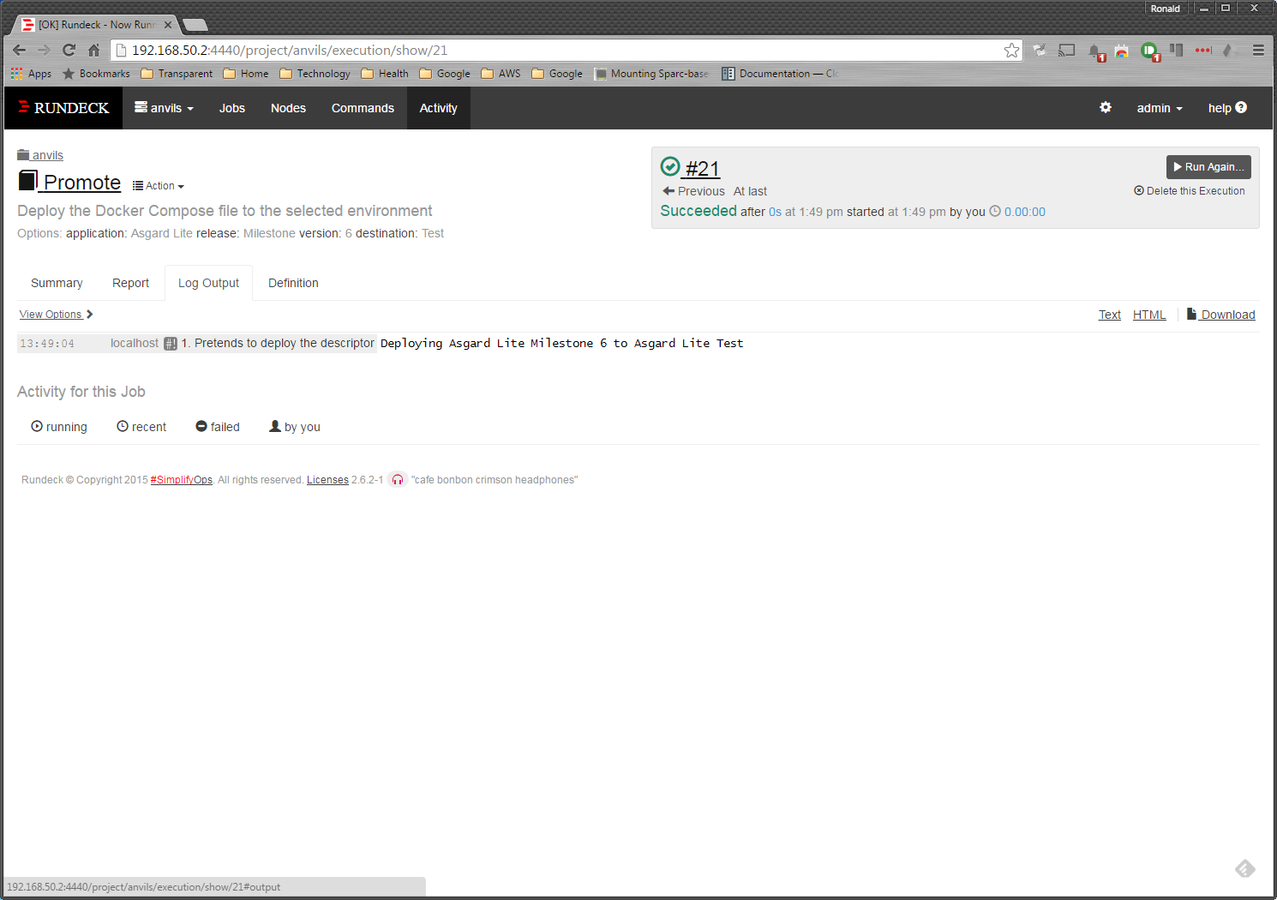

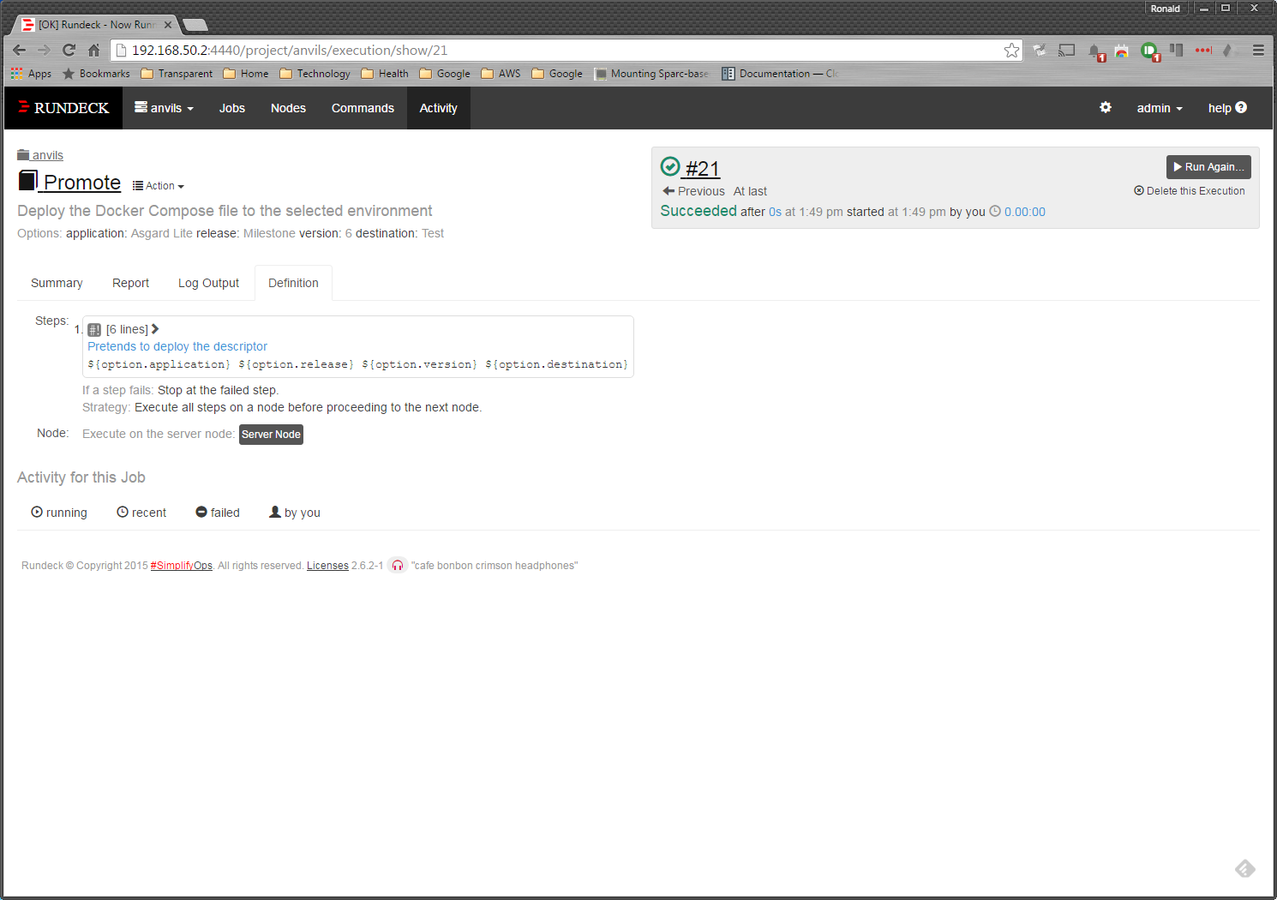

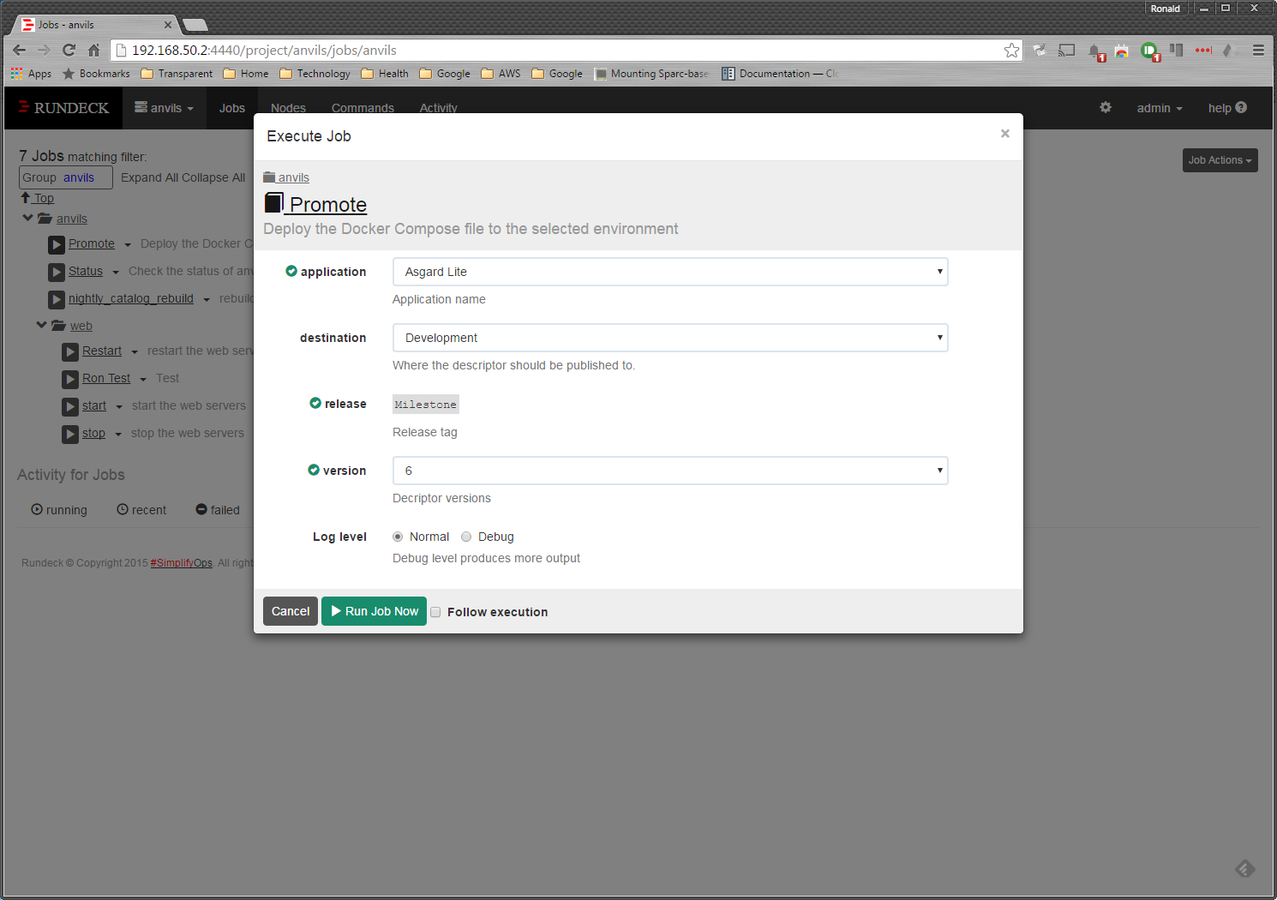

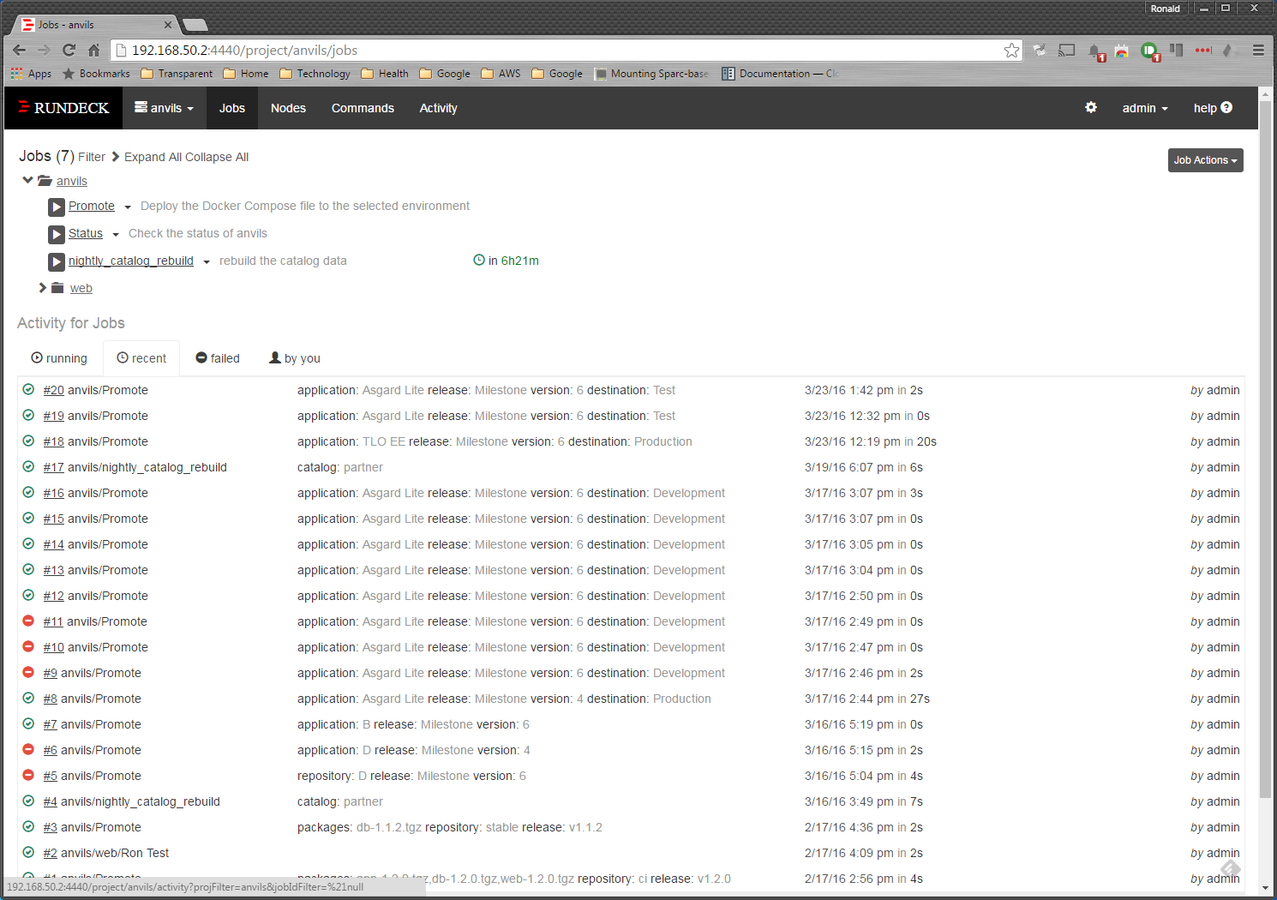

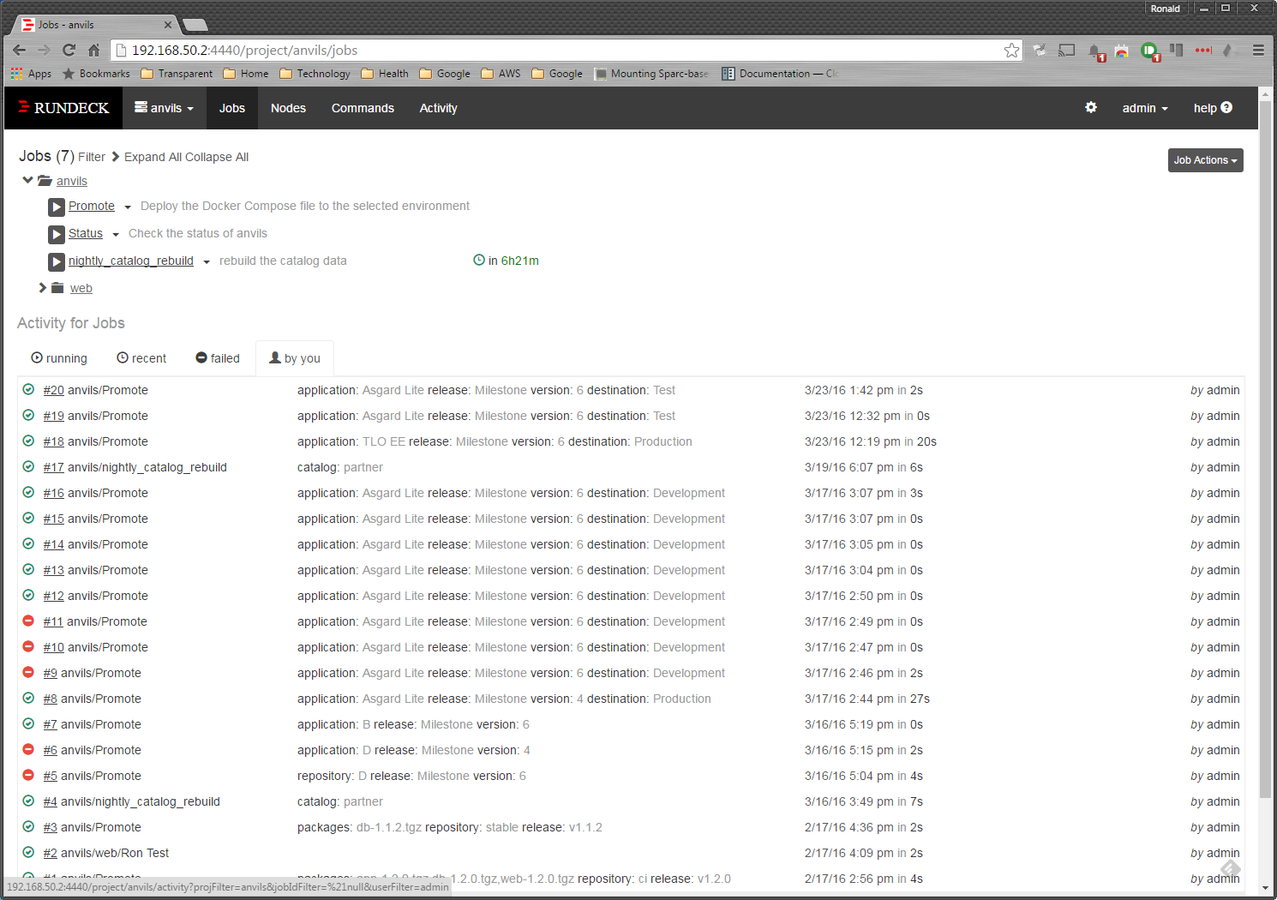

- provide self-service deployment of a particular descriptor via Rundeck, the open-source job scheduler
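As a rough illustration of the Rundeck item above, the sketch below builds the command a Rundeck job might run: an SSH call that starts a one-shot container on the target host to perform the deployment. The deployer image name (`example/compose-deployer`), its argument contract, and the SSH target are all hypothetical; only the `/descriptor/application/...` path shape comes from this deck.

```python
import shlex
from urllib.parse import quote


def build_deploy_command(ssh_target, registry_url, application, release, version,
                         deployer_image="example/compose-deployer"):
    """Return the argv for an SSH call that starts a one-shot deployer container."""
    # Descriptor path in the shape used by this deck; spaces are percent-encoded.
    descriptor_url = "{}/descriptor/application/{}/{}/{}".format(
        registry_url.rstrip("/"), quote(application), quote(release), version)
    remote = [
        "docker", "run", "--rm",
        # Give the deployer access to the host's Docker daemon.
        "-v", "/var/run/docker.sock:/var/run/docker.sock",
        deployer_image,   # hypothetical image name
        descriptor_url,   # hypothetical argument contract
    ]
    # ssh hands the remote command to a shell, so pass it as one quoted string.
    return ["ssh", ssh_target, shlex.join(remote)]


command = build_deploy_command(
    "deploy@qa-host", "http://localhost:9090", "TLO GE", "Milestone", 1)
```

The function only builds the argument list, so it can be inspected or logged before anything is executed; running it would be a matter of passing `command` to `subprocess.run`.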

Sequence

- A Bamboo build completes and its tests pass

- Bamboo makes a REST call to publish the current Docker Compose fragment to the compose registry

- User selects the desired descriptor to deploy from the Rundeck web interface

- Rundeck executes the deployment logic, most likely via an SSH command that fires off a Docker container that does the actual deployment
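The publish step in the sequence above could look roughly like the following. This is a minimal sketch, assuming the registry accepts `PUT /fragment` with a JSON body holding a base64-encoded YAML fragment plus `applications` and `releases` lists; the function name and the sample fragment are illustrative only.

```python
import base64
import json
import urllib.request


def build_fragment_request(registry_url, compose_yaml, applications, releases):
    """Build (but do not send) a PUT /fragment request for the compose registry."""
    payload = {
        # The YAML fragment travels as base64 text inside the JSON body.
        "fragment": base64.b64encode(compose_yaml.encode("utf-8")).decode("ascii"),
        "applications": list(applications),
        "releases": list(releases),
    }
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=registry_url.rstrip("/") + "/fragment",
        data=body,
        method="PUT",
        headers={
            "Accept": "application/json",
            "Content-Type": "application/json",
        },
    )


request = build_fragment_request(
    "http://localhost:9090",
    "transmission:\n  image: dperson/transmission\n",
    ["TLO GE", "TLO EE"],
    ["Milestone"],
)
# Sending is left to the caller, e.g. urllib.request.urlopen(request).
```

Keeping request construction separate from sending makes it easy to check the payload in a build step before wiring it into all of the Bamboo plans.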

example

    PUT /fragment HTTP/1.1
    Accept: application/json
    Content-Type: application/json
    User-Agent: Java/1.8.0_72
    Host: localhost:9090
    Connection: keep-alive
    Content-Length: 334

    {
      "fragment": "base64 encoding of the Transmission YML",
      "applications": ["TLO GE", "TLO EE"],
      "releases": ["Milestone"]
    }

example

/descriptor/application/TLO GE/Milestone/1

    transmission-data:
      image: busybox
      container_name: transmission-data
      command: 'true'
      volumes:
        - /var/lib/transmission-daemon

    transmission:
      image: dperson/transmission
      container_name: transmission
      volumes_from:
        - transmission-data
      restart: always
      net: host
      ports:
        - 9091:9091
        - 51413:51413
      environment:
        TRUSER: admin
        TRPASSWD: admin
        TIMEZONE: UTC

example

    PUT /fragment HTTP/1.1
    Accept: application/json
    Content-Type: application/json
    User-Agent: Java/1.8.0_72
    Host: localhost:9090
    Connection: keep-alive
    Content-Length: 334

    {
      "fragment": "base64 encoding of the Plex YML",
      "applications": ["TLO GE", "TLO EE"],
      "releases": ["Milestone"]
    }

example

/descriptor/application/TLO GE/Milestone/2

    transmission-data:
      image: busybox
      container_name: transmission-data
      command: 'true'
      volumes:
        - /var/lib/transmission-daemon

    transmission:
      image: dperson/transmission
      container_name: transmission
      volumes_from:
        - transmission-data
      restart: always
      net: host
      ports:
        - 9091:9091
        - 51413:51413
      environment:
        TRUSER: admin
        TRPASSWD: admin
        TIMEZONE: UTC

    plex-data:
      image: busybox
      container_name: plex-data
      command: 'true'
      volumes:
        - /config

    plex:
      image: timhaak/plex
      container_name: plex
      restart: always
      net: host
      ports:
        - 32400:32400
      volumes_from:
        - plex-data
        - bittorrent-sync-data
      volumes:
        - /mnt/nas:/data

example

    PUT /fragment HTTP/1.1
    Accept: application/json
    Content-Type: application/json
    User-Agent: Java/1.8.0_72
    Host: localhost:9090
    Connection: keep-alive
    Content-Length: 334

    {
      "fragment": "base64 encoding of the MySQL YML",
      "applications": ["TLO GE", "TLO EE"],
      "releases": ["Milestone"]
    }

example

/descriptor/application/TLO GE/Milestone/3

    transmission-data:
      image: busybox
      container_name: transmission-data
      command: 'true'
      volumes:
        - /var/lib/transmission-daemon

    transmission:
      image: dperson/transmission
      container_name: transmission
      volumes_from:
        - transmission-data
      restart: always
      net: host
      ports:
        - 9091:9091
        - 51413:51413
      environment:
        TRUSER: admin
        TRPASSWD: admin
        TIMEZONE: UTC

    plex-data:
      image: busybox
      container_name: plex-data
      command: 'true'
      volumes:
        - /config

    plex:
      image: timhaak/plex
      container_name: plex
      restart: always
      net: host
      ports:
        - 32400:32400
      volumes_from:
        - plex-data
        - bittorrent-sync-data
      volumes:
        - /mnt/nas:/data

    mysql-data:
      image: busybox
      container_name: mysql-data
      volumes:
        - /var/lib/mysql
        - /etc/mysql/conf.d

    mysql:
      image: mysql
      container_name: mysql
      net: host
      volumes_from:
        - mysql-data
      restart: always
      ports:
        - 3306:3306
      environment:
        MYSQL_ROOT_PASSWORD: sa
        MYSQL_USER: mysql
        MYSQL_PASSWORD: mysql
        MYSQL_DATABASE: owncloud
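The examples show descriptors 1, 2, and 3 each growing by one published fragment. One way the registry might assemble them is sketched below. The assembly rule is inferred from those examples rather than taken from a specification, and the class and method names are hypothetical. Plain text concatenation only works while every fragment defines distinct service names; re-publishing a service would need real YAML merging.

```python
import base64


class ComposeRegistry:
    """In-memory stand-in for the compose registry (hypothetical design)."""

    def __init__(self):
        # (application, release) -> list of decoded YAML fragments, oldest first
        self._fragments = {}

    def put_fragment(self, fragment_b64, applications, releases):
        """Store one fragment under every application/release pair it names."""
        text = base64.b64decode(fragment_b64).decode("utf-8").strip()
        for application in applications:
            for release in releases:
                self._fragments.setdefault((application, release), []).append(text)

    def descriptor(self, application, release, version):
        """Return descriptor `version`: the first `version` fragments joined."""
        fragments = self._fragments.get((application, release), [])
        if not 1 <= version <= len(fragments):
            raise KeyError((application, release, version))
        return "\n\n".join(fragments[:version]) + "\n"


def encode(text):
    """Base64-encode a YAML fragment the way the PUT body carries it."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


registry = ComposeRegistry()
for yml in ("transmission:\n  image: dperson/transmission\n",
            "plex:\n  image: timhaak/plex\n",
            "mysql:\n  image: mysql\n"):
    registry.put_fragment(encode(yml), ["TLO GE", "TLO EE"], ["Milestone"])

second = registry.descriptor("TLO GE", "Milestone", 2)
```

Here `second` contains the Transmission and Plex services but not MySQL, mirroring `/descriptor/application/TLO GE/Milestone/2`.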


The Good

- Rundeck is off the shelf and under active development

- Rundeck can tie into LDAP for authentication

- We have some control over the UI

- Job execution is typically done via SSH so we can deploy Rundeck as a container

- Rundeck can use our existing MySQL infrastructure

- Docker Compose works both in development and AWS environments

- Docker Compose also works in Docker Swarm, if we decide to use that in development

The BAD

- I haven't figured out a way to make the development target actually work!

- How do I schedule on a particular developer's machine? Do we even need to?

- The job can "succeed" simply by handing the descriptor to the scheduler, even though one or more containers might not start correctly. The user gets no feedback after pushing the button. We need some sort of "all healthy" check.
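A first cut at the "all healthy" check from the last item could simply ask Docker whether each expected container is running. This is a sketch under assumptions: it shells out to the `docker inspect` CLI on the target host, treats "running" as "healthy", and expects the caller to supply the container names. The function names are illustrative.

```python
import subprocess


def inspect_running(container_name):
    """Ask the local Docker CLI whether a container is running."""
    result = subprocess.run(
        ["docker", "inspect", "--format", "{{.State.Running}}", container_name],
        capture_output=True,
        text=True,
    )
    return result.returncode == 0 and result.stdout.strip() == "true"


def unhealthy_containers(container_names, is_running=inspect_running):
    """Return the names that are not running; an empty list means all healthy."""
    return [name for name in container_names if not is_running(name)]


# Dry run with a fake probe, so no Docker daemon is needed.
fake_state = {"transmission": True, "plex": False}
failed = unhealthy_containers(
    ["transmission", "plex", "mysql"],
    is_running=lambda name: fake_state.get(name, False),
)
```

Data-only containers such as `transmission-data` exit immediately by design, so they would need to be left out of the list. A container under `restart: always` can also report as running while it is crash-looping, so a stronger check would probe the service itself.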

The UGLY

- We don't have complete control over the Rundeck UI, so it won't be pretty

- Requires a homegrown Docker Compose registry, which we must maintain. I don't believe such a beast already exists in the wild.

- We'll have to rig up all 50 Bamboo projects to send their fragments to the registry

- Need to investigate how Amazon ECS notifies us of deployment failures

- We would have to maintain the Rundeck container ourselves

Next Steps

Do we continue down this path or is there something else we should investigate?