Decoding Neural Image Captioning

Anthill Inside 2017

Sachin Kumar @sachinkmr

About Me

- Sophomore at NSIT, Delhi University
  B.E. in Information Technology
- Teaching Assistant at Coding Blocks
- Independent study on Deep Learning and its applications

Outline

- Intro to Neural Image Captioning (NIC)
- Motivation
- Dataset
- Deep Dive into NIC
- Results
- Your Implementation
- Summary

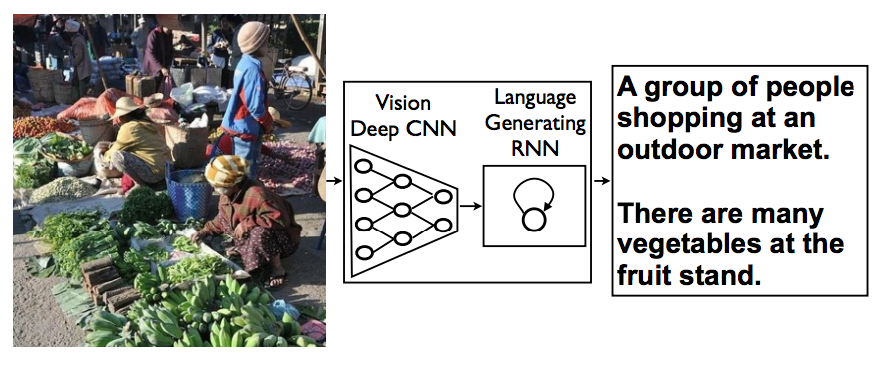

What is Neural Image Captioning?

Can we build a system that takes an image as input and generates a reasonable caption for it in plain English?

Input to the system:

Output:

- A group of teenage boys on a road jumping joyfully.
- Four boys running and jumping.
- Four kids jumping on the street with a blue car in the back.
- Four young men are running on a street and jumping for joy.
- Several young man jumping down the street.

Let's see how we can accomplish this!

The Task

Automatically describe images with words.

Why?

- Useful for telling stories about album and photo uploads.
- It requires a detailed understanding of an image and the ability to communicate that information in natural language.
- It's cool!

Dataset

- Flickr8k
  - 8K images
- MS COCO
  - Training images [80K / 13 GB]
  - Validation images [40K / 6.2 GB]
  - Testing images [40K / 12.4 GB]
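Before training, the captions have to be grouped per image and tokenized. A minimal preprocessing sketch, assuming a tab-separated `image.jpg#index<TAB>caption` line layout (the layout of the Flickr8k token file), groups the captions of each image, lowercases them, strips punctuation, and wraps each caption in start/end markers so the model knows where a sentence begins and ends. The `startseq`/`endseq` tokens, the image name `img1.jpg` and the helper names are illustrative assumptions, not part of the dataset.

```python
import re
from collections import defaultdict

def load_captions(lines):
    """Group captions by image, assuming lines like 'img.jpg#0<TAB>A caption.'."""
    captions = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        image_tag, caption = line.split("\t", 1)
        image_id = image_tag.split("#", 1)[0]  # drop the '#<index>' suffix
        captions[image_id].append(caption)
    return dict(captions)

def clean(caption):
    """Lowercase, keep only letters, and add (hypothetical) start/end tokens."""
    words = re.findall(r"[a-z]+", caption.lower())
    return ["startseq"] + words + ["endseq"]

def build_vocab(captions, min_count=1):
    """Map every word seen at least `min_count` times to an integer index."""
    counts = defaultdict(int)
    for caps in captions.values():
        for cap in caps:
            for word in clean(cap):
                counts[word] += 1
    words = sorted(w for w, c in counts.items() if c >= min_count)
    return {w: i for i, w in enumerate(words)}

sample = [
    "img1.jpg#0\tFour boys running and jumping.",
    "img1.jpg#1\tSeveral young man jumping down the street.",
]
caps = load_captions(sample)
print(clean(caps["img1.jpg"][0]))
# ['startseq', 'four', 'boys', 'running', 'and', 'jumping', 'endseq']
```

The integer indices from `build_vocab` are what the word-embedding layer of the model would look up.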

The Model

We will combine deep convolutional nets for image classification with recurrent networks for sequence modelling, to create a single network that generates descriptions of images.

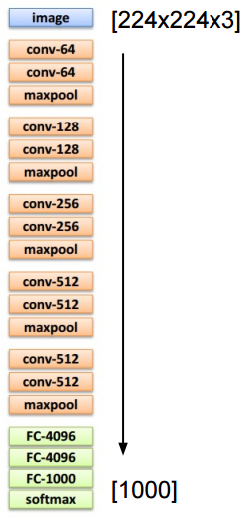

For image classification, we will use the 16-layer VGG model, popularly known as VGG16:

- Its architecture is very simple and homogeneous.
- It achieves better accuracy by increasing the depth of the network with more convolutional layers.
- This depth is feasible because it uses very small (3 × 3) convolution filters in all layers.
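Why small filters make the extra depth affordable can be checked with a little arithmetic. Stacking n stride-1 3 × 3 convolutions gives a receptive field of 2n + 1 pixels, so three such layers see a 7 × 7 region. With C input and C output channels (biases ignored), those three layers need 3 · 3² · C² = 27C² weights, against 49C² for a single 7 × 7 layer. The sketch below only computes these numbers; the channel count of 256 is an arbitrary example.

```python
def receptive_field(num_layers, kernel=3):
    """Receptive field (in pixels) of `num_layers` stacked stride-1 convolutions."""
    rf = 1
    for _ in range(num_layers):
        rf += kernel - 1  # each layer widens the field by kernel - 1 pixels
    return rf

def conv_weights(kernel, channels, num_layers=1):
    """Weight count (biases ignored) of conv layers with equal in/out channels."""
    return num_layers * kernel * kernel * channels * channels

C = 256  # example channel count
print(receptive_field(3))                # 7
print(conv_weights(3, C, num_layers=3))  # 1769472
print(conv_weights(7, C))                # 3211264
```

The stack of three 3 × 3 layers covers the same region with about 45% fewer weights, and it also applies three non-linearities instead of one.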

CNN

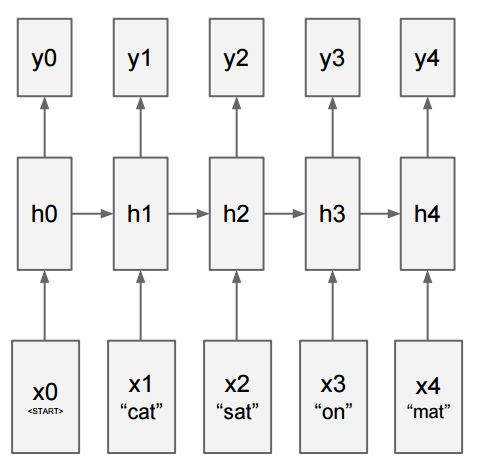

For sequence modelling, we use a Recurrent Neural Network (RNN), which models:

P(next word | previous words)
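Once a model can output P(next word | previous words), a caption can be generated one word at a time by feeding each chosen word back in. The toy sketch below replaces the RNN with a hand-written table that conditions only on the last word (a bigram model, purely for illustration) and picks the most probable word at each step (greedy decoding) until an end token appears. The table contents, the `<start>`/`<end>` tokens and `greedy_caption` are made up for this example.

```python
# Toy stand-in for the RNN: P(next word | last word) as a hand-written table.
NEXT_WORD_PROBS = {
    "<start>": {"four": 0.6, "several": 0.4},
    "four": {"boys": 0.7, "kids": 0.3},
    "several": {"boys": 1.0},
    "boys": {"jumping": 0.8, "<end>": 0.2},
    "kids": {"jumping": 1.0},
    "jumping": {"<end>": 0.9, "boys": 0.1},
}

def greedy_caption(probs, max_len=10):
    """Repeatedly pick the most likely next word until <end> or max_len words."""
    words, prev = [], "<start>"
    for _ in range(max_len):
        nxt = max(probs[prev], key=probs[prev].get)
        if nxt == "<end>":
            break
        words.append(nxt)
        prev = nxt
    return " ".join(words)

print(greedy_caption(NEXT_WORD_PROBS))  # four boys jumping
```

A real RNN conditions on the entire history through its hidden state rather than only on the last word, but the generation loop has the same shape.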

RNN

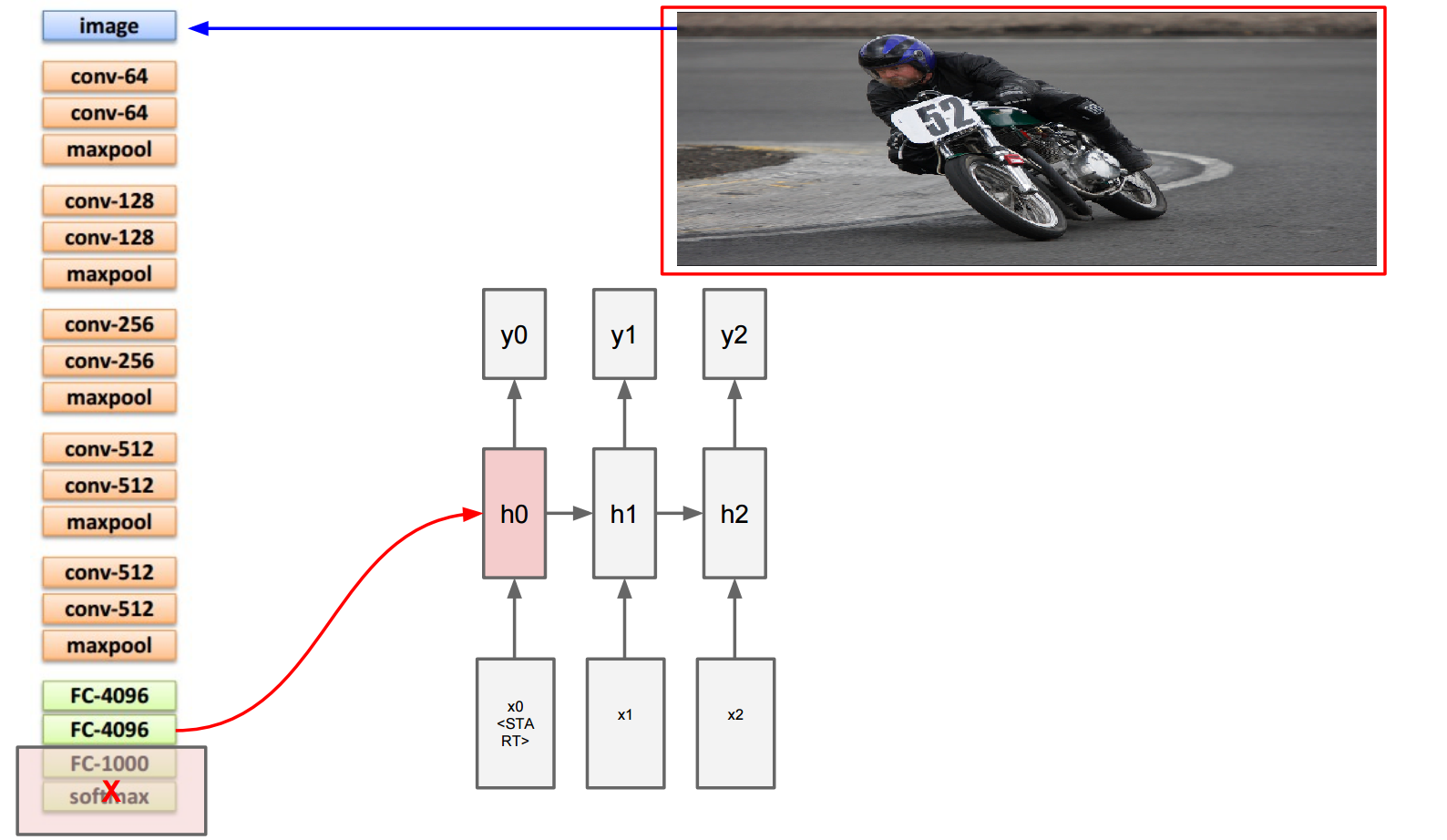

Merging CNN with RNN

Training Example

Before:

h0 = max(0, Wxh * x0)

Now:

h0 = max(0, Wxh * x0 + Wih * v)

where v is the image feature vector produced by the CNN.
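The only change the image introduces is an extra term in the first hidden state. A minimal pure-Python sketch, assuming tiny hand-picked 2-dimensional weights and plain lists for vectors (a real model learns these matrices and uses a tensor library), computes h0 with and without the image term v, then one recurrent step h1 = max(0, Wxh * x1 + Whh * h0). All numeric values and helper names (`matvec`, `add`, `relu`) are illustrative; biases are omitted, as in the equations above.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def add(*vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vectors)]

def relu(x):
    """Element-wise max(0, x)."""
    return [max(0.0, xi) for xi in x]

# Hypothetical toy sizes: 2-d word vectors, 2-d image feature, 2-d hidden state.
Wxh = [[0.5, -1.0], [1.0, 0.5]]   # word input -> hidden
Wih = [[1.0, 0.0], [0.0, -1.0]]   # image feature -> hidden
Whh = [[0.5, 0.0], [0.0, 0.5]]    # hidden -> hidden

x0 = [1.0, 0.0]  # embedding of the start token (made up)
x1 = [0.0, 1.0]  # embedding of the first word (made up)
v = [2.0, 1.0]   # CNN image feature (made up)

h0_before = relu(matvec(Wxh, x0))                     # h0 = max(0, Wxh*x0)
h0_now = relu(add(matvec(Wxh, x0), matvec(Wih, v)))   # h0 = max(0, Wxh*x0 + Wih*v)
h1 = relu(add(matvec(Wxh, x1), matvec(Whh, h0_now)))  # h1 = max(0, Wxh*x1 + Whh*h0)
print(h0_before, h0_now, h1)  # [0.5, 1.0] [2.5, 0.0] [0.25, 0.5]
```

The image enters only at the first step; later steps see it only indirectly, through the hidden state it shaped.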

RNN vs LSTM

- We will use a Long Short-Term Memory (LSTM) network, which has shown state-of-the-art performance on sequence tasks such as translation and sequence generation.
- We also choose it for its ability to deal with vanishing and exploding gradients, the most common challenge in designing and training RNNs.
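Why gradients vanish or explode in a plain RNN can be seen with a one-dimensional toy: for the linear recurrence h_t = w * h_(t-1), the gradient of h_T with respect to h_0 is w multiplied by itself T times. The sketch below is a deliberate simplification (no nonlinearity, no input term, a scalar weight) showing that over 50 steps a recurrent weight slightly below 1 shrinks the gradient towards zero, while one slightly above 1 blows it up.

```python
def gradient_through_time(w, steps):
    """d h_T / d h_0 for the scalar linear recurrence h_t = w * h_(t-1)."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule: each step multiplies by dh_t/dh_(t-1) = w
    return grad

for w in (0.9, 1.0, 1.1):
    print(w, gradient_through_time(w, 50))
```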

RNN vs LSTM (contd.)

h1 = max(0, Wxh * x1 + Whh * h0)

LSTM changes the form of the equation for h1 so that:

1. It has more expressive multiplicative interactions.
2. Gradients flow better through time.
3. The network can explicitly decide to reset the hidden state.
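One common formulation of the LSTM step (several variants exist; this sketch assumes the standard one with input, forget and output gates) replaces the single ReLU update with gated, multiplicative updates of a separate memory cell c. The forget gate f is what lets the network reset its state, and the additive update of c is what helps gradients flow. To stay short, the sketch uses a scalar hidden state, and the parameter dictionary `p` with gate names `i`, `f`, `o`, `g` is a hypothetical layout with made-up values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; `p` maps gate names to (w_x, w_h, b)."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b
    i = sigmoid(pre("i"))    # input gate: how much new content to write
    f = sigmoid(pre("f"))    # forget gate: how much old memory to keep
    o = sigmoid(pre("o"))    # output gate: how much memory to expose
    g = math.tanh(pre("g"))  # candidate content
    c = f * c_prev + i * g   # multiplicative gating, additive memory update
    h = o * math.tanh(c)
    return h, c

# Made-up parameters; a very negative forget bias makes f ~ 0 (a "reset").
params = {"i": (1.0, 0.5, 0.0), "f": (0.0, 0.0, -10.0),
          "o": (1.0, 0.5, 0.0), "g": (1.0, 0.5, 0.0)}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=5.0, p=params)
print(h, c)
```

Here the old memory value 5.0 is almost entirely discarded; with a large positive forget bias instead, the cell would carry it forward almost unchanged.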

- The LSTM model is trained to predict each word of the sentence after it has seen the image as well as all previous words.
- We use BPTT (backpropagation through time) to train it.
- The loss function is, as usual, the negative log-likelihood of the correct word at each step.
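The loss on one training caption is the sum, over time steps, of the negative log of the probability the model assigned to the correct next word. A minimal sketch, assuming the per-step predicted distributions are already available as dictionaries (the words and probabilities below are invented), computes it:

```python
import math

def caption_nll(step_probs, target_words):
    """Sum over time steps of -log p(correct word)."""
    assert len(step_probs) == len(target_words)
    return -sum(math.log(probs[w]) for probs, w in zip(step_probs, target_words))

# Invented model outputs for the caption "four boys <end>".
step_probs = [
    {"four": 0.5, "several": 0.5},
    {"boys": 0.8, "kids": 0.2},
    {"<end>": 0.9, "jumping": 0.1},
]
loss = caption_nll(step_probs, ["four", "boys", "<end>"])
print(round(loss, 4))
```

With BPTT, the gradient of this summed loss flows back through every unrolled step; since all steps share the same LSTM parameters, their per-step gradients are accumulated into one update.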

Model Summary

LSTM model combined with a CNN image embedder and word embeddings. The unrolled connections between the LSTM memories are in blue and they correspond to the recurrent connections. All LSTMs share the same parameters.

Results

Code on GitHub

Summary

Neural Image Captioning (NIC) is an end-to-end neural network system that can automatically view an image and generate a reasonable description in plain English. We can view NIC as solving a machine translation problem, where:

Source : Pixels

Target : English

Thank you!