Comparison of CCCC and SourceMeter: Software Metrics Tools

- Saksham (13114049)

- Samar Singh Hokar (13114050)

- Sandeep Singh (13114052)

What?

- Software Metric: A software metric is a standard measure of the degree to which a software system or process possesses some property.

e.g.

- 1. Cohesion Metrics: Cohesion measures the strength of the relationship between pieces of functionality within a given module. For example, in highly cohesive systems functionality is strongly related.

- 2. Coupling: Coupling is the degree of interdependence between software modules, that is, a measure of how closely connected two routines or modules are.

- 3. Cyclomatic Complexity: Cyclomatic complexity is a software metric (measurement), used to indicate the complexity of a program. It is a quantitative measure of the number of linearly independent paths through a program's source code.
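As a rough illustration of the cyclomatic complexity definition, the sketch below counts decision points in a piece of Python source and adds one. The set of constructs counted (`_BRANCH_NODES`) and the function name `cyclomatic_complexity` are assumptions made for this example. Real tools such as CCCC and SourceMeter each apply their own counting rules, which is one reason their values can differ.

```python
import ast

# Node types that each add one decision point. This choice is an assumption
# of the sketch; metric tools differ on which constructs they count.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity as (decision points + 1)."""
    decisions = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` contributes two additional branches
            decisions += len(node.values) - 1
    return decisions + 1


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    for d in range(2, n):
        if n % d == 0 and d > 1:
            return "composite"
    return "other"
'''

# Two `if`s, one `for`, and one `and` give 4 decision points.
print(cyclomatic_complexity(SAMPLE))  # prints 5
```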

Some Software Metrics We Used

1. LOC: This metric counts the lines of non-blank, non-comment source code in a function, module, or project.
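The LOC definition can be sketched in a few lines. This is a simplified illustration, not how either tool implements it: the function `count_loc` and its `comment_prefix` parameter are hypothetical, and only whole-line `//` comments are skipped (block comments are not handled).

```python
def count_loc(source: str, comment_prefix: str = "//") -> int:
    """Count lines that are neither blank nor whole-line comments."""
    count = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith(comment_prefix):
            count += 1
    return count


# Made-up snippet for illustration only.
JAVA_SNIPPET = """\
// Adds two numbers
int add(int a, int b) {

    return a + b;  // a trailing comment still counts as code
}
"""

print(count_loc(JAVA_SNIPPET))  # prints 3
```

Differences in how tools treat cases like trailing comments or block comments are one source of disagreement between LOC-style metrics.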

2. WMC: This measure is a count of the number of functions defined in a module multiplied by a weighting factor.

3. NOC: Number of classes, interfaces, enums and annotations which are directly derived from the class.

4. McCC: Complexity of the method expressed as the number of independent control flow paths in it. It represents a lower bound for the number of possible execution paths in the source code and at the same time it is an upper bound for the minimum number of test cases needed for achieving full branch test coverage.

Why?

SourceMeter and CCCC both calculate various types of code metrics.

The calculations are done at:

1. File level

2. Module level

Common Metrics in SourceMeter and CCCC

| SourceMeter Attributes | CCCC Attributes |
|---|---|
| LLOC | LOC |
| McCC | MVG |
| CLOC | COM |

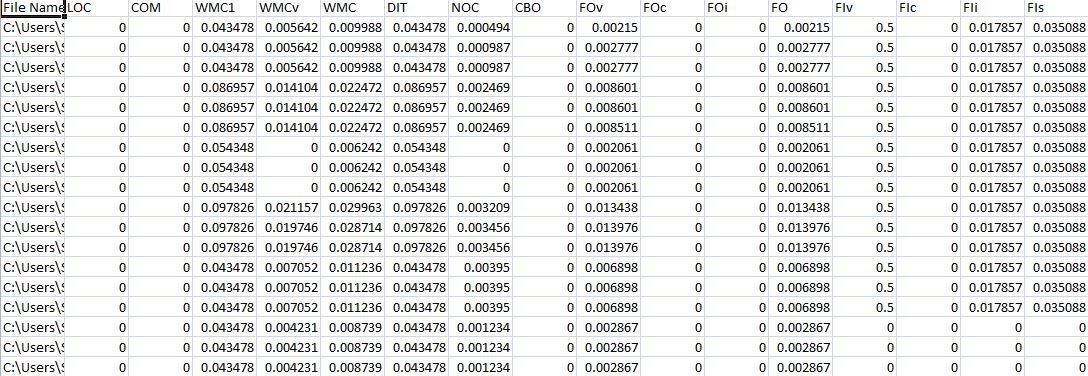

File Metrics

Common Metrics in SourceMeter and CCCC

| SourceMeter Attributes | CCCC Attributes |
|---|---|
| LLOC | LOC |
| NOI | FO |
| CLOC | COM |
| NII | FI |
| WMC | WMC |
| DIT | DIT |
| NOC | NOC |
| CBO | CBO |

Class/Module Metrics

CCCC is freeware that comes with no warranty, yet it is used extensively.

Vs

SourceMeter is paid software that has been tested.

We want to calculate how much the results of CCCC deviate from those of SourceMeter.

How?

Step 1 Choosing Projects

15 GitHub projects from varied domains [1]

On average, 5 versions of each project

| | | |
|---|---|---|
| Android Universal Image Loader | ANTLR | ElasticSearch |
| jUnit4 | Neo4j | MapDB |
| McMM | Mission Control | OrientDB |
| Oryx | Titan | Eclipse for Ceylon |
| Hazelcast | BroadLeaf Commerce | Netty |

Step 2 Parsing Output

Source code -> SourceMeter -> CSV files

Source code -> CCCC -> HTML output -> CSV files
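The HTML-to-CSV step for CCCC output could look roughly like the sketch below, which uses only the Python standard library. The class `TableRows`, the function `html_tables_to_csv`, and the sample table are hypothetical. The actual layout of a CCCC report is not shown in these slides, so the column names and values here are made up for illustration.

```python
import csv
import io
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of every <tr> as a list of cell strings."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def html_tables_to_csv(html: str) -> str:
    """Return all table rows found in `html` as CSV text."""
    parser = TableRows()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


# Made-up stand-in for a report table; not real CCCC output.
SAMPLE_HTML = """
<table>
<tr><th>Module</th><th>LOC</th><th>MVG</th><th>COM</th></tr>
<tr><td>Parser</td><td>120</td><td>14</td><td>30</td></tr>
</table>
"""

print(html_tables_to_csv(SAMPLE_HTML))
```

Once both tools' results are in CSV form, rows can be matched by file or module name for comparison.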

Step 3 Normalizing

Measurements show that, for the same software system and metrics, the metric values are tool dependent [2]

SourceMeter

CCCC

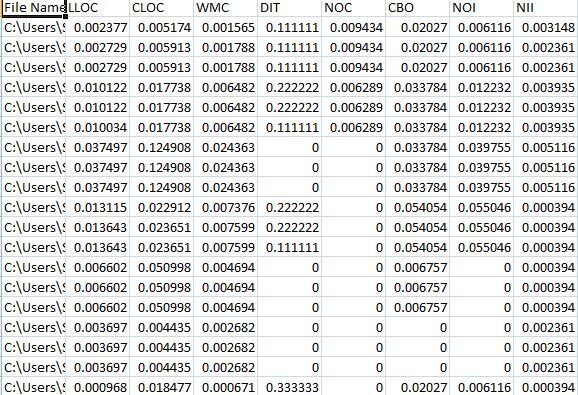

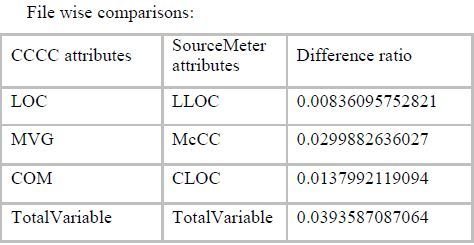
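The normalization formulas used for each tool are given only in the images and cannot be recovered from the text. Purely as an assumed stand-in, the sketch below shows min-max scaling, one common way to bring values from two tools onto a shared [0, 1] range. The function `min_max_normalize` is hypothetical and may not match the method actually used.

```python
def min_max_normalize(values):
    """Scale values linearly to [0, 1]; constant input maps to all zeros.

    Assumed technique (min-max scaling), not confirmed by the slides.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Made-up metric values for illustration.
print(min_max_normalize([10, 20, 30]))  # prints [0.0, 0.5, 1.0]
```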

Step 4 Finding Differences

1. Attribute Wise

- We find the difference ratio between semantically equivalent attributes of SourceMeter (A1) and CCCC (A2).

2. File Wise

- For data objects A1 and A2 from SourceMeter and CCCC respectively
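The exact difference formulas are not reproduced in the text, so the following is only a sketch under stated assumptions. It takes the difference ratio to be the relative difference |A1 - A2| / max(|A1|, |A2|) and applies it to each pair of equivalent attributes from the class/module table. The names `METRIC_PAIRS`, `difference_ratio`, and `attribute_differences`, and the sample values, are hypothetical.

```python
# SourceMeter name -> CCCC name, taken from the class/module metrics table.
METRIC_PAIRS = {
    "LLOC": "LOC", "NOI": "FO", "CLOC": "COM", "NII": "FI",
    "WMC": "WMC", "DIT": "DIT", "NOC": "NOC", "CBO": "CBO",
}


def difference_ratio(a1: float, a2: float) -> float:
    """Assumed formula: |a1 - a2| / max(|a1|, |a2|), or 0 if both are 0."""
    denom = max(abs(a1), abs(a2))
    return 0.0 if denom == 0 else abs(a1 - a2) / denom


def attribute_differences(sm_row: dict, cccc_row: dict) -> dict:
    """Difference ratio for every metric pair present in both rows."""
    return {
        sm: difference_ratio(sm_row[sm], cccc_row[cc])
        for sm, cc in METRIC_PAIRS.items()
        if sm in sm_row and cc in cccc_row
    }


# Made-up values for one class as reported by each tool.
sm_row = {"LLOC": 100, "CLOC": 20, "WMC": 8}
cccc_row = {"LOC": 95, "COM": 25, "WMC": 8}

print(attribute_differences(sm_row, cccc_row))
# prints {'LLOC': 0.05, 'CLOC': 0.2, 'WMC': 0.0}
```

Dividing by the larger magnitude keeps the ratio in [0, 1] and symmetric in the two tools. Other denominators (for example, always SourceMeter's value) would be equally plausible.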

Results

[Figure: result charts labeled Max, Min, and Total]

Conclusion

For file-wise metrics, both tools give results within an error range of 5%, but for class-wise metrics the same tools agree less well, with an error of 25%.

References

1. Tóth, Zoltán, Péter Gyimesi, and Rudolf Ferenc. "A Public Bug Database of GitHub Projects and Its Application in Bug Prediction." N.p., 2017. Print.

2. Lincke, Rüdiger, Jonas Lundberg, and Welf Löwe. "Comparing Software Metrics Tools." Växjö University, Sweden.