Deep Learning HMC

Building Topological Samplers for Lattice QCD

Sam Foreman

May, 2021

MCMC in Lattice QCD

- Generating independent gauge configurations is a major bottleneck for lattice QCD.

- As the lattice spacing \(a \rightarrow 0\), the MCMC updates tend to get stuck in sectors of fixed gauge topology.

- As a result, the number of steps needed to adequately sample different topological sectors increases exponentially.

Critical slowing down!

Markov Chain Monte Carlo (MCMC)

- Goal: Draw independent samples from a target distribution, \(p(x)\)

- Starting from some initial state \(x_{0}\sim \mathcal{N}(0, \mathbb{1})\), we generate proposal configurations \(x^{\prime}\)

- Accept or reject each proposal using the Metropolis-Hastings criterion

1. Construct chain:

\(\big\{ x_{0}, x_{1}, x_{2}, \ldots, x_{m-1}, x_{m}, x_{m+1}, \ldots, x_{n-2}, x_{n-1}, x_{n} \big\}\)

2. Thermalize ("burn-in"):

\(\big\{ x_{0}, x_{1}, x_{2}, \ldots, x_{m-1}, x_{m}, x_{m+1}, \ldots, x_{n-2}, x_{n-1}, x_{n} \big\}\)

3. Drop correlated samples ("thinning"):

dropped configurations

Inefficient!

Issues with MCMC

- Generate proposal \(x^{\prime}\) by a random walk: \(x^{\prime} = x + \delta\), where \(\delta \sim \mathcal{N}(0, \mathbb{1})\)
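The random-walk proposal, the Metropolis-Hastings test, and the burn-in and thinning steps can be sketched in a few lines. This is a minimal illustration, not the sampler used in the talk: the standard normal target, the step size, and the `burn_in` and `thin` values are all assumptions chosen for the example.

```python
import math
import random


def log_p(x):
    """Unnormalized log-density of a standard normal target (illustrative choice)."""
    return -0.5 * x * x


def random_walk_metropolis(log_p, n_steps, step_size=1.0, seed=0):
    """Build a chain using proposals x' = x + step_size * delta, delta ~ N(0, 1)."""
    rng = random.Random(seed)
    x = rng.gauss(0.0, 1.0)  # initial state x_0 ~ N(0, 1)
    chain, n_accept = [x], 0
    for _ in range(n_steps):
        x_prop = x + step_size * rng.gauss(0.0, 1.0)
        # Metropolis-Hastings: accept with probability min(1, p(x') / p(x)).
        # The proposal is symmetric, so no proposal-density ratio is needed.
        if math.log(1.0 - rng.random()) < log_p(x_prop) - log_p(x):
            x = x_prop
            n_accept += 1
        chain.append(x)  # a rejected proposal repeats the current state
    return chain, n_accept / n_steps


chain, acc_rate = random_walk_metropolis(log_p, n_steps=20000)
burn_in, thin = 1000, 10              # hypothetical values for this example
samples = chain[burn_in::thin]        # thermalize, then drop correlated samples
mean = sum(samples) / len(samples)
```

Only about one in ten of the generated configurations survives the burn-in and thinning cuts here, which is the inefficiency the slides point to.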

Hamiltonian Monte Carlo (HMC)

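- Introduce fictitious momentum: \(v\sim\mathcal{N}(0, \mathbb{1})\)

- Target distribution: \(p(x)\propto e^{-S(x)}\)

- Joint target distribution (lift to phase space): \(p(x, v) = p(x)\,p(v) \propto e^{-S(x)}\,e^{-\frac{1}{2}v^{T}v} = e^{-H(x, v)}\)

- The joint \((x, v)\) system obeys Hamilton's equations: \(\dot{x} = \frac{\partial H}{\partial v}, \quad \dot{v} = -\frac{\partial H}{\partial x}\)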

HMC: Leapfrog Integrator

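- Hamilton's Eqs: \(\dot{x} = \frac{\partial H}{\partial v}, \quad \dot{v} = -\frac{\partial H}{\partial x}\)

- Hamiltonian: \(H(x, v) = S(x) + \frac{1}{2}v^{T}v\)

- \(N_{\mathrm{LF}}\) leapfrog steps (trajectory): \((x_{0}, v_{0}) \rightarrow (x_{1}, v_{1}) \rightarrow \cdots \rightarrow (x_{N_{\mathrm{LF}}}, v_{N_{\mathrm{LF}}})\)

Leapfrog Integrator

1. Half-step \(v\)-update: \(\tilde{v} = v - \frac{\varepsilon}{2}\,\partial_{x}S(x)\)

2. Full-step \(x\)-update: \(x' = x + \varepsilon\,\tilde{v}\)

3. Half-step \(v\)-update: \(v' = \tilde{v} - \frac{\varepsilon}{2}\,\partial_{x}S(x')\)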
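A single HMC update built from the three leapfrog sub-steps might look like the sketch below. It assumes a one-dimensional standard normal target, so that \(S(x) = x^{2}/2\), and the step size `eps` and step count `n_lf` are illustrative values rather than settings from the talk.

```python
import math
import random


def action(x):
    """S(x) for a standard normal target, p(x) proportional to exp(-S(x))."""
    return 0.5 * x * x


def grad_action(x):
    """Gradient dS/dx of the illustrative action."""
    return x


def hamiltonian(x, v):
    """H(x, v) = S(x) + v^2 / 2."""
    return action(x) + 0.5 * v * v


def leapfrog(x, v, eps, n_lf):
    """Integrate Hamilton's equations for n_lf leapfrog steps of size eps."""
    for _ in range(n_lf):
        v = v - 0.5 * eps * grad_action(x)   # 1. half-step v-update
        x = x + eps * v                      # 2. full-step x-update
        v = v - 0.5 * eps * grad_action(x)   # 3. half-step v-update
    return x, v


def hmc_step(x, eps, n_lf, rng):
    """One HMC update: refresh momentum, integrate, accept or reject."""
    v = rng.gauss(0.0, 1.0)                  # fresh fictitious momentum
    x_new, v_new = leapfrog(x, v, eps, n_lf)
    dh = hamiltonian(x_new, v_new) - hamiltonian(x, v)
    accept = math.log(1.0 - rng.random()) < -dh
    return (x_new if accept else x), accept


rng = random.Random(0)
x, n_accept = 0.0, 0
for _ in range(2000):
    x, accepted = hmc_step(x, eps=0.1, n_lf=10, rng=rng)
    n_accept += accepted
```

Because the leapfrog integrator nearly conserves \(H\), almost every proposal is accepted at this step size; running the integrator forward and then again with the momentum flipped returns the starting point, which is the reversibility the accept/reject step relies on.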

HMC: Issues

- Cannot easily traverse low-density zones.

- What do we want in a good sampler?

  - Fast mixing

  - Fast burn-in

  - Mix across energy levels

  - Mix between modes

- Energy levels selected randomly \(\longrightarrow\) slow mixing!

Stuck!

Leapfrog Layer

- Introduce a persistent direction \(d \sim \mathcal{U}(+,-)\) (forward/backward)

- Introduce a discrete index \(k \in \{1, 2, \ldots, N_{\mathrm{LF}}\}\) to denote the current leapfrog step

- Let \(\xi = (x, v, \pm)\) denote a complete state; the target distribution is then given by \(p(\xi) = p(x)\,p(v)\,p(\pm)\)

- Each leapfrog step transforms \(\xi_{k} = (x_{k}, v_{k}, \pm) \rightarrow (x''_{k}, v''_{k}, \pm) = \xi''_{k}\) by passing it through the \(k^{\mathrm{th}}\) leapfrog layer

Leapfrog Layer

- \(v\)-update \((d = +)\): built from three terms whose network inputs are \(v\)-independent:

  - Momentum (\(v_{k}\)) scaling

  - Gradient \(\partial_{x}S(x_{k})\) scaling

  - Translation

- \(x\)-update \((d = +)\): uses masks \(m_{t}\), with network inputs that are independent of the masked components \(m_{t}\odot x\)

- The functions \((s_{v}^{k}, q^{k}_{v}, t^{k}_{v})\) and \((s_{x}^{k}, q^{k}_{x}, t^{k}_{x})\) are parameterized by neural networks

- Each leapfrog step transforms \(\xi_{k} = (x_{k}, v_{k}, \pm) \rightarrow (x''_{k}, v''_{k}, \pm) = \xi''_{k}\) by passing it through the \(k^{\mathrm{th}}\) leapfrog layer.
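To make the roles of momentum scaling, gradient scaling, and translation concrete, here is a hedged sketch of a forward \(v\)-update for a single component. It assumes the functional form proposed in the original L2HMC paper (Levy, Hoffman, and Sohl-Dickstein), and the callables `s_v`, `q_v`, and `t_v` are hypothetical stand-ins for the neural networks; none of the toy functions below come from the talk.

```python
import math


def generalized_v_update(x, v, grad, eps, s_v, q_v, t_v):
    """Forward (d = +) momentum update for one component.

    s_v, q_v, t_v stand in for the network outputs. Each sees only
    (x, grad) and never v, so the update is an affine map in v.
    Returns the new momentum and the log-Jacobian of the map v -> v'.
    """
    zeta = (x, grad)                       # v-independent network input
    log_scale = 0.5 * eps * s_v(*zeta)     # momentum scaling exponent
    v_new = v * math.exp(log_scale) - 0.5 * eps * (
        grad * math.exp(eps * q_v(*zeta))  # gradient scaling
        + t_v(*zeta)                       # translation
    )
    return v_new, log_scale


def zero(x, grad):
    """With all three functions zero, the ordinary half-step is recovered."""
    return 0.0


def s_toy(x, grad):
    return 0.1 * math.tanh(x)


def q_toy(x, grad):
    return 0.1 * math.tanh(grad)


def t_toy(x, grad):
    return 0.05 * x


# Plain leapfrog half-step: v' = v - (eps / 2) * grad
v_plain, logdet_plain = generalized_v_update(1.0, 0.5, 2.0, 0.1, zero, zero, zero)

# Generalized update with toy "network" outputs
v_gen, logdet_gen = generalized_v_update(1.0, 0.5, 2.0, 0.1, s_toy, q_toy, t_toy)
```

Keeping the network inputs independent of \(v\) is what makes the Jacobian trivial to track: the log-determinant is just the scaling exponent, which the accept/reject step can then correct for.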

L2HMC: Generalized Leapfrog

- Complete (generalized) update:

  1. Half-step \(v\) update

  2. Full-step update of one half of the \(x\) components

  3. Full-step update of the other half of the \(x\) components

  4. Half-step \(v\) update

Leapfrog Layer

[Figure: leapfrog layer diagram showing the masks and a stack of fully-connected layers]

Example: GMM \(\in\mathbb{R}^{2}\)

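- Define the squared jump distance: \(\delta(\xi', \xi) = \|x' - x\|^{2}_{2}\)

- Maximize expected squared jump distance: \(\mathcal{L}(\theta) = \mathbb{E}_{p(\xi)}\left[A(\xi'|\xi)\cdot\delta(\xi',\xi)\right]\)

Note:

- \(\xi'\) = proposed state

- \(\xi\) = initial state

- \(A(\xi'|\xi)\) = acceptance probability

- \(A(\xi'|\xi)\cdot\delta(\xi',\xi)\) = avg. distance

[Figure: HMC vs. L2HMC on the GMM example]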
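The expected squared jump distance can be estimated as a batch average of acceptance probability times squared distance. The sketch below assumes the acceptance probability takes the usual Metropolis-Hastings form with an optional log-Jacobian term; the function names and the two-element batch are hypothetical and exist only to make the arithmetic checkable.

```python
import math


def squared_jump_distance(x_prop, x_init):
    """delta(xi', xi): squared Euclidean distance between x' and x."""
    return sum((a - b) ** 2 for a, b in zip(x_prop, x_init))


def acceptance_prob(h_init, h_prop, logdet=0.0):
    """A(xi'|xi) = min(1, exp(H(xi) - H(xi') + log|J|)).

    The log-Jacobian term is an assumption for volume-changing updates;
    it is zero for ordinary leapfrog steps.
    """
    log_ratio = h_init - h_prop + logdet
    return math.exp(min(0.0, log_ratio))  # clamping avoids overflow


def esjd(batch):
    """Batch average of A * delta over (x_init, x_prop, h_init, h_prop) tuples."""
    total = 0.0
    for x_init, x_prop, h_init, h_prop in batch:
        total += acceptance_prob(h_init, h_prop) * squared_jump_distance(x_prop, x_init)
    return total / len(batch)


batch = [
    ([0.0, 0.0], [3.0, 4.0], 1.0, 1.0),            # A = 1,   delta = 25
    ([0.0, 0.0], [1.0, 0.0], 0.0, math.log(2.0)),  # A = 0.5, delta = 1
]
loss = -esjd(batch)  # minimizing the negative maximizes the jump distance
```

Weighting by the acceptance probability matters: a sampler that proposes huge jumps that are always rejected scores zero, so the objective rewards moves that are both large and likely to be accepted.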

Training Algorithm

1. Construct trajectory

2. Compute loss + backprop

3. Metropolis-Hastings accept/reject

4. Re-sample momentum + direction, then repeat

Annealing Schedule

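- Introduce an annealing schedule during the training phase: \(\{\gamma_{t}\}_{t=0}^{N} = \{\gamma_{0}, \gamma_{1}, \ldots, \gamma_{N-1}, \gamma_{N}\}\), e.g. \(\{0.1, 0.2, \ldots, 0.9, 1.0\}\)

  - increasing: \(\gamma_{0} < \gamma_{1} < \cdots < \gamma_{N} \equiv 1\)

  - varied slowly

- Target distribution becomes: \(p_{t}(x) \propto e^{-\gamma_{t}S(x)}\)

- For \(\gamma_{t} < 1\), this rescales (shrinks) the energy barriers between isolated modes

- This allows the sampler to explore previously inaccessible regions of the target distribution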
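The effect of the schedule on a barrier can be checked numerically. The sketch below assumes the annealing factor multiplies the action, so that the tempered target is \(e^{-\gamma_{t}S(x)}\), and uses a hypothetical double-well action that is not taken from the talk.

```python
import math


def action(x):
    """Illustrative double-well action: minima at x = -1 and x = +1, barrier at x = 0."""
    return 8.0 * (x * x - 1.0) ** 2


def annealed_action(x, gamma):
    """Action of the tempered target p_t(x) proportional to exp(-gamma * S(x))."""
    return gamma * action(x)


# Increasing schedule 0.1, 0.2, ..., 1.0 (the example values from the slide)
schedule = [round(0.1 * k, 1) for k in range(1, 11)]

# Barrier height between the two modes at each stage of the schedule
barriers = [annealed_action(0.0, g) - annealed_action(1.0, g) for g in schedule]

# Relative probability of sitting on top of the barrier versus in a mode
suppression = [math.exp(-b) for b in barriers]
```

At the start of this schedule the barrier is ten times lower than in the true target, so crossings that are exponentially rare at \(\gamma = 1\) happen routinely early in training.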

Lattice Gauge Theory

- Wilson action

- Topological charge, in two forms:

  - continuous, differentiable

  - discrete, hard to work with

- Link variables
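As one concrete illustration of these quantities, the sketch below assumes a two-dimensional U(1) gauge theory with link angles on a periodic lattice. That model choice, the plaquette orientation, and every function name are assumptions made for the example; they are not confirmed by the slides. The action is the standard Wilson form \(\beta\sum_{P}(1 - \cos x_{P})\), and the two charges differ only in whether the plaquette angle passes through \(\sin\) or is projected onto \([-\pi, \pi)\).

```python
import math
import random


def plaquettes(links):
    """Plaquette angles x_P from link angles links[mu][i][j] on a periodic L x L lattice."""
    size = len(links[0])
    x_p = []
    for i in range(size):
        for j in range(size):
            ip, jp = (i + 1) % size, (j + 1) % size
            # x_P(n) = x_0(n) + x_1(n + e0) - x_0(n + e1) - x_1(n)
            x_p.append(links[0][i][j] + links[1][ip][j]
                       - links[0][i][jp] - links[1][i][j])
    return x_p


def wilson_action(links, beta):
    """S = beta * sum_P (1 - cos x_P)."""
    return beta * sum(1.0 - math.cos(x) for x in plaquettes(links))


def charge_sin(links):
    """Continuous, differentiable charge: (1 / 2 pi) * sum_P sin(x_P)."""
    return sum(math.sin(x) for x in plaquettes(links)) / (2.0 * math.pi)


def wrap(x):
    """Project an angle onto [-pi, pi)."""
    return (x + math.pi) % (2.0 * math.pi) - math.pi


def charge_int(links):
    """Discrete charge: (1 / 2 pi) * sum_P wrap(x_P), an integer on a periodic lattice."""
    return sum(wrap(x) for x in plaquettes(links)) / (2.0 * math.pi)


size = 8
rng = random.Random(0)
cold = [[[0.0] * size for _ in range(size)] for _ in range(2)]
hot = [[[rng.uniform(-math.pi, math.pi) for _ in range(size)]
        for _ in range(size)] for _ in range(2)]
q_int = charge_int(hot)
q_sin = charge_sin(hot)
```

The integer-valued charge changes only in jumps, so it provides no gradient signal, which is why a differentiable surrogate is the natural quantity to use inside a loss function.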

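Loss function: \(\mathcal{L}(\theta)\)

- We maximize the expected squared charge difference: \(\mathcal{L}(\theta) = \mathbb{E}\left[A(\xi'|\xi)\cdot\left(Q(x') - Q(x)\right)^{2}\right]\), where \(Q\) is the continuous topological charge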

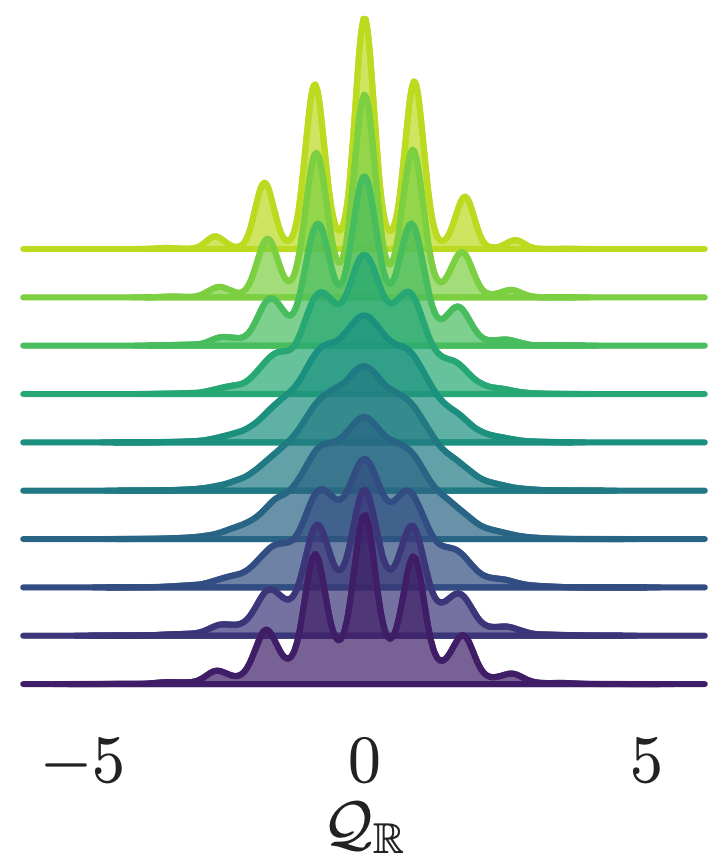

Results

- We maximize the expected squared charge difference:

Interpretation

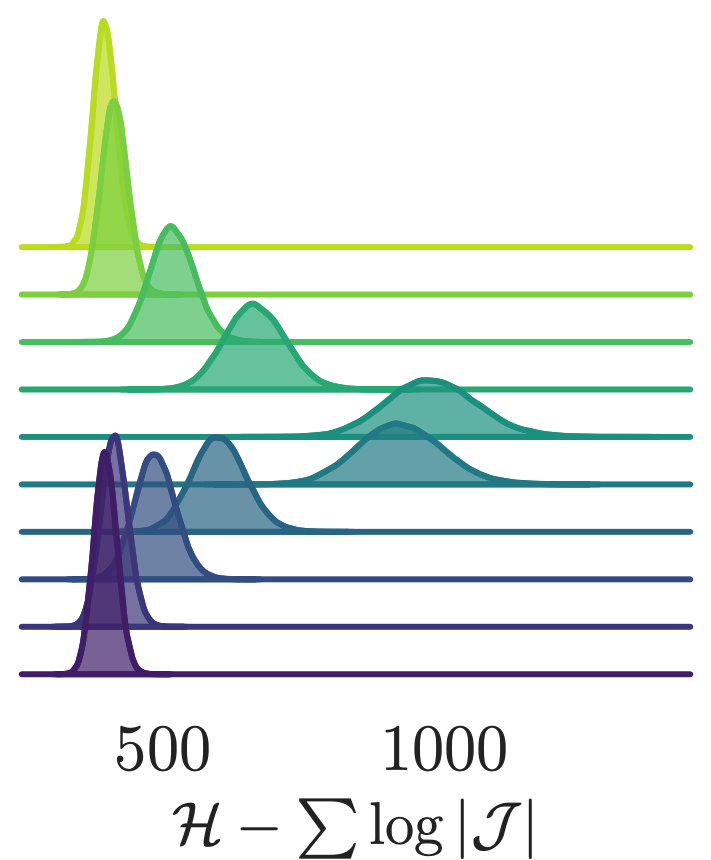

- Look at how different quantities evolve over a single trajectory

- The sampler artificially increases the energy during the first half of the trajectory, before returning it to its original value

[Figure panels, each plotted against leapfrog step: variation in the avg plaquette, continuous topological charge, shifted energy]

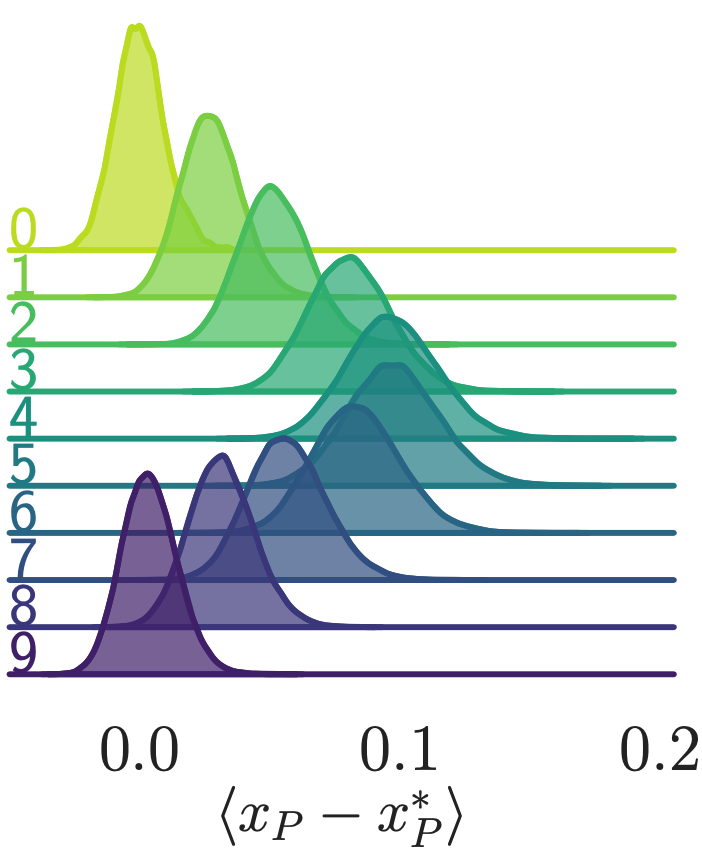

Interpretation

- Look at how \(\langle\delta x_{P}\rangle\) varies for different values of \(\beta\)

[Figure: curves for \(\beta = 5\), \(\beta = 6\), and \(\beta = 7\); annotation: \(\beta = 7 \simeq \beta = 3\)]

Integrated Autocorrelation Time

- Want to calculate: \(\langle \mathcal{O}\rangle\propto \int \left[\mathcal{D} x\right] \mathcal{O}(x)e^{-S[x]}\)

- If we had independent configurations, we could approximate by \(\langle\mathcal{O}\rangle \simeq \frac{1}{N}\sum_{n=1}^{N} \mathcal{O}(x_{n})\longrightarrow \sigma^{2}=\frac{1}{N}\mathrm{Var}\left[\mathcal{O}(x)\right]\propto\frac{1}{N}\)

- Instead, the configurations are correlated, so we account for the autocorrelation and the variance becomes: \(\sigma^{2} = \frac{\tau^{\mathcal{O}}_{int}}{N}\mathrm{Var}\left[\mathcal{O}(x)\right]\)
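A rough estimate of the integrated autocorrelation time can be obtained by summing the normalized autocorrelation function up to a cutoff. The sketch below assumes the convention \(\tau_{int} = 1 + 2\sum_{t \geq 1}\rho(t)\), chosen so that independent samples give \(\tau_{int} = 1\) and the two variance formulas agree. The fixed cutoff `max_lag` and the AR(1) test chains are illustrative assumptions; production analyses usually pick the window automatically.

```python
import random


def autocorrelation(xs, lag):
    """Normalized autocorrelation rho(lag) of the series xs."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / n
    cov = sum((xs[i] - mean) * (xs[i + lag] - mean)
              for i in range(n - lag)) / (n - lag)
    return cov / var


def tau_int(xs, max_lag):
    """Integrated autocorrelation time, 1 + 2 * sum_{t=1}^{max_lag} rho(t)."""
    return 1.0 + 2.0 * sum(autocorrelation(xs, t) for t in range(1, max_lag + 1))


def ar1_chain(phi, n, seed):
    """Toy Markov chain x_{t+1} = phi * x_t + noise, with tunable correlation."""
    rng = random.Random(seed)
    x, chain = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        chain.append(x)
    return chain


correlated = ar1_chain(phi=0.9, n=10000, seed=1)    # strongly correlated chain
independent = ar1_chain(phi=0.0, n=10000, seed=2)   # independent draws
tau_corr = tau_int(correlated, max_lag=50)
tau_indep = tau_int(independent, max_lag=50)
effective_samples = len(correlated) / tau_corr
```

For the correlated chain the effective number of samples is a small fraction of the chain length, which is the cost a better sampler aims to reduce.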

Thanks for listening!

Interested?

Scaling test: Training

- \(512\sim 1\times\)

- \(1024 \sim 1.04\times\)

- \(2048 \sim 1.29\times\)

- \(4096 \sim 1.73\times\)

- \(8192 \sim 2.19\times\)

Scaling test: Inference

[Figure: curves labeled 512, 1024, 2048, 4096, and 8192]