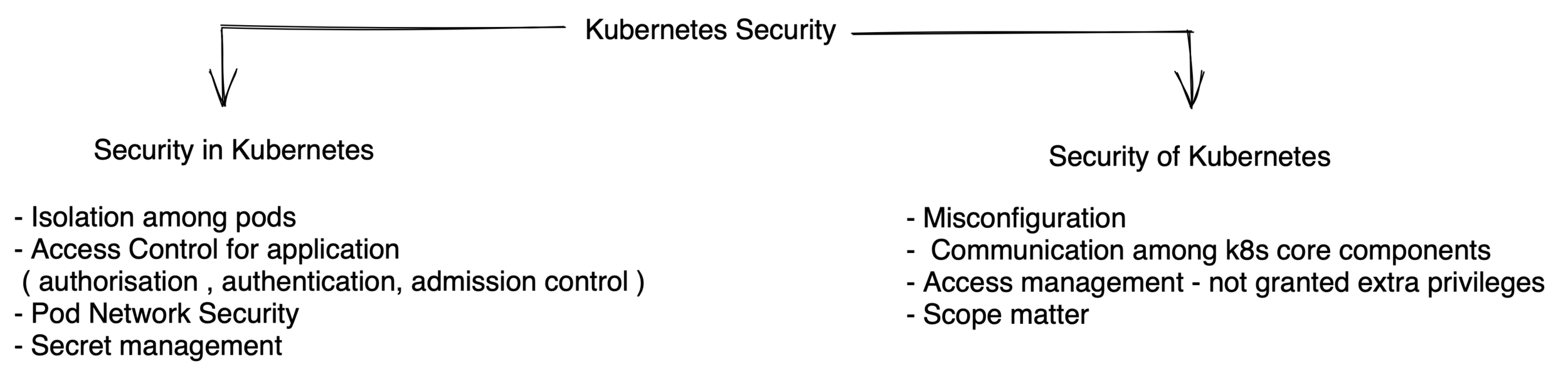

Demystifying Kubernetes Security

Sangam Biradar

Principal Security Advocate at Tenable

Docker Community Leader

AWS/CDF Community Builder

@sangamtwts

1. Secure Design and code

Static Application Security Testing (SAST)

- Ensure code is free of bugs, specifically security bugs

- Unsafe programming practices lead to security bugs

- Write secure code in the first place

- Use scanner output to locate unsafe code blocks

- Complete the feedback loop

Software Composition Analysis (SCA, SBOM)

Identifying security issues in open-source and commercial software components

Dynamic Application Security Testing (DAST)

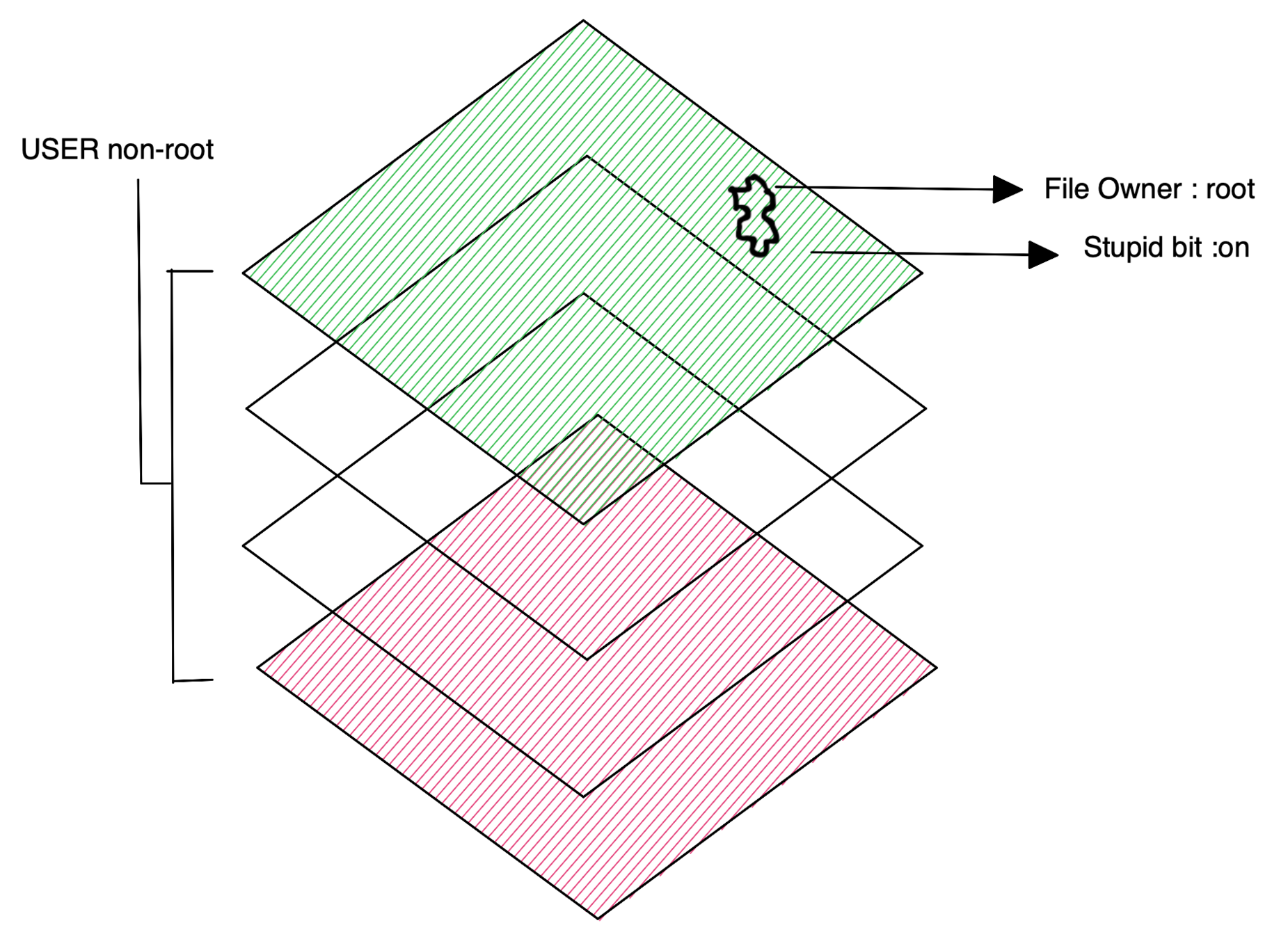

Privilege Escalation

Minimum Privileges

- No blanket privileges beyond subject's role

- USER instruction in Dockerfile

- Without a USER instruction, the container runs as root

- Most application containers don't need root access

setuid

setgid

When the setuid bit is on, the process runs with the permissions of the file owner
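As a minimal sketch of how the setuid bit can be inspected, the script below creates a scratch file, turns the bit on, and checks it with the standard `test -u` and `test -g` operators. The function name `special_bits` and the scratch file are illustrative assumptions, not something from the talk.

```shell
#!/bin/sh
# Report which special permission bits are set on a file.
special_bits() {
    file=$1
    bits=""
    # test -u is true when the setuid bit is set; test -g when setgid is set.
    if [ -u "$file" ]; then bits="setuid"; fi
    if [ -g "$file" ]; then bits="${bits:+$bits }setgid"; fi
    echo "${bits:-none}"
}

demo_file=$(mktemp)            # scratch file owned by the current user
chmod 0755 "$demo_file"
before=$(special_bits "$demo_file")

chmod u+s "$demo_file"         # turn the setuid bit on (mode becomes 4755)
after=$(special_bits "$demo_file")

echo "before: $before, after: $after"
rm -f "$demo_file"
```

To audit an image or host, `find / -perm -4000 -type f 2>/dev/null` lists the files that carry the setuid bit.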

    # Example: setuid bit on vs. off
    $ ls -l /usr/bin/passwd
    -rwsr-xr-x root root 6421 Jun 20 2020 /usr/bin/passwd    <----- ON
    $ ls -l /home/user/readme.txt
    -rw-r--r-- user user 6424 Jun 2020 /home/user/readme.txt <----- OFF

Unwanted objects in an image

- Inevitably unwanted active threats and vulnerabilities will appear

- Malware

- Secrets

Security scanner: vulnerabilities, secrets, malware

Image Scanning

- New Vulnerabilities emerge frequently

- A clean image yesterday may not be clean today

- Scan in CI pipeline after building the image

- Scan image registries

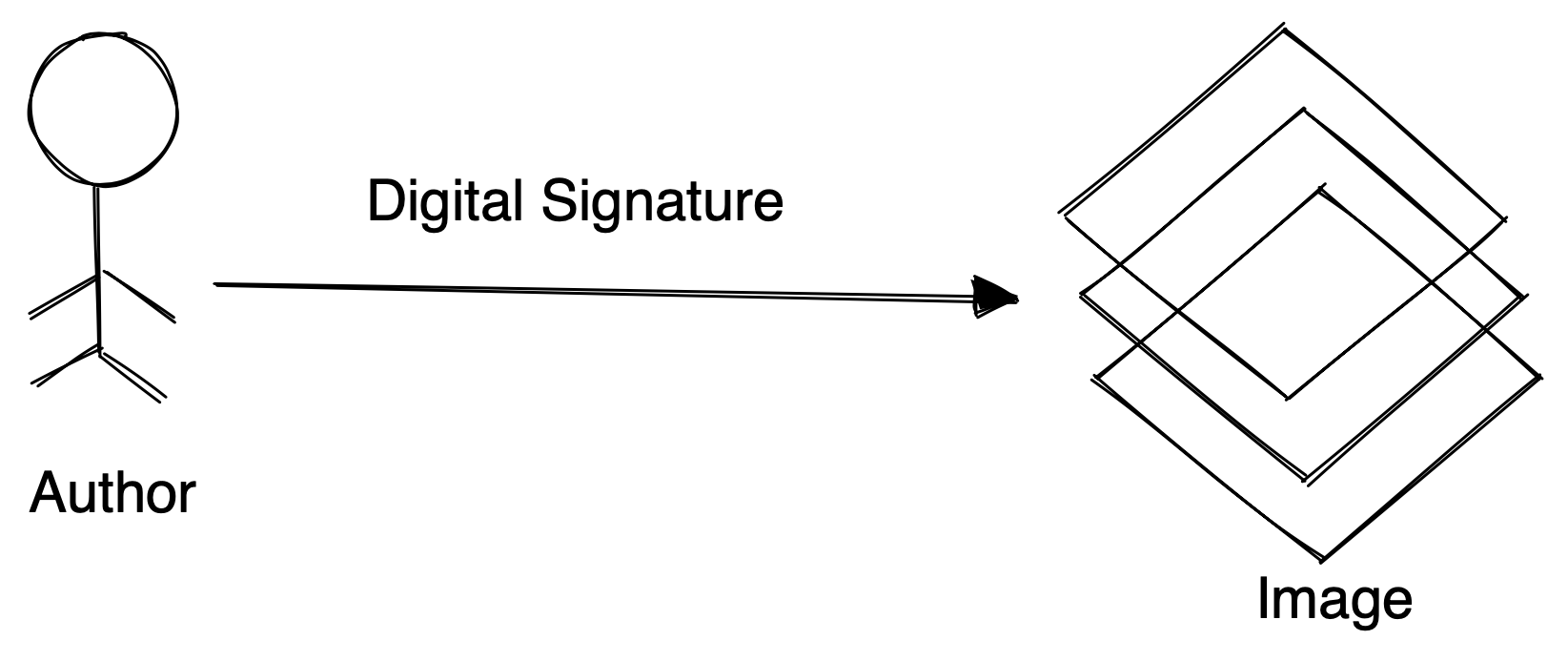

Image Signing

https://github.com/sigstore/cosign

TUF & Notary

Authenticate Subjects

- Network encryption alone does not stop a malicious user

- Permit only authenticated users

Code → Build → Stage → Test → Deploy (pipeline diagram repeated for Continuous Integration, Continuous Delivery, and Continuous Deployment)

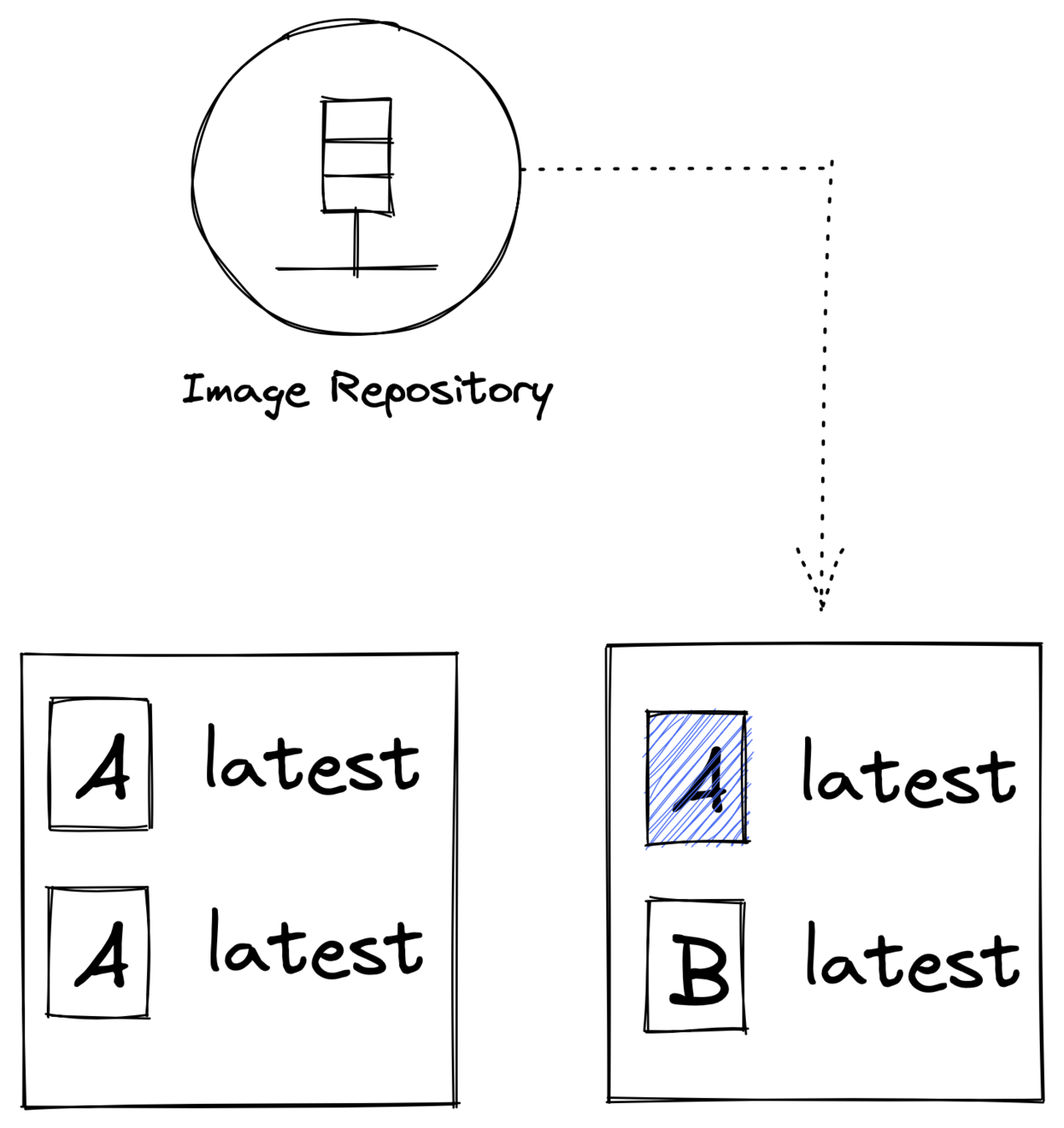

Use Correct Image

Digest: <hashing algorithm>:<hash value>

sha256:287379gaggfaghagja....

Tag: human-friendly way to identify image version

Example: nginx:latest

1. Use immutable tags

   a. Orchestrator will pull an image with a unique identifier

2. Keep using the mutable (latest) tag, but augment it with an automated mechanism

3. Use digests instead of tags
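The difference between a tag and a digest can be checked mechanically. The sketch below classifies an image reference as pinned by digest, tagged, or implicitly `latest`. The function name `classify_image_ref` is hypothetical, and the digest in the sample list is only a placeholder (the SHA-256 of empty input), not a real image digest.

```shell
#!/bin/sh
# Classify an image reference by how it selects an image version.
classify_image_ref() {
    ref=$1
    case $ref in
        *@sha256:*)
            digest=${ref##*@sha256:}
            # A sha256 digest is exactly 64 lowercase hex characters.
            if [ "${#digest}" -eq 64 ] && ! printf '%s' "$digest" | grep -q '[^0-9a-f]'; then
                echo "digest"
            else
                echo "invalid-digest"
            fi
            ;;
        *)
            # Look only at the last path component so that a registry port
            # (localhost:5000/nginx) is not mistaken for a tag.
            name=${ref##*/}
            case $name in
                *:latest) echo "mutable-latest" ;;
                *:*)      echo "tag" ;;
                *)        echo "mutable-latest" ;;   # no tag means :latest is implied
            esac
            ;;
    esac
}

# Placeholder digest: SHA-256 of empty input, used only for illustration.
placeholder=e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855

for ref in "nginx" "nginx:latest" "nginx:1.25.3" "localhost:5000/nginx" "nginx@sha256:$placeholder"; do
    echo "$ref => $(classify_image_ref "$ref")"
done
```

A check like this could run in CI to reject manifests that rely on `latest`. Note that a versioned tag is immutable only by convention, while a digest always identifies the same image content.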

Securing Hosts and Container Working Environment

Running a Container as Root

- USER instruction in Dockerfile: set the user ID for a container

- Override the image instruction by starting a container as root with the docker run --user command

    $ docker run --user 0 myimage

Privileged Containers

- Container running as root ≠ Privileged container

- Open Container Initiative (OCI) specification default capabilities

https://github.com/opencontainers/runc/blob/main/libcontainer/SPEC.md#security

    $ docker run --privileged myimage

- Privileged containers pierce the wall of security between OS and containers

- Most application containers do not need to run in privileged mode

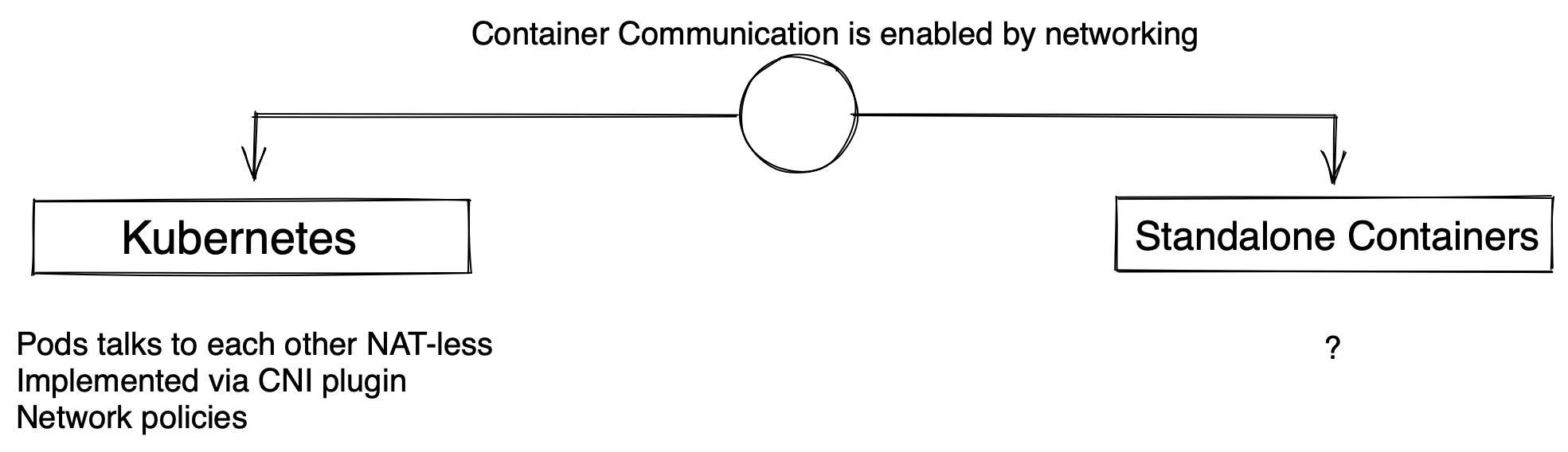

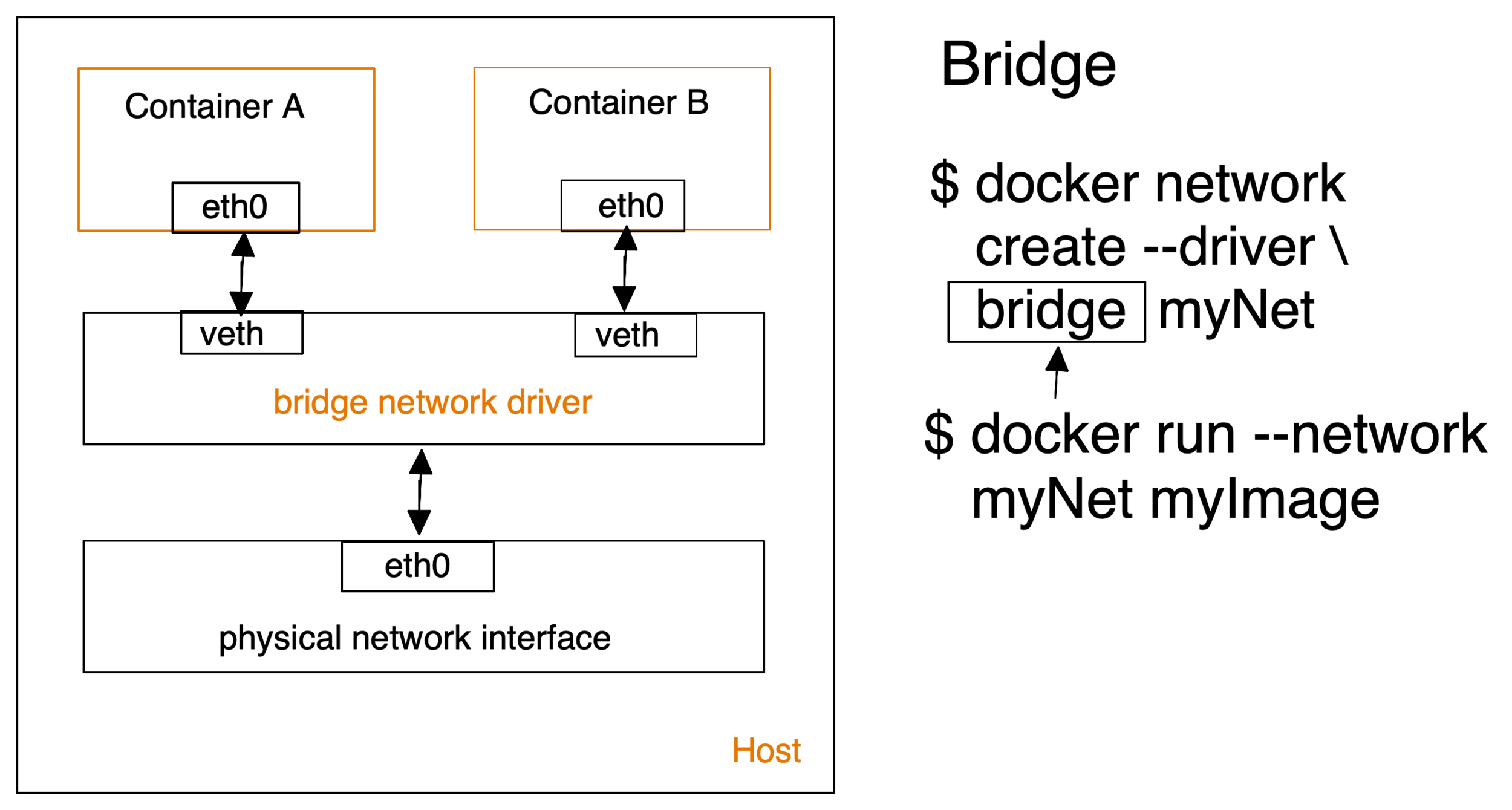

Container Networking

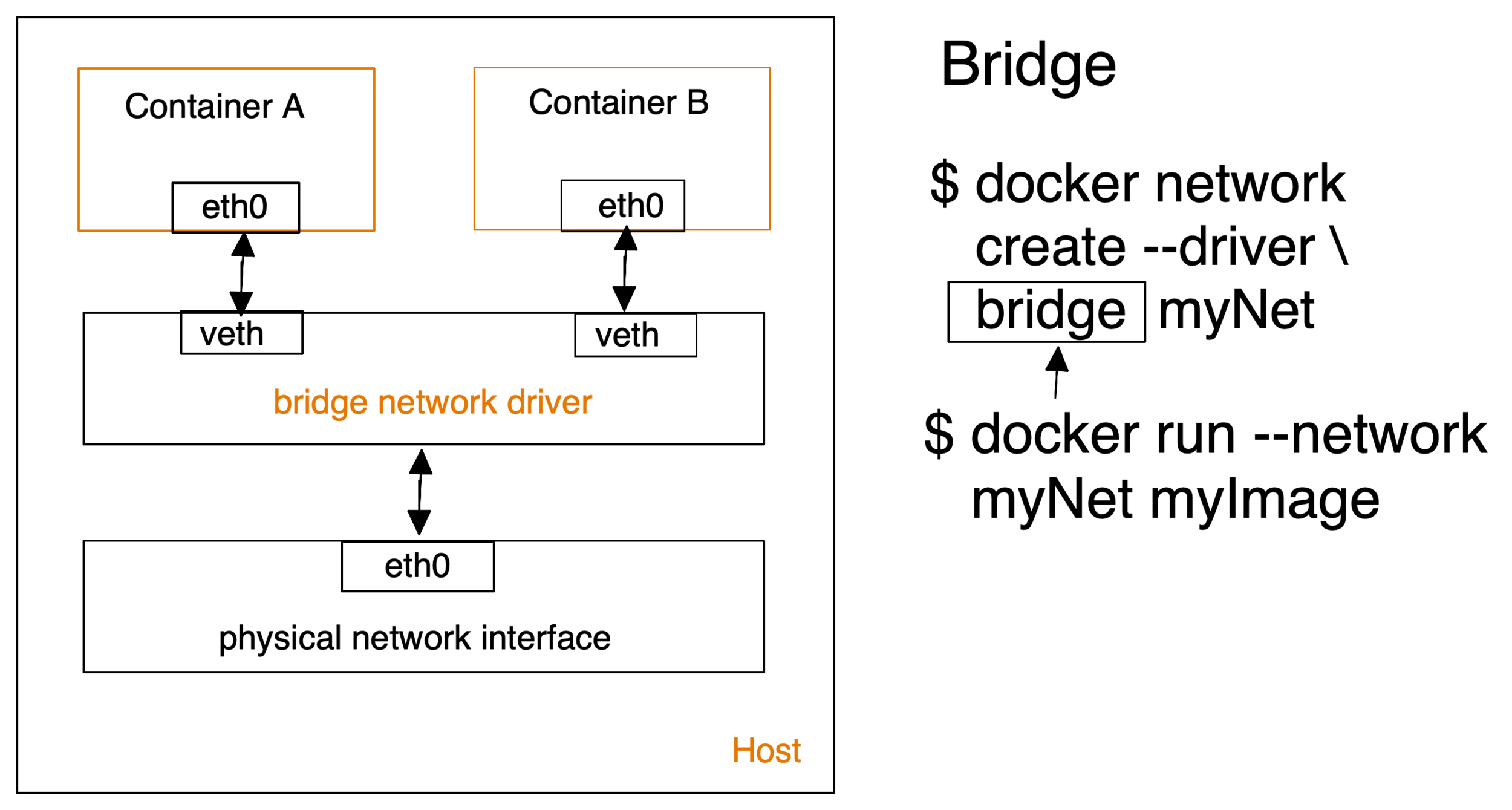

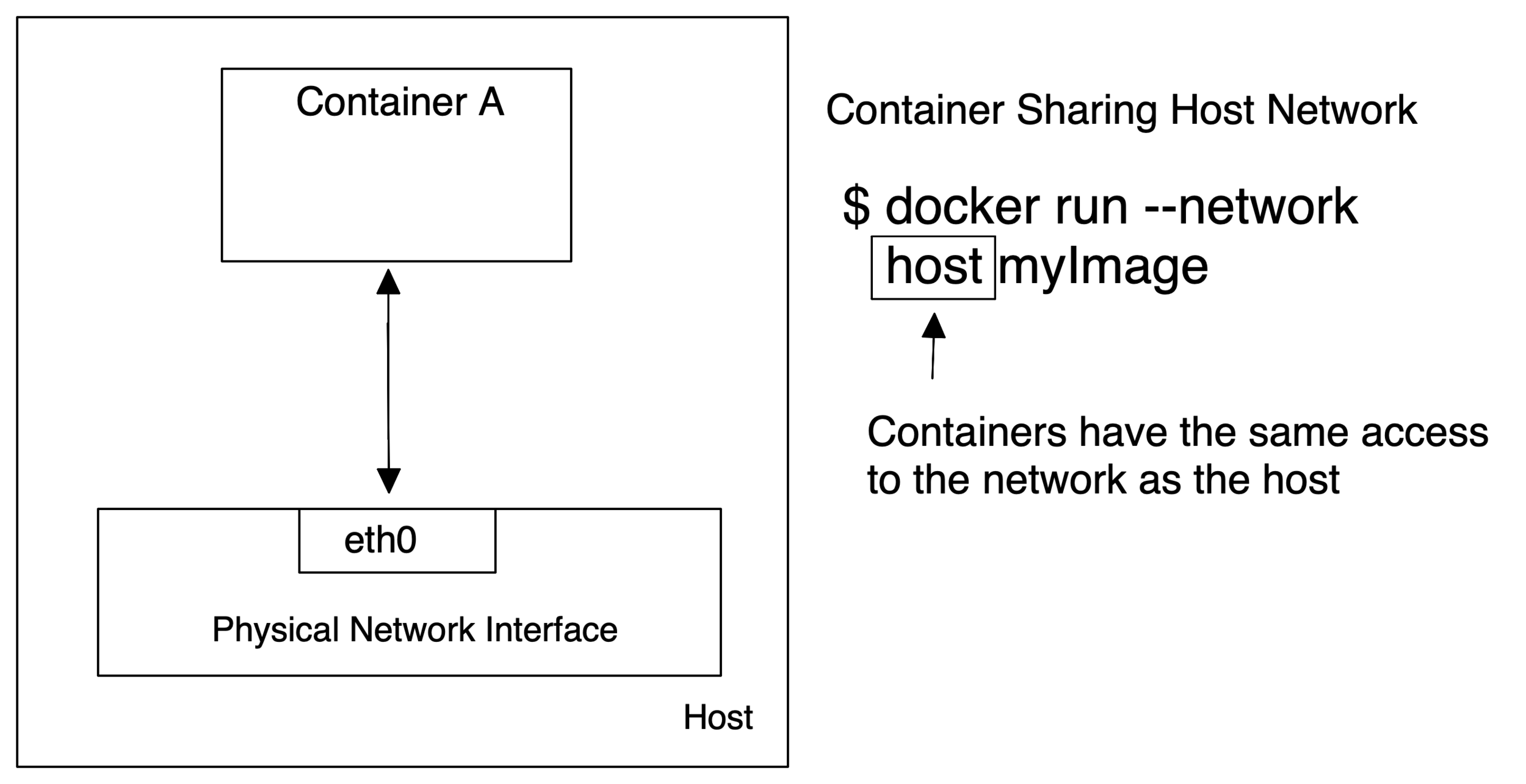

Standalone Containers

- Multiple network drivers facilitate container communication

- Bridge

- Special: Host, None

- Underlay networks

- Overlay networks

Underlay Networks

- Container communication by leveraging the underlying physical interface

- Media access control virtual local area network (macvlan)

- Internet Protocol VLAN (ipvlan)

Overlay Networks

- Container communication by leveraging a virtual network

- Virtual network sits above host networks

Container Ports

- Containers are not directly routable from outside their cluster

- Map host port to target container port

    $ docker run -p 8088:8080/tcp myimage

Privileged Ports

- Docker automatically maps container ports to an available host port in the range 49153-65535

- No restrictions on assigning privileged host ports (below 1024)

- Review Dockerfiles and audit running containers
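Part of that audit can be automated. As a rough sketch, the functions below extract the host port from a `docker run -p` style mapping and flag it when it is below 1024. The names `host_port` and `is_privileged_port` are hypothetical, and the sketch assumes single ports only (no port ranges).

```shell
#!/bin/sh
# Print the host port of a mapping such as 8088:8080/tcp or 127.0.0.1:80:8080.
# Prints an empty string when no host port is given.
host_port() {
    mapping=${1%/*}                # drop a trailing /tcp or /udp
    case $mapping in
        *:*)
            hostpart=${mapping%:*} # everything before the container port
            echo "${hostpart##*:}" # drop an optional leading IP address
            ;;
        *)
            echo ""                # container port only; Docker picks the host port
            ;;
    esac
}

# Succeeds when the mapping binds a privileged host port (below 1024).
is_privileged_port() {
    port=$(host_port "$1")
    [ -n "$port" ] && [ "$port" -lt 1024 ]
}

for mapping in "8088:8080/tcp" "80:8080" "127.0.0.1:443:8443/tcp" "8080"; do
    if is_privileged_port "$mapping"; then
        echo "$mapping -> privileged host port $(host_port "$mapping")"
    else
        echo "$mapping -> ok"
    fi
done
```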

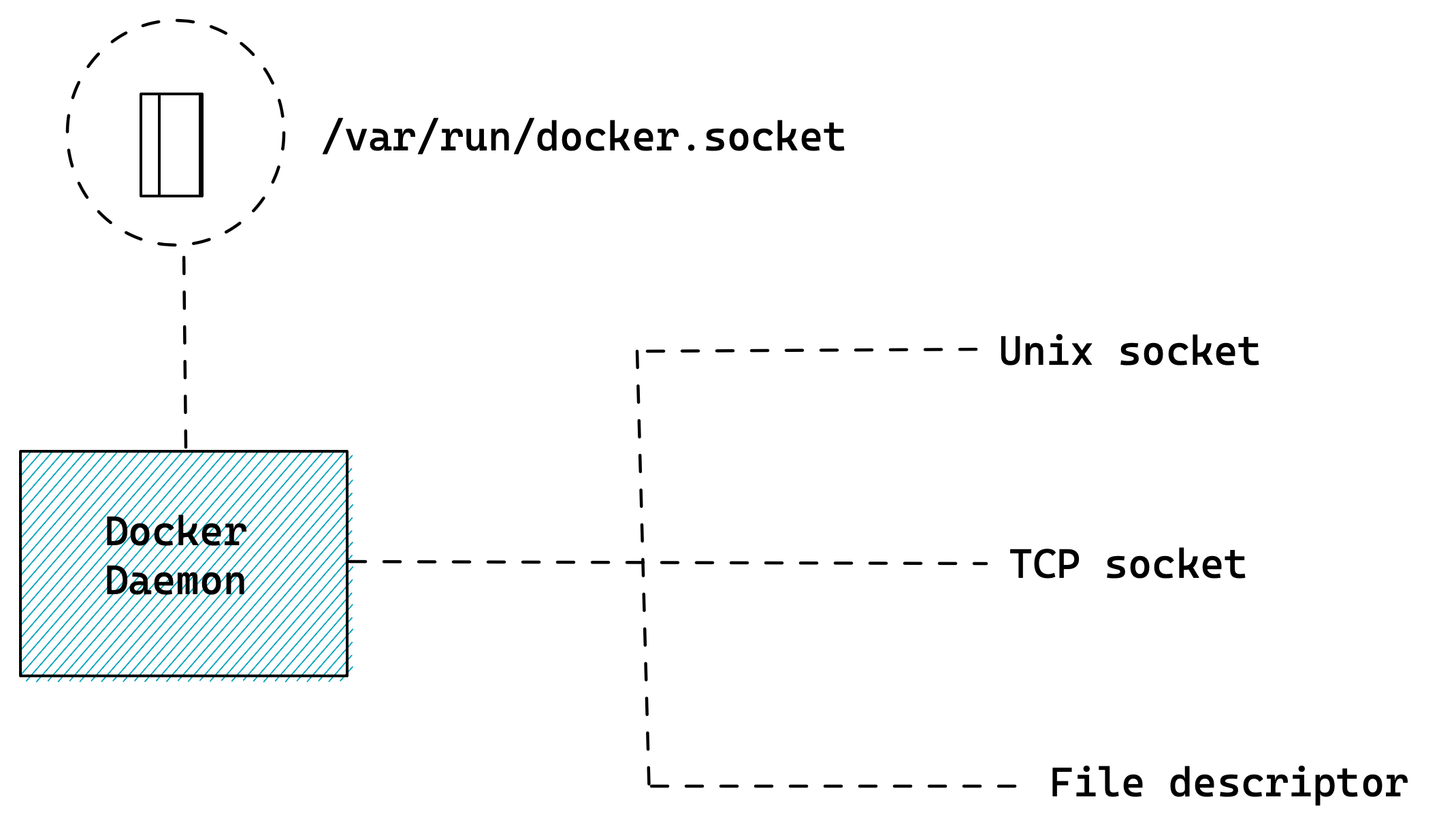

Docker Socket

- A process with access to the socket file can send any command to the daemon

- Do not mount the socket inside a container

Default Network Bridge Security

- On the default bridge, every container has read access to the traffic of the others

- Disable inter-container communication (the icc setting) in the Docker daemon configuration file

- Create a new bridge network and attach only the containers that need to communicate
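A minimal sketch of those two steps follows, assuming the setting in question is Docker's `icc` (inter-container communication) option. The script writes the configuration to a scratch directory rather than `/etc/docker/daemon.json` so it is safe to run. The network and container names in the comments are hypothetical.

```shell
#!/bin/sh
# Write a daemon.json that disables inter-container communication on the
# default bridge. On a real host this file is usually /etc/docker/daemon.json.
conf_dir=$(mktemp -d)
cat > "$conf_dir/daemon.json" <<'EOF'
{
  "icc": false
}
EOF
cat "$conf_dir/daemon.json"

# After restarting the Docker daemon with this setting, attach only the
# containers that must talk to each other to a user-defined bridge:
#   docker network create --driver bridge app-net
#   docker run -d --name api --network app-net myimage
#   docker run -d --name db  --network app-net myimage
```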

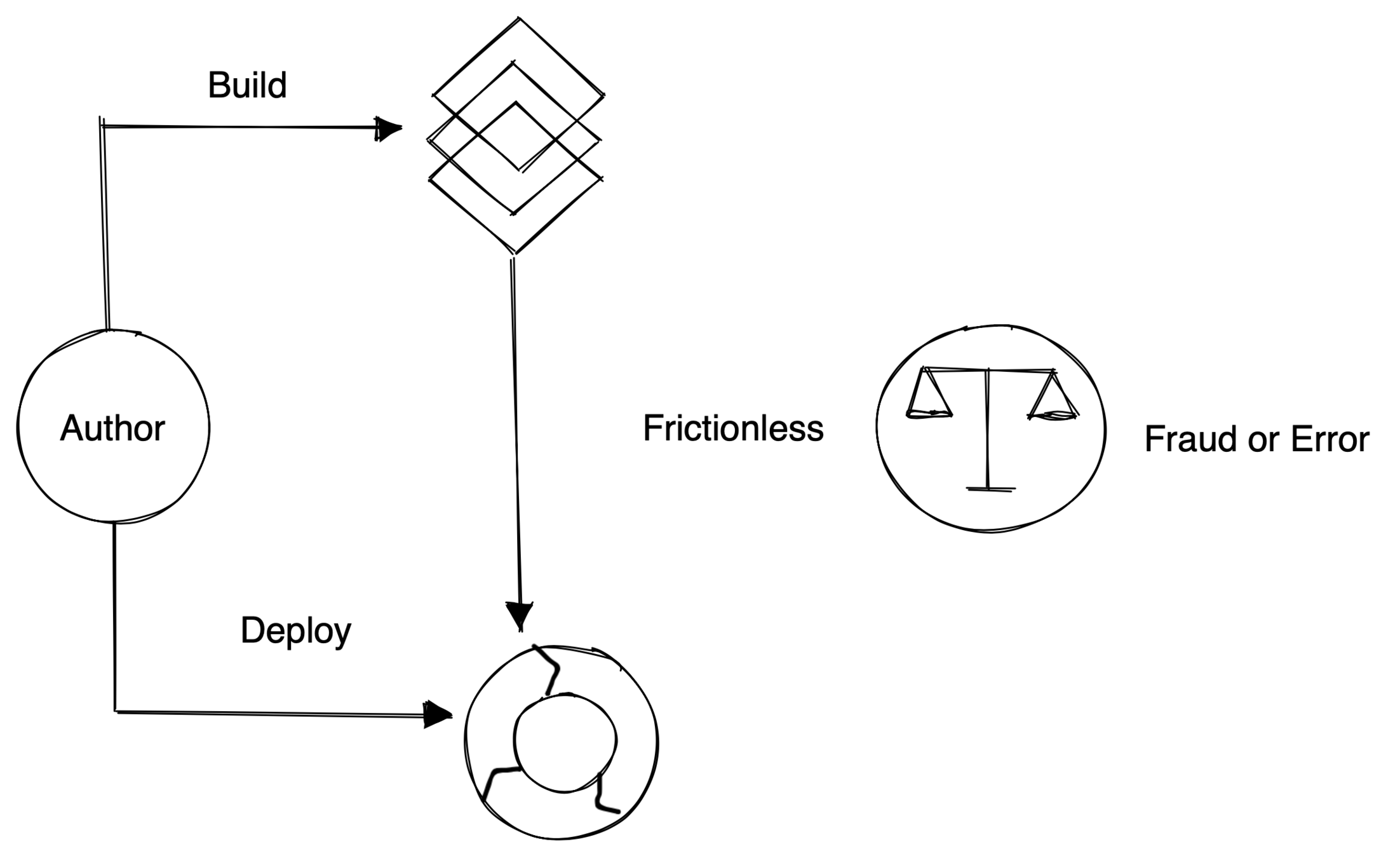

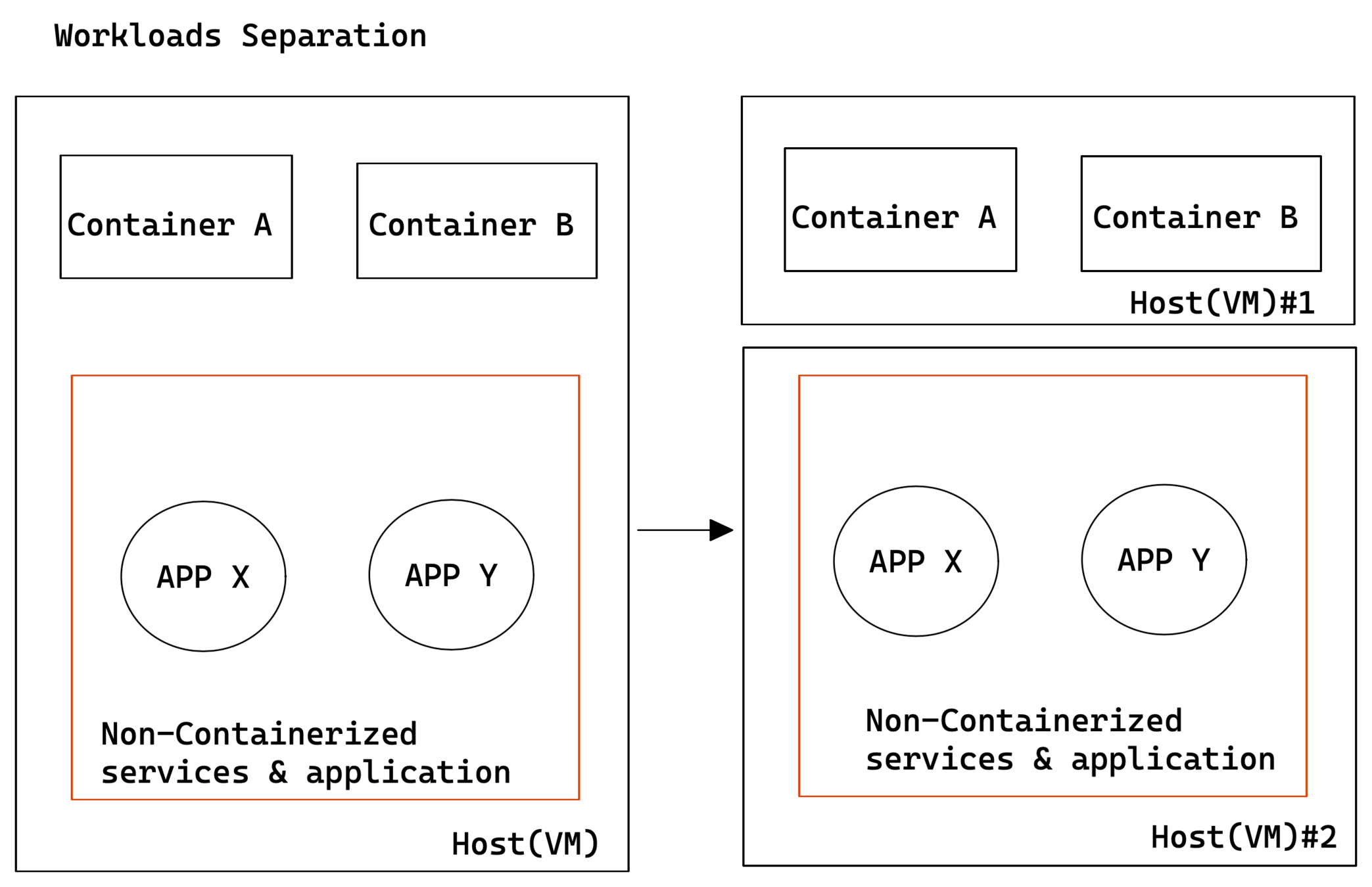

Host OS Protection

Potential for error & fraud

New attack vector

Configuration drift

Run Immutable host

Minimise direct access to host

- Let Kubernetes run and manage your containers

- Treat your hosts as cattle, not pets

Host OS Vulnerabilities

- Use standardised and approved VM images for the hosts

- Continuously scan for security vulnerabilities

Host file system

- Protect host file system from malicious containers

- Mount host file system as read only to container

- Reduce attack spillover

- Containers not tied to a host

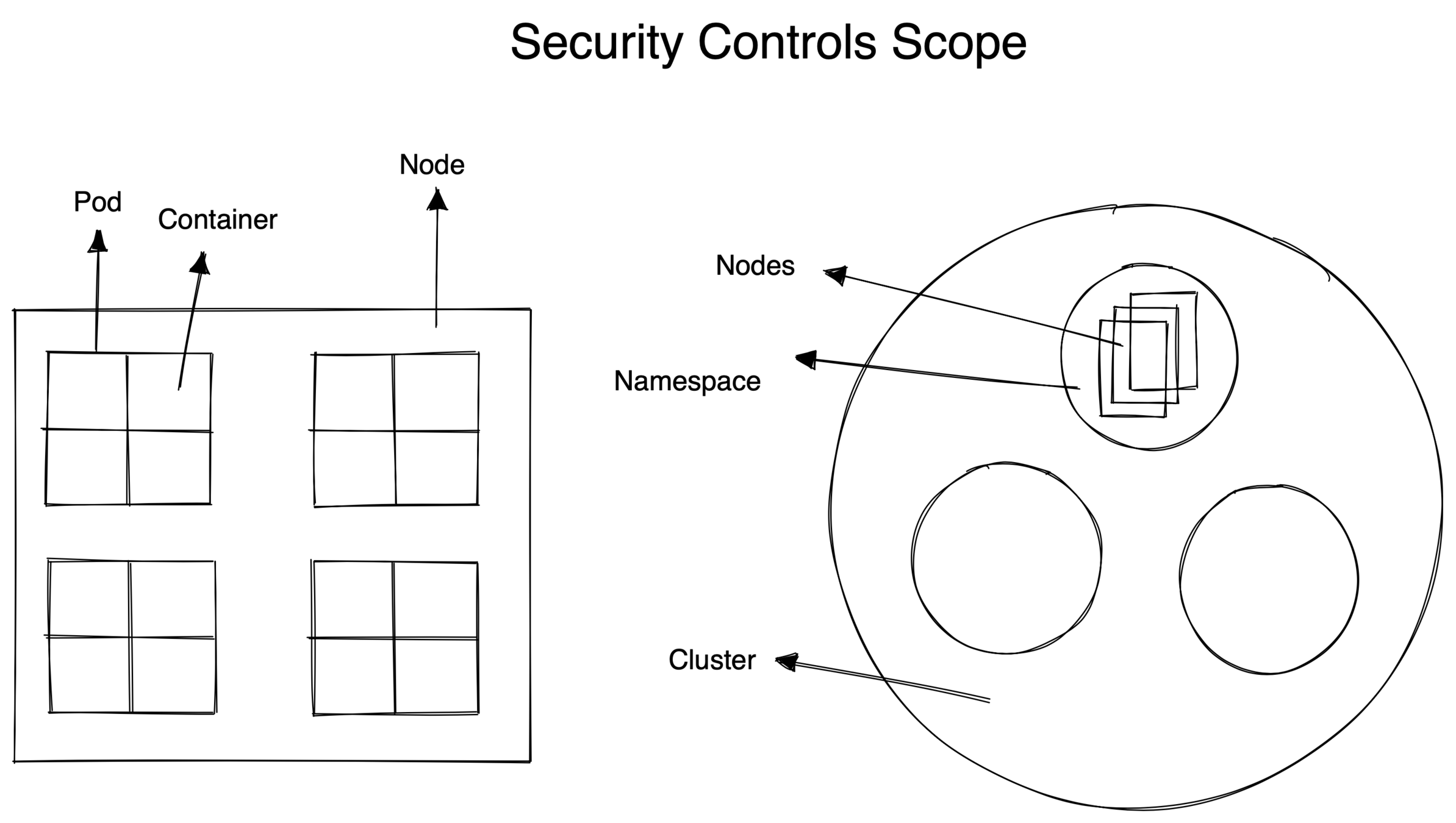

Securing Application In Kubernetes

Access Management

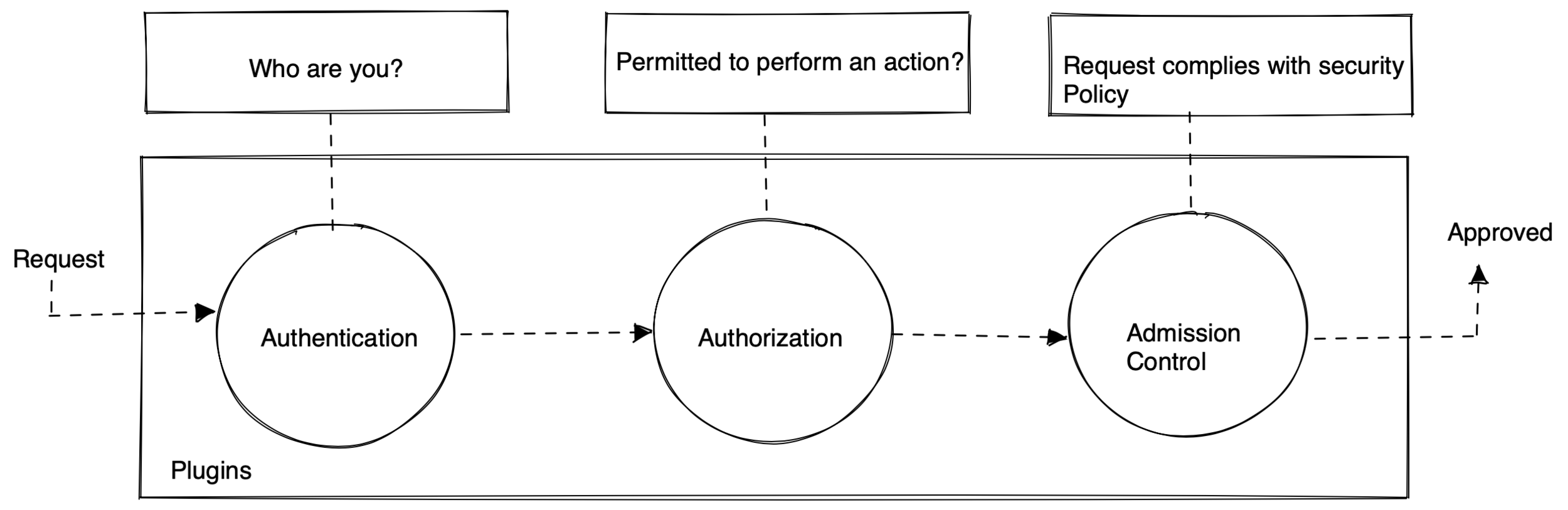

Authentication

- Service accounts are managed within Kubernetes

- User accounts are managed outside of Kubernetes

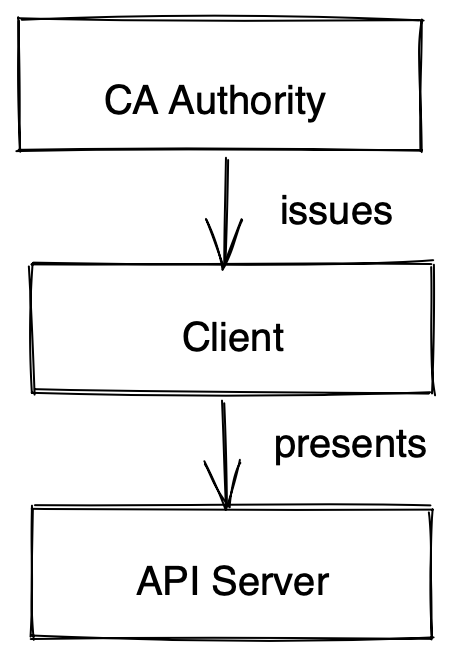

Authentication Methods

- Static authentication or token file

- X.509 client certificate

- OpenID Connect Tokens

- Service Account Tokens

- Others

Static authentication or token file

- Information is stored in a file

- Password, username, and user ID

- Base64-encoded value of user:password

- This method should not be used in enterprise environments

OpenID Connect tokens

- Based on the OAuth 2.0 spec

- Mechanism for generating and refreshing tokens

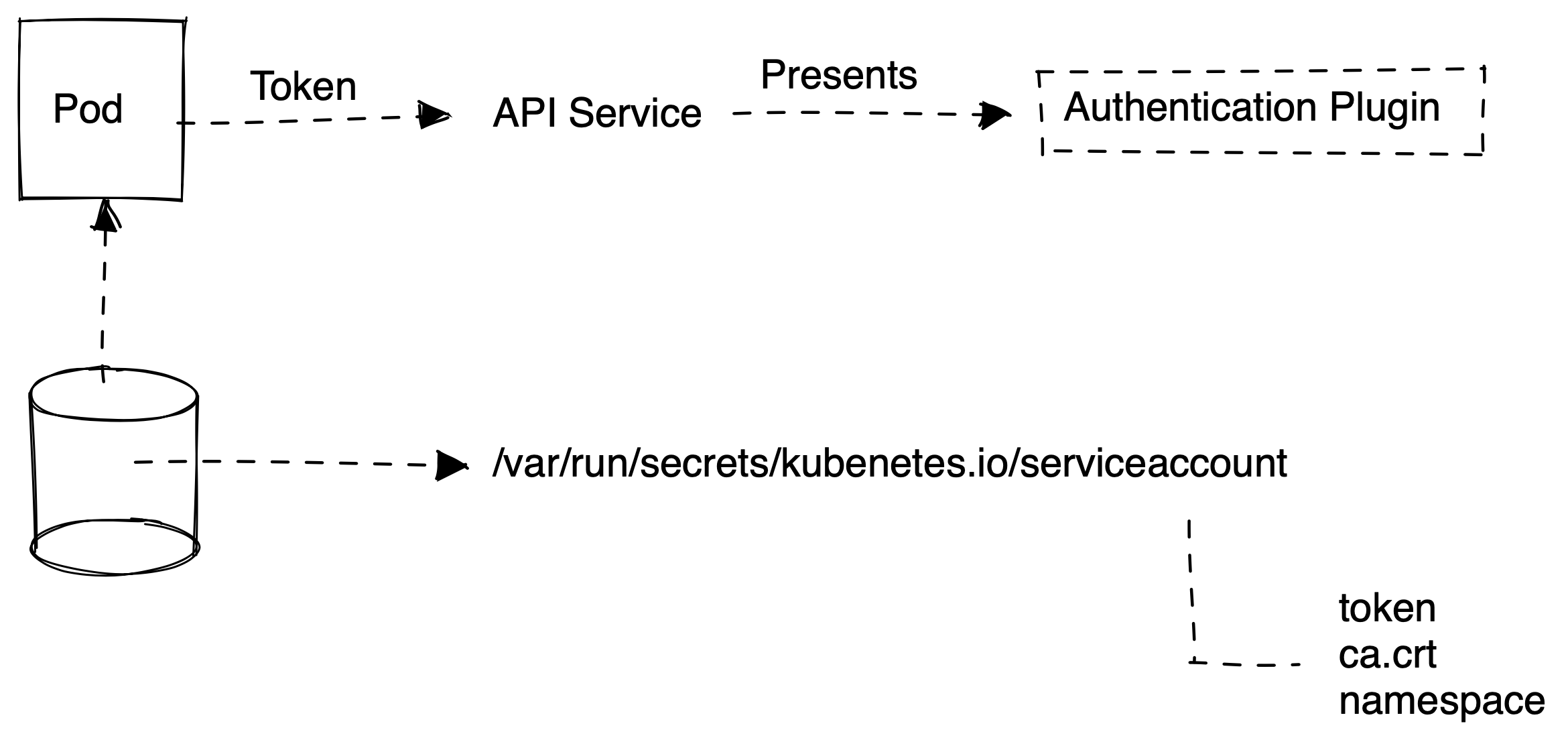

Service account

- Authenticating application using ServiceAccount

- Each pod is assigned a ServiceAccount by Default

- ServiceAccount allows controlling which resources a pod can access

    $ kubectl create serviceaccount my-svc-act

    # Pod spec with a custom service account
    apiVersion: v1
    kind: Pod
    spec:
      serviceAccountName: my-svc-act

Authorization

- Determines whether a requested operation is permitted

- By default, all permissions are denied

- Verbs: create, update, delete, and get

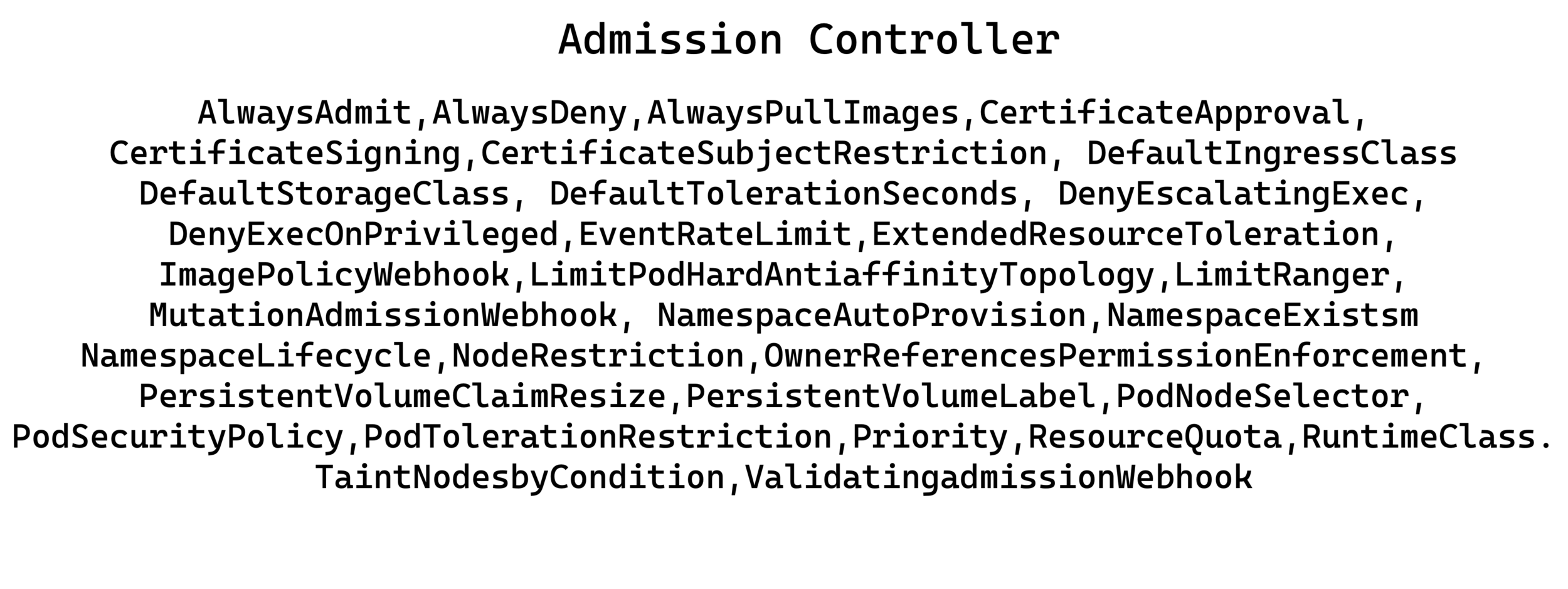

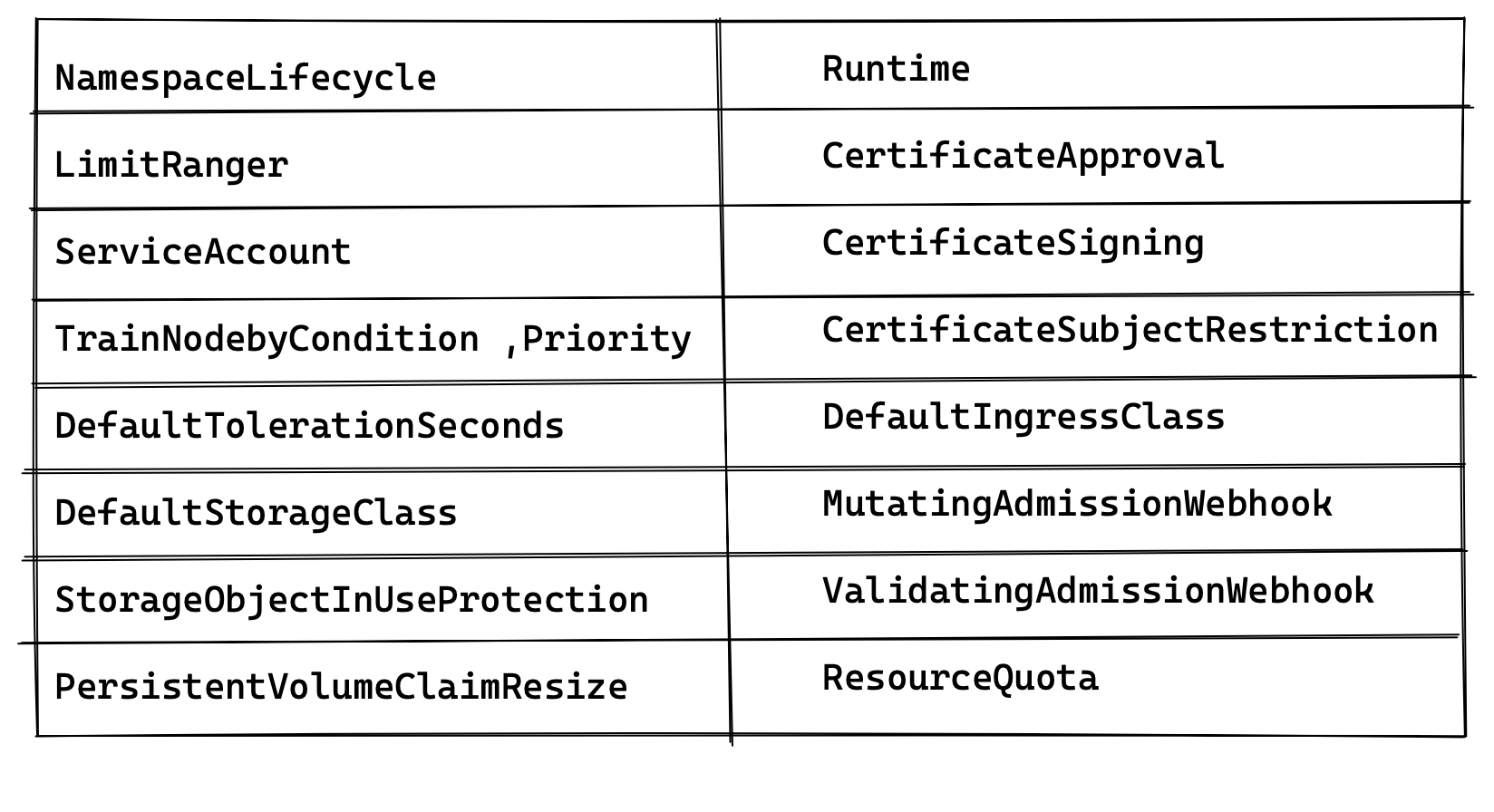

- Granular validation by admission controller

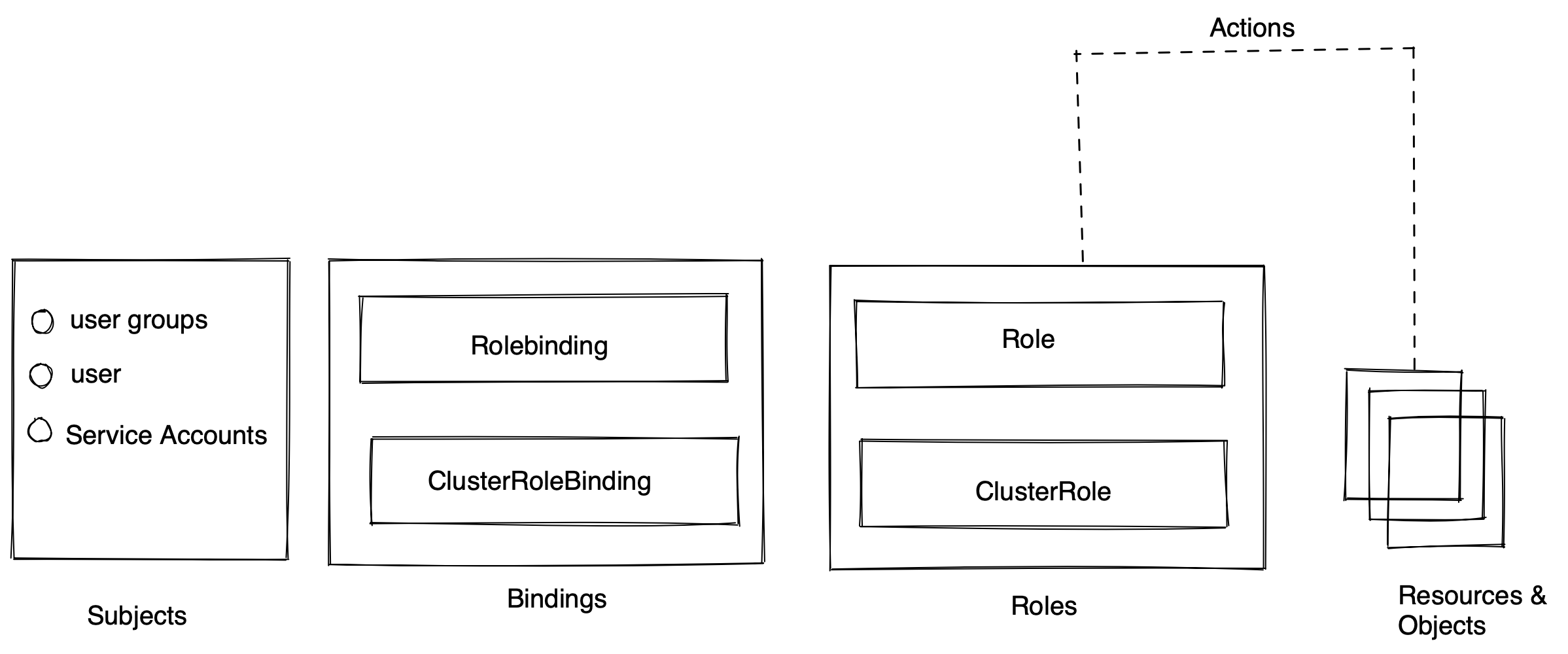

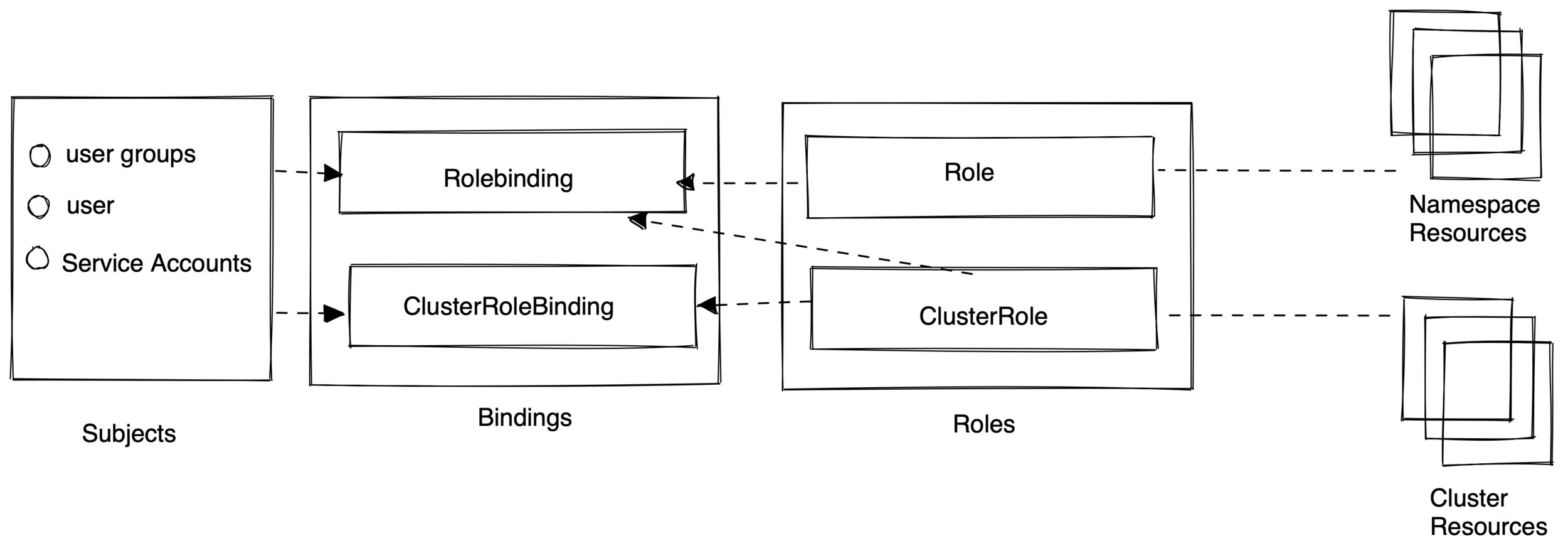

Role-based access control

- Recommended strategy: RBAC

- Grant or deny access to an operation based on the subject's role

| Role | get | create | update | delete |
|---|---|---|---|---|
| a | x | | | |
| b | x | x | | |
| c | x | x | x | |
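As an illustration of the first row of the matrix, the sketch below writes a Role that allows only `get` on pods and a RoleBinding that grants it to a service account. The names `role-a`, `role-a-binding`, and `demo-sa`, and the `default` namespace, are assumptions made for the example.

```shell
#!/bin/sh
# Write an RBAC manifest to a scratch directory; apply it with kubectl afterwards.
manifest_dir=$(mktemp -d)
cat > "$manifest_dir/rbac.yaml" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: role-a
  namespace: default
rules:
- apiGroups: [""]          # "" is the core API group, where pods live
  resources: ["pods"]
  verbs: ["get"]           # role "a" in the matrix: get only
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: role-a-binding
  namespace: default
subjects:
- kind: ServiceAccount
  name: demo-sa
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: role-a
EOF
echo "wrote $manifest_dir/rbac.yaml"

# Against a cluster:
#   kubectl apply -f "$manifest_dir/rbac.yaml"
#   kubectl auth can-i get pods --as=system:serviceaccount:default:demo-sa
#   kubectl auth can-i delete pods --as=system:serviceaccount:default:demo-sa
```

Because permissions are denied by default, anything not listed under `verbs` stays forbidden for this subject.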

- An admission controller intercepts the request and validates it

- Over 30 different admission controller plugins

    $ kube-apiserver --enable-admission-plugins=<...>

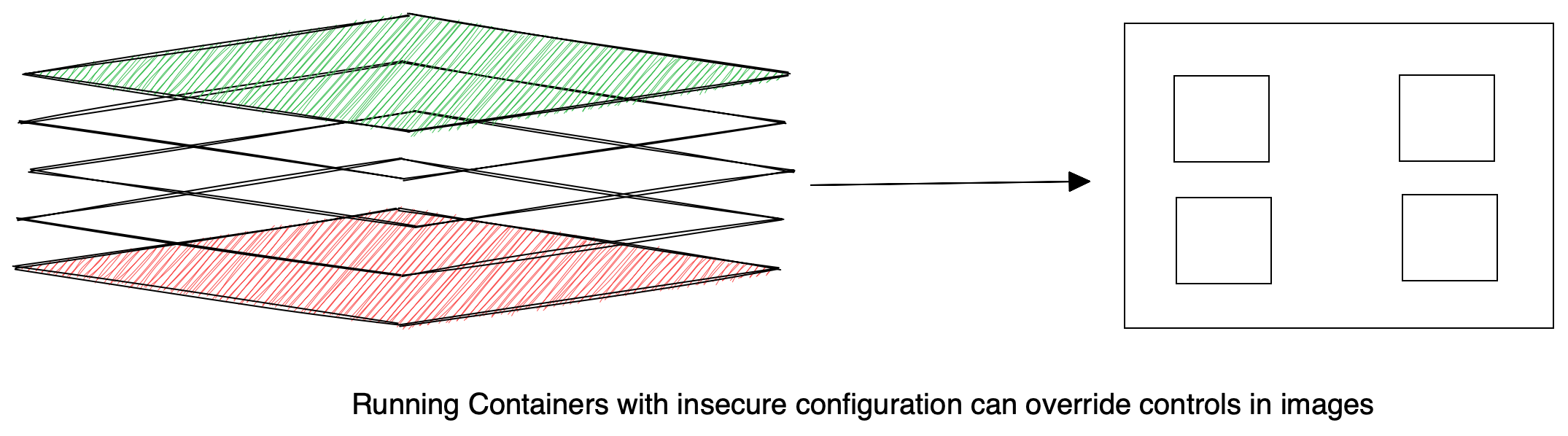

Why Security Context and Policies

- Access control is required, but it is not sufficient

- Containers share a thin wall with the OS

- With Kubernetes, a mechanism is needed to reinforce that wall

- Security Context and Security Policies

Security Context vs. Security Policy

Security Context

- Mechanism for developers

Security Policy

- Mechanism for admins

- Implemented via admission controllers

Security Context in Pod Specification

    # Setting security context at container level
    apiVersion: v1
    kind: Pod
    spec:
      containers:
      - securityContext:
          runAsNonRoot: true
          privileged: true
        # ...
      # ...

    # Setting security context at pod level
    apiVersion: v1
    kind: Pod
    spec:
      securityContext:
        runAsUser: 999
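Putting the two levels together, a complete manifest might look like the sketch below. The pod name, container name, and image are hypothetical, and unlike the fragment above this example deliberately sets `privileged: false` and adds a few other hardening fields from the Pod API.

```shell
#!/bin/sh
# Write a complete Pod manifest that combines pod-level and container-level
# security context settings.
manifest_dir=$(mktemp -d)
cat > "$manifest_dir/hardened-pod.yaml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hardened-demo
spec:
  securityContext:                # pod level: applies to every container
    runAsNonRoot: true
    runAsUser: 999
  containers:
  - name: app
    image: registry.example.com/app:1.0   # hypothetical image
    securityContext:              # container level: takes precedence over pod level
      privileged: false
      allowPrivilegeEscalation: false
      readOnlyRootFilesystem: true
      capabilities:
        drop: ["ALL"]
EOF
echo "wrote $manifest_dir/hardened-pod.yaml"

# Against a cluster:
#   kubectl apply -f "$manifest_dir/hardened-pod.yaml"
```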

Security Policy

    # Pod security policy
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    spec:
      runAsUser:
        rule: RunAsAny
      privileged: false
      readOnlyRootFilesystem: true

- Enables admins to prevent pods (containers) that violate security policies from running

Security Controls with PodSecurityPolicy

| Setting | Effect |
|---|---|
| privileged: false | Containers are not permitted to run as privileged containers |
| runAsUser: rule: MustRunAsNonRoot | Containers must not run as root |
| readOnlyRootFilesystem: true | Containers must only run with a read-only root file system |
| allowedCapabilities: SYS_TIME | Containers may add only from the allowed capabilities |
| requiredDropCapabilities: SYS_ADMIN | Containers must drop these capabilities |
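PodSecurityPolicy was removed in Kubernetes v1.25. On newer clusters, comparable admin-side controls are available through the built-in Pod Security Admission controller, which is configured with namespace labels. The sketch below is one possible setup under that assumption. The namespace name `payments` is hypothetical.

```shell
#!/bin/sh
# Write a Namespace manifest that enforces the "restricted" Pod Security
# Standard for every pod created in that namespace.
manifest_dir=$(mktemp -d)
cat > "$manifest_dir/namespace.yaml" <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: payments
  labels:
    pod-security.kubernetes.io/enforce: restricted   # reject violating pods
    pod-security.kubernetes.io/warn: restricted      # also warn on kubectl apply
EOF
echo "wrote $manifest_dir/namespace.yaml"

# Against a cluster:
#   kubectl apply -f "$manifest_dir/namespace.yaml"
```

Among other checks, the `restricted` profile rejects privileged containers and containers that run as root, which covers the first two rows of the table above.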

Kubernetes Network Security

- Kubernetes networking is different

- Designed to be backward compatible

- Every Pod can talk to other pods

- Pod Networking implemented via networking plugins

- Simplicity leads to network security challenges

Network Policies

- Not all pods need to talk to each other

- Segment your network

- Network Policies let you control inter-pod traffic

- Network plugin must support network policies

    # Network Policy
    kind: NetworkPolicy
    spec:
      podSelector:
        matchLabels:
          svcType: catalog
      policyTypes:
      - Ingress
      - Egress
      # ...

Network Policy Example

- Use labels to select the pods the policy applies to

- Ingress: incoming traffic

- Egress: outgoing traffic

    # Network Policy
    kind: NetworkPolicy
    spec:
      podSelector:
        matchLabels:
          svcType: inventory
      ingress:
      - from:
        - podSelector:
            matchLabels:
              svcType: order

- Applies to pod matching label

- Allows only incoming traffic from matching pods
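The fragment above omits `apiVersion` and `metadata`. A complete version, paired with a default-deny policy so that unselected pods are not left open, could look like the sketch below. The policy names and the `default` namespace are assumptions, and enforcement still depends on a network plugin that supports network policies.

```shell
#!/bin/sh
# Write two NetworkPolicy objects: a default deny for ingress, and an allow
# rule from "order" pods to "inventory" pods.
manifest_dir=$(mktemp -d)
cat > "$manifest_dir/netpol.yaml" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: default
spec:
  podSelector: {}            # empty selector: every pod in the namespace
  policyTypes:
  - Ingress
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: inventory-allow-order
  namespace: default
spec:
  podSelector:
    matchLabels:
      svcType: inventory     # pods the policy applies to
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          svcType: order     # only these pods may connect
EOF
echo "wrote $manifest_dir/netpol.yaml"

# Against a cluster:
#   kubectl apply -f "$manifest_dir/netpol.yaml"
```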

Secrets

- Allow pods to get access to secrets

- Kubernetes components need access to secrets

- Secret object: key-value pair

- Stored in etcd base64-encoded, which is an encoding, not encryption

    # Pod specification: secret mounted as a volume
    kind: Pod
    spec:
      containers:
      - volumeMounts:
        - name: my-secrets
          mountPath: /etc/data
      volumes:
      - name: my-secrets
        secret:
          secretName: mykeys

    # Pod specification: secret exposed as an environment variable
    kind: Pod
    spec:
      containers:
      - env:
        - name: MY_VAULT_KEY   # env variable
          valueFrom:
            secretKeyRef:
              name: mykeys
              key: myVaultKey

Secrets

- Preferred approach: use a secret volume

- Environment variable values may end up in log files

- A child process inherits the environment of its parent process
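To see why base64 storage offers no confidentiality, the short sketch below encodes a value the way a Secret's `data` field holds it and then decodes it again without any key. The sample value is hypothetical.

```shell
#!/bin/sh
# base64 is a reversible encoding: anyone who can read the stored value
# can recover the original secret.
plain='s3cr3t-vault-key'                          # hypothetical secret value
encoded=$(printf '%s' "$plain" | base64)          # form stored in the Secret's data field
decoded=$(printf '%s' "$encoded" | base64 -d)     # no key or password needed

echo "encoded: $encoded"
echo "decoded: $decoded"
```

Against a cluster, the same decoding applies to the output of `kubectl get secret mykeys -o yaml`, which is why read access to Secrets should be restricted with RBAC.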

Hunt for threats in production platforms, and rank them based on their risk-of-exploit.

Give ThreatMapper a GitHub star!

Cloud-Native Application Protection Platform

ETCD Security

- Plain-text data storage

- Transport security with HTTPS

- Authentication with HTTPS certificates
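One way to address plain-text storage is encryption at rest, which the API server supports through an EncryptionConfiguration file. The sketch below generates a random 32-byte key and writes such a file to a scratch directory. The key name `key1` and the file location are assumptions, and the choice of the `aescbc` provider is only one of the available options.

```shell
#!/bin/sh
# Generate an AES-CBC key and write an EncryptionConfiguration that encrypts
# Secret objects before they are written to etcd.
conf_dir=$(mktemp -d)

# 32 random bytes, base64-encoded, as the aescbc provider expects.
enc_key=$(head -c 32 /dev/urandom | base64)

cat > "$conf_dir/encryption-config.yaml" <<EOF
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
- resources:
  - secrets
  providers:
  - aescbc:
      keys:
      - name: key1
        secret: ${enc_key}
  - identity: {}              # fallback so existing plain-text data stays readable
EOF
chmod 600 "$conf_dir/encryption-config.yaml"
echo "wrote $conf_dir/encryption-config.yaml"

# The API server picks the file up through:
#   kube-apiserver --encryption-provider-config=<path to the file> ...
```

Only Secrets written after the change are encrypted. Existing ones stay in plain text until they are rewritten.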

Diagram: API server → etcd ("hey etcd, store this data: name:sangam"); hacker → etcd (etcd dump)

    # macOS (darwin-amd64) build
    ETCD_VER=v3.5.1

    # choose either URL
    GOOGLE_URL=https://storage.googleapis.com/etcd
    GITHUB_URL=https://github.com/etcd-io/etcd/releases/download
    DOWNLOAD_URL=${GOOGLE_URL}

    rm -f /tmp/etcd-${ETCD_VER}-darwin-amd64.zip
    rm -rf /tmp/etcd-download-test && mkdir -p /tmp/etcd-download-test

    curl -L ${DOWNLOAD_URL}/${ETCD_VER}/etcd-${ETCD_VER}-darwin-amd64.zip -o /tmp/etcd-${ETCD_VER}-darwin-amd64.zip
    unzip /tmp/etcd-${ETCD_VER}-darwin-amd64.zip -d /tmp && rm -f /tmp/etcd-${ETCD_VER}-darwin-amd64.zip
    mv /tmp/etcd-${ETCD_VER}-darwin-amd64/* /tmp/etcd-download-test && rm -rf /tmp/etcd-${ETCD_VER}-darwin-amd64

    /tmp/etcd-download-test/etcd --version
    /tmp/etcd-download-test/etcdctl version
    /tmp/etcd-download-test/etcdutl version

    # put the binaries on the PATH
    mv /tmp/etcd-download-test/etcd /tmp/etcd-download-test/etcdctl /usr/local/bin/

    # start a local etcd server (runs in the foreground)
    etcd

    # in a second terminal: write and read a key
    etcdctl put mykey "this is awesome"
    etcdctl get mykey

Install etcdctl On Ubuntu 16.04/18.04

    etcd_version=v3.4.16
    curl -L https://github.com/coreos/etcd/releases/download/$etcd_version/etcd-$etcd_version-linux-amd64.tar.gz \
      -o etcd-$etcd_version-linux-amd64.tar.gz
    tar xzvf etcd-$etcd_version-linux-amd64.tar.gz
    rm etcd-$etcd_version-linux-amd64.tar.gz
    cp etcd-$etcd_version-linux-amd64/etcdctl /usr/local/bin/
    rm -rf etcd-$etcd_version-linux-amd64
    etcdctl version

Get etcd information

    kubectl describe pod etcd-master -n kube-system

    master1_ip=172.60.70.150
    master2_ip=172.60.70.151
    master3_ip=172.60.70.152

    export endpoint="https://${master1_ip}:2379,https://${master2_ip}:2379,https://${master3_ip}:2379"
    export flags="--cacert=/etc/kubernetes/pki/etcd/ca.crt \
      --cert=/etc/kubernetes/pki/etcd/server.crt \
      --key=/etc/kubernetes/pki/etcd/server.key"

    # collect the client URLs of all members
    endpoints=$(sudo ETCDCTL_API=3 etcdctl member list $flags --endpoints=${endpoint} \
      --write-out=json | jq -r '.members | map(.clientURLs) | add | join(",")')

Check etcd server on master nodes

Verify with these commands:

    sudo ETCDCTL_API=3 etcdctl $flags --endpoints=${endpoints} member list
    sudo ETCDCTL_API=3 etcdctl $flags --endpoints=${endpoints} endpoint status
    sudo ETCDCTL_API=3 etcdctl $flags --endpoints=${endpoints} endpoint health
    sudo ETCDCTL_API=3 etcdctl $flags --endpoints=${endpoints} alarm list

    etcdctl member list $flags --endpoints=${endpoint} --write-out=table
    etcdctl endpoint status $flags --endpoints=${endpoint} --write-out=table

Backup | Restore etcd

Command pattern:

    ETCDCTL_API=3 etcdctl --endpoints=[ENDPOINT] --cacert=[CA CERT] --cert=[ETCD SERVER CERT] \
      --key=[ETCD SERVER KEY] snapshot save [BACKUP FILE NAME]

Sample commands:

    ETCDCTL_API=3 etcdctl --endpoints ${endpoints} $flags snapshot save kubeme-test
    ETCDCTL_API=3 etcdctl --endpoints ${endpoints} $flags snapshot status kubeme-test
    ETCDCTL_API=3 etcdctl --endpoints ${endpoints} $flags snapshot restore kubeme-test

A Kubernetes CronJob to Back Up the etcd Data

    # batch/v1 is the stable CronJob API; batch/v1beta1 was removed in Kubernetes v1.25
    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: backup
      namespace: kube-system
    spec:
      # Run every six hours.
      schedule: "0 */6 * * *"
      jobTemplate:
        spec:
          template:
            spec:
              containers:
              - name: backup
                # Same image as in /etc/kubernetes/manifests/etcd.yaml
                image: k8s.gcr.io/etcd-amd64:3.1.12
                env:
                - name: ETCDCTL_API
                  value: "3"
                command: ["/bin/sh"]
                args: ["-c", "etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt --key=/etc/kubernetes/pki/etcd/healthcheck-client.key snapshot save /backup/etcd-snapshot-$(date +%Y-%m-%d_%H:%M:%S_%Z).db"]
                volumeMounts:
                - mountPath: /etc/kubernetes/pki/etcd
                  name: etcd-certs
                  readOnly: true
                - mountPath: /backup
                  name: etcd-backup-dir
              restartPolicy: OnFailure
              # On newer clusters the label is node-role.kubernetes.io/control-plane
              nodeSelector:
                node-role.kubernetes.io/master: ""
              tolerations:
              - effect: NoSchedule
                operator: Exists
              hostNetwork: true
              volumes:
              - name: etcd-certs
                hostPath:
                  path: /etc/kubernetes/pki/etcd
                  type: DirectoryOrCreate
              - name: etcd-backup-dir
                hostPath:
                  path: /opt/etcd-backup
                  type: DirectoryOrCreate

etcd cheat sheet

    export ETCDCTL_API=3
    export ETCDCTL_CACERT=/etc/kubernetes/pki/etcd/ca.crt
    export ETCDCTL_CERT=/etc/kubernetes/pki/etcd/healthcheck-client.crt
    export ETCDCTL_KEY=/etc/kubernetes/pki/etcd/healthcheck-client.key

    etcdctl get /registry/services/specs/default/kubernetes

Run a job from a CronJob

    kubectl create job --from=cronjob/<cronjob-name> <job-name>