A Gentle Introduction to Machine Learning and Neural Networks

Sean Meling Murray, Department of Mathematics, University of Bergen

Sources: New York Post, Futurism, VICE Motherboard, The Conversation, The Verge

Wikipedia: Machine learning gives computers the ability to learn from data without being explicitly programmed to do so.

Algorithms allow us to build models that make data-driven predictions about the stuff we're interested in.

Regression

Typically used when we want our model to predict a continuous numerical value.

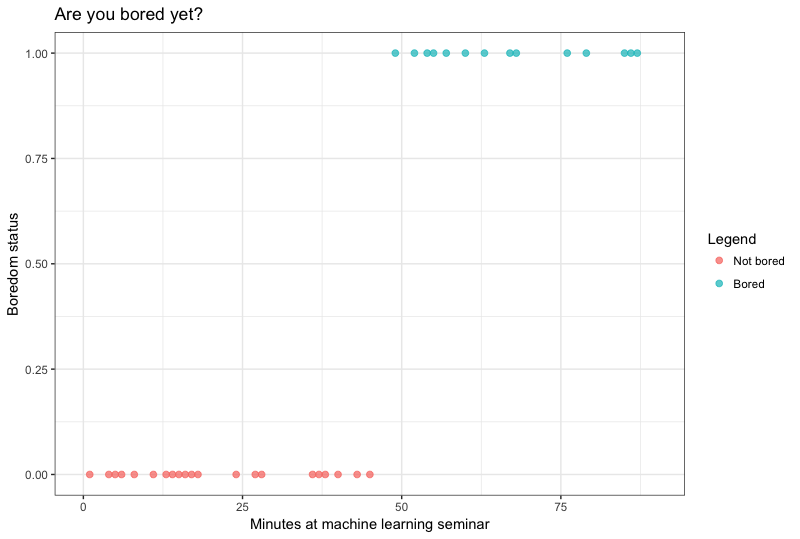

Classification

Typically used when we want our model to classify an event or a thing into one of K categories.

E.g. How much is my apartment worth given size, number of rooms, etc.?

E.g. Given today's housing market, will I be able to sell my apartment (yes or no)?

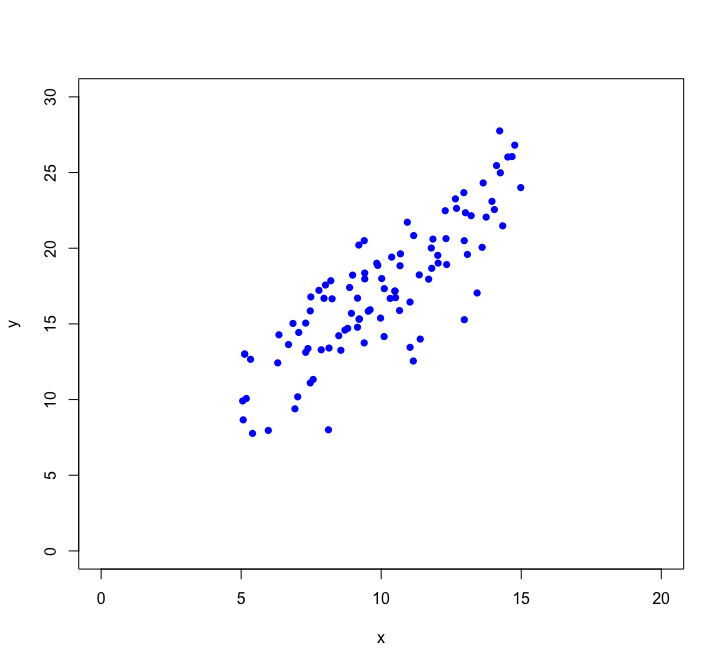

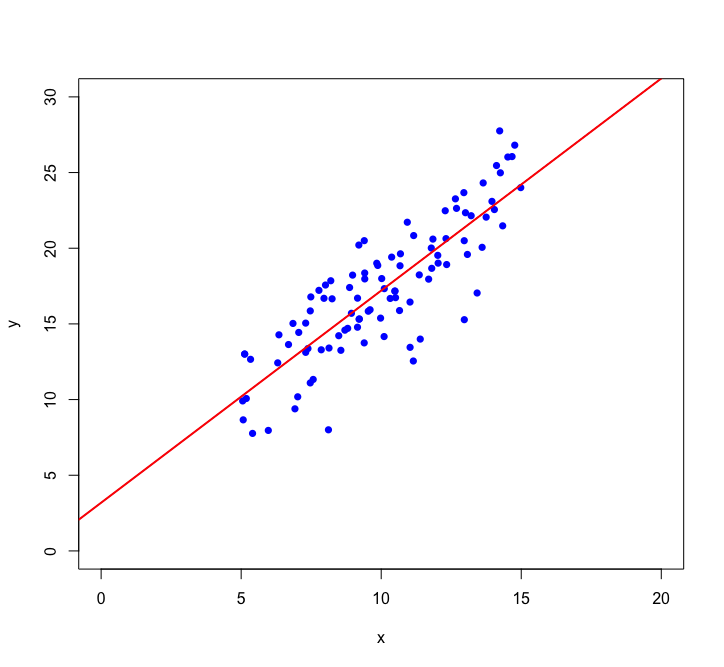

Let's look closer at linear regression:

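We want to find the straight line that best fits the data:

$$\hat{y} = w_0 + w_1 x$$

This is our model. The intercept $w_0$ and the slope $w_1$ are the parameters. The input $x$, aka. the features, are properties of the thing we are trying to predict. The output $\hat{y}$ is the model's prediction.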

Supervised

We compare our model's prediction to a known label or value and try to make the prediction error as small as possible.

Unsupervised

We try to identify meaningful patterns in unlabelled data. In other words, we let the data speak for itself.

Source: Tango with Code

Source: Sagar Sharma, towardsdatascience.com

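We want to minimize the prediction errors:

Mean squared error (MSE)

$$J(w_0, w_1) = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2$$

Here $J$ is the cost, $y_i$ is the true value and $\hat{y}_i = w_0 + w_1 x_i$ is the prediction for the $i$-th of our $n$ input-output pairs.

Using the input-output pairs in our data, which combination of weight settings gives us the lowest value of the cost function?

Training, optimizing, learning: solve

$$\min_{w_0, w_1} J(w_0, w_1)$$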
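To make the cost function concrete, here is a minimal sketch in plain Python. It computes the mean squared error for a straight-line model and finds the intercept and slope that minimize it, using the standard closed-form least-squares solution for a single feature. The names `predict`, `mse`, `fit_line`, `w0` and `w1` are chosen for illustration, and the apartment data is made up.

```python
def predict(w0, w1, x):
    """Straight-line model: intercept w0 plus slope w1 times the feature x."""
    return w0 + w1 * x

def mse(w0, w1, xs, ys):
    """Mean squared error between the true values ys and the predictions."""
    n = len(xs)
    return sum((y - predict(w0, w1, x)) ** 2 for x, y in zip(xs, ys)) / n

def fit_line(xs, ys):
    """Closed-form least-squares fit for one feature (minimizes the MSE)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w1 = sxy / sxx               # slope
    w0 = y_mean - w1 * x_mean    # intercept
    return w0, w1

# Made-up data: apartment size in square metres and price in arbitrary units.
sizes = [30.0, 45.0, 60.0, 80.0, 100.0]
prices = [1.6, 2.2, 2.9, 3.7, 4.6]

w0, w1 = fit_line(sizes, prices)
print(f"intercept={w0:.3f}, slope={w1:.4f}, MSE={mse(w0, w1, sizes, prices):.5f}")
```

Any other choice of intercept and slope gives a cost at least as high on this data, which is what "training" means for this model.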

Let's visualize the regression line a little differently...

Intercept

Feature

Let's call these circles neurons!

What if we want to model a non-linear relationship?

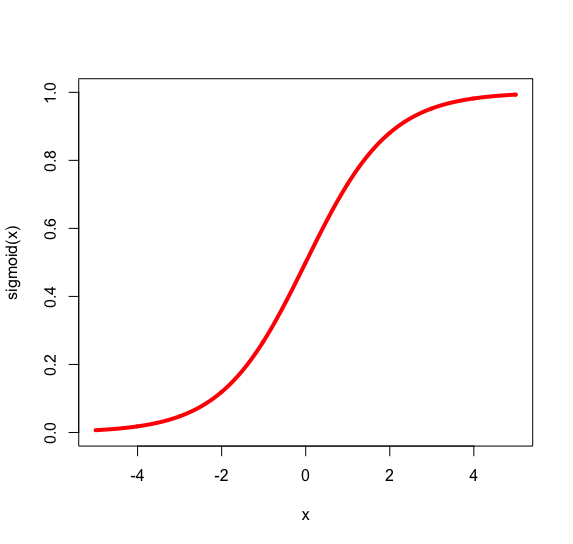

Thresholding

We call the weighted sum of inputs the activation of the neuron. The non-linear transformation is called the activation function.
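A single neuron can be sketched in a few lines of Python. This is an illustrative sketch, not a reference implementation: the function names, the example weights and the choice of a step function and a sigmoid as activation functions are all assumptions made for the example.

```python
import math

def step(z):
    """Thresholding: output 1.0 if the activation is positive, else 0.0."""
    return 1.0 if z > 0 else 0.0

def sigmoid(z):
    """A smooth alternative to thresholding that squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation_fn):
    """Weighted sum of the inputs (the activation), then a non-linear transformation."""
    activation = bias + sum(w * x for w, x in zip(weights, inputs))
    return activation_fn(activation)

# Hypothetical inputs and weights, chosen only for illustration.
inputs = [1.0, 2.0]
weights = [0.5, -0.25]
bias = 0.1

print(neuron(inputs, weights, bias, step))
print(neuron(inputs, weights, bias, sigmoid))
```

With the identity function as `activation_fn`, the neuron reduces to the linear regression model: the bias plays the role of the intercept and the weights play the role of the slope.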

Neural network!

weights

weights

Composition of non-linear functions!
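The idea of a network as a composition of functions can be sketched as follows. The example assumes a small network with two inputs, one hidden layer of three tanh neurons and a single linear output. The layer sizes, the choice of tanh, the parameter names `W1`, `b1`, `W2`, `b2` and all numbers are assumptions for illustration.

```python
import math

def linear_layer(inputs, weights, biases):
    """Each row of weights feeds one neuron: bias plus weighted sum of the inputs."""
    return [b + sum(w * x for w, x in zip(row, inputs))
            for row, b in zip(weights, biases)]

def tanh_layer(inputs, weights, biases):
    """A linear layer followed by the tanh activation function."""
    return [math.tanh(z) for z in linear_layer(inputs, weights, biases)]

def network(x, params):
    """Composition: output = linear_layer(tanh_layer(x))."""
    hidden = tanh_layer(x, params["W1"], params["b1"])
    return linear_layer(hidden, params["W2"], params["b2"])

# Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output.
params = {
    "W1": [[0.5, -0.3], [0.8, 0.1], [-0.6, 0.9]],  # first set of weights
    "b1": [0.0, 0.1, -0.1],
    "W2": [[1.0, -1.0, 0.5]],                      # second set of weights
    "b2": [0.2],
}

output = network([1.0, 2.0], params)
print(output)
```

Adding more layers means nesting more function calls inside `network`, which is all that "deeper" means here.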

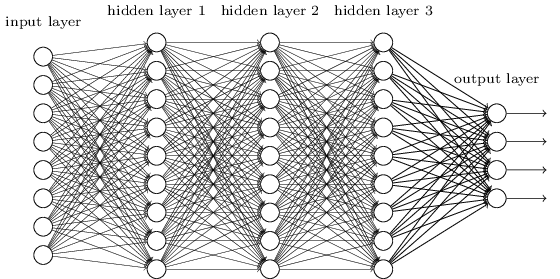

Network architecture

The number of neurons in a layer is its width.

Many layers result in a deep model, which is why we call it deep learning.

Image credit: KDNuggets

The number of layers in a network is its depth.

How does a neural network learn?

1. Backpropagation

2. Gradient descent

1. Backpropagation

The backprop algorithm calculates the gradient of the cost function with respect to the network's weights using the chain rule!

Check out 3blue1brown on YouTube

2. Gradient descent

Iteratively nudge the weights in the direction where the cost decreases the most, i.e. the negative of the gradient calculated in step 1.

Source: Tango with Code
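The two steps can be sketched together in plain Python. This is a minimal illustration under stated assumptions, not how a deep learning library implements it. The network has one input, a hidden layer of `H` tanh neurons and one linear output, the cost is the mean squared error, and the toy data, learning rate `lr` and number of iterations are made up.

```python
import math
import random

def forward(params, x):
    """1 input -> H tanh neurons -> 1 linear output. Returns hidden values and prediction."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(w * x + b) for w, b in zip(w1, b1)]
    y_hat = b2 + sum(v * h for v, h in zip(w2, hidden))
    return hidden, y_hat

def cost(params, data):
    """Mean squared error over all (x, y) pairs."""
    return sum((y - forward(params, x)[1]) ** 2 for x, y in data) / len(data)

def backprop(params, data):
    """Step 1: gradient of the cost wrt. every weight, via the chain rule."""
    w1, b1, w2, b2 = params
    n = len(data)
    g_w1, g_b1, g_w2, g_b2 = [0.0] * len(w1), [0.0] * len(b1), [0.0] * len(w2), 0.0
    for x, y in data:
        hidden, y_hat = forward(params, x)
        d_yhat = 2.0 * (y_hat - y) / n                 # d cost / d y_hat
        g_b2 += d_yhat
        for j, h in enumerate(hidden):
            g_w2[j] += d_yhat * h                      # y_hat depends on w2[j] through h
            d_pre = d_yhat * w2[j] * (1.0 - h * h)     # tanh'(z) = 1 - tanh(z)^2
            g_w1[j] += d_pre * x
            g_b1[j] += d_pre
    return g_w1, g_b1, g_w2, g_b2

def gradient_step(params, grads, lr):
    """Step 2: nudge every weight along the negative gradient."""
    w1, b1, w2, b2 = params
    g_w1, g_b1, g_w2, g_b2 = grads
    return ([w - lr * g for w, g in zip(w1, g_w1)],
            [b - lr * g for b, g in zip(b1, g_b1)],
            [w - lr * g for w, g in zip(w2, g_w2)],
            b2 - lr * g_b2)

random.seed(0)
H = 4  # width of the hidden layer (an arbitrary choice)
params = ([random.uniform(-1, 1) for _ in range(H)],
          [random.uniform(-1, 1) for _ in range(H)],
          [random.uniform(-1, 1) for _ in range(H)],
          0.0)
data = [(i / 10.0, (i / 10.0) ** 2) for i in range(-10, 11)]  # toy non-linear target y = x^2

initial_cost = cost(params, data)
for _ in range(1000):
    params = gradient_step(params, backprop(params, data), lr=0.05)
final_cost = cost(params, data)
print(f"cost before training: {initial_cost:.4f}, after: {final_cost:.4f}")
```

In practice, libraries compute the gradients automatically, but the loop has the same shape: forward pass, backpropagation, small step against the gradient, repeat.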

Deep Learning models

Convolutional neural networks

Image recognition and object detection:

https://github.com/shaoanlu/Udacity-SDCND-Vehicle-Detection

Recurrent neural networks

Natural language processing:

http://karpathy.github.io/2015/05/21/rnn-effectiveness/

Reinforcement learning

Building software agents that learn by reacting to the environment:

From the documentary AlphaGo

Google DeepMind

Generative adversarial networks

Pairs of networks that generate images and other types of data:

https://github.com/junyanz/CycleGAN