$ Who am I

Hey, I am Sheeraz

I am the CEO of Hacking Laymen

I am a network penetration tester

I am a web developer and designer

BCA second-year student at BSSS

“Secrets have a cost, they are not for free.”

― The Amazing Spider-Man

What's Spidering?

Web spiders are among the most powerful and useful tools on the internet, and they are built with both good and bad intentions. A spider serves one major function: it crawls a website one page at a time (as Google's crawler does), gathering and storing relevant information such as email addresses, meta tags, hidden form data, URL information, and links. The spider then follows all the links on that page, collecting relevant information from each subsequent page, and so on. Before you know it, the spider has crawled thousands of links and pages, gathering bits of information and storing them in a database. This web of paths is where the term 'spider' comes from.
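The crawl loop described above can be sketched in a few lines of Python. This is a minimal sketch, not a production spider: `crawl`, `LinkParser` and the injected `fetch` callable are illustrative names, and `fetch` is assumed to return a page's HTML (or `None`) so the example runs offline. In a real engagement `fetch` would wrap an HTTP client and only ever be pointed at targets you are authorised to test.

```python
import re
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse

# Deliberately loose pattern for harvesting e-mail addresses from page text.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class LinkParser(HTMLParser):
    """Collects href, src and action attribute values from one HTML page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src", "action") and value:
                self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl that stays on start_url's host.

    fetch(url) must return the page's HTML as a string, or None on failure.
    Returns (site_map, emails): URLs fetched in crawl order and addresses seen.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    site_map, emails = [], set()
    while queue and len(site_map) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        site_map.append(url)
        emails.update(EMAIL_RE.findall(html))
        parser = LinkParser()
        parser.feed(html)
        for link in parser.links:
            # Resolve relative links and drop #fragments before queueing.
            absolute, _ = urldefrag(urljoin(url, link))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return site_map, emails


if __name__ == "__main__":
    # A two-page fake site stands in for real HTTP requests.
    pages = {
        "http://eis/": '<a href="/about">About</a> <a href="http://other/">Off-site</a>',
        "http://eis/about": '<a href="/">Home</a> Contact: admin@eis.example',
    }
    print(crawl("http://eis/", pages.get))
```

Breadth-first order means pages close to the start URL are mapped first, and the `seen` set stops the spider from looping forever on pages that link back to each other.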

How Spidering Works

- Manual Browsing

- Advanced Tools to do spidering for you

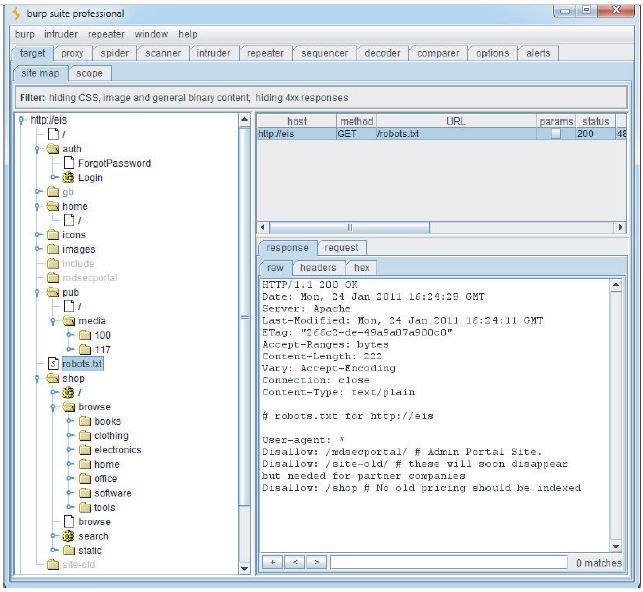

- Robots.txt

- Finding Directories

- Versions
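robots.txt lists paths the site owner asks crawlers to stay away from, which often points straight at interesting directories. A minimal sketch of pulling those paths out, assuming you have already downloaded the file's text (`disallowed_paths` is a hypothetical helper name):

```python
def disallowed_paths(robots_txt):
    """Return the unique Disallow paths in a robots.txt body, in file order."""
    paths = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow":
            path = value.strip()
            # An empty "Disallow:" means "allow everything", so it reveals nothing.
            if path and path not in paths:
                paths.append(path)
    return paths


if __name__ == "__main__":
    sample = "User-agent: *\nDisallow: /admin/\nDisallow: /backup/  # old copies\nDisallow:\n"
    print(disallowed_paths(sample))
```

Each returned path is a candidate to request manually or to feed into a spider as an extra starting point.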

Tools For Spidering

- Burp Suite

- Zed Attack Proxy

- WebScarab

- CAT

Where Spidering Tools Fail :(

- The spider might request /logout and end its own session

- The spider might not get through complex JavaScript code, missing links that are generated dynamically

- The spider might submit invalid input to a sensitive function, and the application may defensively terminate the session

- The application may use per-page tokens, which can break spidering because the spider does not have a valid token for each page

- The sequence of requests the spider sends may cause the entire session to be terminated if the application monitors request order
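Several of these failures come from the spider blindly requesting whatever it finds. One mitigation is to check each URL against a denylist before requesting it. This is a sketch under assumptions: `should_request` and the patterns in `RISKY_PATTERNS` are hypothetical and would need tuning to the target's own naming.

```python
import re
from urllib.parse import urlparse

# Hypothetical patterns for session-ending or destructive actions.
RISKY_PATTERNS = [
    re.compile(p, re.IGNORECASE)
    for p in (r"log_?out", r"sign_?out", r"delete", r"remove", r"reset")
]


def should_request(url):
    """Return False if the URL's path or query suggests a risky action."""
    parts = urlparse(url)
    target = parts.path + "?" + parts.query
    return not any(p.search(target) for p in RISKY_PATTERNS)


if __name__ == "__main__":
    for u in ("http://eis/account", "http://eis/Logout", "http://eis/main?action=logout"):
        print(u, should_request(u))
```

The list errs on the side of skipping; a skipped URL still belongs in the site map, it just is not requested automatically.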

What a typical spider looks like

User-Directed Spidering

This is a more sophisticated and controlled technique that is usually preferable to automated spidering. Here, the user walks through the application in the normal way using a standard browser, attempting to navigate through all the application’s functionality. As he does so, the resulting traffic is passed through a tool combining an intercepting proxy and spider, which monitors all requests and responses. The tool builds a map of the application, incorporating all the URLs visited by the browser.

Benefits of User-Directed Spidering

- Where the application uses unusual or complex mechanisms for navigation, the user can follow these using a browser in the normal way. Any functions and content accessed by the user are processed by the proxy/spider tool.

- The user controls all data submitted to the application and can ensure that data validation requirements are met.

- The user can log in to the application in the usual way and ensure that the authenticated session remains active throughout the mapping process. If any action performed results in session termination, the user can log in again and continue browsing.

- Any dangerous functionality, such as deleteUser.jsp, is fully enumerated and incorporated into the proxy’s site map, because links to it will be parsed out of the application’s responses. But the user can use discretion in deciding which functions to actually request or carry out.
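The last point can be made concrete. In this sketch, proxied traffic is assumed to be available as `(requested_url, response_html)` pairs (the format and `build_site_map` are hypothetical, not any specific tool's API). It separates URLs the browser actually requested from URLs that were only seen as links, such as a deleteUser.jsp link that the user chose not to click.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class _Links(HTMLParser):
    """Collects href and action attribute values from one response."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "action") and value:
                self.found.append(value)


def build_site_map(traffic):
    """Return (requested, unrequested) URL sets from (url, html) pairs."""
    requested, linked = set(), set()
    for url, html in traffic:
        requested.add(url)
        parser = _Links()
        parser.feed(html)
        for link in parser.found:
            linked.add(urldefrag(urljoin(url, link))[0])
    return requested, linked - requested


if __name__ == "__main__":
    traffic = [("http://eis/admin/users.jsp",
                '<a href="deleteUser.jsp?id=1">Delete</a> <a href="users.jsp">Refresh</a>')]
    print(build_site_map(traffic))
```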

Hidden Content

- Backup copies of live files. In the case of dynamic pages, their file extension may have changed to one that is not mapped as executable, enabling you to review the page source for vulnerabilities that can then be exploited on the live page.

- Backup archives that contain a full snapshot of files within (or indeed outside) the web root, possibly enabling you to easily identify all content and functionality within the application.

- New functionality that has been deployed to the server for testing but not yet linked from the main application.

- Default application functionality in an off-the-shelf application that has been superficially hidden from the user but is still present on the server.

- Old versions of files that have not been removed from the server. In the case of dynamic pages, these may contain vulnerabilities that have been fixed in the current version but that can still be exploited in the old version.


- Configuration and include files containing sensitive data such as database credentials.

- Source files from which the live application’s functionality has been compiled.

- Comments in source code that in extreme cases may contain information such as usernames and passwords but that more likely provide information about the state of the application. Key phrases such as “test this function” or something similar are strong indicators of where to start hunting for vulnerabilities.

- Log files that may contain sensitive information such as valid usernames, session tokens, URLs visited, and actions performed.
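Comments that leak into HTML responses are the easiest of these to harvest. A minimal sketch that pulls HTML comments out of a page and flags key phrases; `KEY_PHRASES` and `interesting_comments` are hypothetical, and server-side source comments only show up this way if the source itself has leaked.

```python
from html.parser import HTMLParser

# Hypothetical key phrases; extend with whatever the target's developers write.
KEY_PHRASES = ("test", "todo", "fixme", "password", "debug")


class CommentCollector(HTMLParser):
    """Stores the text of every <!-- ... --> comment in a page."""

    def __init__(self):
        super().__init__()
        self.comments = []

    def handle_comment(self, data):
        self.comments.append(data.strip())


def interesting_comments(html):
    """Return (comment, matched_phrases) for comments containing a key phrase."""
    collector = CommentCollector()
    collector.feed(html)
    results = []
    for comment in collector.comments:
        hits = [p for p in KEY_PHRASES if p in comment.lower()]
        if hits:
            results.append((comment, hits))
    return results


if __name__ == "__main__":
    print(interesting_comments("<p>Hi</p><!-- TODO: test this function --><!-- layout v2 -->"))
```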

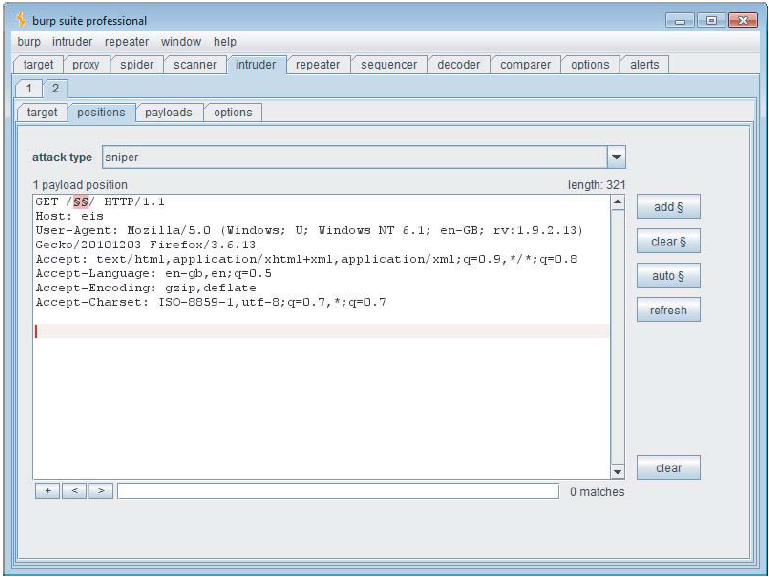

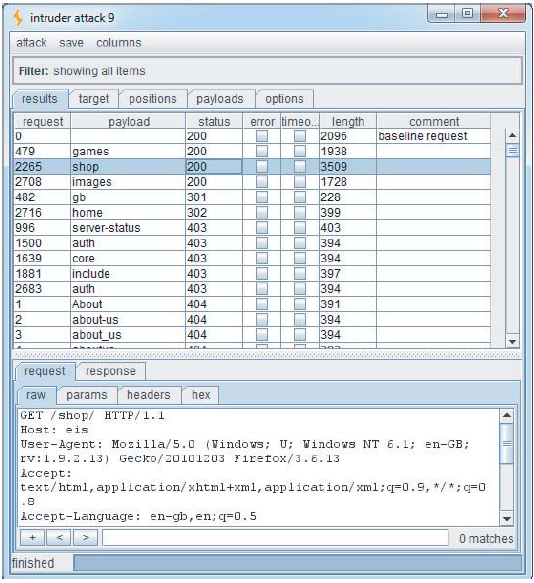

Brute Force

- Brute-forcing Directories/Files

False Alarm

- 200 doesn't always mean OK
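Many applications answer a request for a missing resource with a custom "not found" page and a 200 status. One common way to cope, sketched here as an assumption rather than any particular tool's method, is to request a name that almost certainly does not exist, keep that response as a baseline, and discard hits that look like it. `fetch(url) -> (status, body)` is a hypothetical callable, and the 0.9 similarity threshold is an arbitrary starting point.

```python
import difflib
import uuid


def not_found_baseline(fetch, base_url):
    """Request a random (almost certainly missing) path and return its response."""
    return fetch(base_url.rstrip("/") + "/" + uuid.uuid4().hex)


def looks_real(response, baseline, threshold=0.9):
    """Decide whether a (status, body) response is a genuine hit."""
    status, body = response
    base_status, base_body = baseline
    if status == 404:
        return False
    if status != base_status:
        return True
    # Same status as the not-found page: compare the bodies instead.
    return difflib.SequenceMatcher(None, body, base_body).ratio() < threshold


if __name__ == "__main__":
    def fake_fetch(url):
        if url == "http://eis/admin":
            return (200, "<h1>Admin console</h1><form>login</form>")
        return (200, "<h1>Sorry, page not found</h1>")

    baseline = not_found_baseline(fake_fetch, "http://eis/")
    print(looks_real(fake_fetch("http://eis/admin"), baseline))
```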

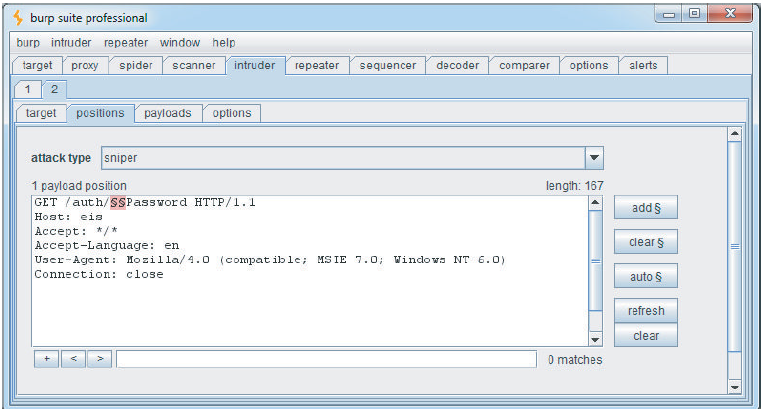

Selective Brute Force

http://eis/auth/AddPassword

http://eis/auth/ForgotPassword

http://eis/auth/GetPassword

http://eis/auth/ResetPassword

http://eis/auth/RetrievePassword

http://eis/auth/UpdatePassword
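These URLs follow a verb + noun pattern, so once a couple of them turn up, the others are worth guessing. A sketch of that idea; `VERBS`, `split_verb` and `candidates` are hypothetical, and the verb list should grow from names actually observed in the application.

```python
import re

# Hypothetical verb list; extend it from names seen in the target.
VERBS = ["Add", "Change", "Create", "Delete", "Edit", "Forgot", "Get",
         "Reset", "Retrieve", "Set", "Update", "View"]


def split_verb(name):
    """Split 'ResetPassword' into ('Reset', 'Password'); None if no match."""
    m = re.match(r"([A-Z][a-z]+)([A-Z]\w*)$", name)
    return (m.group(1), m.group(2)) if m else None


def candidates(base, observed):
    """Pair every verb with every observed noun, skipping names already known."""
    nouns = {parts[1] for parts in map(split_verb, observed) if parts}
    known = set(observed)
    return sorted(base + verb + noun
                  for verb in VERBS for noun in nouns
                  if verb + noun not in known)


if __name__ == "__main__":
    for url in candidates("http://eis/auth/", ["AddPassword", "ForgotPassword"]):
        print(url)
```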

How to be Bruda (brute force yoda)

- Brute force using built-in lists of common file and directory names

- Dynamic generation of wordlists based on resource names observed within the target application

- Extrapolation of resource names containing numbers and dates

- Testing for alternative file extensions on identified resources

- Spidering from discovered content

- Automatic fingerprinting of valid and invalid responses to reduce false positives
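Two of these techniques, alternative extensions and number extrapolation, are simple string manipulation. A sketch under assumptions: the suffix list is a hypothetical, non-exhaustive choice, and `numeric_neighbours` handles plain numbers only, not dates.

```python
import posixpath
import re

# Hypothetical suffixes for backup or renamed copies; not exhaustive.
BACKUP_SUFFIXES = [".bak", ".old", ".orig", ".txt", "~"]


def extension_variants(path):
    """Alternate names for a discovered file, e.g. editor backups and renamed copies."""
    root, ext = posixpath.splitext(path)
    variants = [path + s for s in BACKUP_SUFFIXES]
    if ext:
        variants += [root + s for s in (".bak", ".old")]
    return variants


def numeric_neighbours(path, spread=2):
    """For a name ending in a number (before any extension), try nearby numbers."""
    m = re.search(r"(\d+)(\.\w+)?$", path)
    if not m:
        return []
    n, width = int(m.group(1)), len(m.group(1))
    prefix, suffix = path[:m.start(1)], m.group(2) or ""
    # Keep the original zero-padding width, e.g. page01 -> page02.
    return [f"{prefix}{i:0{width}d}{suffix}"
            for i in range(max(0, n - spread), n + spread + 1) if i != n]


if __name__ == "__main__":
    print(extension_variants("/app/login.php"))
    print(numeric_neighbours("/reports/report2009.pdf"))
```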

The Internet is your friend

- Search engines such as Google, Yahoo, and MSN. These maintain a fine-grained index of all content that their powerful spiders have discovered, and also cached copies of much of this content, which persist even after the original content has been removed.

- Web archives such as the WayBack Machine, located at www.archive.org. These archives maintain a historical record of a large number of websites. In many cases they allow users to browse a fully replicated snapshot of a given site as it existed at various dates going back several years.
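The Wayback Machine can also be queried programmatically through its CDX server. The sketch below is based on our understanding of that public API (the `url`, `output`, `fl` and `collapse` parameters, and JSON output whose first row holds field names); check the CDX documentation before relying on it. It only builds the query URL and parses a response you have already fetched.

```python
import json
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(domain):
    """Build a CDX query listing archived URLs under a domain (assumed parameters)."""
    params = {
        "url": domain + "/*",   # trailing wildcard asks for a prefix match
        "output": "json",
        "fl": "original",       # return only the original URL field
        "collapse": "urlkey",   # one row per distinct URL
    }
    return CDX_ENDPOINT + "?" + urlencode(params)


def archived_urls(cdx_json_text):
    """Parse CDX JSON output: skip the header row, return the URL column."""
    rows = json.loads(cdx_json_text)
    return [row[0] for row in rows[1:]]


if __name__ == "__main__":
    print(cdx_query_url("example.com"))
```

Archived URLs that no longer appear in the live site map are good candidates for old or forgotten content that may still be on the server.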

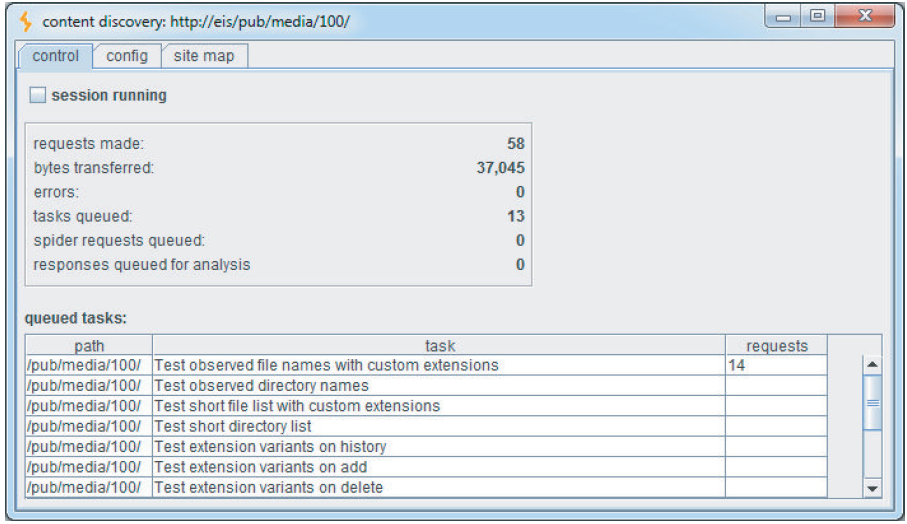

DirBuster


Leveraging the Web Server

Vulnerabilities may exist at the web server layer that enable you to discover content and functionality that are not linked within the web application itself. For example, bugs within web server software can allow an attacker to list the contents of directories or obtain the raw source for dynamic server-executable pages.

Furthermore, many web applications incorporate common third-party components for standard functionality, such as shopping carts, discussion forums, or content management system (CMS) functions. These are often installed to a fixed location relative to the web root or to the application’s starting directory.

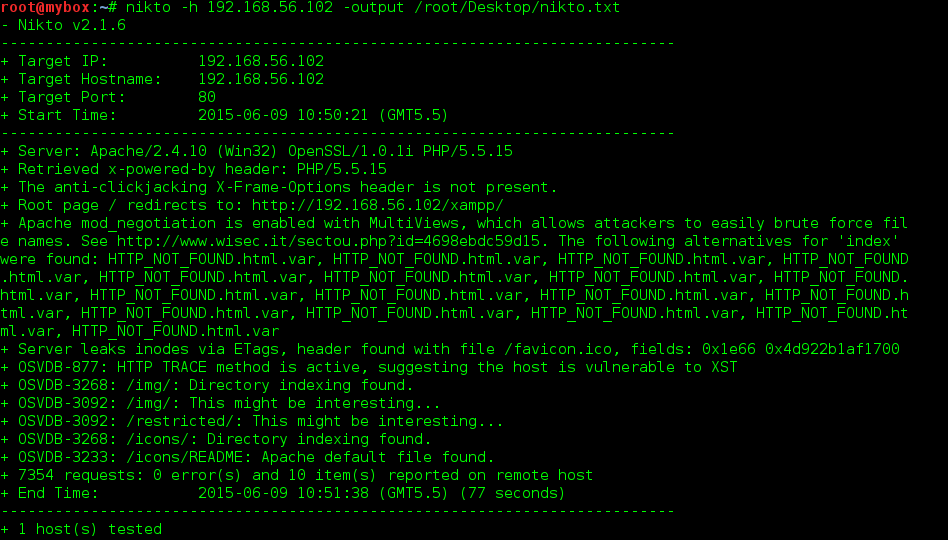

Tools for Web Server Spidering

- Nikto

- Wikto

- Microsoft Baseline Security Analyzer (MBSA)

Nikto at a Glance

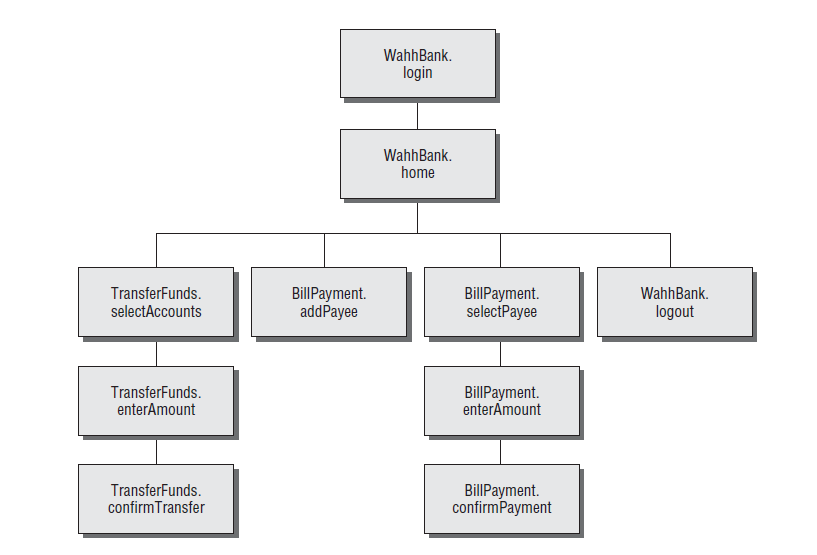

Functional Paths

Discovering Hidden Parameters

DEBUG=TRUE

An application that honors a hidden parameter like this may:

- turn off certain input validation checks

- allow the user to bypass certain access controls

- display verbose debug information in its response
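Hidden parameters like this are found by guessing. A sketch that appends every combination of a few likely names and values to a known URL; the name and value lists are common guesses (an assumption, not an exhaustive list), and `debug_param_variants` is a hypothetical helper. Try case variants such as DEBUG=TRUE too, and compare each response against the unmodified request.

```python
from itertools import product
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Common guesses for debug-style parameters; these lists are assumptions.
NAMES = ["debug", "test", "hide", "source"]
VALUES = ["true", "yes", "on", "1"]


def debug_param_variants(url):
    """Yield copies of url with one extra name=value pair in the query string."""
    parts = urlsplit(url)
    existing = parse_qsl(parts.query, keep_blank_values=True)
    for name, value in product(NAMES, VALUES):
        query = urlencode(existing + [(name, value)])
        yield urlunsplit(parts._replace(query=query))


if __name__ == "__main__":
    for variant in debug_param_variants("http://eis/app?page=home"):
        print(variant)
```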

Analyzing the Application

- The application’s core functionality — the actions that it can be leveraged to perform when used as intended

- Other, more peripheral application behaviour, including off-site links, error messages, administrative and logging functions, and the use of redirects

- The core security mechanisms and how they function — in particular, management of session state, access controls, and authentication mechanisms and supporting logic (user registration, password change, and account recovery)


- All the different locations at which the application processes user-supplied input — every URL, query string parameter, item of POST data, and cookie

- The technologies employed on the client side, including forms, client-side scripts, thick-client components (Java applets, ActiveX controls, and Flash), and cookies

- The technologies employed on the server side, including static and dynamic pages, the types of request parameters employed, the use of SSL, web server software, interaction with databases, e-mail systems, and other back-end components

- Any other details that may be gleaned about the internal structure and functionality of the server-side application — the mechanisms it uses behind the scenes to deliver the functionality and behavior that are visible from the client perspective

Identifying Entry Points for User Input

The majority of ways in which the application captures user input for server-side processing should be obvious when reviewing the HTTP requests that are generated as you walk through the application’s functionality.


- Every URL string up to the query string marker

- Every parameter submitted within the URL query string

- Every parameter submitted within the body of a POST request

- Every cookie

- Every other HTTP header that the application might process — in particular, the User-Agent, Referer, Accept, Accept-Language, and Host headers
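The checklist above can be applied mechanically to a captured request. A sketch that lists every input point in a raw HTTP/1.1 request; `entry_points` is a hypothetical helper, and it only understands `application/x-www-form-urlencoded` bodies (JSON, XML and multipart bodies are not parsed).

```python
from http.cookies import SimpleCookie
from urllib.parse import parse_qsl, urlsplit

# Headers singled out above as ones the application is likely to process.
INTERESTING_HEADERS = {"user-agent", "referer", "accept", "accept-language", "host"}


def entry_points(raw_request):
    """List (location, name, value) input points in a raw HTTP request."""
    head, _, body = raw_request.partition("\r\n\r\n")
    request_line, *header_lines = head.split("\r\n")
    method, target, _version = request_line.split(" ", 2)
    url = urlsplit(target)
    segments = [s for s in url.path.split("/") if s]
    points = [("path", str(i), seg) for i, seg in enumerate(segments)]
    points += [("query", k, v) for k, v in parse_qsl(url.query, keep_blank_values=True)]
    for line in header_lines:
        name, _, value = line.partition(":")
        name, value = name.strip(), value.strip()
        if name.lower() == "cookie":
            cookie = SimpleCookie()
            cookie.load(value)
            points += [("cookie", k, m.value) for k, m in cookie.items()]
        elif name.lower() in INTERESTING_HEADERS:
            points.append(("header", name, value))
    if method == "POST":
        points += [("body", k, v) for k, v in parse_qsl(body, keep_blank_values=True)]
    return points


if __name__ == "__main__":
    raw = ("POST /shop/cart?item=42 HTTP/1.1\r\nHost: eis\r\nUser-Agent: test\r\n"
           "Cookie: SESSID=abc; lang=en\r\n"
           "Content-Type: application/x-www-form-urlencoded\r\n\r\nqty=1&note=hi")
    for point in entry_points(raw):
        print(point)
```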

URL File Paths

- In applications that use REST-style URLs, the parts of the URL that precede the query string can in fact function as data parameters and are just as important as entry points for user input as the query string itself.

http://eis/shop/browse/electronics/iPhone3G/
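In a URL like this, each path segment should be tested the way a query parameter would be. A sketch that produces one test URL per segment, with that segment replaced by a payload; `path_segment_variants` is a hypothetical helper, and the payload is URL-encoded so it survives as a single segment.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def path_segment_variants(url, payload):
    """Return one URL per path segment, with that segment replaced by payload."""
    parts = urlsplit(url)
    segments = parts.path.split("/")
    variants = []
    for i, seg in enumerate(segments):
        if not seg:
            continue  # skip the empty pieces around leading/trailing slashes
        mutated = segments[:i] + [quote(payload, safe="")] + segments[i + 1:]
        variants.append(urlunsplit(parts._replace(path="/".join(mutated))))
    return variants


if __name__ == "__main__":
    for v in path_segment_variants("http://eis/shop/browse/electronics/iPhone3G/", "'"):
        print(v)
```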

Identifying Server-Side Technologies

- Banner Grabbing

- HTTP Fingerprinting

- File Extensions

- Directory Names

- Session Tokens

Banner Grabbing

- Server: Apache/1.3.31 (Unix) mod_gzip/1.3.26.1a mod_auth_passthrough/1.8 mod_log_bytes/1.2 mod_bwlimited/1.4 PHP/4.3.9 FrontPage/5.0.2.2634a mod_ssl/2.8.20 OpenSSL/0.9.7a

- Templates used to build HTML pages

- Custom HTTP headers

- URL query string parameters
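A verbose Server banner like the one above can be split into product/version pairs, which you can then check against known vulnerabilities. A minimal sketch (`parse_server_banner` is a hypothetical helper); banners can be altered or suppressed by administrators, so treat the result as a hint to confirm with HTTP fingerprinting.

```python
import re

# product/version tokens such as "Apache/1.3.31" or "mod_ssl/2.8.20".
TOKEN = re.compile(r"([A-Za-z][\w.-]*)/([\w.]+)")


def parse_server_banner(banner):
    """Map each product in a Server header to its advertised version."""
    return dict(TOKEN.findall(banner))


if __name__ == "__main__":
    banner = ("Server: Apache/1.3.31 (Unix) mod_gzip/1.3.26.1a mod_auth_passthrough/1.8 "
              "mod_log_bytes/1.2 mod_bwlimited/1.4 PHP/4.3.9 FrontPage/5.0.2.2634a "
              "mod_ssl/2.8.20 OpenSSL/0.9.7a")
    for product, version in parse_server_banner(banner).items():
        print(product, version)
```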

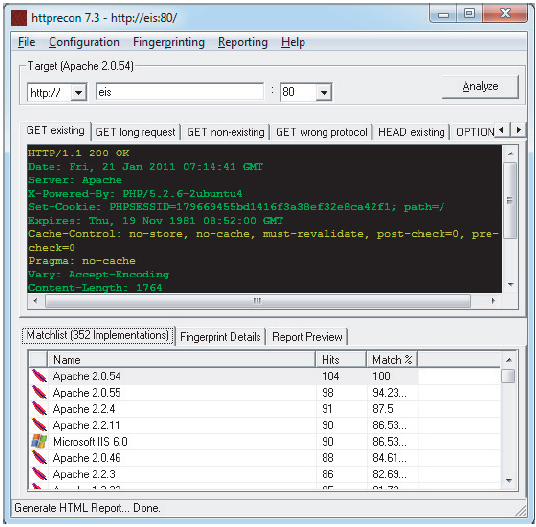

HTTP Fingerprinting

File Extensions

- asp — Microsoft Active Server Pages

- aspx — Microsoft ASP.NET

- jsp — Java Server Pages

- cfm — Cold Fusion

- php — The PHP language

- d2w — WebSphere

- pl — The Perl language

- py — The Python language

- dll — Usually compiled native code (C or C++)

- nsf or ntf — Lotus Domino

Directory Names

- servlet — Java servlets

- pls — Oracle Application Server PL/SQL gateway

- cfdocs or cfide — Cold Fusion

- SilverStream — The SilverStream web server

- WebObjects or {function}.woa — Apple WebObjects

- rails — Ruby on Rails

Session Tokens

- JSESSIONID — The Java Platform

- ASPSESSIONID — Microsoft IIS server

- ASP.NET_SessionId — Microsoft ASP.NET

- CFID/CFTOKEN — Cold Fusion

- PHPSESSID — PHP
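The three lookup lists (file extensions, directory names, session tokens) can be combined into one quick check per request. The tables below are transcribed from those lists; `technology_hints` is a hypothetical helper. It matches classic ASP cookies by prefix because their names carry a random suffix (for example ASPSESSIONIDQQGGGNCG).

```python
import posixpath
from urllib.parse import urlsplit

EXTENSIONS = {"asp": "Microsoft Active Server Pages", "aspx": "Microsoft ASP.NET",
              "jsp": "Java Server Pages", "cfm": "Cold Fusion", "php": "PHP",
              "d2w": "WebSphere", "pl": "Perl", "py": "Python",
              "dll": "Compiled native code (C or C++)",
              "nsf": "Lotus Domino", "ntf": "Lotus Domino"}
DIRECTORIES = {"servlet": "Java servlets",
               "pls": "Oracle Application Server PL/SQL gateway",
               "cfdocs": "Cold Fusion", "cfide": "Cold Fusion",
               "silverstream": "SilverStream web server",
               "webobjects": "Apple WebObjects", "rails": "Ruby on Rails"}
SESSION_COOKIES = {"JSESSIONID": "Java Platform", "ASP.NET_SessionId": "Microsoft ASP.NET",
                   "CFID": "Cold Fusion", "CFTOKEN": "Cold Fusion", "PHPSESSID": "PHP"}


def technology_hints(url, cookie_names=()):
    """Guess server-side technologies from a URL and the cookie names it set."""
    hints = set()
    path = urlsplit(url).path
    ext = posixpath.splitext(path)[1].lstrip(".").lower()
    if ext in EXTENSIONS:
        hints.add(EXTENSIONS[ext])
    for segment in path.lower().split("/"):
        if segment in DIRECTORIES:
            hints.add(DIRECTORIES[segment])
        elif segment.endswith(".woa"):
            hints.add("Apple WebObjects")
    for name in cookie_names:
        if name.upper().startswith("ASPSESSIONID"):
            hints.add("Microsoft IIS server")
        elif name in SESSION_COOKIES:
            hints.add(SESSION_COOKIES[name])
    return hints


if __name__ == "__main__":
    print(technology_hints("http://eis/servlet/Login.jsp", ["JSESSIONID"]))
```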

HTTP Headers

- HTTP headers such as Referer and User-Agent. These headers should always be considered possible entry points for input-based attacks.

- The IP address of the client’s network connection is typically available to applications via platform APIs. However, to handle cases where the application resides behind a load balancer or proxy, applications may use the IP address specified in the X-Forwarded-For request header if it is present. Developers may then mistakenly assume that the IP address value is untainted and process it in dangerous ways. By adding a suitably crafted X-Forwarded-For header, you may be able to deliver attacks such as SQL injection or persistent cross-site scripting.
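The safe-handling contrast shows what a tester is probing for. In this sketch, `client_ip_from_xff` is a hypothetical helper that accepts the left-most X-Forwarded-For entry only if it parses as an IP address. An application that instead stores or queries with the raw header value is the case where injection payloads get through. Even a valid-looking address remains client-controlled and can be spoofed.

```python
import ipaddress


def client_ip_from_xff(header_value):
    """Return the left-most X-Forwarded-For entry if it is a valid IP, else None."""
    first = header_value.split(",")[0].strip()
    try:
        return str(ipaddress.ip_address(first))
    except ValueError:
        return None  # not an IP address: reject instead of passing it on


if __name__ == "__main__":
    for value in ("203.0.113.7, 10.0.0.1", "1' OR '1'='1", "<script>alert(1)</script>"):
        print(repr(value), client_ip_from_xff(value))
```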

Mapping the Attack Surface

- Client-side validation — Checks may not be replicated on the server

- Database interaction — SQL injection

- File uploading and downloading — Path traversal vulnerabilities, stored cross-site scripting

- Display of user-supplied data — Cross-site scripting

- Native code components or interaction — Buffer overflows

- Use of third-party application components — Known vulnerabilities

- Identifiable web server software — Common configuration weaknesses, known software bugs


- Dynamic redirects — Redirection and header injection attacks

- Social networking features — Username enumeration, stored cross-site scripting

- Login — Username enumeration, weak passwords, ability to use brute force

- Multistage login — Logic flaws

- Session state — Predictable tokens, insecure handling of tokens

- Access controls — Horizontal and vertical privilege escalation


- Off-site links — Leakage of query string parameters in the Referer header

- Interfaces to external systems — Shortcuts in the handling of sessions and/or access controls

- Error messages — Information leakage

- E-mail interaction — E-mail and/or command injection



- User impersonation functions — Privilege escalation

- Use of cleartext communications — Session hijacking, capture of credentials and other sensitive data

“No matter how buried it gets, or lost you feel, you must promise me, that you will hold on to hope and keep it alive. We have to be greater than what we suffer. My wish for you is to become hope. People need that.”

― Peter Parker, Spider-Man

Thank You ― Sheeraz Ali