Calibration class project

Sheng Long

12/03/24

What did I do?

🧭 I had three guiding questions:

- Scoring rules, (financial) incentives, and calibration --- how are these concepts related? What are some implied assumptions?

- e.g. are proper scoring rules better than non-proper scoring rules as payoff functions for participants? Do participants perceive/react to them in different ways?

- What kind of behavioral research has been done related to calibration?

- 📊 What are some existing ways to graphically communicate classification performance metrics, such as calibration? How effective are they for e.g., choosing classifiers?

Scoring rules, (financial) incentives, and calibration

Winkler, Robert L., et al. "Scoring rules and the evaluation of probabilities." Test 5 (1996): 1-60.

- How are these concepts related?

- Scoring rules evaluate the "goodness" of probability forecasts (against observed frequencies)

- Calibration is one potential attribute of "goodness"

- Strictly proper scoring rules provide an incentive for forecasters to report truthfully

- Forecasters must report truthfully to maximize expected score

- Strictly proper scoring rules imply that all other things being equal, a perfectly calibrated forecaster will earn a higher average score

... for probability assessment
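To make "must report truthfully to maximize expected score" concrete, here is a minimal sketch. It assumes a binary event and compares a quadratic (Brier-type) rule with a simple linear rule. The function names and the 0.7 belief are illustrative choices, not taken from Winkler et al.

```python
def expected_score(report, belief, rule):
    """Expected score when the event occurs with probability `belief`
    but the forecaster reports `report`."""
    return belief * rule(report, 1) + (1 - belief) * rule(report, 0)


def quadratic_rule(report, outcome):
    # Positively oriented Brier-type score (higher is better); strictly proper.
    return 1 - (report - outcome) ** 2


def linear_rule(report, outcome):
    # Pays the probability assigned to what actually happened; not proper.
    return report if outcome == 1 else 1 - report


def best_report(belief, rule, steps=100):
    """Grid search for the report that maximizes expected score."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda r: expected_score(r, belief, rule))


belief = 0.7  # hypothetical true belief
print(best_report(belief, quadratic_rule))  # 0.7: truthful reporting is optimal
print(best_report(belief, linear_rule))     # 1.0: exaggeration is optimal
```

Under the linear rule, a forecaster who believes 0.7 does best by reporting 1.0, which is why it is not a proper rule.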

Scoring rules, financial incentives, and calibration

- Calibration can be viewed as one attribute of "goodness" of probability forecasts

- Other attributes include:

- discrimination, or the degree to which the probabilities discriminate between occasions on which \(A\) occurs and occasions on which \(A\) does not occur

- resolution, or the degree to which conditional means \(E(x|p)\) differ from the base rate \(E(x)\)

- unconditional bias, or the degree to which the overall mean probability \(E(p)\) differs from the base rate
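These attributes can be computed from data. The sketch below assumes binary outcomes and groups forecasts by their exact probability value, which gives the standard decomposition of the Brier score (reliability minus resolution plus uncertainty). The function name and the toy data are hypothetical, and discrimination is not computed here.

```python
from collections import defaultdict


def forecast_attributes(probs, outcomes):
    """Calibration (reliability), resolution, and unconditional bias
    for probability forecasts `probs` of binary `outcomes`."""
    n = len(probs)
    base_rate = sum(outcomes) / n  # E(x)

    # Group outcomes by the forecast value that was issued.
    groups = defaultdict(list)
    for p, x in zip(probs, outcomes):
        groups[p].append(x)

    # Reliability: how far E(x|p) is from p (0 means perfectly calibrated).
    reliability = sum(
        len(xs) * (p - sum(xs) / len(xs)) ** 2 for p, xs in groups.items()
    ) / n
    # Resolution: how far E(x|p) is from the base rate E(x).
    resolution = sum(
        len(xs) * (sum(xs) / len(xs) - base_rate) ** 2 for xs in groups.values()
    ) / n
    uncertainty = base_rate * (1 - base_rate)
    bias = sum(probs) / n - base_rate  # E(p) - E(x)
    brier = sum((p - x) ** 2 for p, x in zip(probs, outcomes)) / n

    return {
        "reliability": reliability,
        "resolution": resolution,
        "uncertainty": uncertainty,
        "bias": bias,
        "brier": brier,
    }


# Toy data: forecasts of 0.2 and 0.8, five occasions each.
probs = [0.2] * 5 + [0.8] * 5
outcomes = [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]
attrs = forecast_attributes(probs, outcomes)
print(attrs)
```

With forecasts grouped this way, the Brier score equals reliability - resolution + uncertainty, so a forecaster can score well through calibration, resolution, or both.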

Scoring rules, financial incentives, and calibration

Camerer, Colin F., and Robin M. Hogarth. "The effects of financial incentives in experiments: A review and capital-labor-production framework." Journal of risk and uncertainty 19 (1999): 7-42.

- What are the effects of financial incentives in experiments?

- Camerer and Hogarth proposed a "capital-labor-production framework"

- Experiment participants come to experiments with knowledge and goals

- knowledge: cognitive capital (e.g. heuristics, perceptual skills)

- goals: some objective function to maximize (e.g. maximize monetary payoff while minimizing effort)

- Different tasks have different production requirements

- some tasks require attention and diligence, others require domain-specific knowledge

- Labor = effort

Scoring rules, financial incentives, and calibration

- Camerer and Hogarth proposed a "capital-labor-production framework" and reviewed 74 experimental papers in which the level of performance-based financial incentive given to subjects was varied

- They found that

- incentives sometimes improve performance, but often don't

- incentives improve performance in easy tasks that are effort-responsive

- incentives sometimes hurt when problems are too difficult or when thinking harder makes things worse

- incentives often don't affect mean performance but reduce variance in responses

Scoring rules, financial incentives, and calibration

- In the experimental papers surveyed by Camerer and Hogarth, the following papers utilize scoring rules as payoff functions to participants:

- Phillips and Edwards (1966)

- Grether (1980)

- Wright and Aboul-Ezz (1988)

- Wallsten, Budescu, and Zwick (1993)

Scoring rules, financial incentives, and calibration

- Wright and Aboul-Ezz (1988) --- Effects of Extrinsic Incentives on the Quality of Frequency Assessments

- Participants: 51 MBA students

- Task: estimate frequency distributions for 3 variables

- salaries, age, GMAT scores

- given a range for each variable, assign frequencies to "buckets"

Scoring rules, financial incentives, and calibration

- Wright and Aboul-Ezz (1988) --- Effects of Extrinsic Incentives on the Quality of Frequency Assessments

- Two incentive conditions

- non-performance contingent, flat rate ($3 each)

- flat rate $3 + additional amount contingent on relative accuracy of assessment (overall score = mean squared error over 27 frequency categories)

- They found that incentivized subjects had lower absolute errors in their probability assessments than subjects without incentives

- However, Camerer and Hogarth argue that there is a confound in their experiment design
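A mean-squared-error score over frequency categories can be sketched as follows. This only illustrates the scoring idea: the bucket values are made up, four buckets stand in for the 27 categories, and how the score was converted into a payment is not specified here.

```python
def mse_score(assessed, actual):
    """Mean squared error between assessed and actual bucket frequencies
    (lower is better)."""
    if len(assessed) != len(actual):
        raise ValueError("assessed and actual must have the same number of buckets")
    return sum((a - t) ** 2 for a, t in zip(assessed, actual)) / len(assessed)


# Hypothetical frequencies for one variable split into four buckets.
actual = [0.10, 0.30, 0.40, 0.20]
careful = [0.15, 0.30, 0.35, 0.20]   # close to the actual distribution
uniform = [0.25, 0.25, 0.25, 0.25]   # low-effort answer

print(mse_score(careful, actual))
print(mse_score(uniform, actual))
```

The careful assessment gets the lower (better) score, so a payment that decreases in this score rewards accuracy relative to other participants.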

Scoring rules, financial incentives, and calibration

- Wright and Aboul-Ezz (1988) --- Effects of Extrinsic Incentives on the Quality of Frequency Assessments

- The incentive is confounded with the use and explanation of the scoring rule (MSE)

- Separating the two would require four conditions:

- flat rate pay, no feedback

- flat rate pay, scoring rule feedback

- scoring rule pay, scoring rule feedback

- scoring rule pay, no feedback

Calibration-related behavioral research

- 1906: meteorologists are already interested in calibration

- 1950: Glenn Brier (meteorologist) proposed an approach to verify the accuracy of probabilistic forecasts (the Brier score)

- 1973: Subjective probability and its measurement

- 1982: Calibration of probabilities: The state of the art to 1980

- 1983: Encoding Subjective Probabilities: A Psychological and Psychometric Review

- 1991: Calibration and probability judgements: Conceptual and methodological issues

- ... much of the research on calibration is "dust-bowl empiricism"

- 2004: Perspectives on probability judgment calibration

- and many, many more papers and stylized facts

- e.g. hard-easy effect --- people tend to be over-confident on hard questions but under-confident on easy questions
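One common way to quantify over- and under-confidence is mean stated confidence minus the proportion of correct answers. The sketch below uses that measure on made-up responses to show what a hard-easy pattern looks like. The data and the function name are hypothetical.

```python
def overconfidence(confidences, correct):
    """Mean stated confidence minus proportion correct.
    Positive means over-confident, negative means under-confident."""
    n = len(confidences)
    return sum(confidences) / n - sum(correct) / n


# Hypothetical responses: same stated confidence, different accuracy.
confidences = [0.8, 0.7, 0.9, 0.8]
hard_correct = [1, 0, 0, 1]   # hard questions: only half answered correctly
easy_correct = [1, 1, 1, 1]   # easy questions: all answered correctly

hard_gap = overconfidence(confidences, hard_correct)
easy_gap = overconfidence(confidences, easy_correct)
print(hard_gap)  # positive: over-confident on hard questions
print(easy_gap)  # negative: under-confident on easy questions
```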

Calibration-related behavioral research

- Some concluding thoughts:

- Huge and diverse literature (statistics, meteorology, decision/risk analysis, psychology) but not much cross-fertilization

- With behavioral research on probability judgments, one cannot escape the deeply entwined normative and descriptive aspects.

- From a normative perspective:

- How should information about uncertainty be conveyed in a most accurate, coherent, and efficient way? How do we assess whether such criteria have been satisfied?

- From a descriptive perspective:

- How do people respond to probability judgments/forecasts?

- Should scoring rules be designed around known cognitive biases or try to correct for them?

Philosophical tangent: subjective probability vs. observed frequency

Graphs of classification performance metrics

- Receiver Operating Characteristic (ROC)

- Area under the curve (AUC)

- Precision-Recall plot

- Calibration plot

ROC, AUC, and precision-recall plots are more related to discrimination, i.e., the ability to distinguish between different outcomes.

Calibration plots are about predictions agreeing with actual rates of outcomes.

Lindhiem, Oliver, et al. "The importance of calibration in clinical psychology." Assessment 27.4 (2020): 840-854.

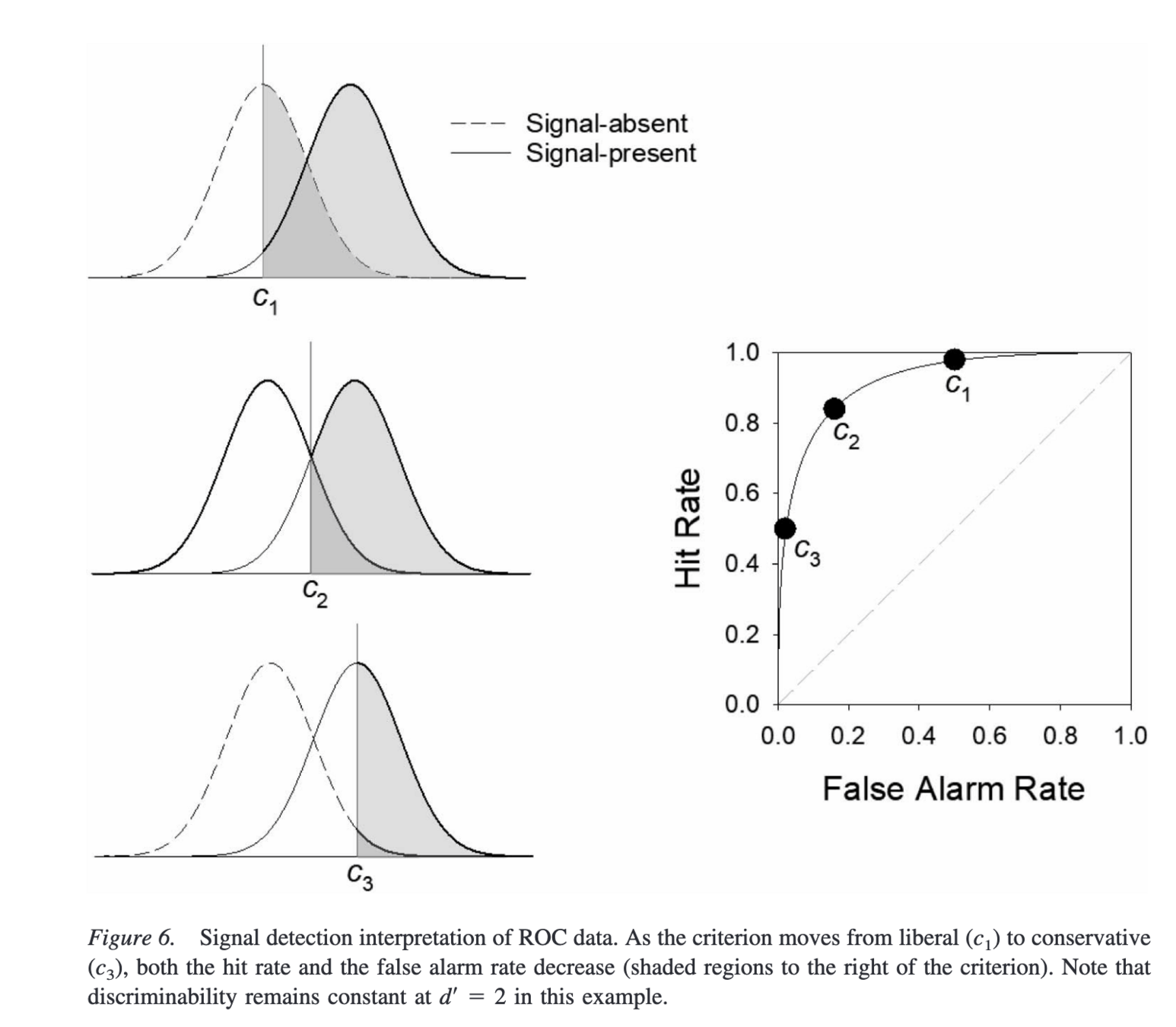

ROC curve

- \(x\): false positive rate

- \(y\): true positive rate

| | Predicted positive | Predicted negative |
|---|---|---|
| Actual positive | True positive | False negative |
| Actual negative | False positive | True negative |

Confusion/error matrix
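The ROC curve comes from sweeping a decision threshold and recomputing the confusion matrix each time. A minimal sketch, assuming binary labels and that scores at or above the threshold count as predicted positive; the scores and labels are made up.

```python
def confusion_counts(scores, labels, threshold):
    """Return (TP, FP, FN, TN) when predicting positive for score >= threshold."""
    tp = fp = fn = tn = 0
    for s, y in zip(scores, labels):
        predicted_positive = s >= threshold
        if predicted_positive and y == 1:
            tp += 1
        elif predicted_positive:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def roc_points(scores, labels):
    """(false positive rate, true positive rate) for each threshold,
    from the strictest threshold to the most lenient."""
    thresholds = [float("inf")] + sorted(set(scores), reverse=True)
    points = []
    for t in thresholds:
        tp, fp, fn, tn = confusion_counts(scores, labels, t)
        points.append((fp / (fp + tn), tp / (tp + fn)))
    return points


def area_under_curve(points):
    """Trapezoidal area under the ROC points."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:])
    )


# Hypothetical classifier scores and true labels.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
labels = [1, 1, 0, 1, 0, 0]
points = roc_points(scores, labels)
print(points)
print(area_under_curve(points))
```

Each point uses only the row proportions of the confusion matrix, so the curve says nothing about whether the scores themselves are well calibrated.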

Wixted, John T. "The forgotten history of signal detection theory." Journal of Experimental Psychology: Learning, Memory, and Cognition 46.2 (2020): 201-233. https://doi.org/10.1037/xlm0000732

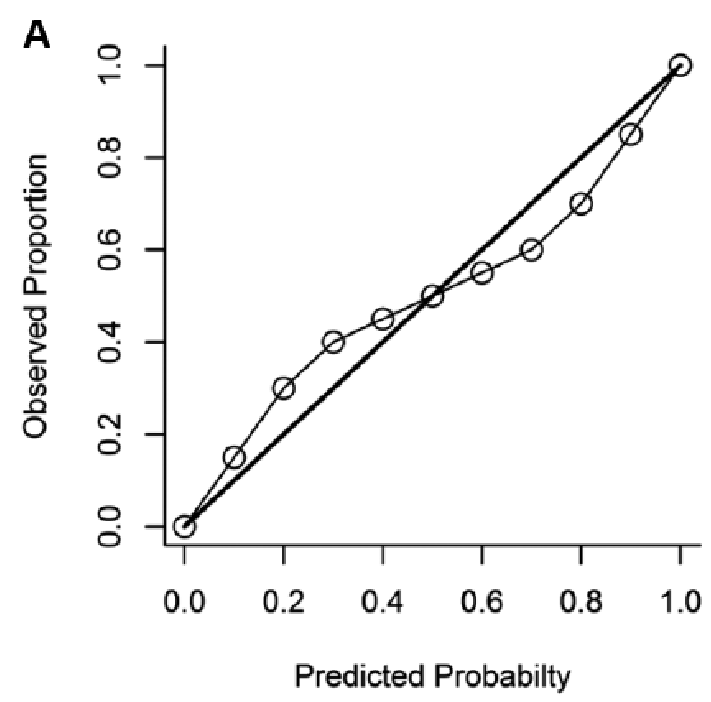

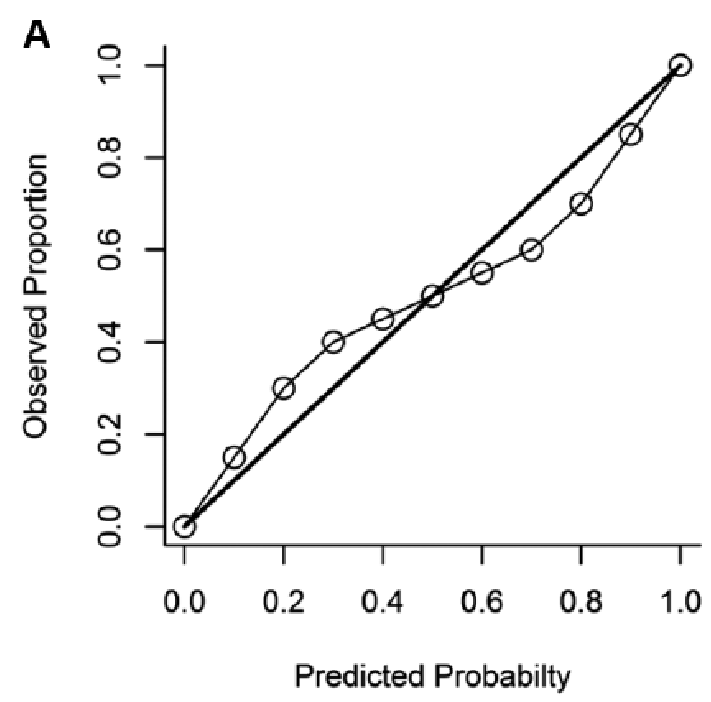

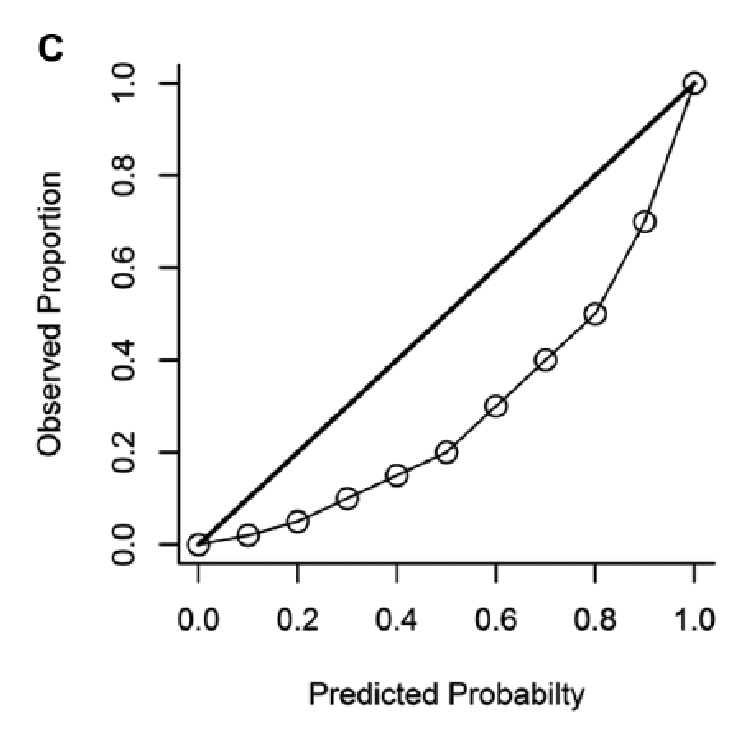

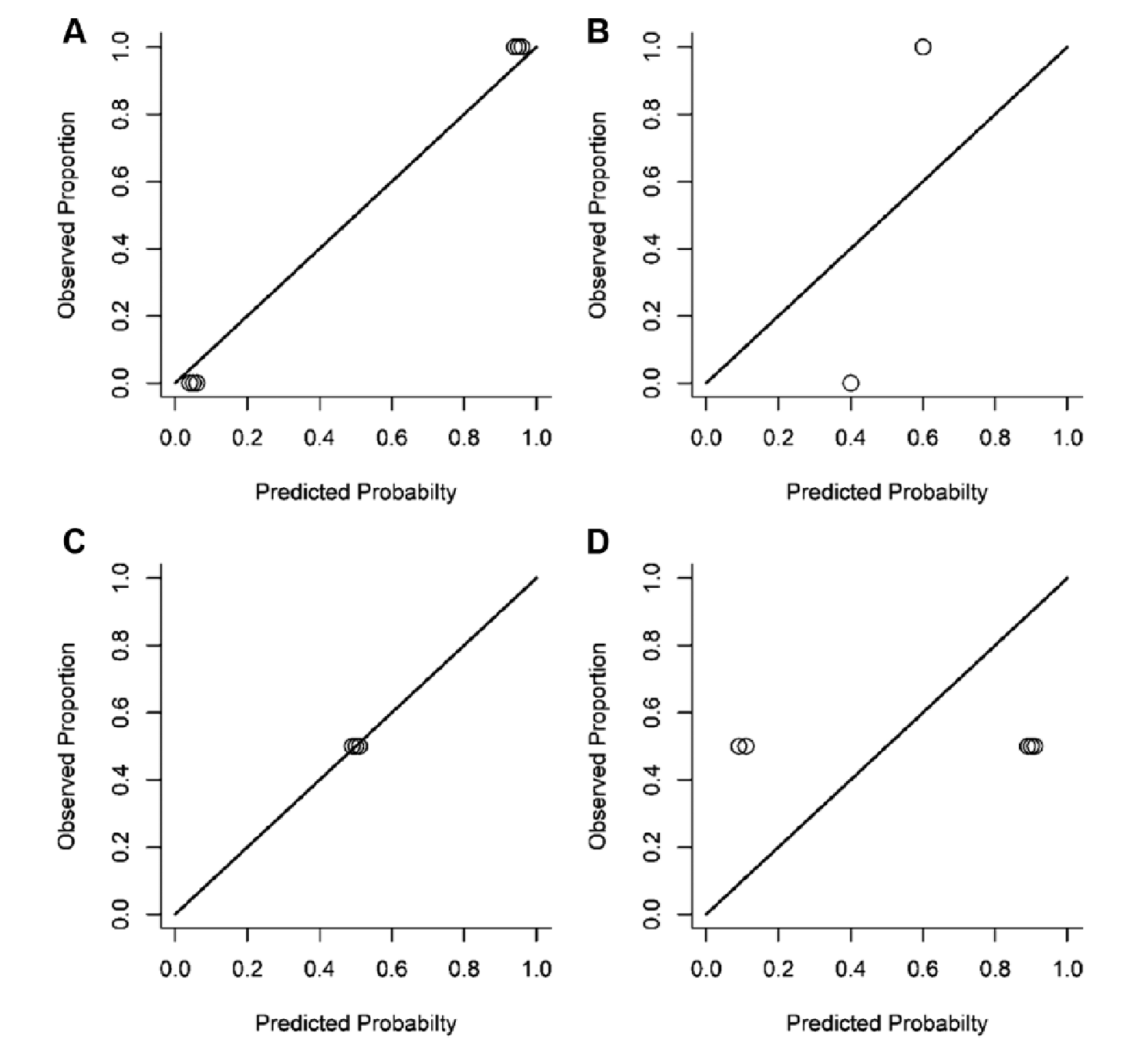

Calibration plot

- \(x\): predicted probability

- \(y\): observed frequency

45 degree line \(\implies\) perfect calibration

🤔 How calibrated is this classifier?

Over-extremity: predictions are consistently too close to 0 or 1

To ground things more concretely, suppose we built classifiers for detecting ADHD in children.

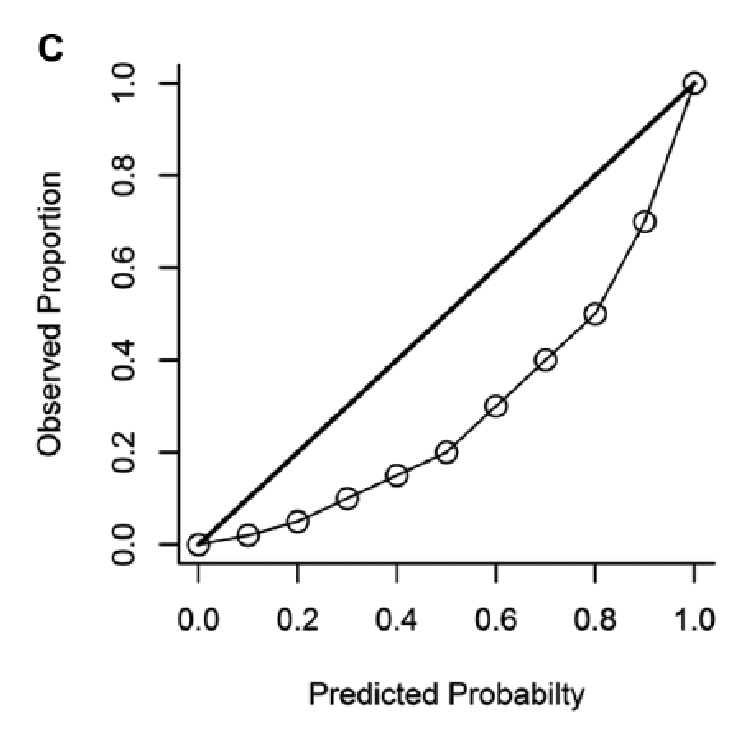

Calibration plot

- \(x\): predicted probability

- \(y\): observed frequency

Over-prediction: predicted probability is consistently greater than the observed proportion

🤔 How calibrated is this classifier?

Calibration plot

- \(x\): predicted probability

- \(y\): observed frequency

Benefits of graphical approaches:

- can easily spot mis-calibration by looking for deviations from the diagonal
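The points on a calibration plot can be computed by binning predictions and comparing each bin's mean prediction with its observed frequency. A minimal sketch, assuming equal-width bins and made-up predictions for an over-predicting classifier; the size-weighted average gap is one common summary of the deviation from the diagonal.

```python
def calibration_curve(probs, outcomes, n_bins=5):
    """Return (mean predicted probability, observed frequency, count)
    for each non-empty equal-width bin."""
    bins = [[] for _ in range(n_bins)]
    for p, x in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, x))
    curve = []
    for members in bins:
        if members:
            mean_pred = sum(p for p, _ in members) / len(members)
            observed = sum(x for _, x in members) / len(members)
            curve.append((mean_pred, observed, len(members)))
    return curve


def average_gap(curve):
    """Size-weighted mean |observed - predicted| across bins."""
    total = sum(n for _, _, n in curve)
    return sum(n * abs(obs - pred) for pred, obs, n in curve) / total


# Hypothetical over-predicting classifier.
probs = [0.3, 0.3, 0.3, 0.3, 0.7, 0.7, 0.7, 0.7, 0.9, 0.9]
outcomes = [0, 0, 0, 1, 1, 0, 0, 1, 1, 0]
curve = calibration_curve(probs, outcomes)
for mean_pred, observed, count in curve:
    print(f"predicted {mean_pred:.2f}  observed {observed:.2f}  n={count}")
print(average_gap(curve))
```

Every point falls below the 45 degree line here (observed < predicted), which is the over-prediction pattern.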

Calibration plot --- Quiz time!

❓Which model(s) have good discrimination and which have good calibration?

A: good discrimination, good calibration

B: good discrimination, bad calibration

C: bad discrimination, good calibration

D: bad discrimination, bad calibration
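Discrimination and calibration can come apart. As a sketch with made-up data: applying a monotone distortion to well-calibrated probabilities leaves the ranking, and therefore the AUC, unchanged, but it ruins calibration. The cubing transform and the function names are illustrative assumptions.

```python
def auc(scores, labels):
    """Probability that a random positive outranks a random negative
    (ties count as one half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def calibration_gap(scores, labels):
    """Size-weighted mean |predicted - observed rate|, grouped by predicted value."""
    groups = {}
    for s, y in zip(scores, labels):
        groups.setdefault(s, []).append(y)
    return sum(
        len(ys) * abs(s - sum(ys) / len(ys)) for s, ys in groups.items()
    ) / len(scores)


# Well-calibrated toy forecasts: 20%, 50%, and 80% of cases are positive
# among those predicted 0.2, 0.5, and 0.8 respectively.
probs, labels = [], []
for p, positives in [(0.2, 2), (0.5, 5), (0.8, 8)]:
    probs += [p] * 10
    labels += [1] * positives + [0] * (10 - positives)

distorted = [p ** 3 for p in probs]  # monotone, so the ranking is preserved

print(auc(probs, labels), calibration_gap(probs, labels))
print(auc(distorted, labels), calibration_gap(distorted, labels))
```

Both sets of scores discriminate equally well, but only the original one tracks the observed rates.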

To summarize ...

What did I do?

- identified key survey papers on existing behavioral research related to calibration

- identified experimental papers that utilize scoring rules

- explored visualization types for classification performance

Next steps?

- Experiment design --- how do we separate the impact of financial incentives from that of the scoring rule?

- Scoring rules that account for task difficulty

- Behavioral assumptions to extend the rational agent benchmark framework (e.g., prospect theory)