Train. Serve. Deploy!

Story of an NLP Model ft. PyTorch, Docker, uWSGI and Nginx

whoami

- Data Scientist at GoDaddy

- Work on deep-learning-based language modeling

- Dancing, hiking, (more recently) talking!

- Meme curator, huge The Office fan

ML Modeling

Training

Testing

ML Modeling

THEN WHAT?

ML Modeling

DEPLOYMENT

What This Talk IS

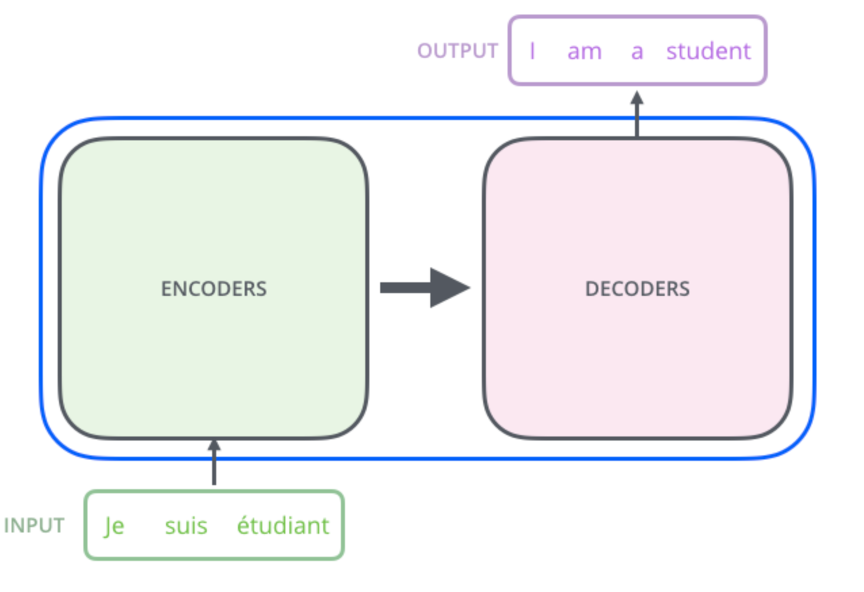

Machine Translation Systems: seq2seq model

Data

$ head orig/de-en/train.tags.de-en.de

<url>http://www.ted.com/talks/lang/de/stephen_palumbi_following_the_mercury_trail.html</url>

Das Meer kann ziemlich kompliziert sein.

Und was menschliche Gesundheit ist, kann auch ziemlich kompliziert sein.

.

.

$ head orig/de-en/train.tags.de-en.en

<url>http://www.ted.com/talks/stephen_palumbi_following_the_mercury_trail.html</url>

It can be a very complicated thing, the ocean.

And it can be a very complicated thing, what human health is.

de

en

Fairseq

Quick prototyping of seq2seq models

Fairseq: Preprocessing

TRAIN=$tmp/train.en-de

BPE_CODE=$prep/code

rm -f $TRAIN

for l in $src $tgt; do

    cat $tmp/train.$l >> $TRAIN

done

echo "learn_bpe.py on ${TRAIN}..."

python $BPEROOT/learn_bpe.py -s $BPE_TOKENS < $TRAIN > $BPE_CODE

for L in $src $tgt; do

    for f in train.$L valid.$L test.$L; do

        echo "apply_bpe.py to ${f}..."

        python $BPEROOT/apply_bpe.py -c $BPE_CODE < $tmp/$f > $prep/$f

    done

done
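To make the `learn_bpe.py` / `apply_bpe.py` steps less of a black box, here is a toy sketch of the byte-pair-encoding idea: repeatedly merge the most frequent adjacent symbol pair. It is not the subword-nmt implementation; the function names, the `</w>` end-of-word marker and the tie-breaking rule are assumptions chosen for illustration.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` merge rules from an iterable of words (toy version)."""
    # Each word starts as a tuple of characters plus an end-of-word marker.
    vocab = Counter(tuple(w) + ("</w>",) for w in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        # Most frequent pair wins; ties are broken by the pair itself (an assumption).
        best = max(pairs, key=lambda p: (pairs[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def apply_bpe(word, merges):
    """Segment a single word by replaying the learned merges in order."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols


merges = learn_bpe(["low", "low", "lower", "lowest"], num_merges=2)
segmented = apply_bpe("slow", merges)
```

With only two merges learned from this tiny corpus, the unseen word "slow" is split into a known subword plus a leftover character, which is the property that keeps the translation vocabulary small.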

Fairseq: Training

CUDA_VISIBLE_DEVICES=0 fairseq-train \

data-bin/iwslt14.tokenized.de-en \

--arch transformer \

--share-decoder-input-output-embed \

--optimizer adam \

--adam-betas '(0.9, 0.98)' \

--clip-norm 0.0 \

--lr 5e-4 --lr-scheduler inverse_sqrt \

--warmup-updates 4000 \

--dropout 0.3 --weight-decay 0.0001 \

--criterion label_smoothed_cross_entropy \

--label-smoothing 0.1 \

--max-tokens 4096 --max-epoch 50 \

--encoder-embed-dim 128 \

--decoder-embed-dim 128
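The `--lr 5e-4 --lr-scheduler inverse_sqrt --warmup-updates 4000` flags describe a schedule that ramps up linearly and then decays with the inverse square root of the update count. A minimal sketch of that curve, assuming the warmup starts from a learning rate of 0 (the function name and the `warmup_init_lr` default are assumptions, not taken from the talk):

```python
import math


def inverse_sqrt_lr(step, peak_lr=5e-4, warmup_updates=4000, warmup_init_lr=0.0):
    """Learning rate after `step` updates for a linear-warmup, inverse-sqrt-decay schedule."""
    if step < warmup_updates:
        # Linear ramp from warmup_init_lr up to peak_lr.
        return warmup_init_lr + (peak_lr - warmup_init_lr) * step / warmup_updates
    # After warmup: decay proportionally to 1 / sqrt(step), continuous at the peak.
    return peak_lr * math.sqrt(warmup_updates / step)


for step in (1000, 4000, 16000):
    print(step, inverse_sqrt_lr(step))
```

The learning rate peaks at update 4000 and has halved by update 16000, since quadrupling the step count divides the rate by two.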

Model Serving

$ fairseq-interactive data-bin/iwslt14.tokenized.de-en \

> --path checkpoints/checkpoint_best.pt \

> --bpe subword_nmt \

> --remove-bpe \

> --bpe-codes iwslt14.tokenized.de-en/code \

> --beam 10

2020-07-15 00:23:09 | INFO | fairseq_cli.interactive | Namespace(all_gather_list_size=16384, beam=10, bf16=False, bpe='subword_nmt', bpe_codes='iwslt14.tokenized.de-en/code', bpe_separator='@@',

.

.

2020-07-15 00:23:09 | INFO | fairseq.tasks.translation | [de] dictionary: 8848 types

2020-07-15 00:23:09 | INFO | fairseq.tasks.translation | [en] dictionary: 6640 types

2020-07-15 00:23:09 | INFO | fairseq_cli.interactive | loading model(s) from checkpoints/checkpoint_best.pt

2020-07-15 00:23:10 | INFO | fairseq_cli.interactive | NOTE: hypothesis and token scores are output in base 2

2020-07-15 00:23:10 | INFO | fairseq_cli.interactive | Type the input sentence and press return:

danke

S-3 danke

H-3 -0.1772392988204956 thank you .

D-3 -0.1772392988204956 thank you .

P-3 -0.2396 -0.1043 -0.2216 -0.1435
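In the output above, `S` is the source sentence, `H` the hypothesis with its score, `D` the detokenized hypothesis and `P` the per-token scores. A small sketch of turning those lines into a dictionary, in case the CLI output needs to be post-processed; the function name and the record layout are assumptions made for this example.

```python
def parse_interactive_output(lines):
    """Group S-/H-/D-/P- lines by sample id; anything else (logs, input echo) is skipped."""
    records = {}
    for line in lines:
        tag, sep, rest = line.strip().partition("-")
        if not sep or tag not in ("S", "H", "D", "P"):
            continue
        parts = rest.split(None, 1)  # works for tab- or space-separated fields
        if len(parts) != 2 or not parts[0].isdigit():
            continue
        sample_id, body = int(parts[0]), parts[1]
        record = records.setdefault(sample_id, {})
        if tag == "S":
            record["source"] = body
        elif tag == "P":
            record["token_scores"] = [float(x) for x in body.split()]
        else:
            fields = body.split(None, 1)
            text = fields[1] if len(fields) > 1 else ""
            key = "hypothesis" if tag == "H" else "detokenized"
            record[key] = {"score": float(fields[0]), "text": text}
    return records


sample = [
    "2020-07-15 00:23:10 | INFO | fairseq_cli.interactive | Type the input sentence and press return:",
    "danke",
    "S-3 danke",
    "H-3 -0.1772392988204956 thank you .",
    "D-3 -0.1772392988204956 thank you .",
    "P-3 -0.2396 -0.1043 -0.2216 -0.1435",
]
records = parse_interactive_output(sample)
```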

Flask

Python code without an API

Python code with a Flask API

Flask App

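import logging

import time

from datetime import datetime

from flask import Flask

from flask import Response

from flask import jsonify

from flask import make_response

from flask import request

from werkzeug.exceptions import BadRequest

from fairseq.models.transformer import TransformerModel

# Names used by the route on the next slide

app = Flask(__name__)

logger = logging.getLogger(__name__)

# Load the trained checkpoint once at import time, on CPU

model = TransformerModel.from_pretrained(

    'checkpoints',

    'checkpoint_best.pt',

    data_name_or_path='data-bin/iwslt14.tokenized.de-en',

    bpe='subword_nmt',

    bpe_codes='iwslt14.tokenized.de-en/code',

    remove_bpe=True,

    source_lang='de',

    target_lang='en',

    beam=30,

    nbest=1,

    cpu=True

)

Flask App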

@app.route('/translate', methods=['GET'])

def translate():

    timers = {'total': -time.time()}

    logger.info(str(datetime.now()) + ' received parameters: ' + str(request.args))

    query = request.args.get('q', '').strip().lower()

    if not query:

        raise BadRequest('Query is empty.')

    res = model.translate(query, verbose=True)

    rv = {'result': res}

    timers['total'] += time.time()

    resp = make_response(jsonify(rv))

    return resp

if __name__ == "__main__":

    app.run(debug=False, host='0.0.0.0', port=5002, use_reloader=True, threaded=False)
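The handler stores `-time.time()` at the start and adds `time.time()` at the end, so `timers['total']` ends up holding the elapsed seconds. The same trick can be wrapped in a context manager so several stages can be timed without repeating the arithmetic. This is a sketch only; the `timed` helper is hypothetical and not part of the talk's code.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(timers, name):
    """Record the elapsed wall-clock seconds of the enclosed block in timers[name]."""
    timers[name] = -time.perf_counter()  # start: store the negated start time
    try:
        yield
    finally:
        timers[name] += time.perf_counter()  # end: adding "now" leaves the duration


timers = {}
with timed(timers, "total"):
    with timed(timers, "translate"):
        time.sleep(0.01)  # stand-in for model.translate(query)
```

The resulting dictionary could then be logged next to the request parameters, which makes slow requests easier to spot in the logs.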

uwsgi

Assistant Regional Manager

to the

Flask Server

uwsgi + flask

wsgi.py:

from flask_app import app as application

if __name__ == "__main__":

    application.run()

uwsgi.ini:

[uwsgi]

module = wsgi:application

listen = 128

disable-logging = false

logto = /var/log/uwsgi/api.log

lazy-apps = true

master = true

processes = 3

threads = 1

buffer-size = 65535

socket = /app/wsgi.sock

chmod-socket = 666

enable-threads = true

threaded-logger = true
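`module = wsgi:application` tells uwsgi to import `wsgi.py` and call the object named `application` for every request. A Flask app is one such WSGI callable. To show what that contract looks like without Flask, here is a standard-library-only sketch; the `call_app` helper is a hypothetical test harness, not something uwsgi provides.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """Smallest possible WSGI app: answer every request with a plain-text OK."""
    body = b"OK"
    headers = [("Content-Type", "text/plain"), ("Content-Length", str(len(body)))]
    start_response("200 OK", headers)
    return [body]


def call_app(app, path="/"):
    """Invoke a WSGI callable roughly the way a server would, and collect its response."""
    environ = {}
    setup_testing_defaults(environ)  # fills in a minimal valid WSGI environ
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers

    body = b"".join(app(environ, start_response))
    return captured["status"], body


status, body = call_app(application, "/health")
```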

nginx

http requests

Route http requests to the uwsgi server

nginx

error_log /var/log/nginx/error.log warn;

events {

    worker_connections 8192;

}

http {

    .

    .

    access_log /var/log/nginx/access.log apm;

    server {

        listen 8002;

        server_name 127.0.0.1;

        location /translate {

            include uwsgi_params;

            uwsgi_pass unix:/app/wsgi.sock;

        }

        location / {

            include uwsgi_params;

            uwsgi_pass unix:/app/wsgi.sock;

        }

    }

}

supervisord

Me getting happy after setting nginx + uwsgi config files

Who will coordinate them?

Supervisord

supervisord

[supervisord]

nodaemon=true

logfile=/var/log/supervisor/supervisord.log

[program:uwsgi]

command=uwsgi --ini uwsgi.ini # --pyargv "inference/32k/"

directory=/app

stopsignal=TERM

stopwaitsecs=10

autorestart=true

startsecs=10

priority=3

stdout_logfile=/var/log/uwsgi/out.log

stdout_logfile_maxbytes=0

stderr_logfile=/var/log/uwsgi/err.log

stderr_logfile_maxbytes=0

[program:nginx]

command=nginx -c /etc/nginx/nginx.conf

autorestart=true

stopsignal=QUIT

stopwaitsecs=10

stdout_logfile=/var/log/nginx/out.log

stdout_logfile_maxbytes=0

stderr_logfile=/var/log/nginx/err.log

stderr_logfile_maxbytes=0

Docker

World's best containerization software

I'm ready to start coding again

No doubt about it

Dockerfile

FROM ubuntu:18.04

RUN apt-get update && \

apt-get dist-upgrade -y && \

apt-get install -y build-essential curl g++ gettext-base git libfreetype6-dev libpng-dev libsnappy-dev \

libssl-dev pkg-config python3-dev python3-pip software-properties-common supervisor nginx \

unzip zip zlib1g-dev openjdk-8-jdk tmux wget bzip2 libpcre3 libpcre3-dev vim systemd ca-certificates && \

apt-get clean && \

rm -rf /var/lib/apt/lists/*

ENV LANG C.UTF-8

RUN pip3 install torch==1.4.0+cpu torchvision==0.5.0+cpu -f https://download.pytorch.org/whl/torch_stable.html && \

rm -rf /root/.cache /tmp/*

COPY ./requirements.txt /tmp/

RUN pip3 install -r /tmp/requirements.txt && \

rm -rf /root/.cache /tmp/*

COPY . /app

COPY nginx.conf /etc/nginx/

RUN mkdir -p /var/log/supervisor

RUN mkdir -p /var/log/uwsgi

# ========= setup uwsgi

RUN touch /app/wsgi.sock

RUN chmod 666 /app/wsgi.sock

WORKDIR /app

CMD ["bash", "entrypoint.sh"]

EXPOSE 8002

Docker

$ docker build -t mock_app:latest .

$ docker run -d -p 8002:8002 --name mock mock_app:latest

.

.

2020-07-19 09:16:59,130 CRIT Supervisor running as root (no user in config file)

2020-07-19 09:16:59,133 INFO supervisord started with pid 1

2020-07-19 09:17:00 | INFO | fairseq.file_utils | loading archive file checkpoints

2020-07-19 09:17:00 | INFO | fairseq.file_utils | loading archive file data-bin/iwslt14.tokenized.de-en

2020-07-19 09:17:00,138 INFO spawned: 'uwsgi' with pid 19

2020-07-19 09:17:00,145 INFO spawned: 'nginx' with pid 20

Does it work?

$ curl http://localhost:8002/health

OK

$ curl 'http://localhost:8002/translate?q=Mein+name+ist+shreya'

{"result":"my name is shreya ."}

Some Good Practices

- Check logs frequently:

- Caching

- Unit tests
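For the caching point, one simple option is an in-process cache keyed on the normalized query, so repeated requests skip the model entirely. A sketch under assumptions: `make_cached_translator` and `fake_translate` are hypothetical names, and the stub stands in for `model.translate`.

```python
from functools import lru_cache


def make_cached_translator(translate_fn, maxsize=1024):
    """Wrap a translate function with an LRU cache keyed on the normalized query."""

    @lru_cache(maxsize=maxsize)
    def _cached(normalized_query):
        return translate_fn(normalized_query)

    def translate(query):
        # Same normalization as the Flask handler: trim whitespace and lowercase.
        return _cached(query.strip().lower())

    translate.cache_info = _cached.cache_info  # expose hit/miss counters
    return translate


calls = []


def fake_translate(text):
    # Stand-in for model.translate; records how often the "model" actually runs.
    calls.append(text)
    return text.upper()


translate = make_cached_translator(fake_translate)
translate("  Danke ")
translate("danke")  # same normalized query, served from the cache
```

Because the cache lives inside the Python process, each of the three uwsgi workers would keep its own copy. A shared cache would need an external store, which is outside the scope of this sketch. Keeping the normalization in one small function also makes it easy to cover with a unit test.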

When you think the talk is over, but the presenter keeps babbling something

$ docker logs -f <container-name>

$ docker exec -it <container-id> bash

Questions?

Discord channel: talk-nlp-model

LinkedIn:

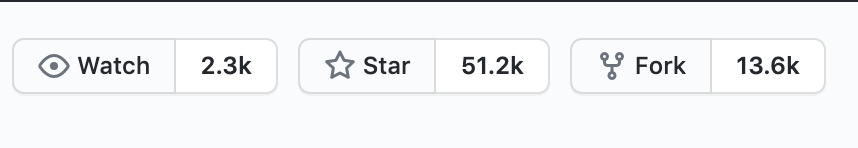

GitHub: ShreyaKhurana

Code: https://github.com/ShreyaKhurana/europython2020