From neural networks to differentiable computers

Simone Scardapane

Facebook Developer Circle Roma, 5 December

Software and universities

Most academic researchers write software for themselves. As Cook put it: “People who have only written software for their own use have no idea how much work goes into writing software for others.”

How much does it pay off, in academia, to give credit for producing good software (e.g., the JMLR MLOSS track)?

Let's find out with a use case: deep learning.

Deep learning?

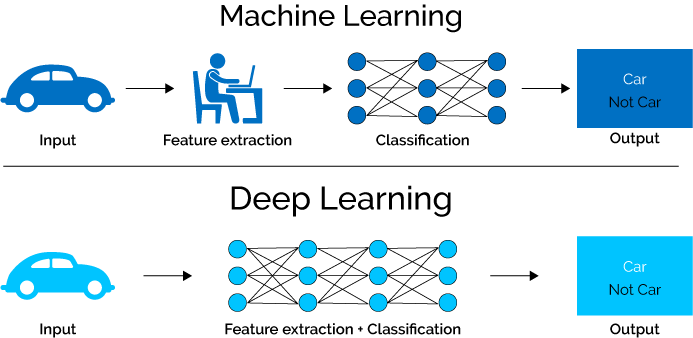

Machine learning

A set of algorithms that extract knowledge from data with respect to a given objective.

Deep learning

Deep learning is a subfield of ML that makes it possible to process extremely complex inputs:

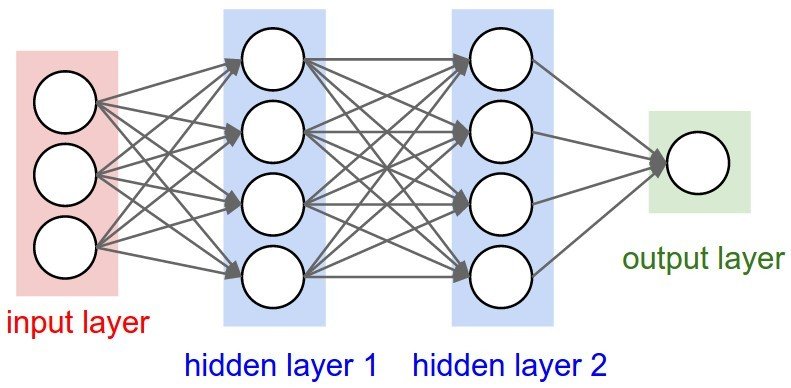

Reti neurali

The most useful tool for deep learning is the artificial neural network:

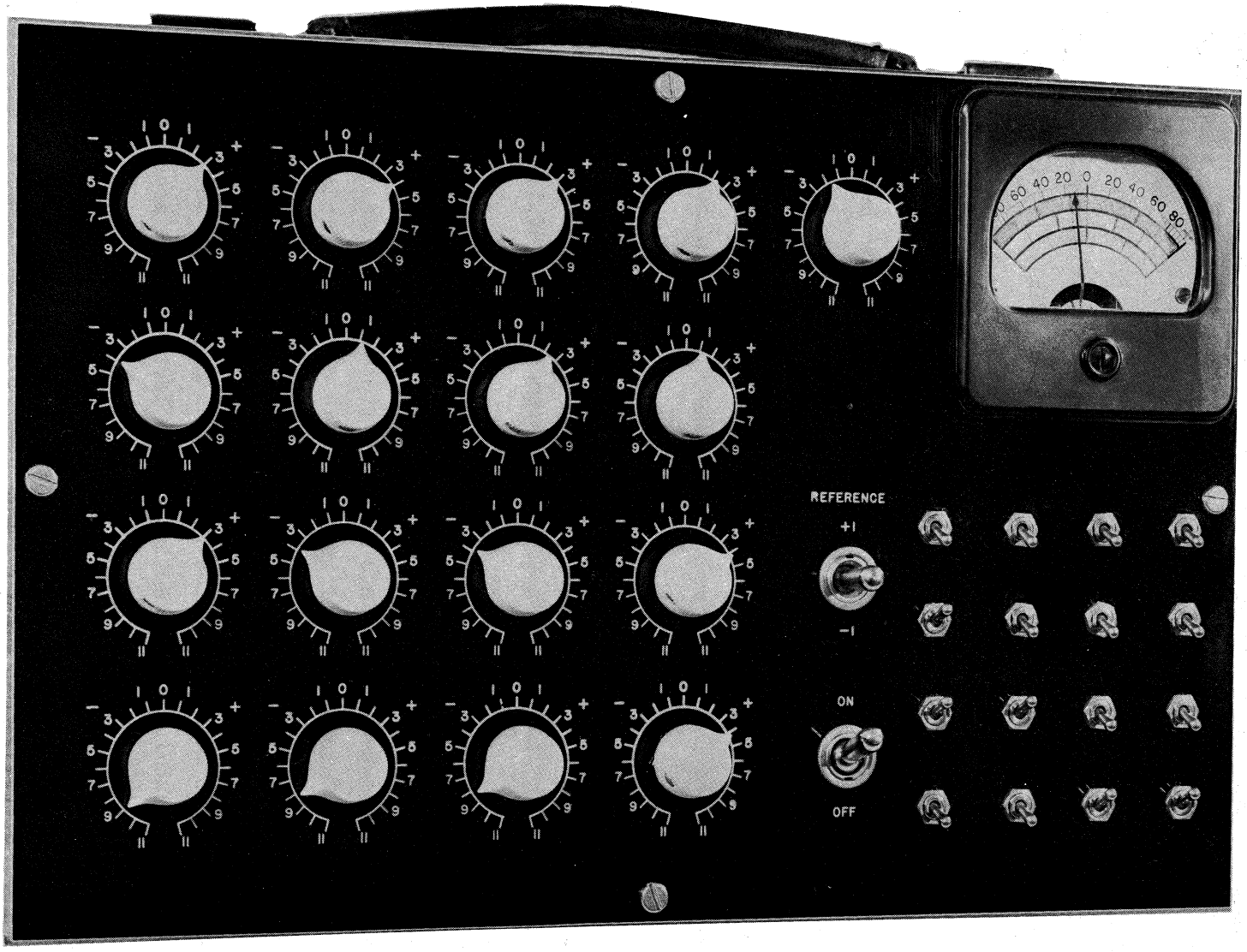
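A minimal sketch of the forward pass of a small fully connected network, in plain Python: each layer computes a weighted sum of its inputs plus a bias and applies a nonlinearity. The 2-3-1 shape, the hand-picked weights and the helper names (`dense`, `forward`) are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(sum_j x_j * W[j][k] + b_k) for each unit k.

    weights[j][k] is the (hypothetical) weight from input j to output unit k.
    """
    outputs = []
    for k in range(len(biases)):
        z = sum(x * weights[j][k] for j, x in enumerate(inputs)) + biases[k]
        outputs.append(activation(z))
    return outputs

def forward(x, layers):
    """Apply the layers in sequence: the output of one is the input of the next."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# Hypothetical 2-3-1 network with hand-picked weights
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1, -0.1], sigmoid),
    ([[1.0], [-1.0], [0.5]], [0.0], sigmoid),
]
out = forward([1.0, 0.0], layers)
print(out)
```

Stacking `dense` calls is all a "deep" network is at inference time; the hard part, as the next slides show, is computing how to change the weights.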

History of neural networks, 1958-1993

Perceptron (1958)

Adaline (1960)

LeNet 1 (1993)

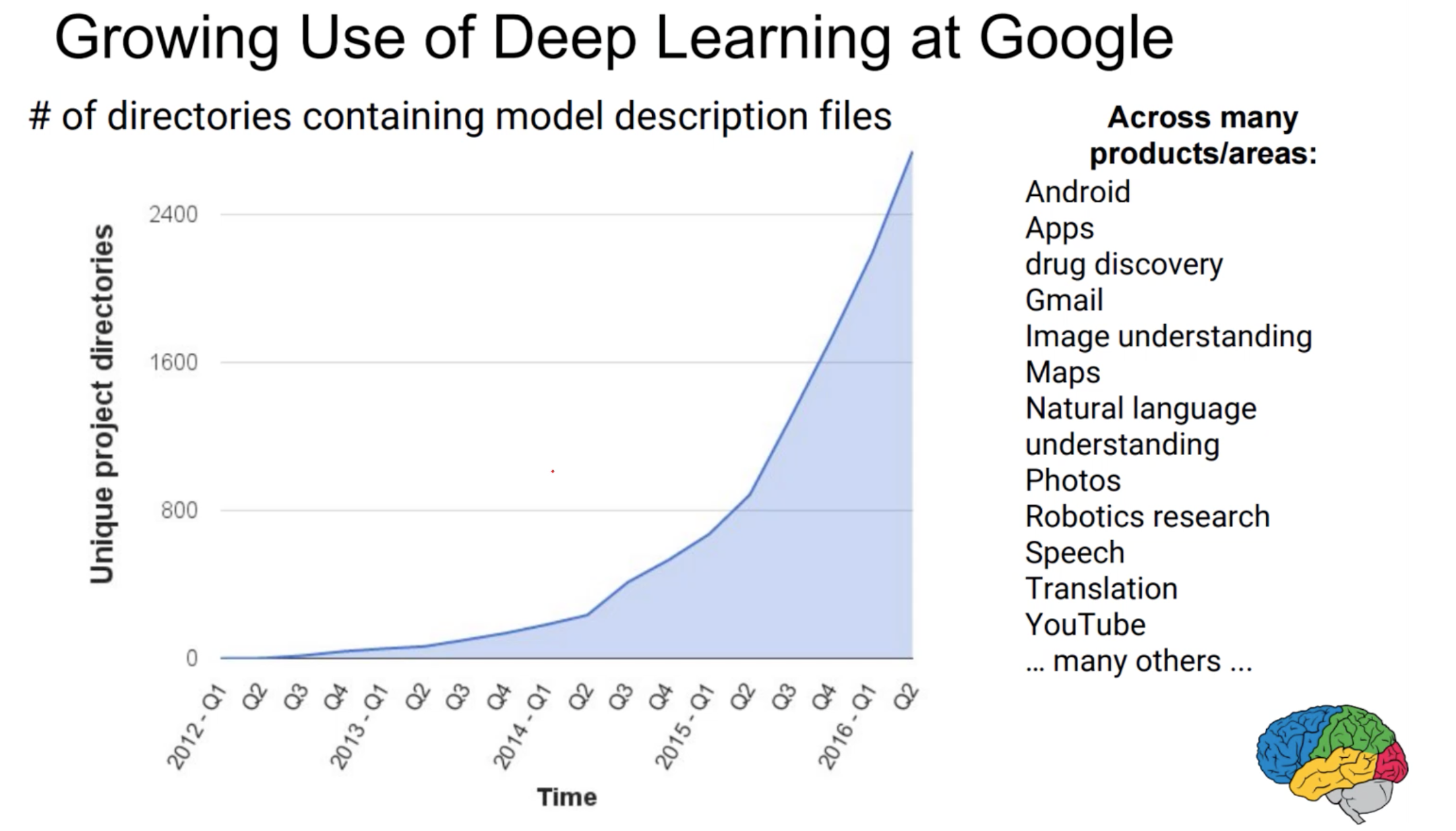

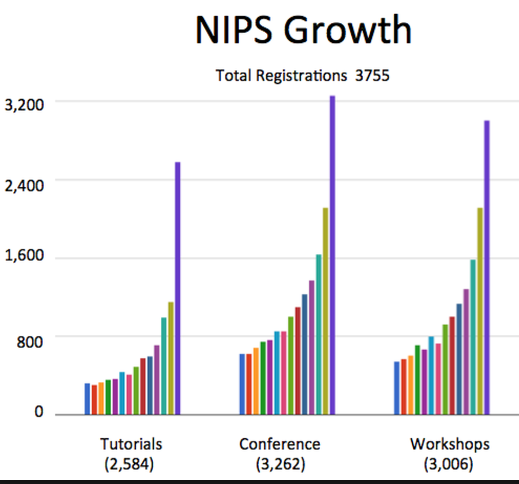
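To make the first entry of the timeline concrete, here is a sketch of the classic perceptron learning rule (weights nudged by `error * input` whenever a sample is misclassified), trained on the logical OR function. The helper names, the learning rate and the OR dataset are illustrative assumptions, not taken from the slides.

```python
def predict(w, b, x):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(data, epochs=20, lr=1.0):
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in data:
            error = target - predict(w, b, x)
            if error != 0:
                # Perceptron rule: move the weights towards the misclassified sample
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
                errors += 1
        if errors == 0:  # all samples classified correctly: stop early
            break
    return w, b

OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_perceptron(OR_DATA)
print([predict(w, b, x) for x, _ in OR_DATA])  # [0, 1, 1, 1]
```

Because OR is linearly separable, the rule converges after a few epochs; a single perceptron cannot do the same for XOR.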

The world has gone crazy!

What happened?

Triggering factors

- Theoretical innovations?

- Data?

- Computing power?

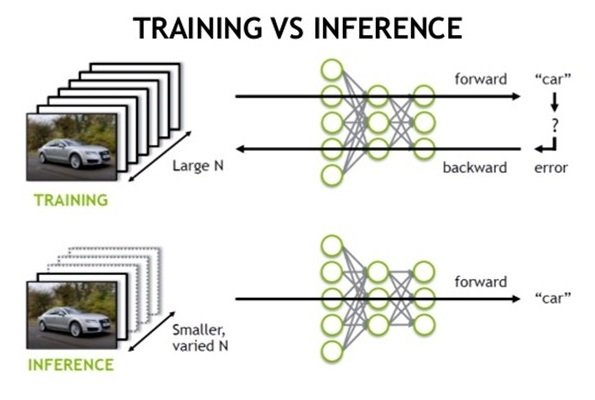

Forward / backward pass

Forward pass: relatively simple.

Backward pass: computing the gradients by hand can become enormously complicated.

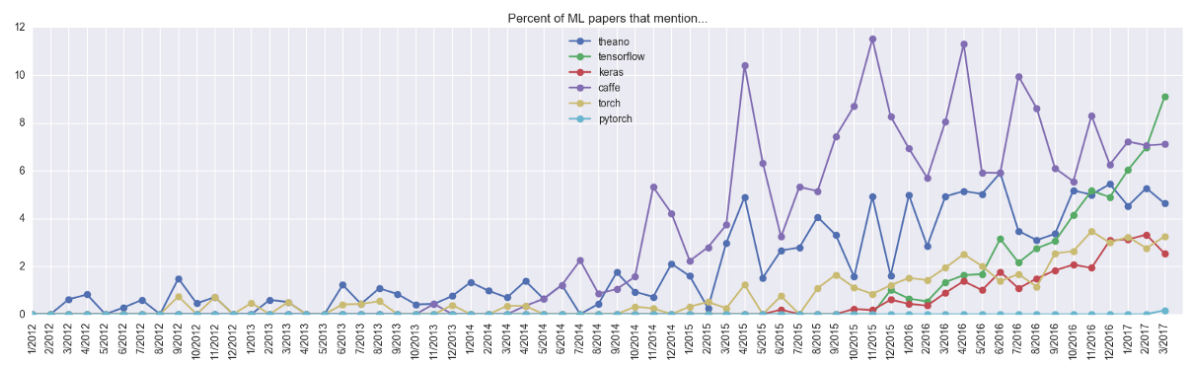
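To see why, here is a sketch that derives by hand, with the chain rule, the gradients of a tiny two-layer sigmoid network with a squared-error loss (one weight per layer, scalars only), and checks them against finite differences. The function names and the numeric values are illustrative assumptions. Even in this toy case every extra layer adds another factor to the product; doing this for real networks is what the following libraries automate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w1, w2, x, y):
    h = sigmoid(w1 * x)      # hidden activation
    out = sigmoid(w2 * h)    # network output
    loss = (y - out) ** 2    # squared error
    return h, out, loss

def backward(w1, w2, x, y):
    """Gradients of the loss w.r.t. w1 and w2, derived by hand with the chain rule."""
    h, out, _ = forward(w1, w2, x, y)
    dloss_dout = -2.0 * (y - out)
    dout_dz2 = out * (1.0 - out)   # sigmoid'(z2), with z2 = w2 * h
    grad_w2 = dloss_dout * dout_dz2 * h
    dz2_dh = w2
    dh_dz1 = h * (1.0 - h)         # sigmoid'(z1), with z1 = w1 * x
    grad_w1 = dloss_dout * dout_dz2 * dz2_dh * dh_dz1 * x
    return grad_w1, grad_w2

def numerical_grad(f, w, eps=1e-6):
    """Central finite difference, used only to check the hand-derived gradients."""
    return (f(w + eps) - f(w - eps)) / (2 * eps)

w1, w2, x, y = 0.5, -0.3, 1.0, 1.0
g1, g2 = backward(w1, w2, x, y)
n1 = numerical_grad(lambda v: forward(v, w2, x, y)[2], w1)
n2 = numerical_grad(lambda v: forward(w1, v, x, y)[2], w2)
print(g1, n1)
print(g2, n2)
```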

2010 - Theano (and similar libraries)

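Forward pass (definition):

```python
import numpy as np
import theano
import theano.tensor as T

rng = np.random.RandomState(42)  # fixed seed for reproducibility
LEARNING_RATE = 0.1

# XOR toy dataset: 4 samples with 2 features each
X = theano.shared(value=np.asarray([[1, 0], [0, 0], [0, 1], [1, 1]], dtype=theano.config.floatX), name='X')
y = theano.shared(value=np.asarray([[1], [0], [1], [0]], dtype=theano.config.floatX), name='y')

# Weights of a 2-3-1 network (hidden size 3 is an illustrative choice)
W1 = theano.shared(value=np.asarray(rng.uniform(size=(2, 3)), dtype=theano.config.floatX), name='W1', borrow=True)
W2 = theano.shared(value=np.asarray(rng.uniform(size=(3, 1)), dtype=theano.config.floatX), name='W2', borrow=True)

output = T.nnet.sigmoid(T.dot(T.nnet.sigmoid(T.dot(X, W1)), W2))
```

Backward pass (automatic!):

```python
cost = T.sum((y - output) ** 2)

# T.grad derives the gradients symbolically from the forward graph
updates = [(W1, W1 - LEARNING_RATE * T.grad(cost, W1)),
           (W2, W2 - LEARNING_RATE * T.grad(cost, W2))]

train = theano.function(inputs=[], outputs=cost, updates=updates)
```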
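The "automatic" part rests on reverse-mode differentiation over a computation graph. The sketch below is a toy, eager version on scalars in pure Python: each operation records its inputs and local derivative, and `backward` walks the graph from the output back to the inputs accumulating gradients. The `Var` class is hypothetical; Theano instead builds a symbolic gradient graph ahead of time, but the chain-rule bookkeeping is the same idea.

```python
import math

class Var:
    """A scalar node in a computation graph (toy reverse-mode autodiff)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # parents: pairs (parent_node, local derivative d(self)/d(parent))
        self.parents = parents

    @staticmethod
    def _wrap(other):
        return other if isinstance(other, Var) else Var(other)

    def __add__(self, other):
        other = Var._wrap(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __sub__(self, other):
        other = Var._wrap(other)
        return Var(self.value - other.value, ((self, 1.0), (other, -1.0)))

    def __rsub__(self, other):
        # Handles `float - Var`
        return Var(other) - self

    def __mul__(self, other):
        other = Var._wrap(other)
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def sigmoid(self):
        s = 1.0 / (1.0 + math.exp(-self.value))
        return Var(s, ((self, s * (1.0 - s)),))

    def backward(self):
        # Topological order: every node appears after all the nodes it depends on.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Walk from the output back to the inputs, applying the chain rule.
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad

# Same two-layer sigmoid network as a scalar toy: only the forward pass is written.
w1, w2 = Var(0.5), Var(-0.3)
x, y = 1.0, 1.0
out = (w2 * (w1 * x).sigmoid()).sigmoid()
diff = y - out
loss = diff * diff
loss.backward()
print(w1.grad, w2.grad)
```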

2015 - Keras

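Forward pass (definition):

```python
import numpy as np
from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
model.add(Dense(units=64, activation='relu', input_dim=100))
model.add(Dense(units=10, activation='softmax'))
```

Backward pass (automatic!):

```python
# Placeholder random data: 1000 samples, 100 features, 10 one-hot classes
x_train = np.random.random((1000, 100))
y_train = np.eye(10)[np.random.randint(10, size=1000)]

model.compile(optimizer='sgd', loss='categorical_crossentropy')
model.fit(x_train, y_train, epochs=5, batch_size=32)
```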
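The `epochs` and `batch_size` arguments of `fit` refer to minibatch training. As a rough, framework-free illustration (not Keras's actual implementation), here is minibatch gradient descent on a one-variable linear model with a mean squared error loss. The function `minibatch_sgd`, the learning rate and the noise-free synthetic data from y = 3x + 1 are assumptions made for the example.

```python
import random

def minibatch_sgd(xs, ys, epochs=5, batch_size=32, lr=0.1, seed=0):
    """Fit y ~ a*x + b by minibatch gradient descent on the mean squared error."""
    rng = random.Random(seed)
    a, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):                      # one epoch = one full pass over the data
        rng.shuffle(indices)                     # new sample order every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of mean((a*x + b - y)^2) over the current batch
            grad_a = sum(2 * (a * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (a * xs[i] + b - ys[i]) for i in batch) / len(batch)
            a -= lr * grad_a                     # one parameter update per batch
            b -= lr * grad_b
    return a, b

# Synthetic, noise-free data from y = 3x + 1 with x in [0, 1)
xs = [i / 1000 for i in range(1000)]
ys = [3 * x + 1 for x in xs]
a, b = minibatch_sgd(xs, ys, epochs=20, batch_size=32, lr=0.3)
print(a, b)
```

With 1000 samples and `batch_size=32`, each epoch performs 32 updates (the last batch holds the remaining 8 samples).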

What now?

An analogy

"People think that computer science is the art of geniuses but the actual reality is the opposite, just many people doing things that build on each other, like a wall of mini stones."

--- Donald Knuth

Object-oriented programming made it possible to abstract functionality and combine it modularly.

In the same way, the development of software for deep learning is changing the world of research.

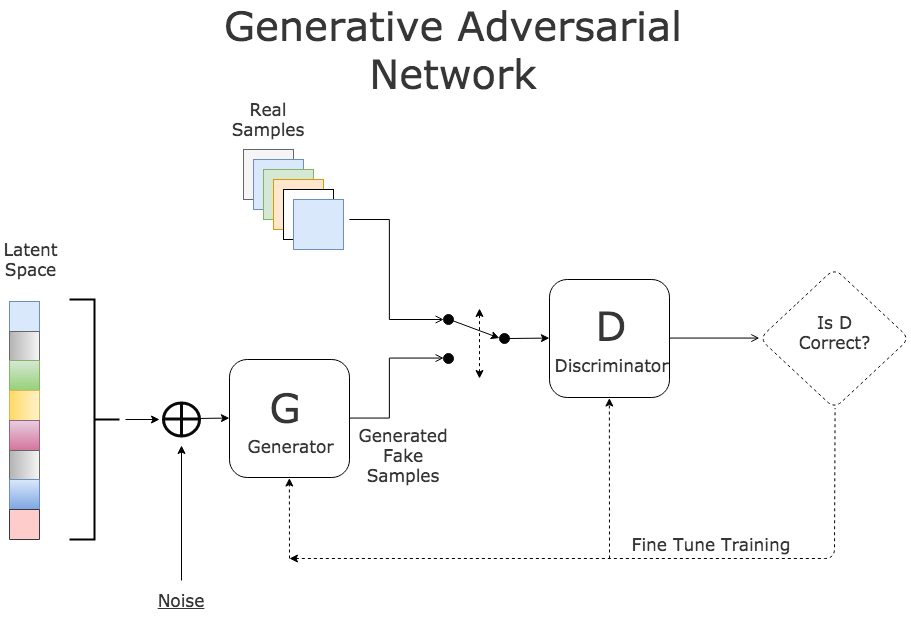

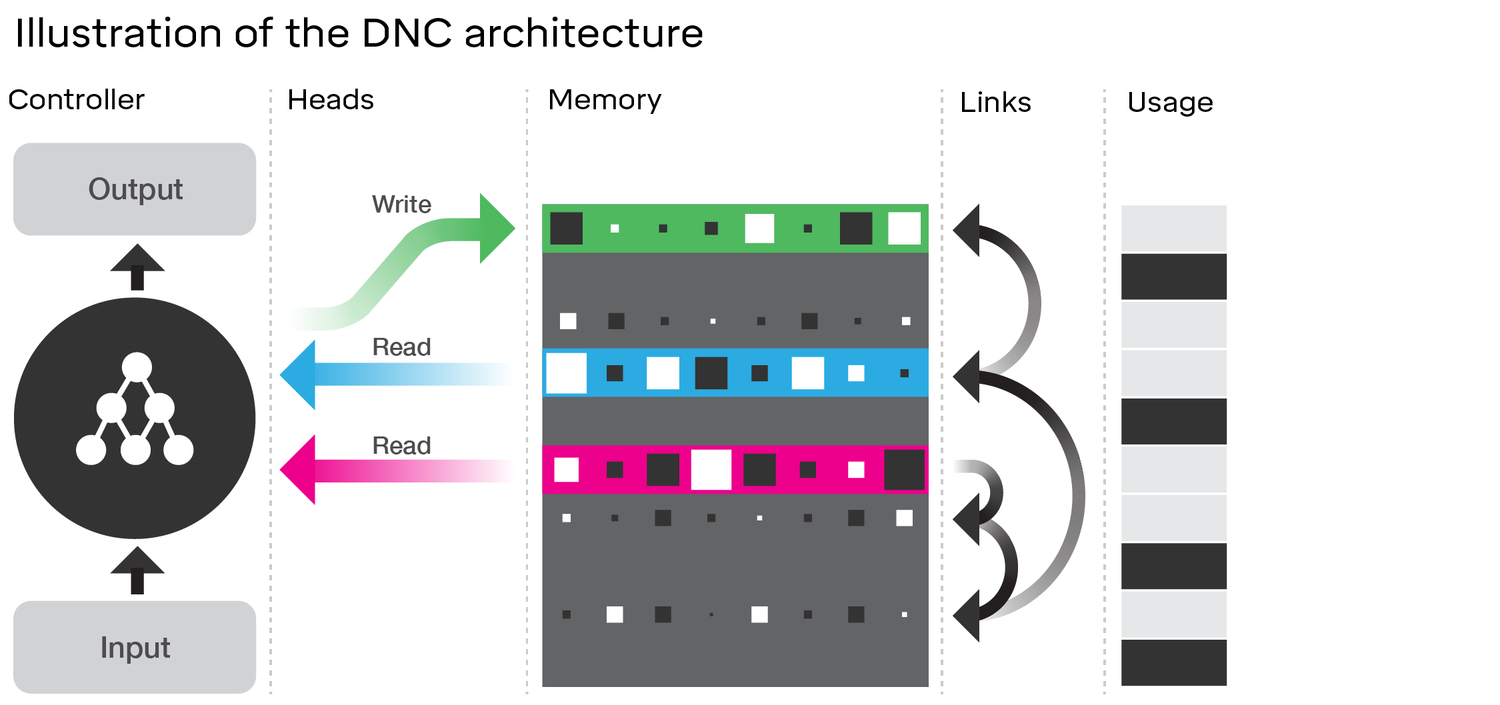

Differentiable Neural Computers

Graves, A., Wayne, G., Reynolds, M., Harley, T., Danihelka, I., Grabska-Barwińska, A., Colmenarejo, S.G., Grefenstette, E., Ramalho, T., Agapiou, J. and Badia, A.P., 2016. Hybrid computing using a neural network with dynamic external memory. Nature, 538(7626), pp.471-476.
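One ingredient that makes the memory of a Differentiable Neural Computer "differentiable" is content-based addressing: instead of picking a single memory slot, the controller emits a key and a strength, and the read weights are a softmax over the cosine similarities between the key and each memory row. The sketch below shows only this mechanism with a single read head (the paper also uses usage-based allocation and temporal links); the helper names, the 3x3 memory and the key are hypothetical.

```python
import math

def cosine_similarity(u, v, eps=1e-8):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + eps)  # eps avoids division by zero for all-zero rows

def content_weights(memory, key, strength):
    """Softmax over memory rows of strength * cosine(key, row)."""
    scores = [strength * cosine_similarity(key, row) for row in memory]
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def read(memory, weights):
    """Read vector: weighted sum of the memory rows."""
    width = len(memory[0])
    return [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]

memory = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
key = [0.9, 0.1, 0.0]
w = content_weights(memory, key, strength=10.0)
r = read(memory, w)
print(w)
print(r)
```

Because every step is a smooth function of the key, the strength and the memory contents, gradients can flow through the read operation, so the whole system can be trained end to end with the same backward pass as an ordinary network.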

Machine teaching is a paradigm shift from machine learning, akin to how other fields like language programming have shifted from optimizing performance to optimizing productivity.

Thank you very much for your attention!