Quick Apache Arrow

bit.ly/quick-arrow-lunch

28 Feb 2019

I first heard about Arrow last year

Why would anyone need a pipeline between R and Python?

...or is it a broader need?

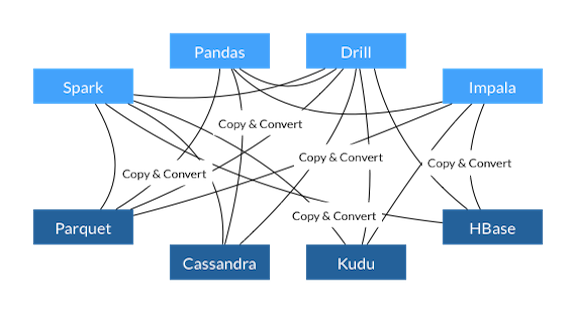

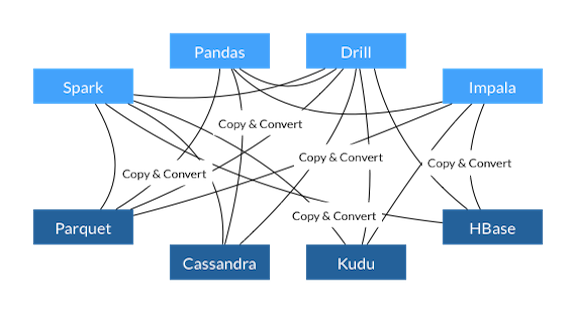

Before:

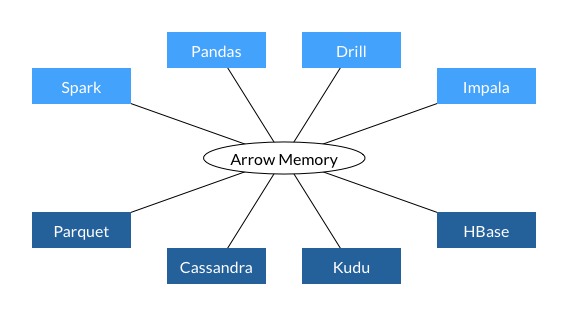

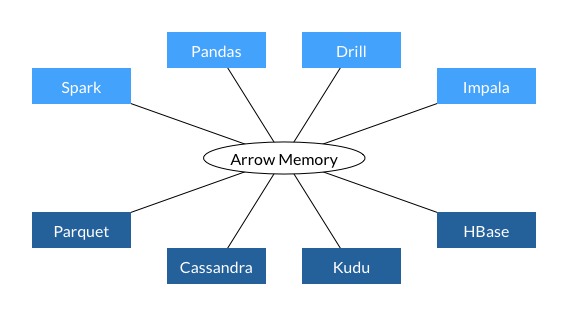

After:

Images lifted from arrow.apache.org/

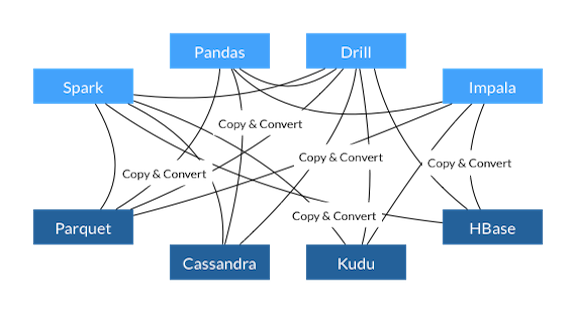

Before:

- Every platform has a unique representation of a dataset.

- Cross-language data translation affects speed.

- Cross-platform transcription uses multiple custom interfaces.

("the plight of the data consumer"—Dremio blog post)

Image lifted from arrow.apache.org/

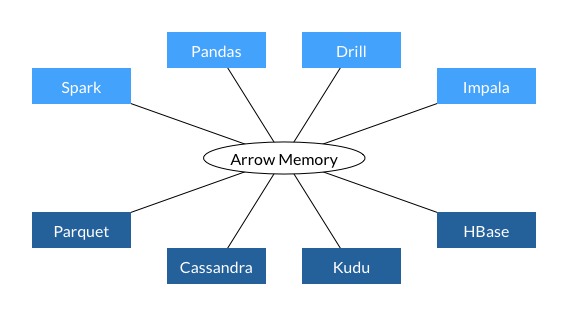

After:

- Every platform *still* has its unique representation of a dataset.

- But communication via only one common in-memory format reduces complexity.

Image lifted from arrow.apache.org/

Backstory

Where did the need come from?

It all started in 2004*

*Except for the Google File System paper from 2003 (Ghemawat, Gobioff, Leung), the inspiration for HDFS

In Chicago, people were throwing poo in the river...

...in California

Jeff Dean and Sanjay Ghemawat were finishing their OSDI paper: MapReduce, the inspiration for Hadoop.

Image from a Giphy search for "California"

Timeline: 2004, 2005, 2006, 2008 (also Wes McKinney starts learning Python; source: DataCamp podcast), 2009, 2010, 2011, 2012

Big data meets Pandas

https://www.datacamp.com/community/blog/data-science-tool-building

The backstory crosses paths with Wes McKinney in 2015, while he was at Cloudera (story in the DataCamp interview above).

- Leaders from multiple infrastructure projects

- All building their own format-conversion tools

- Why not just one in-memory standard?

Now we know "Why"

So, *what* is Arrow?

Components

- Feather (flatbuffer for serialization Pandas ↔ R)
  - proof of concept
- Parquet (on-disk columnar storage format)
- Arrow (in-memory columnar format)
  - C++, with R, Python, Ruby and even Matlab using bindings to the C++ library
  - Go, Rust, Java, JavaScript (reimplemented)
- Plasma (in-memory shared object store)
- Gandiva (LLVM-based SQL expression compiler for Arrow)
- Flight (remote procedure calls based on gRPC)

Feather

(A proof of concept; still in codebase)

Python (write):

```python
import numpy as np
import pandas as pd
import pyarrow.feather as feather

# Noisy quadratic test data.
x = np.random.sample(1000)
y = 10 * x**2 + 2 * x + 0.05 * np.random.sample(1000)
df = pd.DataFrame(dict(x=x, y=y))

feather.write_feather(df, 'testing.feather')
```

R (read):

```r
library(arrow)

dataframe <- read_feather('testing.feather')

# Scatter plot of y against x, saved as a PNG.
png("rplot.png")
plot(y ~ x, data = dataframe)
dev.off()
```

Parquet

On-disk data storage format

(Joined with Arrow December 2018)

Write:

```python
import numpy as np
import pandas as pd
import pyarrow as pa
import pyarrow.parquet as pq

df = pd.DataFrame(
    {'one': [-1, np.nan], 'two': ['foo', 'bar'], 'three': [True, False]},
    index=list('ab'))

table = pa.Table.from_pandas(df)
pq.write_table(table, 'example.parquet')
```

Read:

```python
import pyarrow.parquet as pq

table2 = pq.read_table('example.parquet')
df = table2.to_pandas()
```

Arrow

- Why not just use Parquet? (or ORC)
  - Those are designed for on-disk storage and have:
    - compression
    - optional separate files for metadata
- Arrow is in-memory, designed:
  - for speed of access
  - for cross-framework use (i.e. polyglot pickles)

(cross-language in-memory columnar data format)
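To make the on-disk vs in-memory trade-off concrete, here is a deliberately simplified sketch using only the Python standard library (no Parquet or Arrow involved). An uncompressed fixed-width column can be indexed directly at byte offset `8 * i`, while a compressed blob has to be inflated before any value can be read. `read_uncompressed` and `read_compressed` are hypothetical helpers, and real Parquet compresses and encodes data per page rather than as one blob.

```python
import zlib
from array import array

# An int64 column kept uncompressed, the way an in-memory format would hold it.
values = array("q", range(100_000))
raw = values.tobytes()

# The same bytes compressed, roughly the trade an on-disk format makes for smaller files.
compressed = zlib.compress(raw)


def read_uncompressed(buf: bytes, i: int) -> int:
    """Random access: value i starts at byte offset 8 * i, so nothing is decoded."""
    return memoryview(buf)[8 * i : 8 * i + 8].cast("q")[0]


def read_compressed(blob: bytes, i: int) -> int:
    """The whole blob is decompressed before value i can be read."""
    return array("q", zlib.decompress(blob))[i]


print(len(raw), len(compressed))
print(read_uncompressed(raw, 12_345), read_compressed(compressed, 12_345))
```

The uncompressed read touches 8 bytes; the compressed read inflates all 800,000.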

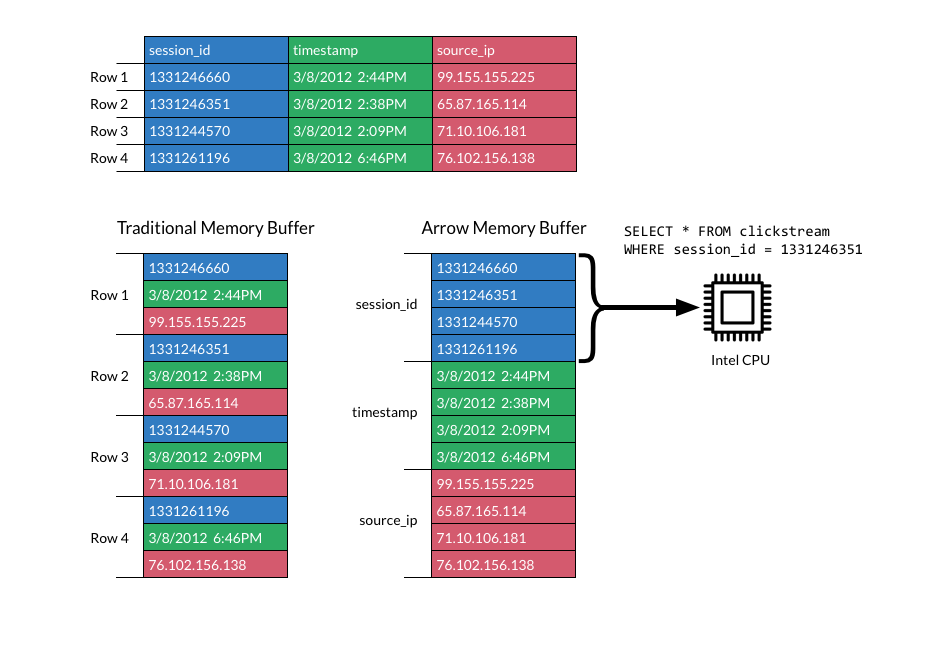

Column store

Like second-generation on-disk data stores (Parquet, ORC, etc.), in-memory columnar layouts allow faster computation over columns (mean, standard deviation, counts of categories, etc.).

Image lifted from arrow.apache.org/
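A minimal sketch of the row vs column distinction in plain Python (standard library only; the table contents are made up for illustration). In the columnar version each field lives in its own sequence, and `array("d")` keeps the prices in one contiguous buffer, so a column aggregate reads only that column.

```python
from array import array
from collections import Counter
from statistics import mean, stdev

# Row-oriented: each record keeps all of its fields together.
rows = [
    {"id": 1, "price": 9.5, "category": "a"},
    {"id": 2, "price": 12.0, "category": "b"},
    {"id": 3, "price": 7.5, "category": "a"},
]

# Column-oriented: each field's values are stored together.
# array("q") / array("d") hold the numbers in single contiguous buffers.
columns = {
    "id": array("q", (r["id"] for r in rows)),
    "price": array("d", (r["price"] for r in rows)),
    "category": [r["category"] for r in rows],
}

# Column aggregates read one column and never touch the other fields.
price_mean = mean(columns["price"])
price_stdev = stdev(columns["price"])
category_counts = Counter(columns["category"])

print(price_mean, price_stdev, category_counts)
```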

Arrow

(speed example: Spark, from the blog)

```
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.3.0-SNAPSHOT
      /_/

Using Python version 2.7.13 (default, Dec 20 2016 23:09:15)
SparkSession available as 'spark'.

In [1]: from pyspark.sql.functions import rand
   ...: df = spark.range(1 << 22).toDF("id").withColumn("x", rand())
   ...: df.printSchema()
   ...:
root
 |-- id: long (nullable = false)
 |-- x: double (nullable = false)

In [2]: %time pdf = df.toPandas()
CPU times: user 17.4 s, sys: 792 ms, total: 18.1 s
Wall time: 20.7 s

In [3]: spark.conf.set("spark.sql.execution.arrow.enabled", "true")

In [4]: %time pdf = df.toPandas()
CPU times: user 40 ms, sys: 32 ms, total: 72 ms
Wall time: 737 ms
```

Arrow-based conversion is not the default; it has to be switched on, as in `In [3]`.

Arrow

(components)

```python
import pyarrow as pa

# Arrays: sequences of values with a known length and type.
arr1 = pa.array([1, 2])
arr2 = pa.array([3, 4])

# Fields: column name + data type (+ metadata).
# (Written for current pyarrow; early releases used Field.add_metadata() and pa.column().)
field1 = pa.field('col1', pa.int64()).with_metadata({'meta': 'foo'})
field2 = pa.field('col2', pa.int64()).with_metadata({'meta': 'bar'})

# Pairing each array with its field gives the table's columns.
table = pa.Table.from_arrays([arr1, arr2], schema=pa.schema([field1, field2]))

# Split the table into record batches of at most one row each.
batches = table.to_batches(max_chunksize=1)

print(table.to_pandas())
#    col1  col2
# 0     1     3
# 1     2     4

# And back again: pandas DataFrame to Arrow table.
pa.Table.from_pandas(table.to_pandas())
```

- An array is a sequence of values with known length and type.
- A column name + data type + metadata = a field.
- A field + an array = a column.
- A table is a set of columns.
- You can split a table into row batches.
- You can convert between pyarrow tables and pandas data frames (both directions).
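Under the hood, an Arrow array is a handful of flat buffers. As a simplified pure-Python sketch (it ignores the padding and alignment rules of the real columnar spec), a nullable string array can be pictured as a validity bitmap (bit i set means slot i is non-null, least-significant bit first), an offsets list with one more entry than there are values, and one concatenated data buffer. `to_string_buffers` and `get_value` are hypothetical helpers, not pyarrow functions.

```python
def to_string_buffers(values):
    """Lay out optional strings as (validity bitmap, offsets, data)."""
    validity = bytearray((len(values) + 7) // 8)  # one bit per slot
    offsets = [0]  # in Arrow these would be packed int32 values
    data = bytearray()
    for i, value in enumerate(values):
        if value is not None:
            validity[i // 8] |= 1 << (i % 8)  # mark slot i as valid
            data += value.encode("utf-8")
        offsets.append(len(data))  # a null slot gets a zero-length range
    return bytes(validity), offsets, bytes(data)


def get_value(validity, offsets, data, i):
    """Check bit i of the bitmap, then slice data[offsets[i]:offsets[i + 1]]."""
    if ((validity[i // 8] >> (i % 8)) & 1) == 0:
        return None
    return data[offsets[i] : offsets[i + 1]].decode("utf-8")


validity, offsets, data = to_string_buffers(["foo", None, "bar"])
print(validity, offsets, data)
print([get_value(validity, offsets, data, i) for i in range(3)])
```

Reading any value costs two offset lookups and a slice, with no per-row objects to walk.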

Plasma

- In-memory object store. Documentation here.

- The goal is zero-copy data exchange between frameworks

(announced mid-2017)

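Create:

```python
import pyarrow.plasma as plasma

# Assumes a Plasma store is already running, e.g. started with
#   plasma_store -m 1000000000 -s /tmp/plasma
# (Plasma was later deprecated and removed from pyarrow.)
client = plasma.connect("/tmp/plasma")

# Create an object.
object_id = plasma.ObjectID(20 * b'a')
object_size = 1000
buffer = memoryview(client.create(object_id, object_size))

# Write to the buffer.
for i in range(1000):
    buffer[i] = 0

# Seal the object, making it immutable and available to other clients.
client.seal(object_id)
```

Use:

```python
import pyarrow.plasma as plasma

client = plasma.connect("/tmp/plasma")

# Get the object from the store. This blocks until the object has been sealed.
object_id = plasma.ObjectID(20 * b'a')
[buff] = client.get_buffers([object_id])
buffer = memoryview(buff)
```

Once sealed, the object is immutable, so other clients can safely share (or copy) it.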
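Plasma itself needs a running store process, so as a rough stand-in, here is the same create, write, seal, get pattern sketched with Python's standard-library `multiprocessing.shared_memory` (Python 3.8+). This is an analogy, not Plasma: the block's name plays the role of the object ID, and "sealing" is only a convention that the writer stops modifying the block. The reader attaches by name and indexes the shared pages through a `memoryview` instead of receiving a copy of the block. `create_and_seal` and `read_prefix` are hypothetical helpers.

```python
from multiprocessing import shared_memory


def create_and_seal(size):
    """Create a shared block and fill it; the block's name acts as the object ID."""
    shm = shared_memory.SharedMemory(create=True, size=size)
    for i in range(size):
        shm.buf[i] = i % 256  # shm.buf is a memoryview over the shared pages
    # "Seal": by convention, the writer makes no further changes after this point.
    return shm


def read_prefix(name, n):
    """Attach to an existing block by name and read its first n bytes in place."""
    shm = shared_memory.SharedMemory(name=name)
    try:
        return [shm.buf[i] for i in range(n)]
    finally:
        shm.close()  # detach this handle; the block itself stays alive


writer = create_and_seal(1000)
try:
    values = read_prefix(writer.name, 5)
    print(values)
finally:
    writer.close()
    writer.unlink()  # free the block once every reader is done
```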

Gandiva

- Uses LLVM to JIT-compile SQL expressions (filters and projections) that run directly on in-memory Arrow data

- The docs on the original page show literal SQL (not ORM-generated SQL), which you feed to the compiler as a string and then execute

(Donated by Dremio November 2018)

Named after a mythical bow from an Indian legend that makes the arrows it fires 1000 times more powerful.
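Gandiva's real work (LLVM code generation) is out of scope for a slide, but the shape of the idea (compile an expression once, then run it over many batches of columns) can be sketched in plain Python. Here an expression tree is turned into nested closures rather than native code; `Field`, `Literal`, `Call`, `compile_expr` and `project` are hypothetical names, not Gandiva's API.

```python
import operator
from dataclasses import dataclass


@dataclass
class Field:
    name: str


@dataclass
class Literal:
    value: object


@dataclass
class Call:
    op: str
    args: list


# A tiny, hypothetical registry of binary functions.
FUNCTIONS = {"add": operator.add, "multiply": operator.mul, "greater_than": operator.gt}


def compile_expr(node):
    """Walk the tree once; return a function (columns, row index) -> value."""
    if isinstance(node, Field):
        name = node.name
        return lambda cols, i: cols[name][i]
    if isinstance(node, Literal):
        value = node.value
        return lambda cols, i: value
    fn = FUNCTIONS[node.op]
    left, right = (compile_expr(arg) for arg in node.args)
    return lambda cols, i: fn(left(cols, i), right(cols, i))


def project(compiled, cols):
    """Evaluate a compiled expression over every row of a batch of columns."""
    n = len(next(iter(cols.values())))
    return [compiled(cols, i) for i in range(n)]


# Roughly "x + y > 10": compiled once, then reused for every batch.
expr = compile_expr(Call("greater_than", [Call("add", [Field("x"), Field("y")]), Literal(10)]))
batch1 = {"x": [1, 5, 9], "y": [2, 6, 3]}
batch2 = {"x": [20, 0], "y": [0, 0]}
print(project(expr, batch1), project(expr, batch2))
```

The tree walk happens once in `compile_expr`; each batch only pays for calling the resulting function.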

Flight

- Goal is to reduce deserialization time when moving data between processes and machines

- Built on gRPC, a cross-language remote procedure call framework

(Announced as a new initiative in late 2018)
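Roughly, Arrow-based transport saves deserialization time because a column travels as its raw buffer, so the receiver can use the bytes as-is instead of parsing value by value. A toy standard-library sketch of that idea follows. The framing (an 8-byte little-endian count, then float64 values in native byte order, so sender and receiver are assumed to share a byte order) is hypothetical and is neither the Arrow IPC format nor the Flight protocol.

```python
import struct
from array import array


def encode_column(values):
    """Frame a float64 column: 8-byte little-endian count, then the raw value bytes."""
    data = array("d", values)
    return struct.pack("<q", len(data)) + data.tobytes()


def decode_column(message):
    """Reinterpret the payload as float64 values: no per-value parsing, no copy."""
    (count,) = struct.unpack_from("<q", message, 0)
    return memoryview(message)[8 : 8 + 8 * count].cast("d")


message = encode_column([1.5, 2.5, 3.0])
column = decode_column(message)
print(column.tolist(), sum(column))
```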

What the future holds

Wes McKinney has been thinking out loud on the listserv about the future of the project, and posted some ideas here.

(Note to Tanya: Click on this. It will be the start point for discussions. Scroll to "Goals")

Participate!

- Apache Roadshow in Chicago May 13-14

- https://arrow.apache.org/

- https://issues.apache.org/jira/projects/ARROW

- https://cwiki.apache.org/confluence/display/ARROW

Dedication

To my beautiful Mom, whose only joy in life was her children's happiness.

Rest in peace. You did a wonderful job. I love you.