Web Audio

Audio on the Web

- `<audio>` tag

- Web Audio API

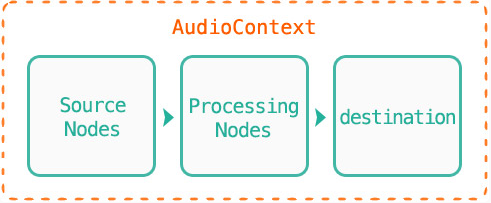

Main Elements

- AudioContext

- AudioNode

- connect

```javascript
var AudioContext = window.AudioContext || window.webkitAudioContext;
var context = new AudioContext();
```
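With a bare `||` fallback, a browser that has neither constructor fails at `new` with an unhelpful error. Below is a minimal sketch of explicit feature detection. `getAudioContextClass` and `createAudioContext` are hypothetical helpers. The global object is passed in as a parameter so the lookup can be tried outside a browser.

```javascript
// Hypothetical helper: pick whichever AudioContext constructor the
// environment provides (standard name first, then the old WebKit prefix).
function getAudioContextClass(globalObj) {
  return globalObj.AudioContext || globalObj.webkitAudioContext || null;
}

// Hypothetical helper: create a context, or fail with a clear message.
function createAudioContext(globalObj) {
  const AudioContextClass = getAudioContextClass(globalObj);
  if (AudioContextClass === null) {
    throw new Error('Web Audio API is not supported in this environment');
  }
  return new AudioContextClass();
}

// In a browser: const context = createAudioContext(window);
```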

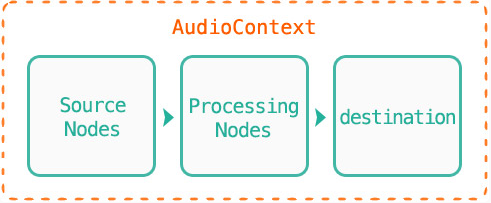


Sound source

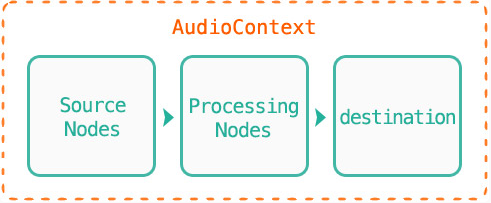


Sound-processing modules

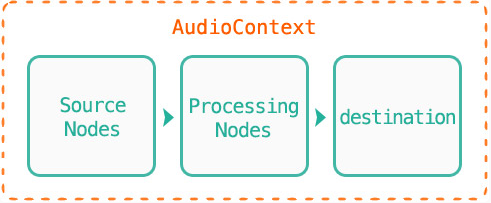


Output node
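The three roles (sound source, processing modules, output node) are wired together with `connect`. When the target is an AudioNode, `AudioNode.connect()` returns that target, so a chain can be built with `reduce`. This is a minimal sketch. `connectChain` is a hypothetical helper, and the browser usage in the comments assumes an `AudioContext` named `context`.

```javascript
// Hypothetical helper: source -> processors[0] -> ... -> destination.
// AudioNode.connect(node) returns `node`, so each call feeds the next one.
function connectChain(source, processors, destination) {
  const last = processors.reduce((prev, next) => prev.connect(next), source);
  last.connect(destination);
  return source;
}

// In a browser (sketch):
// const oscillator = context.createOscillator();              // sound source
// const gainNode = context.createGain();                      // processing module
// connectChain(oscillator, [gainNode], context.destination);  // output node
// oscillator.start();
```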

Node Modules

- GainNode // volume

- AudioParam // change of a value at a given point in time
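An AudioParam such as `GainNode.gain` can be scheduled to change at specific times. For `linearRampToValueAtTime`, the Web Audio specification defines the value between the previous event (value V0 at time T0) and the ramp's end (value V1 at time T1) as V0 + (V1 - V0) * (t - T0) / (T1 - T0). The sketch below schedules a fade-in and evaluates that formula in plain JavaScript. `scheduleFadeIn` and `linearRampValue` are hypothetical names.

```javascript
// Hypothetical helper: fade `param` (e.g. gainNode.gain) from 0 to 1.
// setValueAtTime gives the ramp an explicit start event (0 at startTime);
// linearRampToValueAtTime then interpolates to 1 at startTime + duration.
function scheduleFadeIn(param, startTime, duration) {
  param.setValueAtTime(0, startTime);
  param.linearRampToValueAtTime(1, startTime + duration);
}

// Value of a linear ramp at time t, per the spec formula above.
function linearRampValue(v0, t0, v1, t1, t) {
  if (t <= t0) return v0;
  if (t >= t1) return v1;
  return v0 + (v1 - v0) * ((t - t0) / (t1 - t0));
}

// In a browser (sketch):
// const gainNode = context.createGain();
// scheduleFadeIn(gainNode.gain, context.currentTime, 2); // 2-second fade-in
```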


Live DEMO!!!!

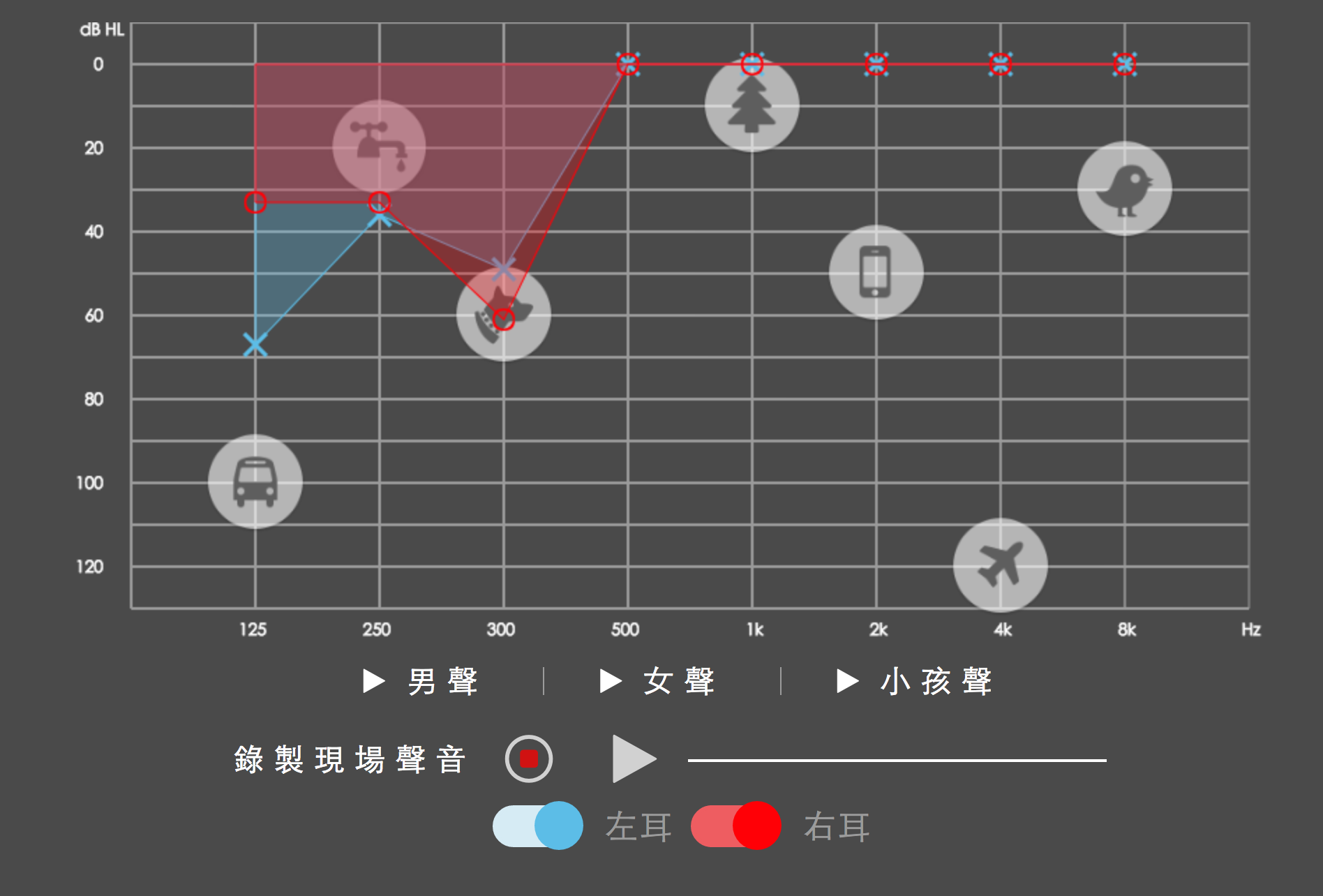

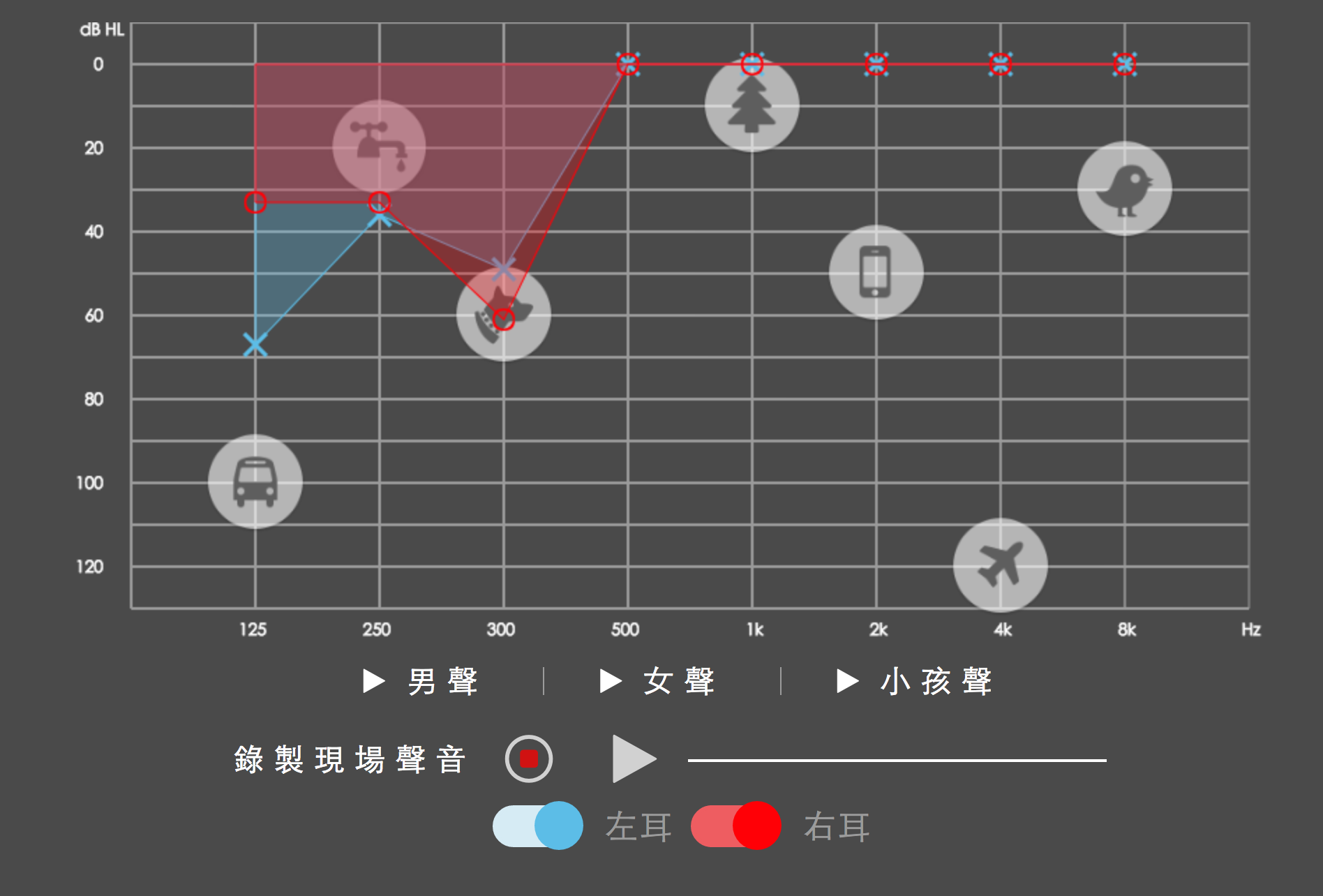

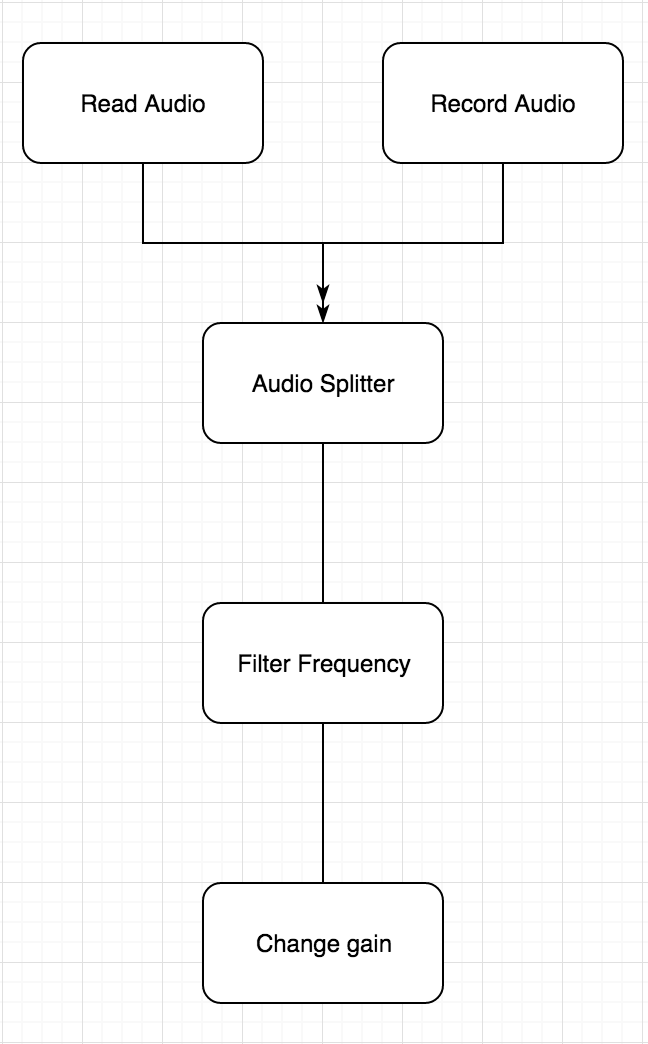

So what do we need?

- Control volume

- Split audio into left and right channels

- Filter frequencies

- Read .mp3 audio

- Record audio

Read Audio

```html
<audio controls autoplay>
  <source src="test.mp3">
</audio>
```

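```javascript
var audioCtx = new (window.AudioContext || window.webkitAudioContext)();
var myAudio = document.querySelector('audio');
var source = audioCtx.createMediaElementSource(myAudio);
// the element's output now goes through the graph,
// so connect it to the speakers or it will be silent
source.connect(audioCtx.destination);
```

```javascript
// url: address of an audio file; context: the AudioContext created earlier
fetch(url)
  .then(response => response.arrayBuffer())
  .then(data => context.decodeAudioData(data, audioBuffer => {
    // Play audio
    const source = context.createBufferSource();
    source.buffer = audioBuffer;
    source.connect(context.destination);
    source.start();
  }));
```

Record Audio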

- getUserMedia

- Stream

navigator.getUserMedia = (navigator.getUserMedia ||

navigator.mozGetUserMedia ||

navigator.msGetUserMedia ||

navigator.webkitGetUserMedia);

if (navigator.getUserMedia) {

const constraints = { audio: true };

navigator.getUserMedia(constraints, (stream) => {

source = context.createMediaStreamSource(stream);

// create a ScriptProcessorNode

if (!context.createScriptProcessor) {

node = context.createJavaScriptNode(BUFFER_SIZE, 1, 1);

} else {

node = context.createScriptProcessor(BUFFER_SIZE, 1, 1);

}

// listen to the audio data, and record into the buffer

node.onaudioprocess = (e) => {

const channel = e.inputBuffer.getChannelData(0);

const buffer = new Float32Array(BUFFER_SIZE);

for (let i = 0; i < BUFFER_SIZE; i++) {

buffer[i] = channel[i];

}

arrayBuffer.push(buffer);

};

// connect the ScriptProcessorNode with the input audio

source.connect(node);

node.connect(context.destination);

}, (e) => reject(e));

} else {

return false;

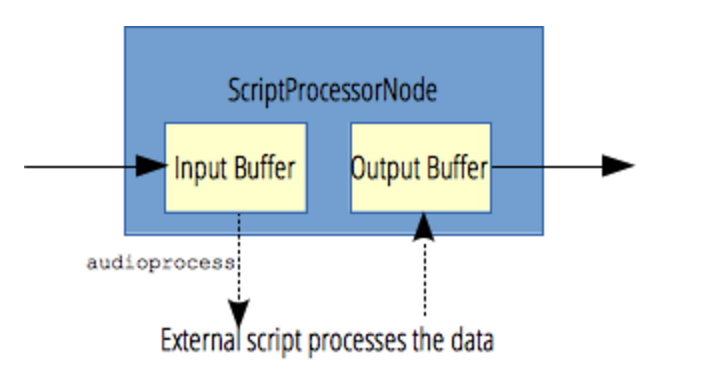

}ScriptProcessorNode
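The recording code depends on `BUFFER_SIZE`. `createScriptProcessor` accepts either 0 (let the browser choose) or a power of two from 256 to 16384. Each `onaudioprocess` call carries `bufferSize / sampleRate` seconds of audio, so a smaller size means lower latency but more callbacks. ScriptProcessorNode itself is deprecated in favor of AudioWorkletNode. Below is a small sketch of that arithmetic; the helper names are hypothetical.

```javascript
// Sizes createScriptProcessor accepts (0 lets the browser pick one).
const VALID_BUFFER_SIZES = [256, 512, 1024, 2048, 4096, 8192, 16384];

function isValidBufferSize(size) {
  return size === 0 || VALID_BUFFER_SIZES.includes(size);
}

// Seconds of audio delivered by one onaudioprocess call.
function blockDurationSeconds(bufferSize, sampleRate) {
  return bufferSize / sampleRate;
}

// Number of onaudioprocess calls (recorded chunks) needed to cover
// `seconds` of audio; the last chunk may run past the requested length.
function chunksForDuration(seconds, bufferSize, sampleRate) {
  return Math.ceil((seconds * sampleRate) / bufferSize);
}
```

At 44100 Hz, 4096 samples is about 93 ms per callback, and 256 samples is about 5.8 ms.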

Fill Audio Buffer

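```javascript
// recordedChunks: the Float32Array blocks collected by onaudioprocess
const frameCount = recordedChunks.length * BUFFER_SIZE;
const audioBuffer = context.createBuffer(2, frameCount, context.sampleRate);
const channel = audioBuffer.getChannelData(0);
const channel2 = audioBuffer.getChannelData(1);

// copy the mono recording into both the left and the right channel
for (let i = 0; i < recordedChunks.length; i++) {
  for (let j = 0; j < BUFFER_SIZE; j++) {
    channel[i * BUFFER_SIZE + j] = recordedChunks[i][j];
    channel2[i * BUFFER_SIZE + j] = recordedChunks[i][j];
  }
}
```

Audio Splitter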

var ac = new AudioContext();

ac.decodeAudioData(someStereoBuffer, function(data) {

var source = ac.createBufferSource();

var splitter = ac.createChannelSplitter(2);

var merger = ac.createChannelMerger(2);

source.buffer = data;

source.connect(splitter);

var gainNode = ac.createGain();

gainNode.gain.value = 0.5;

splitter.connect(gainNode, 0);

gainNode.connect(merger, 0, 1);

var dest = ac.createMediaStreamDestination();

merger.connect(dest);

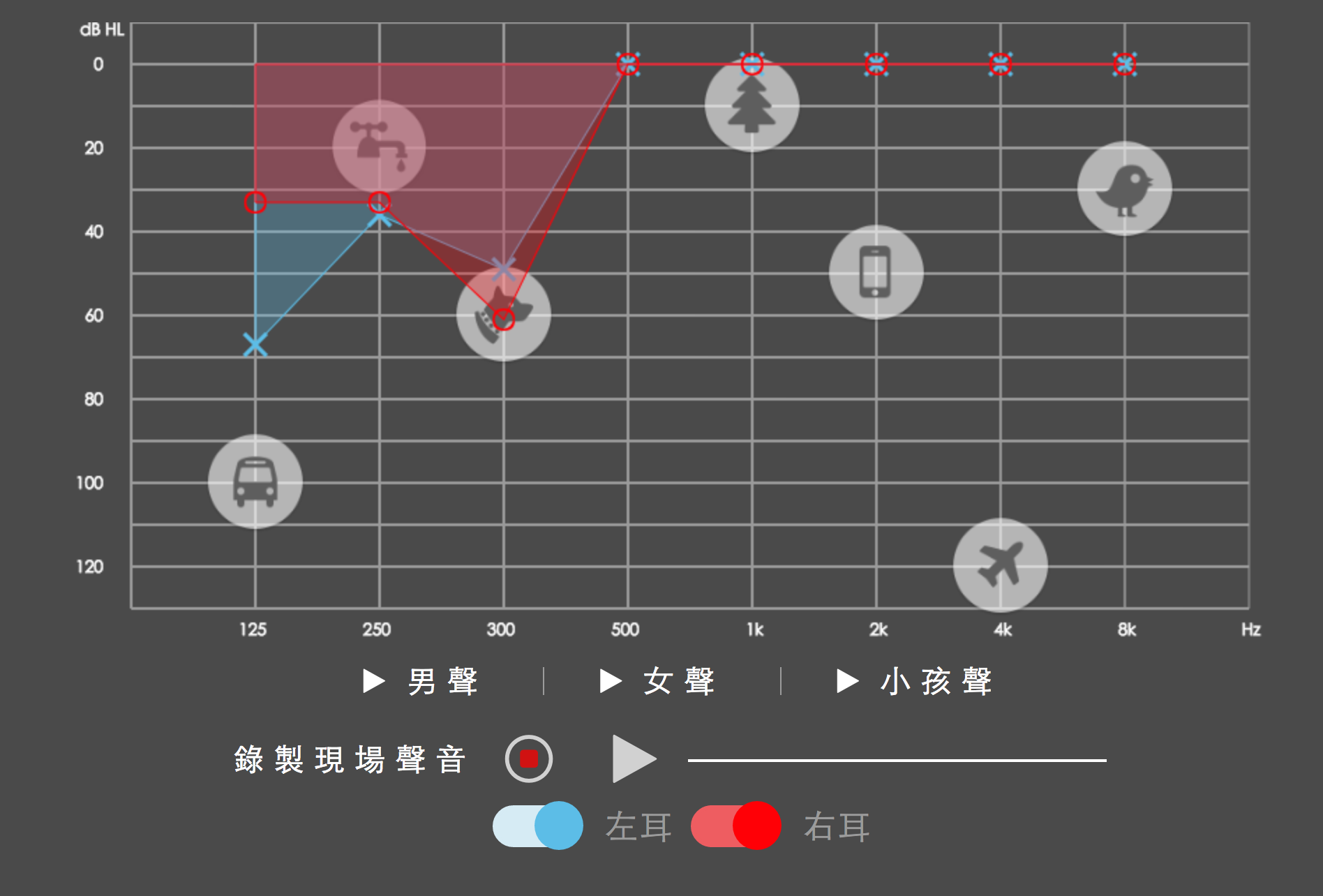

});BiquadFilterNode

- lowpass

- highpass

- bandpass: keeps only a band around the set frequency and filters out everything else

- ...

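```javascript
filter.type = 'bandpass';
filter.frequency.value = frequency;
```

```javascript
// inside a function that receives url, context and setting ({ left, right } lists)
return fetch(url)
  .then(response => response.arrayBuffer())
  .then(data => context.decodeAudioData(data))
  .then(audioBuffer => {
    // Play audio
    const source = context.createBufferSource();
    source.buffer = audioBuffer;
    source.loop = true;

    const splitter = context.createChannelSplitter(2);
    const merger = context.createChannelMerger(2);
    source.connect(splitter);

    // one filter + gain stage per setting on each side
    setting.left.forEach(set => bindSetting(context, splitter, merger, set, false));
    setting.right.forEach(set => bindSetting(context, splitter, merger, set, true));

    merger.connect(context.destination);
    return source; // not started yet: call source.start() to play
  });
```

```javascript
// signature inferred from the calls above; { frequency, gain } is the
// assumed shape of each setting entry
function bindSetting(ctx, splitter, merger, { frequency, gain }, right) {
  const filter = ctx.createBiquadFilter();
  const gainNode = ctx.createGain();
  filter.type = 'bandpass';
  filter.frequency.value = frequency;
  gainNode.gain.value = 2 - gain;

  // splitter output 1 -> bandpass filter -> gain -> merger input 0 (left) or 1 (right)
  splitter.connect(filter, 1);
  filter.connect(gainNode);
  gainNode.connect(merger, 0, right ? 1 : 0);
}
```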
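Each `bindSetting` stage applies a `bandpass` filter that keeps a band around `frequency` and attenuates the rest. The Web Audio specification derives the biquad coefficients from the Audio EQ Cookbook. This sketch computes them for a given frequency, Q and sample rate, assuming detune is 0, and evaluates the magnitude response in plain JavaScript. It shows that the center frequency passes at gain 1 (0 dB) while distant frequencies are attenuated. `bandpassCoefficients` and `magnitudeAt` are hypothetical names. In a browser, `BiquadFilterNode.getFrequencyResponse()` gives the same kind of curve directly.

```javascript
// Bandpass biquad coefficients (constant 0 dB peak gain), following the
// Audio EQ Cookbook formulas used by the Web Audio spec. Assumes detune = 0.
function bandpassCoefficients(frequency, Q, sampleRate) {
  const w0 = (2 * Math.PI * frequency) / sampleRate;
  const alpha = Math.sin(w0) / (2 * Q);
  return {
    b: [alpha, 0, -alpha],
    a: [1 + alpha, -2 * Math.cos(w0), 1 - alpha],
  };
}

// |H(e^{jw})| of a biquad at frequency f (Hz).
function magnitudeAt({ b, a }, f, sampleRate) {
  const w = (2 * Math.PI * f) / sampleRate;
  // Evaluate c[0] + c[1] e^{-jw} + c[2] e^{-2jw} as [real, imaginary].
  const evalPoly = (c) => [
    c[0] + c[1] * Math.cos(w) + c[2] * Math.cos(2 * w),
    -(c[1] * Math.sin(w) + c[2] * Math.sin(2 * w)),
  ];
  const [nr, ni] = evalPoly(b);
  const [dr, di] = evalPoly(a);
  return Math.hypot(nr, ni) / Math.hypot(dr, di);
}

// Example: a 1 kHz bandpass with the default Q of 1 at 44.1 kHz
const coeffs = bandpassCoefficients(1000, 1, 44100);
console.log(magnitudeAt(coeffs, 1000, 44100)); // ≈ 1 (center frequency passes)
console.log(magnitudeAt(coeffs, 100, 44100));  // ≈ 0.1 (one decade below is attenuated)
```

Raising Q narrows the band that passes.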