Horizontal Flows, Manifold Stochastics and Geometric Deep Learning

Faculty of Science, University of Copenhagen

Stefan Sommer and Alex Bronstein

Department of Computer Science, University of Copenhagen

Technion – Israel Institute of Technology

Curvature, convolutions and translation invariance

Geometric deep learning

deep learning with manifold target or manifold domain (Bronstein'17)

Objectives:

- relate curvature to the obstruction of translation invariance when parallel transporting kernels

- define a convolution operator that naturally incorporates curvature

- define an efficient manifold-valued convolution operator, generalizing Fréchet-mean-based operators

- exemplify applications of stochastics and bundle theory in geometric deep learning

Sommer, Bronstein: Horizontal Flows and Manifold Stochastics in Geometric Deep Learning. TPAMI, 2020, doi: 10.1109/TPAMI.2020.2994507

Convolutions on manifolds

- Euclidean: \(k\ast f(\mathbf x)=\int_{\mathbb R^d}k(-\mathbf v)f(\mathbf x+\mathbf v)d\mathbf v\)

- convolution in (pseudo-)coordinates, patch operator (Monti'16, Masci'15, Boscaini'16)

- is it possible to translate kernels? Can we remove the reliance on charts?
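In the flat case, kernels can be translated freely. Below is a minimal sketch that assumes a periodic 1-D grid and integer weights (our choice, so that equality checks are exact). The hypothetical helper `conv` implements the discrete analogue of \(k\ast f(\mathbf x)=\int k(-\mathbf v)f(\mathbf x+\mathbf v)d\mathbf v\). The checks confirm translation equivariance and that two kernels commute, which is exactly what fails once curvature enters.

```python
def conv(k, f):
    """Discrete periodic version of k*f(x) = sum_v k(-v) f(x + v).

    k maps an offset s to the weight k(s); f is a list over a periodic grid.
    Substituting s = -v gives k*f(x) = sum_s k(s) f(x - s).
    """
    n = len(f)
    return [sum(w * f[(x - s) % n] for s, w in k.items()) for x in range(n)]


def shift(f, a):
    """Translate a periodic signal by a: (shift f)(x) = f(x - a)."""
    n = len(f)
    return [f[(x - a) % n] for x in range(n)]


f = [0, 1, 4, 9, 3, 2, 7, 5]
k1 = {-1: 1, 0: 2, 1: 1}  # symmetric smoothing kernel
k2 = {0: 1, 1: -1}        # finite-difference kernel

# Translation equivariance: convolving a shifted signal equals shifting the result.
assert conv(k1, shift(f, 3)) == shift(conv(k1, f), 3)
# On a flat domain, convolutions commute.
assert conv(k2, conv(k1, f)) == conv(k1, conv(k2, f))
```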

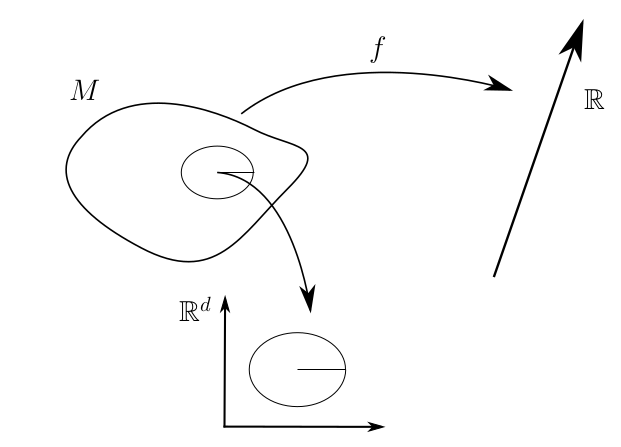

Holonomy obstructs translation invariance

- directional functions: \(f:M\times TM\to\mathbb R\) (Poulenard'18)

\[k\ast f(x,v)=\int_{T_xM}k_w(v)f(\overline{\mathrm{Exp}}_x(v))dv\]

- gauge equivariant convolution (Cohen'19):

\[k\ast f(x)=\int_{\mathbb R^d} k(\mathbf v)\rho_{x\leftarrow\mathrm{Exp}_x(u_x\mathbf v)}f(\mathrm{Exp}_x(u_x\mathbf v))d\mathbf v \]

- holonomy: parallel transport is path dependent

- rotation invariant kernels, orientation from e.g. curvature
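The path dependence of parallel transport is easy to see on the unit sphere. This sketch (helper names are ours) transports a tangent vector around the geodesic triangle that spans one octant. It relies on the fact that transport along a great-circle arc from a to b is the rotation about the axis a × b that carries a to b. The vector comes back rotated by the enclosed area π/2, as Gauss-Bonnet predicts for curvature K = 1. A kernel transported around the same loop would come back rotated in the same way.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(v, n, theta):
    """Rodrigues formula: rotate v about the unit axis n by the angle theta."""
    c, s = math.cos(theta), math.sin(theta)
    nxv, nv = cross(n, v), dot(n, v)
    return tuple(v[i] * c + nxv[i] * s + n[i] * nv * (1.0 - c) for i in range(3))


def transport_along_arc(w, a, b):
    """Parallel transport w from a to b along the shortest great circle of S^2.

    On the unit sphere this is the rotation about the axis a x b carrying a to b
    (assumes a and b are neither equal nor antipodal).
    """
    axis = cross(a, b)
    norm = math.sqrt(dot(axis, axis))
    axis = tuple(c / norm for c in axis)
    theta = math.acos(max(-1.0, min(1.0, dot(a, b))))
    return rotate(w, axis, theta)


def holonomy_angle(vertices, w):
    """Signed angle between w and its transport around the closed geodesic polygon."""
    w0 = w
    loop = list(vertices) + [vertices[0]]
    for a, b in zip(loop[:-1], loop[1:]):
        w = transport_along_arc(w, a, b)
    x0 = vertices[0]
    return math.atan2(dot(x0, cross(w0, w)), dot(w0, w))


# Geodesic triangle covering one octant: area 4*pi/8 = pi/2 on the unit sphere (K = 1).
octant = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
angle = holonomy_angle(octant, (1.0, 0.0, 0.0))
print(angle, math.pi / 2)  # Gauss-Bonnet: rotation angle = K * enclosed area
```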

Curvature and convolutions

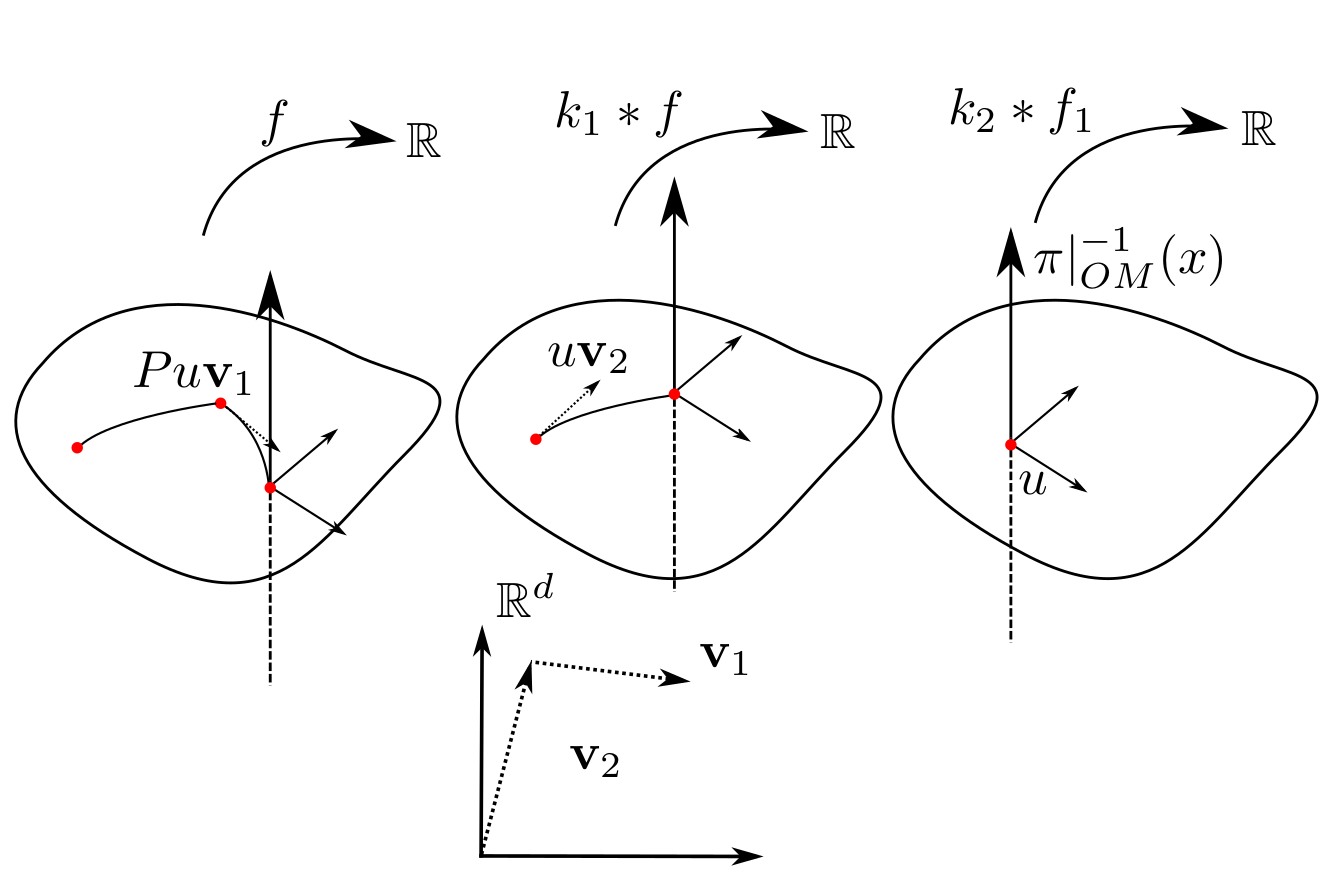

Directional functions: \(f:OM\to\mathbb R\)

\[k\ast f(u)=\int_{\mathbb R^d}k(-\mathbf v)f(P_{\gamma(x,u\mathbf v)}(u))d\mathbf v\]

\(k_1,k_2\) kernels with \(\mathrm{supp}(k_i)\subseteq B_r(0)\), and \(f\in C^3(OM,\mathbb R)\)

Riemannian curvature: \(R(v,w)=-\mathcal{C}([h_u(v),h_u(w)])\)

Theorem:

Non-commutativity:

\(k_2\ast (k_1\ast f)-k_1\ast (k_2\ast f) =\)

\(\int_{\mathbb R^d} \int_{\mathbb R^d} k_2(-\mathbf v_2)k_1(-\mathbf v_1) [h_u(u\mathbf v_2),h_u(u\mathbf v_1)]f d\mathbf v_1 d\mathbf v_2 + o(r^{d+1})\)

+ non-associativity
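The link between brackets of horizontal fields and curvature can be checked numerically on the unit sphere. This is our own illustration, with hypothetical function names. It assumes \(OM\) for \(M=S^2\) is embedded in \(\mathbb R^9\) as triples (x, e1, e2). It uses the horizontal fields H_1 = (e1, -x, 0) and H_2 = (e2, 0, -x), which move x along e_i while keeping each e_j parallel (de_j = -x⟨e_j, dx⟩). A finite-difference Lie bracket shows that [H_1, H_2] has no x-component, so it is vertical. Its frame part (e2, -e1) is an infinitesimal rotation of the frame at unit rate, consistent with Gaussian curvature K = 1. The sign depends on the bracket and curvature conventions.

```python
import math


def H1(p):
    """H_1 at p = (x, e1, e2) flattened in R^9: move x along e1, keep the frame parallel."""
    x, e1 = p[0:3], p[3:6]
    return e1 + tuple(-c for c in x) + (0.0, 0.0, 0.0)


def H2(p):
    """H_2 at p: move x along e2, keep the frame parallel."""
    x, e2 = p[0:3], p[6:9]
    return e2 + (0.0, 0.0, 0.0) + tuple(-c for c in x)


def directional_derivative(Y, p, v, h=1e-5):
    """Central-difference approximation of DY(p)[v]."""
    plus = Y(tuple(a + h * b for a, b in zip(p, v)))
    minus = Y(tuple(a - h * b for a, b in zip(p, v)))
    return tuple((a - b) / (2 * h) for a, b in zip(plus, minus))


def lie_bracket(X, Y, p):
    """[X, Y](p) = DY(p)[X(p)] - DX(p)[Y(p)] for vector fields on R^9."""
    a = directional_derivative(Y, p, X(p))
    b = directional_derivative(X, p, Y(p))
    return tuple(ai - bi for ai, bi in zip(a, b))


t = 0.3  # arbitrary rotation of the frame at the north pole
x = (0.0, 0.0, 1.0)
e1 = (math.cos(t), math.sin(t), 0.0)
e2 = (-math.sin(t), math.cos(t), 0.0)
bracket = lie_bracket(H1, H2, x + e1 + e2)
print([round(c, 6) for c in bracket])  # x-part 0, frame part (e2, -e1)
```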

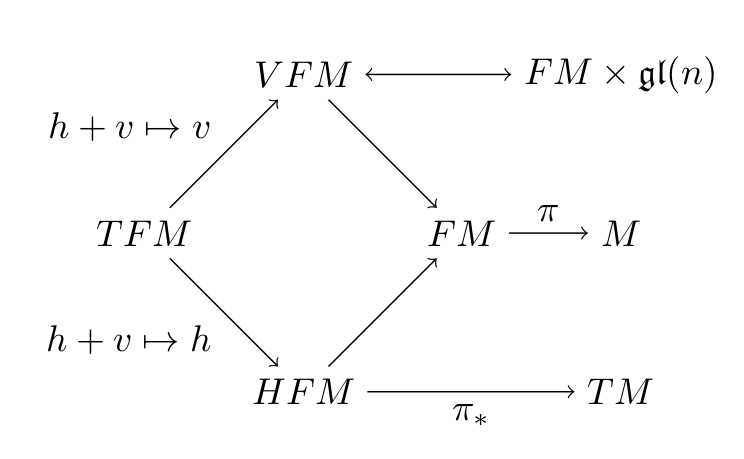

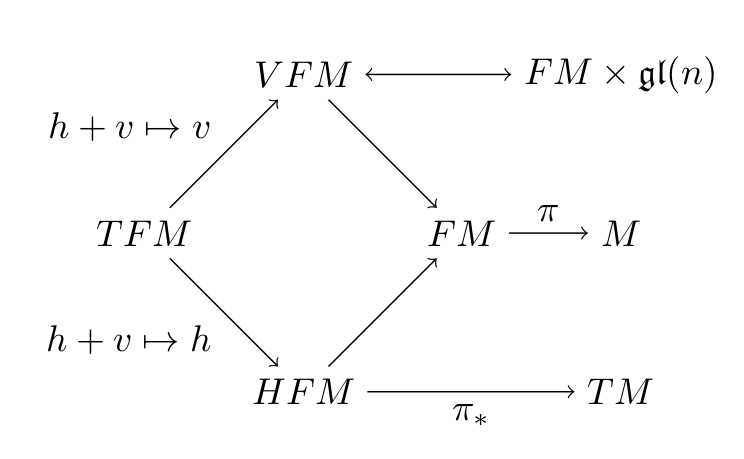

Frame bundles

- \(\pi:FM\to M\) is the bundle of linear frames, i.e. \(u=(u_1,\ldots,u_d)\in FM\) is an ordered basis for \(T_xM\), \(x=\pi(u)\)

- \(FM\) is a \(\mathrm{GL}(d)\) principal bundle: \(u:\mathbb R^d\to T_x M\) linear, invertible

- \(OM\) the subbundle of orthonormal frames (orientations)

- horizontal lift \(h_u:T_xM\to H_uFM\) and fields: \(H_i:FM\to TFM\)

\(h_u=(\pi_*|_{H_uFM})^{-1}\)

\(H_i(u)=h_u(ue_i)\)
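The horizontal fields can be made concrete on the unit sphere. In this sketch (our own; helper names are hypothetical), a frame in \(OM\) for \(M=S^2\) is an embedded triple u = (x, e1, e2). The field \(\sum_i v_iH_i(u)\) moves x with velocity \(u\mathbf v\), so \(\pi_*H_i(u)=ue_i\). At the same time it keeps each frame vector parallel, which on the embedded sphere means de_j = -x⟨e_j, dx⟩. Integrating this flow for unit time reproduces the transported frame \(P_{\gamma(x,u\mathbf v)}(u)\) used in the convolution. The sphere admits a closed form for that frame, which the RK4 integration is compared against.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def comb(*pairs):
    """Linear combination sum_k c_k v_k of 3-vectors given as (c_k, v_k) pairs."""
    return tuple(sum(c * v[i] for c, v in pairs) for i in range(3))


def horizontal_field(u, v):
    """sum_i v_i H_i(u) at the frame u = (x, e1, e2) of the unit sphere in R^3.

    The x-part is u v = v1 e1 + v2 e2; keeping each e_j parallel along x forces
    de_j = -x <e_j, dx> = -v_j x.
    """
    x, e1, e2 = u
    return (comb((v[0], e1), (v[1], e2)), comb((-v[0], x)), comb((-v[1], x)))


def flow(u, v, n_steps=200):
    """Integrate du/dt = sum_i v_i H_i(u) over t in [0, 1] with classical RK4."""
    h = 1.0 / n_steps

    def shifted(u, k, c):
        return tuple(comb((1.0, a), (c, b)) for a, b in zip(u, k))

    for _ in range(n_steps):
        k1 = horizontal_field(u, v)
        k2 = horizontal_field(shifted(u, k1, h / 2), v)
        k3 = horizontal_field(shifted(u, k2, h / 2), v)
        k4 = horizontal_field(shifted(u, k3, h), v)
        u = tuple(comb((1.0, a), (h / 6, b1), (h / 3, b2), (h / 3, b3), (h / 6, b4))
                  for a, b1, b2, b3, b4 in zip(u, k1, k2, k3, k4))
    return u


def transport_frame(u, v):
    """Closed form of P_{gamma(x, u v)}(u) on S^2 (assumes v != 0)."""
    x, e1, e2 = u
    w = comb((v[0], e1), (v[1], e2))
    s = math.sqrt(dot(w, w))
    wh = comb((1.0 / s, w))
    c, sn = math.cos(s), math.sin(s)

    def move(e):
        a = dot(e, wh)  # only the component along the direction of travel turns
        return comb((1.0, e), (a * (c - 1.0), wh), (-a * sn, x))

    return (comb((c, x), (sn, wh)), move(e1), move(e2))


u0 = ((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
v = (0.7, -0.4)
numeric, exact = flow(u0, v), transport_frame(u0, v)
err = max(abs(p - q) for a, b in zip(numeric, exact) for p, q in zip(a, b))
print(err)  # RK4 flow of the horizontal field matches the closed-form transport
```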


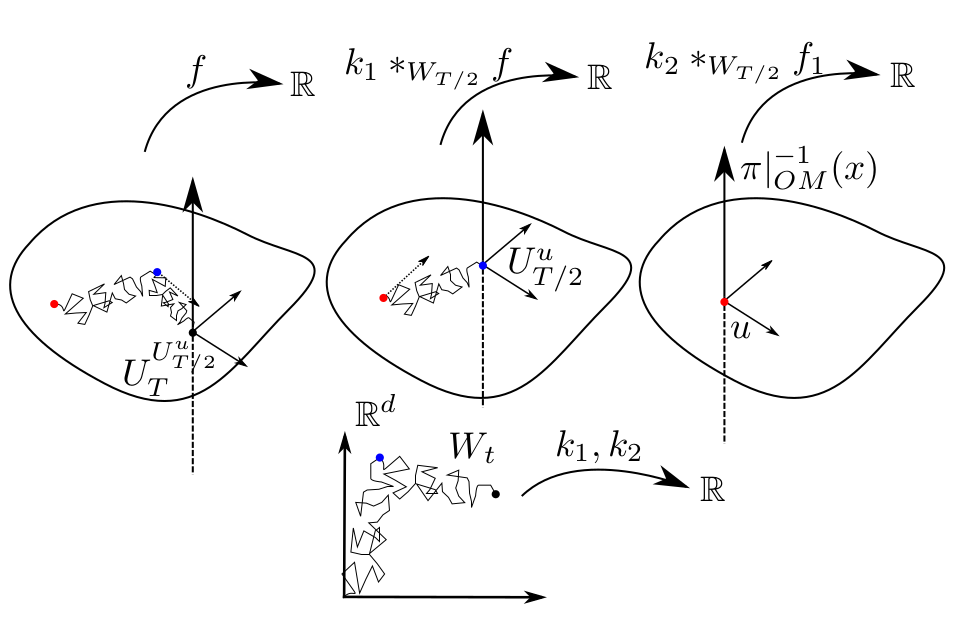

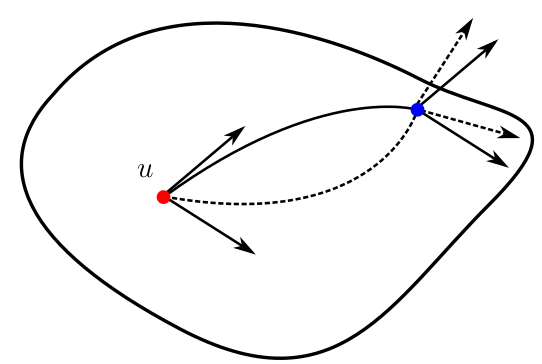

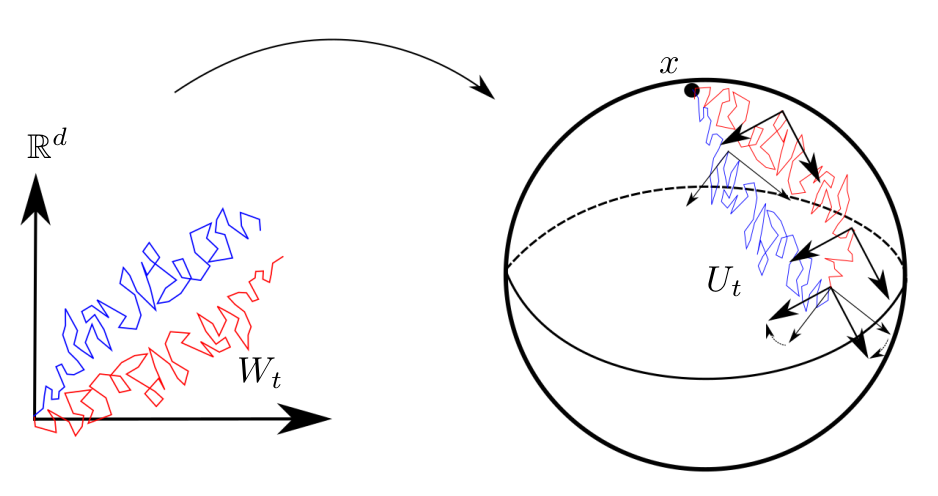

Transporting along all paths

Stochastic development:

\(dU_t=\sum_{i=1}^d H_i\circ_{\mathcal S} dW_t^i\)

\(W_t\) Euclidean Brownian motion

\(X_t=\pi(U_t)\) Riemannian Brownian motion

\(U_t\) gives stochastic parallel transport along \(X_t\)

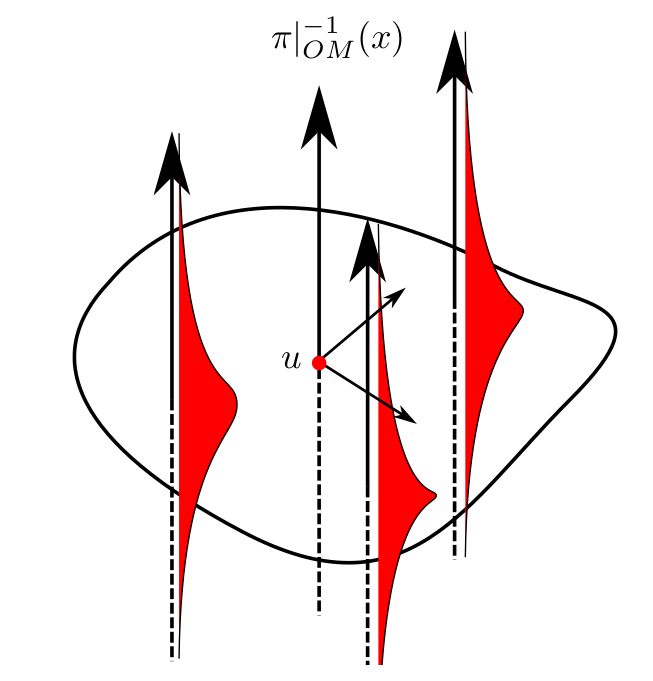

Fix \(T>0\): \(U_T\) has a probability distribution on \(FM\)

... as opposed to geodesics only

Need a measure on the path space \(W([0,T],M)\)
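Here is one simple way to sample the stochastic development on the unit sphere, using our own choice of discretization. A frame is an embedded triple (x, e1, e2). For each Brownian increment \(\Delta W\), the step follows the flow of \(\sum_i\Delta W^iH_i\) for unit time. This moves x along the geodesic with initial velocity \(u\Delta W\) and parallel transports the frame in closed form, so the path stays exactly on \(OM\). This geodesic-step scheme is only one of several discretizations of the Stratonovich SDE, and the function names are hypothetical. As a check, \(X_T=\pi(U_T)\) should behave like Brownian motion, for which \(\mathrm E\langle X_T,x_0\rangle=e^{-T}\) on the unit sphere. This holds because the coordinate functions are eigenfunctions of the Laplacian with eigenvalue -2, and Brownian motion has generator \(\frac12\Delta\).

```python
import math
import random


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def comb(*pairs):
    """Linear combination sum_k c_k v_k of 3-vectors given as (c_k, v_k) pairs."""
    return tuple(sum(c * v[i] for c, v in pairs) for i in range(3))


def flow_step(u, dw):
    """Follow the flow of sum_i dw_i H_i for unit time from u = (x, e1, e2).

    x moves along the geodesic with initial velocity u dw and the frame is
    parallel transported, so the result stays exactly on O(S^2).
    """
    x, e1, e2 = u
    w = comb((dw[0], e1), (dw[1], e2))
    s = math.sqrt(dot(w, w))
    if s == 0.0:
        return u
    wh = comb((1.0 / s, w))
    c, sn = math.cos(s), math.sin(s)

    def move(e):
        a = dot(e, wh)  # only the component along the direction of travel turns
        return comb((1.0, e), (a * (c - 1.0), wh), (-a * sn, x))

    return (comb((c, x), (sn, wh)), move(e1), move(e2))


def stochastic_development(u, T, n_steps, rng):
    """Approximate dU_t = sum_i H_i o dW_t^i on O(S^2); returns (U_T, W_T)."""
    sd = math.sqrt(T / n_steps)
    W = (0.0, 0.0)
    for _ in range(n_steps):
        dw = (rng.gauss(0.0, sd), rng.gauss(0.0, sd))
        u = flow_step(u, dw)
        W = (W[0] + dw[0], W[1] + dw[1])
    return u, W


rng = random.Random(0)
u0 = ((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
T = 0.5
paths = [stochastic_development(u0, T, 40, rng) for _ in range(2000)]
# X_T = pi(U_T) approximates Brownian motion on S^2, so E<X_T, x_0> should be near exp(-T).
mean_cos = sum(dot(U[0], u0[0]) for U, _ in paths) / len(paths)
print(mean_cos, math.exp(-T))
```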

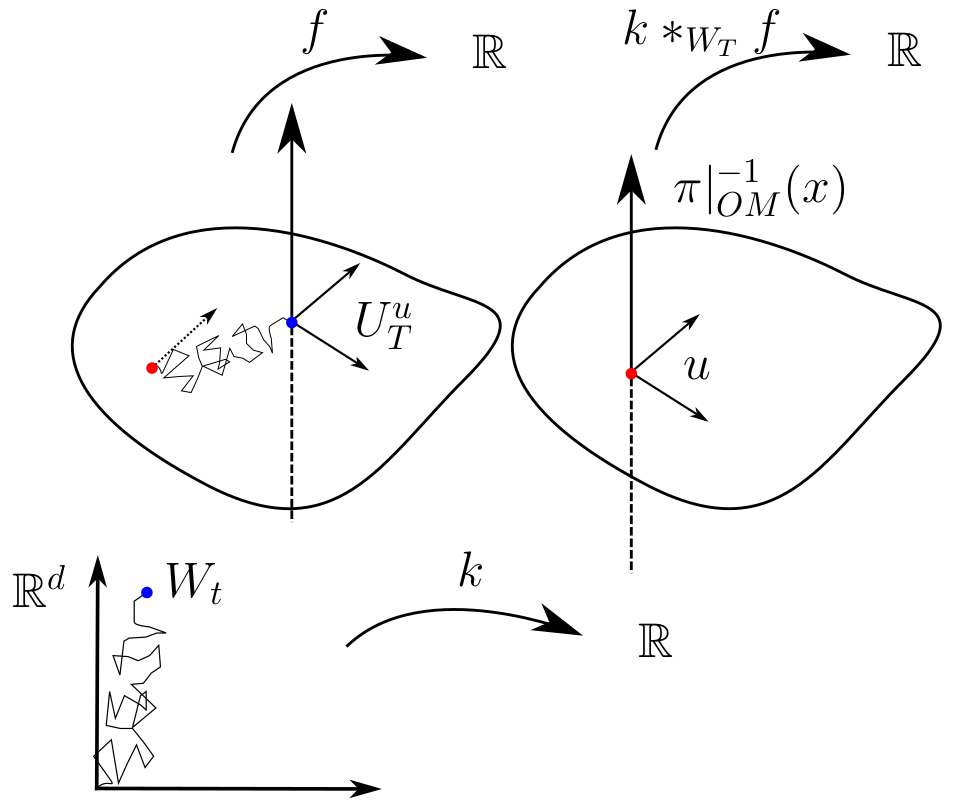

New convolution operator

\(k\ast_{W_T} f(u)=\int k(-W_T)f(U_T^u)\mathbb P(dW_t)=\mathrm{E}[k(-W_T)f(U_T^u)]\)

- orientation function: \(f:OM\to\mathbb R\)

- kernel: \(k:\mathbb R^d\to\mathbb R\)

- \(W_t\) Euclidean Brownian motion

- \(U_t\) \(OM\)-development of \(W_t\)

- link between \(W_t\) and \(U_t\): stochastic development
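The operator \(k\ast_{W_T} f(u)=\mathrm{E}[k(-W_T)f(U_T^u)]\) can be estimated as a plain Monte Carlo average over sampled paths. This sketch assumes \(M=S^2\) with frames embedded as (x, e1, e2). Each time step follows the geodesic with velocity \(u\Delta W\) and parallel transports the frame in closed form, which is our choice of discretization. The kernels and orientation functions (`height`, `tilt`, `directional`) are hypothetical examples. Because f sees the whole frame \(U_T\), an anisotropic kernel combined with an orientation-dependent f picks up directional information.

```python
import math
import random


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def comb(*pairs):
    """Linear combination sum_k c_k v_k of 3-vectors given as (c_k, v_k) pairs."""
    return tuple(sum(c * v[i] for c, v in pairs) for i in range(3))


def flow_step(u, dw):
    """Geodesic step with velocity u dw; the frame u = (x, e1, e2) is parallel transported."""
    x, e1, e2 = u
    w = comb((dw[0], e1), (dw[1], e2))
    s = math.sqrt(dot(w, w))
    if s == 0.0:
        return u
    wh = comb((1.0 / s, w))
    c, sn = math.cos(s), math.sin(s)

    def move(e):
        a = dot(e, wh)
        return comb((1.0, e), (a * (c - 1.0), wh), (-a * sn, x))

    return (comb((c, x), (sn, wh)), move(e1), move(e2))


def stochastic_conv(k, f, u, T=0.5, n_paths=1000, n_steps=40, seed=0):
    """Monte Carlo estimate of k *_{W_T} f(u) = E[k(-W_T) f(U_T^u)] on O(S^2).

    k: R^2 -> R is the kernel, f: O(S^2) -> R an orientation function, and
    U_T^u the stochastic development of the Euclidean Brownian motion W_t.
    """
    rng = random.Random(seed)
    sd = math.sqrt(T / n_steps)
    total = 0.0
    for _ in range(n_paths):
        U, W = u, (0.0, 0.0)
        for _ in range(n_steps):
            dw = (rng.gauss(0.0, sd), rng.gauss(0.0, sd))
            U = flow_step(U, dw)
            W = (W[0] + dw[0], W[1] + dw[1])
        total += k((-W[0], -W[1])) * f(U)
    return total / n_paths


def height(U):       # depends only on the base point x = pi(U)
    return U[0][2]


def tilt(U):         # z-component of e1: depends on the orientation
    return U[1][2]


def constant(v):
    return 1.0


def directional(v):  # anisotropic kernel: k(-W_T) = W_T^1
    return -v[0]


u0 = ((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
est_height = stochastic_conv(constant, height, u0)  # close to exp(-T) for Brownian motion
est_tilt = stochastic_conv(directional, tilt, u0)   # expected negative: e1_z is roughly -W_T^1
print(est_height, math.exp(-0.5), est_tilt)
```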

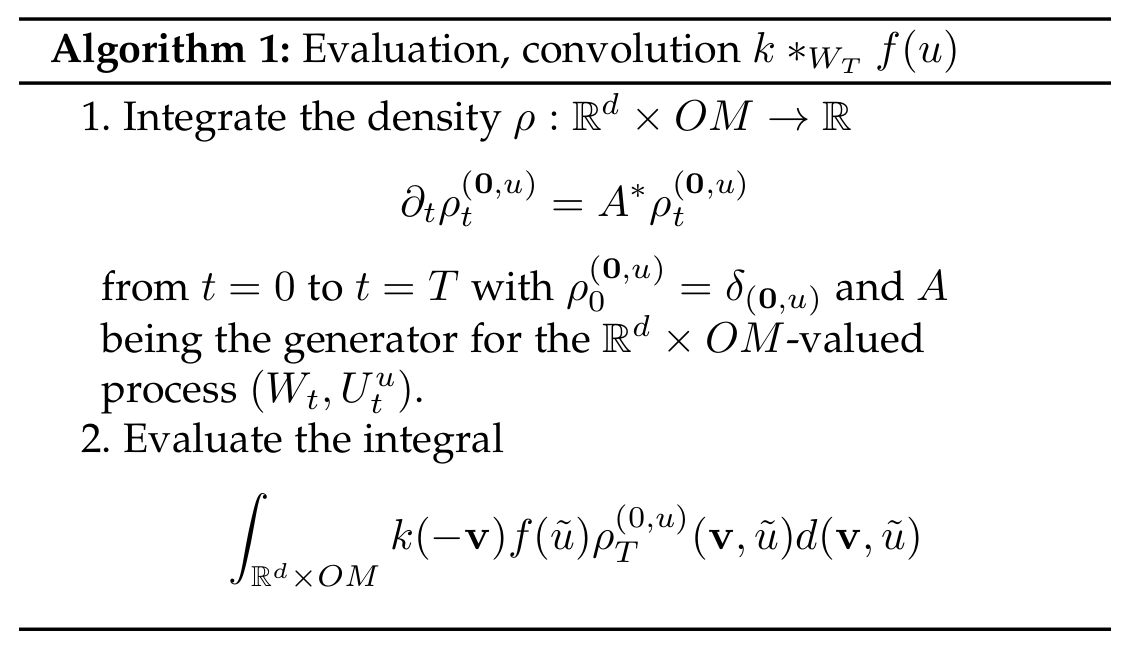

Properties and Algorithm

- smooth, global support

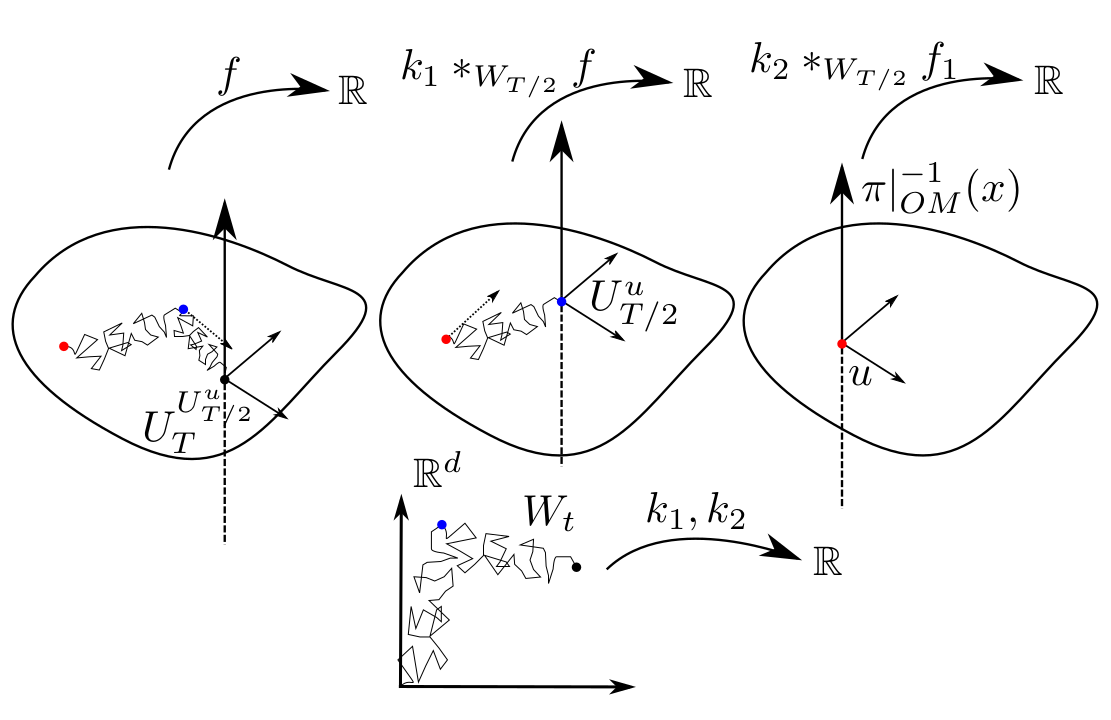

- Composition:

\(k_2\ast_{W_{T/2}} (k_1\ast_{W_{T/2}} f)(u)=\mathrm{E}[k_2(-W_{T/2})k_1(-(W_T-W_{T/2}))f(U_T^u)]\)

- Tensors: \(k^n_m\), \(f:OM\to\mathbb R^m\)

\(y^n=\mathrm{E}[k^n(-W_T)f(U_T^u)]\)

- Equivariance: \(a\in O(d)\)

\(k\ast_{W_T} (a.f)(u)=a.(k\ast_{W_T} f)(u)\)

- Non-linearities \(\phi_i\):

\(\phi_n(k_n\ast_{W_{T/n}} \phi_{n-1}(\cdots \phi_1(k_1\ast_{W_{T/n}} f)\cdots))(u)\)

- precomputed density \(\rho\)
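In flat space the development is simply \(U_T^x=x+W_T\) with a constant frame, so the composition rule can be checked in closed form. This sketch is our own example on \(\mathbb R\), with the hypothetical kernel \(k(v)=-v\). It estimates a single layer, whose exact value is \(\mathrm E[W_T(x+W_T)]=T\). It also estimates a two-layer composition sampled along one path split at \(T/2\). For \(f(y)=y^2\), the inner layer gives \((k_1\ast_{W_{T/2}}f)(y)=yT\), and the outer layer then gives \(T^2/2\). With non-linearities \(\phi_i\) between layers, the expectations no longer merge into one, so each layer needs its own samples.

```python
import random


def flat_conv(k, f, x, T, n_paths, rng):
    """k *_{W_T} f(x) = E[k(-W_T) f(x + W_T)] on the flat line, where U_T^x = x + W_T."""
    sd = T ** 0.5
    total = 0.0
    for _ in range(n_paths):
        w = rng.gauss(0.0, sd)
        total += k(-w) * f(x + w)
    return total / n_paths


def flat_conv_composed(k2, k1, f, x, T, n_paths, rng):
    """k2 *_{W_{T/2}} (k1 *_{W_{T/2}} f)(x) sampled along one path split at T/2:
    E[k2(-W_{T/2}) k1(-(W_T - W_{T/2})) f(x + W_T)].
    """
    sd = (T / 2) ** 0.5
    total = 0.0
    for _ in range(n_paths):
        a = rng.gauss(0.0, sd)  # W_{T/2}
        b = rng.gauss(0.0, sd)  # W_T - W_{T/2}, independent of a
        total += k2(-a) * k1(-b) * f(x + a + b)
    return total / n_paths


def k(v):
    return -v  # hypothetical kernel, so k(-W) = W


rng = random.Random(1)
T, x = 1.0, 0.3
single = flat_conv(k, lambda y: y, x, T, 200_000, rng)                    # exact value: T
composed = flat_conv_composed(k, k, lambda y: y * y, x, T, 200_000, rng)  # exact value: T^2 / 2
print(single, composed)
```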

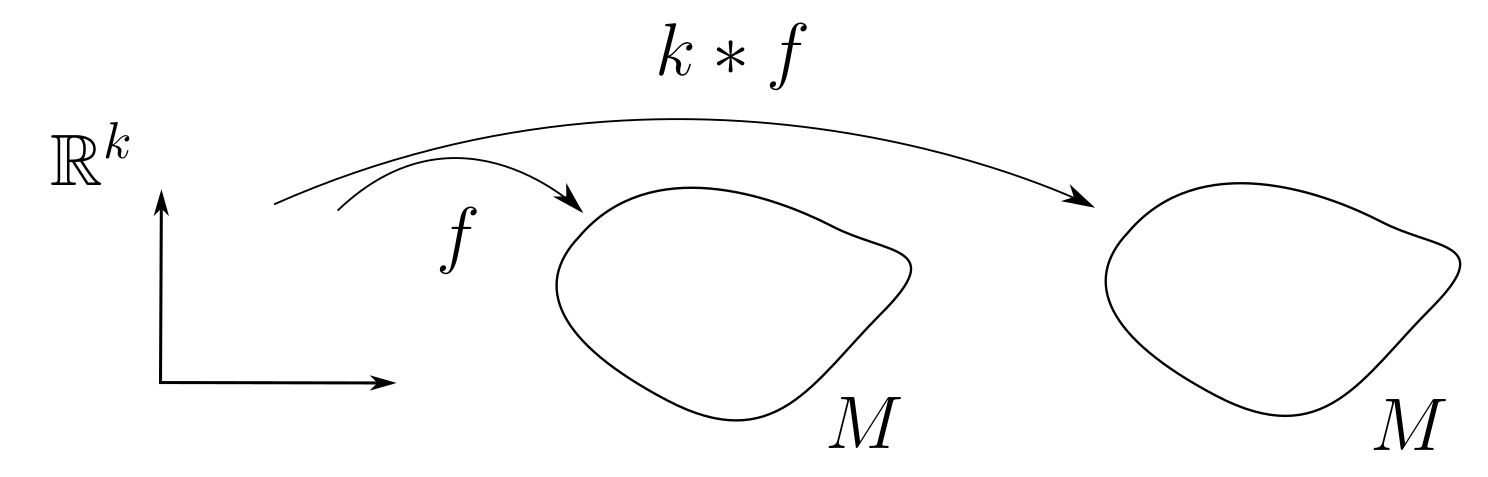

Sampling means

Manifold target:

Euclidean convolution:

\[k\ast f(\mathbf x)=\operatorname*{arg\,min}_{\mathbf y\in\mathbb R}\mathrm E[k(\mathbf x-\mathbf z)\|\mathbf y-f(\mathbf z)\|^2]\]

conv. from weighted Fréchet mean: (Pennec'06/Chakraborty'19/'20)

\[k\ast f(x)=\operatorname*{arg\,min}_{y\in M}\mathrm E[k(x,z)d(y,f(z))^2]\]

kernel: \(k:M\times M\to\mathbb R\), \(\mathrm E[k(x,\cdot)]=1\)
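Here is a minimal sketch of the weighted Fréchet mean on the unit sphere. It assumes sampled kernel weights \(w_i\approx k(x,z_i)\) and data points \(p_i=f(z_i)\) lying in a small region, so that the mean is unique. It uses the Karcher fixed-point iteration \(y\leftarrow\mathrm{Exp}_y(\sum_iw_i\mathrm{Log}_y p_i/\sum_iw_i)\), which is Riemannian gradient descent with unit step on the weighted squared distance. This is a standard choice and not necessarily the solver used in the cited works. The function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def sphere_log(y, p):
    """Log map of the unit sphere: tangent vector at y of length d(y, p) pointing to p."""
    c = max(-1.0, min(1.0, dot(y, p)))
    v = tuple(pk - c * yk for pk, yk in zip(p, y))  # part of p orthogonal to y
    n = math.sqrt(dot(v, v))
    if n < 1e-15:
        return (0.0, 0.0, 0.0)  # p == y (antipodal points are not handled)
    theta = math.acos(c)
    return tuple(theta * vk / n for vk in v)


def sphere_exp(y, v):
    """Exp map of the unit sphere: follow the geodesic from y with velocity v for unit time."""
    s = math.sqrt(dot(v, v))
    if s < 1e-15:
        return y
    return tuple(math.cos(s) * yk + math.sin(s) * vk / s for yk, vk in zip(y, v))


def weighted_frechet_mean(points, weights, y0, n_iter=100, tol=1e-12):
    """argmin_y sum_i w_i d(y, p_i)^2 via y <- Exp_y(sum_i w_i Log_y(p_i) / sum_i w_i)."""
    total = sum(weights)
    y = y0
    for _ in range(n_iter):
        step = [0.0, 0.0, 0.0]
        for p, w in zip(points, weights):
            lg = sphere_log(y, p)
            for i in range(3):
                step[i] += w * lg[i] / total
        y = sphere_exp(y, tuple(step))
        if math.sqrt(dot(step, step)) < tol:
            break
    return y


# Two equator points a quarter turn apart with weights 1 and 3: the weighted mean lies
# 3/4 of the way along the connecting geodesic, at longitude 3*pi/8.
mean = weighted_frechet_mean([(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [1.0, 3.0], (1.0, 0.0, 0.0))
print(mean, (math.cos(3 * math.pi / 8), math.sin(3 * math.pi / 8), 0.0))
```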

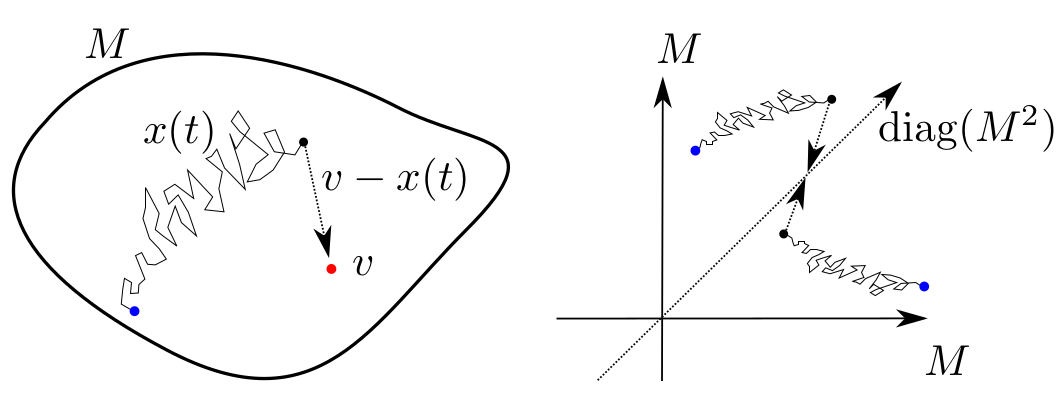

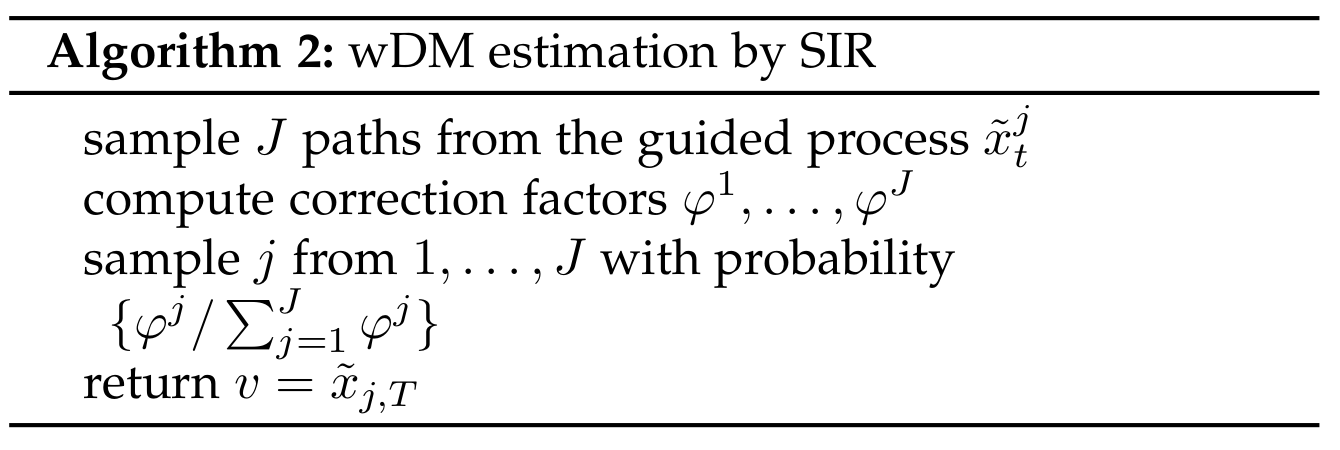

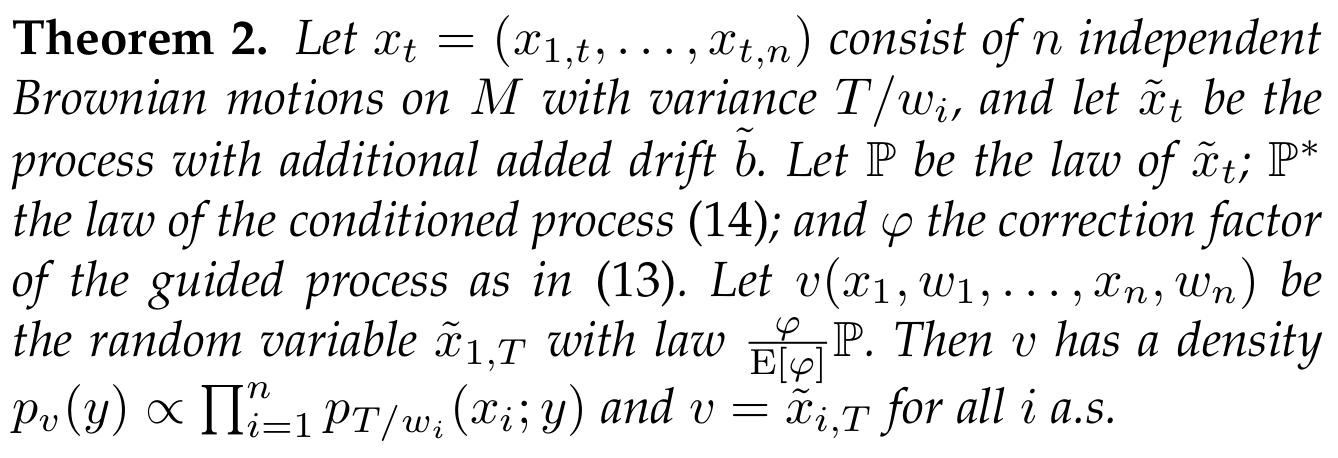

Bridge sampling to diagonal

- weighted diffusion mean (Hansen'21):

\[k\ast f(x)=\operatorname*{arg\,max}_{y\in M}\mathrm E[\log p_{T/k(x,z)}(f(z);y)]\]

- \(p_T(x,\cdot)\) density of Brownian motion started at \(x\), at time \(T>0\)

- \(p_T(x,v)\) and \(k\ast f(x)\) can be approximated by bridge sampling:

bridge sampling: target \(v\)

bridge sampling: target \(\mathrm{diag}(M^2)\)
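As a sanity check on the weighted diffusion mean, here is a short derivation under our own assumptions. We take \(M=\mathbb R^d\), a kernel with \(k(x,z)>0\) and \(\mathrm E[k(x,\cdot)]=1\), and \(p_t(\mathbf a;\mathbf y)\) the Gaussian density of Brownian motion started at \(\mathbf y\). Shrinking the time to \(T/k(x,z)\) acts as a weight \(k(x,z)\) on the squared distance, and the estimator reduces to the Euclidean convolution written as a weighted least-squares problem:

\[p_t(\mathbf a;\mathbf y)=(2\pi t)^{-d/2}\exp\Big(-\frac{\|\mathbf a-\mathbf y\|^2}{2t}\Big)\quad\Rightarrow\quad\log p_{T/k(x,z)}(f(z);\mathbf y)=-\frac d2\log\frac{2\pi T}{k(x,z)}-\frac{k(x,z)}{2T}\|f(z)-\mathbf y\|^2\]

The first term does not depend on \(\mathbf y\), so setting the gradient of the second term to zero gives

\[\operatorname*{arg\,max}_{\mathbf y}\mathrm E[\log p_{T/k(x,z)}(f(z);\mathbf y)]=\operatorname*{arg\,min}_{\mathbf y}\mathrm E[k(x,z)\|f(z)-\mathbf y\|^2]=\frac{\mathrm E[k(x,z)f(z)]}{\mathrm E[k(x,z)]}=\mathrm E[k(x,z)f(z)]\]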

Algorithm

Stochasticity analogous to deep Gaussian process NNs (Gal'16): \[\mathbf y|\mathbf x,w\sim\mathcal{N}(\hat{y}(\mathbf x,w),\tau^{-1})\]

Horizontal Flows, Manifold Stochastics

- curvature obstructs translation invariance

- measures on path spaces integrate this out

- manifold-valued operators can be sampled

- bundle theory and stochastics can inspire deep learning algorithms

code: http://bitbucket.com/stefansommer/jaxgeometry

slides: https://slides.com/stefansommer

References:

- Sommer, Bronstein: Horizontal Flows and Manifold Stochastics in Geometric Deep Learning, TPAMI, 2020, doi: 10.1109/TPAMI.2020.2994507

- Hansen, Eltzner, Huckemann, Sommer: Diffusion Means on Riemannian Manifolds, in preparation, 2020.

- Højgaard Jensen, Sommer: Simulation of Conditioned Diffusions on Riemannian Manifolds, in preparation, 2020.

- Sommer: Anisotropic Distributions on Manifolds: Template Estimation and Most Probable Paths, IPMI 2015, doi: 10.1007/978-3-319-19992-4_15.

- Sommer, Svane: Modelling Anisotropic Covariance using Stochastic Development and Sub-Riemannian Frame Bundle Geometry, JoGM, 2017, arXiv:1512.08544.

- Sommer: Anisotropically Weighted and Nonholonomically Constrained Evolutions, Entropy, 2017, arXiv:1609.00395.