GerryChain.jl

aka

motivation

neato: Julia "feels like Python, runs like C"

using GerryChain

SHAPEFILE_PATH = "./PA_VTD.json"

POPULATION_COL = "TOT_POP"

ASSIGNMENT_COL = "538GOP_PL"

# Initialize graph and partition

graph = BaseGraph(SHAPEFILE_PATH, POPULATION_COL, ASSIGNMENT_COL)

partition = Partition(graph, ASSIGNMENT_COL)

# Define parameters of chain (number of steps and population constraint)

pop_constraint = PopulationConstraint(graph, POPULATION_COL, 0.02)

num_steps = 10

# Initialize Election of interest

election = Election("SEN10", ["SEN10D", "SEN10R"], graph.num_dists)

# Define election-related metrics and scores

election_metrics = [

vote_count("count_d", election, "SEN10D"),

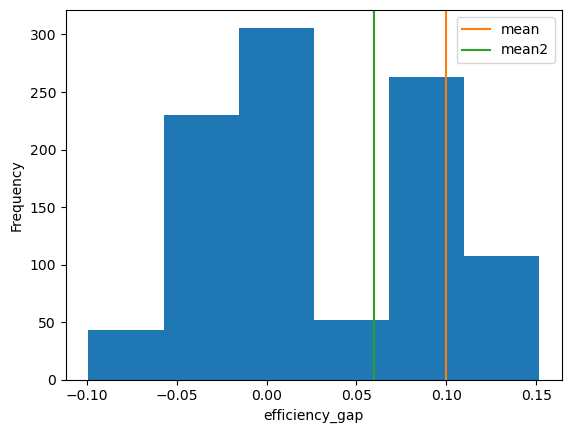

efficiency_gap("efficiency_gap", election, "SEN10D"),

seats_won("seats_won", election, "SEN10D"),

mean_median("mean_median", election, "SEN10D")

]

scores = [

DistrictAggregate("presd", "PRES12D"),

ElectionTracker(election, election_metrics)

]

# Run the chain

println("Running 10-step ReCom chain...")

chain_data = recom_chain(graph, partition, pop_constraint, num_steps, scores)

# Get values of all scores at the 10th state of the chain

score_dict = get_scores_at_step(chain_data, 10)

# Get all vote counts for each state of the chain

vote_counts_arr = get_score_values(chain_data, "count_d")

running a chain in 34 lines of code!

where is our progress now?

- Most of core GerryChain is re-implemented in Julia! 🎊

- Some new features! ✨

- Package uploaded to Julia registry! 📦

using Pkg; Pkg.add("GerryChain")

some differences w/ Python GerryChain...

- "updaters" are now "scores"

  - Tallies ➡ DistrictAggregate scores

  - introduce district-level vs. plan-level scores

  - saves time + memory

- acceptance functions must return a float in [0, 1] representing the probability of acceptance, rather than True or False

  - forces the user to think about what acceptance functions are for, reducing confusion w/ constraints!

- ReCom is first class in GerryChain.jl

  - Python GerryChain is very much built on the notion of "flips"
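To make the acceptance-function point concrete, here is a minimal sketch of the idea in plain Julia. It does not use the GerryChain.jl API: the state is a stand-in named tuple, and `cut_edge_acceptance`, `accepts`, `accept_all`, `reject_all`, and the `num_cut_edges` field are all hypothetical names made up for illustration. The function returns a probability, and a separate step turns that probability into an accept/reject decision.

```julia
using Random

# Hypothetical acceptance function: maps a state to a probability in [0, 1].
# The "state" here is just a named tuple with a made-up `num_cut_edges` field.
function cut_edge_acceptance(state; target::Int = 100)
    # Always accept states at or below the target; above it, the
    # probability shrinks as the number of cut edges grows.
    state.num_cut_edges <= target && return 1.0
    return target / state.num_cut_edges
end

# Turn the probability into an accept/reject decision by comparing it
# against a uniform draw from [0, 1).
function accepts(acceptance_fn, state; rng::AbstractRNG = Random.default_rng())
    p = acceptance_fn(state)
    0.0 <= p <= 1.0 || throw(ArgumentError("acceptance probability must lie in [0, 1], got $p"))
    return rand(rng) < p
end

accept_all(state) = 1.0   # every proposal is accepted
reject_all(state) = 0.0   # every proposal is rejected
```

A hard yes/no rule is then just the special case that only ever returns 0.0 or 1.0, which is arguably better expressed as a constraint.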

new features! ✨

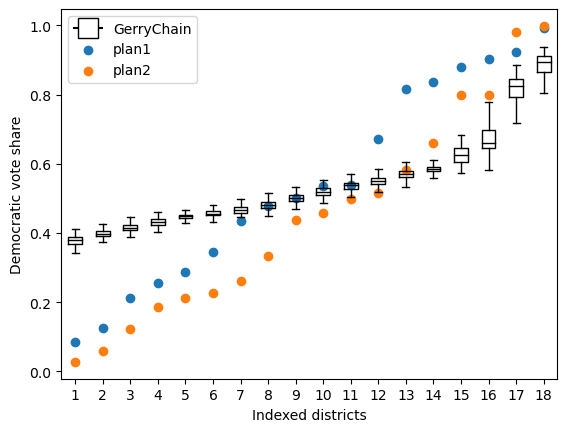

- built-in plotting 📊

- saving score values to CSV/json files 💾

- and of course, speed ⚡

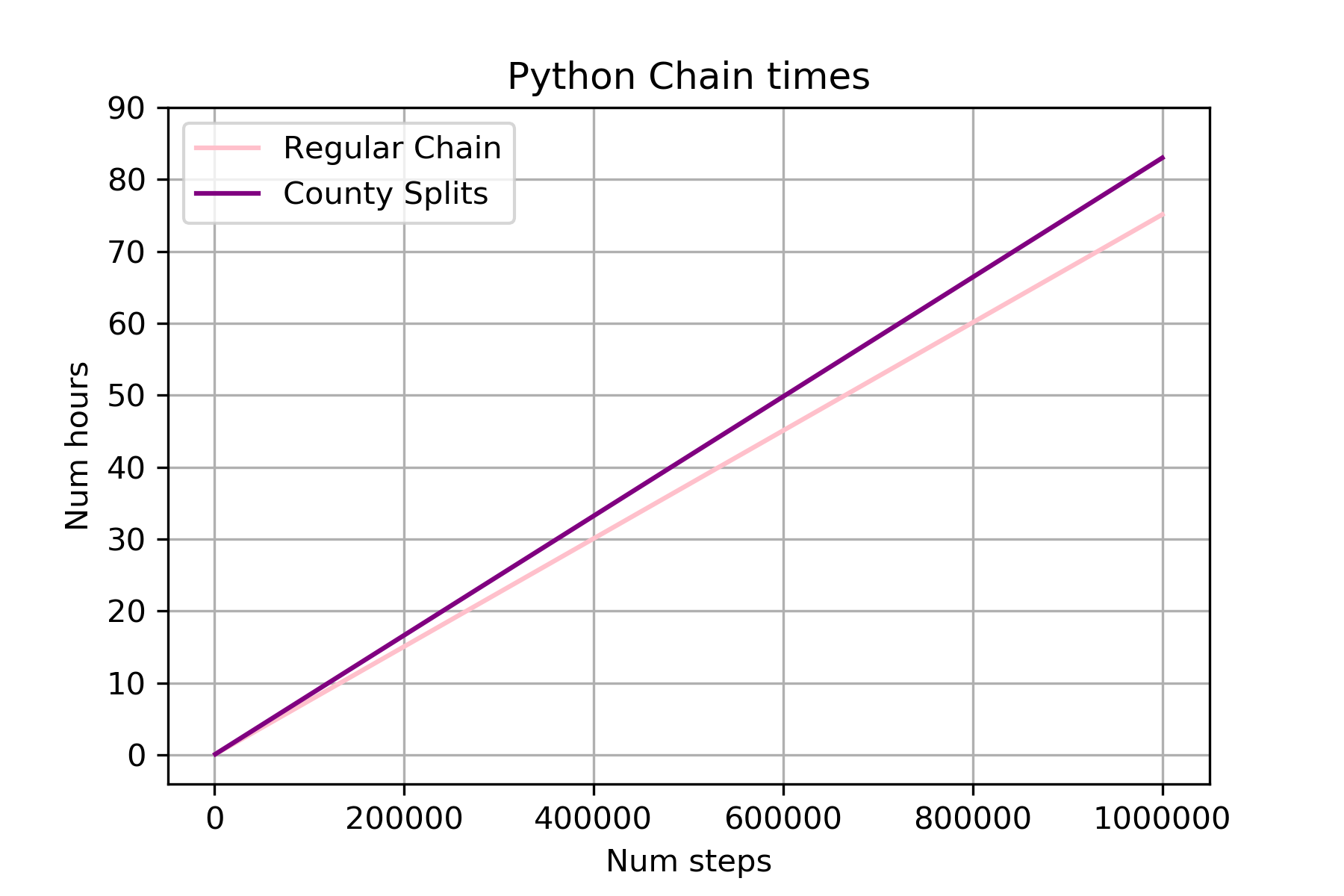

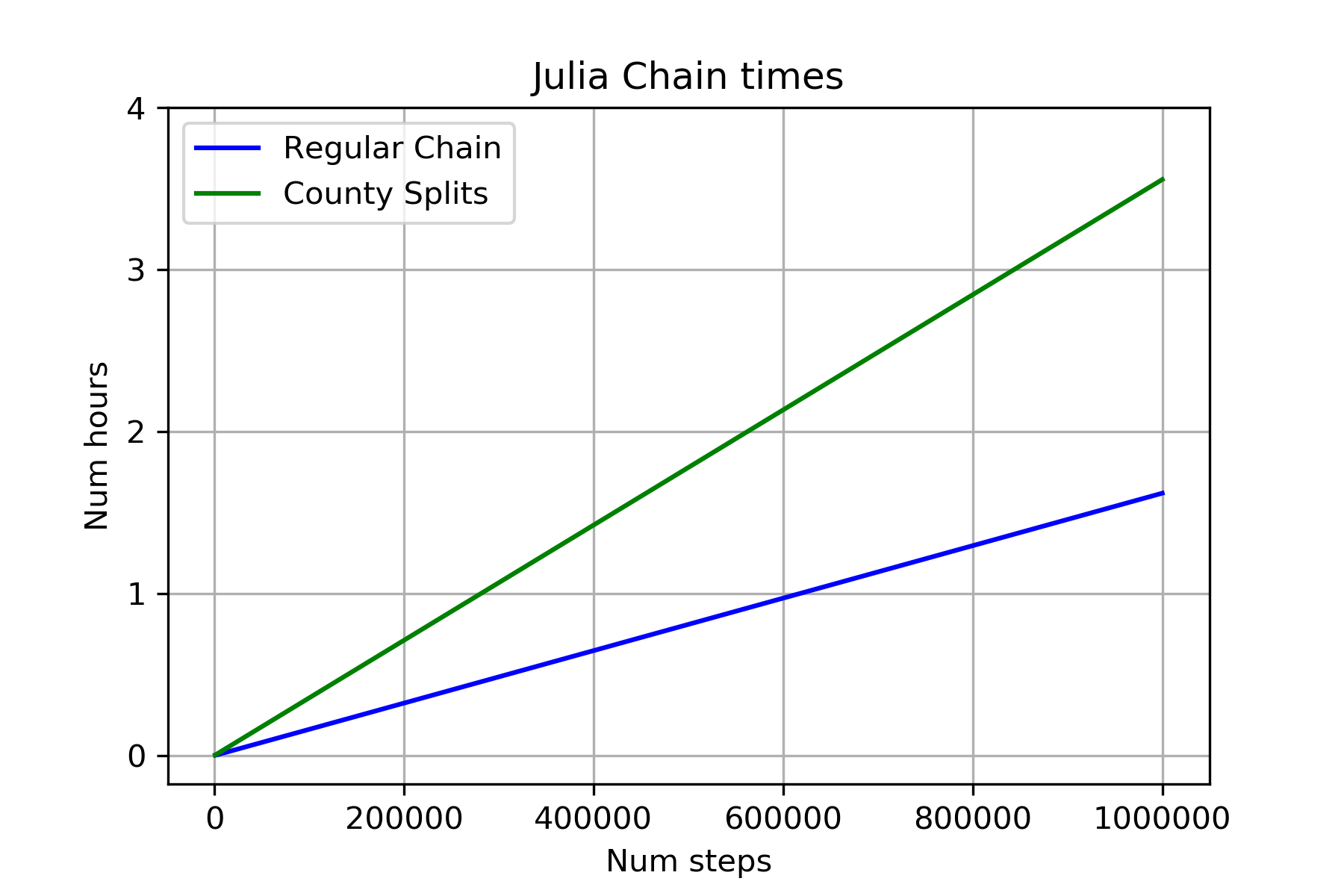

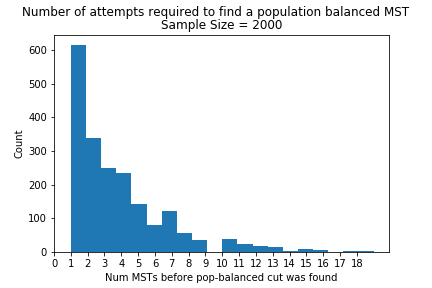

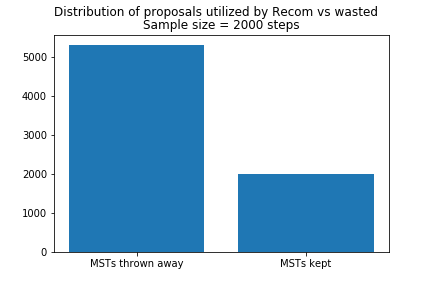

The speed of GerryChain depends a lot on how it is configured:

- Population constraint: tighter bounds ➡ longer times

- Acceptance probability: lower probability ➡ longer times

- Number of updaters: more calculation ➡ longer times

- Number of steps: more steps ➡ longer times
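One way to see why a lower acceptance probability costs time is the rough sketch below. It assumes that a rejected proposal is simply retried until one is accepted; that is an assumption for illustration, not something these slides confirm about either implementation. Under that assumption, each step needs about 1/p proposals on average. `proposals_needed` is a hypothetical helper and does not call GerryChain.

```julia
using Random

# Count how many proposals are drawn before `num_steps` of them are accepted,
# when each proposal is accepted independently with probability `p`.
# Assumption: rejected proposals are retried rather than counted as steps.
function proposals_needed(num_steps::Int, p::Float64; rng::AbstractRNG = Random.default_rng())
    0.0 < p <= 1.0 || throw(ArgumentError("p must lie in (0, 1]"))
    accepted = 0
    proposals = 0
    while accepted < num_steps
        proposals += 1
        rand(rng) < p && (accepted += 1)
    end
    return proposals
end

rng = MersenneTwister(2021)
for p in (1.0, 0.5, 0.1)
    n = proposals_needed(1_000, p; rng = rng)
    println("p = $p: $n proposals for 1000 accepted steps (about $(round(Int, 1_000 / p)) expected)")
end
```

With p = 1.0 the count equals the number of steps exactly; for smaller p it grows roughly in proportion to 1/p.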

Python Chain Times

Julia Chain Times

Julia and Python Chains, together

So currently we have a ~47x speedup in Julia over Python (on simple runs)

Up next... Parallelization


thank you!

leave us a 🌟 on GitHub? 🥺👉👈