Design Patterns for Async & Parallel Systems

swarnim arun

About me

- Currently Senior Developer @ Cedana.ai

- Indie Game Developer >My studio<

- Love working with Rust, Zig, Gleam and other niche programming languages

- Background in Compilers, Machine Learning, Distributed Infra & Cloud

- Also love to dabble in Functional Programming.
  > Mostly Haskell & F#

Concurrency

Concurrency is when different parts of a system execute independently.

- Concurrency is an essential tool for maximizing performance in modern systems, as most sequential programs can't utilize all the system resources on their own.

- Making programs concurrent lets the system schedule their execution to maximize resource utilization.

Understanding Concurrency

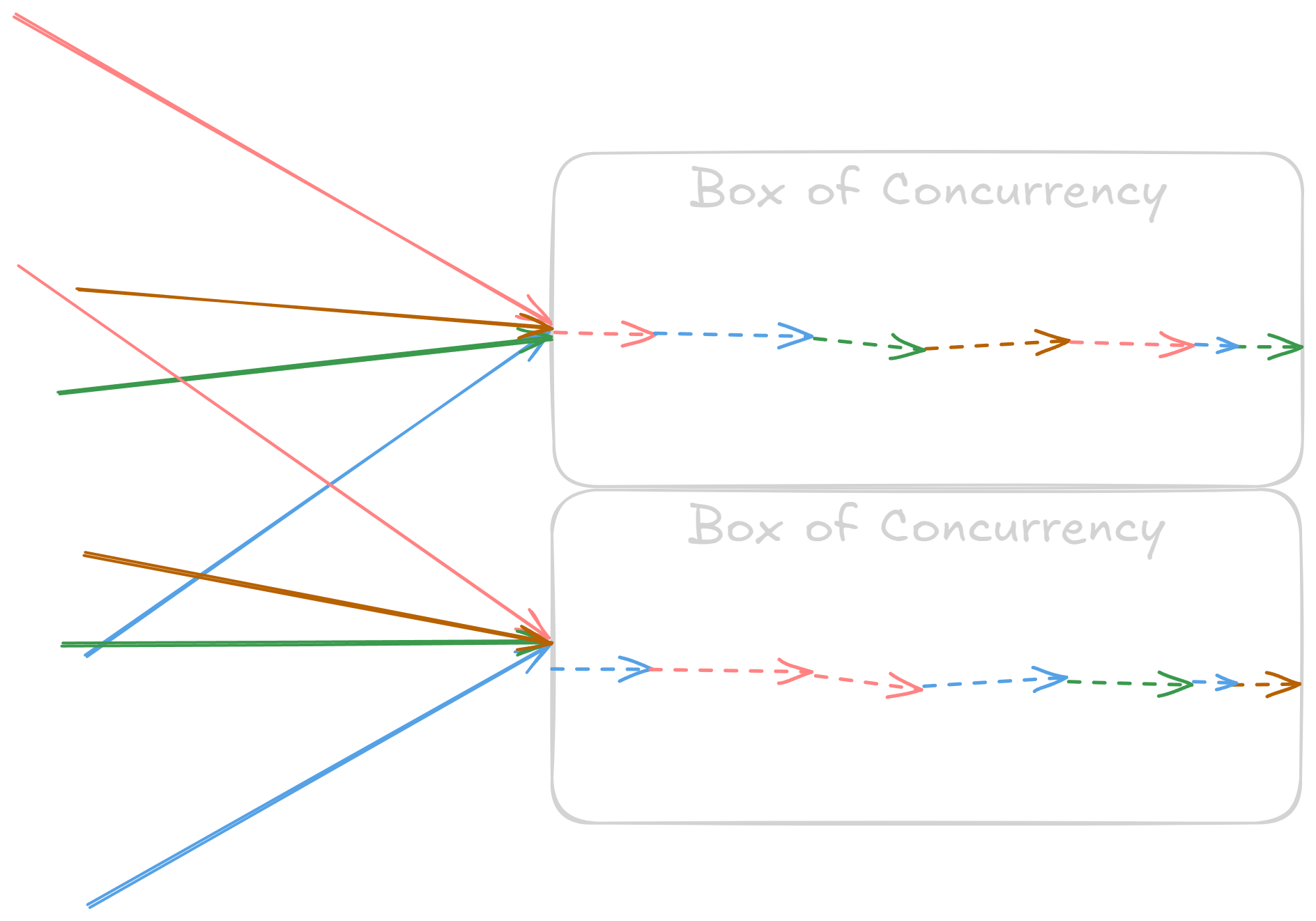

Imagine concurrent execution as an opaque box.

Once things go inside the concurrency box, we don't know in which order they will be executed.

Concurrency Models

Concurrency can be implemented using several models, and languages often prefer one over the others in their default concurrency infrastructure.

I like to put them in 3 broad categories:

- Pre-emptive Scheduling

- Cooperative Scheduling

- Hybrid Scheduling (Partially Pre-emptive)

Concurrency Patterns(*)

Once you have a concurrency model, you have a few patterns for structuring your concurrent program. These may or may not be language specific.

- Communicating Sequential Processes

- Tuple Spaces

- Actor Model

- Async/Await

*non-exhaustive
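To make the first pattern a bit more concrete, here is a minimal sketch of CSP-style message passing using only the standard library's `std::sync::mpsc` channels. The function name `sum_over_channel` and the worker layout are made up for illustration; the point is only that the threads share nothing except the channel.

```rust
use std::sync::mpsc;
use std::thread;

/// Spawns `workers` producer threads that each send `per_worker` numbers
/// over a channel, then sums everything on the receiving side.
/// (Hypothetical helper, not from the talk.)
fn sum_over_channel(workers: u64, per_worker: u64) -> u64 {
    let (tx, rx) = mpsc::channel::<u64>();

    for id in 0..workers {
        // Each worker gets its own clone of the sending half.
        let tx = tx.clone();
        thread::spawn(move || {
            for n in 0..per_worker {
                // Messages are the only thing the threads share.
                tx.send(id * per_worker + n).expect("receiver hung up");
            }
        });
    }

    // Drop the original sender so the receiver's iterator ends
    // once every worker has finished and dropped its clone.
    drop(tx);

    rx.iter().sum()
}
```

Calling `sum_over_channel(4, 10)` sends the numbers 0 through 39 in an unpredictable order, yet the sum is always 780, which is the "opaque box" idea from earlier: the order is unknown, the result is not.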

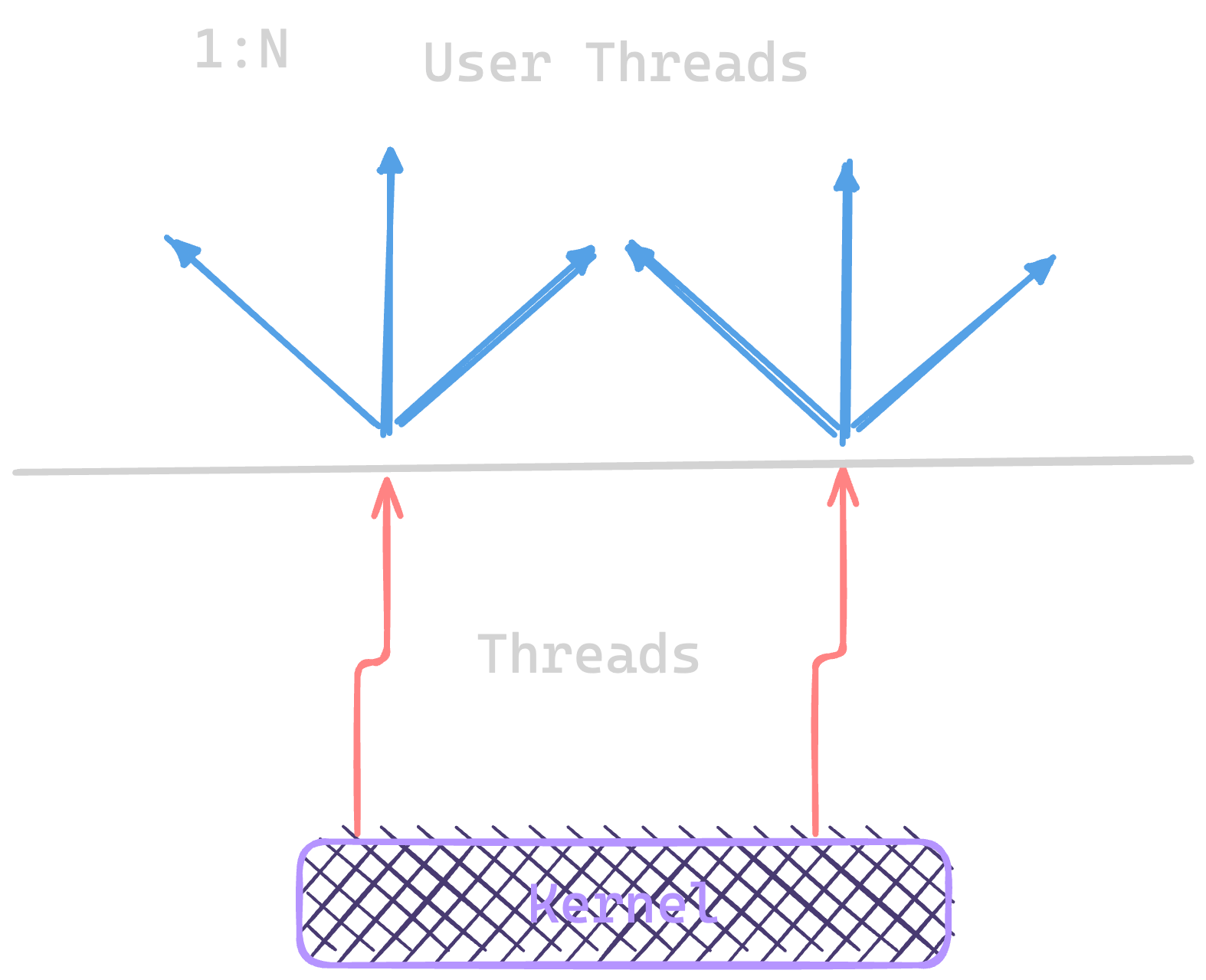

System vs User Threads

User threads, often referred to as "green threads", are generally less resource-intensive, as they are scheduled entirely in user space.

They are fairly popular in highly concurrent systems as a means of maximizing concurrency by avoiding the kernel as a bottleneck.

Quick Note: About Threads

Native threads (managed by the operating system) can be considered pre-emptible.

While the scheduling of user threads depends on the implementation, they are also indirectly pre-emptible by the OS, since they run on top of native threads.

Concurrency in Go

Go implements preemptive scheduling with its own user threads and threading model, "goroutines". Note: it used to be cooperative.

Essentially, it provides a simple `chan` abstraction for communication between goroutines, and all the required synchronization primitives (Once, Mutex, etc.).

package main

import (

"fmt"

"time"

)

func main() {

// consider using sync.WaitGroup instead

waitexit := make(chan int)

go func() {

defer func(){ waitexit<-0 }()

for i := range 10 {

fmt.Printf("goroutine one: %d\n", i)

time.Sleep(1 * time.Second)

}

}()

go func() {

defer func(){ waitexit<-0 }()

for {

fmt.Println("goroutine two")

time.Sleep(2 * time.Second)

}

}()

_ = <-waitexit

}

Concurrency in Elixir/Gleam

BEAM implements what I like to call hybrid scheduling: the runtime performs reduction counting and pre-empts a process at yield points once its budget is spent, without direct user control.

Note: the VM itself requires yield points.

*(for brevity: consider a reduction equal to a function call)

import gleam/erlang/process

import gleam/int

import gleam/io

import gleam/string

const sleep_time = 100

fn one(x: Int) {

case x {

10 -> Nil

_ -> {

string.append("process one: ", x |> int.to_string)

|> io.println

process.sleep(sleep_time)

one(x + 1)

}

}

}

fn two() {

"process two" |> io.println

process.sleep(sleep_time * 2)

two()

}

type Msg {

ProcessExited(process.ProcessDown)

}

pub fn main() {

// `two` takes no arguments, so it can be started directly

let pm1 = process.monitor_process(process.start(two, True))

let pm2 = process.monitor_process(

process.start(fn() {

one(0)

},

True)

)

let s: process.Selector(Msg) = process.new_selector()

let s = process.selecting_process_down(s, pm1, ProcessExited)

let s = process.selecting_process_down(s, pm2, ProcessExited)

case process.select_forever(s) {

ProcessExited(process.ProcessDown(_, _)) -> Nil

}

}

Concurrency in Node/Deno

JavaScript is one of the languages with a cooperative concurrency model, using Promises or async tasks.

It is especially dependent on async for reactive applications because of its limited multi-threading support.

async function main() {

const time = 100;

const one = async () => {

for (let i = 0; i < 10; i++) {

console.log("promise one: ", i);

await new Promise((r) => setTimeout(r, time));

}

};

const two = async () => {

for (;true; ) {

console.log("promise two");

await new Promise((r) => setTimeout(r, time * 2));

}

};

return await Promise.race([one(), two()]);

}

// NOTE: even though race succeeds

// Deno won't exit until all

// tasks have exited

await main();

// so we exit manually

Deno.exit(0);

// Otherwise consider using AbortController

// and registering abort event on AbortSignal

// to cancel async tasks manually after race finishes

Fearless Concurrency (in Rust)

Rust brings a more low-level, systems-programming approach to concurrency: it avoids modelling the actual scheduling.

Instead, it provides semantics baked into the language that define concurrency safety guarantees in the type system.

Rust does this by combining the ownership model with its Send and Sync traits.
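As a rough sketch of what those guarantees buy you in practice: `std::thread::spawn` only accepts closures whose captures are `Send`, so the compiler decides what may cross a thread boundary. The helper name `count_in_parallel` is invented for this example.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Increments a shared counter from several threads.
/// (Hypothetical helper for illustration.)
fn count_in_parallel(threads: usize, per_thread: usize) -> usize {
    // Arc<Mutex<usize>> is Send + Sync, so clones may move to other threads.
    // Swapping Arc for Rc here is rejected at compile time: Rc is not Send.
    let counter = Arc::new(Mutex::new(0usize));

    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            // thread::spawn requires everything the closure captures to be Send.
            thread::spawn(move || {
                for _ in 0..per_thread {
                    *counter.lock().unwrap() += 1;
                }
            })
        })
        .collect();

    for handle in handles {
        handle.join().unwrap();
    }

    let total = *counter.lock().unwrap();
    total
}
```

`count_in_parallel(4, 1000)` returns 4000 every time, and the interesting part is what does not compile rather than what does.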

Is it Send?

i32

Yep

fn send<T: Send>(t: T) {}

fn main() {

send(0i32); // works

}

&i32

Definitely

fn send<T: Send>(t: T) {}

fn main() {

let x = 0i32;

send(&x); // works

}

&mut i32

Yus

fn send<T: Send>(t: T) {}

fn main() {

let mut x = 0i32;

send(&mut x); // works

}

Is it Send?

Rc<i32>

Nope

use std::rc::Rc;

fn send<T: Send>(t: T) {}

fn main() {

let x = Rc::new(0i32);

send(x.clone()); // error

}

MutexGuard<'_, i32>

Nope (note: Mutex<i32> is Send)

use std::sync::Mutex;

fn send<T: Send>(t: T) {}

fn main() {

let x = Mutex::new(0i32);

send(x.lock().unwrap()); // error: MutexGuard is not Send

}

Is it Sync?

i32

Definitely

fn sync<T: Sync>(t: T) {}

fn main() {

sync(0i32); // works

}

&i32

Yeah

fn sync<T: Sync>(t: T) {}

fn main() {

let x = 0i32;

sync(&x); // works

}

fn(&i32) -> &i32

Yus

fn sync<T: Sync>(t: T) {}

fn f(x: &i32) -> &i32 { x }

fn main() {

sync(f); // works

}

&mut i32

Yes, surprisingly! (`&mut T` is Sync whenever `T` is Sync)

fn sync<T: Sync>(t: T) {}

fn main() {

let mut x = 0i32;

sync(&mut x); // works: a shared `&&mut i32` only allows reads

}

(async { Some(()) }).await ?

Rust uses stackless coroutines: a coroutine has no stack of its own and runs on the stack of whichever operating system thread polls it.

This means the coroutine's stack frames do not survive a context switch; whatever it was using on the stack is overwritten once it yields.

Only the state of the top-level frame is stored across a suspension point (inside the Future itself). Essentially, the saved "stack" has a fixed depth of "one".
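To illustrate the "fixed depth of one" idea, here is a hand-written sketch of roughly what an `async` block with a single suspension point turns into: an enum holding whatever must survive the suspension. The names `AddLater`, `NoopWake` and `drive` are invented for this example, and the real compiler output differs in detail.

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// State machine with one suspension point. Everything that must
/// survive the suspension lives in this enum, not on a stack.
enum AddLater {
    Start { a: u32, b: u32 },
    Suspended { sum: u32 },
    Done,
}

impl Future for AddLater {
    type Output = u32;

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<u32> {
        // AddLater is Unpin, so we can get a plain mutable reference.
        let this = self.get_mut();
        match *this {
            AddLater::Start { a, b } => {
                // Save the intermediate result, then yield to the caller.
                *this = AddLater::Suspended { sum: a + b };
                cx.waker().wake_by_ref();
                Poll::Pending
            }
            AddLater::Suspended { sum } => {
                *this = AddLater::Done;
                Poll::Ready(sum)
            }
            AddLater::Done => panic!("polled after completion"),
        }
    }
}

/// A waker that does nothing; enough for a busy-polling toy executor.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// Polls a future to completion and reports how many polls it took.
fn drive<F: Future + Unpin>(mut fut: F) -> (F::Output, usize) {
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    let mut polls = 0;
    loop {
        polls += 1;
        if let Poll::Ready(out) = Pin::new(&mut fut).poll(&mut cx) {
            return (out, polls);
        }
    }
}
```

`drive(AddLater::Start { a: 2, b: 3 })` returns `(5, 2)`: one poll to reach the suspension point, one more to finish. Between the two polls the only thing kept alive is the `Suspended { sum }` variant.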

// note: imaginary code with stackful coroutines

// also assume it's named `imagine`

fn main() {

// we can directly execute functions

// as coroutines

spawn(|| {

imagine::sleep(Duration::from_secs(10));

});

}

// consider `may` crate for a somewhat

// practical implementation of stackful coroutines

use std::time::Duration;

#[tokio::main]

async fn main() {

// being stackless means we cannot

// run plain fns as coroutines

tokio::time::sleep(Duration::from_secs(10)).await

}

Problems with async Rust

Despite Rust's amazing type system and its expressiveness, there are still a lot of pain points that need more work.

This is especially true for ease of use and for making it hard to make mistakes (i.e. removing the unexpected and less understood behavior).

Coupling of Futures & Executors

Futures in Rust that perform I/O or use timers are tightly coupled with the executor that provides them. Hence, each executor ships its own flavor of them.

This often leads to mistakes where beginners use Futures from one executor inside a different one, producing errors that are sometimes hard to debug.

use tokio::{ io::{self, AsyncWriteExt}, net};

// there is no reactor running, must be called from the context of a Tokio 1.x runtime

fn main() -> io::Result<()> {

smol::block_on(async {

let mut stream = net::TcpStream::connect("example.com:80").await?;

let req = b"GET / HTTP/1.1\r\nHost: example.com\r\nConnection: close\r\n\r\n";

stream.write_all(req).await?;

let mut stdout = tokio::io::stdout();

io::copy(&mut stream, &mut stdout).await?;

Ok(())

})

} // DOES NOT WORK!!!! ._.

use smol::{io, net, prelude::*, Unblock};

// WORKS! \o/

fn main() -> io::Result<()> {

let rt = tokio::runtime::Runtime::new().unwrap();

rt.block_on(async {

let mut stream = net::TcpStream::connect("example.com:80").await?;

let req = b"GET / HTTP/1.1\r\nHost: example.com\r\nConnection: close\r\n\r\n";

stream.write_all(req).await?;

let mut stdout = Unblock::new(std::io::stdout());

io::copy(stream, &mut stdout).await?;

Ok(())

})

}

Solution?

Just use Tokio!

Cancellation & Timeout

Futures in Rust can already technically be cancelled, but how cancellation and timeouts actually work is not always obvious.

Since a Future makes progress only when it is polled, we can cancel it by simply dropping it, which ensures it can never be polled again.
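A small sketch of what "cancel by dropping" looks like without any runtime: poll a future once by hand, drop it while it is suspended, and observe that the code after the `.await` never runs while destructors still do. `YieldOnce`, `Guard`, `NoopWake` and `poll_once_then_drop` are hypothetical names used only here.

```rust
use std::cell::Cell;
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// A waker that does nothing; we poll by hand.
struct NoopWake;
impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// Pending on the first poll, ready on the second.
struct YieldOnce(bool);
impl Future for YieldOnce {
    type Output = ();
    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.0 {
            return Poll::Ready(());
        }
        self.0 = true;
        cx.waker().wake_by_ref();
        Poll::Pending
    }
}

/// Sets a flag when dropped, so cleanup on cancellation is observable.
struct Guard(Rc<Cell<bool>>);
impl Drop for Guard {
    fn drop(&mut self) {
        self.0.set(true);
    }
}

/// Returns (last step reached, whether the guard's cleanup ran).
fn poll_once_then_drop() -> (u32, bool) {
    let steps = Rc::new(Cell::new(0u32));
    let cleaned = Rc::new(Cell::new(false));
    let (s, c) = (steps.clone(), cleaned.clone());

    let mut fut = Box::pin(async move {
        let _guard = Guard(c);
        s.set(1); // runs during the first poll
        YieldOnce(false).await; // suspension point
        s.set(2); // never runs if the future is dropped while suspended
    });

    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    assert!(fut.as_mut().poll(&mut cx).is_pending());

    drop(fut); // cancellation: the future can never be polled again
    (steps.get(), cleaned.get())
}
```

`poll_once_then_drop()` returns `(1, true)`: step 2 is skipped, but `Guard` is still dropped. This is why any invariant that is temporarily broken across an `.await` is a cancellation hazard.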

async fn advance(&self) {

let mut system_state = self.state.lock().await;

// Take the inner state enum out, but always replace it!

let current_state = system_state.take().expect("invariant violated: 'state' was None!");

*system_state = Some(current_state.advance().await);

}

use std::time::Duration;
use tokio::sync::oneshot;
use tokio::time::timeout;

async fn wrap(rx: oneshot::Receiver<()>) {
    // Wrap the future with a `Timeout` set to expire in 10 milliseconds.
    if let Err(_) = timeout(Duration::from_millis(10), rx).await {
        println!("did not receive value within 10 ms");
    }
}

fn main() {
    let (_tx, rx) = oneshot::channel::<()>();
    // futures are lazy: nothing has run yet
    let x = wrap(rx);
    // cancel your future!
    drop(x);
}

futures-concurrency

use futures_concurrency::prelude::*;

#[tokio::main]

pub async fn main() {

let v: Vec<_> = vec!["chashu", "nori"]

// provides combinators for streams and collections of futures

.into_co_stream()

.map(|msg| async move { format!("hello {msg}") })

.collect()

.await;

assert_eq!(v, &["hello chashu", "hello nori"]);

}

draining the colors with `blocking`

use blocking::{unblock, Unblock};

use futures_lite::io;

use std::fs::File;

#[tokio::main]

pub async fn main() -> futures_lite::io::Result<()> {

let input = unblock(|| File::open("file.txt")).await?;

let input = Unblock::new(input);

let mut output = Unblock::new(std::io::stdout());

// async copy

io::copy(input, &mut output).await.map(|_| {})

}

shuttle

use shuttle::sync::{Arc, Mutex};

use shuttle::thread;

shuttle::check_random(|| {

let lock = Arc::new(Mutex::new(0u64));

let lock2 = lock.clone();

thread::spawn(move || {

*lock.lock().unwrap() = 1;

});

assert_eq!(0, *lock2.lock().unwrap());

}, 100);

sync_wrapper

/// A mutual exclusion primitive that relies on static type information only

///

/// In some cases synchronization can be proven statically:

/// whenever you hold an exclusive `&mut`

/// reference, the Rust type system ensures that no other

/// part of the program can hold another

/// reference to the data. Therefore it is safe to

/// access it even if the current thread obtained

/// this reference via a channel.

use sync_wrapper::SyncWrapper;

use std::future::Future;

struct MyThing {

future: SyncWrapper<Box<dyn Future<Output = String> + Send>>,

}

impl MyThing {

// all accesses to `self.future` now require an

// exclusive reference or ownership

}

fn assert_sync<T: Sync>() {}

assert_sync::<MyThing>();

`monoio`

/// An echo example.

///

/// Run the example and `nc 127.0.0.1 50002` in another shell.

/// All your input will be echoed out.

use monoio::io::{AsyncReadRent, AsyncWriteRentExt};

use monoio::net::{TcpListener, TcpStream};

#[monoio::main]

async fn main() {

let listener = TcpListener::bind("127.0.0.1:50002").unwrap();

println!("listening");

loop {

let incoming = listener.accept().await;

match incoming {

Ok((stream, addr)) => {

println!("accepted a connection from {}", addr);

monoio::spawn(echo(stream));

}

Err(e) => {

println!("accepted connection failed: {}", e);

return;

}

}

}

}

async fn echo(mut stream: TcpStream) -> std::io::Result<()> {

let mut buf: Vec<u8> = Vec::with_capacity(8 * 1024);

let mut res;

loop {

// read

(res, buf) = stream.read(buf).await;

if res? == 0 {

return Ok(());

}

// write all

(res, buf) = stream.write_all(buf).await;

res?;

// clear

buf.clear();

}

}

`may`

// required for old rust versions

#[macro_use]

extern crate may;

use may::net::TcpListener;

use std::io::{Read, Write};

fn main() {

let listener = TcpListener::bind("127.0.0.1:8000").unwrap();

while let Ok((mut stream, _)) = listener.accept() {

go!(move || {

let mut buf = vec![0; 1024 * 16]; // alloc in heap!

while let Ok(n) = stream.read(&mut buf) {

if n == 0 {

break;

}

stream.write_all(&buf[0..n]).unwrap();

}

});

}

}

`moro`

let value = 22;

let result = moro::async_scope!(|scope| {

let future1 = scope.spawn(async {

let future2 = scope.spawn(async {

value // access stack values that outlive scope

});

let v = future2.await * 2;

v

});

let v = future1.await * 2;

v

})

.await;

eprintln!("{result}"); // prints 88

Some more crates

- atomic-waker

- waker-fn

- deadpool

- want

- tagptr

** This is the list of crates I like to use. For brevity I won't discuss all of these.

Parallelism

Parallelism is the act of running different parts of a system simultaneously.

- Parallelism is the best way to utilize the massive amounts of compute we have, within the constraints of hardware scaling.

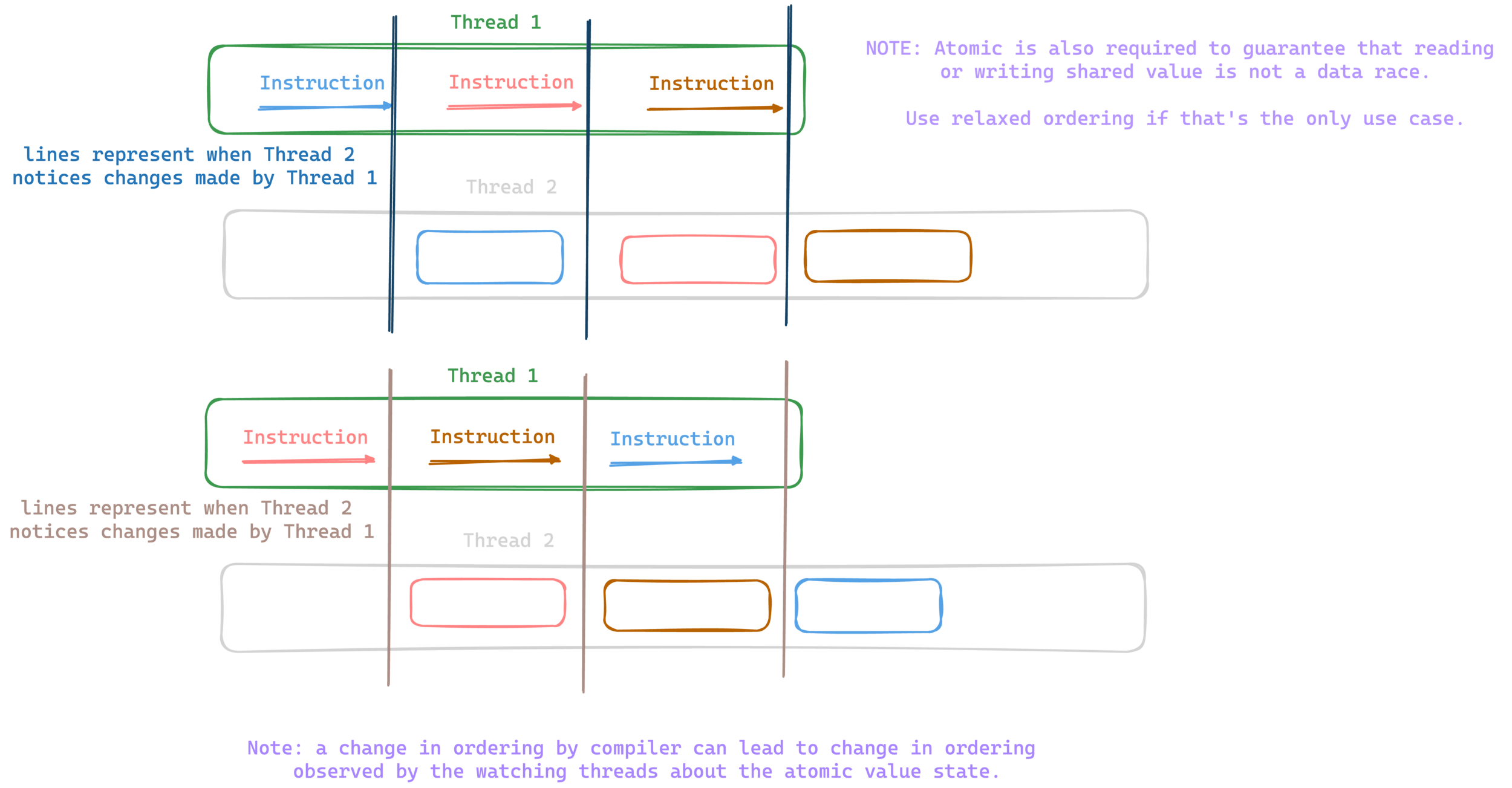
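As a minimal sketch of data parallelism with just the standard library, the slice below is split into chunks and each chunk is summed on its own scoped thread. `parallel_sum` is a made-up helper, and a crate like rayon would normally do the chunking for you.

```rust
use std::thread;

/// Sums `data` by splitting it into roughly `parts` chunks,
/// one scoped thread per chunk. (Hypothetical helper.)
fn parallel_sum(data: &[u64], parts: usize) -> u64 {
    let parts = parts.max(1);
    // Ceiling division, and never a chunk size of zero.
    let chunk = ((data.len() + parts - 1) / parts).max(1);

    thread::scope(|s| {
        // Collecting first makes sure every thread is spawned
        // before we start joining any of them.
        let handles: Vec<_> = data
            .chunks(chunk)
            .map(|c| s.spawn(move || c.iter().sum::<u64>()))
            .collect();

        handles
            .into_iter()
            .map(|h| h.join().unwrap())
            .sum::<u64>()
    })
}
```

Scoped threads can borrow `data` directly, so no `Arc` or copying is needed. Summing `1..=1000` with `parallel_sum(&data, 4)` gives 500500 regardless of how the chunks are scheduled.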

Atomics & Memory Ordering

Atomic accesses are how we tell the hardware and the compiler that our program cares about the ordering of operations.

Each atomic access can be marked with an ordering that specifies what kind of relationship it establishes with other accesses "on the same thread".

- Relaxed ordering: Ordering::Relaxed

- Release and acquire ordering: Ordering::{Release, Acquire, AcqRel}

- Sequentially consistent ordering: Ordering::SeqCst

SeqCst: a sequentially consistent marking means nothing before or after this operation can be reordered across it.

Acquire: ensures that nothing after this operation can be reordered before it, but accesses before it may still move after it. Use with "Release".

Release: ensures that nothing before this operation can be reordered after it, but accesses after it may still move before it. Use with "Acquire".

Relaxed: no ordering guarantees; it only ensures the access itself is atomic (no data race).

An Acquire/Release pair is used to define a critical section in a program.
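A common way to see Release and Acquire working as a pair is "publishing" a value through a flag. This is only a sketch with invented names (`publish_and_read`), using standard library atomics.

```rust
use std::sync::atomic::{AtomicBool, AtomicU64, Ordering};
use std::sync::Arc;
use std::thread;

/// A producer writes `value`, then raises a flag with Release.
/// The consumer waits for the flag with Acquire, then reads the value.
fn publish_and_read(value: u64) -> u64 {
    let data = Arc::new(AtomicU64::new(0));
    let ready = Arc::new(AtomicBool::new(false));
    let (d, r) = (Arc::clone(&data), Arc::clone(&ready));

    let producer = thread::spawn(move || {
        // This write cannot be reordered after the Release store below.
        d.store(value, Ordering::Relaxed);
        r.store(true, Ordering::Release);
    });

    // Once the Acquire load observes `true`, everything written
    // before the matching Release store is visible here.
    while !ready.load(Ordering::Acquire) {
        std::hint::spin_loop();
    }
    let seen = data.load(Ordering::Relaxed);

    producer.join().unwrap();
    seen
}
```

With both flag accesses downgraded to `Relaxed`, the language would no longer guarantee that the consumer sees `value`, even if it happens to work on x86.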

Why does ordering matter ??

Locks

These are the most commonly used synchronization primitives.

Holding a lock means acquiring contractual access to the value behind the lock; other threads hoping to access the value must block until the lock is released.

Mutex: exclusive lock; only one thread can hold the lock at a time.

RwLock: exclusive write lock; no other lock can be acquired while a write lock is held, and all existing locks must be released before a write lock can be acquired.

Any number (N) of read locks can be held while no write lock is in use.
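A quick sketch of the RwLock rules above with `std::sync::RwLock`: several readers hold the lock at once, then a single writer takes it exclusively. The function name and the data are placeholders.

```rust
use std::sync::{Arc, RwLock};
use std::thread;

/// Four readers observe the list concurrently, then one writer appends to it.
/// Returns the lengths the readers saw and the final length.
fn readers_then_writer() -> (Vec<usize>, usize) {
    let config = Arc::new(RwLock::new(vec![1, 2, 3]));

    let readers: Vec<_> = (0..4)
        .map(|_| {
            let config = Arc::clone(&config);
            thread::spawn(move || {
                // Many read guards may exist at the same time.
                let len = config.read().unwrap().len();
                len
            })
        })
        .collect();

    let lens: Vec<usize> = readers.into_iter().map(|h| h.join().unwrap()).collect();

    // A write guard is exclusive: it waits until all read guards are gone.
    config.write().unwrap().push(4);

    let final_len = config.read().unwrap().len();
    (lens, final_len)
}
```

Every reader sees a length of 3 because the write only happens after all of them have been joined; the final length is 4.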

When Atomics & When Locks

For brevity:

When your code doesn't need to treat the operation as associative, or you only need to define an arguably simple critical section of operations which are together associative, atomics are the ideal solution.

Otherwise, consider whether atomics might be too hard to use correctly, or in fact likely to never produce the desired behavior, and reach for a lock instead.
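One way to read the rule of thumb above, as a sketch with hypothetical names: a lone counter is a single independent operation and fits an atomic, while two fields that must change together form a critical section that is far simpler to get right with a lock.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Mutex;
use std::thread;

/// Two fields that must always be updated together.
struct Stats {
    count: u64,
    total: u64,
}

/// A lone counter: one atomic read-modify-write per hit is enough.
fn atomic_hits(threads: usize, per_thread: usize) -> usize {
    let hits = AtomicUsize::new(0);
    thread::scope(|s| {
        for _ in 0..threads {
            s.spawn(|| {
                for _ in 0..per_thread {
                    hits.fetch_add(1, Ordering::Relaxed);
                }
            });
        }
    });
    hits.load(Ordering::Relaxed)
}

/// A multi-field invariant: the Mutex keeps `count` and `total` in step.
fn locked_stats(samples: &[u64]) -> (u64, u64) {
    let stats = Mutex::new(Stats { count: 0, total: 0 });
    thread::scope(|s| {
        for &x in samples {
            let stats = &stats;
            s.spawn(move || {
                let mut guard = stats.lock().unwrap();
                guard.count += 1;
                guard.total += x;
            });
        }
    });
    let stats = stats.into_inner().unwrap();
    (stats.count, stats.total)
}
```

`atomic_hits(4, 1000)` gives 4000 and `locked_stats(&[1, 2, 3, 4])` gives `(4, 10)`. Spawning one thread per sample is only for illustration.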

Generic Atomic<T> with `atomic`

// Note: this works with any T: NoUninit (from bytemuck)
use atomic::Atomic;
use std::mem;

pub fn main() {
    let a = Atomic::new(0i64);
    // NOTE: lock-free on most modern platforms (e.g. x86)
    assert_eq!(
        Atomic::<i64>::is_lock_free(),
        cfg!(target_has_atomic = "64") && mem::align_of::<i64>() == 8
    );
}

shred'ng data without locks

use shred::{DispatcherBuilder, Read, Resource, ResourceId, System, SystemData, World, Write};

#[derive(Debug, Default)]

struct ResA;

#[derive(Debug, Default)]

struct ResB;

#[derive(SystemData)] // Provided with `shred-derive` feature

struct Data<'a> {

a: Read<'a, ResA>,

b: Write<'a, ResB>,

}

struct EmptySystem;

impl<'a> System<'a> for EmptySystem {

type SystemData = Data<'a>;

fn run(&mut self, bundle: Data<'a>) {

// bundle reader & writer

println!("{:?}", &*bundle.a);

println!("{:?}", &*bundle.b);

}

}

fn main() {

let mut world = World::empty();

let mut dispatcher = DispatcherBuilder::new()

.with(EmptySystem, "empty", &[])

.build();

world.insert(ResA);

world.insert(ResB);

dispatcher.dispatch(&mut world);

}

Some other parallelism crates

- crossbeam

- flume

- rayon

Optimizing for large scale compute efficiency!

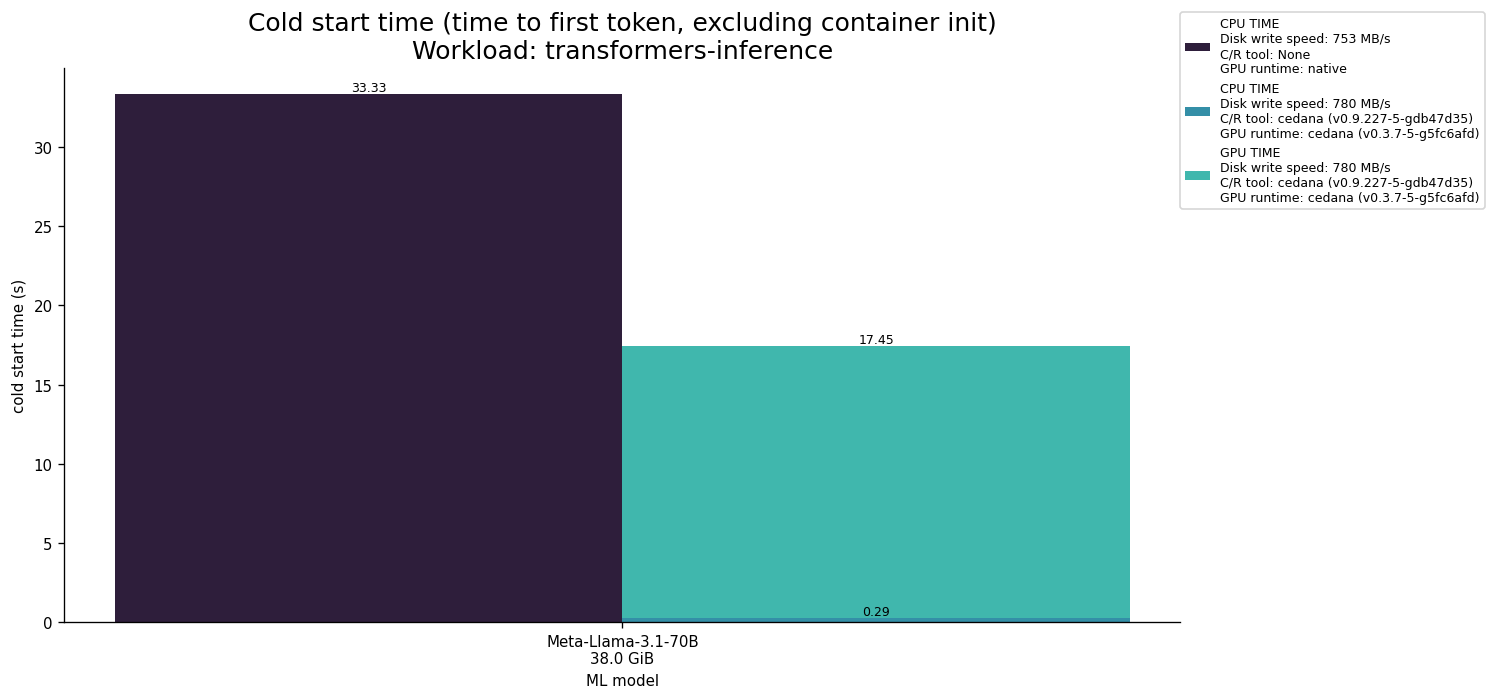

What do we do at Cedana?

We build two key things.

- Pre-emption & live migration of containers with snapshotting using CRIU

This includes custom solutions for GPU-based snapshots (only Nvidia is supported atm) using our custom wrappers.

Thanks

Reach out to me at,

Twitter: @swarnimarun

Github: @swarnimarun

Discord: @swarnimarun