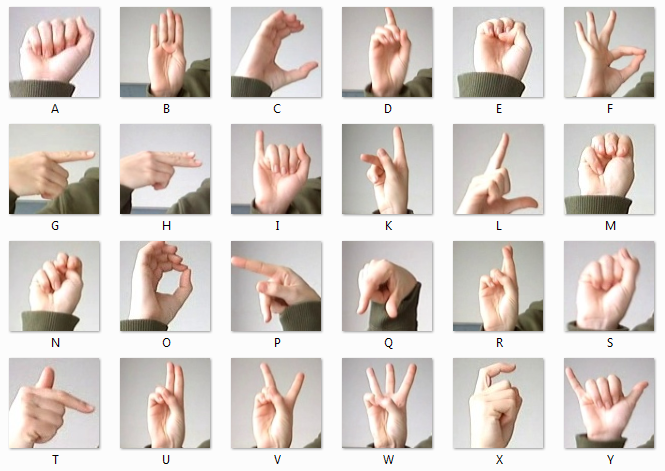

American Sign Language Translator

Problem Statement

- People with speech difficulties or hearing disabilities have a hard time expressing their thoughts and communicating with others.

- As a result, their social, emotional and linguistic growth is hampered, and they may develop symptoms of anxiety and depression.

Solution

- Integrate a computer-vision-based hand gesture recognition system to establish two-way communication.

- Provide information access and services to deaf and speech-impaired people in American Sign Language (ASL).

- The project is scalable and has been extended to capture the whole ASL vocabulary through manual and non-manual signs.

Methodology

- Hand detection using YOLO, a CNN and OpenCV.

- FFmpeg was used to extract the detected hand from the video, and a deep CNN then mapped each recognized hand gesture to the nearest letter of the American Sign Language (ASL) alphabet.

- The predicted text was converted to speech to make the output easier to interpret.
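The pipeline above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the project's actual code: the frame rate, the 26-class A-Z output order, the helper names (`ffmpeg_frame_command`, `nearest_letter`, `frames_to_text`, `fake_scores`) and the smoothing thresholds are all hypothetical, and the YOLO detector, the CNN itself and the text-to-speech step are left out. It shows how frames could be sampled with FFmpeg, how a CNN's raw scores for one frame map to the nearest letter via softmax and argmax, and how per-frame letters could be collapsed into a word.

```python
import math
import string

# Assumption: the CNN outputs one raw score per letter, in A-Z order.
LETTERS = list(string.ascii_uppercase)


def ffmpeg_frame_command(video_path, out_dir, fps=10):
    """Build an FFmpeg command that samples `fps` frames per second from a video.

    Uses standard FFmpeg options only: -i (input), -vf fps=N (frame-rate filter)
    and a numbered image output pattern. The default fps is a hypothetical choice.
    """
    return ["ffmpeg", "-i", video_path, "-vf", f"fps={fps}", f"{out_dir}/frame_%04d.png"]


def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def nearest_letter(scores):
    """Map one frame's class scores to the most likely letter and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)  # argmax
    return LETTERS[best], probs[best]


def frames_to_text(frame_scores, min_run=3, min_prob=0.6):
    """Collapse per-frame predictions into text.

    Hypothetical smoothing rule: a letter is emitted once it has been predicted
    with probability >= min_prob for `min_run` consecutive frames, and a sign
    that is held longer is still emitted only once.
    """
    text = []
    current, run = None, 0
    for scores in frame_scores:
        letter, prob = nearest_letter(scores)
        if prob < min_prob:
            current, run = None, 0  # a low-confidence frame breaks the run
            continue
        if letter == current:
            run += 1
        else:
            current, run = letter, 1
        if run == min_run:  # emit exactly once per held sign
            text.append(letter)
    return "".join(text)


def fake_scores(letter, strength=5.0):
    """Hypothetical stand-in for CNN output: a strong score for one letter."""
    return [strength if c == letter else 0.0 for c in LETTERS]


if __name__ == "__main__":
    print(ffmpeg_frame_command("sign.mp4", "frames"))
    blank = [0.0] * len(LETTERS)  # uniform scores: low confidence for every letter
    frames = ([fake_scores("H")] * 3 + [fake_scores("E")] * 3 + [fake_scores("L")] * 3
              + [blank] + [fake_scores("L")] * 3 + [fake_scores("O")] * 3)
    print(frames_to_text(frames))
```

With these thresholds a held sign yields a single letter, so a double letter such as the "LL" in "HELLO" needs a low-confidence transition frame between the two signs; the demo encodes that with a blank frame. The command list could be passed to `subprocess.run` on a machine with FFmpeg installed.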

Implementation

(All Letters of the Alphabet)


Implementation

(Test Word)

Web Implementation

(Using Node.js for the backend and Socket.IO for real-time communication)


Future Goals

- Real-time translation from English into other languages as well.

- Use facial detection to extract the user's expression from the video and use it to adjust the tone of the predicted speech.