Design Django models such that your future self will thank you 👋🏾

@Tarun_Garg2

Coming up 📚

- What is this talk about?

- Setting up the premise

- Key themes

- Audit your Models

- Timestamp your fields

- Soft deleting entries

- Use of choices

- Denormalisations

- ....

- Bring down the curtain

What is this talk about 🤷🏻

Setting up premise 🐒

Let's build Y.A.M.A. (Yet Another Messaging Application)

coz why not?

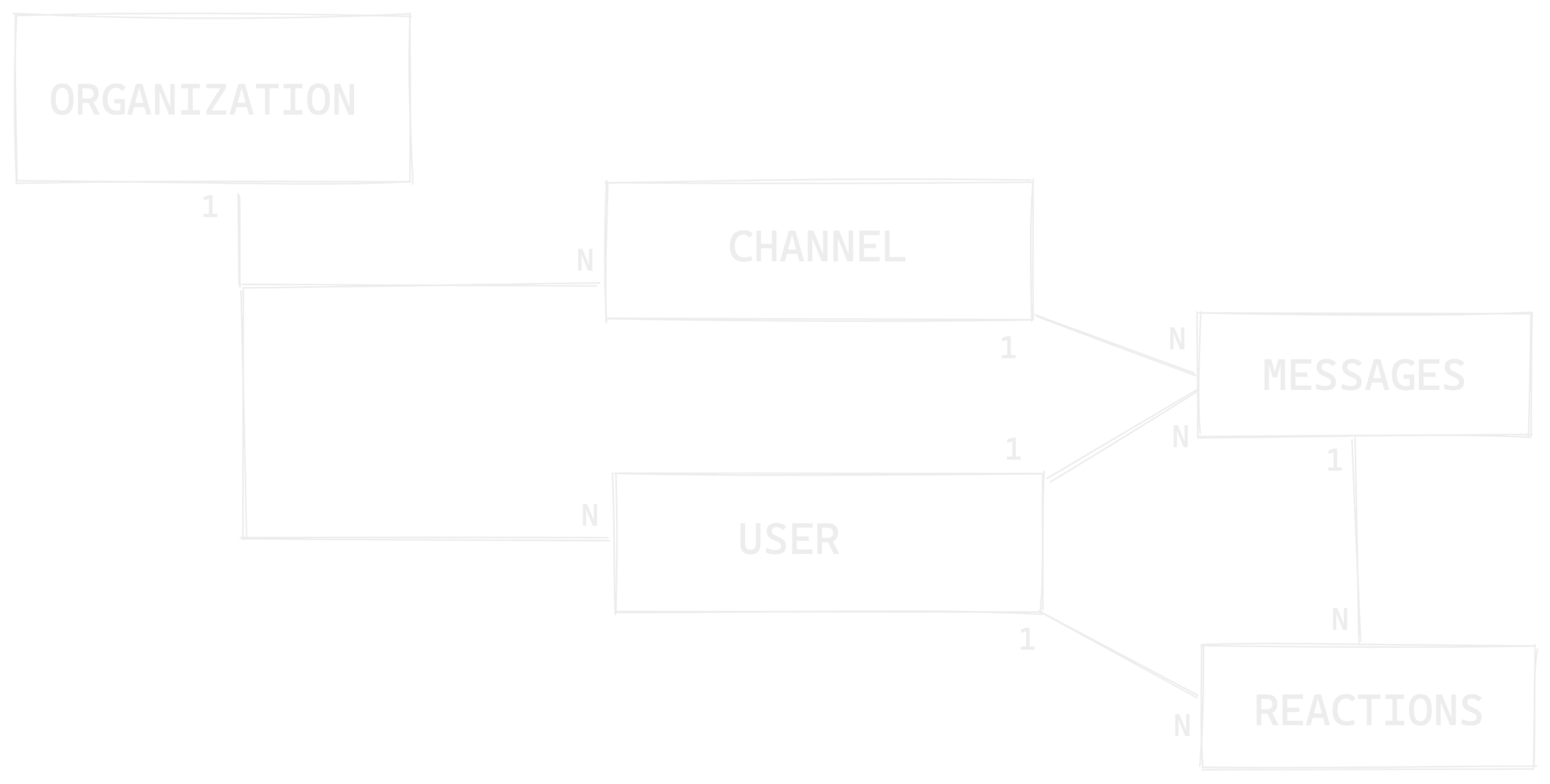

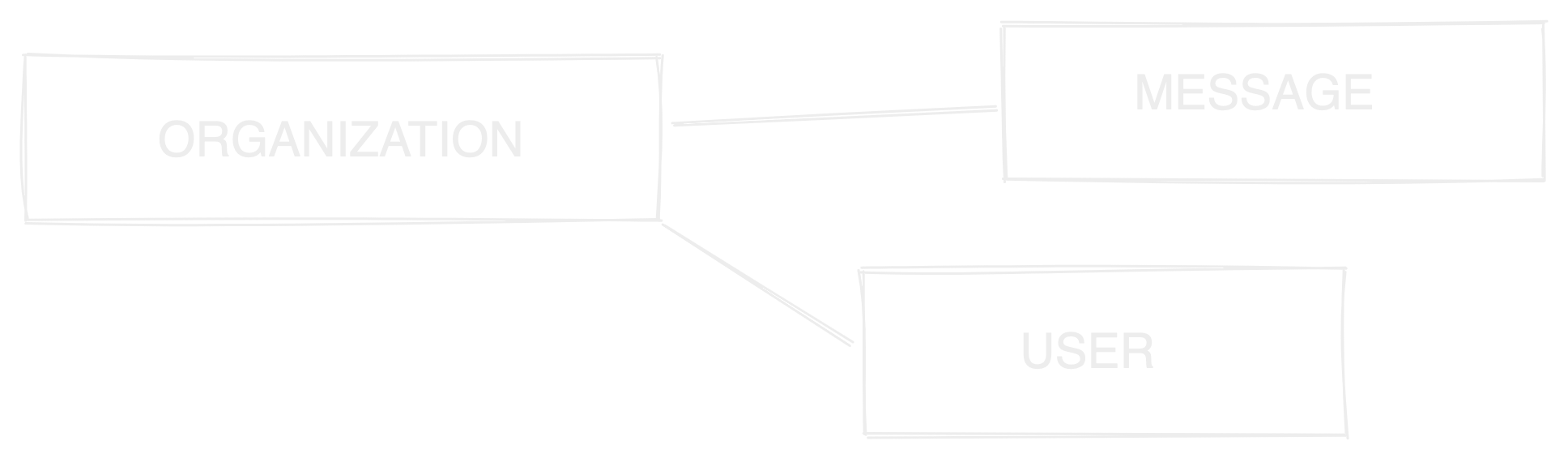

Y.A.M.A. 👻

Let's see a high-level database design for Y.A.M.A.

Y.A.M.A. Adventures 👻

    from django.db import models

    class User(models.Model):
        username = models.CharField(unique=True)
        profile_picture = models.URLField()
        # ...user related fields

Y.A.M.A. Adventures 👻

PM:- Hey, can you tell me why this user exists in our system? 👀

Developer:- Sure, allow me some time to debug 🦟

Y.A.M.A. Adventures 👻

Developer:- To debug this I need to know when this user was created, and I don't have that info in my schema.

Theme #1

Audit your models 📝

What is an audit?

**googles**

An official examination of the present state of something.

Audit your models 📝

Add some metadata fields that will help you audit your models.

Audit your models 📝

The most basic form of auditing can be achieved with the following snippet:

    class CreateUpdateAbstractModel(models.Model):
        created_at = models.DateTimeField(auto_now_add=True)
        updated_at = models.DateTimeField(auto_now=True)

        class Meta:
            abstract = True

        def __str__(self):
            return str(self.id)

For a more advanced version of auditing, you can add other fields such as updated_by, created_by, deleted_at, deleted_by and raw_json.

Audit your models 📝

Things I learned from experience!!!

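Using default=timezone.now has benefits: for example, you can specify the value of created_at while testing without having to mock timezone.now().

Both options are applied by Django in Python, not by the database. The difference is that auto_now_add always overwrites the value when the row is first saved and makes the field non-editable, whereas default=timezone.now is only a fallback used when no value is supplied.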
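The "overridable default" behaviour can be illustrated without Django. This is a plain-Python analogy, not Django code: the UserRecord dataclass and the now() helper are hypothetical names, and default_factory merely plays the role that default=timezone.now plays on a model field.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


def now() -> datetime:
    """Return the current time as a timezone-aware UTC datetime."""
    return datetime.now(timezone.utc)


@dataclass
class UserRecord:
    # Hypothetical stand-in for a model row; not a Django model.
    username: str
    # Like default=timezone.now: the callable runs only when no value is given.
    created_at: datetime = field(default_factory=now)


# In a test, pass a fixed value instead of patching the clock.
fixed = datetime(2020, 1, 1, tzinfo=timezone.utc)
old_user = UserRecord(username="alice", created_at=fixed)
new_user = UserRecord(username="bob")  # falls back to now()
```

A field that always stamped the current time would ignore the value passed for old_user, which is why tests would then need to mock the clock.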

Audit your models 📝

Things I learned from experience!!!

Performance implications of having raw_json in models:

- Django fetches all fields by default

- Postgres stores oversized attributes out of line via TOAST, which adds work when they are read

Alternatives?

- Evaluate for your use case; after all, raw_json is a nice-to-have.

- Keep the JSON in a separate model linked 1:1

- Override the Django manager to defer the JSON field
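The 1:1 alternative can be sketched at the table level with Python's built-in sqlite3 module. This is only an illustration of the layout, assuming hypothetical table names (yama_user, user_raw_payload) and helper functions; it is not how Django would generate the schema.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE yama_user (
        id INTEGER PRIMARY KEY,
        username TEXT NOT NULL UNIQUE
    );
    -- At most one row per user: the primary key doubles as the foreign key.
    CREATE TABLE user_raw_payload (
        user_id INTEGER PRIMARY KEY REFERENCES yama_user(id),
        raw_json TEXT NOT NULL
    );
    """
)
conn.execute("INSERT INTO yama_user (id, username) VALUES (1, 'alice')")
conn.execute(
    "INSERT INTO user_raw_payload (user_id, raw_json) VALUES (?, ?)",
    (1, json.dumps({"source": "signup-form", "blob": "x" * 10_000})),
)
conn.commit()


def list_usernames(db: sqlite3.Connection) -> list:
    """Hot path: reads only the small table, never the large JSON column."""
    return [row[0] for row in db.execute("SELECT username FROM yama_user ORDER BY id")]


def load_raw_payload(db: sqlite3.Connection, user_id: int) -> dict:
    """Cold path: fetch the JSON only when an audit actually needs it."""
    row = db.execute(
        "SELECT raw_json FROM user_raw_payload WHERE user_id = ?", (user_id,)
    ).fetchone()
    return json.loads(row[0]) if row else {}
```

Everyday queries stay on the narrow table, and the payload costs something only when it is explicitly requested.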

Audit your models 📝

you'll not regret it ;)

We can alter our Y.A.M.A. user model as follows:

    from django.utils import timezone

    class User(models.Model):
        created_at = models.DateTimeField(default=timezone.now)
        updated_at = models.DateTimeField(auto_now=True)
        # ...other auditing fields
        username = models.CharField(unique=True)
        profile_picture = models.URLField()
        ...

Y.A.M.A. Adventures 👻

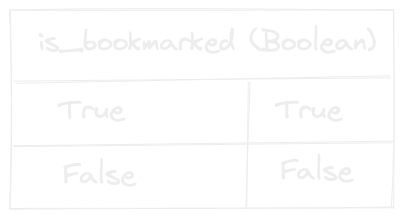

3 weeks later....

No no, nothing dramatic happens!

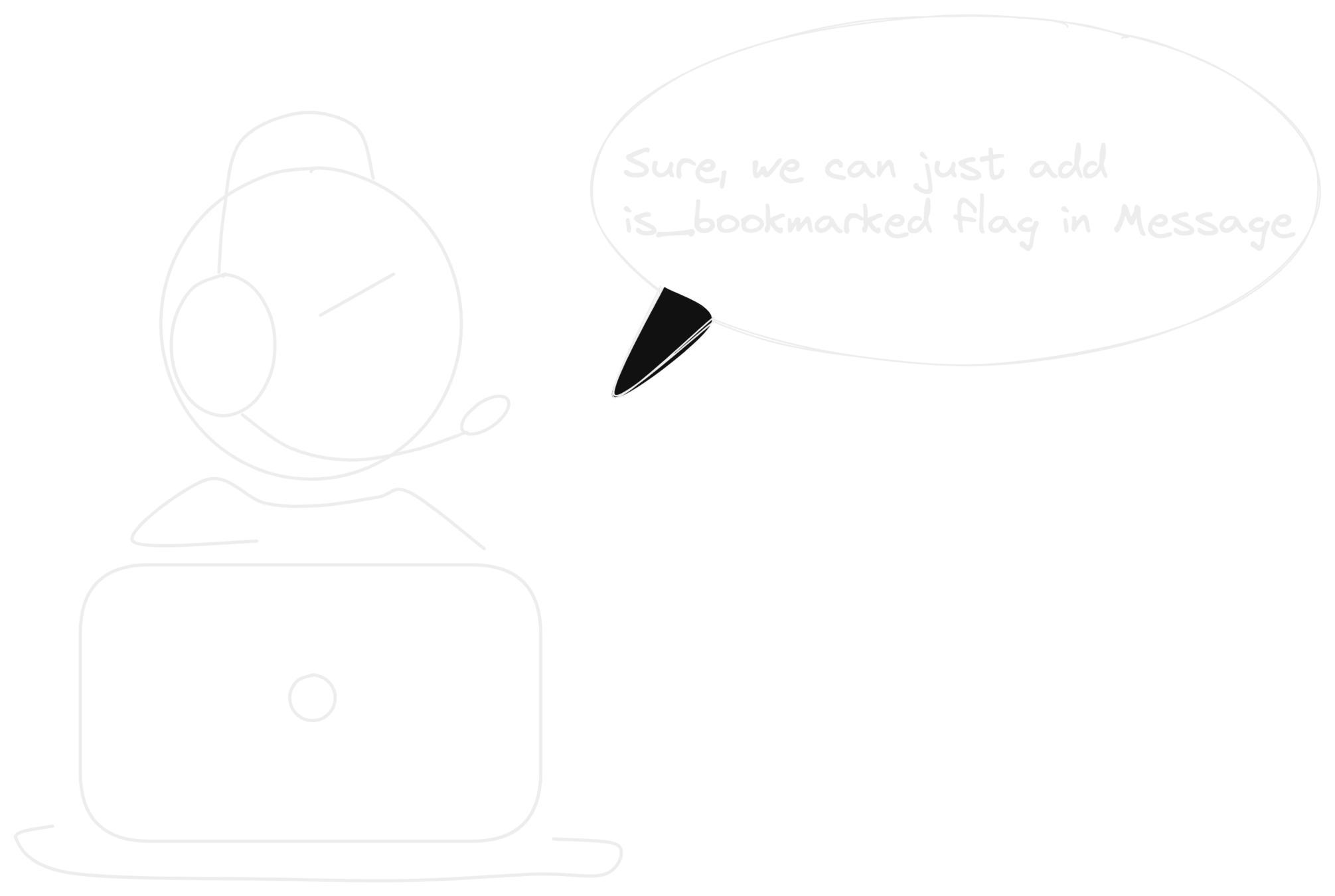

It's just that the boolean field can be swapped with something better here.

Theme #2

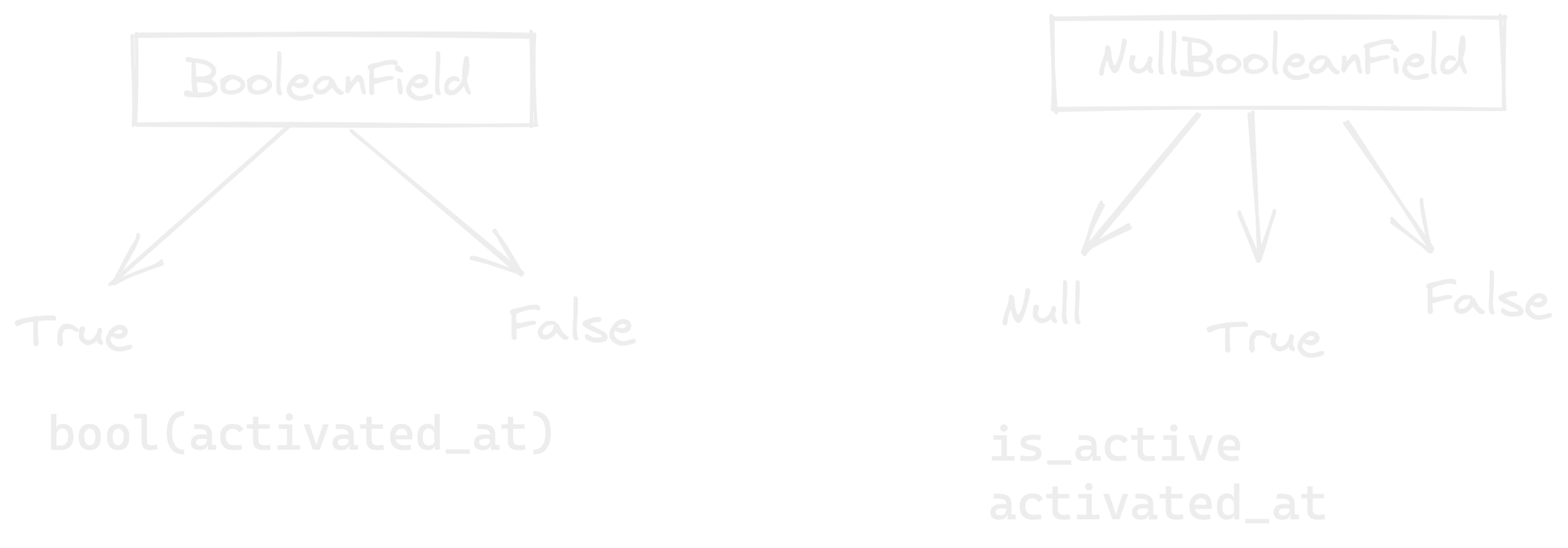

Timestamp your fields ⌚️

What is a timestamp?

**googles**

A digital record of the time of occurrence of a particular event.

Timestamp your fields ⌚️

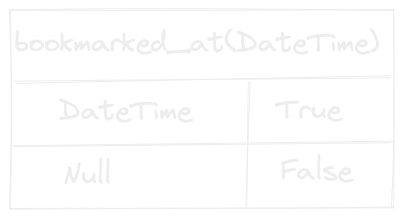

The essence stays almost the same, but a timestamp field records both whether something happened and when, which pays off in the future.

Timestamp your fields ⌚️

Things I learned from experience!!!

- The storage cost is almost negligible compared to the readability and other benefits it provides

- This is hard to replicate for NullBooleanField, since there are now three states to maintain

Timestamp your fields ⌚️

Things I learned from experience!!!

It's not always a timestamp OR boolean debate; sometimes you need both of them.
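One way to get both is to store only the timestamp and derive the boolean from it. The sketch below is plain Python rather than a Django model, and it assumes the flag being replaced is a bookmark flag; MessageRecord, is_bookmarked, bookmark and unbookmark are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class MessageRecord:
    # Hypothetical stand-in for a message row; not a Django model.
    body: str
    bookmarked_at: Optional[datetime] = None  # None means "not bookmarked"

    @property
    def is_bookmarked(self) -> bool:
        """The boolean is derived from the timestamp, never stored."""
        return self.bookmarked_at is not None

    def bookmark(self, when: Optional[datetime] = None) -> None:
        """Record when the bookmark happened; defaults to the current UTC time."""
        self.bookmarked_at = when or datetime.now(timezone.utc)

    def unbookmark(self) -> None:
        """Clearing the timestamp is the same as setting the flag to False."""
        self.bookmarked_at = None
```

Because the flag is computed, it cannot drift out of sync with the timestamp. A stored boolean alongside the timestamp would only be needed when the two can legitimately disagree.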

Timestamp your fields ⌚️

you'll not regret it ;)

We can now change our Message model schema as follows:

    class Message(models.Model):
        created_at = models.DateTimeField(default=timezone.now)
        updated_at = models.DateTimeField(auto_now=True)
        # ...other auditing fields
        # on_delete is required by Django; CASCADE here is an assumption
        user = models.ForeignKey(User, on_delete=models.CASCADE)
        message = models.TextField()
        bookmarked_at = models.DateTimeField(null=True)  # NULL means not bookmarked
        ...

Y.A.M.A. Adventures 👻

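    class Message(models.Model):
        # ...auditing fields
        # on_delete is required by Django; CASCADE here is an assumption
        user = models.ForeignKey(User, on_delete=models.CASCADE)
        deleted_at = models.DateTimeField(null=True)  # NULL while the message is live
        ...

Y.A.M.A. Adventures 👻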

(In future)

For every query involving messages, we had to remember to add a deleted_at IS NULL filter, and it became a pain.

For organizations where deletion was frequent, it also became a performance overhead to maintain.

Theme #3

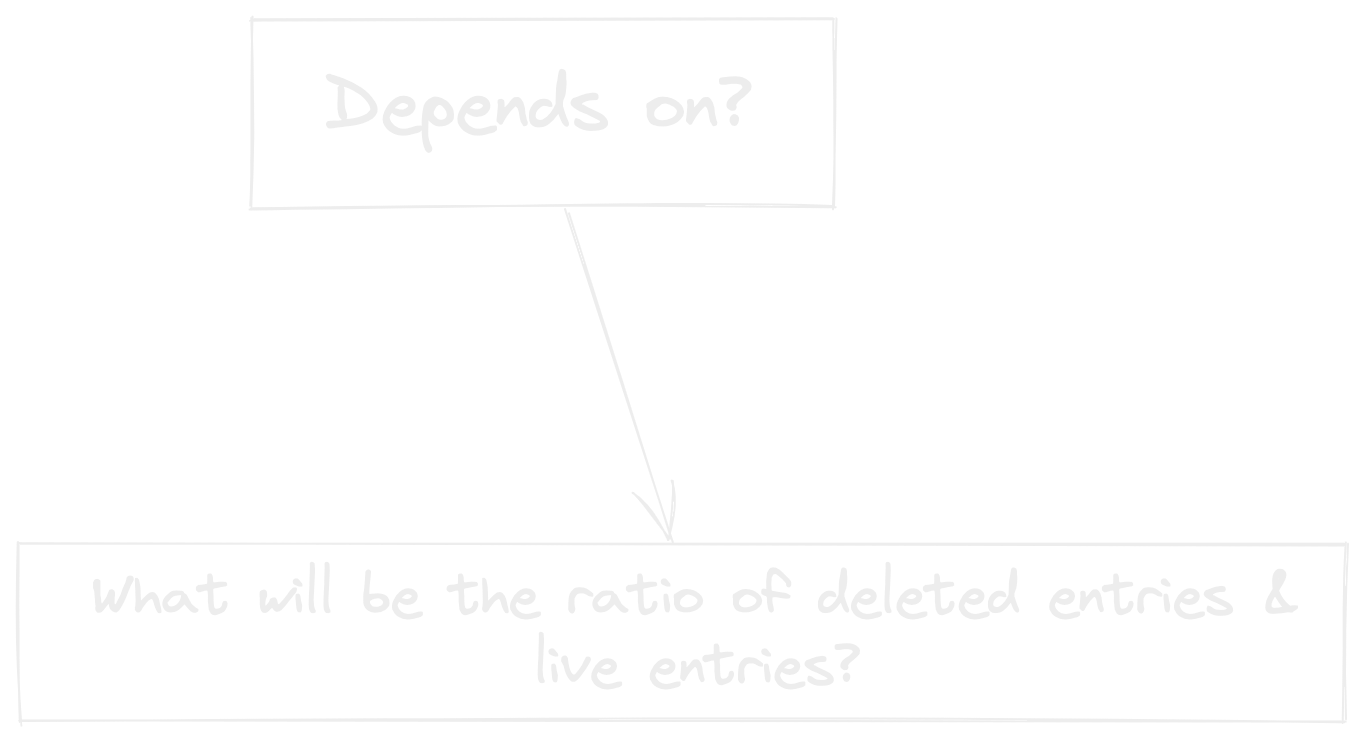

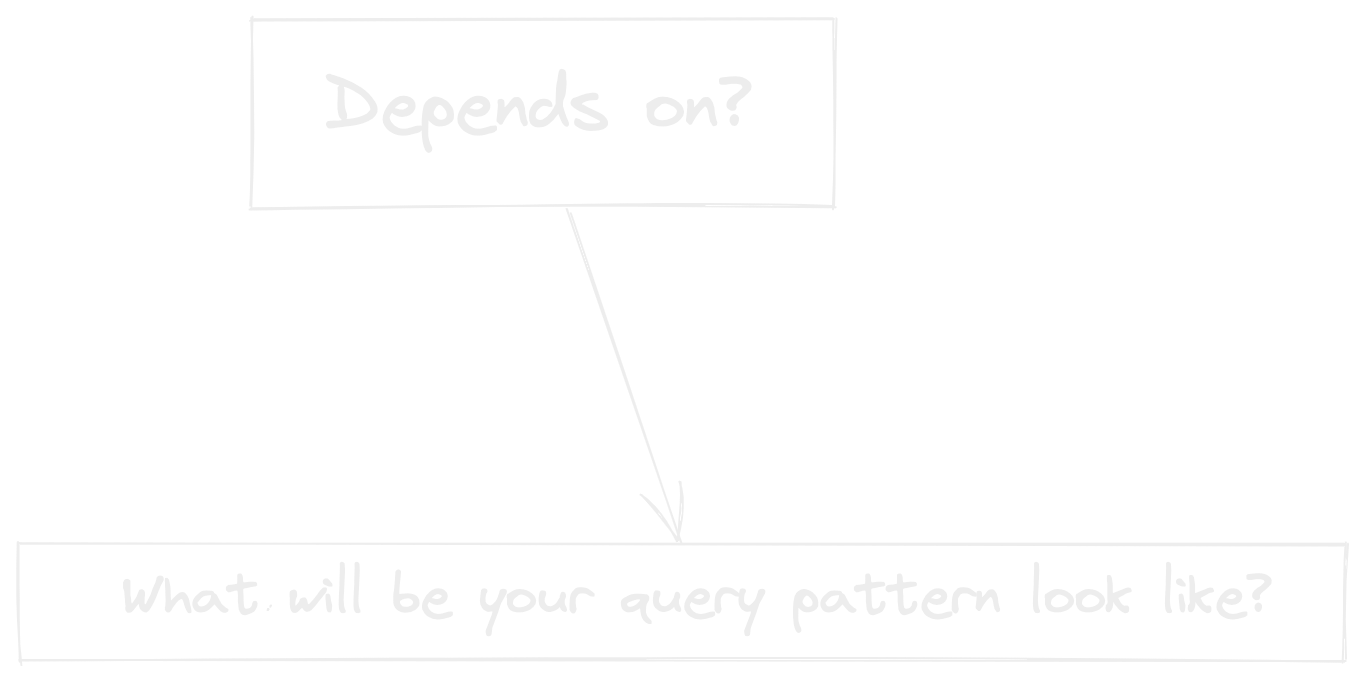

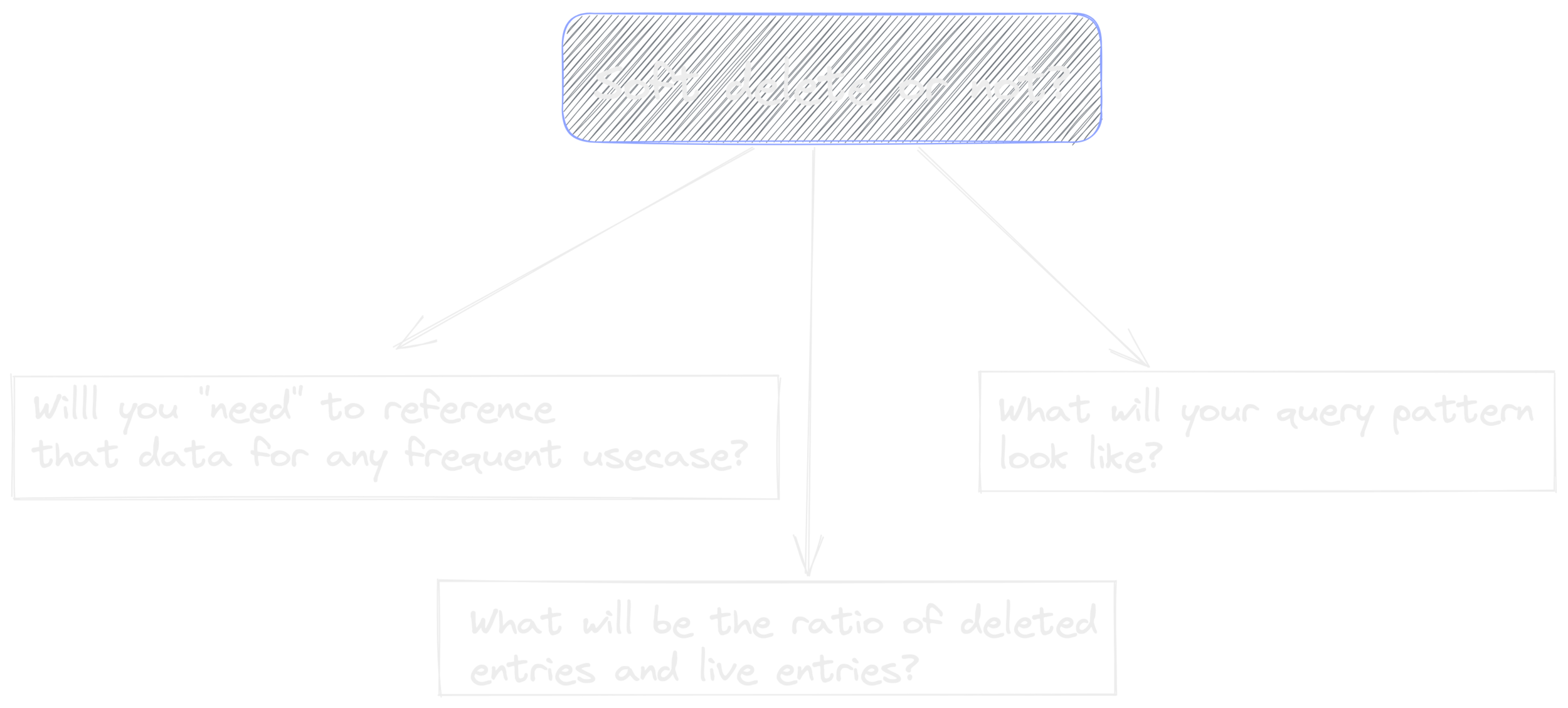

Soft delete or not ✍🏽

What is soft delete?

**duck-duck-go**

"Soft delete" in database lingo means that you set a flag on an existing table which indicates that a record has been deleted, instead of actually deleting the record.

Soft delete or not ✍🏽

In the case of Y.A.M.A., we rarely needed to fetch deleted messages (except for compliance audits), so they can live in a table of their own.

    class DeletedMessage(models.Model):
        # ...auditing fields
        body = models.TextField()
        deleted_at = models.DateTimeField()
        original_message_id = models.PositiveIntegerField()
        ...
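Moving a row into the archive table and removing it from the live table should happen as one atomic step. Here is a minimal sketch of that step using Python's built-in sqlite3 module; the table names and the archive_and_delete helper are hypothetical, and the talk does not say how the move was actually implemented.

```python
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE message (
        id INTEGER PRIMARY KEY,
        body TEXT NOT NULL
    );
    CREATE TABLE deleted_message (
        id INTEGER PRIMARY KEY,
        body TEXT NOT NULL,
        deleted_at TEXT NOT NULL,
        original_message_id INTEGER NOT NULL
    );
    """
)
conn.executemany(
    "INSERT INTO message (id, body) VALUES (?, ?)", [(1, "hello"), (2, "bye")]
)
conn.commit()


def archive_and_delete(db: sqlite3.Connection, message_id: int) -> bool:
    """Copy the row to deleted_message, then hard-delete it, atomically."""
    deleted_at = datetime.now(timezone.utc).isoformat()
    with db:  # commits on success, rolls back if either statement fails
        db.execute(
            "INSERT INTO deleted_message (body, deleted_at, original_message_id) "
            "SELECT body, ?, id FROM message WHERE id = ?",
            (deleted_at, message_id),
        )
        deleted = db.execute("DELETE FROM message WHERE id = ?", (message_id,))
    # True only if a live row existed and was removed.
    return deleted.rowcount == 1
```

With this layout, queries on the live table need no deleted_at filter, and compliance audits read deleted_message directly.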

Y.A.M.A. Adventures 👻

    USER_ROLE_CHOICES = [
        (0, 'Normal'),
        (1, 'Admin'),
        (2, 'Archived'),
    ]

    class User(models.Model):
        # ... other fields
        role = models.PositiveIntegerField(choices=USER_ROLE_CHOICES, default=0)

| pk | username | role |
|---|---|---|
| 4 | Test 4 | 0 |
| 5 | Test 5 | 2 |

    select * from user where role=1

Y.A.M.A. Adventures 👻

It Was Good Until It Wasn't

Django validates choices only during model validation (full_clean(), which ModelForm calls); save() does not check them, so nothing enforces the constraint at the lowest level, which is the database.

Remembering the numerical mapping for every role became a pain.

    user.role = 3
    user.save()  # this will work

Theme #4

Use of choices

Things I learned from experience!!!

Keep strings instead of numbers as values; the benefits outweigh the disadvantages.

    USER_ROLE_CHOICES = [
        ('normal', 'Normal'),
        ('admin', 'Admin'),
        ('archived', 'Archived'),
    ]

    class User(models.Model):
        # ... other fields
        role = models.CharField(choices=USER_ROLE_CHOICES, default='normal', max_length=10)
        ...

    select * from user where role='normal'

Use of choices

Things I learned from experience!!!

Keep choices as namespaced constants.

    class UserRole(models.TextChoices):
        NORMAL = 'normal', 'Normal'
        ADMIN = 'admin', 'Admin'
        ARCHIVED = 'archived', 'Archived'

    class User(models.Model):
        ...
        role = models.CharField(choices=UserRole.choices, default=UserRole.NORMAL, max_length=10)
        ...

Use of choices

Things I learned from experience!!!

Since Django does not enforce choices at the database level, we can force it to do so via a check constraint:

    class UserRole(models.TextChoices):
        NORMAL = 'normal', 'Normal'
        ADMIN = 'admin', 'Admin'
        ARCHIVED = 'archived', 'Archived'

    class User(models.Model):
        ...
        # CharField, because UserRole holds string values
        role = models.CharField(choices=UserRole.choices, default=UserRole.NORMAL, max_length=10)
        ...

        class Meta:
            constraints = [
                models.CheckConstraint(
                    name="%(app_label)s_%(class)s_role_valid",
                    # Django 5.1+ names this argument `condition`
                    check=models.Q(role__in=UserRole.values),
                )
            ]
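What a database-level check buys you can be seen outside Django too. The sketch below uses Python's built-in sqlite3 module with hand-written SQL; the yama_user table, the constraint name and the try_insert helper are hypothetical, and the SQL is not what Django would generate.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE yama_user (
        id INTEGER PRIMARY KEY,
        username TEXT NOT NULL UNIQUE,
        role TEXT NOT NULL DEFAULT 'normal'
            CONSTRAINT yama_user_role_valid
            CHECK (role IN ('normal', 'admin', 'archived'))
    )
    """
)


def try_insert(db: sqlite3.Connection, username: str, role: str) -> bool:
    """Return True if the row was stored, False if the database rejected it."""
    try:
        with db:  # commit on success, roll back on error
            db.execute(
                "INSERT INTO yama_user (username, role) VALUES (?, ?)",
                (username, role),
            )
        return True
    except sqlite3.IntegrityError:
        # Raised when the CHECK (or UNIQUE) constraint is violated.
        return False
```

Here the invalid value is rejected no matter which code path writes it, including scripts and raw SQL that bypass model validation.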

Y.A.M.A. Adventures 👻

Run the following SQL every hour:

    SELECT org.name, count(msg.id) FROM message msg
    JOIN user u ON u.id = msg.user_id
    JOIN organization org ON org.id = u.organization_id
    GROUP BY 1

Things were fine in the citadel until, one Friday, the database server crashed because of this query 👹

Reason? The expensive join between user & message

Theme #5

Denormalization 🙌🏻

What is denormalization?

**bings**

The process of adding precomputed redundant data to an otherwise normalized relational database to improve the read performance of the database.

Denormalization 🙌🏻

It is OK to denormalize; in fact, denormalization might be one of the top reasons why new-age databases are able to scale up.

Before :-

Denormalization 🙌🏻

Option #1 :-

Option #2 :-

Denormalization 🙌🏻

Things I learned from experience!!!

- Everything done in excess is bad, and the same is true for both normalization and denormalization

- Every software optimization is a tradeoff; here the tradeoff is between faster reads with slower writes and faster writes with slower reads
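The read/write tradeoff can be made concrete with a small sketch using Python's built-in sqlite3 module. It shows one possible denormalization, a redundant organization_id copied onto each message, which is an assumption and not necessarily either option from the slides; the table layout and the add_message helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE organization (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE yama_user (
        id INTEGER PRIMARY KEY,
        organization_id INTEGER NOT NULL REFERENCES organization(id)
    );
    CREATE TABLE message (
        id INTEGER PRIMARY KEY,
        user_id INTEGER NOT NULL REFERENCES yama_user(id),
        -- Redundant copy of yama_user.organization_id (the denormalized column).
        organization_id INTEGER NOT NULL REFERENCES organization(id)
    );
    INSERT INTO organization VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO yama_user VALUES (10, 1), (11, 1), (20, 2);
    """
)


def add_message(db: sqlite3.Connection, user_id: int) -> None:
    """Write path pays the cost: look up and copy the user's organization."""
    with db:
        db.execute(
            "INSERT INTO message (user_id, organization_id) "
            "SELECT id, organization_id FROM yama_user WHERE id = ?",
            (user_id,),
        )


# Read path before: two joins, one of them between message and user.
NORMALIZED = """
    SELECT org.name, COUNT(msg.id) FROM message msg
    JOIN yama_user u ON u.id = msg.user_id
    JOIN organization org ON org.id = u.organization_id
    GROUP BY org.name ORDER BY org.name
"""
# Read path after: the message-to-user join is gone.
DENORMALIZED = """
    SELECT org.name, COUNT(msg.id) FROM message msg
    JOIN organization org ON org.id = msg.organization_id
    GROUP BY org.name ORDER BY org.name
"""

for uid in (10, 11, 11, 20):
    add_message(conn, uid)
```

Both queries return the same counts, but the redundant column must be kept in sync if a user ever changes organization, which is the slower-writes side of the tradeoff.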

Bring down the curtain 🤗

- Auditing your models can go a long way towards making debugging easy

- When tempted to use boolean flags for something, evaluate whether you can use timestamp fields instead; kill two birds with one stone

- Soft delete is good, but it can also be the root of problems; so evaluate.

- Use Django choices wisely and maintain consistency.

- Denormalization is OK; it is a tradeoff.

Don't follow any advice from here (or from anyone) blindly without evaluating your use case.

🙏🏻 Open to feedback or questions!

@Tarun_Garg2

tarungarg546