Send Your Apps

Back To School

3 funky strategies to make your NativeScript apps smarter!

Who am I?

Jen Looper - Progress - Senior Developer Advocate

@jenlooper

Help!

My apps are stupid and boring

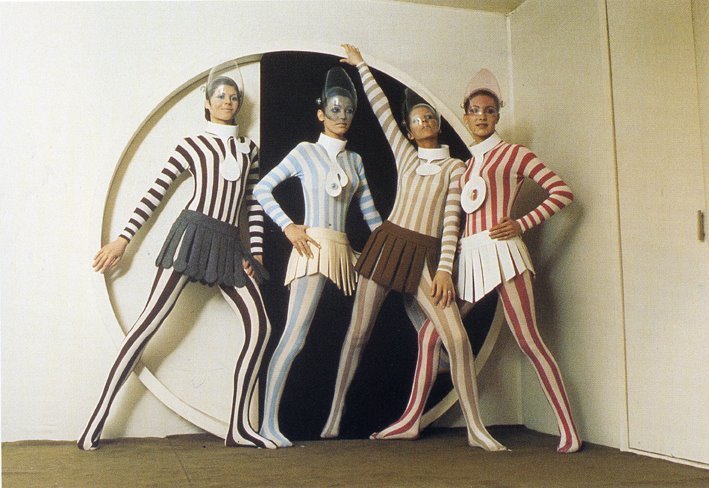

Get suited up!

It's back-to-school time for your apps!

Today's Curriculum:

- a technique to make your app more 'sensitive'

- turn your app into a personal assistant with one plugin

- two machine learning APIs to try

3 ways to make your apps "smarter"

smarter = more human-like

QuickNoms - a smart recipe app

Powered by Firebase

Submit your recipes on the web!

QuickNoms.com

Mobile App Features:

- Algolia search

- Firebase Remote Config marquee

- Text-To-Speech

goal: empathetic apps

1: Make your app sensitive

"wow, it's hot in here!"

build a sensor-powered recipe recommender

add a sensor integration!

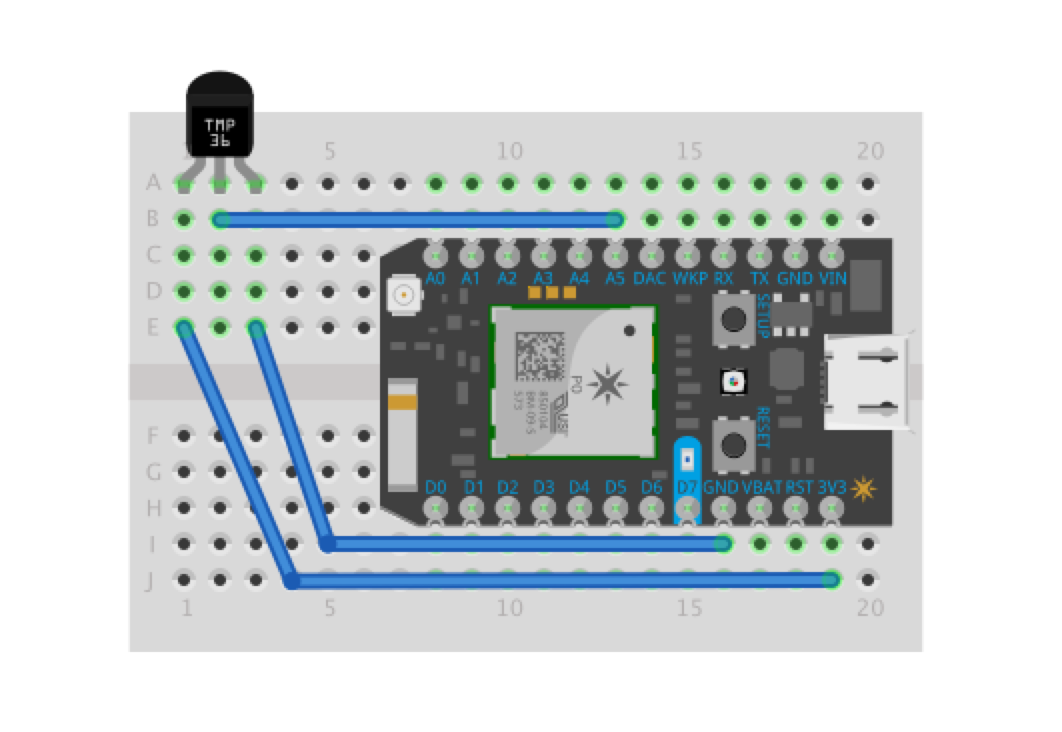

Wi-Fi-connected Particle Photon + temperature sensor - about $25 total

Build the device

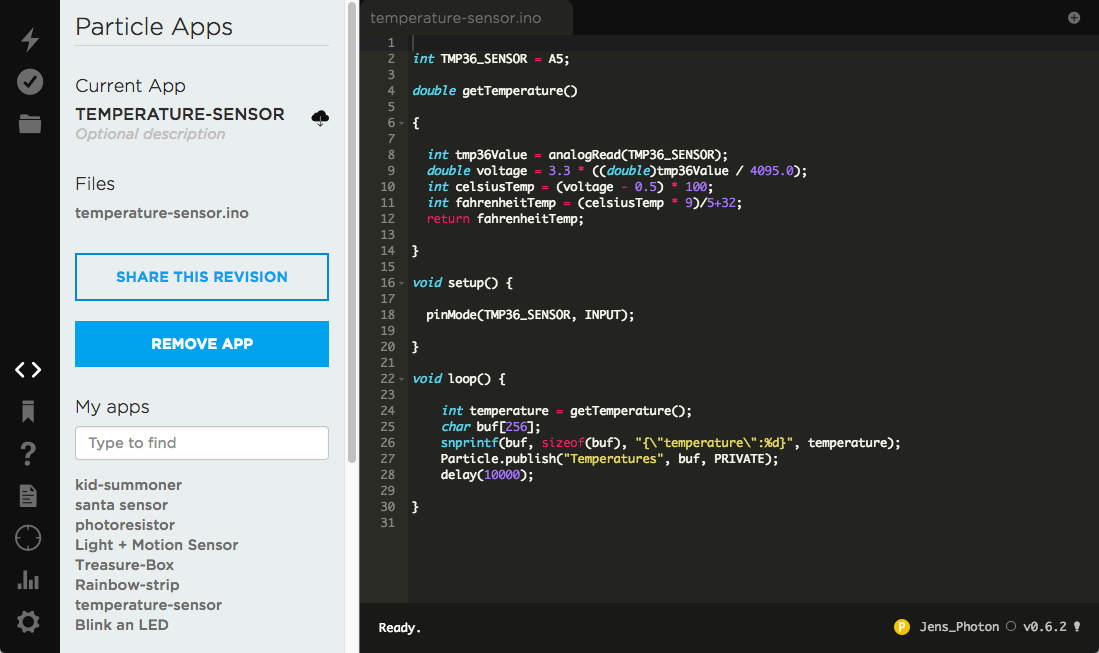

Flash code to Photon

Photon reads the temperature every 10 seconds and writes the data to the Particle Cloud

Build webhook

webhook lives in the Particle Cloud and watches for data the Photon writes to the cloud

webhook writes to Firebase
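A minimal sketch of the mapping this webhook step performs, written as a TypeScript function for illustration. It assumes Particle's default webhook JSON (`event`, `data`, `published_at`, `coreid`) and a hypothetical Firebase record shape whose `temperature` field matches what the app reads later; `toTemperatureRecord` is a hypothetical name.

```typescript
// Assumed shape of the JSON Particle sends by default when a webhook fires.
interface ParticleWebhookPayload {
    event: string;
    data: string;         // the published value, always a string, e.g. "72.5"
    published_at: string; // ISO 8601 timestamp
    coreid: string;       // the Photon's device ID
}

// Hypothetical record stored in Firebase; `temperature` matches the
// field the app reads in ngOnInit.
interface TemperatureRecord {
    deviceId: string;
    temperature: number;
    timestamp: number; // milliseconds since the epoch
}

// Convert one webhook payload into a Firebase-ready record.
// Returns null if the reading cannot be parsed as a number.
function toTemperatureRecord(payload: ParticleWebhookPayload): TemperatureRecord | null {
    const temperature = parseFloat(payload.data);
    if (isNaN(temperature)) {
        return null;
    }
    return {
        deviceId: payload.coreid,
        temperature: temperature,
        timestamp: Date.parse(payload.published_at),
    };
}
```

Because `data` always arrives as a string, the sketch parses it and returns `null` for unreadable values rather than writing junk to Firebase.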

app consumes data and reacts

Select recipes tagged as 'hot' or 'cold' - atmosphere type recipes
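One way to express the 'hot' / 'cold' selection, as a sketch. The `Recipe` type, `atmosphereFor`, and `recommendRecipes` are hypothetical names; the 70-degree Fahrenheit cutoff mirrors the check in `ngOnInit`, and we assume a recipe's tag names the weather it suits (a 'hot' recipe is a refreshing one for a warm room).

```typescript
type Atmosphere = "hot" | "cold";

interface Recipe {
    name: string;
    tags: string[];
}

// Cutoff in degrees Fahrenheit, mirroring the 70-degree check in ngOnInit.
const WARM_THRESHOLD_F = 70;

// Above the cutoff counts as "hot"; everything else is "cold".
function atmosphereFor(temperatureF: number): Atmosphere {
    return temperatureF > WARM_THRESHOLD_F ? "hot" : "cold";
}

// Keep only recipes tagged with the current atmosphere.
// Assumption: a recipe's tag names the weather it suits.
function recommendRecipes(recipes: Recipe[], temperatureF: number): Recipe[] {
    const atmosphere = atmosphereFor(temperatureF);
    return recipes.filter(recipe => recipe.tags.indexOf(atmosphere) !== -1);
}
```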

An Observable subscribes to the temperature saved to Firebase

```typescript
ngOnInit(): void {
    // React whenever the Photon's latest reading lands in Firebase
    this.recipesService.getTemperatures(AuthService.deviceId).subscribe((temperature) => {
        this.temperature$ = temperature[0].temperature;
        // The 70-degree cutoff assumes Fahrenheit readings
        if (Number(this.temperature$) > 70) {
            // Warm room: orange gradient, refreshing recipes
            this.gradient = "#ff6207, #ff731c, #ffe949";
            this.recommendation = "It seems pretty warm in here! I'd recommend some recipes " +
                "that might be refreshing";
        } else {
            // Cool room: blue gradient, warming recipes
            this.gradient = "#05abe0, #53cbf1, #87e0fd";
            this.recommendation = "It seems pretty cool in here! I'd recommend some recipes " +
                "that might be warm and toasty";
        }
    });
}
```

Scalable?

Google Nest: use its API instead of a homemade sensor

demo

2: Humanize with plugins

Your app as a personal assistant

Try a plugin like Text-To-Speech

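```typescript
import { TNSTextToSpeech, SpeakOptions } from "nativescript-texttospeech";

// Component method; this.TTS is a TNSTextToSpeech instance
speak(text: string) {
    this.isSpeaking = true;
    const speakOptions: SpeakOptions = {
        text: text,
        speakRate: 0.5,
        // Called by the plugin when the utterance finishes
        finishedCallback: () => {
            this.isSpeaking = false;
        }
    };
    this.TTS.speak(speakOptions);
}
```

Pass a string to the plugin to use the device's native text-to-speech engine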
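The plugin only needs a string, so the interesting work is deciding what to say. A sketch of a hypothetical helper that turns a recipe into one script for `speak()`; the `RecipeForSpeech` shape and `recipeToSpeech` name are assumptions, not QuickNoms' actual data model.

```typescript
// Hypothetical recipe shape used only for this sketch.
interface RecipeForSpeech {
    title: string;
    ingredients: string[];
    steps: string[];
}

// Build a single plain-text script to hand to the text-to-speech plugin.
function recipeToSpeech(recipe: RecipeForSpeech): string {
    const parts: string[] = [`Let's make ${recipe.title}.`];
    if (recipe.ingredients.length > 0) {
        parts.push(`You will need ${recipe.ingredients.join(", ")}.`);
    }
    // Number the steps so the listener can follow along
    recipe.steps.forEach((step, index) => {
        parts.push(`Step ${index + 1}: ${step}`);
    });
    return parts.join(" ");
}
```

Usage: `this.speak(recipeToSpeech(recipe))`.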

demo

3: Add some Machine Learning

ML is easy

not

Use a third party with pretrained models

Clarifai: specialists in image analysis

Took the top 5 places in the 2013 ImageNet challenge

Innovative techniques in training models to analyze images

Offers useful pretrained models such as "Food", "Wedding", and "NSFW"

Or, train your own model!

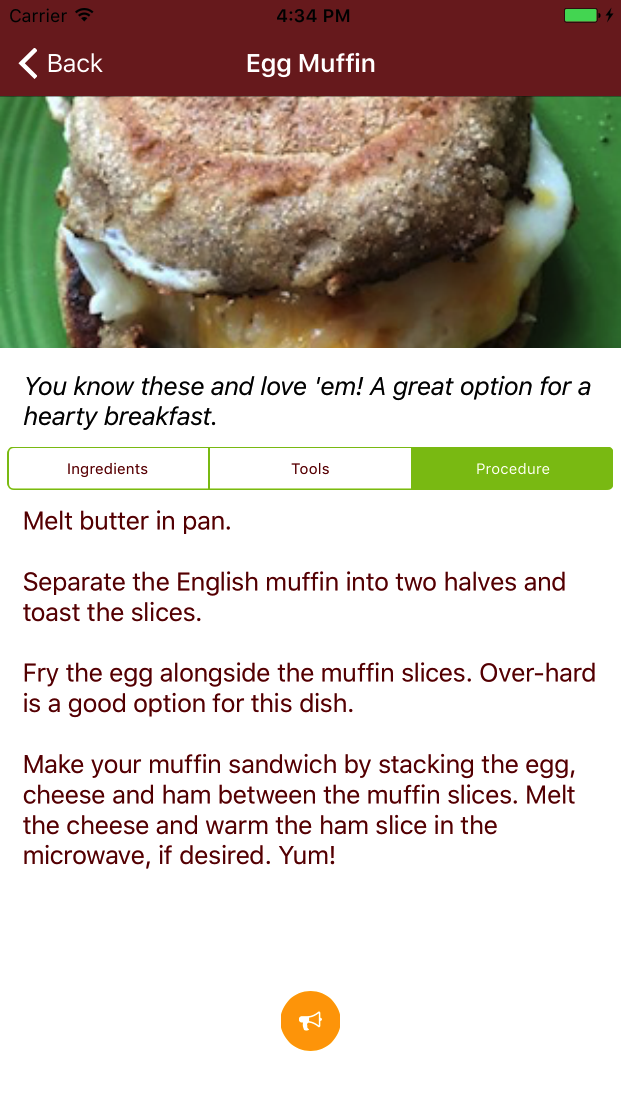

"Does this dish qualify as a QuickNom?"

Use Clarifai's pretrained Food model to analyze images of plates of food for inspiration

probably not!

might be!

Take a picture

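```typescript
import * as camera from "nativescript-camera";
import { ImageAsset } from "tns-core-modules/image-asset";

// Call camera.requestPermissions() before this on Android
takePhoto() {
    // A small image keeps the base64 upload light
    const options: camera.CameraOptions = {
        width: 300,
        height: 300,
        keepAspectRatio: true,
        saveToGallery: false
    };
    camera.takePicture(options)
        .then((imageAsset: ImageAsset) => {
            this.processRecipePic(imageAsset);
        })
        .catch(err => {
            console.log(err.message);
        });
}
```

Send it to Clarifai via a REST API call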

```typescript
import * as http from "tns-core-modules/http";

public queryClarifaiAPI(imageAsBase64: string): Promise<http.HttpResponse> {
    // POST the image to the Food model; AuthService holds the model URL and API key
    return http.request({
        url: AuthService.clarifaiUrl,
        method: "POST",
        headers: {
            "Content-Type": "application/json",
            "Authorization": "Key " + AuthService.clarifaiKey
        },
        content: JSON.stringify({
            inputs: [{
                data: {
                    image: { base64: imageAsBase64 }
                }
            }]
        })
    });
}
```

Analyze returned tags

```typescript
// Chained onto the Promise returned by queryClarifaiAPI()
.then(res => {
    this.loader.hide();
    try {
        const result = res.content.toJSON();
        // Keep only the concepts the Food model is more than ~90% sure about
        const ingredients: string[] = result.outputs[0].data.concepts
            .filter(concept => concept.value > 0.899)
            .map(concept => concept.name);
        // There should be between four and eight discernible ingredients
        if (ingredients.length >= 4 && ingredients.length <= 8) {
            alert("Yes! This dish might qualify as a QuickNom! It contains " + ingredients.join(", "));
        } else {
            alert("Hmm. This recipe doesn't have the qualifications of a QuickNom. Try again!");
        }
    } catch (err) {
        console.log(err.message);
    }
});
```

If between 4 and 8 ingredients are listed with over 0.899 certainty, it's a QuickNom!
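The qualification rule, pulled out into pure functions so it can be tested without the camera or the network. A sketch: `Concept` mirrors the `name` and `value` fields read from Clarifai's `concepts` array, while the function and constant names are hypothetical.

```typescript
// One concept from the Food model: a label and its probability.
interface Concept {
    name: string;
    value: number;
}

const CONFIDENCE_CUTOFF = 0.899;
const MIN_INGREDIENTS = 4;
const MAX_INGREDIENTS = 8;

// Ingredients the model is more than ~90% sure about.
function confidentIngredients(concepts: Concept[]): string[] {
    return concepts
        .filter(concept => concept.value > CONFIDENCE_CUTOFF)
        .map(concept => concept.name);
}

// A dish qualifies when 4 to 8 ingredients (inclusive) clear the cutoff.
function isQuickNom(concepts: Concept[]): boolean {
    const count = confidentIngredients(concepts).length;
    return count >= MIN_INGREDIENTS && count <= MAX_INGREDIENTS;
}
```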

QuickNom dishes have a few easy-to-see, simple ingredients

demo

"What can I make with an avocado?"

Use Google's Vision API to match images with recipes

Do it all with Google!

Leverage Google's experience analyzing millions of photos (think Google Photos) through the Cloud Vision API:

- Label Detection

- Explicit Content Detection

- Logo Detection

- Landmark Detection

- Face Detection

- Image Attributes

- Web Detection (search for similar)
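Several of these detections can be requested in one `images:annotate` call by listing more than one entry in `features`. A sketch of a request-body builder; `buildAnnotateRequest` and the `VISION_FEATURES` keys are hypothetical names, while the `type` strings are Cloud Vision's feature type names (Explicit Content Detection is `SAFE_SEARCH_DETECTION`, Image Attributes is `IMAGE_PROPERTIES`).

```typescript
// Cloud Vision feature type names for the detections listed above.
const VISION_FEATURES = {
    label: "LABEL_DETECTION",
    explicitContent: "SAFE_SEARCH_DETECTION",
    logo: "LOGO_DETECTION",
    landmark: "LANDMARK_DETECTION",
    face: "FACE_DETECTION",
    imageAttributes: "IMAGE_PROPERTIES",
    web: "WEB_DETECTION",
} as const;

type FeatureKey = keyof typeof VISION_FEATURES;

// Build an images:annotate body asking for several detections on one image.
function buildAnnotateRequest(imageAsBase64: string, features: FeatureKey[], maxResults = 5) {
    return {
        requests: [{
            image: { content: imageAsBase64 },
            features: features.map(key => ({ type: VISION_FEATURES[key], maxResults: maxResults })),
        }],
    };
}
```

Usage: `JSON.stringify(buildAnnotateRequest(imageAsBase64, ["label", "web"]))` becomes the `content` of the POST.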

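```typescript
import * as camera from "nativescript-camera";
import { ImageAsset } from "tns-core-modules/image-asset";

// Call camera.requestPermissions() before this on Android
takePhoto() {
    // A small image keeps the base64 upload light
    const options: camera.CameraOptions = {
        width: 300,
        height: 300,
        keepAspectRatio: true,
        saveToGallery: false
    };
    camera.takePicture(options)
        .then((imageAsset: ImageAsset) => {
            this.processItemPic(imageAsset);
        })
        .catch(err => {
            console.log(err.message);
        });
}
```

Take a picture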

```typescript
import * as http from "tns-core-modules/http";

public queryGoogleVisionAPI(imageAsBase64: string): Promise<http.HttpResponse> {
    return http.request({
        url: "https://vision.googleapis.com/v1/images:annotate?key=" + AuthService.googleKey,
        method: "POST",
        // No hand-set Content-Length: the base64 length understates the full JSON body
        headers: {
            "Content-Type": "application/json"
        },
        content: JSON.stringify({
            requests: [{
                image: { content: imageAsBase64 },
                // Ask only for the single most likely label
                features: [{ type: "LABEL_DETECTION", maxResults: 1 }]
            }]
        })
    });
}
```

Send it to Google

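```typescript
this.mlService.queryGoogleVisionAPI(imageAsBase64)
    .then(res => {
        const result = res.content.toJSON();
        // labelAnnotations may be missing if Vision found no labels
        const labels = result.responses[0].labelAnnotations || [];
        if (labels.length === 0) {
            return;
        }
        this.ingredient = labels[0].description;
        // The HTTP callback runs outside Angular's zone; re-enter it so the UI updates
        this.ngZone.run(() => {
            this.searchRecipes(this.ingredient);
        });
    })
    .catch(err => console.log(err.message));
```

Grab the first label returned and send it to Algolia search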
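A defensive version of the "first label" step, as a sketch: `firstLabel` and the response interfaces are hypothetical names typed to the fields the code above reads. It returns `null` instead of throwing when the response carries no labels, so the caller can skip the Algolia search.

```typescript
// Only the fields this sketch reads from an images:annotate response.
interface LabelAnnotation {
    description: string;
    score: number;
}

interface AnnotateResponse {
    responses: { labelAnnotations?: LabelAnnotation[] }[];
}

// Return the top label's description, or null when there is none.
function firstLabel(result: AnnotateResponse): string | null {
    const labels = result.responses[0]?.labelAnnotations ?? [];
    return labels.length > 0 ? labels[0].description : null;
}
```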

demo

Looking forward

Machine learning on device

What if you don't want to make a bunch of REST API calls?

What if you need offline capability?

What if you need to reduce costs? (API calls can add up)

What if you need to train something really custom?

Machine learning on device

Just landed in iOS 11: Core ML

Train a model, let Core ML process it for your app on device

Machine learning on device

TensorFlow Mobile

Designed to run on low-end Android devices; works on both iOS and Android

In use in Google Translate (real-time text recognition)

demo:

TensorFlow on iOS

demo:

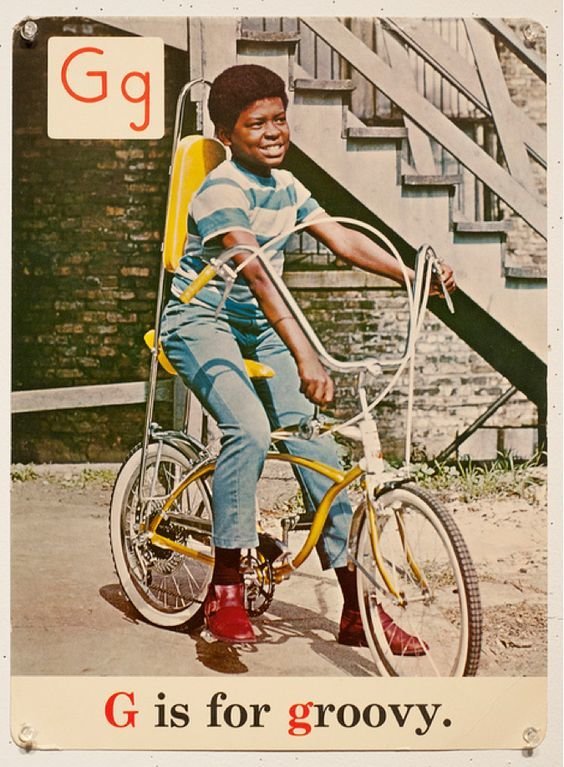

Thanks for a groovy time!

@jenlooper