What are adversarial examples?

@tiffanysouterre

Developer Relations

@Microsoft

Tiffany Souterre

WTM Ambassador

@WTM

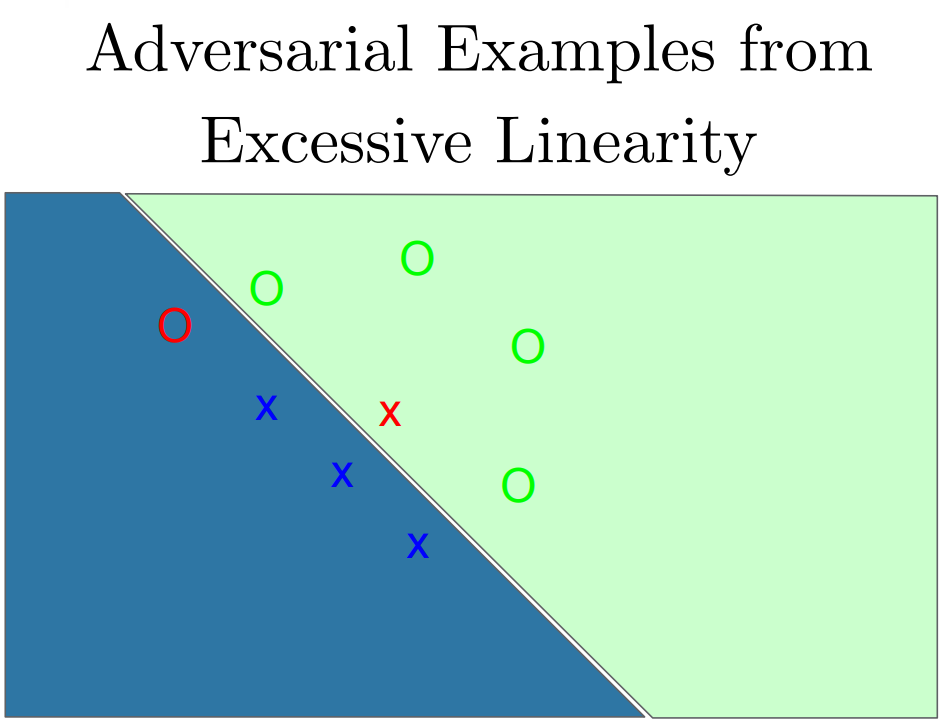

(Ian J. Goodfellow, et al. 2016)

(Christian Szegedy, et al. 2016)

(Kurakin A., et al. 2017)

(Mahmood Sharif, 2016)

(Anish Athalye, et al. 2018)

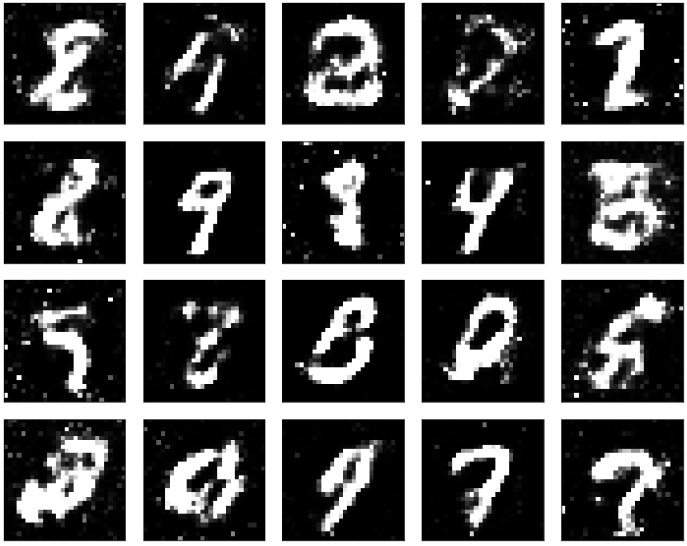

Figure: a handwritten digit is a 28 px × 28 px grid of pixel intensities between 0.00 and 1.00 (e.g. 0.38). The grid is flattened into an input layer of 784 neurons, passed through hidden layers, and mapped to an output layer of 10 neurons, one per digit 0 to 9. The panels compare three networks: 1 input layer and 1 output layer; 1 input layer, 1 hidden layer and 1 output layer; 1 input layer, 4 hidden layers and 1 output layer.
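The forward pass in the figure can be sketched in a few lines of NumPy. This is a minimal illustration only: the hidden-layer size (32), the ReLU activation and the random, untrained weights are hypothetical choices, not taken from the slides.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    # Subtract the max before exponentiating for numerical stability
    e = np.exp(z - z.max())
    return e / e.sum()

# Hypothetical layer sizes: 784 inputs, one hidden layer of 32 neurons, 10 outputs
W1 = rng.normal(0.0, 0.05, size=(784, 32))
b1 = np.zeros(32)
W2 = rng.normal(0.0, 0.05, size=(32, 10))
b2 = np.zeros(10)

def predict(image_28x28):
    x = image_28x28.reshape(784)   # flatten the 28 x 28 pixels into the input layer
    h = relu(x @ W1 + b1)          # hidden layer
    return softmax(h @ W2 + b2)    # one probability per digit 0..9

# A made-up "image" with pixel intensities between 0.00 and 1.00
image = rng.uniform(0.0, 1.0, size=(28, 28))
probs = predict(image)
print(probs.shape, probs.argmax())
```

With trained weights, the index of the largest output is the predicted digit; adding hidden layers (as in the last panel) only adds more `relu(h @ W + b)` steps.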

https://karpathy.github.io/2014/09/02/what-i-learned-from-competing-against-a-convnet-on-imagenet/

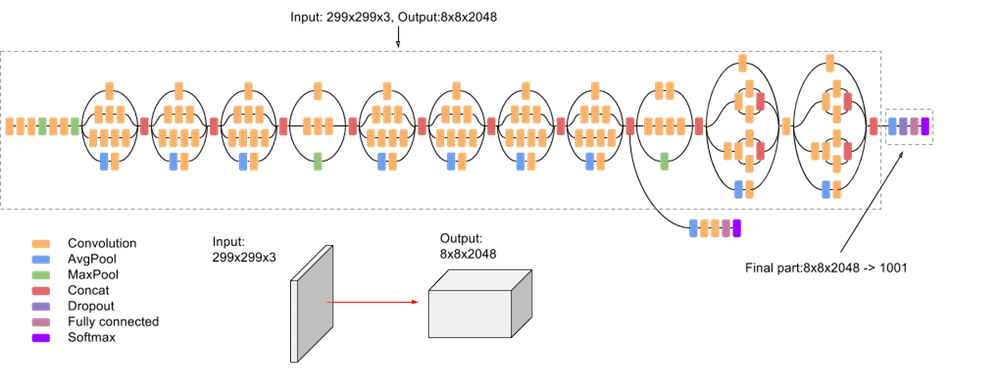

Inception-v3 Architecture

Trained on the ImageNet database (1,000 classes)

Input: 299 × 299 × 3 (height × width × RGB channels)

```python
import numpy as np
from keras.preprocessing import image
from keras.applications import inception_v3

# Load the cat picture at the 299 x 299 resolution Inception-v3 expects
img = image.load_img("katoun.png", target_size=(299, 299))
img.show()
input_image = image.img_to_array(img)

# Scale pixels from [0, 255] to [-1, 1], the range Inception-v3 was trained on
input_image /= 255.
input_image -= 0.5
input_image *= 2.

# Add a batch dimension: (299, 299, 3) -> (1, 299, 299, 3)
input_image = np.expand_dims(input_image, axis=0)

# Load the ImageNet-pretrained model and classify the image
model = inception_v3.InceptionV3()
predictions = model.predict(input_image)

# Turn the highest score back into a human-readable ImageNet label
predicted_classes = inception_v3.decode_predictions(predictions, top=1)
imagenet_id, name, confidence = predicted_classes[0][0]
print("This is a {} with {:.4}% confidence!".format(name, confidence * 100))
```

Figure: the image as the model sees it, three 299 × 299 grids of pixel values (one per color channel), with rows and columns numbered 0 to 298.

This is a tabby with 86.86% confidence!
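The three scaling steps in the classification code (divide by 255, subtract 0.5, multiply by 2) amount to the single affine map x / 127.5 - 1, which sends pixel values from [0, 255] to [-1, 1]; this should correspond to what Keras's `inception_v3.preprocess_input` does. A quick NumPy check on a random, hypothetical 299 × 299 × 3 image:

```python
import numpy as np

def preprocess(pixels):
    """Map [0, 255] pixel values to [-1, 1] with the same three steps as the slide."""
    x = pixels.astype(np.float32) / 255.0
    x -= 0.5
    x *= 2.0
    return x

# Hypothetical 299 x 299 RGB image: one 299 x 299 grid per color channel
pixels = np.random.default_rng(0).integers(0, 256, size=(299, 299, 3))
x = preprocess(pixels)

# The three steps collapse into one affine map: x / 127.5 - 1
assert np.allclose(x, pixels / 127.5 - 1.0, atol=1e-6)

batch = np.expand_dims(x, axis=0)  # add the batch dimension the model expects
print(batch.shape, x.min(), x.max())
```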

Inception V3

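```python
import numpy as np
from keras.preprocessing import image
from keras.applications import inception_v3
from keras import backend as K
from PIL import Image

# K.gradients and K.learning_phase() require Keras 2.x on a graph-mode (TensorFlow 1.x) backend
model = inception_v3.InceptionV3()
model_input_layer = model.layers[0].input
model_output_layer = model.layers[-1].output

object_type_to_fake = 910  # assumption: ImageNet index of "wooden spoon"
learning_rate = 0.1        # assumption: step size

# Start from the cat picture, scaled to [-1, 1] as on the previous slide
img = image.load_img("katoun.png", target_size=(299, 299))
original_image = np.expand_dims((image.img_to_array(img) / 255. - 0.5) * 2., axis=0)
hacked_image = np.copy(original_image)

# Confidence for the target class, and its gradient with respect to the input pixels
confidence_function = model_output_layer[0, object_type_to_fake]
gradient_function = K.gradients(confidence_function, model_input_layer)[0]
grab_confidence_and_gradients_from_model = K.function(
    [model_input_layer, K.learning_phase()], [confidence_function, gradient_function])

spoon_confidence = 0
while spoon_confidence < 0.98:
    # Learning phase 0 = inference mode
    spoon_confidence, gradients = grab_confidence_and_gradients_from_model([hacked_image, 0])
    # Gradient ascent: nudge the pixels to raise the "spoon" confidence
    hacked_image += gradients * learning_rate
    # Keep each pixel within 0.1 of the original so the change stays hard to see
    hacked_image = np.clip(hacked_image, original_image - 0.1, original_image + 0.1)
    # ...and inside the valid input range
    hacked_image = np.clip(hacked_image, -1.0, 1.0)

# Undo the scaling ([-1, 1] -> [0, 255]) and save the result
img = (hacked_image[0] / 2. + 0.5) * 255.
img = Image.fromarray(img.astype(np.uint8))
img.save("hacked-image.png")
```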
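The Keras loop needs a pretrained Inception-v3 and an old graph-mode backend. To see the same mechanics in isolation, here is a self-contained NumPy sketch of the identical loop (gradient ascent on the target-class confidence, then clipping to ±0.1 around the original and to [-1, 1]) run against a hypothetical linear softmax classifier with random weights. The toy model, `target_class`, `learning_rate` and the 1000-step cap are assumptions for illustration; only the 0.98 threshold and the 0.1 bound come from the slide.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical stand-in for Inception-v3: a linear softmax classifier
# over 784 "pixels" and 10 classes, with random (untrained) weights
n_pixels, n_classes = 784, 10
W = rng.normal(0.0, 0.2, size=(n_classes, n_pixels))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def confidence_and_gradient(x, target):
    """Return p[target] and its gradient with respect to the input x."""
    p = softmax(W @ x)
    one_hot = np.eye(n_classes)[target]
    # dp_t/dz = p_t * (one_hot - p), and dz/dx = W
    grad = W.T @ (p[target] * (one_hot - p))
    return p[target], grad

original_image = np.zeros(n_pixels)  # a flat, blank image in [-1, 1]
hacked_image = original_image.copy()
target_class = 3                     # arbitrary class to fake
learning_rate = 10.0                 # assumed step size for this toy model
epsilon = 0.1                        # max change per pixel, as on the slide

confidence = 0.0
for step in range(1000):             # safety cap instead of an unbounded while loop
    confidence, gradients = confidence_and_gradient(hacked_image, target_class)
    if confidence >= 0.98:
        break
    hacked_image += gradients * learning_rate
    hacked_image = np.clip(hacked_image, original_image - epsilon, original_image + epsilon)
    hacked_image = np.clip(hacked_image, -1.0, 1.0)

print(f"step {step}: class {target_class} confidence {confidence:.4f}")
print("largest pixel change:", np.abs(hacked_image - original_image).max())
```

Because the toy model is linear, its gradient has a closed form; with a real network the same gradient comes from backpropagation, which is what `K.gradients` provides in the Keras version.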

Figure: Inception V3 scores for the original image (tabby: 0.87, spoon: 0.00).

This is a tabby with 86.86% confidence!

This is a spoon with 98.65% confidence!

This is a pineapple with 98.93% confidence!

Panels: original white image; hacked white image; hacked white image (saturated).
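A perturbation bounded by a few gray levels is invisible on a white image. One common way to make it visible, shown here as a hedged sketch and not necessarily how the saturated panel was produced, is to stretch the difference between the hacked and original images to the full 0 to 255 range. The function name and the 4 × 4 example image are hypothetical.

```python
import numpy as np

def amplify_perturbation(original, hacked):
    """Stretch (hacked - original) to the full 0-255 range so it becomes visible."""
    delta = hacked.astype(np.float64) - original.astype(np.float64)
    span = np.abs(delta).max()
    if span == 0:
        return np.full(delta.shape, 127, dtype=np.uint8)  # no change: flat mid-gray
    # Map [-span, span] to [0, 255]; mid-gray (127) means "unchanged"
    return ((delta / span + 1.0) * 127.5).astype(np.uint8)

# Hypothetical example: a white 4 x 4 image darkened by at most 3 gray levels
white = np.full((4, 4, 3), 255, dtype=np.uint8)
noise = np.random.default_rng(0).integers(-3, 1, size=white.shape)
hacked = (white + noise).astype(np.uint8)
vis = amplify_perturbation(white, hacked)
print(vis.min(), vis.max())
```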

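Figure: the generator produces fake samples; the discriminator receives both real samples from the database and fake samples from the generator, and classifies each one as real or fake (output 0 or 1).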

Generative Adversarial Networks (GANs)

(Goodfellow 2016)
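The generator/discriminator game is usually trained with two binary cross-entropy losses. The sketch below assumes the common convention real = 1, fake = 0 and uses the "non-saturating" generator loss proposed alongside the original GAN formulation; the discriminator outputs are made-up numbers, not a trained model.

```python
import numpy as np

def bce(predictions, label):
    """Binary cross-entropy of discriminator outputs against a constant label."""
    p = np.clip(predictions, 1e-7, 1 - 1e-7)  # avoid log(0)
    return -np.mean(label * np.log(p) + (1 - label) * np.log(1 - p))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants real samples scored 1 and generated samples scored 0
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    # The generator wants its fakes scored as real ("non-saturating" loss)
    return bce(d_fake, 1.0)

# Hypothetical discriminator outputs (probability that each sample is real)
confident_d = discriminator_loss(np.array([0.99, 0.98]), np.array([0.01, 0.02]))
fooled_d = discriminator_loss(np.array([0.5, 0.5]), np.array([0.5, 0.5]))
print(confident_d, fooled_d, generator_loss(np.array([0.01, 0.02])))
```

Training alternates between a step that lowers `discriminator_loss` and a step that lowers `generator_loss`; when the generator wins, the discriminator is reduced to guessing 0.5 for everything.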

Model based optimization

4.5 years of GAN progress on face generation. https://t.co/kiQkuYULMC https://t.co/S4aBsU536b https://t.co/8di6K6BxVC https://t.co/UEFhewds2M https://t.co/s6hKQz9gLz pic.twitter.com/F9Dkcfrq8l

— Ian Goodfellow (@goodfellow_ian) January 15, 2019

(Egor Zakharov, et al. 2019)


Thank you!

@tiffanysouterre

(Papernot, et al. 2016)

(Ian J. Goodfellow, 2016)