How Did YouTube Empower Creators with Smart Reply?

#003

GOLD NUGGETS

What's a Smart Reply?

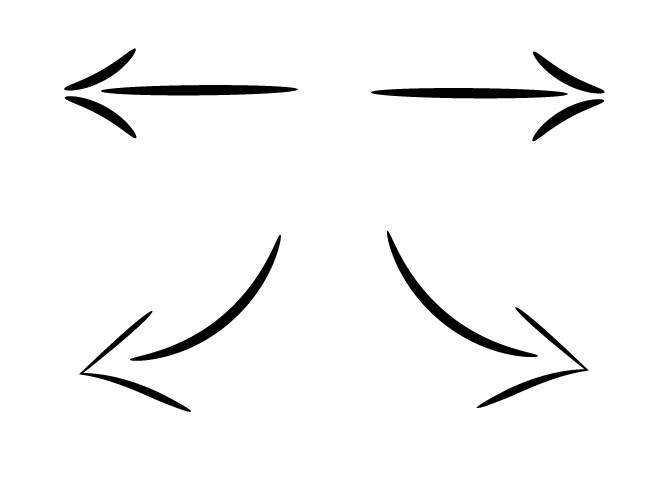

Smart Reply is a method for automatically generating short email responses. It produces semantically diverse suggestions, each of which can be used as a complete response with just one click. [1]

Why Build This Feature?

SmartReply, built for YouTube and implemented in YouTube Studio, helps creators engage more easily with their viewers. [1]

YouTube creators receive a large volume of responses to their videos. Moreover, the community of creators and viewers on YouTube is diverse, as reflected by the creativity of their comments, discussions and videos.

What Google Products Besides YouTube Use Smart Reply?

Google Mail

Android Messages

Android Wear

Google Play Developer Console

Smart Reply first launched in 2015 for Gmail. Since then, it has become the backbone of other products as well. However, each launch required customizing Smart Reply for that product's specific task requirements. [1]

Gmail's Auto Response Today

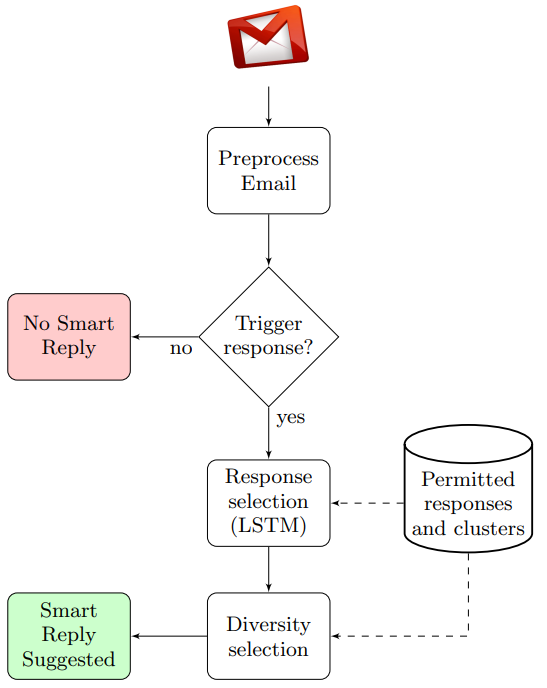

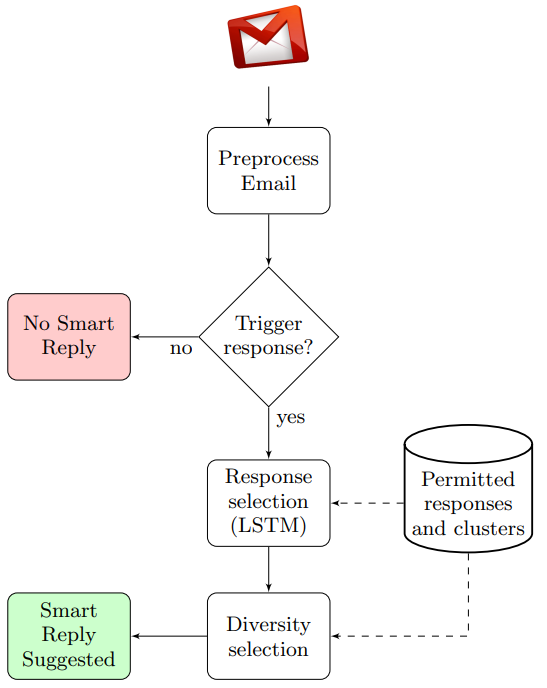

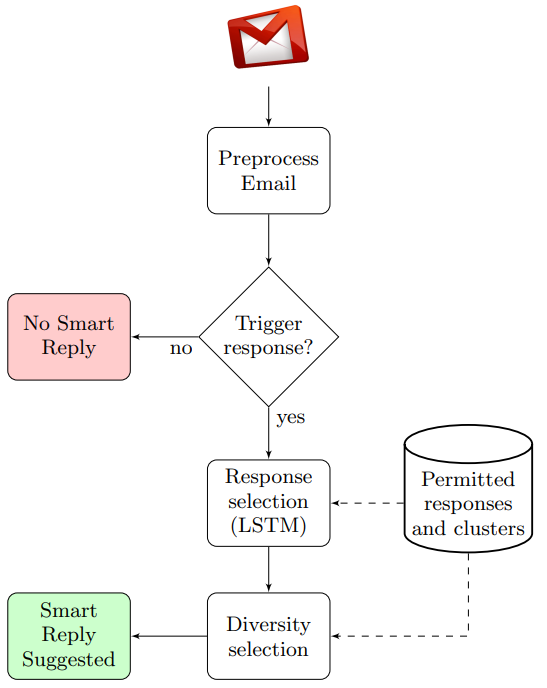

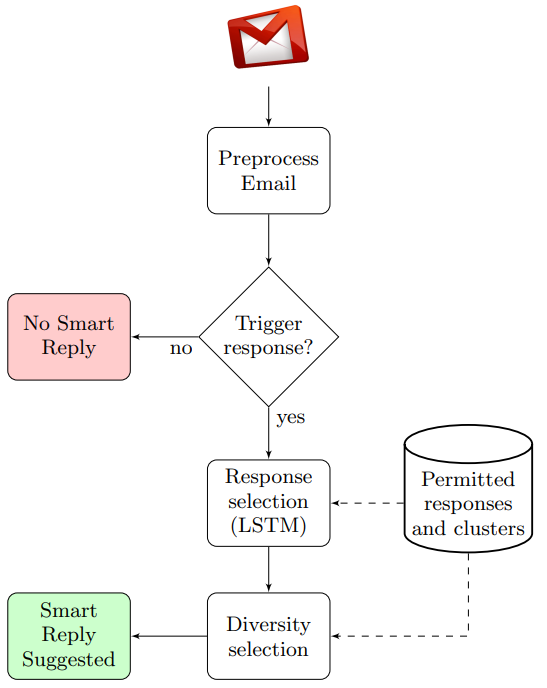

How Did Smart Reply Originally Work for Gmail?

LSTM?

To properly explain LSTM, we need to start from...

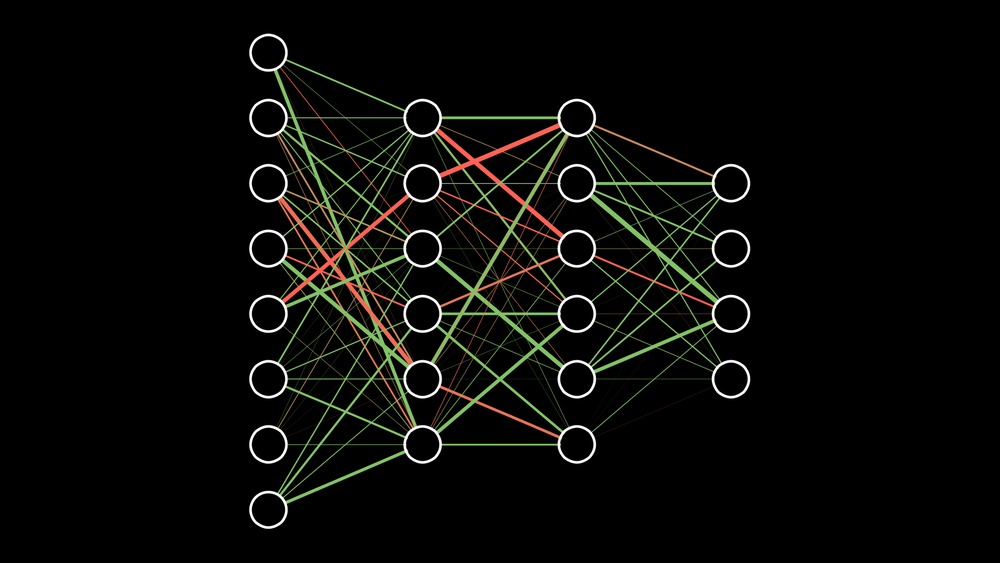

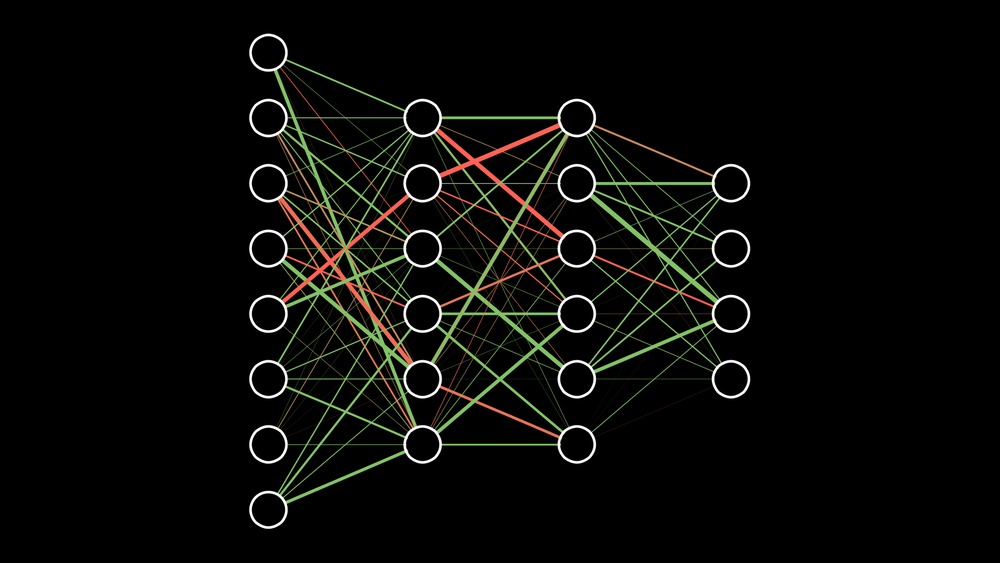

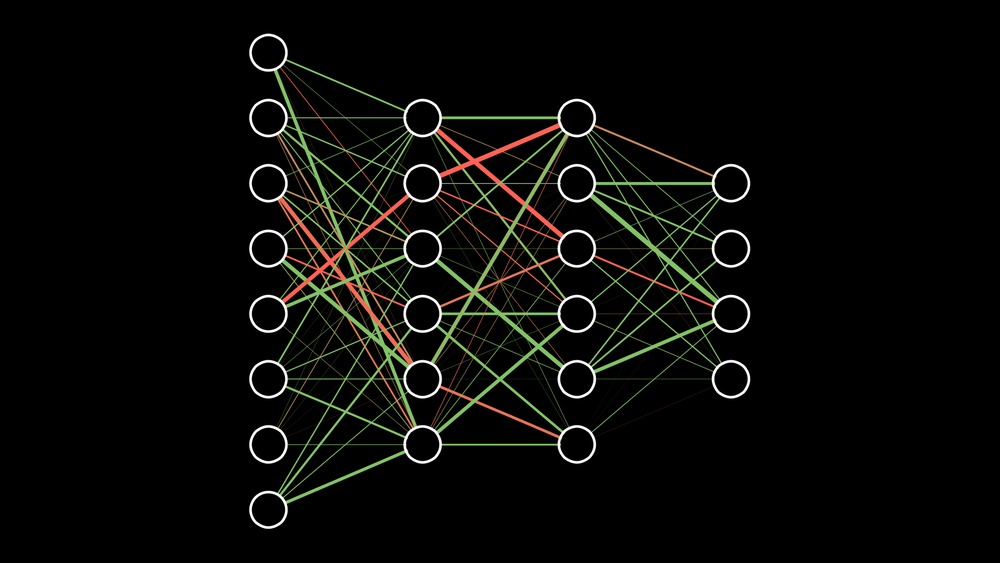

Neural Networks

Recurrent Neural Networks (RNNs)

RNNs are trained much like ordinary neural networks but, in addition, they remember what they learned from prior inputs while generating outputs. [1]

Humans don’t start their thinking from scratch every second. As you read this, you understand each word based on your understanding of previous words. You don’t throw everything away and start thinking from scratch again. Your thoughts have persistence.

Traditional neural networks can’t do this, and it seems like a major shortcoming. Recurrent neural networks address this issue. They are networks with loops in them, allowing information to persist. [1]
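
The "loop" can be sketched in a few lines of Python. This is only an illustrative toy, not the model used by Gmail or YouTube: the scalar weights `w_xh`, `w_hh` and bias `b` are hand-picked, hypothetical values rather than learned ones. The point is that each new state is computed from the current input and the previous state, so earlier inputs keep influencing later outputs.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One recurrent step: mix the current input with the previous state."""
    return math.tanh(w_xh * x + w_hh * h_prev + b)

def run_rnn(inputs, w_xh=0.5, w_hh=0.8, b=0.0):
    """Feed inputs one at a time, carrying the hidden state forward."""
    h = 0.0  # empty memory before the first input
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_xh, w_hh, b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later states stay non-zero
# because the loop (w_hh) carries information forward.
states = run_rnn([1.0, 0.0, 0.0])

# With the loop switched off (w_hh = 0) the network forgets immediately.
no_loop = run_rnn([1.0, 0.0, 0.0], w_hh=0.0)
```

Comparing `states` with `no_loop` shows the difference: without the recurrent weight, the second and third states are zero because nothing persists between steps.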

ELI5 - Explain Like I'm 5

Problem with RNNs

The clouds are in the SKY.

In such cases, where the gap between the relevant information and the place that it’s needed is small, RNNs can learn to use the past information.

I grew up in France. I speak fluent FRENCH.

Recent information suggests that the next word is probably the name of a language, but we need the earlier context of France to work out which language. Unfortunately, as the gap between the context and the place it is needed grows, RNNs become unable to learn to connect the information.

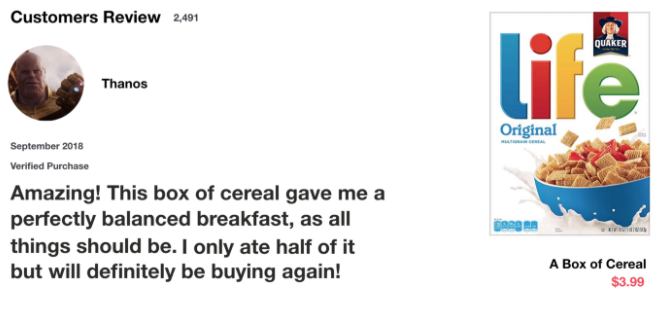

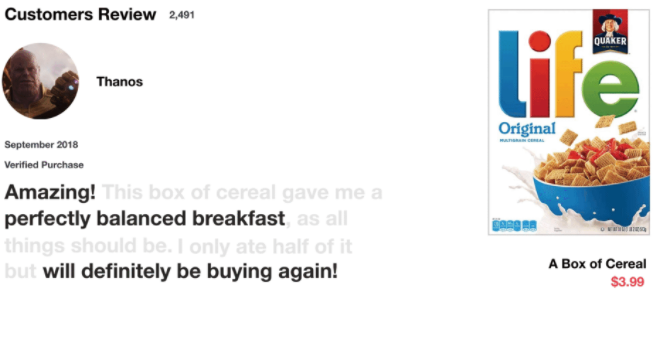

LSTM Example

Let’s start with a thought experiment. You're looking at reviews online to decide whether you want to buy Life cereal. You first read a review, then determine whether the reviewer thought the cereal was good or bad.

When you read the review, your brain subconsciously remembers only the important keywords. You pick up words like “amazing” and “perfectly balanced breakfast”. You don’t care much for words like “this”, “gave”, etc. If a friend asks you the next day what the review said, you might still remember the main points, like “will definitely be buying again”.

Long Short Term Memory (LSTM) Networks

LSTMs are explicitly designed to avoid the problem RNNs have with remembering information across long gaps. Remembering information for long periods of time is practically their default behavior, not something they struggle to learn, as plain RNNs do.
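
A minimal sketch of a single LSTM cell shows why remembering is the "default". It uses the standard forget, input, and output gates with scalar values. The numbers in `PARAMS` are hypothetical, hand-picked to show the behavior (forget gate near 1, input gate that opens only when an input arrives); a real model would learn them.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step on scalar input x with hidden state h and cell state c."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new info to write
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value to write
    c = f * c_prev + i * g   # cell state: kept memory plus newly written memory
    h = o * math.tanh(c)     # hidden state: gated view of the memory
    return h, c

# Hypothetical, hand-picked parameters (not learned).
PARAMS = {
    "wf": 0.0, "uf": 0.0, "bf": 6.0,    # forget gate stays close to 1
    "wi": 12.0, "ui": 0.0, "bi": -6.0,  # input gate opens only when x is large
    "wo": 0.0, "uo": 0.0, "bo": 0.0,
    "wg": 2.0, "ug": 0.0, "bg": 0.0,
}

def run_lstm(inputs, p=PARAMS):
    h, c = 0.0, 0.0
    for x in inputs:
        h, c = lstm_step(x, h, c, p)
    return h, c

# One "important keyword" followed by 49 steps of filler input.
h_final, c_final = run_lstm([1.0] + [0.0] * 49)
```

After 50 steps the cell state `c_final` still holds most of what was written at the first step, which mirrors remembering “amazing” long after the filler words have gone by.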

Challenge in Applying Gmail's Auto Reply to YouTube Creators

Emails - tend to be long and dominated by formal language

YouTube comments - reveal complex patterns of language switching, abbreviated words, slang, inconsistent usage of punctuation, and heavy utilization of emoji.

How They Made it Work?

Systems face significant challenges in the YouTube case, where a typical comment might include heterogeneous content such as the language switching, slang, and emoji noted above. In light of this, the team decided to encode the text without any preprocessing.

A Transformer network can model words and phrases from the ground up, even when it is fed text that has not been preprocessed, with quality comparable to word-based models.
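
To make "no preprocessing" concrete, here is a small sketch of turning a raw comment into a sequence of bytes. The use of UTF-8 and the helper names `encode_bytes` and `decode_bytes` are assumptions for illustration; the source only says that the text is encoded without preprocessing and that the model is byte-based.

```python
def encode_bytes(comment):
    """Turn raw text into a list of integers in 0..255, with no cleanup at all."""
    return list(comment.encode("utf-8"))

def decode_bytes(ids):
    """Inverse of encode_bytes: nothing was lost, so the text round-trips."""
    return bytes(ids).decode("utf-8")

# Slang, digits, and emoji all go through the same path:
# no tokenizer, no lowercasing, no emoji stripping.
comment = "gr8 vid 🔥"
ids = encode_bytes(comment)
restored = decode_bytes(ids)
```

The appeal of this design is that the vocabulary is fixed at 256 possible values regardless of language, so abbreviations, mixed languages, and emoji never fall outside it. The cost is longer input sequences, since one emoji becomes several bytes.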

Transformer Networks

Transformers are designed to handle sequential data, such as natural language, for tasks such as translation and text summarization. Sequential data is any kind of data where the order matters.

However, unlike RNNs, Transformers do not require that the sequential data be processed in order. For example, if the input is a natural language sentence, the Transformer does not need to process the beginning of it before the end.

In a nutshell, they're position-aware neural networks.

They "read" the whole sentence at once. Each word is represented in light of its own position and of all the other words in the sentence and their positions.

The exact inner workings of attention are beyond our scope here, but the key idea is that attention computes the similarity between vectors (e.g. words).
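
The similarity idea behind attention can be sketched as scaled dot-product attention over toy vectors. The two-dimensional word vectors below are hypothetical, chosen by hand for illustration. A real Transformer learns its vectors and also adds position information and multiple attention heads, which this sketch omits.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    """Turn raw similarity scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Weight each value by how similar its key is to the query."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, output

# Hypothetical toy vectors for three words in a sentence.
words = ["law", "the", "application"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

# Hypothetical query vector for the word "its".
query_its = [2.0, 0.0]
weights, output = attend(query_its, vectors, vectors)
most_attended = words[weights.index(max(weights))]
```

Here `most_attended` is the word whose vector is most similar to the query, and `output` is a blend of all word vectors weighted by that similarity.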

Transformers Example

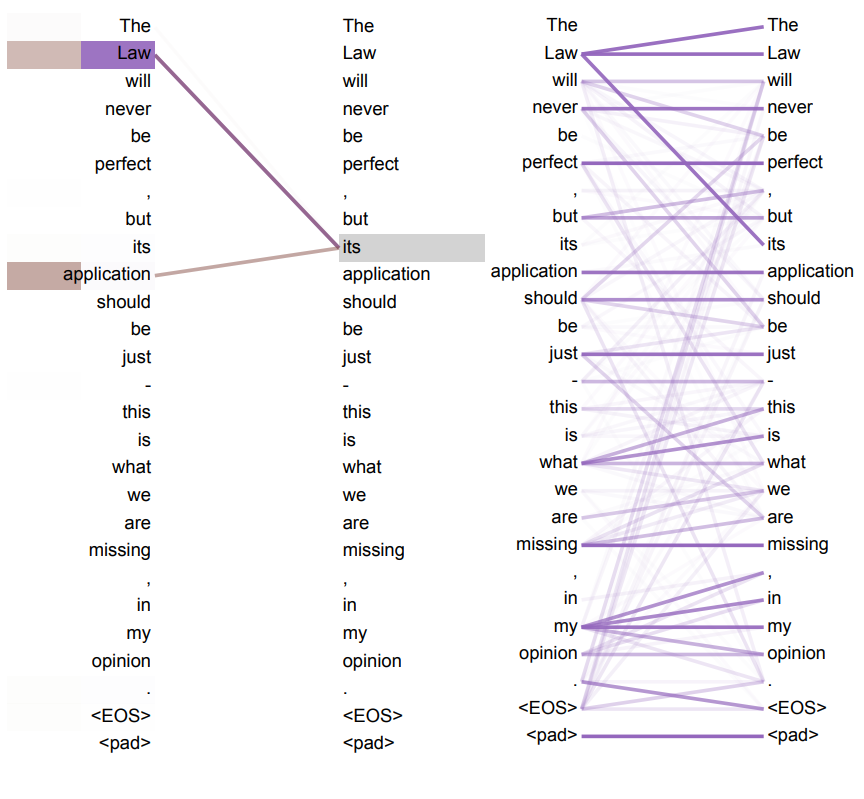

Transformers are able to pay attention to, and see the connection between, "its" and "Law", which appeared at the beginning of the sentence.

YouTube Cross-Lingual Model

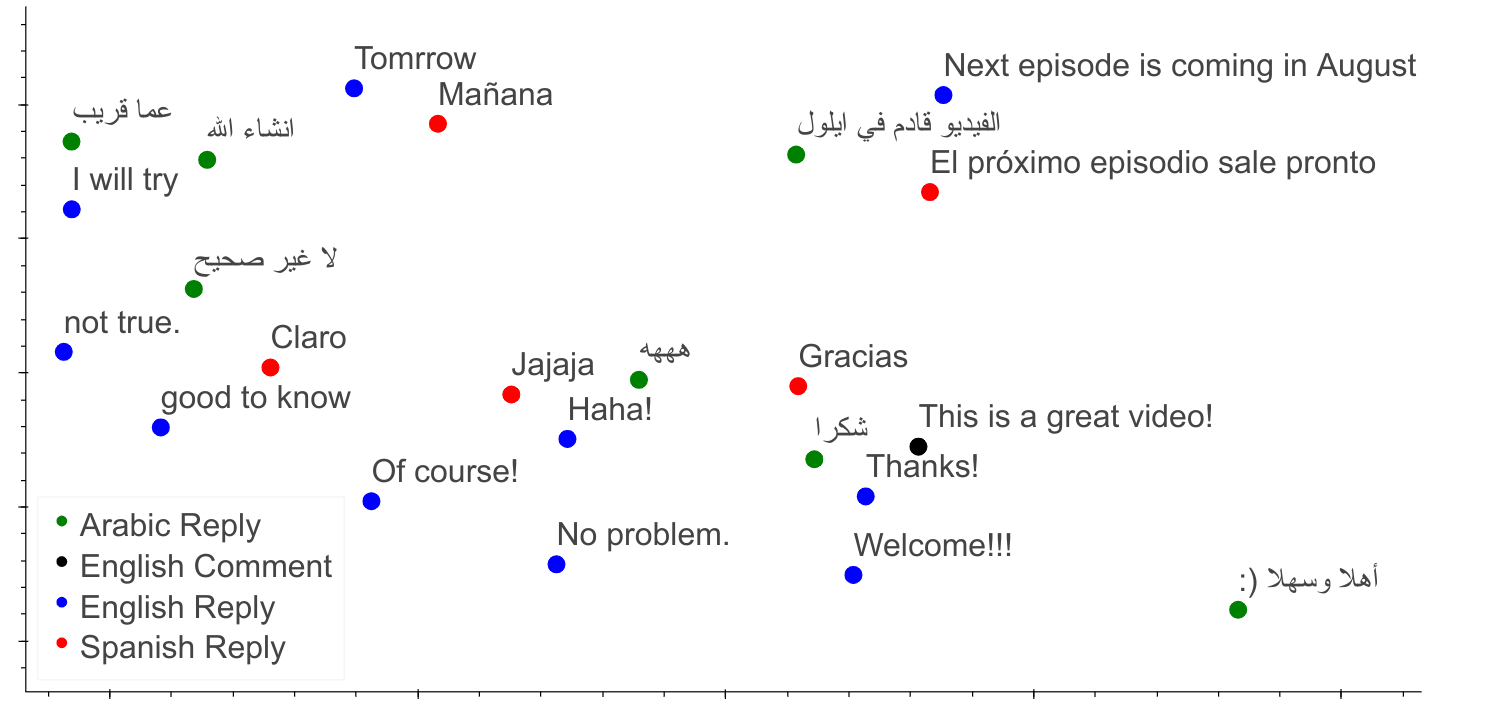

A 2D projection of the model encodings when presented with a hypothetical comment and a small list of potential replies. The neighborhood surrounding English comments (black color) consists of appropriate replies in English and their counterparts in Spanish and Arabic.
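
One way to read the figure is that a comment and its appropriate replies land close together in the encoding space, whatever language they are written in. The sketch below ranks candidate replies by cosine similarity to a comment. The two-dimensional vectors and the reply texts are made up for illustration, and ranking by cosine similarity is an assumption here, not a description of YouTube's actual system.

```python
import math

def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, -1.0 means opposite."""
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den

def rank_replies(comment_vec, reply_vecs):
    """Return reply texts ordered from most to least similar to the comment."""
    return sorted(
        reply_vecs,
        key=lambda reply: cosine(comment_vec, reply_vecs[reply]),
        reverse=True,
    )

# Hypothetical encodings: an English reply and its Spanish counterpart
# sit near each other; an unrelated reply sits far away.
reply_vecs = {
    "Thanks for watching!": [0.9, 0.1],
    "¡Gracias por ver!": [0.85, 0.2],
    "Sorry to hear that.": [-0.7, 0.6],
}
comment_vec = [1.0, 0.15]  # hypothetical encoding of a positive comment

ranked = rank_replies(comment_vec, reply_vecs)
```

With these toy values, the English and Spanish thank-you replies rank ahead of the unrelated one, which is the neighborhood structure the figure describes.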

We’ve launched YouTube SmartReply, starting with English and Spanish comments, the first cross-lingual and character byte-based SmartReply.

YouTube is a global product with a diverse user base that generates heterogeneous content. Consequently, it is important that we continuously improve comments for this global audience, and SmartReply represents a strong step in this direction.

Rami Al-Rfou - Staff Research Scientist at Google Research

2021

Embracing Tech Readers
